Efficient-Multimodal-LLMs-Survey

Efficient Multimodal Large Language Models: A Survey

Stars: 206

Efficient Multimodal Large Language Models: A Survey provides a comprehensive review of efficient and lightweight Multimodal Large Language Models (MLLMs), focusing on model size reduction and cost efficiency for edge computing scenarios. The survey covers the timeline of efficient MLLMs, research on efficient structures and strategies, and their applications, while also discussing current limitations and future directions.

README:

Efficient Multimodal Large Language Models: A Survey [arXiv]

Yizhang Jin¹,², Jian Li¹, Yexin Liu³, Tianjun Gu⁴, Kai Wu¹, Zhengkai Jiang¹, Muyang He³, Bo Zhao³, Xin Tan⁴, Zhenye Gan¹, Yabiao Wang¹, Chengjie Wang¹, Lizhuang Ma²

¹Tencent YouTu Lab, ²SJTU, ³BAAI, ⁴ECNU

⚡ We will actively maintain this repository and incorporate new research as it emerges. If you have any questions, please contact [email protected]. We welcome collaboration on academic research and paper writing.

@misc{jin2024efficient,
  title={Efficient Multimodal Large Language Models: A Survey},
  author={Yizhang Jin and Jian Li and Yexin Liu and Tianjun Gu and Kai Wu and Zhengkai Jiang and Muyang He and Bo Zhao and Xin Tan and Zhenye Gan and Yabiao Wang and Chengjie Wang and Lizhuang Ma},
  year={2024},
  eprint={2405.10739},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

In the past year, Multimodal Large Language Models (MLLMs) have demonstrated remarkable performance in tasks such as visual question answering, visual understanding, and reasoning. However, their large model size and high training and inference costs have hindered their widespread application in academia and industry. Studying efficient and lightweight MLLMs therefore has enormous potential, especially in edge computing scenarios. In this survey, we provide a comprehensive and systematic review of the current state of efficient MLLMs. Specifically, we summarize the timeline of representative efficient MLLMs, the state of research on efficient structures and strategies, and their applications. Finally, we discuss the limitations of current efficient MLLM research and promising future directions.

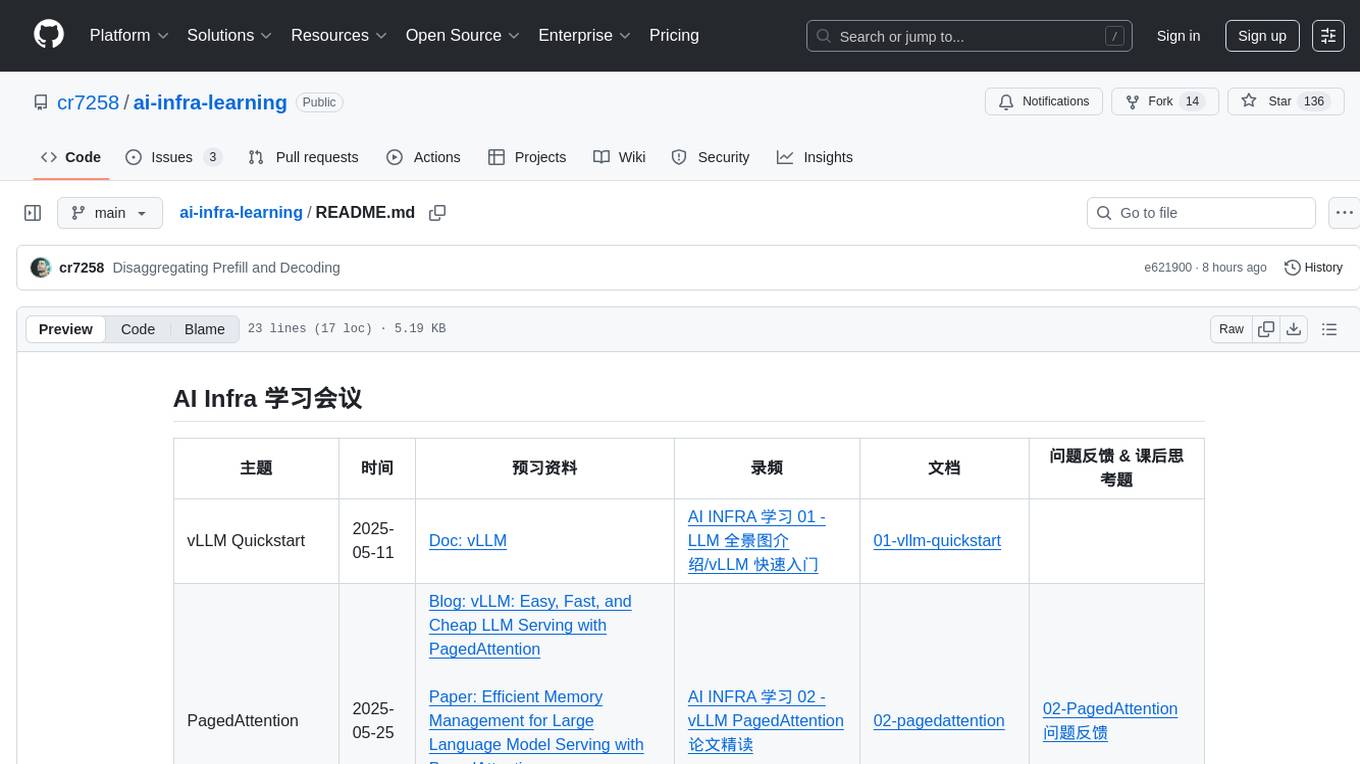

| Model | Vision Encoder | Resolution | Vision Encoder Parameter Size | LLM | LLM Parameter Size | Vision-LLM Projector | Timeline |
|---|---|---|---|---|---|---|---|
| MobileVLM | CLIP ViT-L/14 | 336 | 0.3B | MobileLLaMA | 2.7B | LDP | 2023-12 |
| LLaVA-Phi | CLIP ViT-L/14 | 336 | 0.3B | Phi-2 | 2.7B | MLP | 2024-01 |
| Imp-v1 | SigLIP | 384 | 0.4B | Phi-2 | 2.7B | - | 2024-02 |
| TinyLLaVA | SigLIP-SO | 384 | 0.4B | Phi-2 | 2.7B | MLP | 2024-02 |
| Bunny | SigLIP-SO | 384 | 0.4B | Phi-2 | 2.7B | MLP | 2024-02 |
| MobileVLM-v2-3B | CLIP ViT-L/14 | 336 | 0.3B | MobileLLaMA | 2.7B | LDPv2 | 2024-02 |
| MoE-LLaVA-3.6B | CLIP-Large | 384 | - | Phi-2 | 2.7B | MLP | 2024-02 |
| Cobra | DINOv2, SigLIP-SO | 384 | 0.3B+0.4B | Mamba-2.8b-Zephyr | 2.8B | MLP | 2024-03 |
| Mini-Gemini | CLIP-Large | 336 | - | Gemma | 2B | MLP | 2024-03 |
| Vary-toy | CLIP | 224 | - | Qwen | 1.8B | - | 2024-01 |
| TinyGPT-V | EVA | 224/448 | - | Phi-2 | 2.7B | Q-Former | 2024-01 |
| SPHINX-Tiny | DINOv2, CLIP-ConvNeXt | 448 | - | TinyLlama | 1.1B | - | 2024-02 |
| ALLaVA-Longer | CLIP ViT-L/14 | 336 | 0.3B | Phi-2 | 2.7B | - | 2024-02 |
| MM1-3B-MoE-Chat | CLIP_DFN-ViT-H | 378 | - | - | 3B | C-Abstractor | 2024-03 |
| LLaVA-Gemma | DINOv2 | - | - | Gemma-2b-it | 2B | - | 2024-03 |
| Mipha-3B | SigLIP | 384 | - | Phi-2 | 2.7B | - | 2024-03 |
| VL-Mamba | SigLIP-SO | 384 | - | Mamba-2.8B-Slimpj | 2.8B | VSS-L2 | 2024-03 |
| MiniCPM-V 2.0 | SigLIP | - | 0.4B | MiniCPM | 2.7B | Perceiver Resampler | 2024-03 |
| DeepSeek-VL | SigLIP-L | 384 | 0.4B | DeepSeek-LLM | 1.3B | MLP | 2024-03 |
| KarmaVLM | SigLIP-SO | 384 | 0.4B | Qwen1.5 | 0.5B | - | 2024-02 |
| moondream2 | SigLIP | - | - | Phi-1.5 | 1.3B | - | 2024-03 |
| Bunny-v1.1-4B | SigLIP | 1152 | - | Phi-3-Mini-4K | 3.8B | - | 2024-02 |

- Mobilevlm: A fast, reproducible and strong vision language assistant for mobile devices. arXiv, 2023 [Paper]

- Llava-phi: Efficient multi-modal assistant with small language model. arXiv, 2024 [Paper]

- Imp-v1: An empirical study of multimodal small language models. arXiv, 2024 [Paper]

- TinyLLaVA: A Framework of Small-scale Large Multimodal Models. arXiv, 2024 [Paper]

- (Bunny) Efficient multimodal learning from data-centric perspective. arXiv, 2024 [Paper]

- Gemini: a family of highly capable multimodal models. arXiv, 2023 [Paper]

- Mobilevlm v2: Faster and stronger baseline for vision language model. arXiv, 2024 [Paper]

- Moe-llava: Mixture of experts for large vision-language models. arXiv, 2024 [Paper]

- Cobra: Extending mamba to multi-modal large language model for efficient inference. arXiv, 2024 [Paper]

- Mini-gemini: Mining the potential of multi-modality vision language models. arXiv, 2024 [Paper]

- (Vary-toy) Small language model meets with reinforced vision vocabulary. arXiv, 2024 [Paper]

- Tinygpt-v: Efficient multimodal large language model via small backbones. arXiv, 2023 [Paper]

- SPHINX-X: Scaling Data and Parameters for a Family of Multi-modal Large Language Models. arXiv, 2024 [Paper]

- ALLaVA: Harnessing GPT4V-synthesized Data for A Lite Vision-Language Model. arXiv, 2024 [Paper]

- Mm1: Methods, analysis & insights from multimodal llm pre-training. arXiv, 2024 [Paper]

- LLaVA-Gemma: Accelerating Multimodal Foundation Models with a Compact Language Model. arXiv, 2024 [Paper]

- Mipha: A Comprehensive Overhaul of Multimodal Assistant with Small Language Models. arXiv, 2024 [Paper]

- VL-Mamba: Exploring State Space Models for Multimodal Learning. arXiv, 2024 [Paper]

- MiniCPM-V 2.0: An Efficient End-side MLLM with Strong OCR and Understanding Capabilities. GitHub, 2024 [Github]

- DeepSeek-VL: Towards Real-World Vision-Language Understanding. arXiv, 2024 [Paper]

- KarmaVLM: A family of high efficiency and powerful visual language model. GitHub, 2024 [Github]

- moondream: tiny vision language model. GitHub, 2024 [Github]

- Broadening the visual encoding of vision-language models, arXiv, 2024 [Paper]

- Cobra: Extending Mamba to Multi-Modal Large Language Model for Efficient Inference, arXiv, 2024 [Paper]

- SPHINX-X: Scaling Data and Parameters for a Family of Multi-modal Large Language Models, arXiv, 2024 [Paper]

- ViTamin: Designing Scalable Vision Models in the Vision-Language Era. arXiv, 2024 [Paper]

- Visual Instruction Tuning. arXiv, 2023 [Paper]

- Improved baselines with visual instruction tuning. arXiv, 2023 [Paper]

- TokenPacker: Efficient Visual Projector for Multimodal LLM. arXiv, 2024 [Paper]

- Flamingo: a Visual Language Model for Few-Shot Learning, arXiv, 2022 [Paper]

- BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models, arXiv, 2023 [Paper]

- Broadening the visual encoding of vision-language models, arXiv, 2024 [Paper]

- MobileVLM V2: Faster and Stronger Baseline for Vision Language Model, arXiv, 2024 [Paper]

- Mobilevlm: A fast, reproducible and strong vision language assistant for mobile devices. arXiv, 2023 [Paper]

- Vl-mamba: Exploring state space models for multimodal learning. arXiv, 2024 [Paper]

- Honeybee: Locality-enhanced projector for multimodal llm. arXiv, 2023 [Paper]

- Llama: Open and efficient foundation language models. arXiv, 2023 [Paper]

- Vicuna: An open-source chatbot impressing GPT-4 with 90%* ChatGPT quality. Website, 2023 [[web](https://vicuna.lmsys.org)]

- Phi-2: The surprising power of small language models. Microsoft Research Blog, 2023 [blog]

- Gemma: Open models based on gemini research and technology. arXiv, 2024 [Paper]

- Phi-3 technical report: A highly capable language model locally on your phone. 2024

- Llava-uhd: an lmm perceiving any aspect ratio and high-resolution images. arXiv, 2024 [Paper]

- A pioneering large vision-language model handling resolutions from 336 pixels to 4k hd. arXiv, 2024 [Paper]

- Llava-uhd: an lmm perceiving any aspect ratio and high-resolution images. arXiv, 2024 [Paper]

- Texthawk: Exploring efficient fine-grained perception of multimodal large language models. arXiv, 2024 [Paper]

- TinyChart: Efficient chart understanding with visual token merging and program-of-thoughts learning. arXiv, 2024 [Paper]

- Llava-prumerge: Adaptive token reduction for efficient large multimodal models. arXiv, 2024 [Paper]

- Madtp: Multimodal alignment-guided dynamic token pruning for accelerating vision-language transformer. arXiv, 2024 [Paper]

- CrossGET: Cross-Guided Ensemble of Tokens for Accelerating Vision-Language Transformers. ICML, 2024 [Paper]

- Matryoshka Query Transformer for Large Vision-Language Models. arXiv, 2024 [Paper]

- Mini-gemini: Mining the potential of multi-modality vision language models. arXiv, 2024 [Paper]

- When do we not need larger vision models? arXiv, 2024 [Paper] arXiv, 2023 [Paper]

- Plug-and-play grounding of reasoning in multimodal large language models. arXiv, 2024 [Paper]

- Mova: Adapting mixture of vision experts to multimodal context. arXiv, 2024 [Paper]

- Elysium: Exploring object-level perception in videos via mllm. arXiv, 2024 [Paper]

- Extending video-language pretraining to n-modality by language-based semantic alignment. arXiv, 2023 [Paper]

- Video-llava: Learning united visual representation by alignment before projection. arXiv, 2023 [Paper]

- Moe-llava: Mixture of experts for large vision-language models. arXiv, 2024 [Paper]

- Mm1: Methods, analysis & insights from multimodal llm pre-training. arXiv, 2024 [Paper]

- Mixtral of experts. arXiv, 2024 [Paper]

- Cobra: Extending Mamba to Multi-Modal Large Language Model for Efficient Inference, arXiv, 2024 [Paper]

- Mamba: Linear-time sequence modeling with selective state spaces. arXiv, 2023 [Paper]

- Vl-mamba: Exploring state space models for multimodal learning. arXiv, 2024 [Paper]

- On speculative decoding for multimodal large language models. arXiv, 2024 [Paper]

- An image is worth 1/2 tokens after layer 2: Plug-and-play inference acceleration for large vision-language models. arXiv, 2024 [Paper]

- Boosting multimodal large language models with visual tokens withdrawal for rapid inference. arXiv, 2024 [Paper]

- Tinyllava: A framework of small-scale large multimodal models. arXiv, 2024 [Paper]

- Vila: On pre-training for visual language models. arXiv, 2023 [Paper]

- Sharegpt4v: Improving large multi-modal models with better captions. arXiv, 2023 [Paper]

- What matters when building vision-language models? arXiv, 2024 [Paper]

- Cheap and quick: Efficient vision-language instruction tuning for large language models. NeurIPS, 2023 [Paper]

- Hyperllava: Dynamic visual and language expert tuning for multimodal large language models. arXiv, 2024 [Paper]

- SPHINX-X: Scaling Data and Parameters for a Family of Multi-modal Large Language Models. arXiv, 2024 [Paper]

- Cobra: Extending mamba to multi-modal large language model for efficient inference. arXiv, 2024 [Paper]

- Tinygpt-v: Efficient multimodal large language model via small backbones. arXiv, 2023 [Paper]

- Not All Attention is Needed: Parameter and Computation Efficient Transfer Learning for Multi-modal Large Language Models. arXiv, 2024 [Paper]

- Memory-space visual prompting for efficient vision-language fine-tuning. arXiv, 2024 [Paper]

- Training small multimodal models to bridge biomedical competency gap: A case study in radiology imaging. arXiv, 2024 [Paper]

- Moe-tinymed: Mixture of experts for tiny medical large vision-language models. arXiv, 2024 [Paper]

- Texthawk: Exploring efficient fine-grained perception of multimodal large language models. arXiv, 2024 [Paper]

- TinyChart: Efficient chart understanding with visual token merging and program-of-thoughts learning. arXiv, 2024 [Paper]

- Monkey: Image resolution and text label are important things for large multi-modal models. arXiv, 2024 [Paper]

- Hrvda: High-resolution visual document assistant. arXiv, 2023 [Paper]

- mplug-2: A modularized multi-modal foundation model across text, image and video. arXiv, 2023 [Paper]

- Video-llava: Learning united visual representation by alignment before projection. arXiv, 2023 [Paper]

- Ma-lmm: Memory-augmented large multimodal model for long-term video understanding. arXiv, 2024 [Paper]

- Llama-vid: An image is worth 2 tokens in large language models. arXiv, 2023 [Paper]
