Best AI tools for <Analyze Multimodal Data>

20 - AI tool Sites

Tempus

Tempus is an AI-enabled precision medicine company that brings the power of data and artificial intelligence to healthcare. With the power of AI, Tempus accelerates the discovery of novel targets, predicts the effectiveness of treatments, identifies potentially life-saving clinical trials, and diagnoses multiple diseases earlier. Tempus's innovative technology includes ONE, an AI-enabled clinical assistant; NEXT, a tool to identify and close gaps in care; LENS, a platform to find, access, and analyze multimodal real-world data; and ALGOS, algorithmic models connected to Tempus's assays to provide additional insight.

Manifold

Manifold is an AI data platform designed specifically for life sciences. It offers a collaborative workbench, data science tools, AI-powered cohort exploration, batch bioinformatics, data dashboards, data engineering solutions, access control, and more. The platform aims to enable faster collaboration and research in the life sciences field by providing a comprehensive suite of tools and features. Trusted by leading institutions, Manifold helps streamline data collection, analysis, and collaboration to accelerate scientific research.

GoodGist

GoodGist is an Agentic AI platform for Business Process Automation that goes beyond traditional RPA tools by offering Adaptive Multi-Agent AI with Human-in-the-loop workflows. It enables end-to-end process automation, supports unstructured and multimodal data, ensures real-time decision-making, and maintains human oversight for scalable performance. GoodGist caters to various industries like manufacturing, supply chain, banking, insurance, healthcare, retail, and CPG, providing enterprise-grade security, compliance, and rapid ROI.

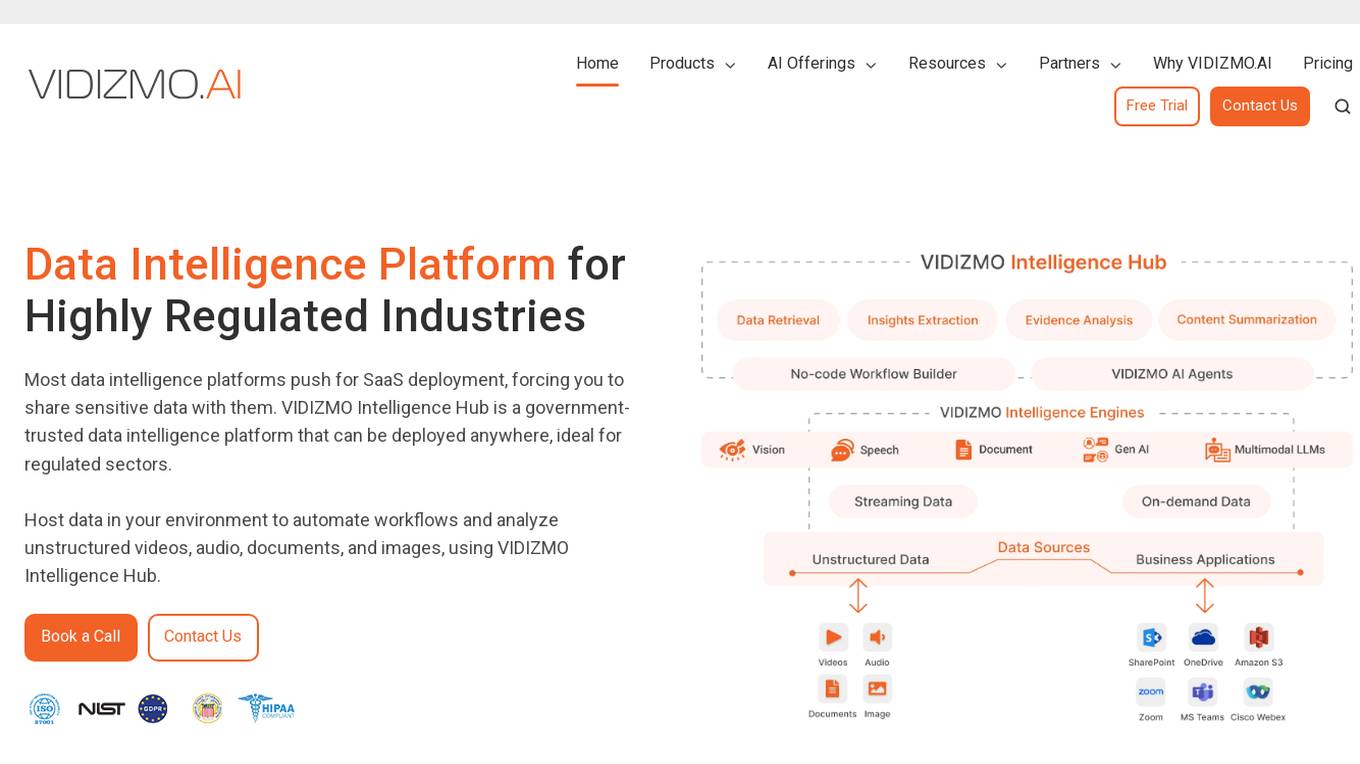

VIDIZMO.AI

VIDIZMO.AI is a data intelligence platform designed for highly regulated industries, offering solutions for video content management, digital evidence management, and redaction. The platform provides granular control over unstructured data types like videos, audio, documents, and images, with features such as AI-powered analytics, multimodal data handling, and HIPAA-compliant data intelligence. VIDIZMO.AI is a government-trusted platform that can be deployed on-premises, in private cloud, or in a hybrid environment, ensuring data privacy and security. The platform is suitable for organizations in government, law enforcement, healthcare, legal, financial services, and insurance sectors, helping them automate workflows, analyze data, and meet regulatory requirements.

Mixpeek Solutions

Mixpeek Solutions offers a Multimodal Data Warehouse for Developers, providing a Developer-First API for AI-native Content Understanding. The platform allows users to search, monitor, classify, and cluster unstructured data like video, audio, images, and documents. Mixpeek Solutions offers a range of features including Unified Search, Automated Classification, Unsupervised Clustering, Feature Extractors for Every Data Type, and various specialized extraction models for different data types. The platform caters to a wide range of industries and provides seamless model upgrades, cross-model compatibility, A/B testing infrastructure, and simplified model management.

Roboto AI

Roboto AI is an advanced platform that allows users to curate, transform, and analyze robotics data at scale. It provides features for data management, actions, events, search capabilities, and SDK integration. The application helps users understand complex machine data through multimodal queries and custom actions, enabling efficient data processing and collaboration within teams.

Tempus

Tempus is an AI-enabled precision medicine company that brings the power of data and artificial intelligence to healthcare. With the power of AI, Tempus accelerates the discovery of novel targets, predicts the effectiveness of treatments, identifies potentially life-saving clinical trials, and diagnoses multiple diseases earlier. Tempus' innovative technology includes ONE, an AI-enabled clinical assistant; NEXT, which identifies and closes gaps in care; LENS, which finds, accesses, and analyzes multimodal real-world data; and ALGOS, algorithmic models connected to Tempus' assays to provide additional insight.

Mixpeek

Mixpeek is a multimodal intelligence platform that helps users extract important data from videos, images, audio, and documents. It enables users to focus on insights rather than data preparation by identifying concepts, activities, and objects from various sources. Mixpeek offers features such as real-time synchronization, extraction and embedding, fine-tuning and scaling of models, and seamless integration with various data sources. The platform is designed to be easy to use, scalable, and secure, making it suitable for a wide range of applications.

GPT-4o

GPT-4o is an advanced multimodal AI platform developed by OpenAI, offering a comprehensive AI interaction experience across text, imagery, and audio. It excels in text comprehension, image analysis, and voice recognition, providing swift, cost-effective, and universally accessible AI technology. GPT-4o democratizes AI by balancing free access with premium features for paid subscribers, revolutionizing the way we interact with artificial intelligence.

ImageBind

ImageBind by Meta AI is an AI tool that introduces a new way to 'link' AI across multiple senses. It is the first AI model capable of binding data from six different modalities simultaneously: images and video, audio, text, depth, thermal, and inertial measurement unit (IMU) data. By recognizing relationships between these modalities, ImageBind enables machines to analyze various forms of information together.

Molmo AI

Molmo AI is a powerful, open-source multimodal AI model revolutionizing visual understanding. It helps developers easily build tools that can understand images and interact with the world in useful ways. Molmo AI offers exceptional image understanding, efficient data usage, open and accessible features, on-device compatibility, and a new era in multimodal AI development. It closes the gap between open and closed AI models, empowers the AI community with open access, and efficiently utilizes data for superior performance.

GoSearch

GoSearch is an AI-powered Enterprise Search and Resource Discovery platform that enables users to search all internal apps and resources in seconds with the help of AI technology. It offers features like AI workplace assistant, unified knowledge hub, multimodal AI, custom GPTs, and a no-code AI chatbot builder. GoSearch aims to streamline knowledge management and boost productivity by providing instant answers and information discovery through advanced search innovations.

GoSearch

GoSearch is an AI Enterprise Search and AI Agents platform designed to enhance team knowledge management efficiency by providing AI-generated answers and information discovery. It offers features such as unified knowledge hub, multimodal AI, AI agents, no-code AI agent builder, and enterprise data protection. GoSearch helps users search all internal apps and resources in seconds with AI, chat with a personal assistant for instant answers, and create a company knowledge hub for easy information access.

GPT-4o

GPT-4o is a state-of-the-art AI model developed by OpenAI, capable of processing and generating text, audio, and image outputs. It offers enhanced emotion recognition, real-time interaction, multimodal capabilities, improved accessibility, and advanced language capabilities. GPT-4o provides cost-effective and efficient AI solutions with superior vision and audio understanding. It aims to revolutionize human-computer interaction and empower users worldwide with cutting-edge AI technology.

Objective

Objective is an AI-native search platform designed for developers to build modern search experiences for web and mobile applications. It offers a multimodal search API that understands human language, images, and text relationships. The platform integrates various search techniques to provide natural and relevant search results, even with inconsistent data. Objective is trusted by great companies and accelerates data science roadmaps through its efficient search capabilities.

Cartesia

Cartesia is an AI application that offers real-time multimodal intelligence for every device. It aims to build the next generation of AI by providing ubiquitous, interactive intelligence that can run on any device. It features the fastest, ultra-realistic generative voice API and is backed by research on simple linear attention language models and state-space models. The founding team, who met at the Stanford AI Lab, invented State Space Models (SSMs) and scaled them up to achieve state-of-the-art results in various modalities such as text, audio, video, images, and time-series data.

User Evaluation

User Evaluation is an AI-first user research platform that leverages AI technology to provide instant insights, comprehensive reports, and on-demand answers to enhance customer research. The platform offers features such as AI-driven data analysis, multilingual transcription, live timestamped notes, AI reports & presentations, and multimodal AI chat. User Evaluation empowers users to analyze qualitative and quantitative data, synthesize AI-generated recommendations, and ensure data security through encryption protocols. It is designed for design agencies, product managers, founders, and leaders seeking to accelerate innovation and shape exceptional product experiences.

Outspeed

Outspeed is a platform for Realtime Voice and Video AI applications, providing networking and inference infrastructure to build fast, real-time voice and video AI apps. It offers tools for intelligence across industries, including Voice AI, Streaming Avatars, Visual Intelligence, Meeting Copilot, and the ability to build custom multimodal AI solutions. Outspeed is designed by engineers from Google and MIT, offering robust streaming infrastructure, low-latency inference, instant deployment, and enterprise-ready compliance with regulations such as SOC2, GDPR, and HIPAA.

Open GPT 4o

Open GPT 4o is an advanced large multimodal language model developed by OpenAI, offering real-time audiovisual responses, emotion recognition, and superior visual capabilities. It can handle text, audio, and image inputs, providing a rich and interactive user experience. GPT-4o is free for all users and features faster response times, advanced interactivity, and the ability to recognize and output emotions. It is designed to be more powerful and comprehensive than its predecessor, GPT-4, making it suitable for applications requiring voice interaction and multimodal processing.

The Drive AI

The Drive AI is the world's first agentic workspace that allows users to create, share, analyze, and organize thousands of files using natural language and voice commands. It offers features like file intelligence, multimodal actions, secure file sharing, and image analysis. The application replaces traditional file management tools and provides AI-powered writing assistance to enhance productivity and creativity.

1 - Open Source AI Tools

Efficient-Multimodal-LLMs-Survey

Efficient Multimodal Large Language Models: A Survey provides a comprehensive review of efficient and lightweight Multimodal Large Language Models (MLLMs), focusing on model size reduction and cost efficiency for edge computing scenarios. The survey covers the timeline of efficient MLLMs, research on efficient structures and strategies, and their applications, while also discussing current limitations and future directions.

20 - OpenAI GPTs

Wowza Bias Detective

I analyze cognitive biases in scenarios and thoughts, providing neutral, educational insights.

Art Engineer

Analyze and reverse engineer images. Receive style descriptions and image re-creation prompts.

Stock Market Analyst

I read and analyze annual reports of companies. Just upload the annual report PDF and start asking me questions!

Good Design Advisor

As a Good Design Advisor, I provide consultation and advice on design topics and analyze designs that are provided through documents or links. I can also generate visual representations myself to illustrate design concepts.

History Perspectives

I analyze historical events, offering insights from multiple perspectives.

Automated Knowledge Distillation

For strategic knowledge distillation, upload the document you need to analyze and use !start. ENSURE the uploaded file shows DOCUMENT and NOT PDF. This workflow requires leveraging RAG to operate. Only a small number of PDFs are supported; convert to txt or doc. On timeout, refresh and use !continue.

Art Enthusiast

Analyze any uploaded art piece, providing thoughtful insight on the history of the piece and its maker. Replicate art pieces in new styles generated by the user. Be an overall expert in art and help users navigate the art scene. Inform them of different types of art.

Historical Image Analyzer

A tool for historians to analyze and catalog historical images and documents.

Phish or No Phish Trainer

Hone your phishing detection skills! Analyze emails, texts, and calls to spot deception. Become a security pro!

Actor Audition Coach

I analyze audition sides to help actors prepare for in-person and self-taped auditions for TV and film.

Next.js Helper

A Next.js expert ready to analyze code, answer questions, and offer learning plans.

ChainBot

The assistant launched by ChainBot.io can help you analyze EVM transactions, providing blockchain and crypto info.