Best AI Tools to Optimize Model Size

20 AI Tool Sites

Bibit AI

Bibit AI is a real estate marketing AI designed to make real estate marketing and sales more efficient and effective. It helps create property listings, descriptions, and other property content, and offers a range of additional features. Bibit AI bills itself as the world's first AI for real estate, aiming to simplify tasks such as listing creation and content generation.

Uwear.ai

Uwear.ai is an AI tool that lets users create complete, realistic photos of AI-generated fashion models in a few clicks. It turns flat-lay product photos into studio-quality model visuals, eliminating the need for 3D designs, mannequins, or photos of the product already being worn. The platform uses its proprietary AI model to keep the generated garments as close as possible to the input, and it can generate realistic human models of different sizes and ethnicities to help users sell more.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast, efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resource usage, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU usage and a pay-as-you-play pricing model to maximize resource utilization.

IngestAI

IngestAI is a Silicon Valley-based startup that provides a toolbox for data preparation and model selection, powered by proprietary AI algorithms. The company's stated mission is to make AI accessible and affordable for businesses of all sizes. Its platform offers a turnkey service for AI builders who want to optimize AI application development: it identifies the model best suited to a customer's needs, with an emphasis on performance and reliability, and uses Deepmark AI, its proprietary software, to shorten the time needed to identify and deploy the most effective AI solution. IngestAI also offers data preparation services that transform raw structured and unstructured data into high-quality, AI-ready formats so that models receive the best possible input. Beyond implementation, the company fine-tunes AI models to match the nuances of a customer's data and the specific demands of their industry, and it evaluates each AI project for both a successful launch and alignment with the customer's business goals.

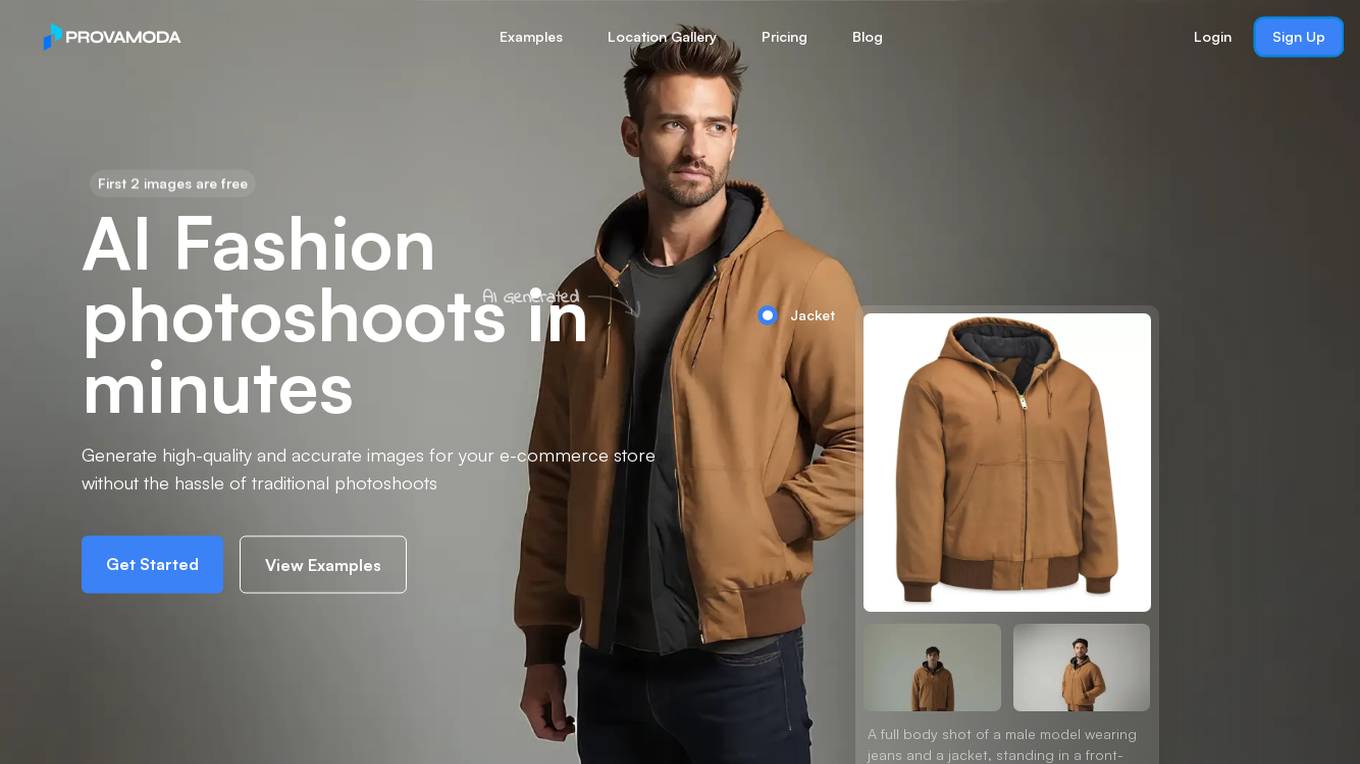

Provamoda

Provamoda is a virtual fashion photoshoot tool for e-commerce businesses. It uses AI to generate high-quality, accurate product images for online stores without traditional photoshoots. Users upload clothing images, select model types and backgrounds, and receive multiple photos for each garment. The tool offers cost-effective, time-efficient, customizable virtual photoshoots for businesses of all sizes. Features include video generation, support for both tops and bottoms, and high-resolution output, helping fashion brands create professional visuals with little effort.

Helix AI

Helix AI is a private GenAI platform for building AI applications on open source models. It offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, with deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, use Helix API tools to connect internal and external APIs, embed Helix Assistants into websites and apps as chatbots, write AI application logic in natural language, and use Helix's RAG system for question answering. Models can also be fine-tuned for domain-specific needs and deployed securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, serving individuals, students, educators, and companies of all sizes.

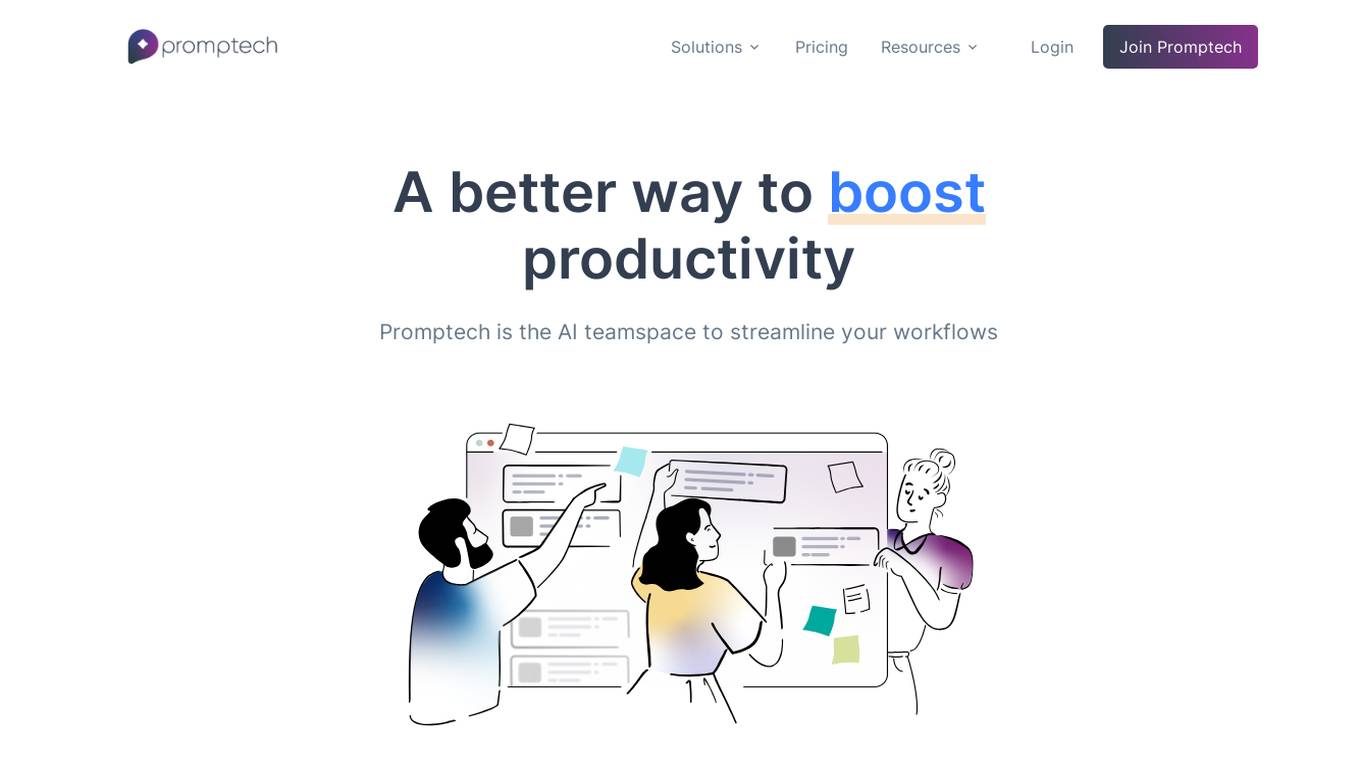

Promptech

Promptech is an AI teamspace designed to streamline workflows and boost productivity. It offers AI assistants, a collaborative teamspace, and access to large language models (LLMs). Promptech is suitable for businesses of all sizes and can be used to streamline tasks, improve collaboration, and safeguard intellectual property (IP). It targets technology leaders and positions itself as a cost-effective AI solution for smaller teams and startups.

Market Brew

Market Brew is an AI SEO software platform that offers advanced SEO modeling services backed by a team of search engineers. The company says it has been trusted by over 60,000 brands since 2006 and helps SEO teams optimize and improve their rankings with precision. The platform uses proprietary search engine models to track algorithm changes, prioritize optimizations, and forecast search rankings. Its software is built on machine learning algorithms and is designed to be user-friendly and scalable for businesses of all sizes.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform for evaluating LLM applications and identifying weaknesses in LLM implementations. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use diff tracking to converge on the best LLM stack. The platform also offers analytics to identify areas of focus, along with A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help teams put LLMs into production with confidence.

Creatus.AI

Creatus.AI is an AI-powered platform that provides tools and services to help businesses boost productivity and transform their workplaces. With over 35 AI models and tools and 90+ business integrations, it offers a broad suite of solutions for businesses of all sizes. Its AI-native workspace and autonomous team members let businesses automate tasks, improve efficiency, and gain insights from their data. Creatus.AI also builds custom AI integrations and solutions tailored to a business's specific needs.

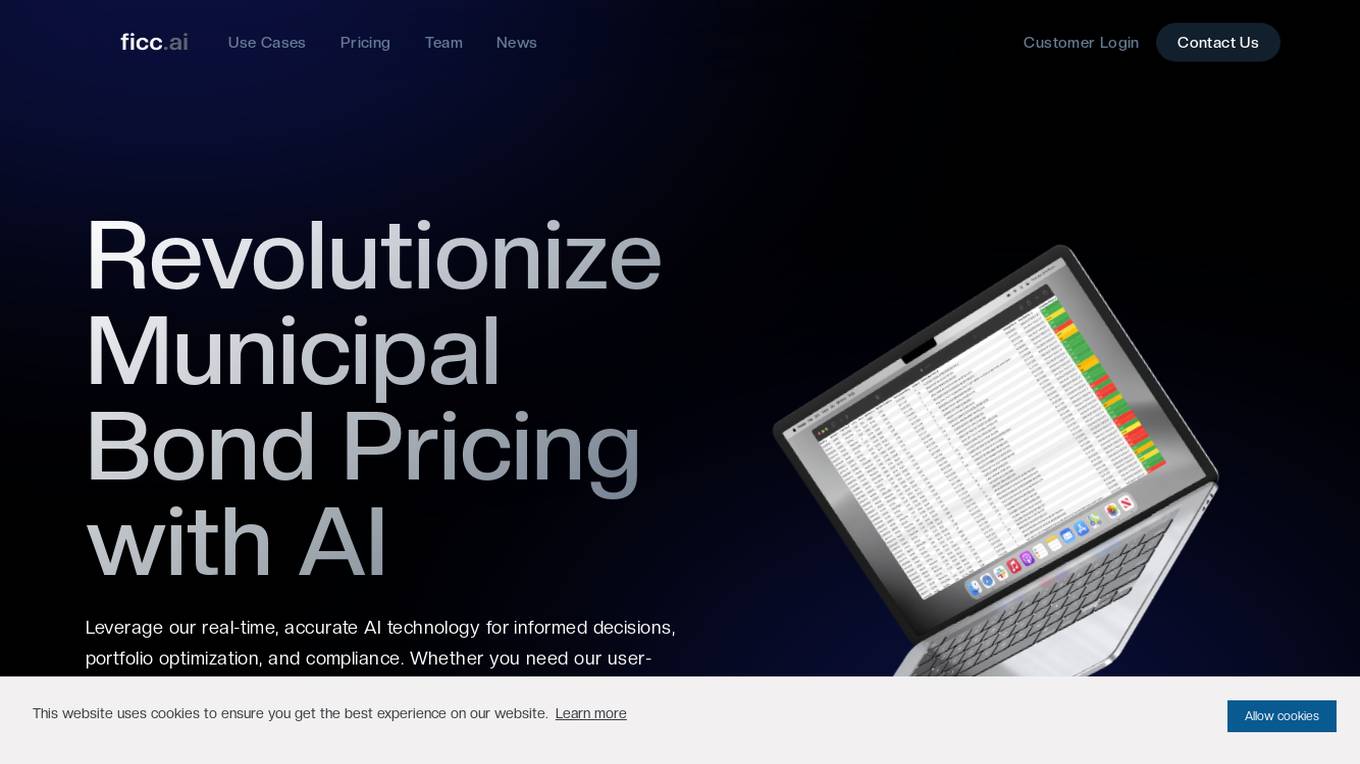

ficc.ai

ficc.ai is an AI application for municipal bond pricing that provides real-time, accurate prices to support informed decisions, portfolio optimization, and compliance. The platform offers a web app, direct API access, and integration with existing software or vendors. ficc.ai uses AI models developed in-house by market experts and scientists to price bonds based on trade size, producing output for trading decisions, investment allocations, and compliance oversight.

Notification Harbor

Notification Harbor is an email marketing platform that uses Large Language Models (LLMs) to help businesses create and send more effective email campaigns. With Notification Harbor, businesses can use LLMs to generate personalized email content, optimize subject lines, and even create entire email campaigns from scratch. Notification Harbor is designed to make email marketing easier and more effective for businesses of all sizes.

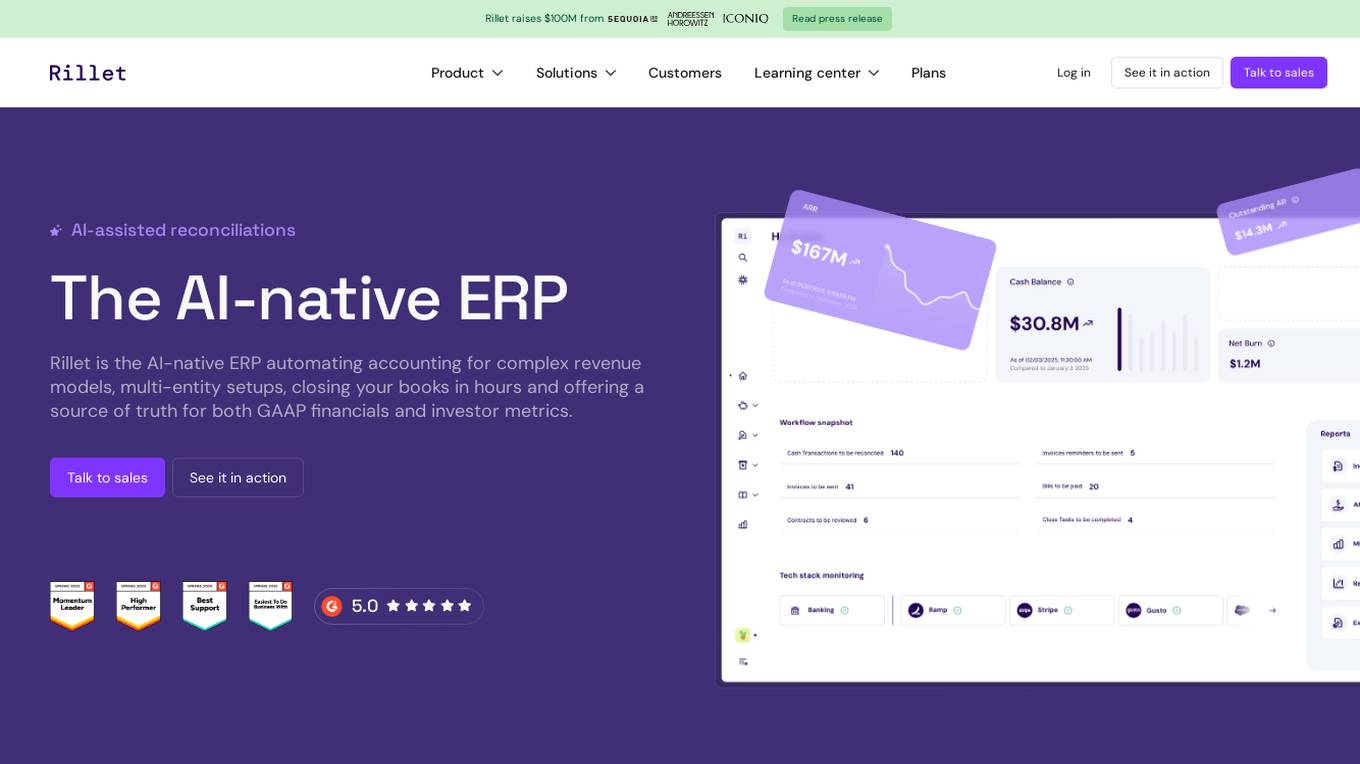

Rillet

Rillet is an AI-native ERP application that automates accounting for complex revenue models, multi-entity setups, and the monthly book close. It offers an automated general ledger, accounts receivable, accounts payable, bank reconciliation, and flexible GAAP reporting. Rillet is designed to be a single source of truth for both GAAP financials and investor metrics, with advanced functionality such as multi-entity consolidation and AI-powered accounting. It serves a range of industries and company sizes, with tailored solutions to streamline financial operations and improve efficiency.

Assembo.ai

Assembo.ai is an AI-powered tool for e-commerce product photography and video. It lets users create professional product photos and videos in minutes, optimizing visuals for popular e-commerce and social media platforms. With features such as AI-generated models, animated product videos, and customizable backgrounds, Assembo.ai aims to change how product photography is done, saving time and money for businesses of all sizes.

Google Gemma

Google Gemma is a family of lightweight, state-of-the-art open language models (LLMs) developed by Google, built from the same research and technology used to create Google's Gemini models. Gemma comes in two sizes, 2B and 7B parameters, each with a base (pre-trained) and an instruction-tuned variant. The models are designed to run across devices and are optimized for Google Cloud and NVIDIA GPUs. They are available through Kaggle, Hugging Face, and Google Cloud via Vertex AI or GKE. Gemma can be used for a range of applications, including text generation, summarization, and RAG, and is available for both commercial and research use.

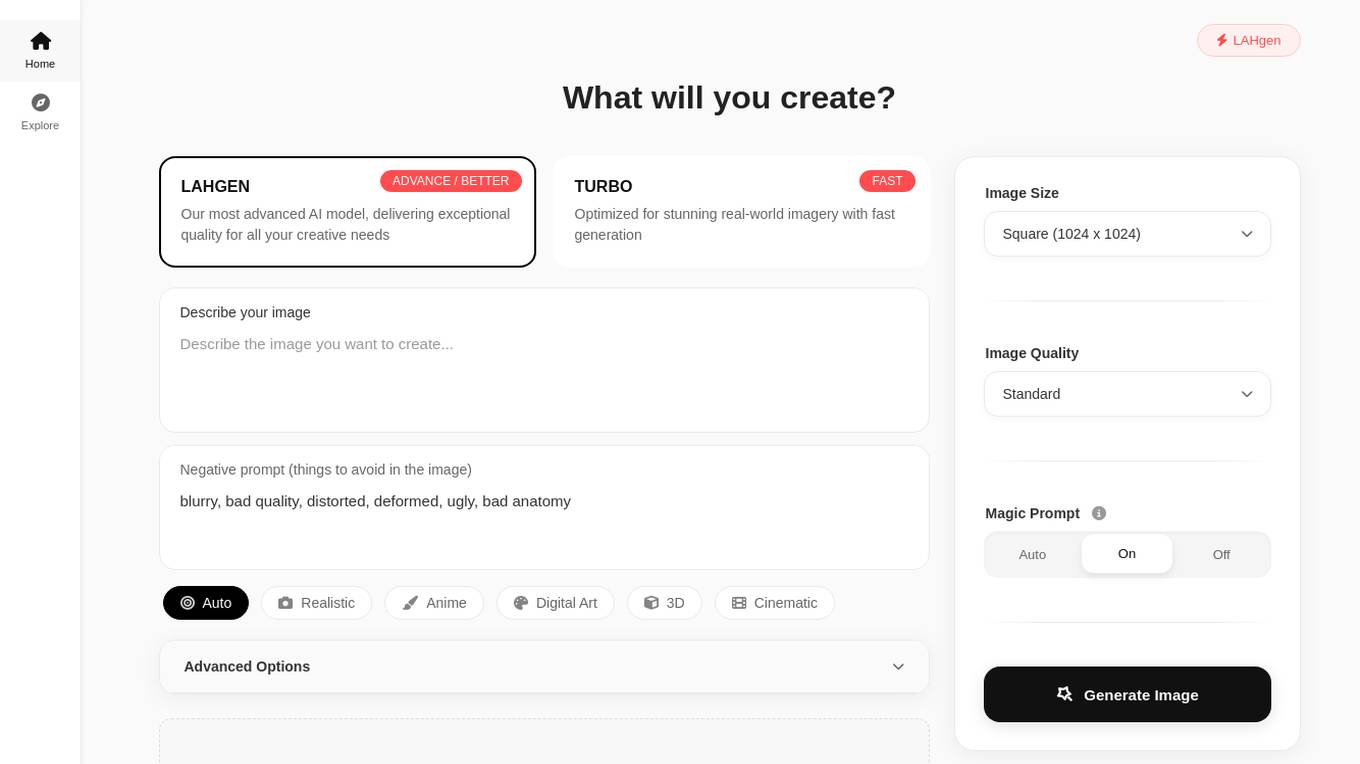

LAHgen

LAHgen is a free, unlimited text-to-image generator that requires no login. It uses an advanced AI model to create high-quality images for a variety of creative purposes. Users enter prompts and choose from a range of image sizes. The tool is optimized for realistic, anime, digital art, and 3D cinematic styles, with advanced customization options.

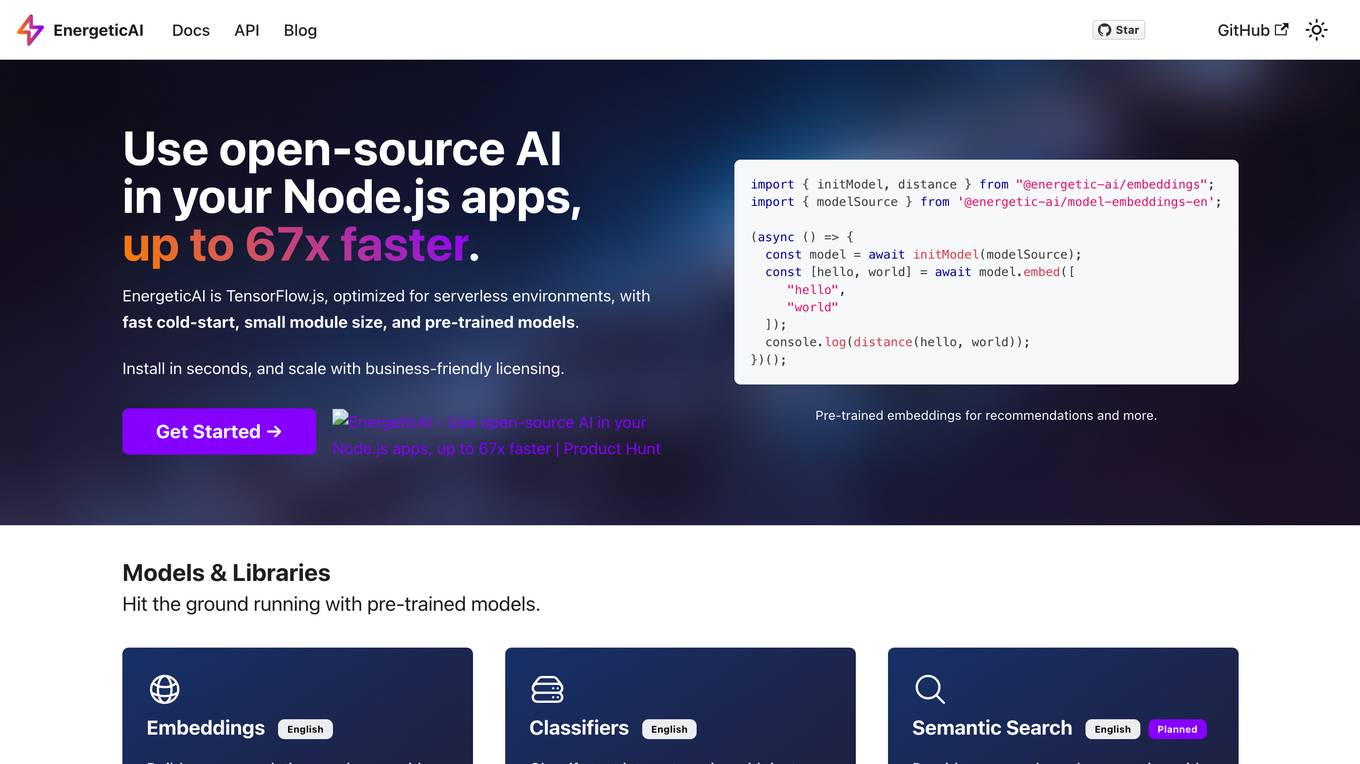

EnergeticAI

EnergeticAI is an open-source AI library that can be used in Node.js applications. It is optimized for serverless environments and provides fast cold-start, small module size, and pre-trained models. EnergeticAI can be used for a variety of tasks, including building recommendations, classifying text, and performing semantic search.

Valohai

Valohai is a scalable MLOps platform that enables Continuous Integration/Continuous Deployment (CI/CD) and pipeline automation for machine learning, on-premises and across cloud environments. It streamlines complex ML workflows with framework-agnostic tooling, automatic versioning with complete lineage of ML experiments, hybrid and multi-cloud support, scalability and performance optimization, collaboration among data scientists, IT, and business units, and orchestration of ML workloads on any infrastructure. Valohai also provides a knowledge repository for storing and sharing the entire model lifecycle, which supports cross-functional collaboration and lets developers build with any libraries or frameworks.

SambaNova Systems

SambaNova Systems offers an enterprise-grade, full stack AI platform purpose-built for generative AI, spanning everything from chips to models; the company describes it as the only platform of its kind for generative AI in the enterprise. It provides state-of-the-art AI and deep learning capabilities to help customers outcompete their peers. The platform includes the SN40L Full Stack Platform with 1T+ parameter models, Composition of Experts, and Samba Apps. SambaNova also offers resources to accelerate AI adoption and solutions for industries such as financial services, healthcare, and manufacturing.

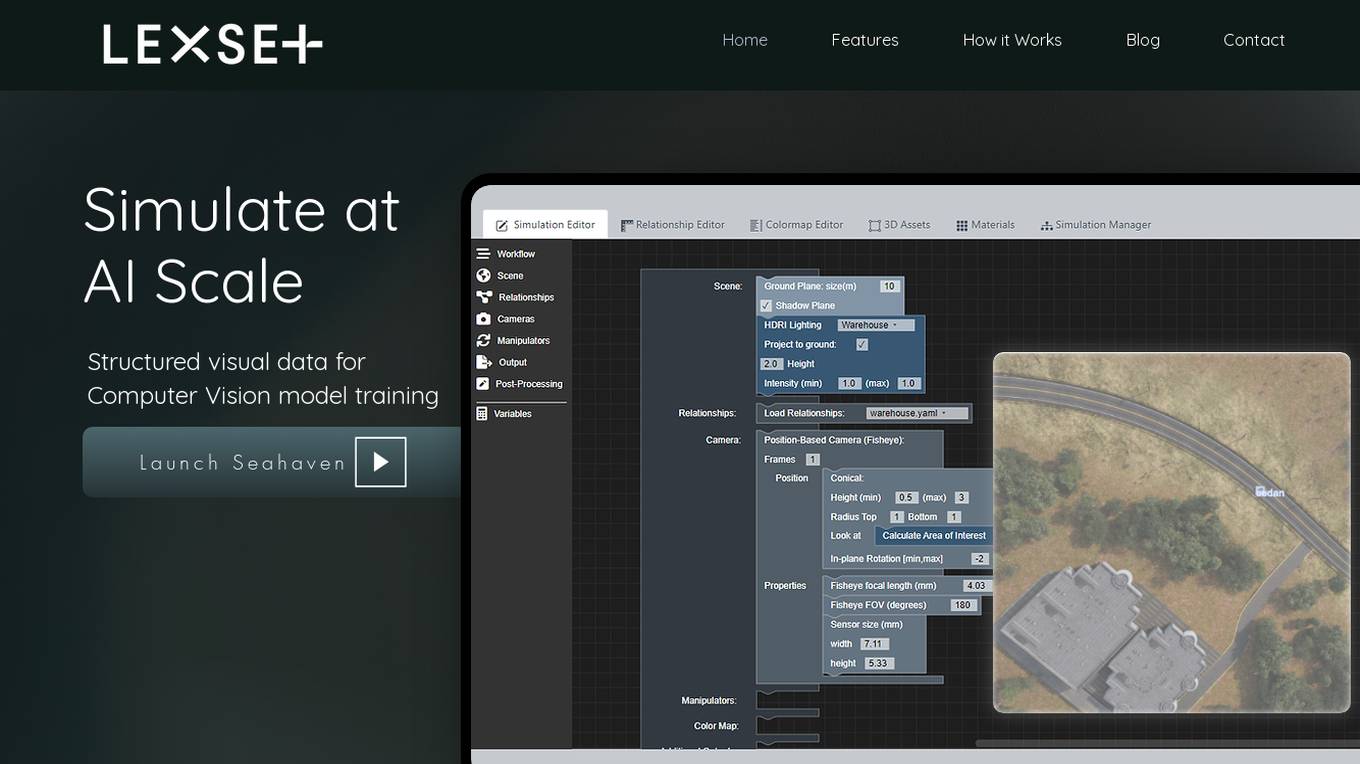

Lexset

Lexset is an AI tool that generates synthetic data for training computer vision models. It offers a no-code interface for creating unlimited data, with advanced camera and lighting controls. Users can simulate AI-scale environments, composite objects into images, and build custom 3D scenarios. Lexset also provides access to GPU nodes, dedicated support, and help with feature development. The tool aims to improve object detection accuracy and model generalization through training on high-quality synthetic data.

1 Open Source AI Tool

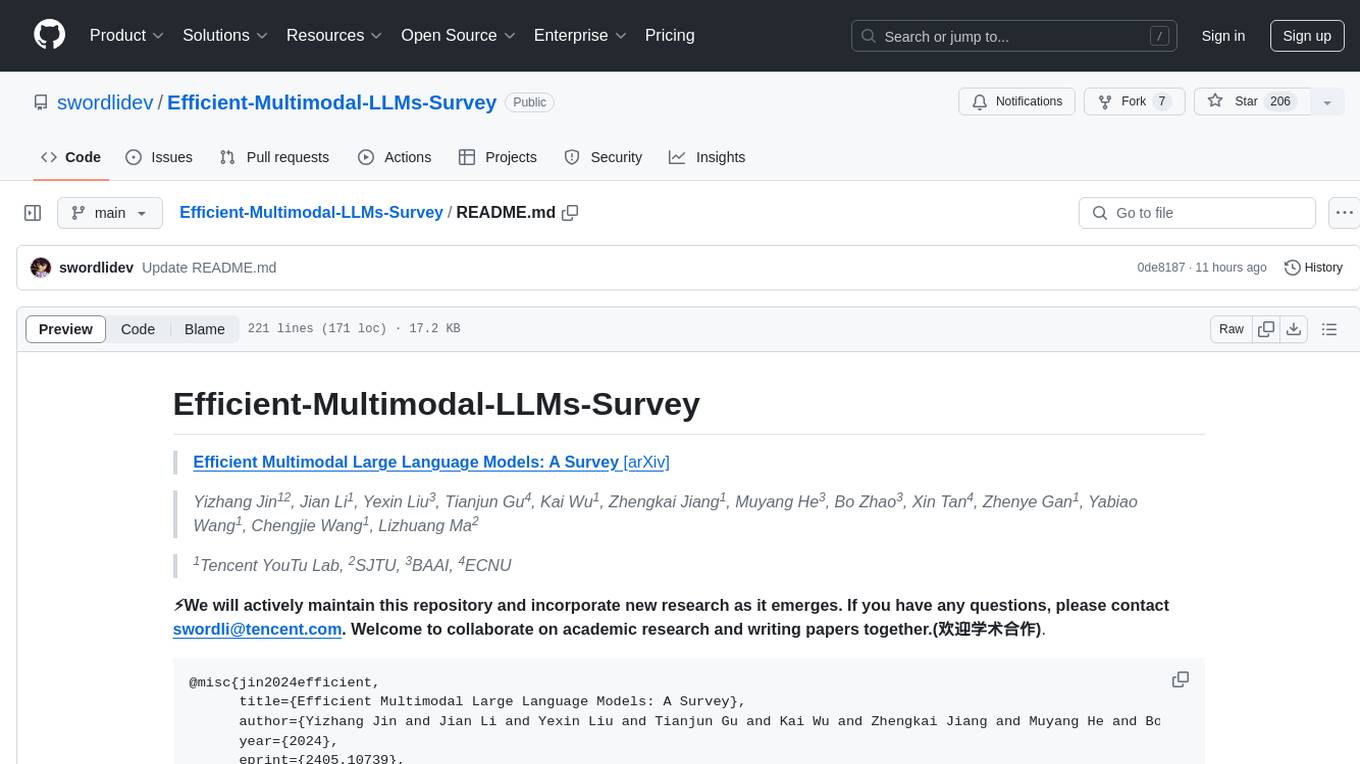

Efficient-Multimodal-LLMs-Survey

Efficient Multimodal Large Language Models: A Survey provides a comprehensive review of efficient and lightweight Multimodal Large Language Models (MLLMs), focusing on model size reduction and cost efficiency for edge computing scenarios. The survey covers the timeline of efficient MLLMs, research on efficient structures and strategies, and their applications, while also discussing current limitations and future directions.

20 OpenAI GPTs

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM agents for your system prompts. You can also specify a model size, such as 3B, 33B, or 70B.

Back Propagation

I'm Back Propagation, here to help you understand and apply backpropagation techniques to your AI models.

Modelos de Negocios GPT

A step-by-step guide to creating and improving business models using the Business Model Canvas methodology.

Shell Mentor

An AI GPT model designed to assist with Shell/Bash programming, providing real-time code suggestions, debugging tips, and script optimization for efficient command-line operations.

Octorate Code Companion

I help developers understand and use APIs, referencing a YAML model.

Apple CoreData Complete Code Expert

A detailed expert trained on all 5,588 pages of Apple's Core Data documentation, offering complete coding solutions.

CAE Simulation Assistant

Providing the most comprehensive, cutting-edge, and detailed technical guidance on the latest international CAE simulation technology (HyperMesh, THESEUS-FE, ANSA, STAR-CCM+, Amesim, Ncode, Adams, Abaqus).