cntext

Text analysis package supporting multiple methods, including word count, readability, document similarity, sentiment analysis, Word2Vec/GloVe, and Large Language Models (LLMs).

Stars: 329

cntext is a text analysis package that provides semantic distance and semantic projection based on word embedding models. Additionally, cntext offers traditional methods such as word count statistics, readability, document similarity, sentiment analysis, etc. It includes modules for text statistics, sentiment analysis, dictionary construction, similarity calculations, and text-to-mind cognitive analysis.

README:


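cntext is a text analysis package that provides semantic distance and semantic projection based on word embedding models. It also offers traditional methods such as word count, readability, document similarity, and sentiment analysis.

According to pepy statistics, as of 2025-03-19 the cumulative download count is 69,403. cntext currently comes in two editions:

- Public cntext 1.x: free, updated to 1.9.2.

- Non-public cntext 2.x: paid, updated to 2.1.4. If you are interested, read the blog post: 文本分析库cntext2.x使用手册 (cntext 2.x user manual)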

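- [x] stats: text statistics

  - [x] word frequency

  - [x] readability

  - [x] built-in pkl dictionaries

  - [x] sentiment analysis

- [x] dictionary: build word lists (dictionaries)

  - [x] Sopmi: dictionary expansion via mutual information

  - [x] W2Vmodels: dictionary expansion via word vectors

  - [x] Glove: GloVe word-vector model

- [x] similarity: text similarity

  - [x] cosine similarity

  - [x] Jaccard similarity

  - [x] edit-distance similarity

- [x] mind.py: measure cognitive direction (attitude, bias) in text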

pip install cntext

import cntext as ct

help(ct)

Help on package cntext:

NAME

cntext

PACKAGE CONTENTS

mind

dictionary

similarity

stats

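stats currently provides the following built-in functions:

- readability: text readability

- term_freq: word frequency

- dict_pkl_list: list cntext's built-in dictionaries (pkl format)

- load_pkl_dict: load a pkl dictionary file

- sentiment: sentiment analysis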

import cntext as ct

text = '如何看待一网文作者被黑客大佬盗号改文,因万分惭愧而停更。'

ct.term_freq(text, lang='chinese')

Counter({'看待': 1,

'网文': 1,

'作者': 1,

'黑客': 1,

'大佬': 1,

'盗号': 1,

'改文': 1,

'因': 1,

'万分': 1,

'惭愧': 1,

'停': 1})

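Text readability: the larger the metric, the more complex the text and the poorer its readability.

readability(text, lang='chinese')

- text: the text string

- lang: language, "chinese" or "english"; default "chinese"

The **Chinese readability** algorithm follows

徐巍,姚振晔,陈冬华.中文年报可读性:衡量与检验[J].会计研究,2021(03):28-44.

- readability1: average number of characters per clause

- readability2: proportion of adverbs and conjunctions in each sentence

- readability3: modeled on the Fog Index, readability3=(readability1+readability2)×0.5

For all three metrics, a larger value indicates more complex text and poorer readability.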
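The readability3 formula can be checked by hand. The sketch below only restates the arithmetic; the helper name `readability3` is hypothetical and is not part of cntext's API. The inputs are the readability1 and readability2 values from the first example in this section.

```python
def readability3(readability1: float, readability2: float) -> float:
    """Combine the two component scores: (readability1 + readability2) x 0.5."""
    return (readability1 + readability2) * 0.5


# Component values taken from the ct.readability example in this section.
score = readability3(28.0, 0.15789473684210525)
print(round(score, 4))  # 14.0789
```

The result agrees with the readability3 value reported in that example.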

text1 = '如何看待一网文作者被黑客大佬盗号改文,因万分惭愧而停更。'

ct.readability(text1, lang='chinese')

{'readability1': 28.0,

'readability2': 0.15789473684210525,

'readability3': 14.078947368421053}

Changing the punctuation in a sentence affects the result.

text2 = '如何看待一网文作者被黑客大佬盗号改文,因万分惭愧而停更。'

ct.readability(text2, lang='chinese')

{'readability1': 27.0,

'readability2': 0.16666666666666666,

'readability3': 13.583333333333334}

Word frequency function; returns a Counter.

import cntext as ct

text = '如何看待一网文作者被黑客大佬盗号改文,因万分惭愧而停更。'

ct.term_freq(text, lang='chinese')

Counter({'看待': 1,

'网文': 1,

'作者': 1,

'黑客': 1,

'大佬': 1,

'盗号': 1,

'改文因': 1,

'万分': 1,

'惭愧': 1,

'停': 1})
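To make the return type concrete, here is a minimal standard-library sketch of word counting with `collections.Counter`. It is not cntext's implementation: the function name `term_freq_sketch` is hypothetical, it handles only English text with a simple regex tokenizer, and unlike the output above it does no Chinese word segmentation or stopword removal.

```python
import re
from collections import Counter


def term_freq_sketch(text: str) -> Counter:
    """Count lowercase word tokens in an English string (no stopword removal)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)


freq = term_freq_sketch("I go to school every day by bus. I go home by bus.")
print(freq["bus"], freq["school"])  # 2 1
```

Because the result is a Counter, the usual methods such as `most_common()` are available on it.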

List cntext's built-in dictionaries (pkl format).

import cntext as ct

# List cntext's built-in dictionaries (pkl format)

ct.dict_pkl_list()

['DUTIR.pkl',

'HOWNET.pkl',

'sentiws.pkl',

'Chinese_Digitalization.pkl',

'ChineseFinancialFormalUnformalSentiment.pkl',

'Concreteness.pkl',

'ANEW.pkl',

'LSD2015.pkl',

'NRC.pkl',

'ChineseEmoBank.pkl',

'geninqposneg.pkl',

'HuLiu.pkl',

'AFINN.pkl',

'ADV_CONJ.pkl',

'Loughran_McDonald_Financial_Sentiment.pkl',

'Chinese_Loughran_McDonald_Financial_Sentiment.pkl',

'STOPWORDS.pkl']

Dictionary reference. Some of the sentiment dictionary material is compiled from quanteda.sentiment.

| pkl file | Dictionary | Language | Function |
|---|---|---|---|
| ChineseEmoBank.pkl | Chinese emotion dictionary with valence and arousal. cntext uses only the CVAW word list (single words); the other lists (CVAP, CVAS, CVAT) are not included in ChineseEmoBank.pkl. | Chinese | valence and arousal |
| DUTIR.pkl | Dalian University of Technology affective lexicon ontology | Chinese | seven emotion categories: 哀 (sadness), 好 (good), 惊 (surprise), 惧 (fear), 乐 (joy), 怒 (anger), 恶 (disgust) |
| HOWNET.pkl | HowNet dictionary | Chinese | positive words, negative words |
| SentiWS.pkl | SentimentWortschatz (SentiWS) | German | positive words, negative words |
| ChineseFinancialFormalUnformalSentiment.pkl | Financial domain, formal and informal; positive and negative | Chinese | formal-pos, formal-neg; unformal-pos, unformal-neg |
| ANEW.pkl | Affective Norms for English Words (ANEW) | English | pleasure, arousal, dominance |
| LSD2015.pkl | Lexicoder Sentiment Dictionary (2015) | English | positive words, negative words |
| NRC.pkl | NRC Word-Emotion Association Lexicon | English | fine-grained emotion words |
| geninqposneg.pkl | | | |
| HuLiu.pkl | Hu & Liu (2004) positive and negative sentiment dictionary | English | positive words, negative words |
| AFINN.pkl | Nielsen's (2011) "new ANEW" valence word list | English | valence |
| ADV_CONJ.pkl | Adverbs and conjunctions | Chinese | |
| STOPWORDS.pkl | Stopwords | Chinese, English | |
| Concreteness.pkl | Brysbaert, M., Warriner, A. B., & Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behavior Research Methods, 46, 904–911 | English | word and concreteness score |
| Chinese_Loughran_McDonald_Financial_Sentiment.pkl | 曾庆生, 周波, 张程, and 陈信元. "年报语调与内部人交易: 表里如一还是口是心非?." 管理世界 34, no. 09 (2018): 143-160. | Chinese | positive words, negative words |
| Chinese_Digitalization.pkl | 吴非,胡慧芷,林慧妍,任晓怡. 企业数字化转型与资本市场表现——来自股票流动性的经验证据[J]. 管理世界,2021,37(07):130-144+10. | Chinese | Chinese digitalization dictionary built from this paper, with keyword lists for artificial intelligence technology, big data technology, cloud computing technology, blockchain technology, and applications of digital technology |
| Loughran_McDonald_Financial_Sentiment.pkl | Loughran, Tim, and Bill McDonald. "When is a liability not a liability? Textual analysis, dictionaries, and 10‐Ks." The Journal of finance 66, no. 1 (2011): 35-65. | English | 2018 version of the LM financial sentiment dictionary, with seven word lists: Negative, Positive, Uncertainty, Litigious, StrongModal, WeakModal, Constraining |
| Chinese_FLS.pkl | 许帅,邵帅,何贤杰.业绩说明会前瞻性信息对分析师盈余预测准确性的影响——信口雌黄还是言而有征[J].中国管理科学:1-15. | Chinese | forward-looking word set with 174 words |

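- If you publish a paper that uses the DUTIR dictionary for sentiment analysis, please add a statement such as "使用了大连理工大学信息检索研究室的情感词汇本体" (the affective lexicon ontology of the Information Retrieval Laboratory at Dalian University of Technology was used), and add this reference: "徐琳宏,林鸿飞,潘宇,等.情感词汇本体的构造[J]. 情报学报, 2008, 27(2): 180-185."

- If you have built a dictionary of your own, you can upload it to Baidu Netdisk and leave the link in an issue. If the dictionary requires a usage statement, include it in the issue together with the source reference.

Load a pkl dictionary file; returns dict-style data.

import cntext as ct

print(ct.__version__)

# Load a pkl dictionary file

print(ct.load_pkl_dict('DUTIR.pkl'))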

1.8.0

{'DUTIR': {'哀': ['怀想', '治丝而棼', '伤害',...],

'好': ['进贤黜奸', '清醇', '放达', ...],

'惊': ['惊奇不已', '魂惊魄惕', '海外奇谈',...],

'惧': ['忸忸怩怩', '谈虎色变', '手忙脚乱',...],

'乐': ['神采', '喜人', '如意',...],

'怒': ['饮恨吞声', '扬眉瞬目',...],

'恶': ['出逃', '鱼肉百姓', '移天易日',...]},

'Desc': '大连理工大学情感本体库,细粒度情感词典。含七大类情绪,依次是哀, 好, 惊, 惧, 乐, 怒, 恶',

'Referer': '徐琳宏,林鸿飞,潘宇,等.情感词汇本体的构造[J]. 情报学报, 2008, 27(2): 180-185.'}

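sentiment(text, diction, lang='chinese') performs sentiment analysis with a custom dictionary by counting how often the words of each category occur. It does not account for the more complex effects of intensity adverbs and negation words.

- text: the text to analyze

- diction: dictionary of sentiment words

- lang: language, "chinese" or "english"; default "chinese"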

import cntext as ct

text = '我今天得奖了,很高兴,我要将快乐分享大家。'

ct.sentiment(text=text,

diction=ct.load_pkl_dict('DUTIR.pkl')['DUTIR'],

lang='chinese')

{'哀_num': 0,

'好_num': 0,

'惊_num': 0,

'惧_num': 0,

'乐_num': 2,

'怒_num': 0,

'恶_num': 0,

'stopword_num': 8,

'word_num': 14,

'sentence_num': 1}

If you do not use a pkl dictionary, you can define your own, for example

diction = {'pos': ['高兴', '快乐', '分享'],

'neg': ['难过', '悲伤'],

'adv': ['很', '特别']}

text = '我今天得奖了,很高兴,我要将快乐分享大家。'

ct.sentiment(text=text,

diction=diction,

lang='chinese')

{'pos_num': 3,

'neg_num': 0,

'adv_num': 1,

'stopword_num': 8,

'word_num': 14,

'sentence_num': 1}

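By default the sentiment function gives every sentiment word a weight of 1, so the sentiment score of a text is obtained simply by counting its sentiment words.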
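The counting idea can be sketched in a few lines. This is an illustration under stated assumptions, not cntext's code: `count_categories` is a hypothetical helper, and the token list is a hand-made segmentation of the example sentence (cntext segments the text itself and also reports stopword, word, and sentence counts, which are omitted here).

```python
from collections import Counter


def count_categories(words, diction):
    """Count how many tokens fall into each category of a custom dictionary."""
    counts = Counter(words)
    return {f"{label}_num": sum(counts[w] for w in set(vocab))
            for label, vocab in diction.items()}


diction = {'pos': ['高兴', '快乐', '分享'],
           'neg': ['难过', '悲伤'],
           'adv': ['很', '特别']}

# Hand-segmented tokens (an assumption; a real pipeline would use a segmenter).
words = ['我', '今天', '得奖', '了', '很', '高兴', '我', '要', '将', '快乐', '分享', '大家']

print(count_categories(words, diction))
# {'pos_num': 3, 'neg_num': 0, 'adv_num': 1}
```

Every matched word contributes exactly 1, which is the equal-weight assumption described above.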

sentiment_by_valence(text, diction, lang='english') takes the valence of each word into account.

- text: input text

- diction: dictionary with valence values, as a DataFrame

- lang: language, 'chinese' or 'english'; default 'english'

Here we use text concreteness as an example. concreteness.pkl is compiled from Brysbaert et al. (2014):

Brysbaert, M., Warriner, A. B., & Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behavior Research Methods, 46, 904–911

import cntext as ct

# load the concreteness.pkl dictionary file

concreteness_df = ct.load_pkl_dict('concreteness.pkl')

concreteness_df.head()

|   | word | valence |
|---|---|---|
| 0 | roadsweeper | 4.85 |
| 1 | traindriver | 4.54 |
| 2 | tush | 4.45 |
| 3 | hairdress | 3.93 |
| 4 | pharmaceutics | 3.77 |

First, the concreteness score of a single text:

reply = "I'll go look for that"

score=ct.sentiment_by_valence(text=reply,

diction=concreteness_df,

lang='english')

score

1.85

Concreteness scores for several texts:

employee_replys = ["I'll go look for that",

"I'll go search for that",

"I'll go search for that top",

"I'll go search for that t-shirt",

"I'll go look for that t-shirt in grey",

"I'll go search for that t-shirt in grey"]

for idx, reply in enumerate(employee_replys):

    # Score each reply against the concreteness dictionary

    score=ct.sentiment_by_valence(text=reply,

                                  diction=concreteness_df,

                                  lang='english')

    template = "Concreteness Score: {score:.2f} | Example-{idx}: {example}"

    print(template.format(score=score,

                          idx=idx,

                          example=reply))

Concreteness Score: 1.55 | Example-0: I'll go look for that

Concreteness Score: 1.55 | Example-1: I'll go search for that

Concreteness Score: 1.89 | Example-2: I'll go search for that top

Concreteness Score: 2.04 | Example-3: I'll go search for that t-shirt

Concreteness Score: 2.37 | Example-4: I'll go look for that t-shirt in grey

Concreteness Score: 2.37 | Example-5: I'll go search for that t-shirt in grey
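How a valence-weighted score differs from plain counting can be shown with a small sketch. Everything here is an assumption for illustration: the README does not state the exact formula used by `sentiment_by_valence`, so the sketch simply averages valence over all tokens, the helper `valence_score` is hypothetical, and the valence values are made up rather than taken from Concreteness.pkl.

```python
def valence_score(tokens, valence):
    """Average valence over all tokens; words missing from the lexicon add 0."""
    if not tokens:
        return 0.0
    return sum(valence.get(t, 0.0) for t in tokens) / len(tokens)


# Made-up valence values for illustration only (not from Concreteness.pkl).
toy_valence = {"look": 2.0, "shirt": 5.0}

print(valence_score(["go", "look", "for", "that", "shirt"], toy_valence))  # 1.4
```

Adding a highly concrete word such as "shirt" raises the score, which mirrors the pattern in the outputs above, where replies mentioning "t-shirt" score higher.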

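This module builds word lists (dictionaries). It includes

- SoPmi: expand a word list (dictionary) via co-occurrence

- W2VModels: expand a word list (dictionary) via word2vec word vectors

import cntext as ct

import os

sopmier = ct.SoPmi(cwd=os.getcwd(),

input_txt_file='data/sopmi_corpus.txt', # raw data: your corpus

seedword_txt_file='data/sopmi_seed_words.txt', # manually labeled initial seed words

)

sopmier.sopmi()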

Step 1/4:...Preprocess Corpus ...

Step 2/4:...Collect co-occurrency information ...

Step 3/4:...Calculate mutual information ...

Step 4/4:...Save candidate words ...

Finish! used 44.49 s
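For readers unfamiliar with the idea behind steps 2 and 3 of the log, the sketch below computes pointwise mutual information (PMI) from document-level co-occurrence and the SO-PMI difference between positive and negative seed words. It is a generic textbook-style sketch, not cntext's SoPmi implementation; the function names, the toy corpus, and the choice to score unseen pairs as 0 are all assumptions.

```python
import math
from collections import Counter
from itertools import combinations


def pmi_table(docs):
    """Return a function pmi(a, b) based on document-level co-occurrence."""
    n = len(docs)
    word_df = Counter()   # number of documents containing each word
    pair_df = Counter()   # number of documents containing each word pair
    for doc in docs:
        vocab = set(doc)
        word_df.update(vocab)
        pair_df.update(frozenset(p) for p in combinations(sorted(vocab), 2))

    def pmi(a, b):
        joint = pair_df[frozenset((a, b))]
        if joint == 0:
            return 0.0  # simplification: unseen pairs contribute nothing
        return math.log2(joint * n / (word_df[a] * word_df[b]))

    return pmi


def so_pmi(word, pos_seeds, neg_seeds, pmi):
    """Association with positive seeds minus association with negative seeds."""
    return sum(pmi(word, s) for s in pos_seeds) - sum(pmi(word, s) for s in neg_seeds)


# Toy tokenized corpus, made up for illustration.
docs = [["great", "good", "service"],
        ["great", "good", "food"],
        ["awful", "bad", "service"],
        ["awful", "bad", "food"]]
pmi = pmi_table(docs)
print(so_pmi("great", ["good"], ["bad"], pmi))  # 1.0
print(so_pmi("awful", ["good"], ["bad"], pmi))  # -1.0
```

Candidate words with a strongly positive or negative score are the ones a human would then review before adding them to the dictionary.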

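Note that the lang parameter must be set.

import cntext as ct

import os

# Initialize the model; the lang parameter is required.

model = ct.W2VModels(cwd=os.getcwd(),

lang='english') # corpus data: w2v_corpus.txt

model.train(input_txt_file='data/w2v_corpus.txt')

# For each category of seed words, find the 100 most similar words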

model.find(seedword_txt_file='data/w2v_seeds/integrity.txt',

topn=100)

model.find(seedword_txt_file='data/w2v_seeds/innovation.txt',

topn=100)

model.find(seedword_txt_file='data/w2v_seeds/quality.txt',

topn=100)

model.find(seedword_txt_file='data/w2v_seeds/respect.txt',

topn=100)

model.find(seedword_txt_file='data/w2v_seeds/teamwork.txt',

topn=100)

Step 1/4:...Preprocess corpus ...

Step 2/4:...Train word2vec model

used 174 s

Step 3/4:...Prepare similar candidates for each seed word in the word2vec model...

Step 4/4 Finish! Used 187 s

Step 3/4:...Prepare similar candidates for each seed word in the word2vec model...

Step 4/4 Finish! Used 187 s

Step 3/4:...Prepare similar candidates for each seed word in the word2vec model...

Step 4/4 Finish! Used 187 s

Step 3/4:...Prepare similar candidates for each seed word in the word2vec model...

Step 4/4 Finish! Used 187 s

Step 3/4:...Prepare similar candidates for each seed word in the word2vec model...

Step 4/4 Finish! Used 187 s

The trained w2v model can be reused later.

from gensim.models import KeyedVectors

w2v_model = KeyedVectors.load(w2v_model_path)  # w2v_model_path: path to the saved w2v.model file

# Look up the vector of a word

# w2v_model.get_vector(word)

# For more w2v_model methods, see

# help(w2v_model)

For example, the code above writes its model to output/w2v_candi_words/w2v.model:

from gensim.models import KeyedVectors

w2v_model = KeyedVectors.load('output/w2v_candi_words/w2v.model')

w2v_model.most_similar('innovation')

[('technology', 0.689210832118988),

('infrastructure', 0.669672966003418),

('resources', 0.6695448160171509),

('talent', 0.6627111434936523),

('execution', 0.6549549102783203),

('marketing', 0.6533523797988892),

('merchandising', 0.6504817008972168),

('diversification', 0.6479553580284119),

('expertise', 0.6446896195411682),

('digital', 0.6326863765716553)]

# Get the word vector

w2v_model.get_vector('innovation')

array([-0.45616838, -0.7799563 , 0.56367606, -0.8570078 , 0.600359 ,

-0.6588043 , 0.31116748, -0.11956959, -0.47599426, 0.21840936,

-0.02268819, 0.1832016 , 0.24452794, 0.01084935, -1.4213187 ,

0.22840202, 0.46387577, 1.198386 , -0.621511 , -0.51598716,

0.13352732, 0.04140598, -0.23470387, 0.6402956 , 0.20394802,

0.10799981, 0.24908689, -1.0117126 , -2.3168423 , -0.0402851 ,

1.6886286 , 0.5357047 , 0.22932841, -0.6094084 , 0.4515793 ,

-0.5900931 , 1.8684244 , -0.21056202, 0.29313338, -0.221067 ,

-0.9535679 , 0.07325 , -0.15823542, 1.1477109 , 0.6716076 ,

-1.0096023 , 0.10605699, 1.4148282 , 0.24576302, 0.5740349 ,

0.19984631, 0.53964925, 0.41962907, 0.41497853, -1.0322098 ,

0.01090925, 0.54345983, 0.806317 , 0.31737605, -0.7965337 ,

0.9282971 , -0.8775608 , -0.26852605, -0.06743863, 0.42815775,

-0.11774074, -0.17956367, 0.88813037, -0.46279573, -1.0841943 ,

-0.06798118, 0.4493006 , 0.71962464, -0.02876493, 1.0282255 ,

-1.1993176 , -0.38734904, -0.15875885, -0.81085825, -0.07678922,

-0.16753489, 0.14065655, -1.8609751 , 0.03587054, 1.2792674 ,

1.2732009 , -0.74120265, -0.98000383, 0.4521185 , -0.26387128,

0.37045383, 0.3680011 , 0.7197629 , -0.3570571 , 0.8016917 ,

0.39243212, -0.5027844 , -1.2106236 , 0.6412354 , -0.878307 ],

dtype=float32)

Word co-occurrence matrix

import cntext as ct

documents = ["I go to school every day by bus .",

"i go to theatre every night by bus"]

ct.co_occurrence_matrix(documents,

window_size=2,

lang='english')

documents2 = ["编程很好玩",

"Python是最好学的编程"]

ct.co_occurrence_matrix(documents2,

window_size=2,

lang='chinese')

Build a GloVe word embedding model, using the English data in data/brown_corpus.txt

import cntext as ct

import cntext as ct

import os

model = ct.Glove(cwd=os.getcwd(), lang='english')

model.create_vocab(file='data/brown_corpus.txt', min_count=5)

model.cooccurrence_matrix()

model.train_embeddings(vector_size=50, max_iter=25)

model.save()

Step 1/4: ...Create vocabulary for Glove.

Step 2/4: ...Create cooccurrence matrix.

Step 3/4: ...Train glove embeddings.

Note, this part takes a long time to run

Step 3/4: ... Finish! Use 175.98 s

The generated GloVe embedding file is saved under output/Glove.
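Both `ct.co_occurrence_matrix` and the GloVe pipeline rest on window-based co-occurrence counts. The sketch below shows that counting step with the standard library only. It is not cntext's implementation: the function name `cooccurrence` is hypothetical, tokens are split on whitespace, and counts are returned as a dictionary keyed by word pairs rather than as a matrix.

```python
from collections import defaultdict


def cooccurrence(docs, window_size=2):
    """Count symmetric co-occurrences of tokens within `window_size` positions."""
    counts = defaultdict(int)
    for doc in docs:
        tokens = doc.lower().split()
        for i, left in enumerate(tokens):
            # Pair the current token with the next `window_size` tokens.
            for right in tokens[i + 1:i + 1 + window_size]:
                counts[(left, right)] += 1
                counts[(right, left)] += 1
    return counts


counts = cooccurrence(["I go to school every day by bus",
                       "i go to theatre every night by bus"])
print(counts[("go", "to")], counts[("to", "school")])  # 2 1
```

A larger `window_size` captures looser, more topical associations, while a small window emphasizes words that appear right next to each other.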

Four similarity functions:

- cosine_sim(text1, text2): cosine similarity

- jaccard_sim(text1, text2): Jaccard similarity

- minedit_sim(text1, text2): minimum edit distance similarity

- simple_sim(text1, text2): change-based similarity

The implementation follows Cohen, Lauren, Christopher Malloy, and Quoc Nguyen. Lazy prices. No. w25084. National Bureau of Economic Research, 2018.

import cntext as ct

text1 = '编程真好玩编程真好玩'

text2 = '游戏真好玩编程真好玩啊'

print(ct.cosine_sim(text1, text2))

print(ct.jaccard_sim(text1, text2))

print(ct.minedit_sim(text1, text2))

print(ct.simple_sim(text1, text2))

0.82

0.67

2.00

0.87
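As a reference for the first two measures, here is a minimal standard-library sketch of cosine similarity on word counts and Jaccard similarity on word sets. These are the generic definitions, not cntext's code: the token lists are a hand-made segmentation of the two example strings, so the values need not match the output above, which depends on cntext's own tokenization.

```python
import math
from collections import Counter


def cosine_sim(tokens1, tokens2):
    """Cosine of the angle between the two word-count vectors."""
    a, b = Counter(tokens1), Counter(tokens2)
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def jaccard_sim(tokens1, tokens2):
    """Size of the shared vocabulary divided by the size of the joint vocabulary."""
    s1, s2 = set(tokens1), set(tokens2)
    union = s1 | s2
    return len(s1 & s2) / len(union) if union else 0.0


# Hand-segmented tokens (an assumption, for illustration only).
t1 = ['编程', '真', '好玩', '编程', '真', '好玩']
t2 = ['游戏', '真', '好玩', '编程', '真', '好玩', '啊']
print(cosine_sim(t1, t2), jaccard_sim(t1, t2))
```

Cosine similarity is sensitive to how often each word occurs, whereas Jaccard similarity only looks at which words are present.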

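Word embeddings encode human cognitive information. Earlier embedding studies mostly extracted it by comparing the distance between an object and the two sets of antonyms that define a concept.

- How near or far several objects lie with respect to a concept. Take occupation and gender: is a given occupation closer to men and farther from women?

- How much of a concept several objects carry. Human language retains a memory of how large different animals are, for example mouse versus elephant. Do animal words keep this memory of size in the embedding space?

These two kinds of cognition can be computed with vector distance and semantic projection, respectively.

- tm.sematic_distance(words, c_words1, c_words2): vector distance

- tm.sematic_projection(words, c_words1, c_words2): vector projection

sematic_distance computes the semantic distance between words and c_words1 and between words and c_words2, and returns the difference between the two distances.

For example: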

male_concept = ['male', 'man', 'he', 'him']

female_concept = ['female', 'woman', 'she', 'her']

software_engineer_concept = ['engineer', 'programming', 'software']

d1 = distance(male_concept, software_engineer_concept)

d2 = distance(female_concept, software_engineer_concept)

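If d1-d2<0, then in the semantic space software_engineer_concept is closer to male_concept and farther from female_concept.

In other words, in this corpus people hold a stereotype (bias) against women for jobs such as software engineer.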
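The d1-d2 comparison can be sketched with toy vectors. This is an assumption-laden illustration rather than cntext's implementation: `distance_gap` is a hypothetical helper, each word group is represented by the mean of its vectors, Euclidean distance is used, and the 2-dimensional embeddings are made up.

```python
import math


def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def distance_gap(words, c_words1, c_words2, emb):
    """Distance from `words` to c_words1 minus distance from `words` to c_words2."""
    centre = mean_vector([emb[w] for w in words])
    d1 = math.dist(centre, mean_vector([emb[w] for w in c_words1]))
    d2 = math.dist(centre, mean_vector([emb[w] for w in c_words2]))
    return d1 - d2


# Made-up 2-d embeddings for illustration only.
toy = {"engineer": [1.0, 0.0], "he": [2.0, 0.0], "she": [1.0, 3.0]}
print(distance_gap(["engineer"], ["he"], ["she"], toy))  # -2.0
```

A negative value means the target words sit closer to the first concept group than to the second, which is the reading applied to d1-d2 above.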

Download glove_w2v.6B.100d.txt: https://pan.baidu.com/s/1MMfQ7M0YCzL9Klp4zrlHBw (extraction code: 72l0)

import cntext as ct

#Note: this is a word2vec format model

tm = ct.Text2Mind(w2v_model_path='glove_w2v.6B.100d.txt')

engineer = ['program', 'software', 'computer']

man_words = ["man", "he", "him"]

woman_words = ["woman", "she", "her"]

# In semantic space, engineer is closer to man than to woman.

tm.sematic_distance(words=engineer,

c_words1=man_words,

c_words2=woman_words)

-0.38

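To explain semantic projection in word embedding models, I use a figure from a 2022 Nature Human Behaviour paper [@Grand2022SemanticPR]. Take animal names: what humans know about animal size is hidden in the corpus text. A size vector is built from the meanings of LARGE WORDS and SMALL WORDS, and each animal's vector is projected onto it (like the red lines in the figure), so animal sizes can be compared by computation.

A concept (cognition) vector is built from two sets of antonyms, c_words1 and c_words2. Projecting each word vector in words onto the concept vector yields the cognitive information.

The larger the score, the closer the word lies to the c_words2 side.

Grand, G., Blank, I.A., Pereira, F. and Fedorenko, E., 2022. Semantic projection recovers rich human knowledge of multiple object features from word embeddings. Nature Human Behaviour, pp.1-13.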
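The projection step can be sketched as follows. This is a simplified reading of the description above, not cntext's code: `project` is a hypothetical helper, the concept axis is taken as the mean of the c_words2 vectors minus the mean of the c_words1 vectors, each word gets its scalar projection onto that axis, and the 2-dimensional embeddings are made up.

```python
import math


def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def project(words, c_words1, c_words2, emb):
    """Scalar projection of each word onto the axis from c_words1 to c_words2."""
    m1 = mean_vector([emb[w] for w in c_words1])
    m2 = mean_vector([emb[w] for w in c_words2])
    axis = [b - a for a, b in zip(m1, m2)]
    norm = math.sqrt(sum(x * x for x in axis))
    scores = [(w, sum(x * y for x, y in zip(emb[w], axis)) / norm) for w in words]
    return sorted(scores, key=lambda pair: pair[1])


# Made-up 2-d embeddings for illustration only.
toy = {"small": [-1.0, 0.0], "large": [1.0, 0.0],
       "mouse": [-2.0, 1.0], "horse": [1.5, 0.5]}
print(project(["horse", "mouse"], ["small"], ["large"], toy))
# [('mouse', -2.0), ('horse', 1.5)]
```

Words with larger scores lie further toward the c_words2 end of the axis, consistent with the scoring rule stated above.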

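For example, human language contains concepts such as size, gender, age, politics, speed, and wealth. For each concept, two sets of antonyms determine the direction of the concept vector.

Taking size as an example, animals may differ in physical size in human cognition.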

animals = ['mouse', 'cat', 'horse', 'pig', 'whale']

small_words= ["small", "little", "tiny"]

large_words = ["large", "big", "huge"]

# On the size concept, mouse is the smallest and horse is the largest.

tm.sematic_projection(words=animals,

c_words1=small_words,

c_words2=large_words)

[('mouse', -1.68),

('cat', -0.92),

('pig', -0.46),

('whale', -0.24),

('horse', 0.4)]

Regarding size, human text implicitly encodes that mice are small and horses are large.

If you use cntext in your research or project, please cite it.

Deng, X., & Nan, P. (2022). cntext: a Python tool for text mining [Computer software]. Zenodo. https://doi.org/10.5281/zenodo.7063523

Source Code URL: https://github.com/hiDaDeng/cntext

@misc{deng2022cntext,

author = {Deng, X. and Nan, P.},

title = {cntext: a Python tool for text mining},

year = {2022},

publisher = {Zenodo},

doi = {10.5281/zenodo.7063523},

url = {https://doi.org/10.5281/zenodo.7063523},

howpublished = {[Computer software]},

note = {Source Code URL: \url{https://github.com/hiDaDeng/cntext}}

}

%0 Generic

%A Deng, X.

%A Nan, P.

%T cntext: a Python tool for text mining

%Y [Computer software]

%D 2022

%I Zenodo

%R 10.5281/zenodo.7063523

%U https://doi.org/10.5281/zenodo.7063523

%Z Source Code URL: https://github.com/hiDaDeng/cntext

%@

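If you come from an economics, management, humanities, or social science background, are new to programming, and face the daunting task of collecting and analyzing large volumes of text, take a look at the video course python实证指标构建与文本分析 (Building Empirical Indicators and Text Analysis with Python). I was a liberal arts student too and started from scratch; this course condenses five years of experience, and I believe it is easy to follow. It covers:

- Getting started with Python

- Web scraping

- Reading data

- Introduction to text analysis

- Machine learning and text analysis

- Word embeddings and the cognition of attitudes

- Applications of text analysis in economics and management research

If you are interested, take a look at python实证指标构建与文本分析.

- Bilibili: 大邓和他的python

- WeChat official account: 大邓和他的python

- Blog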

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cntext

Similar Open Source Tools

cntext

cntext is a text analysis package that provides semantic distance and semantic projection based on word embedding models. Additionally, cntext offers traditional methods such as word count statistics, readability, document similarity, sentiment analysis, etc. It includes modules for text statistics, sentiment analysis, dictionary construction, similarity calculations, and text-to-mind cognitive analysis.

BetterOCR

BetterOCR is a tool that enhances text detection by combining multiple OCR engines with LLM (Language Model). It aims to improve OCR results, especially for languages with limited training data or noisy outputs. The tool combines results from EasyOCR, Tesseract, and Pororo engines, along with LLM support from OpenAI. Users can provide custom context for better accuracy, view performance examples by language, and upcoming features include box detection, improved interface, and async support. The package is under rapid development and contributions are welcomed.

instruct-ner

Instruct NER is a solution for complex Named Entity Recognition tasks, including Nested NER, based on modern Large Language Models (LLMs). It provides tools for dataset creation, training, automatic metric calculation, inference, error analysis, and model implementation. Users can create instructions for LLM, build dictionaries with labels, and generate model input templates. The tool supports various entity types and datasets, such as RuDReC, NEREL-BIO, CoNLL-2003, and MultiCoNER II. It offers training scripts for LLMs and metric calculation functions. Instruct NER models like Llama, Mistral, T5, and RWKV are implemented, with HuggingFace models available for adaptation and merging.

lagent

Lagent is a lightweight open-source framework that allows users to efficiently build large language model(LLM)-based agents. It also provides some typical tools to augment LLM. The overview of our framework is shown below:

Gensokyo-llm

Gensokyo-llm is a tool designed for Gensokyo and Onebotv11, providing a one-click solution for large models. It supports various Onebotv11 standard frameworks, HTTP-API, and reverse WS. The tool is lightweight, with built-in SQLite for context maintenance and proxy support. It allows easy integration with the Gensokyo framework by configuring reverse HTTP and forward HTTP addresses. Users can set system settings, role cards, and context length. Additionally, it offers an openai original flavor API with automatic context. The tool can be used as an API or integrated with QQ channel robots. It supports converting GPT's SSE type and ensures memory safety in concurrent SSE environments. The tool also supports multiple users simultaneously transmitting SSE bidirectionally.

api-for-open-llm

This project provides a unified backend interface for open large language models (LLMs), offering a consistent experience with OpenAI's ChatGPT API. It supports various open-source LLMs, enabling developers to seamlessly integrate them into their applications. The interface features streaming responses, text embedding capabilities, and support for LangChain, a tool for developing LLM-based applications. By modifying environment variables, developers can easily use open-source models as alternatives to ChatGPT, providing a cost-effective and customizable solution for various use cases.

opencode.nvim

Opencode.nvim is a Neovim plugin that provides a simple and efficient way to browse, search, and open files in a project. It enhances the file navigation experience by offering features like fuzzy finding, file preview, and quick access to frequently used files. With Opencode.nvim, users can easily navigate through their project files, jump to specific locations, and manage their workflow more effectively. The plugin is designed to improve productivity and streamline the development process by simplifying file handling tasks within Neovim.

gemini-ai

Gemini AI is a Ruby Gem designed to provide low-level access to Google's generative AI services through Vertex AI, Generative Language API, or AI Studio. It allows users to interact with Gemini to build abstractions on top of it. The Gem provides functionalities for tasks such as generating content, embeddings, predictions, and more. It supports streaming capabilities, server-sent events, safety settings, system instructions, JSON format responses, and tools (functions) calling. The Gem also includes error handling, development setup, publishing to RubyGems, updating the README, and references to resources for further learning.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

EAGLE

Eagle is a family of Vision-Centric High-Resolution Multimodal LLMs that enhance multimodal LLM perception using a mix of vision encoders and various input resolutions. The model features a channel-concatenation-based fusion for vision experts with different architectures and knowledge, supporting up to over 1K input resolution. It excels in resolution-sensitive tasks like optical character recognition and document understanding.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features: 1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters. 2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks. 3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced. All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

MCP-Chinese-Getting-Started-Guide

The Model Context Protocol (MCP) is an innovative open-source protocol that redefines the interaction between large language models (LLMs) and the external world. MCP provides a standardized approach for any large language model to easily connect to various data sources and tools, enabling seamless access and processing of information. MCP acts as a USB-C interface for AI applications, offering a standardized way for AI models to connect to different data sources and tools. The core functionalities of MCP include Resources, Prompts, Tools, Sampling, Roots, and Transports. This guide focuses on developing an MCP server for network search using Python and uv management. It covers initializing the project, installing dependencies, creating a server, implementing tool execution methods, and running the server. Additionally, it explains how to debug the MCP server using the Inspector tool, how to call tools from the server, and how to connect multiple MCP servers. The guide also introduces the Sampling feature, which allows pre- and post-tool execution operations, and demonstrates how to integrate MCP servers into LangChain for AI applications.

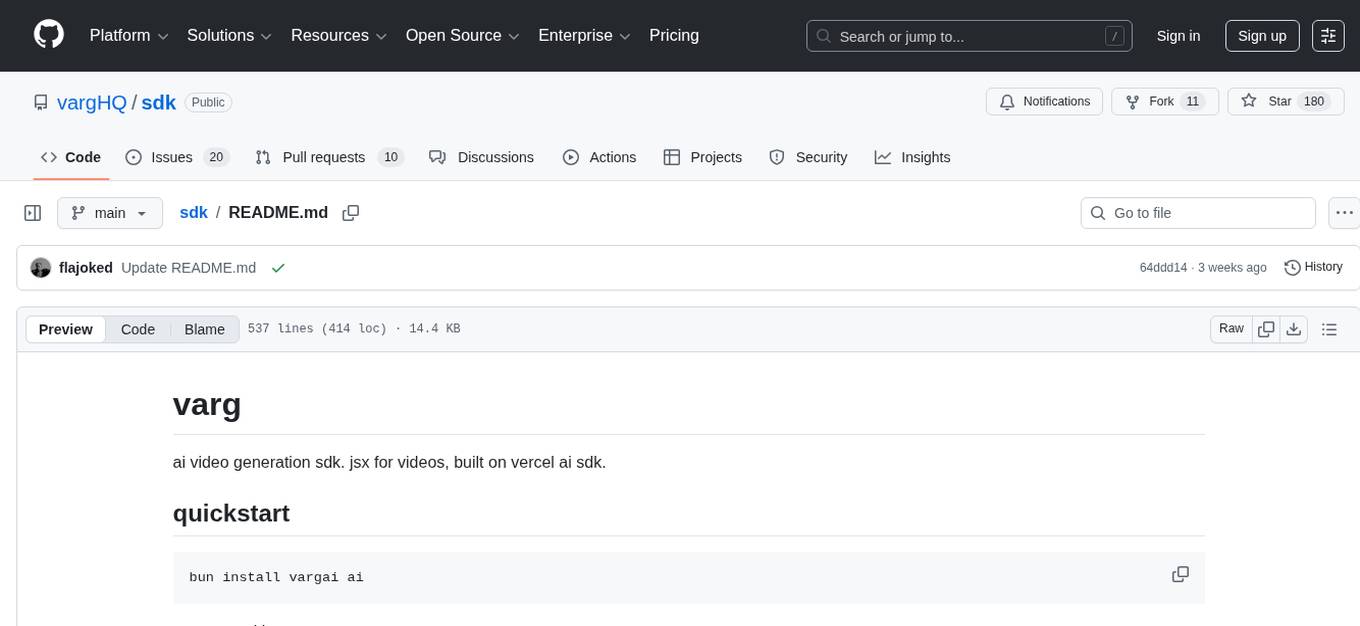

sdk

Varg is an AI video generation SDK that extends Vercel's AI SDK with capabilities for video, music, and lipsync. It allows users to generate images, videos, music, and more using familiar patterns and declarative JSX syntax. The SDK supports various models for image and video generation, speech synthesis, music generation, and background removal. Users can create reusable elements for character consistency, handle files from disk, URL, or buffer, and utilize layout helpers, transitions, and caption styles. Varg also offers a visual editor for video workflows with a code editor and node-based interface.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned to follow instructions. The model was trained on 8×A100 GPUs and generates responses in Korean. KULLM exhibits hallucination and repetition due to its decoding strategy, so users should be cautious: the model may produce inaccurate or harmful results. Benchmark performance may vary when no fixed system prompt is used.

MMMU

MMMU is a benchmark designed to evaluate multimodal models on college-level subject knowledge tasks, covering 30 subjects and 183 subfields with 11.5K questions. It focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. The evaluation of various models highlights substantial challenges and considerable room for improvement, and the benchmark is intended to stimulate the community's progress towards expert artificial general intelligence (AGI).

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, and Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.
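
To make the aggregation idea concrete, here is a minimal sketch of combining several local text files into one prompt-ready string with a rough token estimate. It is not 1filellm's code: the function names `aggregate_files` and `rough_token_count` are hypothetical, and the whitespace-based count is only an assumption standing in for a real tokenizer.

```python
from pathlib import Path


def aggregate_files(paths):
    """Concatenate text files into one string, each preceded by a header line."""
    parts = []
    for path in map(Path, paths):
        # Replace undecodable bytes rather than failing on mixed encodings.
        text = path.read_text(encoding="utf-8", errors="replace")
        parts.append(f"=== {path.name} ===\n{text.strip()}\n")
    return "\n".join(parts)


def rough_token_count(text):
    """Whitespace-based estimate (an assumption); real tokenizers will differ."""
    return len(text.split())
```

The per-file header keeps the origin of each chunk visible to the model once everything is pasted as a single prompt.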

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.
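
The planner/execution split with parallel agents can be sketched roughly as follows. This is an illustration under stated assumptions, not GPT Researcher's implementation: `plan`, `execute`, and `research` are hypothetical stand-ins, and the stubbed bodies replace the LLM calls and web searches a real agent would perform.

```python
import asyncio


async def plan(topic):
    """Hypothetical planner: turn a topic into research questions."""
    return [f"What is {topic}?", f"What are open problems in {topic}?"]


async def execute(question):
    """Hypothetical execution agent: a real one would search and summarize."""
    await asyncio.sleep(0)  # stands in for network I/O
    return f"Notes on: {question}"


async def research(topic):
    questions = await plan(topic)
    # Run one execution agent per question concurrently; gather keeps input order.
    notes = await asyncio.gather(*(execute(q) for q in questions))
    return "\n".join(notes)
```

Calling `asyncio.run(research("some topic"))` returns the combined notes. Because the execution agents wait on I/O concurrently, total latency is closer to the slowest single question than to the sum of all of them.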

ChatTTS

ChatTTS is a generative speech model optimized for dialogue scenarios, providing natural and expressive speech synthesis with fine-grained control over prosodic features. It supports multiple speakers and surpasses most open-source TTS models in terms of prosody. The model is trained with 100,000+ hours of Chinese and English audio data, and the open-source version on HuggingFace is a 40,000-hour pre-trained model without SFT. The roadmap includes open-sourcing additional features like VQ encoder, multi-emotion control, and streaming audio generation. The tool is intended for academic and research use only, with precautions taken to limit potential misuse.

HebTTS

HebTTS is a language-modeling approach to diacritic-free Hebrew text-to-speech (TTS). It addresses the challenge of accurately mapping text to speech in Hebrew by proposing a language model that operates on discrete speech representations and is conditioned on a word-piece tokenizer. The system is optimized using weakly supervised recordings and outperforms diacritic-based Hebrew TTS systems in terms of content preservation and naturalness of generated speech.

do-research-in-AI

This repository is a collection of research lectures and experience-sharing posts from frontline researchers in the field of AI. It aims to help individuals improve their research skills and knowledge through talks and experiences shared by experts. The content covers topics such as evaluating research papers, choosing research directions, research methodologies, and tips for writing high-quality scientific papers. The repository also includes discussions of academic career paths, research ethics, and the emotional aspects of research work.