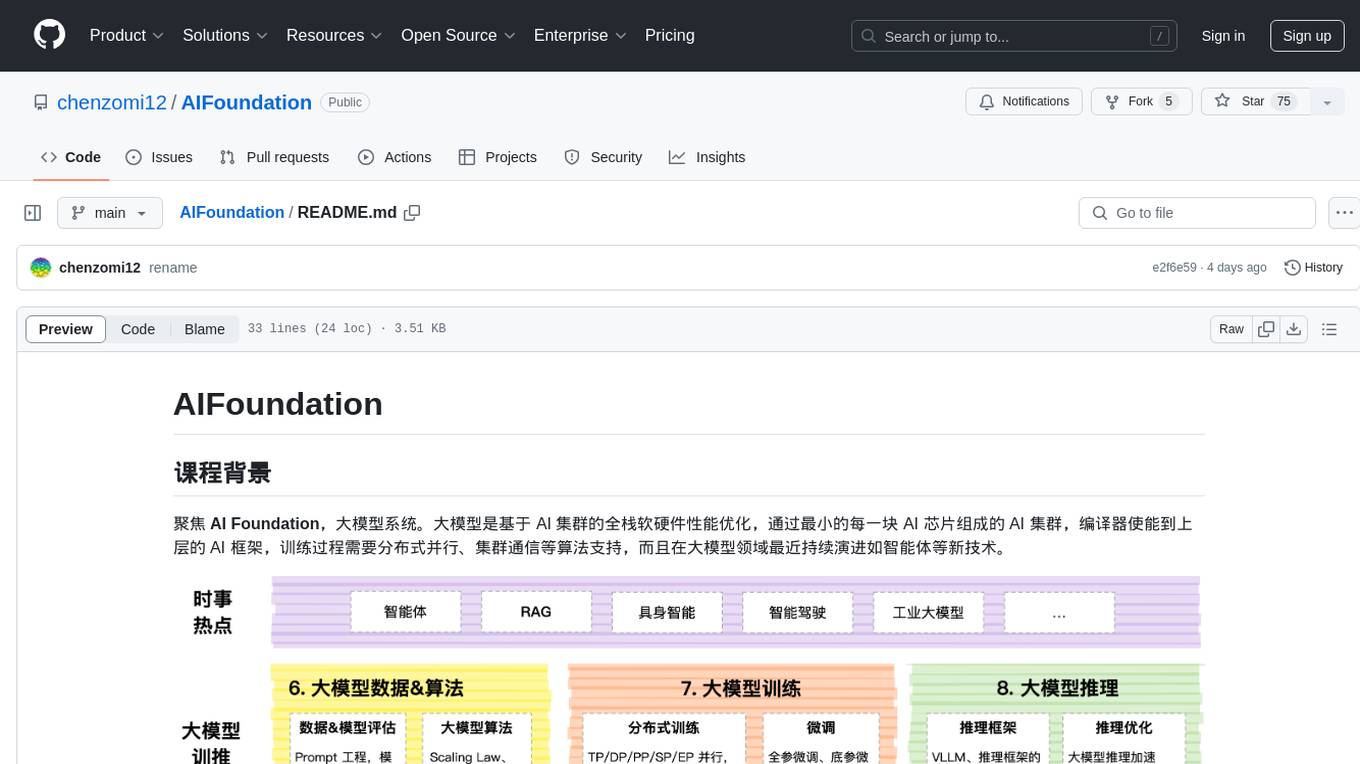

AIFoundation

AIFoundation 主要是指AI系统遇到大模型,从底层到上层如何系统级地支持大模型训练和推理,全栈的核心技术。

Stars: 188

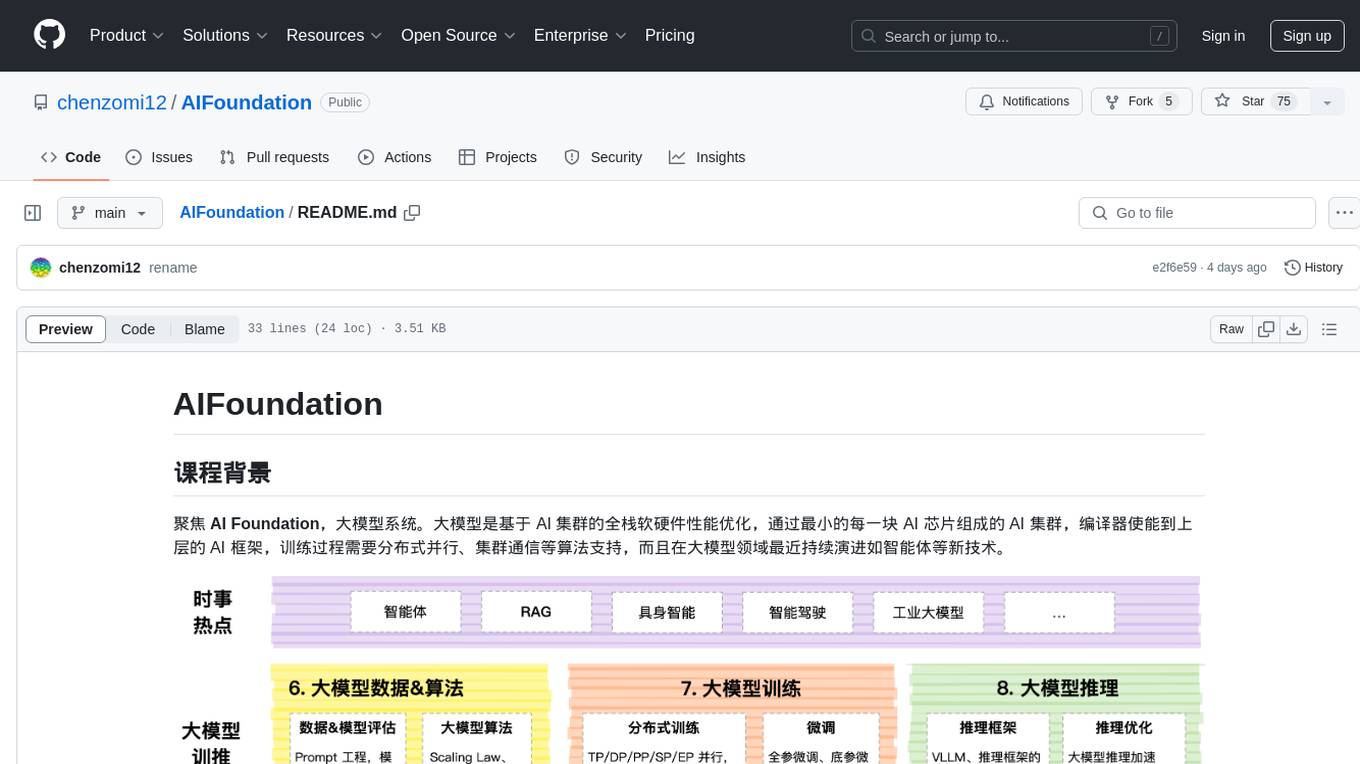

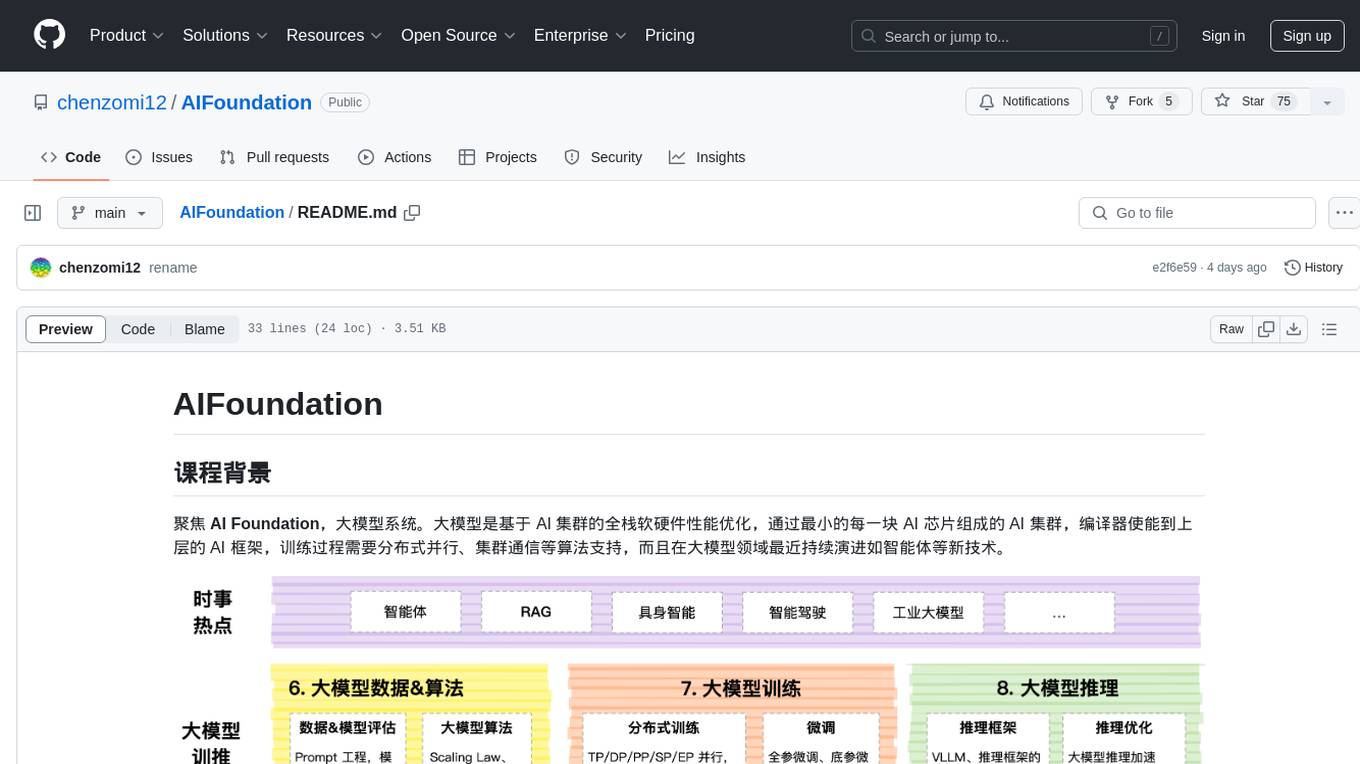

AIFoundation focuses on AI Foundation, large model systems. Large models optimize the performance of full-stack hardware and software based on AI clusters. The training process requires distributed parallelism, cluster communication algorithms, and continuous evolution in the field of large models such as intelligent agents. The course covers modules like AI chip principles, communication & storage, AI clusters, computing architecture, communication architecture, large model algorithms, training, inference, and analysis of hot technologies in the large model field.

README:

聚焦 AI Foundation,大模型系统。大模型是基于 AI 集群的全栈软硬件性能优化,通过最小的每一块 AI 芯片组成的 AI 集群,编译器使能到上层的 AI 框架,训练过程需要分布式并行、集群通信等算法支持,而且在大模型领域最近持续演进如智能体等新技术。

课程主要包括以下模块,内容陆续更新中,欢迎贡献:

| 序列 | 教程内容 | 简介 | 地址 |

|---|---|---|---|

| 01 | AI 芯片原理(完结) | AI 芯片主要介绍 AI 的硬件体系架构,包括从芯片基础到 AI 芯片的原理与架构,芯片设计需要考虑 AI 算法与编程体系,以应对 AI 快速的发展。 | [Slides] |

| 02 | 通信&存储 | 大模型训练和推理的过程中都严重依赖于网络通信,因此会重点介绍通信原理、网络拓扑、组网方案、高速互联通信的内容。存储则是会从节点内的存储到存储 POD 进行介绍。 | [Slides] |

| 03 | AI 集群 | 大模型虽然已经慢慢在端测设备开始落地,但是总体对云端的依赖仍然很重很重,AI 集群会介绍集群运维管理、集群性能、训练推理一体化拓扑流程等内容。 | [Slides] |

| 04 | 计算架构 | [Slides] | |

| 05 | 通信架构(完结) | 通信架构主要是指各种类型的 XCCL 集合通信库,大模型在推理的PD 分离和分布式训练,都对集合通信库有很强烈的诉求,网络模型的参数需要相互传递,因此 XCCL 极大帮助大模型更好地训练和推理。 | [Slides] |

| 06 | 大模型算法 | Transformer起源于NLP领域,近期统治了 CV/NLP/多模态的大模型,我们将深入地探讨 Scaling Law 背后的原理。在大模型算法背后数据和算法的评估也是核心的内容之一,如何实现 Prompt 和通过 Prompt 提升模型效果。 | [Slides] |

| 07 | 大模型训练 | [Slides] | |

| 08 | 大模型推理 | [Slides] | |

| 09 | 热点技术剖析 | 当前大模型技术已进入快速迭代期。这一时期的显著特点就是技术的更新换代速度极快,新算法、新模型层出不穷。因此本节内容将会紧跟大模型的时事内容,进行深度技术分析。 | [Slides] |

本课程主要为本科生高年级、硕博研究生、AI 系统从业者设计,帮助大家:

-

完整了解 AI 的计算机系统架构,并通过实际问题和案例,来了解 AI 完整生命周期下的系统设计。

-

介绍前沿系统架构和 AI 相结合的研究工作,了解主流框架、平台和工具来了解 AI 系统。

| 编号 | 名称 | 具体内容 |

|---|---|---|

| 1 | AI 计算体系 | 神经网络等 AI 技术的计算模式和计算体系架构 |

| 2 | AI 芯片基础 | CPU、GPU、NPU 等芯片体系架构基础原理 |

| 3 | 图形处理器 GPU | GPU 的基本原理,英伟达 GPU 的架构发展 |

| 4 | 英伟达 GPU 详解 | 英伟达 GPU 的 Tensor Core、NVLink 深度剖析 |

| 5 | 国外 AI 处理器 | 谷歌、特斯拉等专用 AI 处理器核心原理 |

| 6 | 国内 AI 处理器 | 寒武纪、燧原科技等专用 AI 处理器核心原理 |

| 7 | AI 芯片黄金 10 年 | 对 AI 芯片的编程模式和发展进行总结 |

| 编号 | 名称 | 具体内容 |

|---|---|---|

| 1 | 集合通信原理 | 通信域、通信算法、集合通信原语 |

| 2 | 集合通信库 | 深入地剖析 NCCL/HCCL 实现算法、对外 API |

| 编号 | 名称 | 具体内容 |

|---|---|---|

| 1 | 时事热点 | OpenAI o1、WWDC 大会发布 |

| 2 | AIAgent 智能体 | AI Agent 智能体的原理、架构 |

| 3 | 自动驾驶 | 端到端自动驾驶和萝卜快跑 |

| 4 | 具身智能 | 具身智能的原理、架构和产业思考 |

| 5 | 生成推荐 | 推荐领域的革命发展历程 |

| 6 | 隐私计算 | 发展过程与 Apple 引入隐私计算 |

这个仓已经到达疯狂的 10G 啦(ZOMI 把所有制作过程、高清图片都原封不动提供),如果你要 git clone 会非常的慢,因此建议优先到 Releases · chenzomi12/AIFoundation 来下载你需要的内容

非常希望您也参与到这个开源课程中,B 站给 ZOMI 留言哦!

欢迎大家使用的过程中发现 bug 或者勘误直接提交代码 PR 到开源社区哦!

请大家尊重开源和 ZOMI 的努力,引用 PPT 的内容请规范转载标明出处哦!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AIFoundation

Similar Open Source Tools

AIFoundation

AIFoundation focuses on AI Foundation, large model systems. Large models optimize the performance of full-stack hardware and software based on AI clusters. The training process requires distributed parallelism, cluster communication algorithms, and continuous evolution in the field of large models such as intelligent agents. The course covers modules like AI chip principles, communication & storage, AI clusters, computing architecture, communication architecture, large model algorithms, training, inference, and analysis of hot technologies in the large model field.

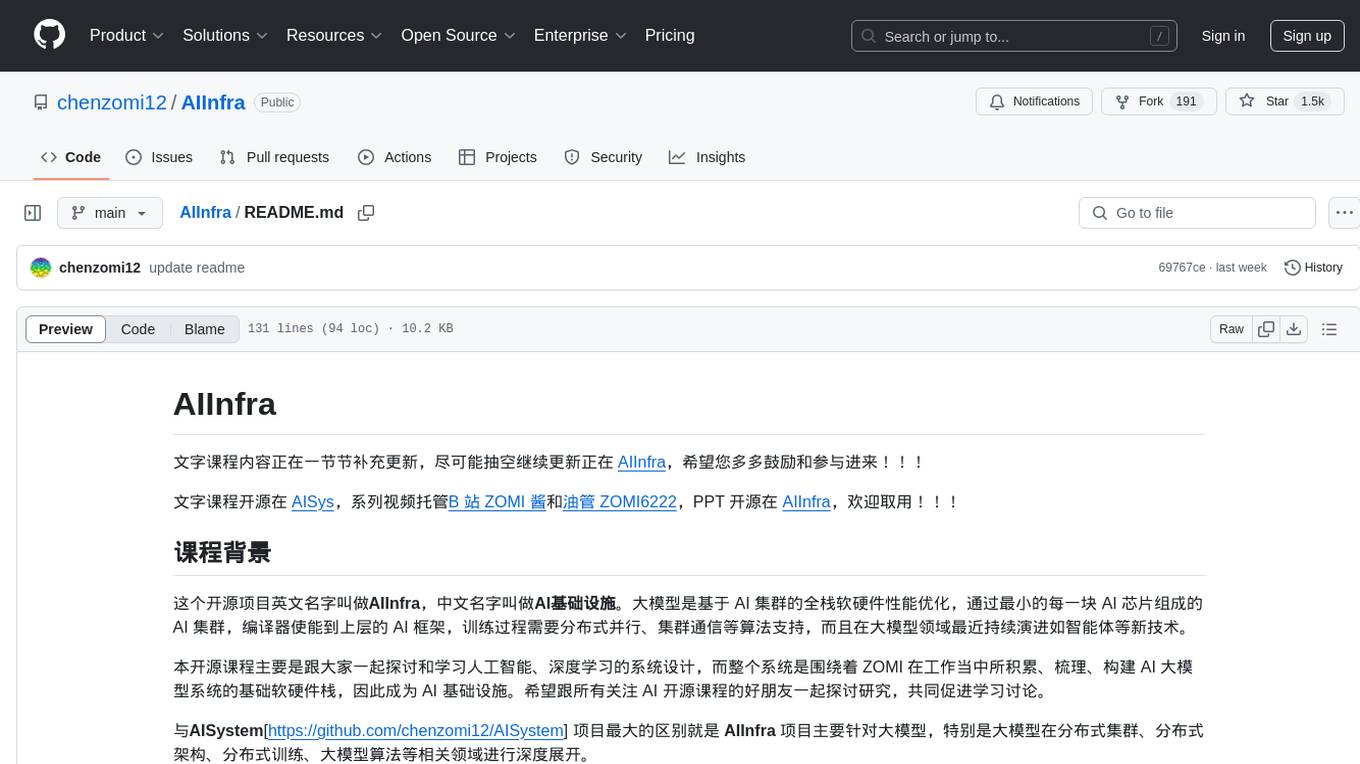

AIInfra

AIInfra is an open-source project focused on AI infrastructure, specifically targeting large models in distributed clusters, distributed architecture, distributed training, and algorithms related to large models. The project aims to explore and study system design in artificial intelligence and deep learning, with a focus on the hardware and software stack for building AI large model systems. It provides a comprehensive curriculum covering topics such as AI chip principles, communication and storage, AI clusters, large model training, and inference, as well as algorithms for large models. The course is designed for undergraduate and graduate students, as well as professionals working with AI large model systems, to gain a deep understanding of AI computer system architecture and design.

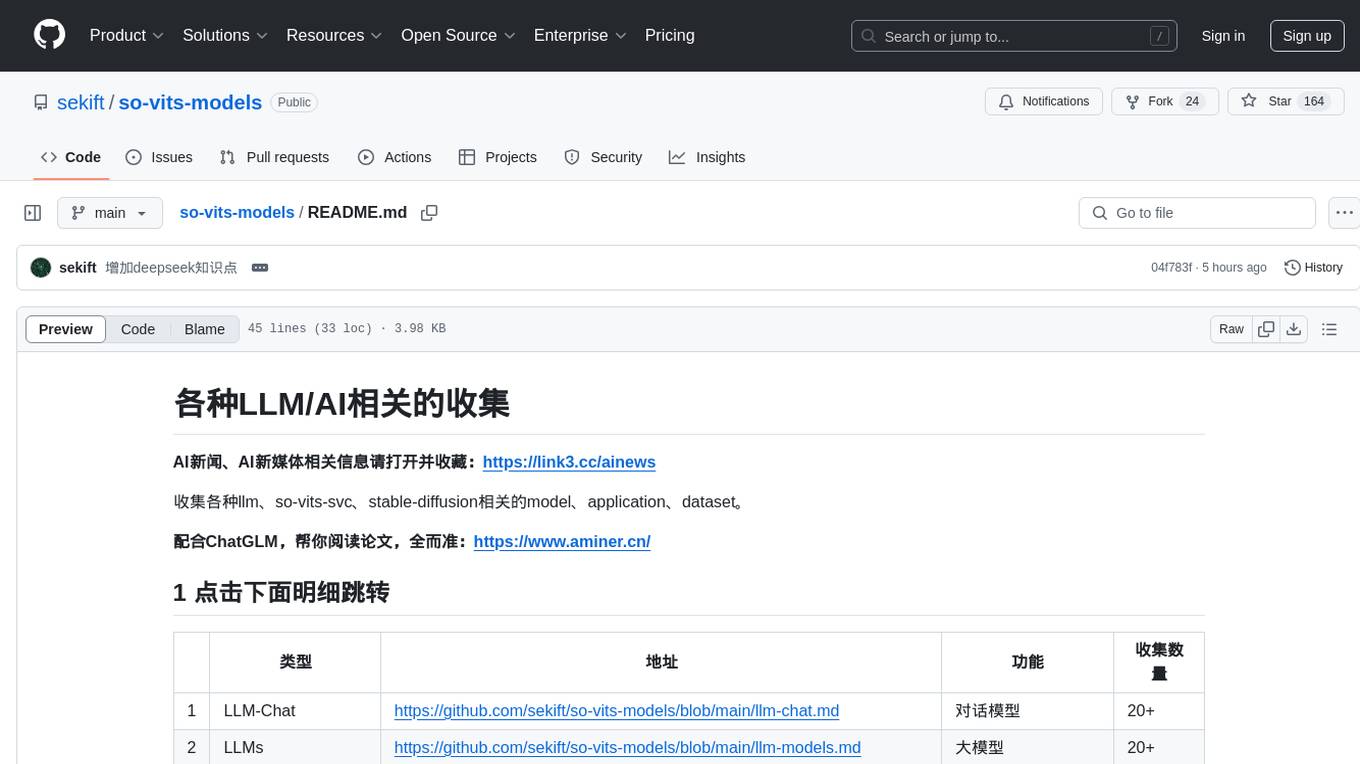

so-vits-models

This repository collects various LLM, AI-related models, applications, and datasets, including LLM-Chat for dialogue models, LLMs for large models, so-vits-svc for sound-related models, stable-diffusion for image-related models, and virtual-digital-person for generating videos. It also provides resources for deep learning courses and overviews, AI competitions, and specific AI tasks such as text, image, voice, and video processing.

kumo-search

Kumo search is an end-to-end search engine framework that supports full-text search, inverted index, forward index, sorting, caching, hierarchical indexing, intervention system, feature collection, offline computation, storage system, and more. It runs on the EA (Elastic automic infrastructure architecture) platform, enabling engineering automation, service governance, real-time data, service degradation, and disaster recovery across multiple data centers and clusters. The framework aims to provide a ready-to-use search engine framework to help users quickly build their own search engines. Users can write business logic in Python using the AOT compiler in the project, which generates C++ code and binary dynamic libraries for rapid iteration of the search engine.

step_into_llm

The 'step_into_llm' repository is dedicated to the 昇思MindSpore technology open class, which focuses on exploring cutting-edge technologies, combining theory with practical applications, expert interpretations, open sharing, and empowering competitions. The repository contains course materials, including slides and code, for the ongoing second phase of the course. It covers various topics related to large language models (LLMs) such as Transformer, BERT, GPT, GPT2, and more. The course aims to guide developers interested in LLMs from theory to practical implementation, with a special emphasis on the development and application of large models.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

PaddleScience

PaddleScience is a scientific computing suite developed based on the deep learning framework PaddlePaddle. It utilizes the learning ability of deep neural networks and the automatic (higher-order) differentiation mechanism of PaddlePaddle to solve problems in physics, chemistry, meteorology, and other fields. It supports three solving methods: physics mechanism-driven, data-driven, and mathematical fusion, and provides basic APIs and detailed documentation for users to use and further develop.

2020-12th-ironman

This repository contains tutorial content for the 12th iT Help Ironman competition, focusing on machine learning algorithms and their practical applications. The tutorials cover topics such as AI model integration, API server deployment techniques, and hands-on programming exercises. The series is presented in video format and will be compiled into an e-book in the future. Suitable for those familiar with Python, interested in implementing AI prediction models, data analysis, and backend integration and deployment of AI models.

aidea-server

AIdea Server is an open-source Golang-based server that integrates mainstream large language models and drawing models. It supports various functionalities including OpenAI's GPT-3.5 and GPT-4, Anthropic's Claude instant and Claude 2.1, Google's Gemini Pro, as well as Chinese models like Tongyi Qianwen, Wenxin Yiyuan, and more. It also supports open-source large models like Yi 34B, Llama2, and AquilaChat 7B. Additionally, it provides features for text-to-image, super-resolution, coloring black and white images, generating art fonts and QR codes, among others.

ailia-models

The collection of pre-trained, state-of-the-art AI models. ailia SDK is a self-contained, cross-platform, high-speed inference SDK for AI. The ailia SDK provides a consistent C++ API across Windows, Mac, Linux, iOS, Android, Jetson, and Raspberry Pi platforms. It also supports Unity (C#), Python, Rust, Flutter(Dart) and JNI for efficient AI implementation. The ailia SDK makes extensive use of the GPU through Vulkan and Metal to enable accelerated computing. # Supported models 323 models as of April 8th, 2024

KeepChatGPT

KeepChatGPT is a plugin designed to enhance the data security capabilities and efficiency of ChatGPT. It aims to make your chat experience incredibly smooth, eliminating dozens or even hundreds of unnecessary steps, and permanently getting rid of various errors and warnings. It offers innovative features such as automatic refresh, activity maintenance, data security, audit cancellation, conversation cloning, endless conversations, page purification, large screen display, full screen display, tracking interception, rapid changes, and detailed insights. The plugin ensures that your AI experience is secure, smooth, efficient, concise, and seamless.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables training on a single Nvidia RTX-2080TI and RTX-3090 for multi-round chatbot training. It utilizes bitsandbytes for quantization and is integrated with Huggingface's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models in the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

Github-Ranking-AI

This repository provides a list of the most starred and forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and chatGPT. The LLM section includes repositories related to large language models, while the chatGPT section includes repositories related to the chatGPT chatbot.

LLM-for-Healthcare

The repository 'LLM-for-Healthcare' provides a comprehensive survey of large language models (LLMs) for healthcare, covering data, technology, applications, and accountability and ethics. It includes information on various LLM models, training data, evaluation methods, and computation costs. The repository also discusses tasks such as NER, text classification, question answering, dialogue systems, and generation of medical reports from images in the healthcare domain.

Awesome-AISourceHub

Awesome-AISourceHub is a repository that collects high-quality information sources in the field of AI technology. It serves as a synchronized source of information to avoid information gaps and information silos. The repository aims to provide valuable resources for individuals such as AI book authors, enterprise decision-makers, and tool developers who frequently use Twitter to share insights and updates related to AI advancements. The platform emphasizes the importance of accessing information closer to the source for better quality content. Users can contribute their own high-quality information sources to the repository by following specific steps outlined in the contribution guidelines. The repository covers various platforms such as Twitter, public accounts, knowledge planets, podcasts, blogs, websites, YouTube channels, and more, offering a comprehensive collection of AI-related resources for individuals interested in staying updated with the latest trends and developments in the AI field.

For similar tasks

Interview-for-Algorithm-Engineer

This repository provides a collection of interview questions and answers for algorithm engineers. The questions are organized by topic, and each question includes a detailed explanation of the answer. This repository is a valuable resource for anyone preparing for an algorithm engineering interview.

PythonPark

PythonPark is a paradise for learning Python, providing babysitter-level tutorials on AI labs, treasure videos, data structures, study guides, machine learning practicals, deep learning practicals, Python basics, web scraping, big company interview experiences, programming life, and resource sharing. Original articles are published at least twice a week, with the latest articles being first released on WeChat and videos on Bilibili. Join the WeChat group for technical discussions or to provide feedback. Continuously improving and outputting content!

ai-explorables

The ai-explorables repository contains code for AI Explorables, a tool that allows users to make changes in the source code and view the changes locally. It is not an officially supported Google product.

AIFoundation

AIFoundation focuses on AI Foundation, large model systems. Large models optimize the performance of full-stack hardware and software based on AI clusters. The training process requires distributed parallelism, cluster communication algorithms, and continuous evolution in the field of large models such as intelligent agents. The course covers modules like AI chip principles, communication & storage, AI clusters, computing architecture, communication architecture, large model algorithms, training, inference, and analysis of hot technologies in the large model field.

ByteMLPerf

ByteMLPerf is an AI Accelerator Benchmark that focuses on evaluating AI Accelerators from a practical production perspective, including the ease of use and versatility of software and hardware. Byte MLPerf has the following characteristics: - Models and runtime environments are more closely aligned with practical business use cases. - For ASIC hardware evaluation, besides evaluate performance and accuracy, it also measure metrics like compiler usability and coverage. - Performance and accuracy results obtained from testing on the open Model Zoo serve as reference metrics for evaluating ASIC hardware integration.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

ai-hands-on

A complete, hands-on guide to becoming an AI Engineer. This repository is designed to help you learn AI from first principles, build real neural networks, and understand modern LLM systems end-to-end. Progress through math, PyTorch, deep learning, transformers, RAG, and OCR with clean, intuitive Jupyter notebooks guiding you at every step. Suitable for beginners and engineers leveling up, providing clarity, structure, and intuition to build real AI systems.

compute-blade

Compute Blade is a feature-rich carrier board designed for the Raspberry Pi Compute Module 4 and compatible alternatives, aimed at simplifying and automating the development and management of on-premise cluster environments. The solution streamlines complex processes, making cluster setup and management efficient and manageable for users, from homelabs to advanced AI clusters at scale.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.