compute-blade

Feature rich enterprise-level carrier board for the Raspberry Pi Compute Module 4. From Homelabs to advanced AI clusters at scale.

Stars: 61

Compute Blade is a feature-rich carrier board designed for the Raspberry Pi Compute Module 4 and compatible alternatives, aimed at simplifying and automating the development and management of on-premise cluster environments. The solution streamlines complex processes, making cluster setup and management efficient and manageable for users, from homelabs to advanced AI clusters at scale.

README:

A On-Premise Cluster Environment

Feature rich, enterprise-level, carrier board for the Raspberry Pi Compute Module 4 (+ compatible alternatives). From Homelabs to advanced AI clusters at scale: Simplifying and automating the development and management of on-premise cluster environments is at the heart of what we do. Our solutions are designed to streamline complex processes, turning what was once a daunting task into a manageable and efficient experience.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for compute-blade

Similar Open Source Tools

compute-blade

Compute Blade is a feature-rich carrier board designed for the Raspberry Pi Compute Module 4 and compatible alternatives, aimed at simplifying and automating the development and management of on-premise cluster environments. The solution streamlines complex processes, making cluster setup and management efficient and manageable for users, from homelabs to advanced AI clusters at scale.

knavigator

Knavigator is a project designed to analyze, optimize, and compare scheduling systems, with a focus on AI/ML workloads. It addresses various needs, including testing, troubleshooting, benchmarking, chaos engineering, performance analysis, and optimization. Knavigator interfaces with Kubernetes clusters to manage tasks such as manipulating with Kubernetes objects, evaluating PromQL queries, as well as executing specific operations. It can operate both outside and inside a Kubernetes cluster, leveraging the Kubernetes API for task management. To facilitate large-scale experiments without the overhead of running actual user workloads, Knavigator utilizes KWOK for creating virtual nodes in extensive clusters.

ais-k8s

AIStore on Kubernetes is a toolkit for deploying a lightweight, scalable object storage solution designed for AI applications in a Kubernetes environment. It includes documentation, Ansible playbooks, Kubernetes operator, Helm charts, and Terraform definitions for deployment on public cloud platforms. The system overview shows deployment across nodes with proxy and target pods utilizing Persistent Volumes. The AIStore Operator automates cluster management tasks. The repository focuses on production deployments but offers different deployment options. Thorough planning and configuration decisions are essential for successful multi-node deployment. The AIStore Operator simplifies tasks like starting, deploying, adjusting size, and updating AIStore resources within Kubernetes.

FedML

FedML is a unified and scalable machine learning library for running training and deployment anywhere at any scale. It is highly integrated with FEDML Nexus AI, a next-gen cloud service for LLMs & Generative AI. FEDML Nexus AI provides holistic support of three interconnected AI infrastructure layers: user-friendly MLOps, a well-managed scheduler, and high-performance ML libraries for running any AI jobs across GPU Clouds.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

edge-ai-libraries

The Edge AI Libraries project is a collection of libraries, microservices, and tools for Edge application development. It includes sample applications showcasing generic AI use cases. Key components include Anomalib, Dataset Management Framework, Deep Learning Streamer, ECAT EnableKit, EtherCAT Masterstack, FLANN, OpenVINO toolkit, Audio Analyzer, ORB Extractor, PCL, PLCopen Servo, Real-time Data Agent, RTmotion, Audio Intelligence, Deep Learning Streamer Pipeline Server, Document Ingestion, Model Registry, Multimodal Embedding Serving, Time Series Analytics, Vector Retriever, Visual-Data Preparation, VLM Inference Serving, Intel Geti, Intel SceneScape, Visual Pipeline and Platform Evaluation Tool, Chat Question and Answer, Document Summarization, PLCopen Benchmark, PLCopen Databus, Video Search and Summarization, Isolation Forest Classifier, Random Forest Microservices. Visit sub-directories for instructions and guides.

cascadeflow

cascadeflow is an intelligent AI model cascading library that dynamically selects the optimal model for each query or tool call through speculative execution. It helps reduce API costs by 40-85% through intelligent model cascading and speculative execution with automatic per-query cost tracking. The tool is based on the research that shows 40-70% of queries don't require slow, expensive flagship models, and domain-specific smaller models often outperform large general-purpose models on specialized tasks. cascadeflow automatically escalates to flagship models for advanced reasoning when needed. It supports multiple providers, low latency, cost control, and transparency, and can be used for edge and local-hosted AI deployment.

yao

YAO is an open-source application engine written in Golang, suitable for developing business systems, website/APP API, admin panel, and self-built low-code platforms. It adopts a flow-based programming model to implement functions by writing YAO DSL or using JavaScript. Yao allows developers to create web services by processes, creating a database model, writing API services, and describing dashboard interfaces just by JSON for web & hardware, and 10x productivity. It is based on the flow-based programming idea, developed in Go language, and supports multiple ways to expand the data stream processor. Yao has a built-in data management system, making it suitable for quickly making various management backgrounds, CRM, ERP, and other internal enterprise systems. It is highly versatile, efficient, and performs better than PHP, JAVA, and other languages.

AimRT

AimRT is a basic runtime framework for modern robotics, developed in modern C++ with lightweight and easy deployment. It integrates research and development for robot applications in various deployment scenarios, providing debugging tools and observability support. AimRT offers a plug-in development interface compatible with ROS2, HTTP, Grpc, and other ecosystems for progressive system upgrades.

awesome-alt-clouds

Awesome Alt Clouds is a curated list of non-hyperscale cloud providers offering specialized infrastructure and services catering to specific workloads, compliance requirements, and developer needs. The list includes various categories such as Infrastructure Clouds, Sovereign Clouds, Unikernels & WebAssembly, Data Clouds, Workflow and Operations Clouds, Network, Connectivity and Security Clouds, Vibe Clouds, Developer Happiness Clouds, Authorization, Identity, Fraud and Abuse Clouds, Monetization, Finance and Legal Clouds, Customer, Marketing and eCommerce Clouds, IoT, Communications, and Media Clouds, Blockchain Clouds, Source Code Control, Cloud Adjacent, and Future Clouds.

cosmos-rl

Cosmos-RL is a flexible and scalable Reinforcement Learning framework specialized for Physical AI applications. It provides a toolchain for large scale RL training workload with features like parallelism, asynchronous processing, low-precision training support, and a single-controller architecture. The system architecture includes Tensor Parallelism, Sequence Parallelism, Context Parallelism, FSDP Parallelism, and Pipeline Parallelism. It also utilizes a messaging system for coordinating policy and rollout replicas, along with dynamic NCCL Process Groups for fault-tolerant and elastic large-scale RL training.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

moon

Moon is a monitoring and alerting platform suitable for multiple domains, supporting various application scenarios such as cloud-native, Internet of Things (IoT), and Artificial Intelligence (AI). It simplifies operational work of cloud-native monitoring, boasts strong IoT and AI support capabilities, and meets diverse monitoring needs across industries. Capable of real-time data monitoring, intelligent alerts, and fault response for various fields.

contextgem

Contextgem is a Ruby gem that provides a simple way to manage context-specific configurations in your Ruby applications. It allows you to define different configurations based on the context in which your application is running, such as development, testing, or production. This helps you keep your configuration settings organized and easily accessible, making it easier to maintain and update your application. With Contextgem, you can easily switch between different configurations without having to modify your code, making it a valuable tool for managing complex applications with multiple environments.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

For similar tasks

connery-sdk

Connery SDK is an open-source NPM package that provides an SDK and CLI for developing plugins and actions. The SDK offers a JavaScript API to define plugins and actions, which are then packaged into a plugin server with a standardized REST API. This enables automation in the development process and simplifies handling authorization, input validation, and logging. Users can focus on the logic of their actions while the standardized API allows various clients to interact with actions uniformly. Actions can communicate with external APIs, databases, or services, making it versatile for creating AI plugins and actions.

compute-blade

Compute Blade is a feature-rich carrier board designed for the Raspberry Pi Compute Module 4 and compatible alternatives, aimed at simplifying and automating the development and management of on-premise cluster environments. The solution streamlines complex processes, making cluster setup and management efficient and manageable for users, from homelabs to advanced AI clusters at scale.

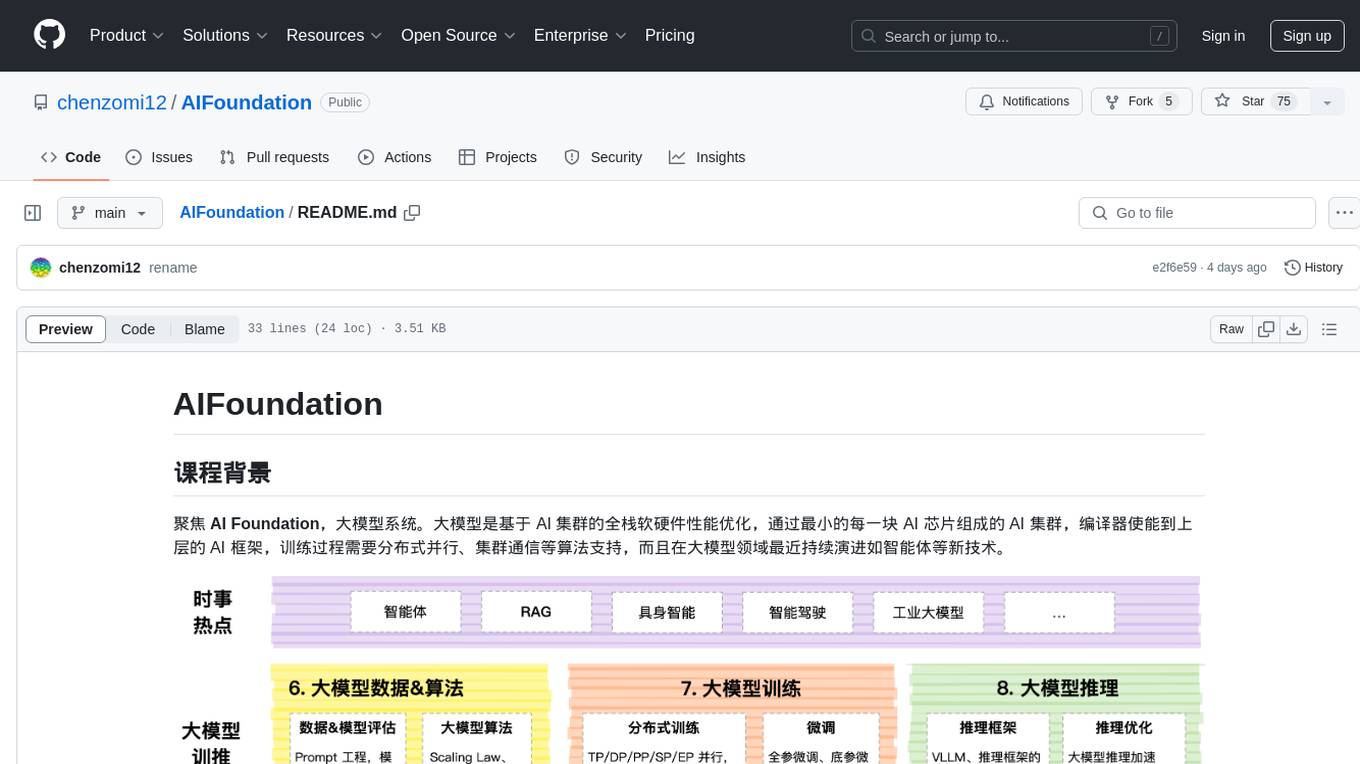

AIFoundation

AIFoundation focuses on AI Foundation, large model systems. Large models optimize the performance of full-stack hardware and software based on AI clusters. The training process requires distributed parallelism, cluster communication algorithms, and continuous evolution in the field of large models such as intelligent agents. The course covers modules like AI chip principles, communication & storage, AI clusters, computing architecture, communication architecture, large model algorithms, training, inference, and analysis of hot technologies in the large model field.

bigtop-manager

Apache Bigtop Manager is a modern, AI-driven web application designed to simplify the complexity of bigdata cluster management. It provides an easy deployment solution not only for Apache Bigtop components, but also other community version bigdata components. The platform aims to streamline the management of bigdata clusters by leveraging AI technology and user-friendly interfaces.

ksail

KSail is a tool that bundles common Kubernetes tooling into a single binary, providing a unified workflow for creating clusters, deploying workloads, and operating cloud-native stacks across different distributions and providers. It eliminates the need for multiple CLI tools and bespoke scripts, offering features like one binary for provisioning and deployment, support for various cluster configurations, mirror registries, GitOps integration, customizable stack selection, built-in SOPS for secrets management, AI assistant for interactive chat, and a VSCode extension for cluster management.

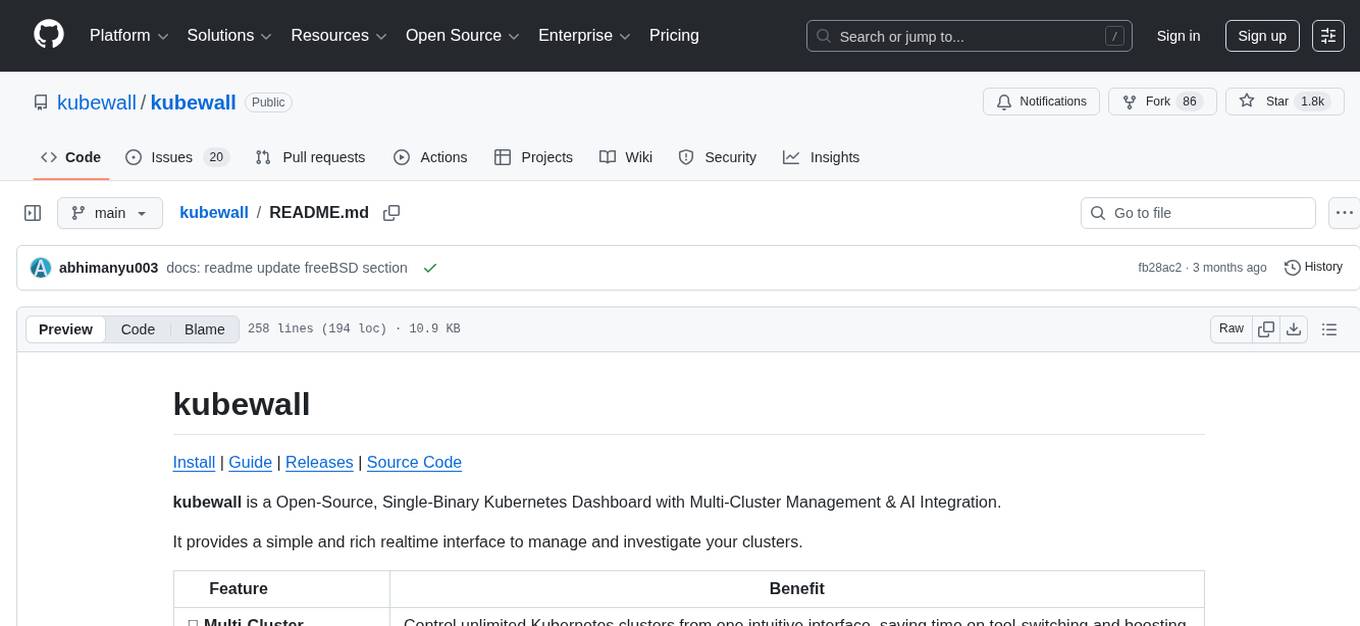

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

ai-controller-jobs

Aimeos job controllers is a repository containing controllers for scheduled tasks in e-commerce projects. It provides a set of tools to manage and execute various jobs related to e-commerce operations. The controllers are designed to streamline the process of handling scheduled tasks within e-commerce platforms, ensuring efficient and reliable task execution.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.