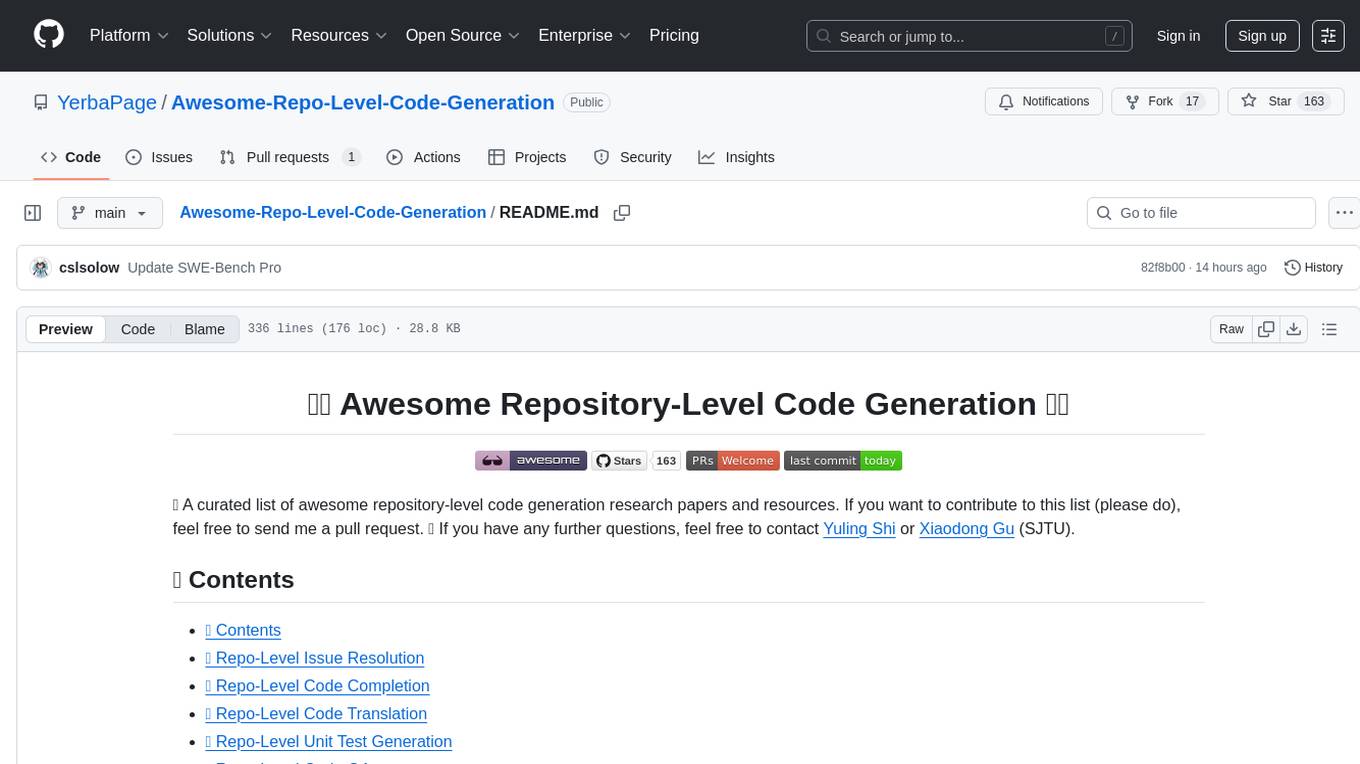

Awesome-Repo-Level-Code-Generation

Must-read papers on Repository-level Code Generation & Issue Resolution 🔥

Stars: 167

This repository is a curated list of research papers and resources on repository-level code generation. It covers issue resolution, code completion, code translation, unit test generation, code QA, issue task synthesis, and datasets and benchmarks.

README:

🌟 A curated list of awesome repository-level code generation research papers and resources. If you want to contribute to this list (please do), feel free to send me a pull request. 🚀 If you have any further questions, feel free to contact Yuling Shi or Xiaodong Gu (SJTU).

📚 Contents

- 💥 Repo-Level Issue Resolution

- 🤖 Repo-Level Code Completion

- 🔄 Repo-Level Code Translation

- 🧪 Repo-Level Unit Test Generation

- 🔍 Repo-Level Code QA

- 👩‍💻 Repo-Level Issue Task Synthesis

- 📊 Datasets and Benchmarks

💥 Repo-Level Issue Resolution

- SWE-Exp: Experience-Driven Software Issue Resolution [2025-07-arXiv] [📄 paper] [🔗 repo]

- SWE-Debate: Competitive Multi-Agent Debate for Software Issue Resolution [2025-07-arXiv] [📄 paper] [🔗 repo]

- SWE-Effi: Re-Evaluating Software AI Agent System Effectiveness Under Resource Constraints [2025-09-arXiv] [📄 paper]

- Diffusion is a code repair operator and generator [2025-08-arXiv] [📄 paper]

- The SWE-Bench Illusion: When State-of-the-Art LLMs Remember Instead of Reason [2025-06-arXiv] [📄 paper]

- Agent-RLVR: Training Software Engineering Agents via Guidance and Environment Rewards [2025-06-arXiv] [📄 paper]

- EXPEREPAIR: Dual-Memory Enhanced LLM-based Repository-Level Program Repair [2025-06-arXiv] [📄 paper]

- Coding Agents with Multimodal Browsing are Generalist Problem Solvers [2025-06-arXiv] [📄 paper] [🔗 repo]

- CoRet: Improved Retriever for Code Editing [2025-05-arXiv] [📄 paper]

- Darwin Godel Machine: Open-Ended Evolution of Self-Improving Agents [2025-05-arXiv] [📄 paper] [🔗 repo]

- SWE-Dev: Evaluating and Training Autonomous Feature-Driven Software Development [2025-05-arXiv] [📄 paper] [🔗 repo]

- Putting It All into Context: Simplifying Agents with LCLMs [2025-05-arXiv] [📄 paper]

- SkyRL-v0: Train Real-World Long-Horizon Agents via Reinforcement Learning [2025-05-arXiv] [📄 blog] [🔗 repo]

- AEGIS: An Agent-based Framework for General Bug Reproduction from Issue Descriptions [2025-FSE] [📄 paper]

- Thinking Longer, Not Larger: Enhancing Software Engineering Agents via Scaling Test-Time Compute [2025-03-arXiv] [📄 paper] [🔗 repo]

- Enhancing Repository-Level Software Repair via Repository-Aware Knowledge Graphs [2025-03-arXiv] [📄 paper]

- CoSIL: Software Issue Localization via LLM-Driven Code Repository Graph Searching [2025-03-arXiv] [📄 paper]

- SEAlign: Alignment Training for Software Engineering Agent [2025-03-arXiv] [📄 paper]

- DARS: Dynamic Action Re-Sampling to Enhance Coding Agent Performance by Adaptive Tree Traversal [2025-03-arXiv] [📄 paper] [🔗 repo]

- LocAgent: Graph-Guided LLM Agents for Code Localization [2025-03-arXiv] [📄 paper] [🔗 repo]

- SoRFT: Issue Resolving with Subtask-oriented Reinforced Fine-Tuning [2025-02-arXiv] [📄 paper]

- SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution [2025-02-arXiv] [📄 paper] [🔗 repo]

- SWE-Fixer: Training Open-Source LLMs for Effective and Efficient GitHub Issue Resolution [2025-01-arXiv] [📄 paper] [🔗 repo]

- CodeMonkeys: Scaling Test-Time Compute for Software Engineering [2025-01-arXiv] [📄 paper] [🔗 repo]

- Training Software Engineering Agents and Verifiers with SWE-Gym [2024-12-arXiv] [📄 paper] [🔗 repo]

- CODEV: Issue Resolving with Visual Data [2024-12-arXiv] [📄 paper] [🔗 repo]

- LLMs as Continuous Learners: Improving the Reproduction of Defective Code in Software Issues [2024-11-arXiv] [📄 paper]

- Globant Code Fixer Agent Whitepaper [2024-11] [📄 paper]

- MarsCode Agent: AI-native Automated Bug Fixing [2024-11-arXiv] [📄 paper]

- Lingma SWE-GPT: An Open Development-Process-Centric Language Model for Automated Software Improvement [2024-11-arXiv] [📄 paper] [🔗 repo]

- SWE-Search: Enhancing Software Agents with Monte Carlo Tree Search and Iterative Refinement [2024-10-arXiv] [📄 paper] [🔗 repo]

- AutoCodeRover: Autonomous Program Improvement [2024-09-ISSTA] [📄 paper] [🔗 repo]

- SpecRover: Code Intent Extraction via LLMs [2024-08-arXiv] [📄 paper]

- OpenHands: An Open Platform for AI Software Developers as Generalist Agents [2024-07-arXiv] [📄 paper] [🔗 repo]

- AGENTLESS: Demystifying LLM-based Software Engineering Agents [2024-07-arXiv] [📄 paper]

- RepoGraph: Enhancing AI Software Engineering with Repository-level Code Graph [2024-07-arXiv] [📄 paper] [🔗 repo]

- CodeR: Issue Resolving with Multi-Agent and Task Graphs [2024-06-arXiv] [📄 paper] [🔗 repo]

- Alibaba LingmaAgent: Improving Automated Issue Resolution via Comprehensive Repository Exploration [2024-06-arXiv] [📄 paper]

- SWE-agent: Agent-Computer Interfaces Enable Automated Software Engineering [2024-NeurIPS] [📄 paper] [🔗 repo]

🤖 Repo-Level Code Completion

- Enhancing Project-Specific Code Completion by Inferring Internal API Information [2025-07-TSE] [📄 paper] [🔗 repo]

- CodeRAG: Supportive Code Retrieval on Bigraph for Real-World Code Generation [2025-04-arXiv] [📄 paper]

- CodexGraph: Bridging Large Language Models and Code Repositories via Code Graph Databases [2025-04-NAACL] [📄 paper]

- RTLRepoCoder: Repository-Level RTL Code Completion through the Combination of Fine-Tuning and Retrieval Augmentation [2025-04-arXiv] [📄 paper]

- Hierarchical Context Pruning: Optimizing Real-World Code Completion with Repository-Level Pretrained Code LLMs [2025-04-AAAI] [📄 paper] [🔗 repo]

- What to Retrieve for Effective Retrieval-Augmented Code Generation? An Empirical Study and Beyond [2025-03-arXiv] [📄 paper]

- REPOFILTER: Adaptive Retrieval Context Trimming for Repository-Level Code Completion [2025-04-OpenReview] [📄 paper]

- Improving FIM Code Completions via Context & Curriculum Based Learning [2024-12-arXiv] [📄 paper]

- ContextModule: Improving Code Completion via Repository-level Contextual Information [2024-12-arXiv] [📄 paper]

- A^3-CodGen: A Repository-Level Code Generation Framework for Code Reuse With Local-Aware, Global-Aware, and Third-Party-Library-Aware [2024-12-TSE] [📄 paper]

- RepoGenReflex: Enhancing Repository-Level Code Completion with Verbal Reinforcement and Retrieval-Augmented Generation [2024-09-arXiv] [📄 paper]

- RAMBO: Enhancing RAG-based Repository-Level Method Body Completion [2024-09-arXiv] [📄 paper] [🔗 repo]

- RLCoder: Reinforcement Learning for Repository-Level Code Completion [2024-07-arXiv] [📄 paper] [🔗 repo]

- STALL+: Boosting LLM-based Repository-level Code Completion with Static Analysis [2024-06-arXiv] [📄 paper]

- GraphCoder: Enhancing Repository-Level Code Completion via Code Context Graph-based Retrieval and Language Model [2024-06-arXiv] [📄 paper]

- Enhancing Repository-Level Code Generation with Integrated Contextual Information [2024-06-arXiv] [📄 paper]

- R2C2-Coder: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models [2024-06-arXiv] [📄 paper]

- Natural Language to Class-level Code Generation by Iterative Tool-augmented Reasoning over Repository [2024-05-arXiv] [📄 paper] [🔗 repo]

- Iterative Refinement of Project-Level Code Context for Precise Code Generation with Compiler Feedback [2024-03-arXiv] [📄 paper] [🔗 repo]

- Repoformer: Selective Retrieval for Repository-Level Code Completion [2024-03-arXiv] [📄 paper] [🔗 repo]

- RepoHyper: Search-Expand-Refine on Semantic Graphs for Repository-Level Code Completion [2024-03-arXiv] [📄 paper] [🔗 repo]

- RepoMinCoder: Improving Repository-Level Code Generation Based on Information Loss Screening [2024-07-Internetware] [📄 paper]

- CodePlan: Repository-Level Coding using LLMs and Planning [2024-07-FSE] [📄 paper] [🔗 repo]

- DraCo: Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion [2024-05-ACL] [📄 paper] [🔗 repo]

- RepoCoder: Repository-Level Code Completion Through Iterative Retrieval and Generation [2023-10-EMNLP] [📄 paper] [🔗 repo]

- Monitor-Guided Decoding of Code LMs with Static Analysis of Repository Context [2023-09-NeurIPS] [📄 paper] [🔗 repo]

- RepoFusion: Training Code Models to Understand Your Repository [2023-06-arXiv] [📄 paper] [🔗 repo]

- Repository-Level Prompt Generation for Large Language Models of Code [2023-06-ICML] [📄 paper] [🔗 repo]

- Fully Autonomous Programming with Large Language Models [2023-06-GECCO] [📄 paper] [🔗 repo]

🔄 Repo-Level Code Translation

- A Systematic Literature Review on Neural Code Translation [2025-05-arXiv] [📄 paper]

- EVOC2RUST: A Skeleton-guided Framework for Project-Level C-to-Rust Translation [2025-08-arXiv] [📄 paper]

- Lost in Translation: A Study of Bugs Introduced by Large Language Models while Translating Code [2024-04-ICSE] [📄 paper] [🔗 repo]

- Enhancing LLM-based Code Translation in Repository Context via Triple Knowledge-Augmented [2025-03-arXiv] [📄 paper]

- C2SaferRust: Transforming C Projects into Safer Rust with NeuroSymbolic Techniques [2025-01-arXiv] [📄 paper] [🔗 repo]

- Scalable, Validated Code Translation of Entire Projects using Large Language Models [2025-06-PLDI] [📄 paper]

- Syzygy: Dual Code-Test C to (safe) Rust Translation using LLMs and Dynamic Analysis [2024-12-arXiv] [📄 paper] [🕸️ website]

🧪 Repo-Level Unit Test Generation

- Execution-Feedback Driven Test Generation from SWE Issues [2025-08-arXiv] [📄 paper]

- AssertFlip: Reproducing Bugs via Inversion of LLM-Generated Passing Tests [2025-07-arXiv] [📄 paper]

- Issue2Test: Generating Reproducing Test Cases from Issue Reports [2025-03-arXiv] [📄 paper]

- Agentic Bug Reproduction for Effective Automated Program Repair at Google [2025-02-arXiv] [📄 paper]

- LLMs as Continuous Learners: Improving the Reproduction of Defective Code in Software Issues [2024-11-arXiv] [📄 paper]

🔍 Repo-Level Code QA

- SWE-QA: Can Language Models Answer Repository-level Code Questions? [2025-09-arXiv] [📄 paper] [🔗 repo]

- Decompositional Reasoning for Graph Retrieval with Large Language Models [2025-06-arXiv] [📄 paper]

- LongCodeBench: Evaluating Coding LLMs at 1M Context Windows [2025-05-arXiv] [📄 paper]

- LocAgent: Graph-Guided LLM Agents for Code Localization [2025-03-arXiv] [📄 paper] [🔗 repo]

- CoReQA: Uncovering Potentials of Language Models in Code Repository Question Answering [2025-01-arXiv] [📄 paper]

- RepoChat Arena [2025-Blog] [🔗 repo]

- RepoChat: An LLM-Powered Chatbot for GitHub Repository Question-Answering [MSR-2025] [🔗 repo]

- CodeQueries: A Dataset of Semantic Queries over Code [2022-09-arXiv] [📄 paper]

👩‍💻 Repo-Level Issue Task Synthesis

- SWE-Mirror: Scaling Issue-Resolving Datasets by Mirroring Issues Across Repositories [2025-09-arXiv] [📄 paper]

- R2E-Gym: Procedural Environments and Hybrid Verifiers for Scaling Open-Weights SWE Agents [2025-04-arXiv] [📄 paper] [🔗 repo]

- Scaling Data for Software Engineering Agents [2025-04-arXiv] [📄 paper] [🔗 repo]

- Synthesizing Verifiable Bug-Fix Data to Enable Large Language Models in Resolving Real-World Bugs [2025-04-arXiv] [📄 paper] [🔗 repo]

- Training Software Engineering Agents and Verifiers with SWE-Gym [2024-12-arXiv] [📄 paper] [🔗 repo]

📊 Datasets and Benchmarks

- SWE-QA: Can Language Models Answer Repository-level Code Questions? [2025-09-arXiv] [📄 paper] [🔗 repo]

- SWE-bench Pro: Can AI Agents Solve Long-Horizon Software Engineering Tasks? [2025-09] [📄 paper] [🔗 repo]

- AutoCodeBench: Large Language Models are Automatic Code Benchmark Generators [2025-08-arXiv] [📄 paper] [🔗 repo]

- LiveRepoReflection: Turning the Tide: Repository-based Code Reflection [2025-07-arXiv] [📄 paper] [🔗 repo]

- SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories? [2025-07-arXiv] [📄 paper] [🔗 repo]

- ResearchCodeBench: Benchmarking LLMs on Implementing Novel Machine Learning Research Code [2025-06-arXiv] [📄 paper] [🔗 repo]

- SWE-Factory: Your Automated Factory for Issue Resolution Training Data and Evaluation Benchmarks [2025-06-arXiv] [📄 paper] [🔗 repo]

- UTBoost: Rigorous Evaluation of Coding Agents on SWE-Bench [ACL-2025] [📄 paper]

- SWE-Flow: Synthesizing Software Engineering Data in a Test-Driven Manner [ICML-2025] [📄 paper] [🔗 repo]

- AgentIssue-Bench: Can Agents Fix Agent Issues? [2025-08-arXiv] [📄 paper] [🔗 repo]

- OmniGIRL: A Multilingual and Multimodal Benchmark for GitHub Issue Resolution [2025-05-arXiv] [📄 paper] [🔗 repo]

- SWE-Smith: Scaling Data for Software Engineering Agents [2025-04-arXiv] [📄 paper] [🔗 repo]

- SWE-Synth: Synthesizing Verifiable Bug-Fix Data to Enable Large Language Models in Resolving Real-World Bugs [2025-04-arXiv] [📄 paper] [🔗 repo]

- Are "Solved Issues" in SWE-bench Really Solved Correctly? An Empirical Study [2025-03-arXiv] [📄 paper]

- Unveiling Pitfalls: Understanding Why AI-driven Code Agents Fail at GitHub Issue Resolution [2025-03-arXiv] [📄 paper]

- SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering? [2025-arXiv] [📄 paper] [🔗 repo]

- Evaluating Agent-based Program Repair at Google [2025-01-arXiv] [📄 paper]

- SWE-rebench: An Automated Pipeline for Task Collection and Decontaminated Evaluation of Software Engineering Agents [2025-05-arXiv] [📄 paper] [🕸️ website]

- SWE-bench-Live: A Live Benchmark for Repository-Level Issue Resolution [2025-05-arXiv] [📄 paper] [🔗 repo]

- FEA-Bench: A Benchmark for Evaluating Repository-Level Code Generation for Feature Implementation [2025-05-ACL] [📄 paper] [🔗 repo]

- OmniGIRL: A Multilingual and Multimodal Benchmark for GitHub Issue Resolution [2025-05-ISSTA] [📄 paper]

- SWE-PolyBench: A multi-language benchmark for repository level evaluation of coding agents [2025-04-arXiv] [📄 paper] [🔗 repo]

- Multi-SWE-bench: A Multilingual Benchmark for Issue Resolving [2025-04-arXiv] [📄 paper] [🔗 repo]

- LibEvolutionEval: A Benchmark and Study for Version-Specific Code Generation [2025-04-NAACL] [📄 paper] [🔗 Website]

- SWEE-Bench & SWA-Bench: Automated Benchmark Generation for Repository-Level Coding Tasks [2025-03-arXiv] [📄 paper]

- ProjectEval: A Benchmark for Programming Agents Automated Evaluation on Project-Level Code Generation [2025-03-arXiv] [📄 paper]

- REPOST-TRAIN: Scalable Repository-Level Coding Environment Construction with Sandbox Testing [2025-03-arXiv] [📄 paper] [🔗 repo]

- Loc-Bench: Graph-Guided LLM Agents for Code Localization [2025-03-arXiv] [📄 paper] [🔗 repo]

- SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering? [2025-02-arXiv] [📄 paper] [🔗 repo]

- SolEval: Benchmarking Large Language Models for Repository-level Solidity Code Generation [2025-02-arXiv] [📄 paper] [🔗 repo]

- HumanEvo: An Evolution-aware Benchmark for More Realistic Evaluation of Repository-level Code Generation [2025-ICSE] [📄 paper] [🔗 repo]

- RepoExec: On the Impacts of Contexts on Repository-Level Code Generation [2025-NAACL] [📄 paper] [🔗 repo]

- SWE-Gym: Training Software Engineering Agents and Verifiers with SWE-Gym [2024-12-arXiv] [📄 paper] [🔗 repo]

- RepoTransBench: A Real-World Benchmark for Repository-Level Code Translation [2024-12-arXiv] [📄 paper] [🔗 repo]

- Visual SWE-bench: Issue Resolving with Visual Data [2024-12-arXiv] [📄 paper] [🔗 repo]

- ExecRepoBench: Multi-level Executable Code Completion Evaluation [2024-12-arXiv] [📄 paper] [🔗 site]

- REPOCOD: Can Language Models Replace Programmers? REPOCOD Says 'Not Yet' [2024-10-arXiv] [📄 paper] [🔗 repo]

- M2RC-EVAL: Massively Multilingual Repository-level Code Completion Evaluation [2024-10-arXiv] [📄 paper] [🔗 repo]

- SWE-bench+: Enhanced Coding Benchmark for LLMs [2024-10-arXiv] [📄 paper]

- SWE-bench Multimodal: Multimodal Software Engineering Benchmark [2024-10-arXiv] [📄 paper] [🔗 site]

- Codev-Bench: How Do LLMs Understand Developer-Centric Code Completion? [2024-10-arXiv] [📄 paper] [🔗 repo]

- SWT-Bench: Testing and Validating Real-World Bug-Fixes with Code Agents [2024-06-arXiv] [📄 paper] [🕸️ website]

- CodeRAG-Bench: Can Retrieval Augment Code Generation? [2024-06-arXiv] [📄 paper] [🔗 repo]

- R2C2-Bench: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models [2024-06-arXiv] [📄 paper]

- RepoClassBench: Class-Level Code Generation from Natural Language Using Iterative, Tool-Enhanced Reasoning over Repository [2024-05-arXiv] [📄 paper] [🔗 repo]

- DevEval: Evaluating Code Generation in Practical Software Projects [2024-ACL-Findings] [📄 paper] [🔗 repo]

- CodAgentBench: Enhancing Code Generation with Tool-Integrated Agent Systems for Real-World Repo-level Coding Challenges [2024-ACL] [📄 paper]

- RepoBench: Benchmarking Repository-Level Code Auto-Completion Systems [2024-ICLR] [📄 paper] [🔗 repo]

- SWE-bench: Can Language Models Resolve Real-World GitHub Issues? [2024-ICLR] [📄 paper] [🔗 repo]

- CrossCodeLongEval: Repoformer: Selective Retrieval for Repository-Level Code Completion [2024-ICML] [📄 paper] [🔗 repo]

- R2E-Eval: Turning Any GitHub Repository into a Programming Agent Test Environment [2024-ICML] [📄 paper] [🔗 repo]

- RepoEval: Repository-Level Code Completion Through Iterative Retrieval and Generation [2023-EMNLP] [📄 paper] [🔗 repo]

- CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion [2023-NeurIPS] [📄 paper] [🔗 site]

- Skeleton-Guided-Translation: A Benchmarking Framework for Code Repository Translation with Fine-Grained Quality Evaluation [2025-01-arXiv] [📄 paper] [🔗 repo]

- SWE-Dev: Evaluating and Training Autonomous Feature-Driven Software Development [2025-05-arXiv] [📄 paper] [🔗 repo]

Alternative AI tools for Awesome-Repo-Level-Code-Generation

Similar Open Source Tools

navigator

Navigator is a versatile tool for navigating through complex codebases efficiently. It provides a user-friendly interface to explore code files, search for specific functions or variables, and visualize code dependencies. With Navigator, developers can easily understand the structure of a project and quickly locate relevant code snippets. The tool supports various programming languages and offers customizable settings to enhance the coding experience. Whether you are working on a small project or a large codebase, Navigator can help you streamline your development process and improve code comprehension.

c4-genai-suite

C4-GenAI-Suite is a comprehensive AI tool for generating code snippets and automating software development tasks. It leverages advanced machine learning models to assist developers in writing efficient and error-free code. The suite includes features such as code completion, refactoring suggestions, and automated testing, making it a valuable asset for enhancing productivity and code quality in software development projects.

VectorCode

VectorCode is a code repository indexing tool that helps users write better prompts for coding LLMs by providing information about the code repository being worked on. It includes a Neovim plugin and supports multiple embedding engines. The tool enhances completion results by providing project context and improves the model's understanding of closed-source or cutting-edge projects.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

Gito

Gito is a lightweight and user-friendly tool for managing and organizing your GitHub repositories. It provides a simple and intuitive interface for users to easily view, clone, and manage their repositories. With Gito, you can quickly access important information about your repositories, such as commit history, branches, and pull requests. The tool also allows you to perform common Git operations, such as pushing changes and creating new branches, directly from the interface. Gito is designed to streamline your GitHub workflow and make repository management more efficient and convenient.

pullfrog

Pullfrog is a versatile tool for managing and automating GitHub pull requests. It provides a simple and intuitive interface for developers to streamline their workflow and collaborate more efficiently. With Pullfrog, users can easily create, review, merge, and manage pull requests, all within a single platform. The tool offers features such as automated testing, code review, and notifications to help teams stay organized and productive. Whether you are a solo developer or part of a large team, Pullfrog can help you simplify the pull request process and improve code quality.

python-sdk

Python SDK is a software development kit that provides tools and resources for developers to interact with Python programming language. It simplifies the process of integrating Python code into applications and services, offering a wide range of functionalities and libraries to streamline development workflows. With Python SDK, developers can easily access and manipulate data, create automation scripts, build web applications, and perform various tasks efficiently. It is designed to enhance the productivity and flexibility of Python developers by providing a comprehensive set of tools and utilities for software development.

spec-workflow-mcp

Spec Workflow MCP is a Model Context Protocol (MCP) server that offers structured spec-driven development workflow tools for AI-assisted software development. It includes a real-time web dashboard and a VSCode extension for monitoring and managing project progress directly in the development environment. The tool supports sequential spec creation, real-time monitoring of specs and tasks, document management, an archive system, task progress tracking, an approval workflow, bug reporting, and a template system. It runs on Windows, macOS, and Linux.

promptl

Promptl is a versatile command-line tool designed to streamline the process of creating and managing prompts for user input in various programming projects. It offers a simple and efficient way to prompt users for information, validate their input, and handle different scenarios based on their responses. With Promptl, developers can easily integrate interactive prompts into their scripts, applications, and automation workflows, enhancing user experience and improving overall usability. The tool provides a range of customization options and features, making it suitable for a wide range of use cases across different programming languages and environments.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

airstate

AirState is a straightforward software development kit that enables users to integrate real-time collaboration functionalities into their web applications. With its user-friendly interface and robust capabilities, AirState simplifies the process of incorporating live collaboration features, making it an ideal choice for developers seeking to enhance the interactive elements of their projects. The SDK offers a seamless solution for creating engaging and interactive web experiences, allowing users to easily implement real-time collaboration tools without the need for extensive coding knowledge or complex configurations. By leveraging AirState, developers can streamline the development process and deliver dynamic web applications that facilitate real-time communication and collaboration among users.

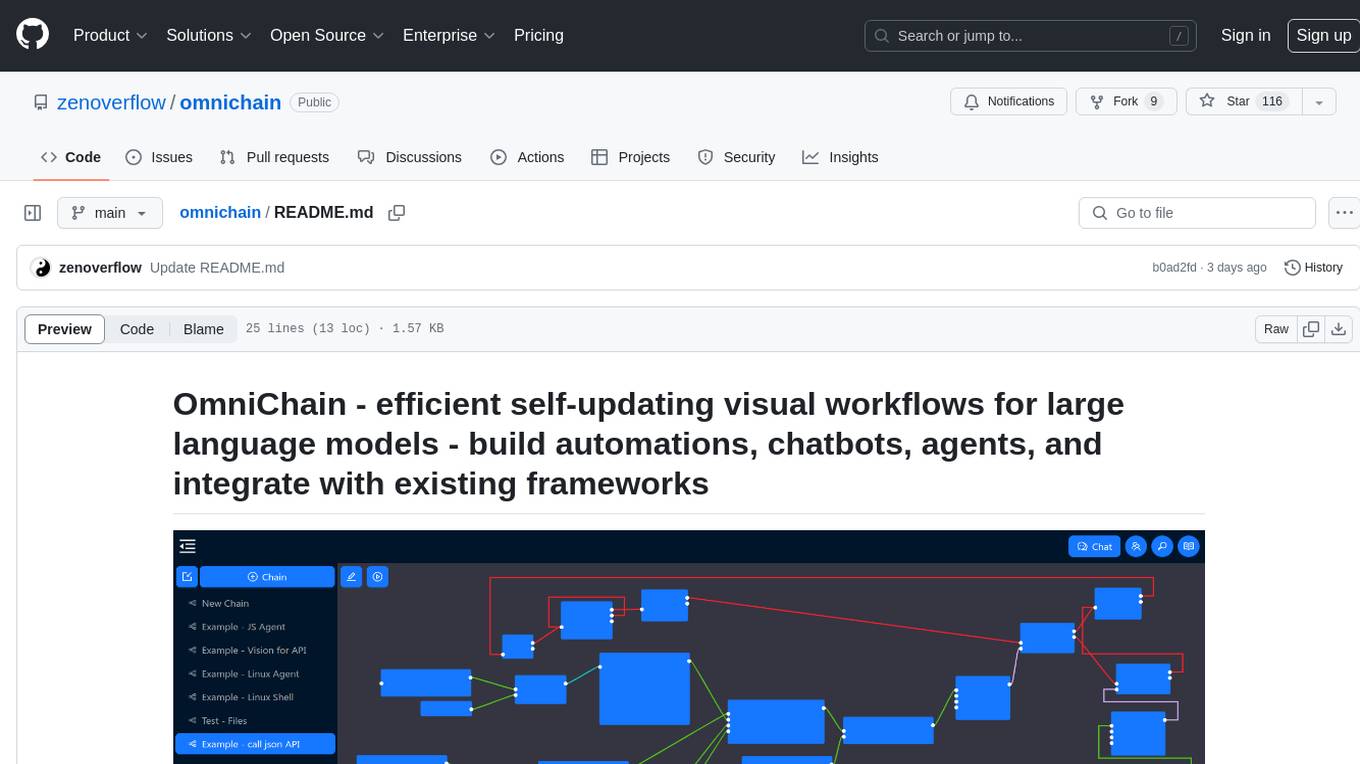

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

lightfriend

Lightfriend is a lightweight and user-friendly tool designed to assist developers in managing their GitHub repositories efficiently. It provides a simple and intuitive interface for users to perform various repository-related tasks, such as creating new repositories, managing branches, and reviewing pull requests. With Lightfriend, developers can streamline their workflow and collaborate more effectively with team members. The tool is designed to be easy to use and requires minimal setup, making it ideal for developers of all skill levels. Whether you are a beginner looking to get started with GitHub or an experienced developer seeking a more efficient way to manage your repositories, Lightfriend is the perfect companion for your GitHub workflow.

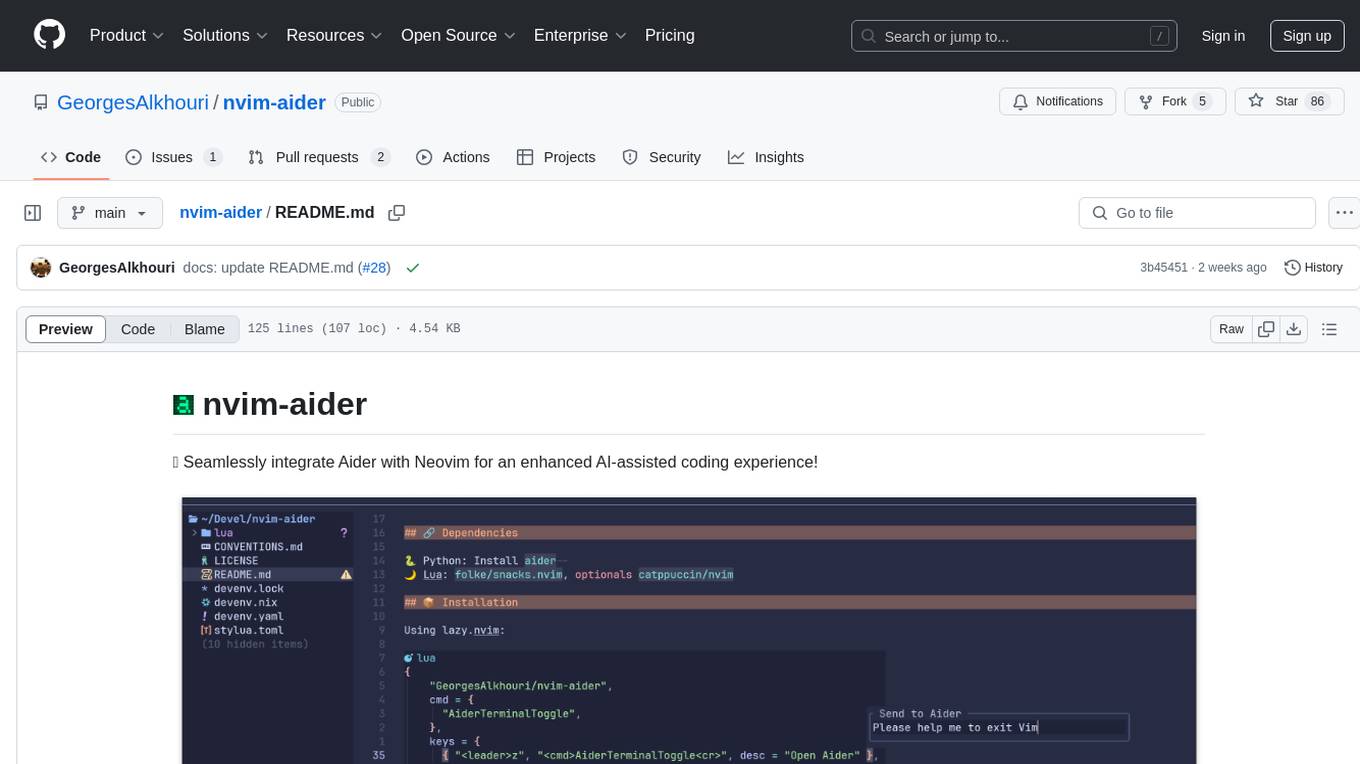

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

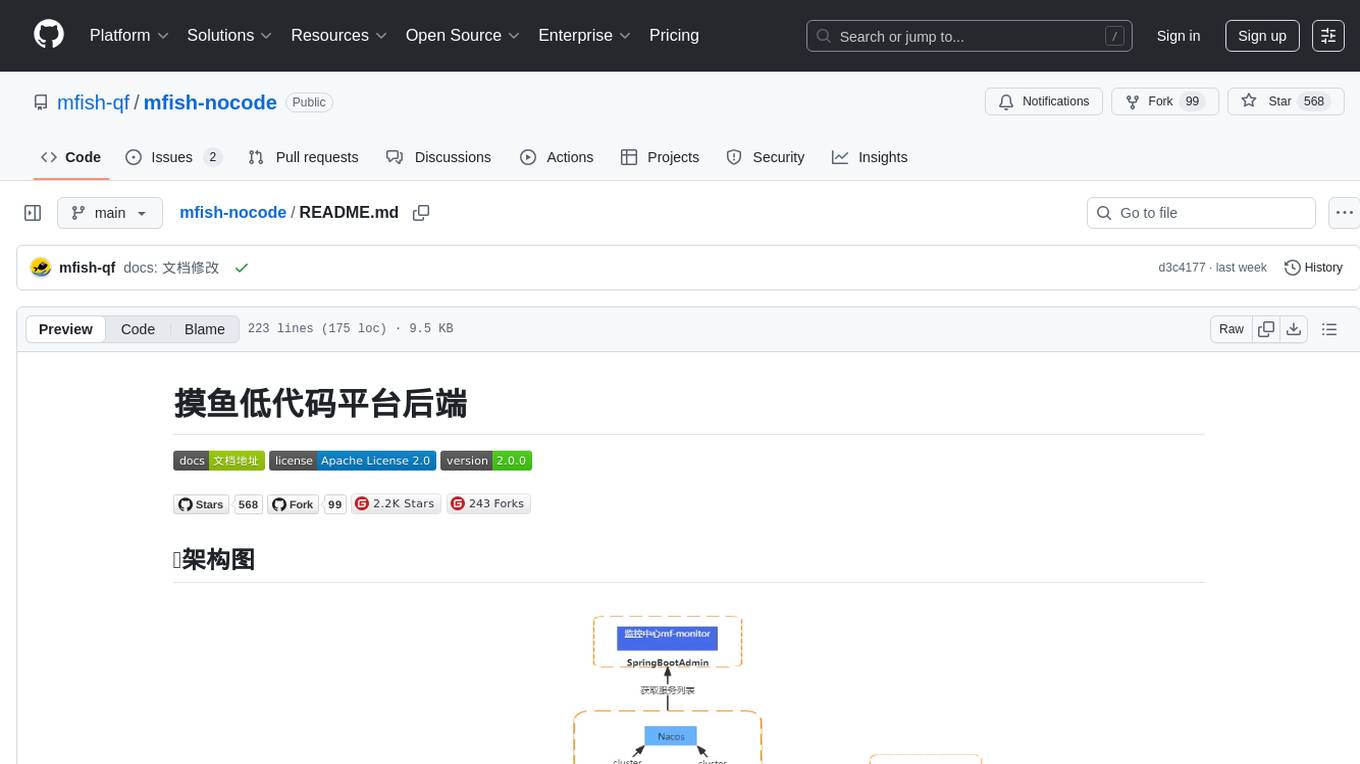

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.

For similar tasks

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

shell_gpt

ShellGPT is a command-line productivity tool powered by AI large language models (LLMs). It offers streamlined generation of shell commands, code snippets, and documentation, eliminating the need for external resources (like Google search). It supports Linux, macOS, and Windows and is compatible with all major shells, such as PowerShell, CMD, Bash, and Zsh.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

llm.nvim

llm.nvim is a plugin for Neovim that enables code completion using LLMs. It supports 'ghost-text' code completion similar to Copilot and allows users to choose their model for code generation via HTTP requests. The plugin interfaces with multiple backends like Hugging Face, Ollama, OpenAI, and TGI, providing flexibility in model selection and configuration. Users can customize the behavior of suggestions, tokenization, and model parameters to enhance their coding experience. llm.nvim also includes commands for toggling auto-suggestions and manually requesting suggestions, making it a versatile tool for developers using Neovim.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

CodeGen

CodeGen is an official release of models for Program Synthesis by Salesforce AI Research. It includes CodeGen1 and CodeGen2 models with varying parameters. The latest version, CodeGen2.5, outperforms previous models. The tool is designed for code generation tasks using large language models trained on programming and natural languages. Users can access the models through the Hugging Face Hub and utilize them for program synthesis and infill sampling. The accompanying Jaxformer library provides support for data pre-processing, training, and fine-tuning of the CodeGen models.

llm.hunyuan.T1

Hunyuan-T1 is a cutting-edge large-scale hybrid Mamba reasoning model driven by reinforcement learning. It has been officially released as an upgrade to the Hunyuan Thinker-1-Preview model. The model showcases exceptional performance in deep reasoning tasks, leveraging the TurboS base and Mamba architecture to enhance inference capabilities and align with human preferences. With a focus on reinforcement learning training, the model excels in various reasoning tasks across different domains, showcasing superior abilities in mathematical, logical, scientific, and coding reasoning. Through innovative training strategies and alignment with human preferences, Hunyuan-T1 demonstrates remarkable performance in public benchmarks and internal evaluations, positioning itself as a leading model in the field of reasoning.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable end-to-end automation tool that depends only on what is shown on your screen, not on the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots that play video games. Its guides cover running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, contributing to BotLab, and the syntax and core library of the Elm programming language. The repository also includes example bots for games such as EVE Online, Tribal Wars 2, and Elvenar, along with tools for memory reading development.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell scripts and executables for common tasks, handles URL encoding, and lets users share the resulting curl, wget, or httpie command line. Values that change can be kept in environment variables or .env files, and the API output can be piped onward for further processing. Ain targets users who work with many APIs: it uses a simple file format and delegates the actual calls to curl, wget, or httpie.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for the default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord, and a project roadmap is available to track progress. Users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.
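
To make the idea of deriving an API from a function declaration, type hints, and docstring more concrete, here is a minimal sketch using only the Python standard library. It is not Robocorp's implementation or the Action Server's API: `describe_action`, the `_JSON_TYPES` mapping, and the `greet` example function are all hypothetical names chosen for illustration.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from Python types to JSON-schema-style type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_action(func):
    """Build a small API description from a function's signature, hints, and docstring."""
    hints = get_type_hints(func)
    signature = inspect.signature(func)
    properties = {}
    required = []
    for name, param in signature.parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
        "returns": _JSON_TYPES.get(hints.get("return"), "string"),
    }


def greet(name: str, times: int = 1) -> str:
    """Return a greeting repeated a number of times."""
    return " ".join(f"Hello, {name}!" for _ in range(times))


spec = describe_action(greet)
```

In this sketch, `spec` holds the function name, its docstring as the description, and a parameter schema in which `name` is required and `times` is optional. A real action server would additionally expose such a description over HTTP, which is outside the scope of this example.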

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the resulting steps. It course-corrects by sending current screenshots to the language model. The tool supports macOS, Linux, and Windows, and requires an OpenAI API key for access to GPT-4V. It can automate tasks such as creating meal plans, and it supports setting up custom language model backends. Open Interface currently struggles with accurate spatial reasoning, keeping track of itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to address these limits with better models trained on video walkthroughs. User requests are priced between $0.05 and $0.20, and features include interrupting the app and primary display visibility in multi-monitor setups.

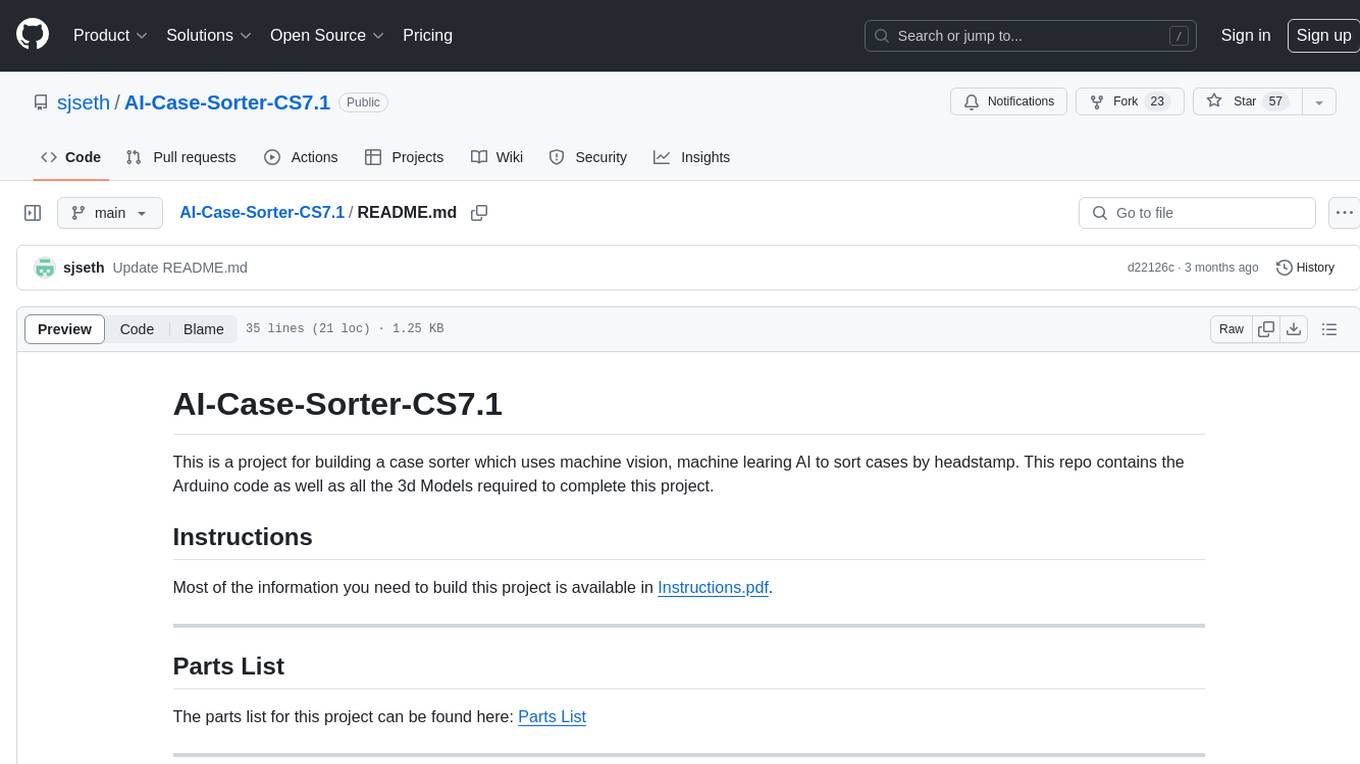

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter that uses machine vision and machine learning to sort cases by headstamp. The repository includes the Arduino code and 3D models needed for the project.