Memento

Official Code of Memento: Fine-tuning LLM Agents without Fine-tuning LLMs

Stars: 1009

Memento is a memory-based continual-learning framework for LLM agents. It pairs a planner–executor architecture with case-based reasoning: successful and failed trajectories are stored in a Case Bank and retrieved to guide later planning and execution, so agents improve from experience without any update to model weights. Tools for web search, document processing, code execution, and media analysis are exposed through a unified MCP interface.

README:

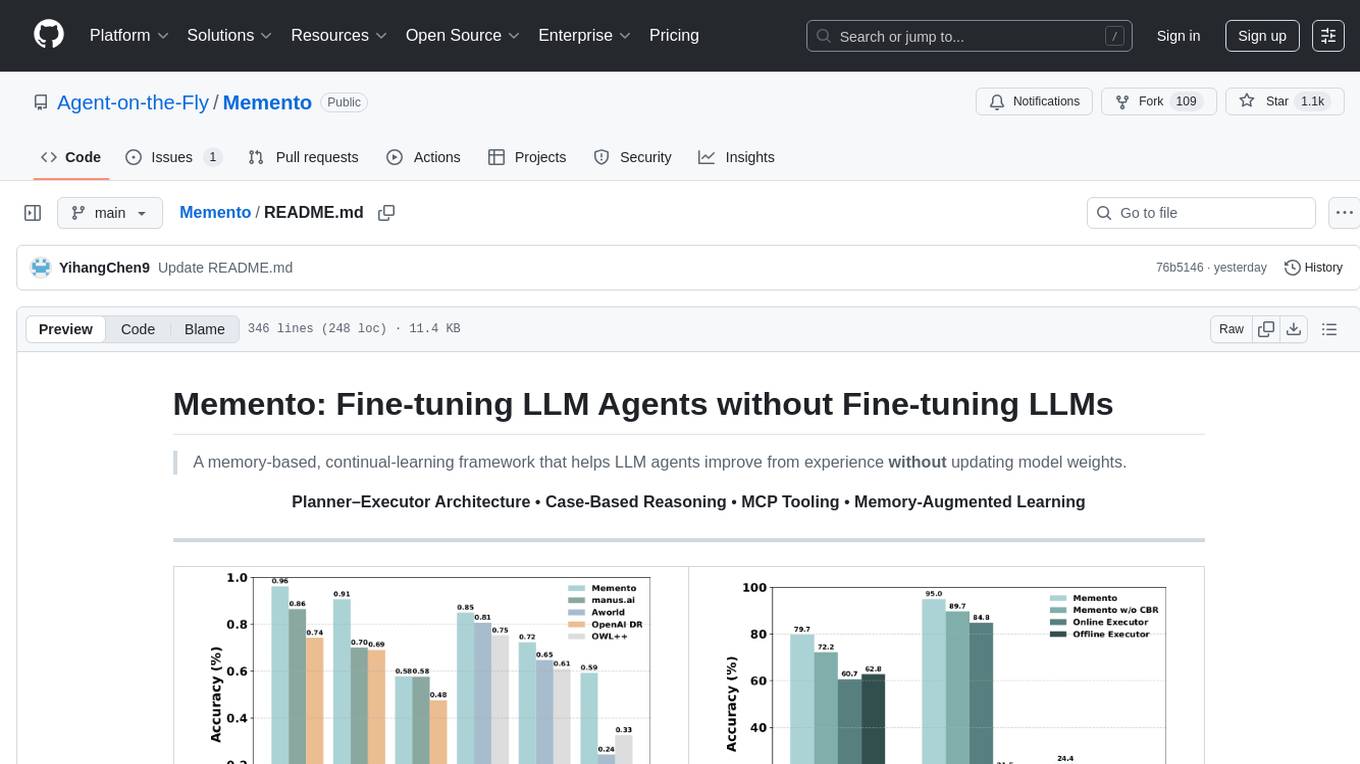

A memory-based, continual-learning framework that helps LLM agents improve from experience without updating model weights.

Planner–Executor Architecture • Case-Based Reasoning • MCP Tooling • Memory-Augmented Learning

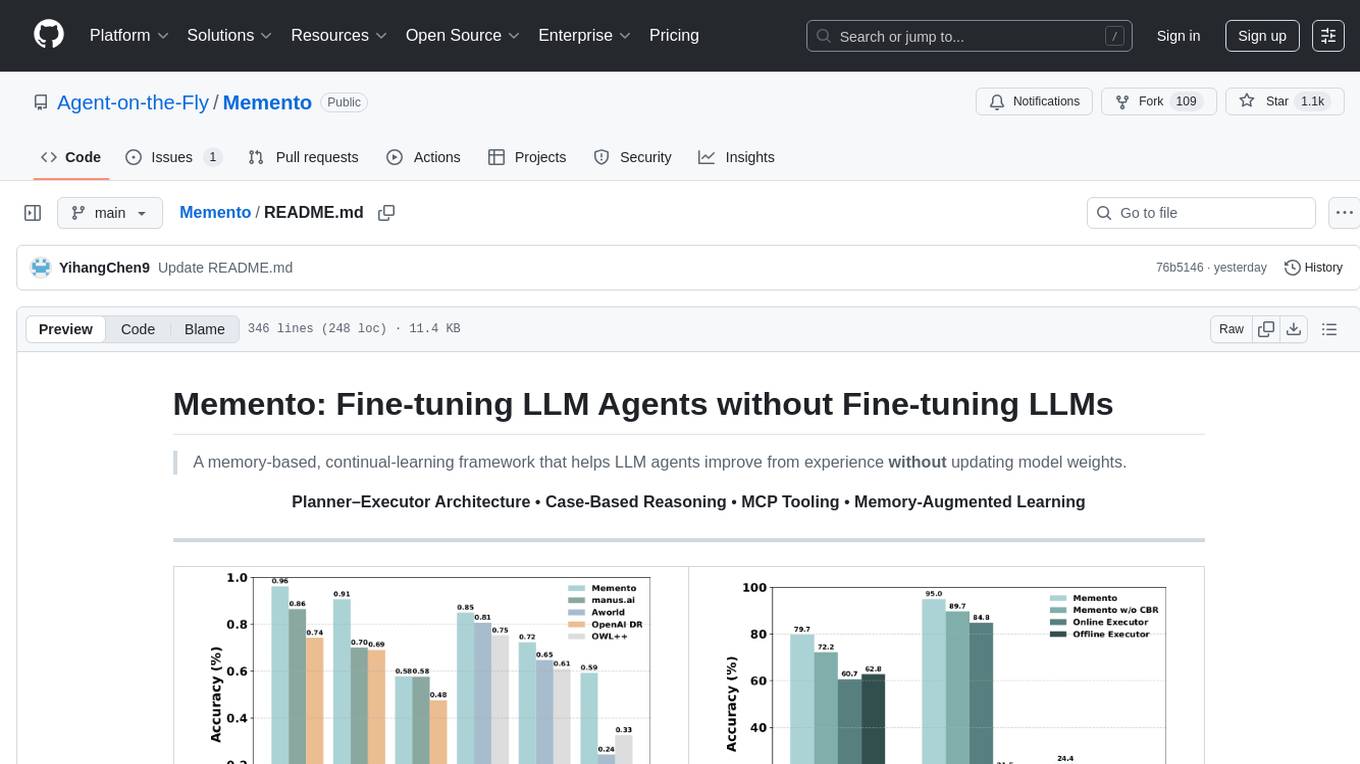

Memento vs. Baselines on GAIA validation and test sets. |

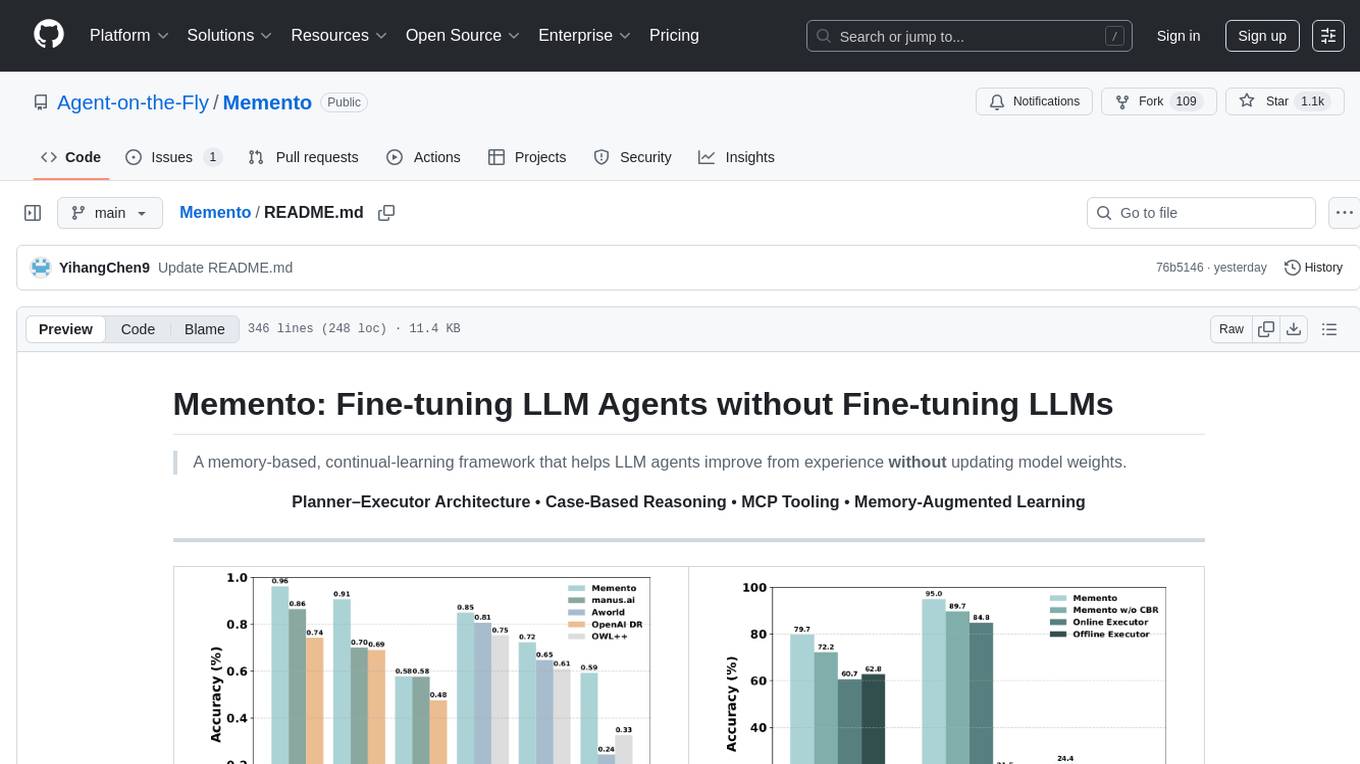

Ablation study of Memento across benchmarks. |

Continual learning curves across memory designs. |

Memento’s accuracy improvement on OOD datasets. |

- [2025.09.03] We’ve set up a WeChat group to make it easier to collaborate and exchange ideas on this project. Welcome to join the Group to share your thoughts, ask questions, or contribute your ideas! 🔥 🔥 🔥 Join our WeChat Group Now!

- [2025.08.30] We’re excited to announce that our non-parametric Case-Based Reasoning inference code is now officially open-sourced! 🎉

- [2025.08.28] We’ve created a Discord server to make discussions and collaboration around this project easier. Feel free to join and share your thoughts, ask questions, or contribute ideas! 🔥 🔥 🔥 Join our Discord!

- [2025.08.27] Thanks for your interest in our work! We’ll release our CBR code next week and our Parametric Memory code next month, and we’ll keep posting updates as development continues.

- [2025.08.27] We added a new Crawler MCP in server/ai_crawler.py for web crawling and query-aware content compression to reduce token cost.

- [2025.08.26] We added the SerpAPI (https://serpapi.com/search-api) MCP tool to help you avoid using the search Docker and speed up development.

- No LLM weight updates. Memento reframes continual learning as memory-based online reinforcement learning over a memory-augmented MDP. A neural case-selection policy guides actions; experiences are stored and reused via efficient Read/Write operations.

- Two-stage planner–executor loop. A CBR-driven Planner decomposes tasks and retrieves relevant cases; an Executor runs each subtask as an MCP client, orchestrating tools and writing back outcomes.

- Comprehensive tool ecosystem. Built-in support for web search, document processing, code execution, image/video analysis, and more through a unified MCP interface.

- Strong benchmark performance. Achieves competitive results across GAIA, DeepResearcher, SimpleQA, and HLE benchmarks.
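The two-stage loop described above can be sketched as follows. This is a minimal illustration, not Memento's actual client code: `PlannerExecutorLoop` and the `plan`, `execute`, and `judge` callables are hypothetical names, and the stubs stand in for LLM calls and MCP tool invocations.

```python
from dataclasses import dataclass, field
from typing import Callable

# A stored experience: (task, subtasks that were tried, reward).
Experience = tuple[str, list[str], float]


@dataclass
class PlannerExecutorLoop:
    # Hypothetical callables (assumptions, not Memento's API):
    #   plan(task, past_experiences) -> list of subtasks
    #   execute(subtask)             -> result string
    #   judge(task, results)         -> scalar reward
    plan: Callable[[str, list[Experience]], list[str]]
    execute: Callable[[str], str]
    judge: Callable[[str, list[str]], float]
    memory: list[Experience] = field(default_factory=list)

    def run(self, task: str) -> list[str]:
        # Read: the planner sees past experiences when decomposing the task.
        subtasks = self.plan(task, list(self.memory))
        # Execute each subtask in order (a real executor would call MCP tools).
        results = [self.execute(subtask) for subtask in subtasks]
        # Write: record the outcome, successful or not, for later reuse.
        self.memory.append((task, subtasks, self.judge(task, results)))
        return results


# Stub components so the sketch runs without any model or tool server.
loop = PlannerExecutorLoop(
    plan=lambda task, memory: [f"search: {task}", f"answer: {task}"],
    execute=lambda subtask: subtask.upper(),
    judge=lambda task, results: 1.0 if results else 0.0,
)
results = loop.run("capital of France")
```

No weights change anywhere in this loop; the only thing that grows between runs is `memory`.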

Learn from experiences, not gradients. Memento logs successful & failed trajectories into a Case Bank and retrieves by value to steer planning and execution—enabling low-cost, transferable, and online continual learning.
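A Case Bank with Read/Write operations might look roughly like the sketch below. It is an assumption-laden stand-in: retrieval here ranks cases by plain word-overlap (Jaccard) similarity rather than by a learned value or neural case-selection policy, and `Case`, `CaseBank`, and `similarity` are illustrative names, not identifiers from the repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    state: str     # task description
    action: str    # plan or answer that was tried
    reward: float  # 1.0 for success, 0.0 for failure


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets (stand-in for a learned retriever)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


class CaseBank:
    def __init__(self) -> None:
        self._cases: list[Case] = []

    def write(self, case: Case) -> None:
        """Store one experience, whether it succeeded or failed."""
        self._cases.append(case)

    def read(self, query: str, k: int = 4) -> list[Case]:
        """Return the k stored cases whose state is most similar to the query."""
        ranked = sorted(
            self._cases,
            key=lambda case: similarity(query, case.state),
            reverse=True,
        )
        return ranked[:k]


bank = CaseBank()
bank.write(Case("find the population of Paris", "search then read infobox", 1.0))
bank.write(Case("convert a PDF table to CSV", "guess the values", 0.0))
bank.write(Case("find the population of Berlin", "search then read infobox", 1.0))
top = bank.read("find the population of Rome", k=2)
```

Failed cases are kept on purpose: a planner can use them as examples of what to avoid.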

- Meta-Planner: Breaks down high-level queries into executable subtasks using GPT-4.1

- Executor: Executes individual subtasks using o3 or other models via MCP tools

- Case Memory: Stores final-step tuples (s_T, a_T, r_T) for experience replay

- MCP Tool Layer: Unified interface for external tools and services

- Web Research: Live search and controlled crawling via SearxNG

- Document Processing: Multi-format support (PDF, Office, images, audio, video)

- Code Execution: Sandboxed Python workspace with security controls

- Data Analysis: Excel processing, mathematical computations

- Media Analysis: Image captioning, video narration, audio transcription

- Python 3.11+

- OpenAI API key (or compatible API endpoint)

- SearxNG instance for web search

- FFmpeg (system-level binary required for video processing)
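Before installing, the local prerequisites can be checked with a few lines of standard-library Python. This is a convenience sketch, not part of the repository; `check_prerequisites` is a hypothetical helper, and it does not attempt to verify the SearxNG instance or the API key.

```python
import shutil
import sys


def check_prerequisites(min_python: tuple[int, int] = (3, 11)) -> dict[str, bool]:
    """Report whether the Python version and the FFmpeg binary look usable."""
    return {
        # sys.version_info compares element-wise against a plain tuple.
        "python": sys.version_info >= min_python,
        # shutil.which returns None when the binary is not on PATH.
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


for name, ok in check_prerequisites().items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```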

# Clone repository

git clone https://github.com/Agent-on-the-Fly/Memento

cd Memento

# Install uv if not already installed

curl -LsSf https://astral.sh/uv/install.sh | sh

# Sync dependencies and create virtual environment automatically

uv sync

# Activate the virtual environment

source .venv/bin/activate # On Windows: .venv\Scripts\activate

FFmpeg is required for video processing functionality. The ffmpeg-python package in our dependencies requires a system-level FFmpeg binary.

Windows:

# Option 1: Using Conda (Recommended for isolated environment)

conda install -c conda-forge ffmpeg

# Option 2: Download from official website

# Visit https://ffmpeg.org/download.html and add to PATH

macOS:

# Using Homebrew

brew install ffmpeg

Linux:

# Debian/Ubuntu

sudo apt-get update && sudo apt-get install ffmpeg

# Install and setup crawl4ai

crawl4ai-setup

crawl4ai-doctor

# Install playwright browsers

playwright install

After creating the .env file, you need to configure the following API keys and service endpoints:

# OPENAI API

OPENAI_API_KEY=your_openai_api_key_here

OPENAI_BASE_URL=https://api.openai.com/v1 # or your custom endpoint

#===========================================

# Tools & Services API

#===========================================

# Chunkr API (https://chunkr.ai/)

CHUNKR_API_KEY=your_chunkr_api_key_here

# Jina API

JINA_API_KEY=your_jina_api_key_here

# ASSEMBLYAI API

ASSEMBLYAI_API_KEY=your_assemblyai_api_key_here

Note: Replace your_*_api_key_here with your actual API keys. Some services are optional depending on which tools you plan to use.
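To spot keys that are missing or still hold a placeholder, a small check like the following can be run against the contents of .env. It is a sketch only: `parse_env` and `unset_keys` are hypothetical helpers, the key names are taken from the sample above, and which of them are truly required depends on the tools you enable.

```python
EXPECTED_KEYS = (
    "OPENAI_API_KEY",
    "OPENAI_BASE_URL",
    "CHUNKR_API_KEY",
    "JINA_API_KEY",
    "ASSEMBLYAI_API_KEY",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and full-line comments."""
    values: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop a trailing inline comment such as "# or your custom endpoint".
        values[key.strip()] = value.split(" #", 1)[0].strip()
    return values


def unset_keys(values: dict[str, str], keys: tuple[str, ...] = EXPECTED_KEYS) -> list[str]:
    """Return keys that are absent, empty, or still set to a your_* placeholder."""
    return [k for k in keys if not values.get(k) or values[k].startswith("your_")]


sample = """\
# OPENAI API
OPENAI_API_KEY=sk-test
OPENAI_BASE_URL=https://api.openai.com/v1 # or your custom endpoint
JINA_API_KEY=your_jina_api_key_here
"""
values = parse_env(sample)
print(unset_keys(values))
```

For a real file, pass `Path(".env").read_text()` (from `pathlib`) to `parse_env` instead of the sample string.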

For web search capabilities, set up SearxNG. You can follow https://github.com/searxng/searxng-docker/ to set up the Docker deployment, then use the settings provided in this repository's searxng-docker/ directory.

# In a new terminal

cd ./Memento/searxng-docker

docker compose up -d

python client/agent.py

- Planner Model: Defaults to gpt-4.1 for task decomposition

- Executor Model: Defaults to o3 for task execution

- Custom Models: Support for any OpenAI-compatible API

- Search: Configure SearxNG instance URL

- Code Execution: Customize import whitelist and security settings

- Document Processing: Set cache directories and processing limits
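As an illustration of what an import whitelist for code execution can look like, the sketch below statically scans a snippet with Python's `ast` module and lists imported top-level modules that are not allowed. It is not the check used in server/code_agent.py, whose details are not shown here; `disallowed_imports` is a hypothetical name.

```python
import ast


def disallowed_imports(code: str, whitelist: set[str]) -> list[str]:
    """Return top-level modules imported by `code` that are not in the whitelist."""
    blocked: list[str] = []
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (level > 0) are reported under a fixed label.
            names = ["<relative>"] if node.level else [node.module]
        else:
            continue
        for name in names:
            root = name.split(".")[0]
            if root not in whitelist and root not in blocked:
                blocked.append(root)
    return blocked


snippet = "import math\nimport os.path\nfrom subprocess import run\n"
print(disallowed_imports(snippet, {"math", "json"}))
```

A static scan like this does not catch dynamic imports such as `__import__("os")` or `importlib.import_module`, so it complements a sandbox rather than replacing one.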

- GAIA: 87.88% (Val, Pass@3 Top-1) and 79.40% (Test)

- DeepResearcher: 66.6% F1 / 80.4% PM, with +4.7–9.6 absolute gains on OOD datasets

- SimpleQA: 95.0%

- HLE: 24.4% PM (close to GPT-5 at 25.32%)

- Small, high-quality memory works best: Retrieval K=4 yields peak F1/PM

- Planning + CBR consistently improves performance

- Concise, structured planning outperforms verbose deliberation

Memento/

├── client/ # Main agent implementation

│ ├── agent.py # Hierarchical client with planner–executor

│ └── no_parametric_cbr.py # Non-parametric case-based reasoning

├── server/ # MCP tool servers

│ ├── code_agent.py # Code execution & workspace management

│ ├── search_tool.py # Web search via SearxNG

│ ├── serp_search.py # SERP-based search tool

│ ├── documents_tool.py # Multi-format document processing

│ ├── image_tool.py # Image analysis & captioning

│ ├── video_tool.py # Video processing & narration

│ ├── excel_tool.py # Spreadsheet processing

│ ├── math_tool.py # Mathematical computations

│ ├── craw_page.py # Web page crawling

│ └── ai_crawler.py # Query-aware compression crawler

├── interpreters/ # Code execution backends

│ ├── docker_interpreter.py

│ ├── e2b_interpreter.py

│ ├── internal_python_interpreter.py

│ └── subprocess_interpreter.py

├── memory/ # Memory components / data

├── data/ # Sample data / cases

├── searxng-docker/ # SearxNG Docker setup

├── Figure/ # Figures for README/paper

├── README.md

├── requirements.txt

└── LICENSE

- Create a new FastMCP server in the server/ directory

- Implement your tool functions with proper error handling

- Register the tool with the MCP protocol

- Update the client's server list in agent.py
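The steps above can be sketched with the `FastMCP` class from the MCP Python SDK (`mcp` package). The tool itself (`word_count`), the server name, and the `build_server` helper are made-up examples, and how the server is listed in agent.py is not shown here, so treat this as a starting point rather than the project's template.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words in the given text."""
    if not isinstance(text, str):
        # Fail with a clear message instead of an obscure attribute error.
        raise TypeError("text must be a string")
    return len(text.split())


def build_server():
    """Create a FastMCP server exposing word_count (requires the `mcp` package)."""
    # Imported lazily so the tool logic above can be tested without the SDK.
    from mcp.server.fastmcp import FastMCP

    server = FastMCP("word-count")  # hypothetical server name
    # Equivalent to decorating word_count with @server.tool().
    server.tool()(word_count)
    return server
```

To serve the tool over stdio, call `build_server().run(transport="stdio")` from a script in server/, then add that script to the client's server list.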

Extend the interpreters/ module to add new execution backends:

from interpreters.base import BaseInterpreter

class CustomInterpreter(BaseInterpreter):

    async def execute(self, code: str) -> str:

        # Your custom execution logic

        pass

- [ ] Add Case Bank Reasoning: Implement memory-based case retrieval and reasoning system

- [ ] Add User Personal Memory Mechanism: Implement user-preference search

- [ ] Refine Tools & Add More Tools: Enhance existing tools and expand the tool ecosystem

- [ ] Test More New Benchmarks: Evaluate performance on additional benchmark datasets

- Long-horizon tasks: GAIA Level-3 remains challenging due to compounding errors

- Frontier knowledge: HLE performance limited by tooling alone

- Open-source coverage: Limited executor validation in fully open pipelines

- Some parts of the code in the toolkits and interpreters are adapted from Camel-AI.

If Memento helps your work, please cite:

@article{zhou2025agentfly,

title={AgentFly: Fine-tuning LLM Agents without Fine-tuning LLMs},

author={Zhou, Huichi and Chen, Yihang and Guo, Siyuan and Yan, Xue and Lee, Kin Hei and Wang, Zihan and Lee, Ka Yiu and Zhang, Guchun and Shao, Kun and Yang, Linyi and others},

journal={arXiv preprint arXiv:2508.16153},

year={2025}

}

@article{huang2025deep,

title={Deep Research Agents: A Systematic Examination And Roadmap},

author={Huang, Yuxuan and Chen, Yihang and Zhang, Haozheng and Li, Kang and Fang, Meng and Yang, Linyi and Li, Xiaoguang and Shang, Lifeng and Xu, Songcen and Hao, Jianye and others},

journal={arXiv preprint arXiv:2506.18096},

year={2025}

}

For a broader overview, please check out our survey: Github

We welcome contributions! Please see our contributing guidelines for:

- Bug reports and feature requests

- Code contributions and pull requests

- Documentation improvements

- Tool and interpreter extensions

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Memento

Similar Open Source Tools

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

lightfriend

Lightfriend is a lightweight and user-friendly tool designed to assist developers in managing their GitHub repositories efficiently. It provides a simple and intuitive interface for users to perform various repository-related tasks, such as creating new repositories, managing branches, and reviewing pull requests. With Lightfriend, developers can streamline their workflow and collaborate more effectively with team members. The tool is designed to be easy to use and requires minimal setup, making it ideal for developers of all skill levels. Whether you are a beginner looking to get started with GitHub or an experienced developer seeking a more efficient way to manage your repositories, Lightfriend is the perfect companion for your GitHub workflow.

Companion

Companion is a software tool designed to provide support and enhance development. It offers various features and functionalities to assist users in their projects and tasks. The tool aims to be user-friendly and efficient, helping individuals and teams to streamline their workflow and improve productivity.

Gito

Gito is a lightweight and user-friendly tool for managing and organizing your GitHub repositories. It provides a simple and intuitive interface for users to easily view, clone, and manage their repositories. With Gito, you can quickly access important information about your repositories, such as commit history, branches, and pull requests. The tool also allows you to perform common Git operations, such as pushing changes and creating new branches, directly from the interface. Gito is designed to streamline your GitHub workflow and make repository management more efficient and convenient.

pullfrog

Pullfrog is a versatile tool for managing and automating GitHub pull requests. It provides a simple and intuitive interface for developers to streamline their workflow and collaborate more efficiently. With Pullfrog, users can easily create, review, merge, and manage pull requests, all within a single platform. The tool offers features such as automated testing, code review, and notifications to help teams stay organized and productive. Whether you are a solo developer or part of a large team, Pullfrog can help you simplify the pull request process and improve code quality.

airstate

AirState is a straightforward software development kit that enables users to integrate real-time collaboration functionalities into their web applications. With its user-friendly interface and robust capabilities, AirState simplifies the process of incorporating live collaboration features, making it an ideal choice for developers seeking to enhance the interactive elements of their projects. The SDK offers a seamless solution for creating engaging and interactive web experiences, allowing users to easily implement real-time collaboration tools without the need for extensive coding knowledge or complex configurations. By leveraging AirState, developers can streamline the development process and deliver dynamic web applications that facilitate real-time communication and collaboration among users.

vibe

Vibe Design System is a collection of packages for React.js development, providing components, styles, and guidelines to streamline the development process and enhance user experience. It includes a Core component library, Icons library, Testing utilities, Codemods, and more. The system also features an MCP server for intelligent assistance with component APIs, usage examples, icons, and best practices. Vibe 2 is no longer actively maintained, with users encouraged to upgrade to Vibe 3 for the latest improvements and ongoing support.

intelligent-app-workshop

Welcome to the envisioning workshop designed to help you build your own custom Copilot using Microsoft's Copilot stack. This workshop aims to rethink user experience, architecture, and app development by leveraging reasoning engines and semantic memory systems. You will utilize Azure AI Foundry, Prompt Flow, AI Search, and Semantic Kernel. Work with Miyagi codebase, explore advanced capabilities like AutoGen and GraphRag. This workshop guides you through the entire lifecycle of app development, including identifying user needs, developing a production-grade app, and deploying on Azure with advanced capabilities. By the end, you will have a deeper understanding of leveraging Microsoft's tools to create intelligent applications.

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

yao

YAO is an open-source application engine written in Golang, suitable for developing business systems, website/APP API, admin panel, and self-built low-code platforms. It adopts a flow-based programming model to implement functions by writing YAO DSL or using JavaScript. Yao allows developers to create web services by processes, creating a database model, writing API services, and describing dashboard interfaces just by JSON for web & hardware, and 10x productivity. It is based on the flow-based programming idea, developed in Go language, and supports multiple ways to expand the data stream processor. Yao has a built-in data management system, making it suitable for quickly making various management backgrounds, CRM, ERP, and other internal enterprise systems. It is highly versatile, efficient, and performs better than PHP, JAVA, and other languages.

crewAI-tools

This repository provides a guide for setting up tools for crewAI agents to enhance functionality. It offers steps to equip agents with ready-to-use tools and create custom ones. Tools are expected to return strings for generating responses. Users can create tools by subclassing BaseTool or using the tool decorator. Contributions are welcome to enrich the toolset, and guidelines are provided for contributing. The development setup includes installing dependencies, activating virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. The goal is to empower AI solutions through advanced tooling.

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

For similar tasks

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

comfyui_prompt_assistant

ComfyUI Prompt Assistant is a plugin that enables prompt word translation, expansion, preset tag insertion, image reverse prompt words, and history record functions without adding nodes. It offers features like UI optimization, avoiding scroll bar overlap, tag popup window scrollbar fix, and more. Users can manually install the latest version from the Releases section. The tool supports various functionalities like image reverse, Kontext presets, translation nodes, and custom rules. It also provides features for tag insertion, LLM expansion, translation switching between Baidu and LLM, and history management.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

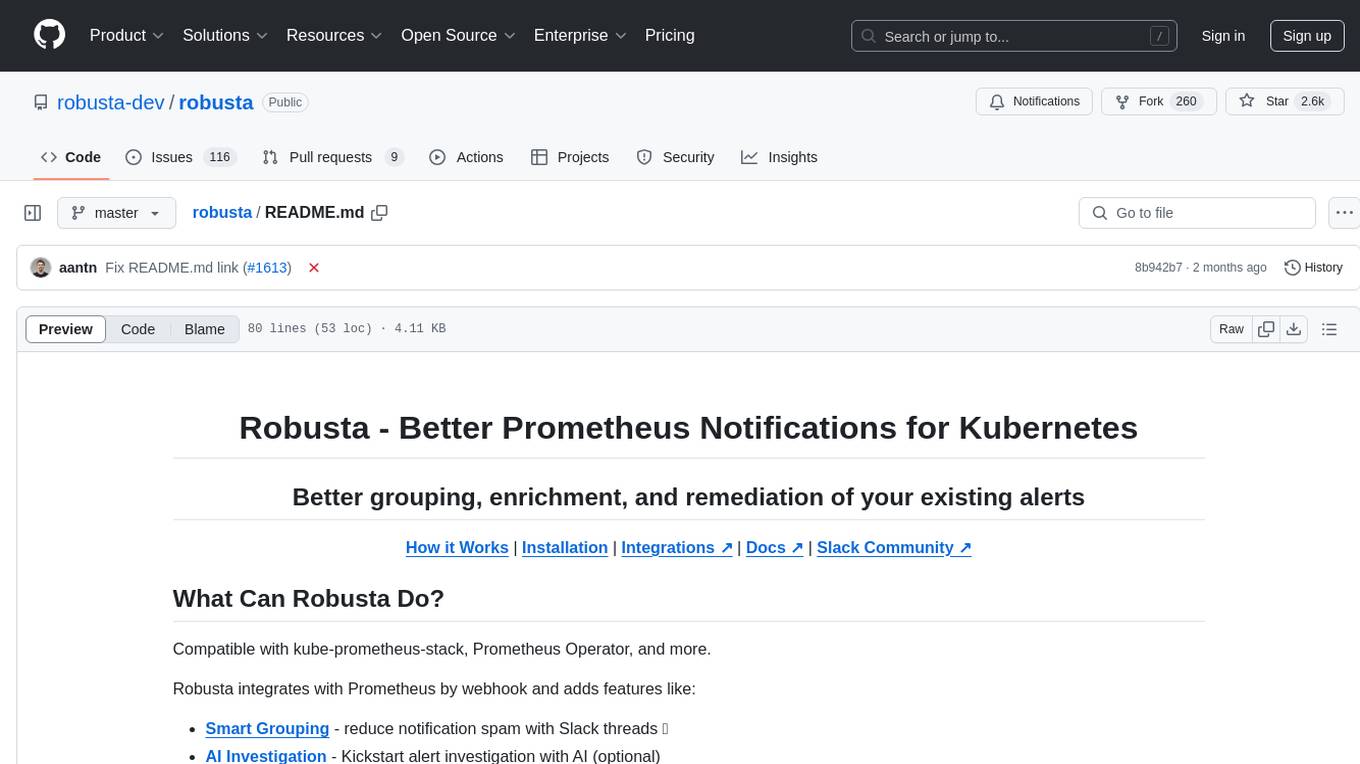

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

cursor-agent-tracking

Cursor Agent History Tracking System is a simple tool to maintain context and track changes in conversations with Cursor when it's in AGENT mode. It ensures continuity even if the AI 'forgets' previous interactions. The system includes templates for starting chat sessions, tracking changes, and maintaining project status and goals. Users can modify the templates to suit their specific needs while following best practices for consistent formatting and documentation.

git-mcp-server

A secure and scalable Git MCP server providing AI agents with powerful version control capabilities for local and serverless environments. It offers 28 comprehensive Git operations organized into seven functional categories, resources for contextual information about the Git environment, and structured prompt templates for guiding AI agents through complex workflows. The server features declarative tools, robust error handling, pluggable authentication, abstracted storage, full-stack observability, dependency injection, and edge-ready architecture. It also includes specialized features for Git integration such as cross-runtime compatibility, provider-based architecture, optimized Git execution, working directory management, configurable Git identity, safety features, and commit signing.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.