comfyui_prompt_assistant

提示词小助手可以一键调用智谱、硅基流动、gemini、本地ollama、百度等大语言模型服务,实现提示词翻译、润色扩写、图片反推。支持提示词预设实现一键插入、历史提示词查找等功能。是一个全能型提示词插件。The Prompt Assistant enables one-click access to LLMs/VLMs for prompt translation, expansion, and image captioning. It also supports one-click preset insertion and historical prompt search.

Stars: 772

ComfyUI Prompt Assistant is a plugin that enables prompt word translation, expansion, preset tag insertion, image reverse prompt words, and history record functions without adding nodes. It offers features like UI optimization, avoiding scroll bar overlap, tag popup window scrollbar fix, and more. Users can manually install the latest version from the Releases section. The tool supports various functionalities like image reverse, Kontext presets, translation nodes, and custom rules. It also provides features for tag insertion, LLM expansion, translation switching between Baidu and LLM, and history management.

README:

🎀特别感谢以下朋友提出的宝贵方案!

Cereza69、LAOGOU-666、H、小海、foryoung365、xu...

这是一个无需添加节点,即可实现提示词翻译、扩写、预设标签插入、图片反推提示词、历史记录功能等功能的comfyUI插件。

📍手动安装请从右侧Releases下载最新版本。

[2025-8-28]V1.1.3:

- 优化小助手UI,实现自动避开滚动条,避免重叠误触

- 修复标签弹窗无滚动条,内容显示不全的问题

[2025-8-23]V1.1.2:

- 重构节点,解决执行时产生多队列和重复执行的问题

- API配置界面添加模型参数,某些报错可以尝试调整最大token数解决

- 简化图像反推流程,提升反推速度

- 修复了标签按需加载时,无法搜索到未加载的标签

[2025-8-10]V1.1.1:

-修复图像反推节点报错

[2025-8-10]V1.1.0:

- 修改了UI交互

- 支持所有兼容OpenAI SDK API

- 新增自定自定义规则

- 新增自定义标签

- 新增图像反推、Kontext预设、翻译节点节点

[2025-6-24]V1.0.6:

- 修复了一些界面bug

[2025-6-24]V1.0.5:

-

修复新版创建使用选择工具栏创建kontext节点时,出现小助手UI异常问题

-

修复可能网络环境问题造成的智谱无法服务无法使用问题

-

修复可能出现实例清除出错导致工作流无法加载问题

-

修复AIGODLIKE-COMFYUI-TRANSLATION汉化插件导致标签弹窗打开卡住的问题

-

新增标签面板可以调整大小

-

优化UI资源加载机制

[2025-6-24]V1.0.3:

-

重构了api请求服务,避免apikey暴露在前端

-

修改了配置的保存和读取机制,解决配置无法保存问题

-

修复了少许bug

[2025-6-21]V1.0.2:

- 修复了少许bug

[2025-6-15]V1.0.0:

-

一键插入tag

-

支持llm扩写

-

支持百度翻译和llm翻译切换

-

图片反推提示词

-

历史、撤销、重做

在Manager中输入“Prompt Assistant”或“提示词小助手”,点击Install,选择最新版本安装。

-

从克隆仓库中下载最新版本 解压缩到ComfyUI/custom_nodes目录下

-

重启 ComfyUI

目前小助手的翻译功能支持百度和智谱两种翻译服务,都是免费的。百度机翻速度快,智谱则是 AI翻译,更加准确。你可以根据自己的需求,进行切换 。而扩写和提示词反推则必须要使用智谱的服务来实现。

申请教程,可查看作者 B 站视频:

百度翻译申请入口:通用文本翻译API链接

智谱API申请入口:智谱API申请

硅基流动 api申请入口:硅基流动API申请

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for comfyui_prompt_assistant

Similar Open Source Tools

comfyui_prompt_assistant

ComfyUI Prompt Assistant is a plugin that enables prompt word translation, expansion, preset tag insertion, image reverse prompt words, and history record functions without adding nodes. It offers features like UI optimization, avoiding scroll bar overlap, tag popup window scrollbar fix, and more. Users can manually install the latest version from the Releases section. The tool supports various functionalities like image reverse, Kontext presets, translation nodes, and custom rules. It also provides features for tag insertion, LLM expansion, translation switching between Baidu and LLM, and history management.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

TensorRT-LLM

TensorRT-LLM is an easy-to-use Python API to define Large Language Models (LLMs) and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs. TensorRT-LLM contains components to create Python and C++ runtimes that execute those TensorRT engines. It also includes a backend for integration with the NVIDIA Triton Inference Server; a production-quality system to serve LLMs. Models built with TensorRT-LLM can be executed on a wide range of configurations going from a single GPU to multiple nodes with multiple GPUs (using Tensor Parallelism and/or Pipeline Parallelism).

ten-framework

TEN is an open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions. It includes various components like TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Users can follow the provided guidelines to set up and customize their agents using TMAN Designer, run them locally or in Codespace, and deploy them with Docker or other cloud services. The ecosystem also offers community channels for developers to connect, contribute, and get support.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

LLaVA-pp

This repository, LLaVA++, extends the visual capabilities of the LLaVA 1.5 model by incorporating the latest LLMs, Phi-3 Mini Instruct 3.8B, and LLaMA-3 Instruct 8B. It provides various models for instruction-following LMMS and academic-task-oriented datasets, along with training scripts for Phi-3-V and LLaMA-3-V. The repository also includes installation instructions and acknowledgments to related open-source contributions.

gpt-rss

GPT RSS is a tool that allows users to stay up-to-date on the latest AIGC/GPT/LLM articles by定时抓取前沿 AIGC / GPT / LLM 文章. It features a user-friendly interface that supports PC and mobile devices, as well as search and filter functions. GPT RSS is built using Vue3 and Vant UI component library, and utilizes Node.js for定时任务 to update articles daily.

Plug-play-modules

Plug-play-modules is a comprehensive collection of plug-and-play modules for AI, deep learning, and computer vision applications. It includes various convolution variants, latest attention mechanisms, feature fusion modules, up-sampling/down-sampling modules, suitable for tasks like image classification, object detection, instance segmentation, semantic segmentation, single object tracking (SOT), multi-object tracking (MOT), infrared object tracking (RGBT), image de-raining, de-fogging, de-blurring, super-resolution, and more. The modules are designed to enhance model performance and feature extraction capabilities across various tasks.

500

Simple Config GeoIP for Quantumult X is a pre-configured file package containing rules for unlocking Apple News, ad blocking, TikTok unlock Rewrite, machine routing rules, and VIP video resolution rewriting. It integrates multiple rewrite configurations using Quantumult X and GeoIP features to remove most rules to save resources, and provides corresponding shortcuts for rule and configuration simplification through Vercel redirection.

aidea

AIdea is an app that integrates mainstream large language models and drawing models, developed using Flutter. The code is completely open-source and supports various functions such as GPT-3.5, GPT-4 from OpenAI, Claude instant, Claude 2.1 from Anthropic, Gemini Pro and visual language models from Google, as well as various Chinese and open-source models. It also supports features like text-to-image, super-resolution, coloring black and white images, artistic fonts, artistic QR codes, and more.

Train-llm-from-scratch

Train-llm-from-scratch is a repository that guides users through training a Large Language Model (LLM) from scratch. The model size can be adjusted based on available computing power. The repository utilizes deepspeed for distributed training and includes detailed explanations of the code and key steps at each stage to facilitate learning. Users can train their own tokenizer or use pre-trained tokenizers like ChatGLM2-6B. The repository provides information on preparing pre-training data, processing training data, and recommended SFT data for fine-tuning. It also references other projects and books related to LLM training.

cool-admin-java

Cool-admin-java is an open-source backend permission management system with features like Ai coding, flow arrangement, modularity, and plugin support. It is used to quickly build backend applications. The system offers a modern development experience by providing functionalities such as one-click generation of API interfaces to frontend pages, drag-and-drop flow arrangement, modularized code for easy maintenance, and extensibility through plugin installation for features like payments, SMS, and emails.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

For similar tasks

comfyui_prompt_assistant

ComfyUI Prompt Assistant is a plugin that enables prompt word translation, expansion, preset tag insertion, image reverse prompt words, and history record functions without adding nodes. It offers features like UI optimization, avoiding scroll bar overlap, tag popup window scrollbar fix, and more. Users can manually install the latest version from the Releases section. The tool supports various functionalities like image reverse, Kontext presets, translation nodes, and custom rules. It also provides features for tag insertion, LLM expansion, translation switching between Baidu and LLM, and history management.

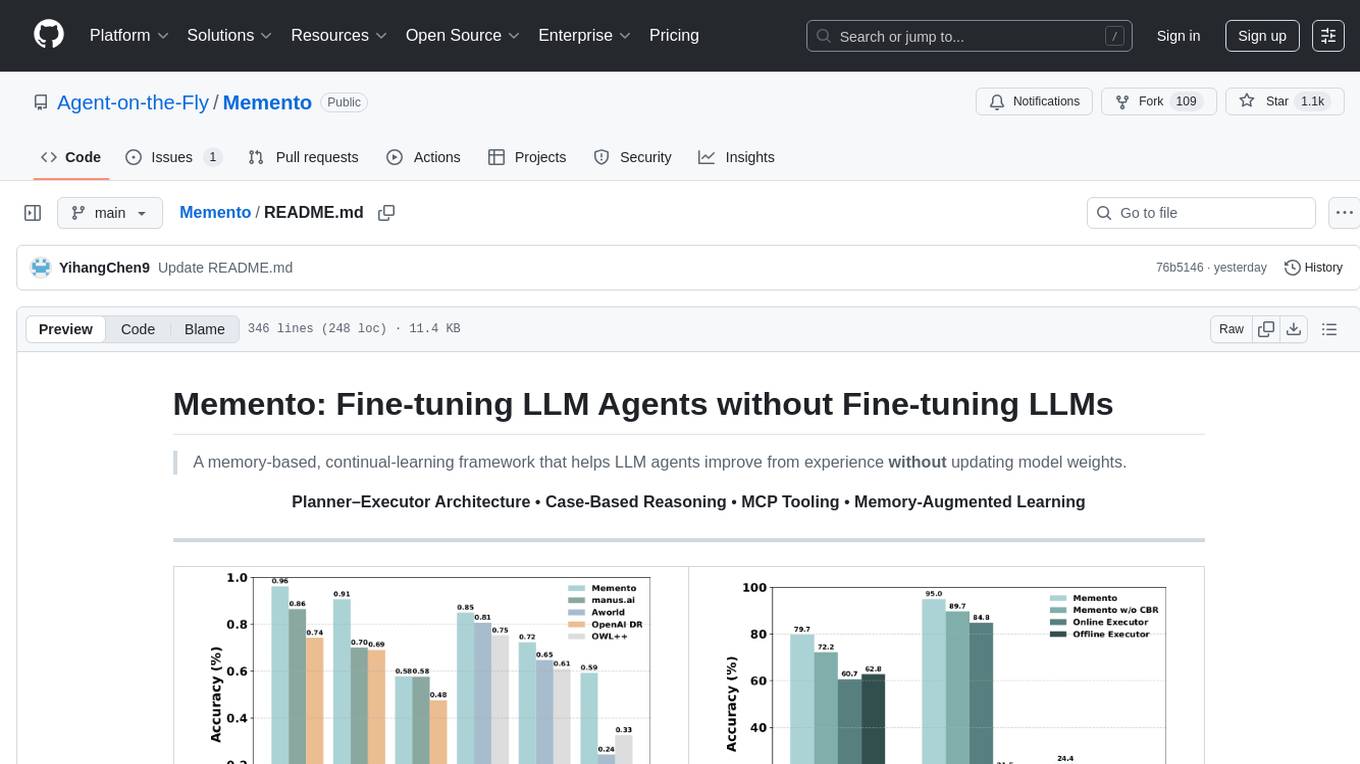

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.