cursor-agent-tracking

A repository that provides a structured system for maintaining context and tracking changes in Cursor's AGENT mode conversations through template files, enabling better continuity and organization of AI interactions.

Stars: 56

Cursor Agent History Tracking System is a simple tool to maintain context and track changes in conversations with Cursor when it's in AGENT mode. It ensures continuity even if the AI 'forgets' previous interactions. The system includes templates for starting chat sessions, tracking changes, and maintaining project status and goals. Users can modify the templates to suit their specific needs while following best practices for consistent formatting and documentation.

README:

A simple system to maintain context and track changes in conversations with Cursor when it's in AGENT mode, ensuring continuity even if the AI 'forgets' previous interactions. This workaround isn't necessary for regular chat mode, as Cursor implements native tracking.

-

Copy all

.mdfiles from thetemplatesdirectory into your project's root directory:CHANGELOG.mdPROJECT_SCOPE.mdPROMPT.md

-

Begin every new chat with the AI by copying the contents of

PROMPT.md- This ensures the AI has proper context about your project

- The AI will maintain consistent formatting for changes

- Track all significant changes in

CHANGELOG.md - Keep

PROJECT_SCOPE.mdupdated with current status

-

PROMPT.md: Your template for starting each new chat session -

CHANGELOG.md: Tracks all significant changes to your project -

PROJECT_SCOPE.md: Maintains current project status and goals

- Always start new chats with the

PROMPT.mdtemplate (this means every time you click the + symbol) - Update

CHANGELOG.mdafter significant changes - Keep

PROJECT_SCOPE.mdcurrent - Include relevant file paths in your prompts

- Save important code snippets and explanations

Feel free to modify the templates to better suit your specific needs while maintaining the core structure for consistency.

Refer to the individual template files for detailed formatting and usage instructions.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for cursor-agent-tracking

Similar Open Source Tools

cursor-agent-tracking

Cursor Agent History Tracking System is a simple tool to maintain context and track changes in conversations with Cursor when it's in AGENT mode. It ensures continuity even if the AI 'forgets' previous interactions. The system includes templates for starting chat sessions, tracking changes, and maintaining project status and goals. Users can modify the templates to suit their specific needs while following best practices for consistent formatting and documentation.

temporal-ai-agent

Temporal AI Agent is a demo showcasing a multi-turn conversation with an AI agent running inside a Temporal workflow. The agent collects information towards a goal using a simple DSL input. It is currently set up to search for events, book flights around those events, and create an invoice for those flights. The AI agent responds with clarifications and prompts for missing information. Users can configure the agent to use ChatGPT 4o or a local LLM via Ollama. The tool requires Rapidapi key for sky-scrapper to find flights and a Stripe key for creating invoices. Users can customize the agent by modifying tool and goal definitions in the codebase.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

jaison-core

J.A.I.son is a Python project designed for generating responses using various components and applications. It requires specific plugins like STT, T2T, TTSG, and TTSC to function properly. Users can customize responses, voice, and configurations. The project provides a Discord bot, Twitch events and chat integration, and VTube Studio Animation Hotkeyer. It also offers features for managing conversation history, training AI models, and monitoring conversations.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

git-mcp

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

serena

Serena is a powerful coding agent that integrates with existing LLMs to provide essential semantic code retrieval and editing tools. It is free to use and does not require API keys or subscriptions. Serena can be used for coding tasks such as analyzing, planning, and editing code directly on your codebase. It supports various programming languages and offers semantic code analysis capabilities through language servers. Serena can be integrated with different LLMs using the model context protocol (MCP) or Agno framework. The tool provides a range of functionalities for code retrieval, editing, and execution, making it a versatile coding assistant for developers.

second-brain-agent

The Second Brain AI Agent Project is a tool designed to empower personal knowledge management by automatically indexing markdown files and links, providing a smart search engine powered by OpenAI, integrating seamlessly with different note-taking methods, and enhancing productivity by accessing information efficiently. The system is built on LangChain framework and ChromaDB vector store, utilizing a pipeline to process markdown files and extract text and links for indexing. It employs a Retrieval-augmented generation (RAG) process to provide context for asking questions to the large language model. The tool is beneficial for professionals, students, researchers, and creatives looking to streamline workflows, improve study sessions, delve deep into research, and organize thoughts and ideas effortlessly.

HackBot

HackBot is an AI-powered cybersecurity chatbot designed to provide accurate answers to cybersecurity-related queries, conduct code analysis, and scan analysis. It utilizes the Meta-LLama2 AI model through the 'LlamaCpp' library to respond coherently. The chatbot offers features like local AI/Runpod deployment support, cybersecurity chat assistance, interactive interface, clear output presentation, static code analysis, and vulnerability analysis. Users can interact with HackBot through a command-line interface and utilize it for various cybersecurity tasks.

GhostOS

GhostOS is an AI Agent framework designed to replace JSON Schema with a Turing-complete code interaction interface (Moss Protocol). It aims to create intelligent entities capable of continuous learning and growth through code generation and project management. The framework supports various capabilities such as turning Python files into web agents, real-time voice conversation, body movements control, and emotion expression. GhostOS is still in early experimental development and focuses on out-of-the-box capabilities for AI agents.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

For similar tasks

cursor-agent-tracking

Cursor Agent History Tracking System is a simple tool to maintain context and track changes in conversations with Cursor when it's in AGENT mode. It ensures continuity even if the AI 'forgets' previous interactions. The system includes templates for starting chat sessions, tracking changes, and maintaining project status and goals. Users can modify the templates to suit their specific needs while following best practices for consistent formatting and documentation.

obsidian-pieces

Pieces for Developers is a closed-source Obsidian plugin designed to revolutionize coding workflows by incorporating key capabilities and favorite features directly into the Obsidian environment. The plugin, Pieces Copilot for Obsidian, enhances coding and problem-solving experiences by providing insights on code snippets, generating samples, and facilitating navigation through PRs. Users can capture, manage, share, and discover code snippets and developer materials with ease, bringing efficiency and organization to their coding experience.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

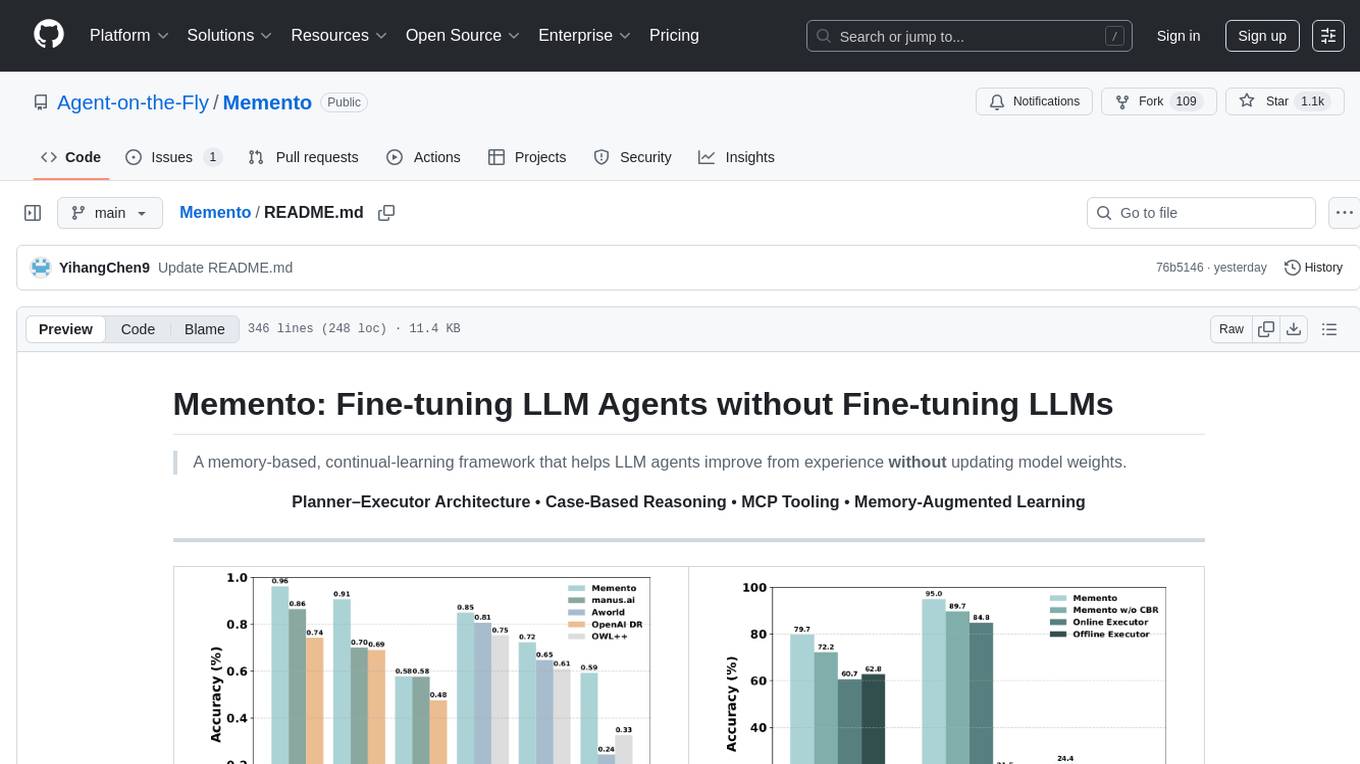

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.