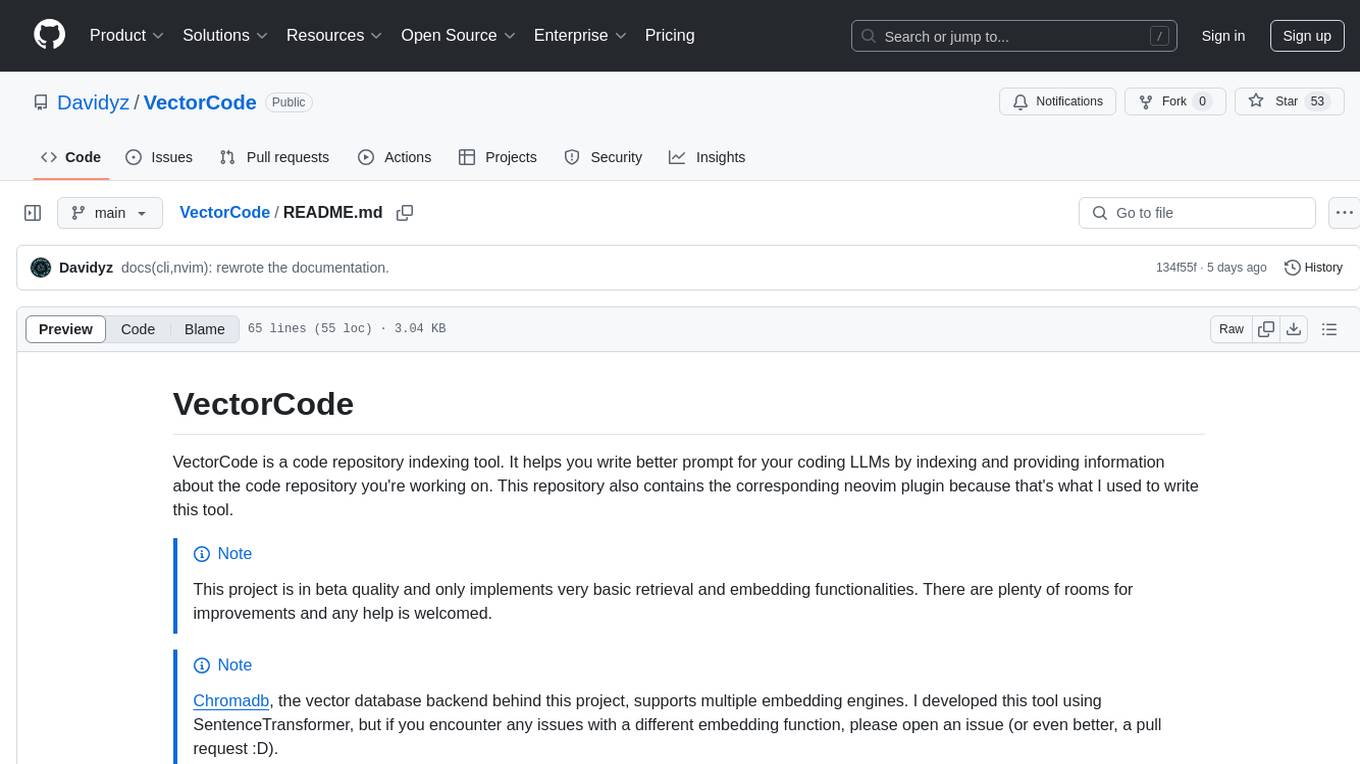

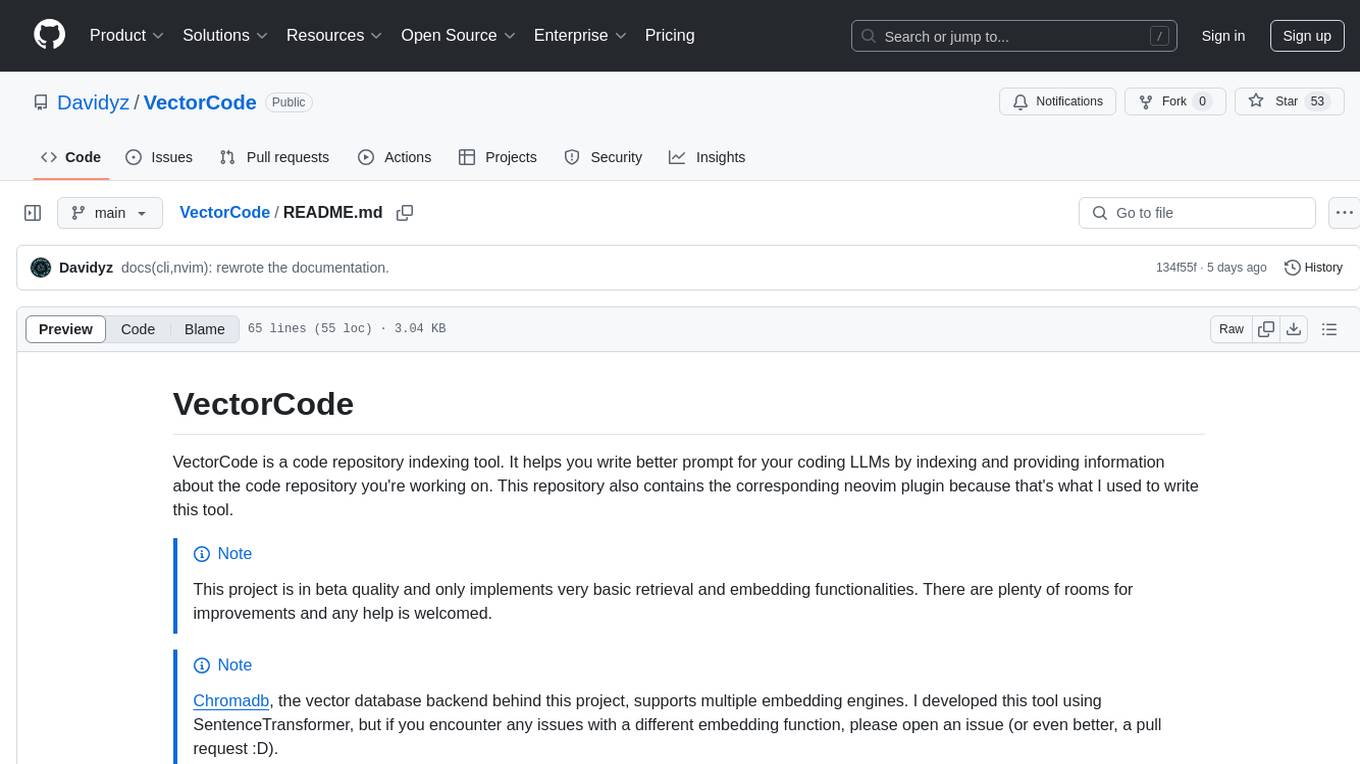

VectorCode

A code repository indexing tool to supercharge your LLM experience.

Stars: 665

VectorCode is a code repository indexing tool that helps users write better prompts for coding LLMs by providing information about the code repository being worked on. It includes a neovim plugin and supports multiple embedding engines. The tool enhances completion results by providing project context and improves understanding of closed-source or cutting-edge projects.

README:

VectorCode is a code repository indexing tool. It helps you build better prompts for your coding LLMs by indexing and providing information about the code repository you're working on. This repository also contains the corresponding neovim plugin, which provides a set of APIs for you to build or enhance AI plugins, as well as integrations for some popular plugins.

[!NOTE] This project is of beta quality and is undergoing rapid iteration. I know there is plenty of room for improvement, and any help is welcome.

LLMs usually have a very limited understanding of closed-source projects, projects that are not well known, and cutting-edge developments that have not made it into releases, so their capabilities on such projects are quite limited. With VectorCode, you can easily (and programmatically) inject task-relevant context from the project into the prompt. This significantly improves the quality of the model output and reduces hallucination.
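As a rough illustration of what injecting context programmatically can look like, the sketch below assembles a prompt from already-retrieved chunks. The `build_prompt` helper, the `max_chars` budget, and the `path`/`document` keys are hypothetical names chosen for this example; they are not VectorCode's API, and the CLI documentation remains the reference for the actual query output.

```python
from typing import Dict, List


def build_prompt(task: str, chunks: List[Dict[str, str]], max_chars: int = 4000) -> str:
    """Prepend retrieved repository chunks to a task description.

    `chunks` is assumed to be a list of {"path": ..., "document": ...} dicts
    (a hypothetical shape), ordered from most to least relevant.
    """
    sections = []
    used = 0
    for chunk in chunks:
        section = f"File: {chunk['path']}\n{chunk['document']}"
        # Stop before the context exceeds the character budget.
        if used + len(section) > max_chars:
            break
        sections.append(section)
        used += len(section)
    context = "\n\n".join(sections)
    return f"Relevant project context:\n{context}\n\nTask:\n{task}"


if __name__ == "__main__":
    demo_chunks = [
        {"path": "src/app.py", "document": "def main():\n    run_server(port=8080)"},
        {"path": "src/server.py", "document": "def run_server(port):\n    ..."},
    ]
    print(build_prompt("Explain how the server is started.", demo_chunks))
```

Capping the context by size keeps the most relevant chunks and leaves room in the model's context window for the task itself.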

[!NOTE] The documentation on the `main` branch reflects the code on the latest commit. To find the documentation for the version you're using, check out the corresponding tag.

- For the setup and usage of the command-line tool, see the CLI documentation;

- For neovim users, after you've gone through the CLI documentation, please refer to the neovim plugin documentation (and optionally the lua API reference) for further instructions.

- Additional resources:

  - the wiki for extra tips and tricks that will help you get the most out of VectorCode;

  - the discussions, where you can ask general questions and share how you use VectorCode.

- If you're feeling adventurous, feel free to check out the pull requests for WIP features.

If you'd like to contribute to this project, take a look at the contribution guide, which covers some basic guidelines that you should follow and tips that you may find helpful.

This project follows an adapted semantic versioning:

- Until 1.0.0 is released, the major version number stays at 0, which indicates that this project is still in an early stage and that features and interfaces may change from time to time;

- The minor version number indicates breaking changes. When I decide to remove a feature or config option, the actual removal will happen when I bump the minor version number. Therefore, if you want to avoid breaking a working setup, you may choose to use a version constraint like `"vectorcode<0.7.0"`;

- The patch version number indicates non-breaking changes. This can include new features and bug fixes. When I decide to deprecate things, I will make a new release with a bumped patch version. Until the minor version number is bumped, the deprecated feature will still work, but you'll see a warning. It's recommended to update your setup to adapt to the new features.

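The versioning rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `parse_version`, `is_breaking_upgrade`, and `satisfies_upper_bound` are hypothetical helpers written for this example, and they handle plain `major.minor.patch` strings only (no pre-release or build suffixes).

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse a plain 'major.minor.patch' string into a tuple of ints."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def is_breaking_upgrade(current: str, candidate: str) -> bool:
    """Return True if the upgrade may remove features under the scheme above.

    The minor number signals breaking changes, so any increase in
    (major, minor) is treated as potentially breaking; patch bumps are not.
    """
    return parse_version(candidate)[:2] > parse_version(current)[:2]


def satisfies_upper_bound(version: str, upper_bound: str) -> bool:
    """Check a constraint of the form 'vectorcode<upper_bound'."""
    return parse_version(version) < parse_version(upper_bound)


if __name__ == "__main__":
    print(is_breaking_upgrade("0.6.3", "0.6.9"))     # False: patch bump only
    print(is_breaking_upgrade("0.6.9", "0.7.0"))     # True: minor bump
    print(satisfies_upper_bound("0.6.12", "0.7.0"))  # True: still below 0.7.0
```

Comparing integer tuples rather than strings matters here: as strings, `"0.6.12"` would sort before `"0.6.9"`. For real dependency management, let your package manager evaluate the constraint instead of hand-rolling the comparison.
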
- [x] query by ~~file path~~ excluded paths;

- [x] chunking support;

- [x] add metadata for files;

- [x] chunk-size configuration;

- [x] smarter chunking (semantics/syntax based), implemented with py-tree-sitter and tree-sitter-language-pack;

- [x] configurable document selection from query results.

- [x] ~~NeoVim Lua API with cache to skip the retrieval when a project has not been indexed~~ Returns empty array instead;

- [x] job pool for async caching;

- [x] persistent-client;

- [ ] proper remote Chromadb support (with authentication, etc.);

- [x] respect `.gitignore`;

- [x] implement some sort of project-root anchors (such as `.git` or a custom `.vectorcode.json`) that enhance automatic project-root detection. Implemented project-level `.vectorcode/` and `.git` as root anchors;

- [x] ability to view and delete files in a collection;

- [x] joint search (kinda, using codecompanion.nvim/MCP);

- [x] Nix support (unofficial packages here);

- [ ] Query rewriting (#124).
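To make the project-root anchor item above more concrete, here is a minimal sketch of anchor-based root detection. It is not VectorCode's actual implementation: `find_project_root` is a hypothetical helper, and giving `.vectorcode` precedence over `.git` is an assumption made for this example.

```python
from pathlib import Path
from typing import Optional, Sequence


def find_project_root(
    start: Path, anchors: Sequence[str] = (".vectorcode", ".git")
) -> Optional[Path]:
    """Return the nearest ancestor of `start` (inclusive) that contains an anchor.

    Anchors are tried in order, so an earlier anchor found anywhere up the
    tree wins over a later one (this precedence is an assumption).
    """
    start = start.resolve()
    candidates = [start, *start.parents]
    for anchor in anchors:
        for directory in candidates:
            if (directory / anchor).exists():
                return directory
    return None


if __name__ == "__main__":
    # Prints the detected root for the current directory, or None.
    print(find_project_root(Path.cwd()))
```

Walking upwards from the working directory means a command run from any subdirectory resolves to the same project, which is what makes the anchors useful for automatic detection.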

- @milanglacier (and minuet-ai.nvim) for the support when this project was still in its early stages;

- @olimorris for the help (personally and from codecompanion.nvim) when this project made initial attempts at tool-calling;

- @ravitemer for the help with interfacing VectorCode with MCP;

- The nix community (especially @sarahec and @GaetanLepage) for maintaining the nix packages.

Similar Open Source Tools

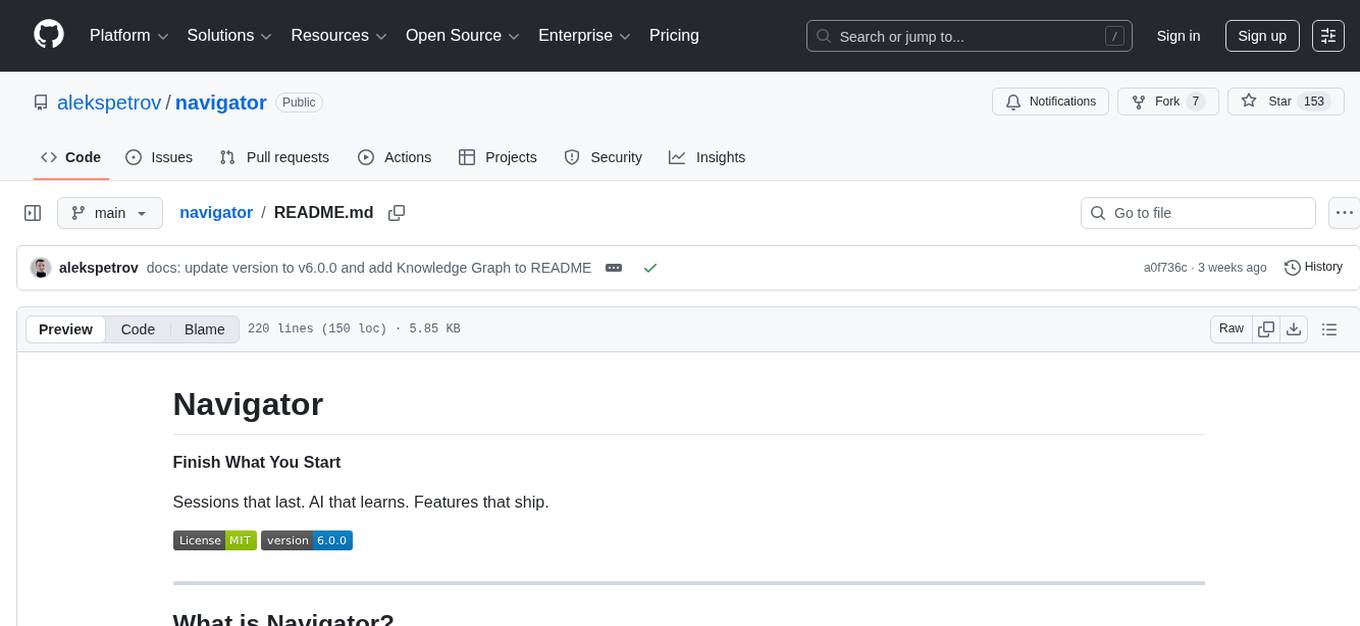

navigator

Navigator is a versatile tool for navigating through complex codebases efficiently. It provides a user-friendly interface to explore code files, search for specific functions or variables, and visualize code dependencies. With Navigator, developers can easily understand the structure of a project and quickly locate relevant code snippets. The tool supports various programming languages and offers customizable settings to enhance the coding experience. Whether you are working on a small project or a large codebase, Navigator can help you streamline your development process and improve code comprehension.

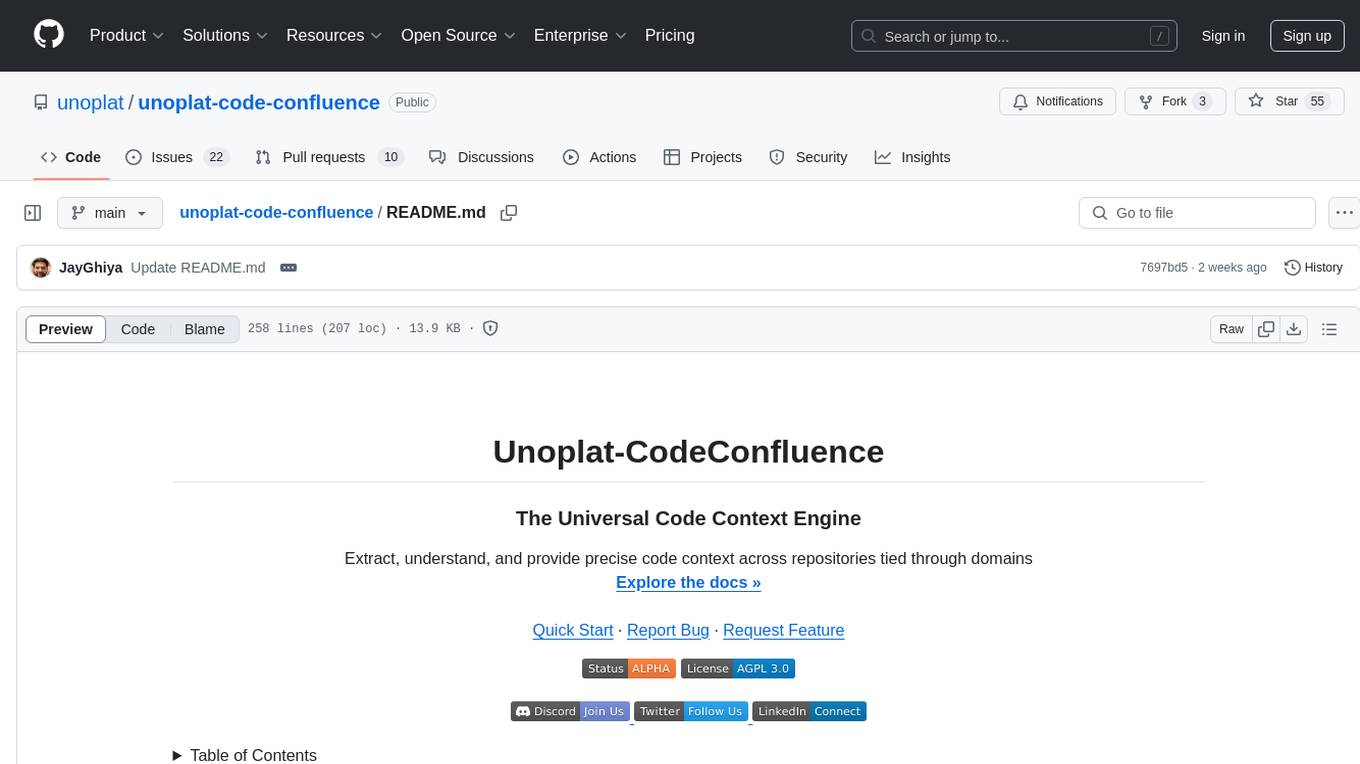

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

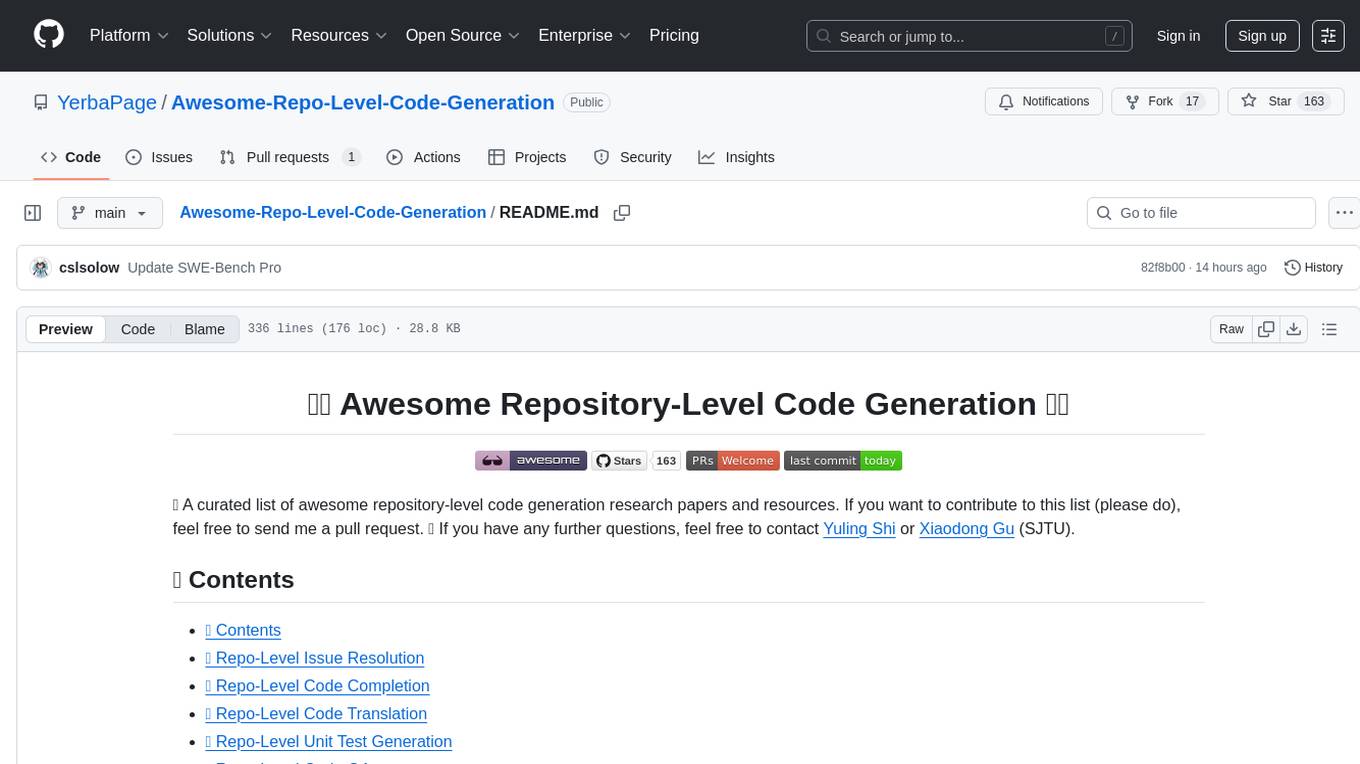

Awesome-Repo-Level-Code-Generation

This repository contains a collection of tools and scripts for generating code at the repository level. It provides a set of utilities to automate the process of creating and managing code across multiple files and directories. The tools included in this repository aim to improve code generation efficiency and maintainability by streamlining the development workflow. With a focus on enhancing productivity and reducing manual effort, this collection offers a variety of code generation options and customization features to suit different project requirements.

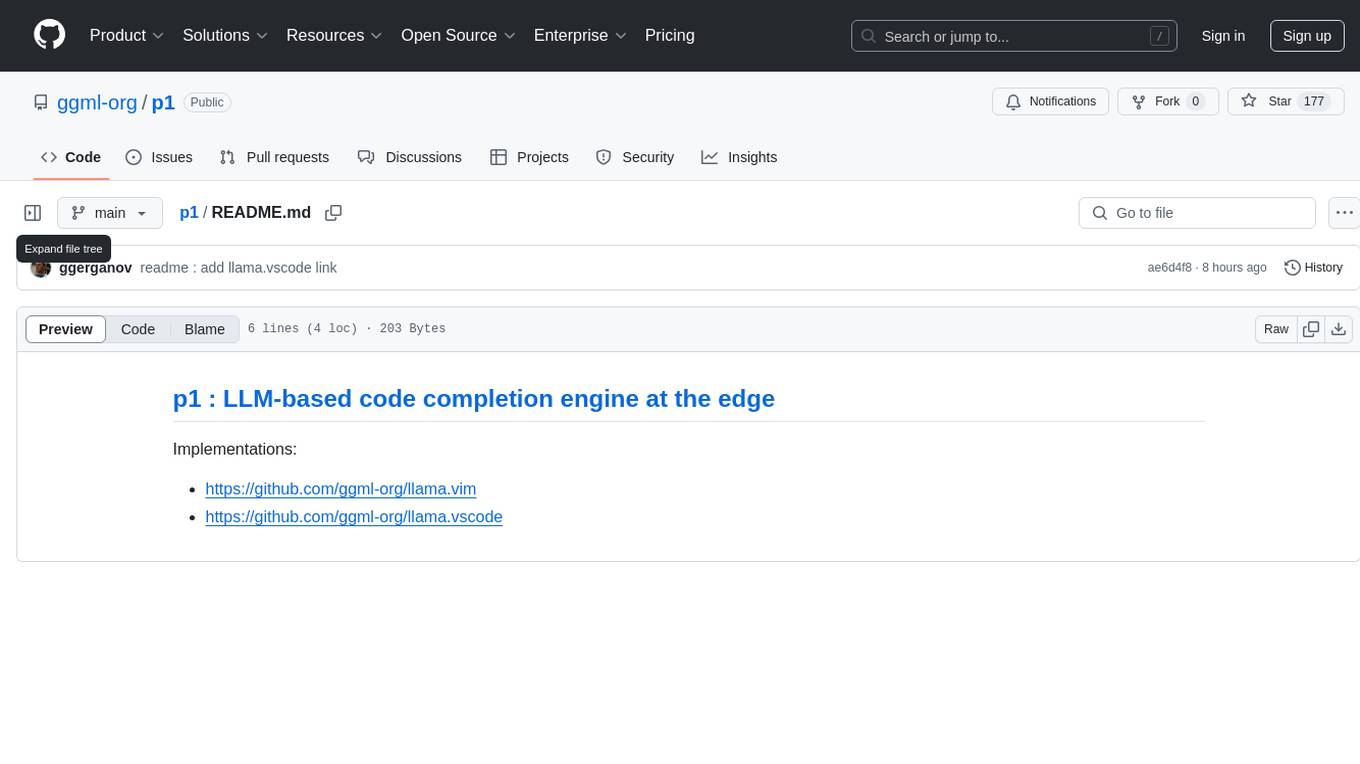

p1

p1 is a code completion engine based on Large Language Models (LLM) that operates at the edge. It provides intelligent code suggestions and completions to enhance the coding experience. The tool is designed to assist developers in writing code more efficiently by predicting and offering context-aware completions based on the code being written. With implementations available for popular code editors like Vim and Visual Studio Code, p1 aims to improve productivity and streamline the coding process for software developers.

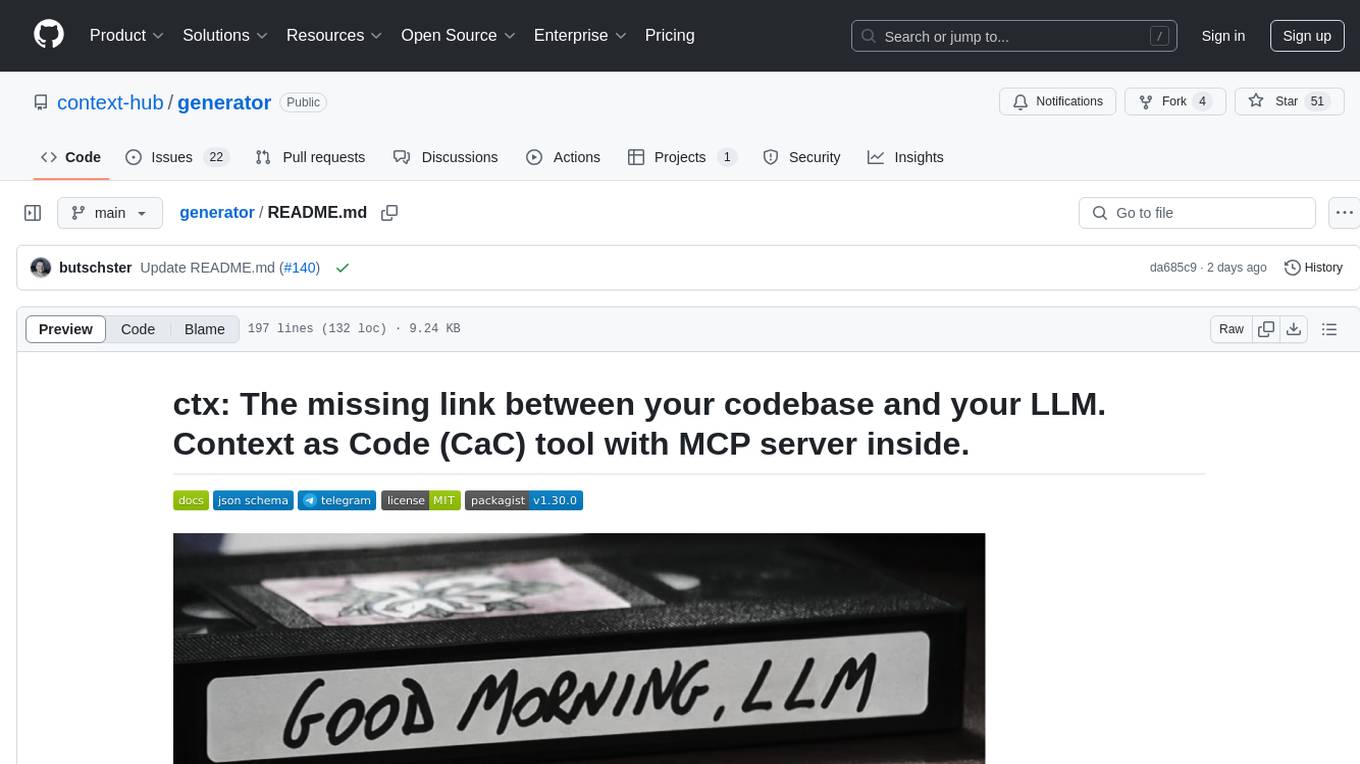

generator

ctx is a tool designed to automatically generate organized context files from code files, GitHub repositories, Git commits, web pages, and plain text. It aims to efficiently provide necessary context to AI language models like ChatGPT and Claude, enabling users to streamline code refactoring, multiple iteration development, documentation generation, and seamless AI integration. With ctx, users can create structured markdown documents, save context files, and serve context through an MCP server for real-time assistance. The tool simplifies the process of sharing project information with AI assistants, making AI conversations smarter and easier.

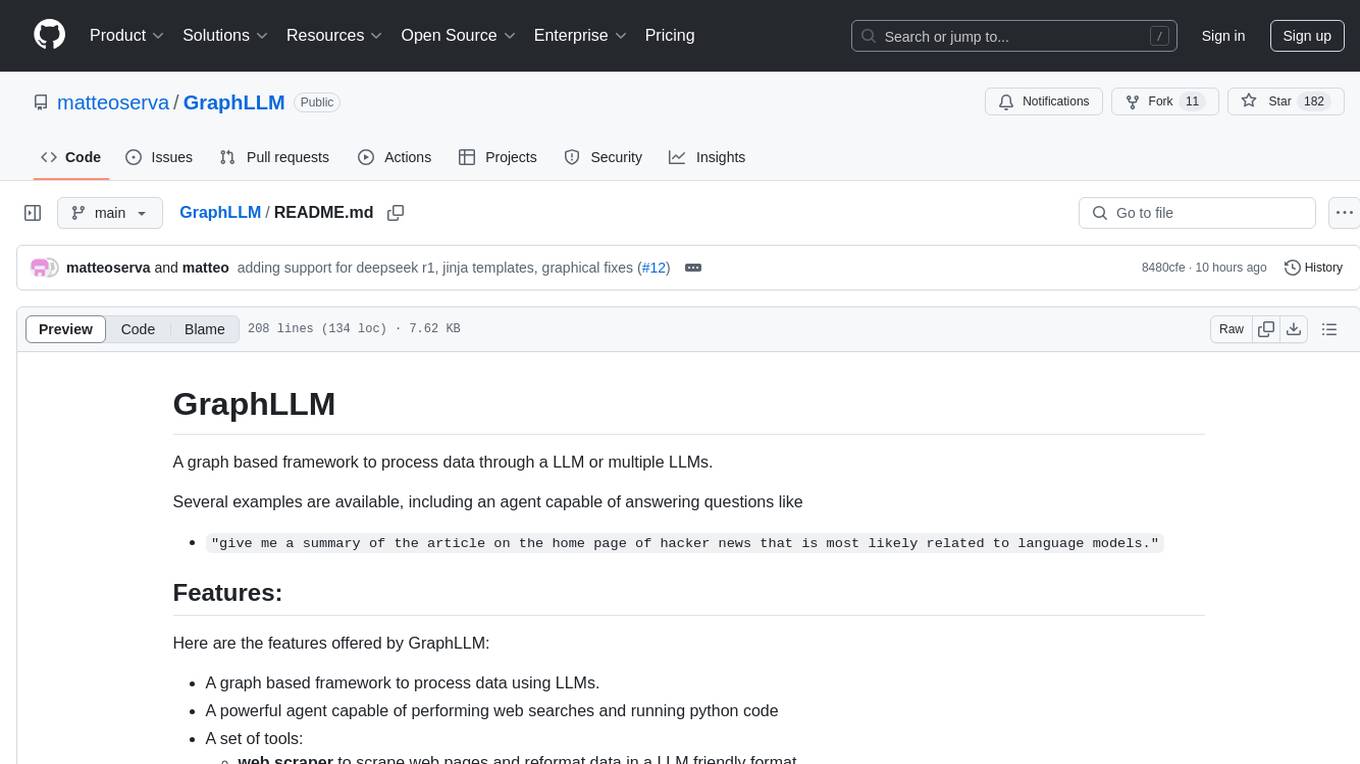

GraphLLM

GraphLLM is a graph-based framework designed to process data using LLMs. It offers a set of tools including a web scraper, PDF parser, YouTube subtitles downloader, Python sandbox, and TTS engine. The framework provides a GUI for building and debugging graphs with advanced features like loops, conditionals, parallel execution, streaming of results, hierarchical graphs, external tool integration, and dynamic scheduling. GraphLLM is a low-level framework that gives users full control over the raw prompt and output of models, at the cost of a steeper learning curve. It is tested with llama70b and qwen 32b and is under heavy development, with breaking changes expected.

oramacore

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced search capabilities in AI applications.

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with support for websites, Notion, and Airtable upcoming. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant) and OpenAI's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

opensrc

Opensrc is a versatile open-source tool designed for collaborative software development. It provides a platform for developers to work together on projects, share code, and manage contributions effectively. With features like version control, issue tracking, and code review, Opensrc streamlines the development process and fosters a collaborative environment. Whether you are working on a small project with a few contributors or a large-scale open-source initiative, Opensrc offers the tools you need to organize and coordinate your development efforts.

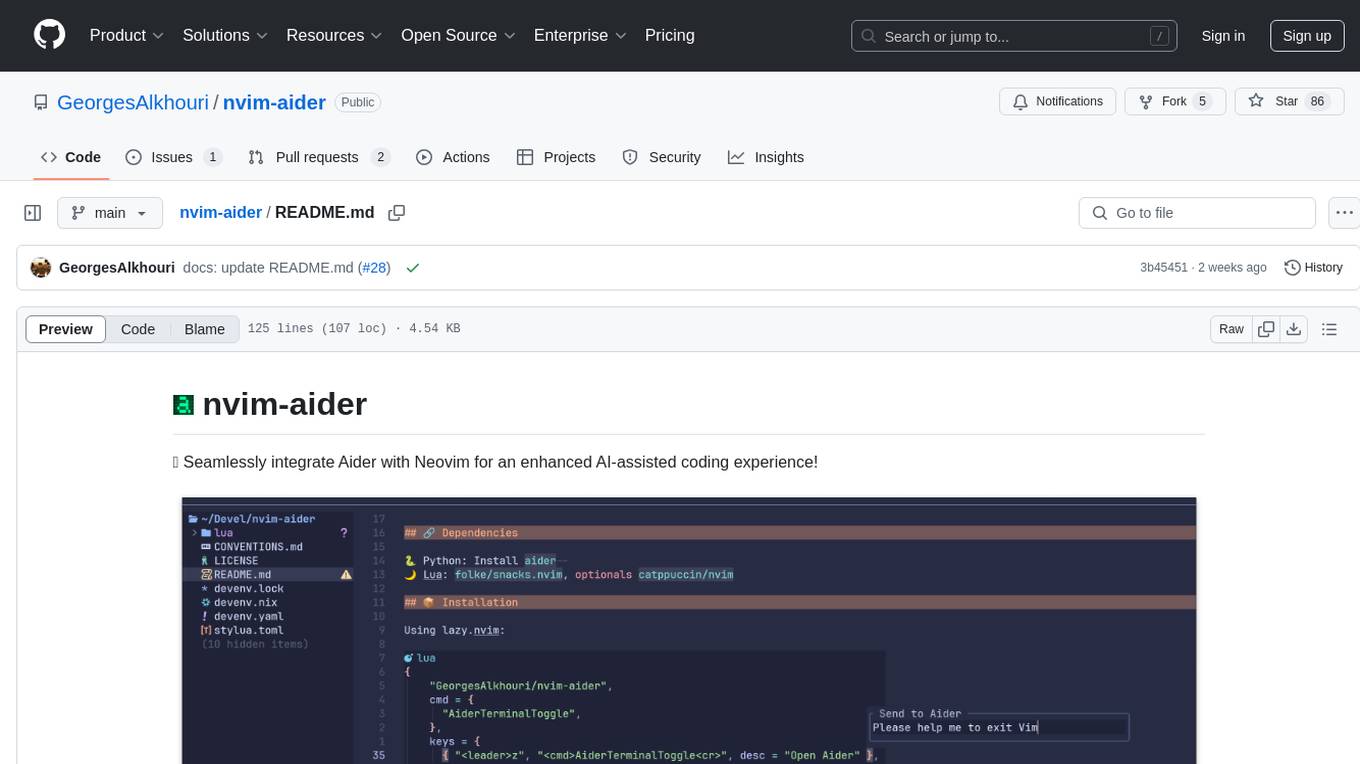

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

HyperAgent

HyperAgent is a powerful tool for automating repetitive tasks in web scraping and data extraction. It provides a user-friendly interface to create custom web scraping scripts without the need for extensive coding knowledge. With HyperAgent, users can easily extract data from websites, transform it into structured formats, and save it for further analysis. The tool supports various data formats and offers scheduling options for automated data extraction at regular intervals. HyperAgent is suitable for individuals and businesses looking to streamline their data collection processes and improve efficiency in extracting information from the web.

onlook

Onlook is a web scraping tool that allows users to extract data from websites easily and efficiently. It provides a user-friendly interface for creating web scraping scripts and supports various data formats for exporting the extracted data. With Onlook, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and customizable, making it suitable for a wide range of web scraping tasks.

semantic-kernel-docs

The Microsoft Semantic Kernel Documentation GitHub repository contains technical product documentation for Semantic Kernel. It serves as the home of technical content for Microsoft products and services. Contributors can learn how to make contributions by following the Docs contributor guide. The project follows the Microsoft Open Source Code of Conduct.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline are doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.