RealtimeSTT_LLM_TTS

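Real-time STT connected to an OpenAI-compatible API or Zhipu AI (streaming LLM) and to GPT-SOVITS/Edge-TTS. The services are called across the network through a web page to achieve real-time conversation.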

Stars: 276

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

README:

Windows all-in-one package: https://pan.baidu.com/s/1EvqEBIUXBEYqYY0rWMsgAA?pwd=2m8y

Additional steps are needed for a GPU-optimized installation. These steps are recommended for those who require better performance and have a compatible NVIDIA GPU.

Note: To check if your NVIDIA GPU supports CUDA, visit the official CUDA GPUs list.

To use RealtimeSTT with GPU support via CUDA please follow these steps:

- Install NVIDIA CUDA Toolkit 11.8:

  - Visit NVIDIA CUDA Toolkit Archive.

  - Select operating system and version.

  - Download and install the software.

- Install NVIDIA cuDNN 8.7.0 for CUDA 11.x:

  - Visit NVIDIA cuDNN Archive.

  - Click on "Download cuDNN v8.7.0 (November 28th, 2022), for CUDA 11.x".

  - Download and install the software.

- Install ffmpeg:

  You can download an installer for your OS from the ffmpeg Website.

  Or use a package manager:

  - On Ubuntu or Debian:

    sudo apt update && sudo apt install ffmpeg

  - On Arch Linux:

    sudo pacman -S ffmpeg

  - On MacOS using Homebrew (https://brew.sh/):

    brew install ffmpeg

  - On Windows using Chocolatey (https://chocolatey.org/):

    choco install ffmpeg

  - On Windows using Scoop (https://scoop.sh/):

    scoop install ffmpeg

- Install PyTorch with CUDA support:

  pip uninstall torch

  pip install torch==2.0.1+cu118 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt
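After installing the dependencies, it can help to confirm which PyTorch build is actually active. The following is a minimal sketch, not part of this repository: the function name describe_torch_backend is hypothetical, and it only relies on torch.cuda.is_available(), torch.__version__ and torch.version.cuda.

```python
import importlib.util


def describe_torch_backend() -> str:
    """Report whether PyTorch is installed and whether it can see a CUDA device."""
    # Avoid an ImportError when torch is missing from the environment.
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed"

    import torch

    if torch.cuda.is_available():
        return f"CUDA available (torch {torch.__version__}, CUDA {torch.version.cuda})"
    return f"CPU only (torch {torch.__version__})"


if __name__ == "__main__":
    print(describe_torch_backend())
```

If this reports "CPU only" after the GPU steps above, the CPU wheel of torch is probably still installed.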

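If webrtcvad fails to install on Windows with an error related to VS C++, download https://visualstudio.microsoft.com/zh-hans/visual-cpp-build-tools/, install the C++ development tools, and then reinstall the dependencies.

Reference for dependency versions: requirements_common.txt

Run python webui.py. After it starts, configure it for your use case, then run it.

Start the backend: python RealtimeSTT_server2.py

Double-click index.html to open it in a browser and enter the server IP address. Note: either disable the server's firewall or specifically allow ports 9001 and 9002, which are used for the WebSocket connections!

Once the backend models have finished loading, you can start talking.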
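If the page cannot connect, a quick check is whether ports 9001 and 9002 are reachable from the client machine. The sketch below is an illustration using only the Python standard library; the helper names port_is_open and check_websocket_ports are hypothetical, and "127.0.0.1" stands in for your server address. It only tests that a TCP connection can be opened, not that the WebSocket handshake succeeds.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_websocket_ports(host: str, ports=(9001, 9002)) -> dict:
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_is_open(host, port) for port in ports}


if __name__ == "__main__":
    # Placeholder address: use the IP you enter in index.html.
    for port, ok in check_websocket_ports("127.0.0.1").items():
        print(f"port {port}: {'reachable' if ok else 'blocked or not listening'}")
```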

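- 2024-07-01

  - Fixed a webui bug where the chat type could not be saved

  - Added custom configuration for the OpenAI model in the webui: you can delete the value, type a custom one, and press Enter to save the configuration

- 2024-06-03

  - Added wake word configuration. The wake word feature is not enabled; in testing, recording can be triggered by a wake word. Wake it once, then speak.

- 2024-06-02

  - Added OpenAI API integration; tested with ollama without problems

  - Added Edge-TTS integration (convenient for testing)

- 2024-05-28

  - Added a webui to make configuration easier (it is not complete, but usable)

  - Added compatibility with the new gpt-sovits API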

Easy-to-use, low-latency speech-to-text library for realtime applications

RealtimeSTT listens to the microphone and transcribes voice into text.

It's ideal for:

- Voice Assistants

- Applications requiring fast and precise speech-to-text conversion

https://github.com/KoljaB/RealtimeSTT/assets/7604638/207cb9a2-4482-48e7-9d2b-0722c3ee6d14

- switched to torch.multiprocessing

- added compute_type, input_device_index and gpu_device_index parameters

- recorder.text() interruptable with recorder.abort()

- fix for #20

- added example how to realtime transcribe from browser microphone

- large-v3 whisper model now supported (upgrade to faster_whisper 0.10.0)

- added feed_audio() and use_microphone parameter to feed chunks

- Bugfix for Mac OS Installation (multiprocessing / queue.size())

- KeyboardInterrupt handling (now abortable with CTRL+C)

- Bugfix for spinner handling (could lead to exception in some cases)

- Implements context manager protocol (recorder can be used in a with statement)

- Bugfix for resource management in shutdown method

- Bugfix for detection of short speech right after sentence detection (the problem mentioned in the video)

- Main transcription and recording moved into separate process contexts with multiprocessing

Hint: Since we use the multiprocessing module now, ensure to include the if __name__ == '__main__': protection in your code to prevent unexpected behavior, especially on platforms like Windows. For a detailed explanation on why this is important, visit the official Python documentation on multiprocessing.
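The guard mentioned in the hint can be illustrated without the library itself. This is a minimal sketch using only the standard multiprocessing module; square and run_workers are hypothetical names chosen for the example. On platforms that start child processes by re-importing the main module (such as Windows), code outside the guard would run again in every child.

```python
import multiprocessing as mp


def square(n: int) -> int:
    """Work function; must live at module level so child processes can import it."""
    return n * n


def run_workers(values):
    """Run square() over the values in a small process pool."""
    with mp.Pool(processes=2) as pool:
        return pool.map(square, values)


if __name__ == "__main__":
    # Only the parent process executes this block; children that re-import
    # the module skip it, so no extra pools are created recursively.
    print(run_workers([1, 2, 3]))  # prints [1, 4, 9]
```

The same pattern applies to scripts that create an AudioToTextRecorder: create and use the recorder inside the guarded block.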

- Voice Activity Detection: Automatically detects when you start and stop speaking.

- Realtime Transcription: Transforms speech to text in real-time.

- Wake Word Activation: Can activate upon detecting a designated wake word.

Hint: Check out RealtimeTTS, the output counterpart of this library, for text-to-voice capabilities. Together, they form a powerful realtime audio wrapper around large language models.

This library uses:

- Voice Activity Detection

- Speech-To-Text

  - Faster_Whisper for instant (GPU-accelerated) transcription.

- Wake Word Detection

  - Porcupine for wake word detection.

These components represent the "industry standard" for cutting-edge applications, providing the most modern and effective foundation for building high-end solutions.

pip install RealtimeSTT

This will install all the necessary dependencies, including a CPU-only version of PyTorch.

Although it is possible to run RealtimeSTT with a CPU-only installation (use a small model like "tiny" or "base" in this case), you will get a much better experience with a GPU.


Basic usage:

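Start and stop of recording are manually triggered.

    from RealtimeSTT import AudioToTextRecorder

    recorder = AudioToTextRecorder()
    recorder.start()  # begin recording
    input("Press Enter to stop recording...")
    recorder.stop()  # end recording
    print(recorder.text())  # transcribe what was recorded

Recording based on voice activity detection.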

    from RealtimeSTT import AudioToTextRecorder

    with AudioToTextRecorder() as recorder:
        print(recorder.text())

When running recorder.text in a loop, it is recommended to use a callback, allowing the transcription to run asynchronously:

    from RealtimeSTT import AudioToTextRecorder

    def process_text(text):
        print(text)

    recorder = AudioToTextRecorder()
    while True:
        recorder.text(process_text)

Keyword activation before detecting voice. Write the comma-separated list of your desired activation keywords into the wake_words parameter. You can choose wake words from this list: alexa, americano, blueberry, bumblebee, computer, grapefruits, grasshopper, hey google, hey siri, jarvis, ok google, picovoice, porcupine, terminator.

    from RealtimeSTT import AudioToTextRecorder

    recorder = AudioToTextRecorder(wake_words="jarvis")
    print('Say "Jarvis" then speak.')
    print(recorder.text())

You can set callback functions to be executed on different events (see Configuration):

    from RealtimeSTT import AudioToTextRecorder

    def my_start_callback():
        print("Recording started!")

    def my_stop_callback():
        print("Recording stopped!")

    recorder = AudioToTextRecorder(on_recording_start=my_start_callback,
                                   on_recording_stop=my_stop_callback)

If you don't want to use the local microphone, set the use_microphone parameter to False and provide raw PCM audio chunks in 16-bit mono (sample rate 16000) with this method:

    recorder.feed_audio(audio_chunk)

You can shut down the recorder safely by using the context manager protocol:

    with AudioToTextRecorder() as recorder:
        [...]

Or you can call the shutdown method manually (if using "with" is not feasible):

    recorder.shutdown()

The test subdirectory contains a set of scripts to help you evaluate and understand the capabilities of the RealtimeSTT library.

Test scripts depending on the RealtimeTTS library may require you to enter your Azure service region within the script. When using OpenAI-, Azure- or Elevenlabs-related demo scripts, the API keys should be provided in the environment variables OPENAI_API_KEY, AZURE_SPEECH_KEY and ELEVENLABS_API_KEY (see RealtimeTTS).

- simple_test.py

  - Description: A "hello world" styled demonstration of the library's simplest usage.

- realtimestt_test.py

  - Description: Showcasing live-transcription.

- wakeword_test.py

  - Description: A demonstration of the wakeword activation.

- translator.py

  - Dependencies: Run pip install openai realtimetts.

  - Description: Real-time translations into six different languages.

- openai_voice_interface.py

  - Dependencies: Run pip install openai realtimetts.

  - Description: Wake word activated and voice based user interface to the OpenAI API.

- advanced_talk.py

  - Dependencies: Run pip install openai keyboard realtimetts.

  - Description: Choose TTS engine and voice before starting AI conversation.

- minimalistic_talkbot.py

  - Dependencies: Run pip install openai realtimetts.

  - Description: A basic talkbot in 20 lines of code.

The example_app subdirectory contains a polished user interface application for the OpenAI API based on PyQt5.

When you initialize the AudioToTextRecorder class, you have various options to customize its behavior.

- model (str, default="tiny"): Model size or path for transcription.

  - Options: 'tiny', 'tiny.en', 'base', 'base.en', 'small', 'small.en', 'medium', 'medium.en', 'large-v1', 'large-v2'.

  - Note: If a size is provided, the model will be downloaded from the Hugging Face Hub.

- language (str, default=""): Language code for transcription. If left empty, the model will try to auto-detect the language. Supported language codes are listed in the Whisper Tokenizer library.

- compute_type (str, default="default"): Specifies the type of computation to be used for transcription. See Whisper Quantization.

- input_device_index (int, default=0): Audio input device index to use.

- gpu_device_index (int, default=0): GPU device index to use. The model can also be loaded on multiple GPUs by passing a list of IDs (e.g. [0, 1, 2, 3]).

- on_recording_start: A callable function triggered when recording starts.

- on_recording_stop: A callable function triggered when recording ends.

- on_transcription_start: A callable function triggered when transcription starts.

- ensure_sentence_starting_uppercase (bool, default=True): Ensures that every sentence detected by the algorithm starts with an uppercase letter.

- ensure_sentence_ends_with_period (bool, default=True): Ensures that every sentence that doesn't end with punctuation such as "?" or "!" ends with a period.

- use_microphone (bool, default=True): Use the local microphone for transcription. Set to False if you want to provide chunks with the feed_audio method.

- spinner (bool, default=True): Provides a spinner animation text with information about the current recorder state.

- level (int, default=logging.WARNING): Logging level.
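When use_microphone is set to False, feed_audio expects raw 16-bit mono PCM at 16000 Hz, as described in the usage notes. The sketch below shows one way such chunks could be prepared from float samples using only the standard library. The helper names floats_to_pcm16 and iter_chunks, the little-endian byte order and the chunk size of 512 samples are assumptions for illustration, not values taken from the library.

```python
import math
import struct

SAMPLE_RATE = 16000  # Hz, the rate named in the feed_audio description


def floats_to_pcm16(samples):
    """Convert floats in [-1.0, 1.0] to 16-bit little-endian mono PCM bytes."""
    # Clip first so out-of-range values cannot overflow the 16-bit range.
    ints = [int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)


def iter_chunks(pcm: bytes, samples_per_chunk: int = 512):
    """Yield consecutive chunks; the last one may be shorter."""
    step = samples_per_chunk * 2  # 2 bytes per 16-bit sample
    for start in range(0, len(pcm), step):
        yield pcm[start:start + step]


if __name__ == "__main__":
    # One second of a 440 Hz test tone at half amplitude.
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
            for n in range(SAMPLE_RATE)]
    pcm = floats_to_pcm16(tone)
    for chunk in iter_chunks(pcm):
        # With a recorder created as AudioToTextRecorder(use_microphone=False),
        # each chunk could be passed on via recorder.feed_audio(chunk).
        pass
    print(f"{len(pcm)} bytes prepared")
```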

Note: When enabling realtime transcription, a GPU installation is strongly advised. Using realtime transcription may create high GPU loads.

- enable_realtime_transcription (bool, default=False): Enables or disables real-time transcription of audio. When set to True, the audio will be transcribed continuously as it is being recorded.

- realtime_model_type (str, default="tiny"): Specifies the size or path of the machine learning model to be used for real-time transcription.

  - Valid options: 'tiny', 'tiny.en', 'base', 'base.en', 'small', 'small.en', 'medium', 'medium.en', 'large-v1', 'large-v2'.

- realtime_processing_pause (float, default=0.2): Specifies the time interval in seconds after a chunk of audio gets transcribed. Lower values will result in more "real-time" (frequent) transcription updates but may increase computational load.

- on_realtime_transcription_update: A callback function that is triggered whenever there's an update in the real-time transcription. The function is called with the newly transcribed text as its argument.

- on_realtime_transcription_stabilized: A callback function that is triggered whenever there's an update in the real-time transcription. The function is called with a higher quality, stabilized text as its argument.

- silero_sensitivity (float, default=0.6): Sensitivity for Silero's voice activity detection ranging from 0 (least sensitive) to 1 (most sensitive).

- silero_use_onnx (bool, default=False): Enables usage of the pre-trained model from Silero in the ONNX (Open Neural Network Exchange) format instead of the PyTorch format. Recommended for faster performance.

- post_speech_silence_duration (float, default=0.2): Duration in seconds of silence that must follow speech before the recording is considered to be completed. This ensures that any brief pauses during speech don't prematurely end the recording.

- min_gap_between_recordings (float, default=1.0): Specifies the minimum time interval in seconds that should exist between the end of one recording session and the beginning of another to prevent rapid consecutive recordings.

- min_length_of_recording (float, default=1.0): Specifies the minimum duration in seconds that a recording session should last to ensure meaningful audio capture, preventing excessively short or fragmented recordings.

- pre_recording_buffer_duration (float, default=0.2): The time span, in seconds, during which audio is buffered prior to formal recording. This helps counterbalance the latency inherent in speech activity detection, ensuring no initial audio is missed.

- on_vad_detect_start: A callable function triggered when the system starts to listen for voice activity.

- on_vad_detect_stop: A callable function triggered when the system stops listening for voice activity.

- wake_words (str, default=""): Wake words for initiating the recording. Multiple wake words can be provided as a comma-separated string. Supported wake words are: alexa, americano, blueberry, bumblebee, computer, grapefruits, grasshopper, hey google, hey siri, jarvis, ok google, picovoice, porcupine, terminator.

- wake_words_sensitivity (float, default=0.6): Sensitivity level for wake word detection (0 for least sensitive, 1 for most sensitive).

- wake_word_activation_delay (float, default=0): Duration in seconds after the start of monitoring before the system switches to wake word activation if no voice is initially detected. If set to zero, the system uses wake word activation immediately.

- wake_word_timeout (float, default=5): Duration in seconds after a wake word is recognized. If no subsequent voice activity is detected within this window, the system transitions back to an inactive state, awaiting the next wake word or voice activation.

- on_wakeword_detected: A callable function triggered when a wake word is detected.

- on_wakeword_timeout: A callable function triggered when the system goes back to an inactive state because no speech was detected after wake word activation.

- on_wakeword_detection_start: A callable function triggered when the system starts to listen for wake words.

- on_wakeword_detection_end: A callable function triggered when the system stops listening for wake words (e.g. because of a timeout or a detected wake word).
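Because wake_words is a plain comma-separated string, a typo only shows up at runtime. The sketch below validates a list of words against the supported set listed above and builds keyword arguments using the parameter names from this section. The helper build_wake_word_options is hypothetical and not part of the library, and joining without spaces is an assumption about the accepted format.

```python
SUPPORTED_WAKE_WORDS = {
    "alexa", "americano", "blueberry", "bumblebee", "computer",
    "grapefruits", "grasshopper", "hey google", "hey siri", "jarvis",
    "ok google", "picovoice", "porcupine", "terminator",
}


def build_wake_word_options(words, sensitivity=0.6, timeout=5.0):
    """Validate wake words and return keyword arguments for the recorder."""
    cleaned = [w.strip().lower() for w in words if w.strip()]
    unknown = sorted(set(cleaned) - SUPPORTED_WAKE_WORDS)
    if unknown:
        raise ValueError(f"unsupported wake words: {', '.join(unknown)}")
    if not 0.0 <= sensitivity <= 1.0:
        raise ValueError("sensitivity must be between 0 and 1")
    return {
        "wake_words": ",".join(cleaned),
        "wake_words_sensitivity": sensitivity,
        "wake_word_timeout": timeout,
    }


if __name__ == "__main__":
    options = build_wake_word_options(["Jarvis", " computer "])
    print(options["wake_words"])  # prints jarvis,computer
    # The result could then be unpacked into the constructor, e.g.
    # AudioToTextRecorder(**options)
```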

Contributions are always welcome!

MIT

Kolja Beigel


For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RealtimeSTT_LLM_TTS

Similar Open Source Tools

RealtimeSTT_LLM_TTS

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

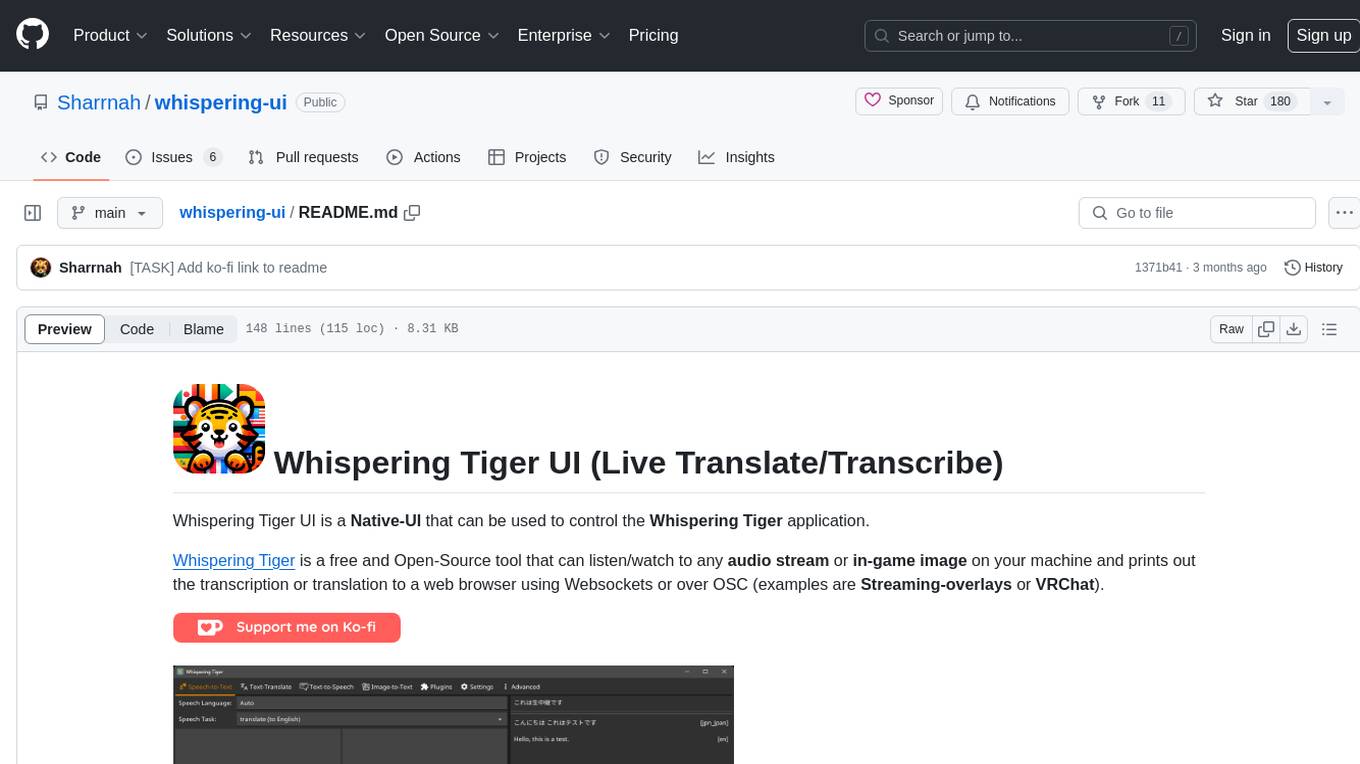

whispering-ui

Whispering Tiger UI is a Native-UI tool designed to control the Whispering Tiger application, a free and Open-Source tool that can listen/watch to audio streams or in-game images on your machine and provide transcription or translation to a web browser using Websockets or over OSC. It features a Native-UI for Windows, easy access to all Whispering Tiger features including transcription, translation, text-to-speech, and in-game image recognition. The tool supports loopback audio device, configuration saving/loading, plugin support for additional features, and auto-update functionality. Users can create profiles, configure audio devices, select A.I. devices for speech-to-text, and install/manage plugins for extended functionality.

AmigaGPT

AmigaGPT is a versatile ChatGPT client for AmigaOS 3.x, 4.1, and MorphOS. It brings the capabilities of OpenAI’s GPT to Amiga systems, enabling text generation, question answering, and creative exploration. AmigaGPT can generate images using DALL-E, supports speech output, and seamlessly integrates with AmigaOS. Users can customize the UI, choose fonts and colors, and enjoy a native user experience. The tool requires specific system requirements and offers features like state-of-the-art language models, AI image generation, speech capability, and UI customization.

ebook2audiobook

ebook2audiobook is a CPU/GPU converter tool that converts eBooks to audiobooks with chapters and metadata using tools like Calibre, ffmpeg, XTTSv2, and Fairseq. It supports voice cloning and a wide range of languages. The tool is designed to run on 4GB RAM and provides a new v2.0 Web GUI interface for user-friendly interaction. Users can convert eBooks to text format, split eBooks into chapters, and utilize high-quality text-to-speech functionalities. Supported languages include Arabic, Chinese, English, French, German, Hindi, and many more. The tool can be used for legal, non-DRM eBooks only and should be used responsibly in compliance with applicable laws.

easydiffusion

Easy Diffusion 3.0 is a user-friendly tool for installing and using Stable Diffusion on your computer. It offers hassle-free installation, clutter-free UI, task queue, intelligent model detection, live preview, image modifiers, multiple prompts file, saving generated images, UI themes, searchable models dropdown, and supports various image generation tasks like 'Text to Image', 'Image to Image', and 'InPainting'. The tool also provides advanced features such as custom models, merge models, custom VAE models, multi-GPU support, auto-updater, developer console, and more. It is designed for both new users and advanced users looking for powerful AI image generation capabilities.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

OrChat

OrChat is a powerful CLI tool for chatting with AI models through OpenRouter. It offers features like universal model access, interactive chat with real-time streaming responses, rich markdown rendering, agentic shell access, security gating, performance analytics, command auto-completion, pricing display, auto-update system, multi-line input support, conversation management, auto-summarization, session persistence, web scraping, file and media support, smart thinking mode, conversation export, customizable themes, interactive input features, and more.

AiR

AiR is an AI tool built entirely in Rust that delivers blazing speed and efficiency. It features accurate translation and seamless text rewriting to supercharge productivity. AiR is designed to assist non-native speakers by automatically fixing errors and polishing language to sound like a native speaker. The tool is under heavy development with more features on the horizon.

monty

Monty is a minimal, secure Python interpreter written in Rust for use by AI. It allows safe execution of Python code written by an LLM embedded in your agent, with fast startup times and performance similar to CPython. Monty supports running a subset of Python code, blocking access to the host environment, calling host functions, typechecking, snapshotting interpreter state, controlling resource usage, collecting stdout and stderr, and running async or sync code. It is designed for running code written by agents, providing a sandboxed environment without the complexity of a full container-based solution.

rigging

Rigging is a lightweight LLM framework designed to simplify the usage of language models in production code. It offers structured Pydantic models for text output, supports various models like LiteLLM and transformers, and provides features such as defining prompts as python functions, simple tool use, storing models as connection strings, async batching for large scale generation, and modern Python support with type hints and async capabilities. Rigging is developed by dreadnode and is suitable for tasks like building chat pipelines, running completions, tracking behavior with tracing, playing with generation parameters, and scaling up with iterating and batching.

stenoai

StenoAI is an AI-powered meeting intelligence tool that allows users to record, transcribe, summarize, and query meetings using local AI models. It prioritizes privacy by processing data entirely on the user's device. The tool offers multiple AI models optimized for different use cases, making it ideal for healthcare, legal, and finance professionals with confidential data needs. StenoAI also features a macOS desktop app with a user-friendly interface, making it convenient for users to access its functionalities. The project is open-source and not affiliated with any specific company, emphasizing its focus on meeting-notes productivity and community collaboration.

strava-mcp

Strava MCP Server is a TypeScript implementation of a Model Context Protocol (MCP) server that serves as a bridge to the Strava API. It provides tools for accessing recent activities, detailed activity streams, segments exploration, activity and segment effort information, saved routes details, and route exporting in GPX or TCX format. The server offers AI-friendly JSON responses via MCP and utilizes Strava API V3 for seamless integration. Users can interact with their Strava data through natural language queries and advanced prompts, enabling personalized analysis and visualization of their activities.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

DesktopCommanderMCP

Desktop Commander MCP is a server that allows the Claude desktop app to execute long-running terminal commands on your computer and manage processes through Model Context Protocol (MCP). It is built on top of MCP Filesystem Server to provide additional search and replace file editing capabilities. The tool enables users to execute terminal commands with output streaming, manage processes, perform full filesystem operations, and edit code with surgical text replacements or full file rewrites. It also supports vscode-ripgrep based recursive code or text search in folders.

Visionatrix

Visionatrix is a project aimed at providing easy use of ComfyUI workflows. It offers simplified setup and update processes, a minimalistic UI for daily workflow use, stable workflows with versioning and update support, scalability for multiple instances and task workers, multiple user support with integration of different user backends, LLM power for integration with Ollama/Gemini, and seamless integration as a service with backend endpoints and webhook support. The project is approaching version 1.0 release and welcomes new ideas for further implementation.

For similar tasks

RealtimeSTT_LLM_TTS

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

VoiceStreamAI

VoiceStreamAI is a Python 3-based server and JavaScript client solution for near-realtime audio streaming and transcription using WebSocket. It employs Huggingface's Voice Activity Detection (VAD) and OpenAI's Whisper model for accurate speech recognition. The system features real-time audio streaming, modular design for easy integration of VAD and ASR technologies, customizable audio chunk processing strategies, support for multilingual transcription, and secure sockets support. It uses a factory and strategy pattern implementation for flexible component management and provides a unit testing framework for robust development.

speech-to-speech

This repository implements a speech-to-speech cascaded pipeline with consecutive parts including Voice Activity Detection (VAD), Speech to Text (STT), Language Model (LM), and Text to Speech (TTS). It aims to provide a fully open and modular approach by leveraging models available on the Transformers library via the Hugging Face hub. The code is designed for easy modification, with each component implemented as a class. Users can run the pipeline either on a server/client approach or locally, with detailed setup and usage instructions provided in the readme.

echosharp

EchoSharp is an open-source library designed for near-real-time audio processing, orchestrating different AI models seamlessly for various audio analysis scopes. It focuses on flexibility and performance, allowing near-real-time Transcription and Translation by integrating components for Speech-to-Text and Voice Activity Detection. With interchangeable components, easy orchestration, and first-party components like Whisper.net, SileroVad, OpenAI Whisper, AzureAI SpeechServices, WebRtcVadSharp, Onnx.Whisper, and Onnx.Sherpa, EchoSharp provides efficient audio analysis solutions for developers.

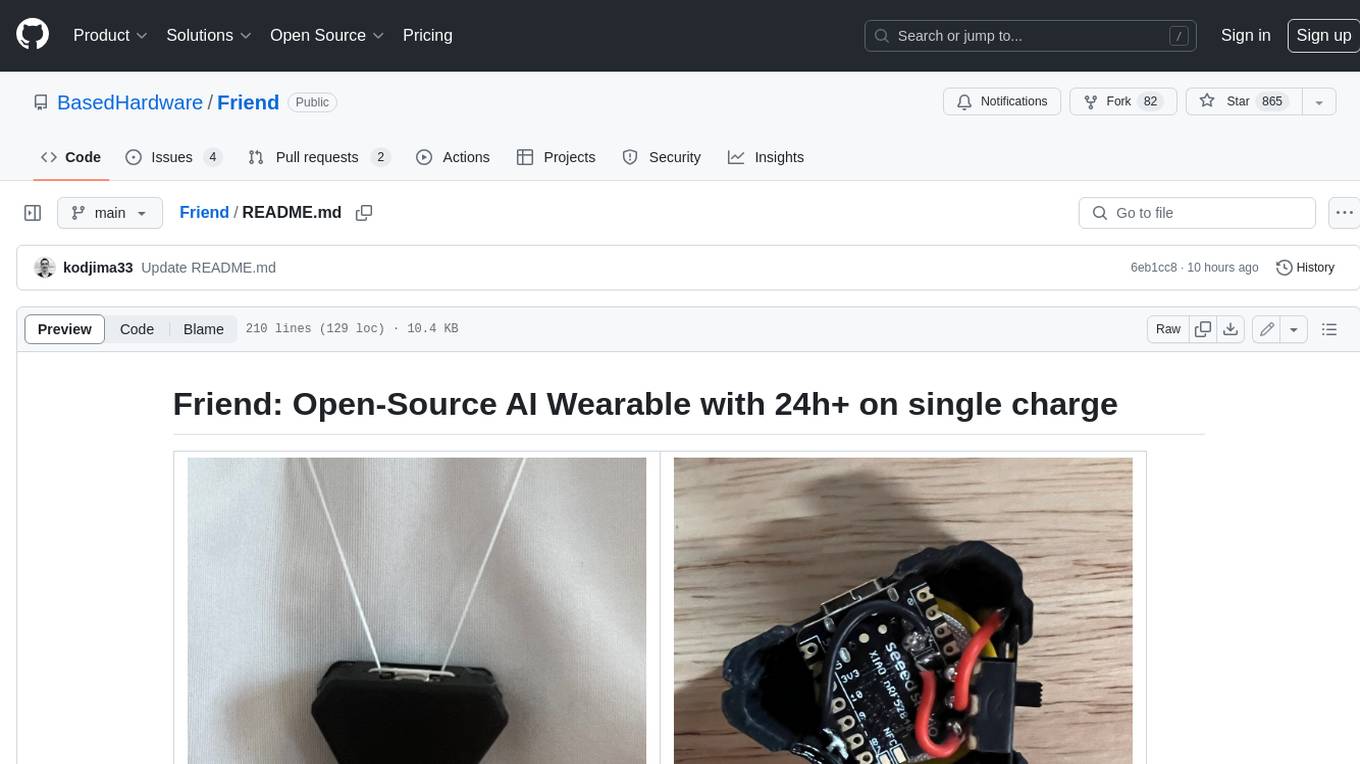

Friend

Friend is an open-source AI wearable device that records everything you say, gives you proactive feedback and advice. It has real-time AI audio processing capabilities, low-powered Bluetooth, open-source software, and a wearable design. The device is designed to be affordable and easy to use, with a total cost of less than $20. To get started, you can clone the repo, choose the version of the app you want to install, and follow the instructions for installing the firmware and assembling the device. Friend is still a prototype project and is provided "as is", without warranty of any kind. Use of the device should comply with all local laws and regulations concerning privacy and data protection.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

openvino-plugins-ai-audacity

OpenVINO™ AI Plugins for Audacity* are a set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system such as CPU, GPU, and NPU. * **Music Separation**: Separate a mono or stereo track into individual stems -- Drums, Bass, Vocals, & Other Instruments. * **Noise Suppression**: Removes background noise from an audio sample. * **Music Generation & Continuation**: Uses MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music. * **Whisper Transcription**: Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

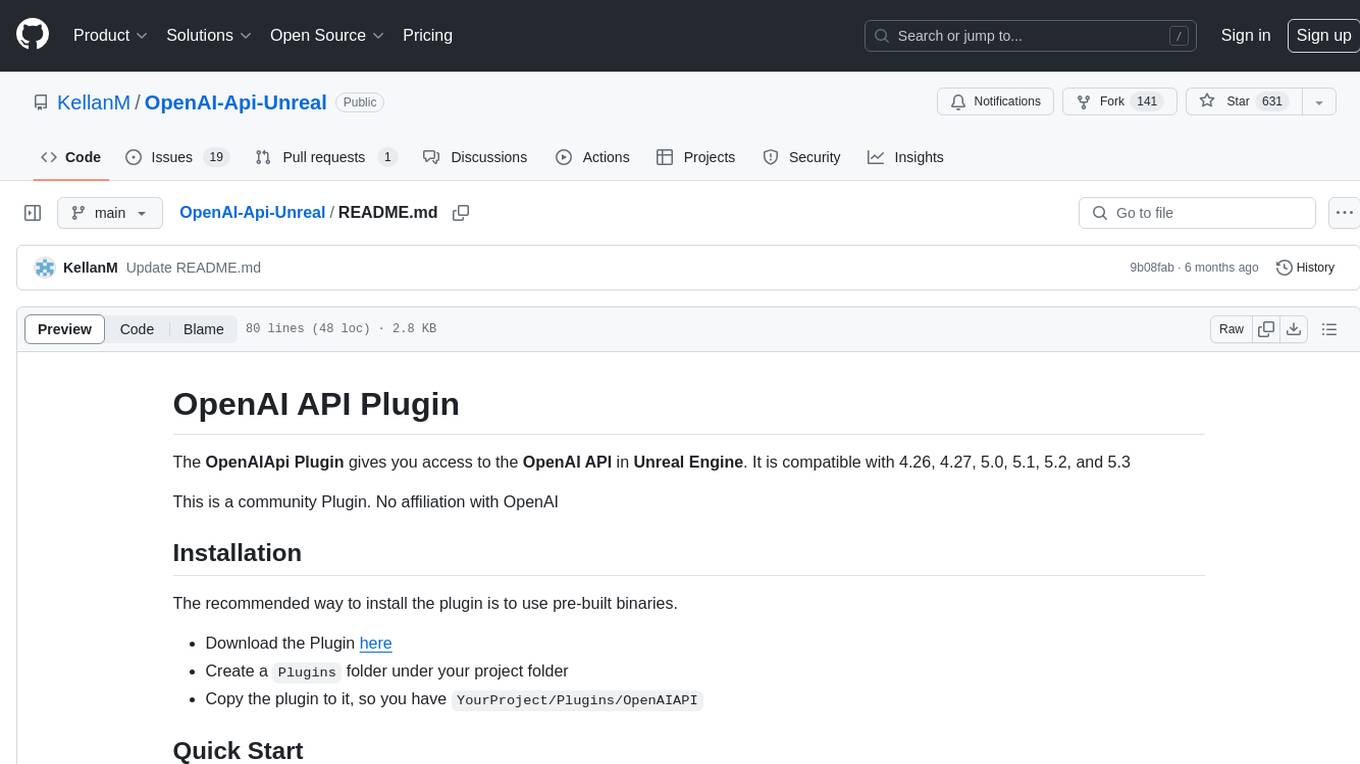

OpenAI-Api-Unreal

The OpenAIApi Plugin provides access to the OpenAI API in Unreal Engine, allowing users to generate images, transcribe speech, and power NPCs using advanced AI models. It offers blueprint nodes for making API calls, setting parameters, and accessing completion values. Users can authenticate using an API key directly or as an environment variable. The plugin supports various tasks such as generating images, transcribing speech, and interacting with NPCs through chat endpoints.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study in TrustLLM evaluating 16 mainstream LLMs on over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for building an AI virtual streamer (VTuber), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt as part of a complete LLM stack: [fastgpt] + [one-api] + [Xinference]. For live streaming, it can reply to bilibili live-chat (danmaku) comments and greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It supports expression control via Vtuber Studio, image generation with stable-diffusion-webui output to an OBS live room, and NSFW screening of generated images with public-NSFW-y-distinguish. Search and image search are available through duckduckgo (requires a VPN or proxy) and Baidu image search (no VPN or proxy needed). It also provides an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, and a playlist [html plug-in]. Avatar features include dancing, expression video playback, head-patting and gift-smashing actions, automatic dancing when singing starts, and an automatic looping sway motion during chat and singing. Scenes can be switched among multiple setups, with background music switching and automatic day and night scene changes. Singing and drawing requests are open-ended: the AI judges the content automatically.