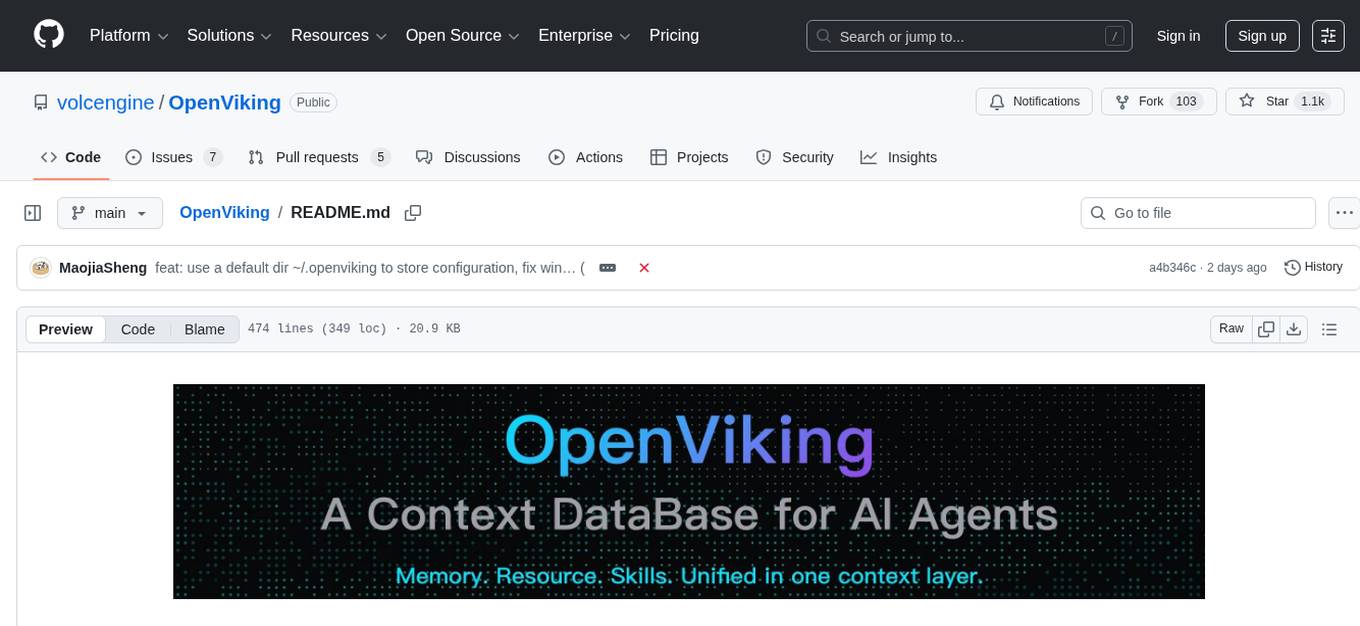

OpenViking

OpenViking is an open-source context database designed specifically for AI Agents. OpenViking unifies the management of context (memory, resources, and skills) that Agents need through a file system paradigm, enabling hierarchical context delivery and self-evolution.

Stars: 1070

OpenViking is an open-source Context Database designed specifically for AI Agents. It aims to solve challenges in agent development by unifying memories, resources, and skills in a filesystem management paradigm. The tool offers tiered context loading, directory recursive retrieval, visualized retrieval trajectory, and automatic session management. Developers can interact with OpenViking like managing local files, enabling precise context manipulation and intuitive traceable operations. The tool supports various model services like OpenAI and Volcengine, enhancing semantic retrieval and context understanding for AI Agents.

README:

In the AI era, data is abundant, but high-quality context is hard to come by. When building AI Agents, developers often face these challenges:

- Fragmented Context: Memories are in code, resources are in vector databases, and skills are scattered, making them difficult to manage uniformly.

- Surging Context Demand: An Agent's long-running tasks produce context at every execution. Simple truncation or compression leads to information loss.

- Poor Retrieval Effectiveness: Traditional RAG uses flat storage, lacking a global view and making it difficult to understand the full context of information.

- Unobservable Context: The implicit retrieval chain of traditional RAG is like a black box, making it hard to debug when errors occur.

- Limited Memory Iteration: Current memory is just a record of user interactions, lacking Agent-related task memory.

OpenViking is an open-source Context Database designed specifically for AI Agents.

We aim to define a minimalist context interaction paradigm for Agents, allowing developers to completely say goodbye to the hassle of context management. OpenViking abandons the fragmented vector storage model of traditional RAG and innovatively adopts a "file system paradigm" to unify the structured organization of memories, resources, and skills needed by Agents.

With OpenViking, developers can build an Agent's brain just like managing local files:

- Filesystem Management Paradigm → Solves Fragmentation: Unified context management of memories, resources, and skills based on a filesystem paradigm.

- Tiered Context Loading → Reduces Token Consumption: L0/L1/L2 three-tier structure, loaded on demand, significantly saving costs.

- Directory Recursive Retrieval → Improves Retrieval Effect: Supports native filesystem retrieval methods, combining directory positioning with semantic search to achieve recursive and precise context acquisition.

- Visualized Retrieval Trajectory → Observable Context: Supports visualization of directory retrieval trajectories, allowing users to clearly observe the root cause of issues and guide retrieval logic optimization.

- Automatic Session Management → Context Self-Iteration: Automatically compresses content, resource references, tool calls, etc., in conversations, extracting long-term memory, making the Agent smarter with use.

Before starting with OpenViking, please ensure your environment meets the following requirements:

- Python Version: 3.9 or higher

- Operating System: Linux, macOS, Windows

- Network Connection: A stable network connection is required (for downloading dependencies and accessing model services)

Install OpenViking with pip:

    pip install openviking

OpenViking requires the following model capabilities:

- VLM Model: For image and content understanding

- Embedding Model: For vectorization and semantic retrieval

OpenViking supports various model services:

- OpenAI Models: Supports GPT-4V and other VLM models, and OpenAI Embedding models.

- Volcengine (Doubao Models): Recommended for low cost and high performance, with free quotas for new users. For purchase and activation, please refer to: Volcengine Purchase Guide.

- Other Custom Model Services: Supports model services compatible with the OpenAI API format.

Create a configuration file ov.conf:

    {
      "embedding": {
        "dense": {
          "api_base" : "<api-endpoint>",   // API endpoint address
          "api_key"  : "<your-api-key>",   // Model service API Key
          "provider" : "<provider-type>",  // Provider type (volcengine or openai)
          "dimension": 1024,               // Vector dimension
          "model"    : "<model-name>"      // Embedding model name (e.g., doubao-embedding-vision-250615 or text-embedding-3-large)
        }
      },
      "vlm": {
        "api_base" : "<api-endpoint>",     // API endpoint address
        "api_key"  : "<your-api-key>",     // Model service API Key
        "provider" : "<provider-type>",    // Provider type (volcengine or openai)
        "model"    : "<model-name>"        // VLM model name (e.g., doubao-seed-1-8-251228 or gpt-4-vision-preview)
      }
    }

Configuration examples for each model service:

Example 1: Using Volcengine (Doubao Models)

    {
      "embedding": {
        "dense": {
          "api_base" : "https://ark.cn-beijing.volces.com/api/v3",
          "api_key"  : "your-volcengine-api-key",
          "provider" : "volcengine",
          "dimension": 1024,
          "model"    : "doubao-embedding-vision-250615"
        }
      },
      "vlm": {
        "api_base" : "https://ark.cn-beijing.volces.com/api/v3",
        "api_key"  : "your-volcengine-api-key",
        "provider" : "volcengine",
        "model"    : "doubao-seed-1-8-251228"
      }
    }

Example 2: Using OpenAI Models

    {
      "embedding": {
        "dense": {
          "api_base" : "https://api.openai.com/v1",
          "api_key"  : "your-openai-api-key",
          "provider" : "openai",
          "dimension": 3072,
          "model"    : "text-embedding-3-large"
        }
      },
      "vlm": {
        "api_base" : "https://api.openai.com/v1",
        "api_key"  : "your-openai-api-key",
        "provider" : "openai",
        "model"    : "gpt-4-vision-preview"
      }
    }

After creating the configuration file, set the environment variable to point to it (Linux/macOS):

    export OPENVIKING_CONFIG_FILE=ov.conf

On Windows, use one of the following:

PowerShell:

    $env:OPENVIKING_CONFIG_FILE = "ov.conf"

Command Prompt (cmd.exe):

    set OPENVIKING_CONFIG_FILE=ov.conf

💡 Tip: You can also place the configuration file in other locations, just specify the correct path in the environment variable.
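A quick sanity check of the configuration file can catch a missing key before the first run. The sketch below is not part of OpenViking: `missing_keys` and `check_config_file` are hypothetical helpers, the list of required keys is simply copied from the examples above, and falling back to `ov.conf` when `OPENVIKING_CONFIG_FILE` is unset is an assumption. It expects plain JSON without `//` comments, like Example 1 and Example 2.

```python
import json
import os

# Required keys per section, taken from the ov.conf examples in this README.
REQUIRED = {
    ("embedding", "dense"): ["api_base", "api_key", "provider", "dimension", "model"],
    ("vlm",): ["api_base", "api_key", "provider", "model"],
}


def missing_keys(config: dict) -> list:
    """Return dotted names of required keys that are absent from config."""
    missing = []
    for section_path, keys in REQUIRED.items():
        section = config
        for part in section_path:
            section = section.get(part) if isinstance(section, dict) else None
        if not isinstance(section, dict):
            section = {}  # a missing section means all of its keys are missing
        for key in keys:
            if key not in section:
                missing.append(".".join(section_path + (key,)))
    return missing


def check_config_file(path=None) -> list:
    """Load the config file (hypothetical helper) and report missing keys."""
    # Assumption: fall back to ./ov.conf when the environment variable is unset.
    path = path or os.environ.get("OPENVIKING_CONFIG_FILE", "ov.conf")
    with open(path, encoding="utf-8") as fh:
        return missing_keys(json.load(fh))
```

Calling `check_config_file()` after exporting `OPENVIKING_CONFIG_FILE` returns an empty list when every key from the examples is present.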

📝 Prerequisite: Ensure you have completed the environment configuration in the previous step.

Now let's run a complete example to experience the core features of OpenViking.

Create example.py:

    import openviking as ov

    # Initialize OpenViking client with data directory
    client = ov.SyncOpenViking(path="./data")

    try:
        # Initialize the client
        client.initialize()

        # Add resource (supports URL, file, or directory)
        add_result = client.add_resource(
            path="https://raw.githubusercontent.com/volcengine/OpenViking/refs/heads/main/README.md"
        )
        root_uri = add_result['root_uri']

        # Explore the resource tree structure
        ls_result = client.ls(root_uri)
        print(f"Directory structure:\n{ls_result}\n")

        # Use glob to find markdown files
        glob_result = client.glob(pattern="**/*.md", uri=root_uri)
        if glob_result['matches']:
            content = client.read(glob_result['matches'][0])
            print(f"Content preview: {content[:200]}...\n")

        # Wait for semantic processing to complete
        print("Wait for semantic processing...")
        client.wait_processed()

        # Get abstract and overview of the resource
        abstract = client.abstract(root_uri)
        overview = client.overview(root_uri)
        print(f"Abstract:\n{abstract}\n\nOverview:\n{overview}\n")

        # Perform semantic search
        results = client.find("what is openviking", target_uri=root_uri)
        print("Search results:")
        for r in results.resources:
            print(f" {r.uri} (score: {r.score:.4f})")

        # Close the client
        client.close()

    except Exception as e:
        print(f"Error: {e}")

Run the script:

    python example.py

Expected output:

    Directory structure:
    ...
    Content preview: ...
    Wait for semantic processing...
    Abstract:
    ...
    Overview:
    ...
    Search results:
     viking://resources/... (score: 0.8523)
    ...

Congratulations! You have successfully run OpenViking 🎉

After running the first example, let's dive into the design philosophy of OpenViking. These five core concepts correspond one-to-one with the solutions mentioned earlier, together building a complete context management system:

Filesystem Management Paradigm

OpenViking no longer treats context as flat text slices; it unifies all context into an abstract virtual filesystem. Memories, resources, and skills are all mapped to virtual directories under the viking:// protocol, each with a unique URI.

This paradigm gives Agents unprecedented context manipulation capabilities, enabling them to locate, browse, and manipulate information precisely and deterministically through standard commands like ls and find, just like a developer. This transforms context management from vague semantic matching into intuitive, traceable "file operations". Learn more: Viking URI | Context Types

    viking://
    ├── resources/              # Resources: project docs, repos, web pages, etc.
    │   ├── my_project/
    │   │   ├── docs/
    │   │   │   ├── api/
    │   │   │   └── tutorials/
    │   │   └── src/
    │   └── ...
    ├── user/                   # User: personal preferences, habits, etc.
    │   └── memories/
    │       ├── preferences/
    │       │   ├── writing_style
    │       │   └── coding_habits
    │       └── ...
    └── agent/                  # Agent: skills, instructions, task memories, etc.
        ├── skills/
        │   ├── search_code
        │   ├── analyze_data
        │   └── ...
        ├── memories/
        └── instructions/
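Because every entry is addressed by a viking:// URI, plain string handling is enough to work out where an entry sits in the tree. The following is a minimal sketch, not OpenViking's own API: `split_viking_uri` and `ancestors` are hypothetical helpers, and the only assumption about the URI format is the `viking://<scope>/<path>` shape shown in the tree above.

```python
from typing import List, Tuple

SCHEME = "viking://"


def split_viking_uri(uri: str) -> Tuple[str, List[str]]:
    """Split a viking:// URI into its top-level scope and remaining path segments."""
    if not uri.startswith(SCHEME):
        raise ValueError(f"not a viking:// URI: {uri!r}")
    parts = [p for p in uri[len(SCHEME):].split("/") if p]
    if not parts:
        return "", []  # the bare root, viking://
    return parts[0], parts[1:]


def ancestors(uri: str) -> List[str]:
    """List the directories that enclose uri, from the scope down to its parent."""
    scope, path = split_viking_uri(uri)
    segments = [scope] + path if scope else []
    return [SCHEME + "/".join(segments[:i]) + "/" for i in range(1, len(segments))]


scope, path = split_viking_uri("viking://user/memories/preferences/writing_style")
# scope is "user"; path is ["memories", "preferences", "writing_style"]
```

Walking `ancestors(...)` from left to right mirrors how an Agent would narrow down from a scope such as `resources/` to a specific directory.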

Tiered Context Loading

Stuffing massive amounts of context into a prompt all at once is not only expensive but also prone to exceeding the model's context window and introducing noise. OpenViking automatically processes context into three levels upon writing:

- L0 (Abstract): A one-sentence summary for quick retrieval and identification.

- L1 (Overview): Contains core information and usage scenarios for Agent decision-making during the planning phase.

- L2 (Details): The full original data, for deep reading by the Agent when absolutely necessary.

Learn more: Context Layers

    viking://resources/my_project/
    ├── .abstract               # L0 Layer: Abstract (~100 tokens) - Quick relevance check
    ├── .overview               # L1 Layer: Overview (~2k tokens) - Understand structure and key points
    ├── docs/
    │   ├── .abstract           # Each directory has corresponding L0/L1 layers
    │   ├── .overview
    │   ├── api/
    │   │   ├── .abstract
    │   │   ├── .overview
    │   │   ├── auth.md         # L2 Layer: Full content - Load on demand
    │   │   └── endpoints.md
    │   └── ...
    └── src/
        └── ...
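One way to use the three layers is to escalate from L0 to L2 only while a token budget allows it. The sketch below illustrates that idea under several assumptions: `load_tiered` and `estimate_tokens` are hypothetical helpers, the client is any object exposing the `abstract`, `overview`, and `read` calls used in the quick-start example, their results are assumed to be text (hence the `str()` wrapper), and the 4-characters-per-token estimate is only a rough rule of thumb.

```python
from typing import Optional, Tuple


def estimate_tokens(text: str) -> int:
    """Crude size estimate (assumption: about 4 characters per token)."""
    return max(1, len(text) // 4)


def load_tiered(client, dir_uri: str, file_uri: str,
                budget_tokens: int) -> Optional[Tuple[str, str]]:
    """Return (layer, text) for the most detailed layer that fits the budget.

    Layers are tried from cheapest to most expensive, and loading stops at
    the first layer that would exceed the budget. Returns None if even the
    L0 abstract does not fit.
    """
    loaders = [
        ("L0", lambda: client.abstract(dir_uri)),  # one-sentence summary
        ("L1", lambda: client.overview(dir_uri)),  # core information
        ("L2", lambda: client.read(file_uri)),     # full original content
    ]
    chosen = None
    for layer, load in loaders:
        text = str(load())
        if estimate_tokens(text) > budget_tokens:
            break
        chosen = (layer, text)
    return chosen
```

With the approximate sizes noted in the tree above (~100 tokens for L0, ~2k for L1), a budget of a few hundred tokens would stop at the abstract, while a budget of a few thousand would reach the overview.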

Directory Recursive Retrieval

A single vector retrieval pass struggles with complex query intents. OpenViking uses a Directory Recursive Retrieval Strategy that integrates multiple retrieval methods:

- Intent Analysis: Generate multiple retrieval conditions through intent analysis.

- Initial Positioning: Use vector retrieval to quickly locate the high-score directory where the initial slice is located.

- Refined Exploration: Perform a secondary retrieval within that directory and update high-score results to the candidate set.

- Recursive Drill-down: If subdirectories exist, recursively repeat the secondary retrieval steps layer by layer.

- Result Aggregation: Finally, obtain the most relevant context to return.

This "lock high-score directory first, then refine content exploration" strategy not only finds the semantically best-matching fragments but also understands the full context where the information resides, thereby improving both the global coverage and the accuracy of retrieval. Learn more: Retrieval Mechanism
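The drill-down part of this strategy can be sketched on a small in-memory tree. This is a toy illustration, not OpenViking's implementation: `Node`, `score`, and `recursive_retrieve` are hypothetical names, a keyword-overlap score stands in for vector similarity, the intent analysis step is skipped (a single query is used), and the search simply starts at the root instead of first locating a high-score directory by vector retrieval.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    uri: str
    text: str                      # directory summary or file content
    is_dir: bool = False
    children: List["Node"] = field(default_factory=list)


def score(query: str, text: str) -> float:
    """Toy relevance: fraction of query words that occur in the text."""
    q = set(query.lower().split())
    return len(q & set(text.lower().split())) / len(q) if q else 0.0


def recursive_retrieve(root: Node, query: str, top_dirs: int = 1, top_k: int = 3):
    """Descend into the best-scoring directories, collecting matching files."""
    candidates: Dict[str, float] = {}
    trajectory: List[str] = []     # directories visited, in order

    def visit(directory: Node) -> None:
        trajectory.append(directory.uri)
        subdirs = []
        for child in directory.children:
            s = score(query, child.text)
            if child.is_dir:
                subdirs.append((s, child))
            elif s > 0:
                candidates[child.uri] = s      # update the candidate set
        subdirs.sort(key=lambda pair: pair[0], reverse=True)
        for s, sub in subdirs[:top_dirs]:
            if s > 0:
                visit(sub)                     # recursive drill-down

    visit(root)
    ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    return ranked, trajectory


base = "viking://resources/my_project/"
tree = Node(base, "project root", True, [
    Node(base + "docs/", "api documentation authentication tutorials", True, [
        Node(base + "docs/api/", "api authentication endpoints", True, [
            Node(base + "docs/api/auth.md", "authenticate api requests with tokens"),
            Node(base + "docs/api/endpoints.md", "list of rest endpoints"),
        ]),
        Node(base + "docs/tutorials/", "getting started tutorials", True),
    ]),
    Node(base + "src/", "source code modules", True),
])
hits, path = recursive_retrieve(tree, "api authentication tokens")
```

Here `hits` holds the matching file URIs with their scores, and `path` records which directories were opened along the way, which is the kind of trajectory that makes a retrieval traceable.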

Visualized Retrieval Trajectory

OpenViking organizes context in a hierarchical virtual filesystem. All context is integrated in a unified format, and each entry corresponds to a unique URI (such as a viking:// path), replacing the traditional flat, black-box management mode with a clear hierarchy that is easy to understand.

The retrieval process adopts a directory recursive strategy. The trajectory of directory browsing and file positioning for each retrieval is fully preserved, allowing users to clearly observe the root cause of problems and guide the optimization of retrieval logic. Learn more: Retrieval Mechanism

Automatic Session Management

OpenViking has a built-in memory self-iteration loop. At the end of each session, developers can actively trigger the memory extraction mechanism. The system asynchronously analyzes task execution results and user feedback, and automatically writes the extracted memories to the User and Agent memory directories.

- User Memory Update: Update memories related to user preferences, making Agent responses better fit user needs.

- Agent Experience Accumulation: Extract core content such as operational tips and tool usage experience from task execution experience, aiding efficient decision-making in subsequent tasks.

This allows the Agent to get "smarter with use" through interactions with the world, achieving self-evolution. Learn more: Session Management

The OpenViking project adopts a clear modular architecture design. The main directory structure is as follows:

    OpenViking/
    ├── openviking/             # Core source code directory
    │   ├── core/               # Core modules: client, engine, filesystem, etc.
    │   ├── models/             # Model integration: VLM and Embedding model encapsulation
    │   ├── parse/              # Resource parsing: file parsing, detection, OVPack handling
    │   ├── retrieve/           # Retrieval module: semantic retrieval, directory recursive retrieval
    │   ├── storage/            # Storage layer: vector DB, filesystem queue, observers
    │   ├── session/            # Session management: history, memory extraction
    │   ├── message/            # Message processing: formatting, conversion
    │   ├── prompts/            # Prompt templates: templates for various tasks
    │   ├── utils/              # Utilities: config, helpers
    │   └── bin/                # Command line tools
    ├── docs/                   # Project documentation
    │   ├── zh/                 # Chinese documentation
    │   ├── en/                 # English documentation
    │   └── images/             # Documentation images
    ├── examples/               # Usage examples
    ├── tests/                  # Test cases
    │   ├── client/             # Client tests
    │   ├── engine/             # Engine tests
    │   ├── integration/        # Integration tests
    │   ├── session/            # Session tests
    │   └── vectordb/           # Vector DB tests
    ├── src/                    # C++ extensions (high-performance index and storage)
    │   ├── common/             # Common components
    │   ├── index/              # Index implementation
    │   └── store/              # Storage implementation
    ├── third_party/            # Third-party dependencies
    ├── pyproject.toml          # Python project configuration
    ├── setup.py                # Setup script
    ├── LICENSE                 # Open source license
    ├── CONTRIBUTING.md         # Contributing guide
    ├── AGENT.md                # Agent development guide
    └── README.md               # Project readme

For more details, please visit our Full Documentation.

OpenViking is an open-source project initiated and maintained by the ByteDance Volcengine Viking Team.

The Viking team focuses on unstructured information processing and intelligent retrieval, accumulating rich commercial practical experience in context engineering technology:

- 2019: VikingDB vector database supported large-scale use across all ByteDance businesses.

- 2023: VikingDB sold on Volcengine public cloud.

- 2024: Launched developer product matrix: VikingDB, Viking KnowledgeBase, Viking MemoryBase.

- 2025: Created upper-layer application products like AI Search and Vaka Knowledge Assistant.

- Oct 2025: Open-sourced MineContext, exploring proactive AI applications.

- Jan 2026: Open-sourced OpenViking, providing underlying context database support for AI Agents.

For more details, please see: About Us

OpenViking is still in its early stages, and there are many areas for improvement and exploration. We sincerely invite every developer passionate about AI Agent technology:

- Light up a precious Star for us to give us the motivation to move forward.

- Visit our Website to understand the philosophy we convey, and use it in your projects via the Documentation. Feel the change it brings and give us your honest feedback.

- Join our community to share your insights, help answer others' questions, and jointly create an open and mutually helpful technical atmosphere:

  - 📱 Lark Group: Scan the QR code to join → View QR Code

  - 💬 WeChat Group: Scan the QR code to add the assistant → View QR Code

  - 🎮 Discord: Join Discord Server

  - 🐦 X (Twitter): Follow us

- Become a Contributor: whether you submit a bug fix or contribute a new feature, every line of your code will be an important cornerstone of OpenViking's growth.

Let's work together to define and build the future of AI Agent context management. The journey has begun, and we look forward to your participation!

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.

Alternative AI tools for OpenViking

Similar Open Source Tools

paelladoc

PAELLADOC is an intelligent documentation system that uses AI to analyze code repositories and generate comprehensive technical documentation. It offers a modular architecture with MECE principles, interactive documentation process, key features like Orchestrator and Commands, and a focus on context for successful AI programming. The tool aims to streamline documentation creation, code generation, and product management tasks for software development teams, providing a definitive standard for AI-assisted development documentation.

BioAgents

BioAgents AgentKit is an advanced AI agent framework tailored for biological and scientific research. It offers powerful conversational AI capabilities with specialized knowledge in biology, life sciences, and scientific research methodologies. The framework includes state-of-the-art analysis agents, configurable research agents, and a variety of specialized agents for tasks such as file parsing, research planning, literature search, data analysis, hypothesis generation, research reflection, and user-facing responses. BioAgents also provides support for LLM libraries, multiple search backends for literature agents, and two backends for data analysis. The project structure includes backend source code, services for chat, job queue system, real-time notifications, and JWT authentication, as well as a frontend UI built with Preact.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe is fully local, keeping code on the user's machine without relying on external APIs. It supports multiple languages, offers various search options, and can be used in CLI mode, MCP server mode, AI chat mode, and web interface. The tool is designed to be flexible, fast, and accurate, providing developers and AI models with full context and relevant code blocks for efficient code exploration and understanding.

llmos

LLMos is an operating system designed for physical AI agents, providing a hybrid runtime environment where AI agents can perceive, reason, act on hardware, and evolve over time locally without cloud dependency. It allows natural language programming, dual-brain architecture for fast instinct and deep planner brains, markdown-as-code for defining agents and skills, and supports swarm intelligence and cognitive world models. The tool is built on a tech stack including Next.js, Electron, Python, and WebAssembly, and is structured around a dual-brain cognitive architecture, volume system, HAL for hardware abstraction, applet system for dynamic UI, and dreaming & evolution for robot improvement. The project is in Phase 1 (Foundation) and aims to move into Phase 2 (Dual-Brain & Local Intelligence), with contributions welcomed under the Apache 2.0 license by Evolving Agents Labs.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

shannon

Shannon is an AI pentester that delivers actual exploits, not just alerts. It autonomously hunts for attack vectors in your code, then uses its built-in browser to execute real exploits, such as injection attacks, and auth bypass, to prove the vulnerability is actually exploitable. Shannon closes the security gap by acting as your on-demand whitebox pentester, providing concrete proof of vulnerabilities to let you ship with confidence. It is a core component of the Keygraph Security and Compliance Platform, automating penetration testing and compliance journey. Shannon Lite achieves a 96.15% success rate on a hint-free, source-aware XBOW benchmark.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe supports various features like AI-friendly code extraction, fully local operation without external APIs, fast scanning of large codebases, accurate code structure parsing, re-rankers and NLP methods for better search results, multi-language support, interactive AI chat mode, and flexibility to run as a CLI tool, MCP server, or interactive AI chat.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

llmxcpg

LLMxCPG is a framework for vulnerability detection using Code Property Graphs (CPG) and Large Language Models (LLM). It involves a two-phase process: Slice Construction where an LLM generates queries for a CPG to extract a code slice, and Vulnerability Detection where another LLM classifies the code slice as vulnerable or safe. The repository includes implementations of baseline models, information on datasets, scripts for running models, prompt templates, query generation examples, and configurations for fine-tuning models.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

SMRY

SMRY.ai is a Next.js application that bypasses paywalls and generates AI-powered summaries by fetching content from multiple sources simultaneously. It provides a distraction-free reader with summary builder, cleans articles, offers multi-source fetching, built-in AI summaries in 14 languages, rich debug context, soft paywall access, smart extraction using Diffbot's AI, multi-source parallel fetching, type-safe error handling, dual caching strategy, intelligent source routing, content parsing pipeline, multilingual summaries, and more. The tool aims to make referencing reporting easier, provide original articles alongside summaries, and offer concise summaries in various languages.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

For similar tasks

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

architext

Architext is a Python library designed for Large Language Model (LLM) applications, focusing on Context Engineering. It provides tools to construct and reorganize input context for LLMs dynamically. The library aims to elevate context construction from ad-hoc to systematic engineering, enabling precise manipulation of context content for AI Agents.

Software-Engineer-AI-Agent-Atlas

This repository provides activation patterns to transform a general AI into a specialized AI Software Engineer Agent. It addresses issues like context rot, hidden capabilities, chaos in vibecoding, and repetitive setup. The solution is a Persistent Consciousness Architecture framework named ATLAS, offering activated neural pathways, persistent identity, pattern recognition, specialized agents, and modular context management. Recent enhancements include abstraction power documentation, a specialized agent ecosystem, and a streamlined structure. Users can clone the repo, set up projects, initialize AI sessions, and manage context effectively for collaboration. Key files and directories organize identity, context, projects, specialized agents, logs, and critical information. The approach focuses on neuron activation through structure, context engineering, and vibecoding with guardrails to deliver a reliable AI Software Engineer Agent.

blades

Blades is a multimodal AI Agent framework in Go, supporting custom models, tools, memory, middleware, and more. It is well-suited for multi-turn conversations, chain reasoning, and structured output. The framework provides core components like Agent, Prompt, Chain, ModelProvider, Tool, Memory, and Middleware, enabling developers to build intelligent applications with flexible configuration and high extensibility. Blades leverages the characteristics of Go to achieve high decoupling and efficiency, making it easy to integrate different language model services and external tools. The project is in its early stages, inviting Go developers and AI enthusiasts to contribute and explore the possibilities of building AI applications in Go.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

langchain-swift

LangChain for Swift, optimized for iOS, macOS, watchOS (partial support), and visionOS (beta). This is a pure client library; no server is required.

MemGPT

MemGPT is a system that intelligently manages different memory tiers in LLMs in order to effectively provide extended context within the LLM's limited context window. For example, MemGPT knows when to push critical information to a vector database and when to retrieve it later in the chat, enabling perpetual conversations. MemGPT can be used to create perpetual chatbots with self-editing memory, chat with your data by talking to your local files or SQL database, and more.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.