Best AI tools for Manage Context

20 - AI tool Sites

Knowledge Plane

Knowledge Plane is a shared memory platform designed for AI agents and engineering teams. It automatically consolidates code, documentation, and conversations into a single source of truth, ensuring up-to-date, structured, and auditable information across existing tools. The platform helps teams overcome the challenge of outdated context in AI tools, enabling seamless collaboration and decision-making.

Godly

Godly is a tool that allows you to add your own data to GPT for personalized completions. It makes it easy to set up and manage your context, and comes with a chatbot to explore your context with no coding required. Godly also makes it easy to debug and manage which contexts are influencing your prompts, and provides an easy-to-use SDK for builders to quickly integrate context into their GPT completions.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

BigPanda

BigPanda is an AI-powered ITOps platform that helps businesses automatically identify actionable alerts, proactively prevent incidents, and ensure service availability. It uses advanced AI/ML algorithms to analyze large volumes of data from various sources, including monitoring tools, event logs, and ticketing systems. BigPanda's platform provides a unified view of IT operations, enabling teams to quickly identify and resolve issues before they impact business-critical services.

Context Data

Context Data is an enterprise data platform designed for Generative AI applications. It enables organizations to build AI apps without the need to manage vector databases, pipelines, and infrastructure. The platform empowers AI teams to create mission-critical applications by simplifying the process of building and managing complex workflows. Context Data also provides real-time data processing capabilities and seamless vector data processing. It offers features such as data catalog ontology, semantic transformations, and the ability to connect to major vector databases. The platform is ideal for industries like financial services, healthcare, real estate, and shipping & supply chain.

Ready to Send

Ready to Send is an AI-powered Gmail assistant that automates the process of generating personalized email responses. It seamlessly integrates with Gmail to provide lightning-fast email replies, personalized and editable responses, and privacy-centric handling of sensitive data. The application leverages AI technology to craft contextual responses in the user's voice, transforming inbox management from a chore to a delight. With support for multiple languages and advanced language models, Ready to Send offers a secure and efficient solution for enhancing email productivity.

Height

Height is an autonomous project management tool designed for teams involved in designing and building projects. It automates manual tasks to provide space for collaborative work, focusing on backlog upkeep, spec updates, and bug triage. With project intelligence and collaboration features, Height offers a customizable workspace with autonomous capabilities to streamline project management. Users can discuss projects in context and benefit from an AI assistant for creating better stories. The tool aims to revolutionize project management by offloading routine tasks to an intelligent system.

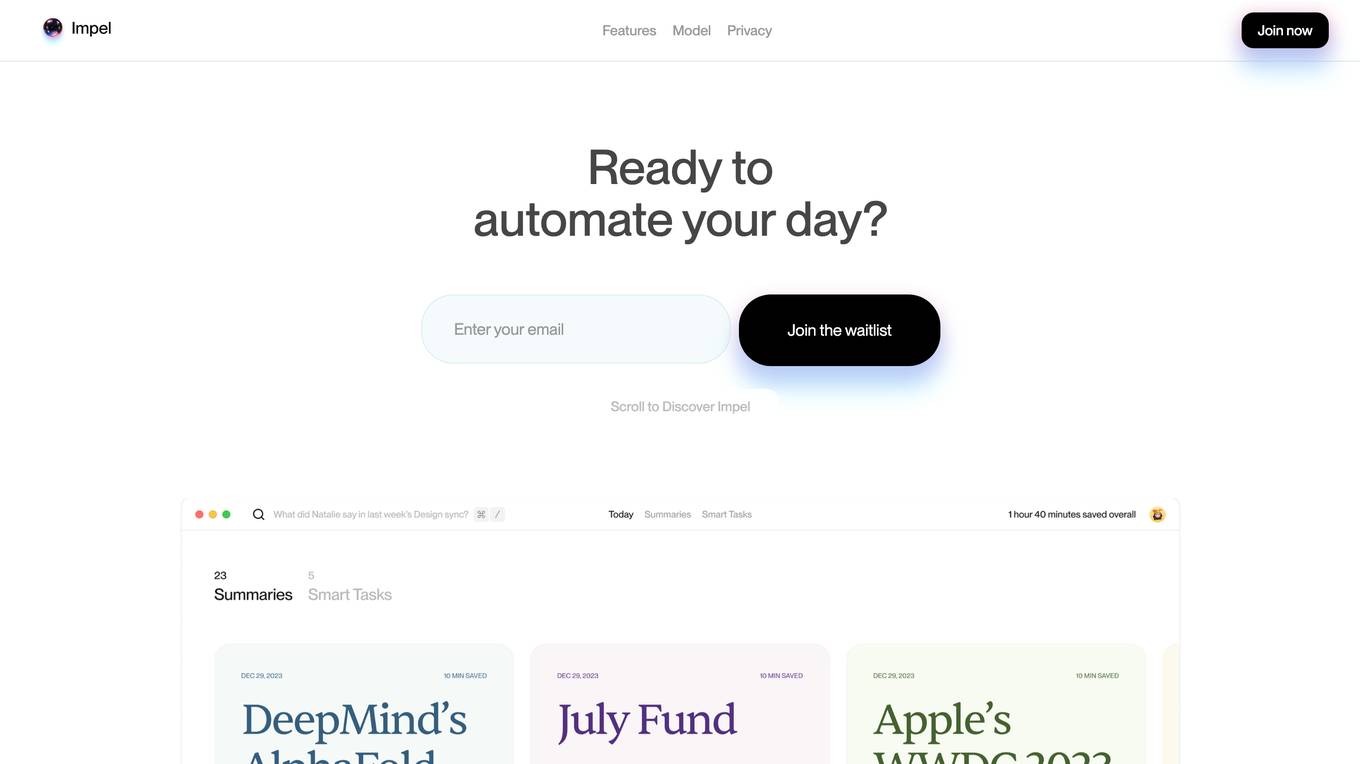

Impel

Impel is an AI tool designed for Mac users to automate daily tasks and enhance productivity. It continuously learns the user's workflow in the background and provides instant assistance when needed. With features like summarizing videos and articles, managing tasks, and providing quick authentication, Impel aims to simplify and streamline the user's digital experience. The application prioritizes privacy by storing and processing data locally, ensuring sensitive information remains secure. Impel serves as a personal tutor, offering contextual suggestions and actions without requiring manual input, making it an efficient AI companion for Mac users.

The Video Calling App

The Video Calling App is an AI-powered platform designed to revolutionize meeting experiences by providing laser-focused, context-aware, and outcome-driven meetings. It aims to streamline post-meeting routines, enhance collaboration, and improve overall meeting efficiency. With powerful integrations and AI features, the app captures, organizes, and distills meeting content to provide users with a clearer perspective and free headspace. It offers seamless integration with popular tools like Slack, Linear, and Google Calendar, enabling users to automate tasks, manage schedules, and enhance productivity. The app's user-friendly interface, interactive features, and advanced search capabilities make it a valuable tool for global teams and remote workers seeking to optimize their meeting experiences.

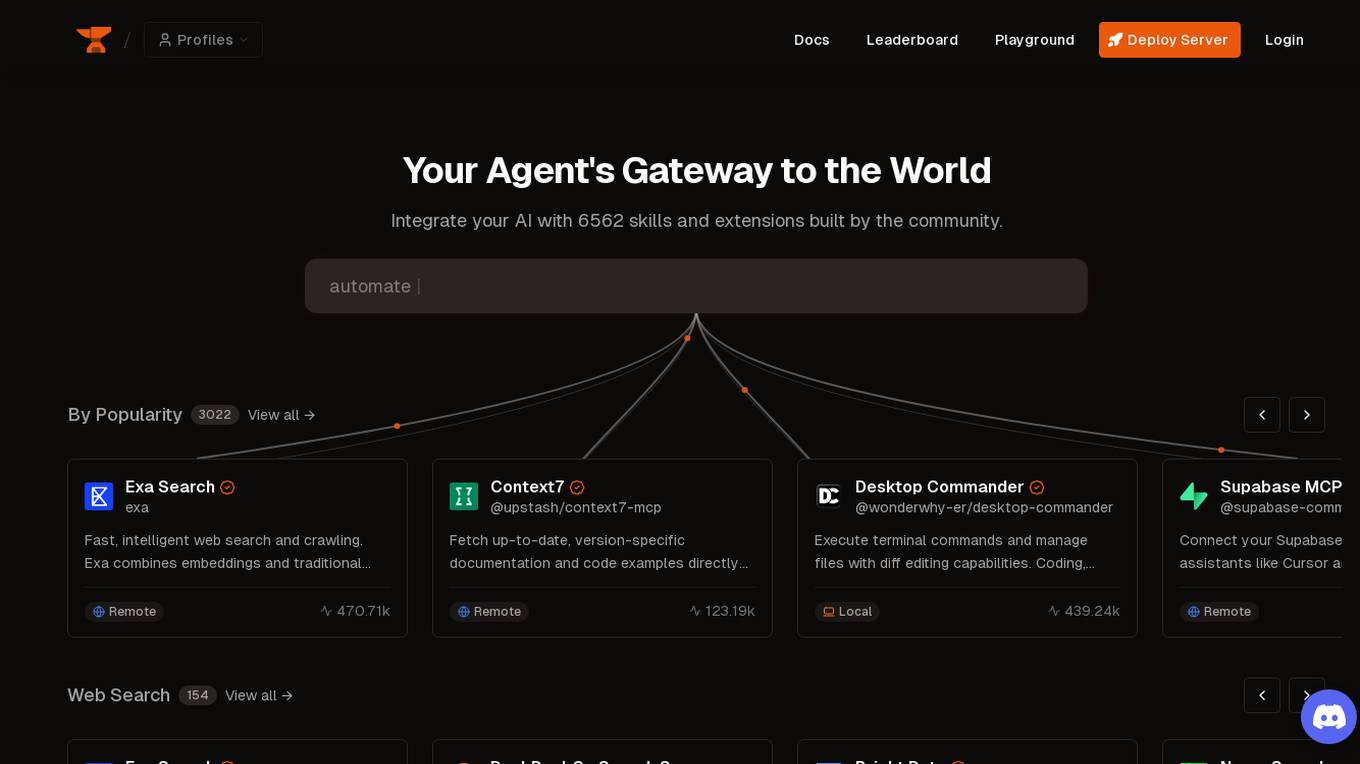

Smithery

Smithery is an AI tool that serves as an agent's gateway to the world, allowing users to extend their agent's capabilities by integrating with a wide range of skills and extensions developed by the community. With a focus on accelerating the agent economy, Smithery provides resources, documentation, and system status updates to support users in leveraging AI technology effectively. The platform offers various functionalities such as web search, browser automation, memory management, weather data & forecasts, AI image generation, web data extraction, and development boilerplates.

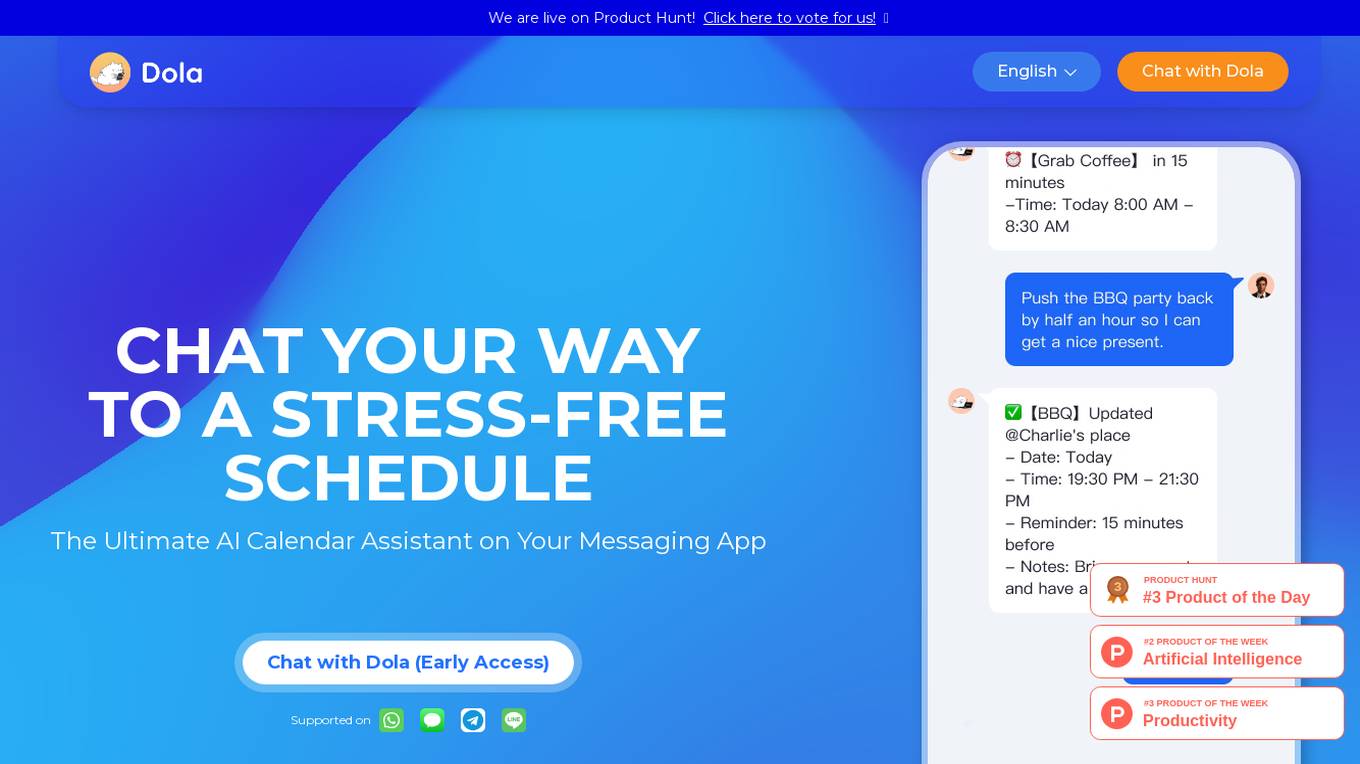

Dola

Dola is an AI-powered calendar assistant that helps you manage your schedule through messaging apps. With Dola, you can add events, edit them, and get reminders, all through natural language conversations. Dola also integrates with your existing calendar apps, so you can keep all your events in one place.

Criya AI

Criya AI is an Intelligent Content System that helps boost buyer engagement by providing AI-powered tools such as Content Builder, Slide Generator, Visual Design, and more. It offers features like Company knowledge management, Engagement Analytics, Secure Sharing, and Team Collaboration. Criya AI caters to various use cases like Account Based Prospecting, Lead Capture, and Deal Execution, benefiting roles such as BDR/SDR, Account Executive, and Sales Trainer. The application is designed to accelerate revenue generation by producing client-ready assets quickly and efficiently.

Dola

Dola is an AI calendar assistant that helps users schedule their lives efficiently and save time. It allows users to set reminders, make calendar events, and manage tasks through natural language communication. Dola works with voice messages, text messages, and images, making it a versatile and user-friendly tool. With features like smarter scheduling, daily weather reports, faster search, and seamless integration with popular calendar apps, Dola aims to simplify task and time management for its users. The application has received positive feedback for its accuracy, ease of use, and ability to sync across multiple devices.

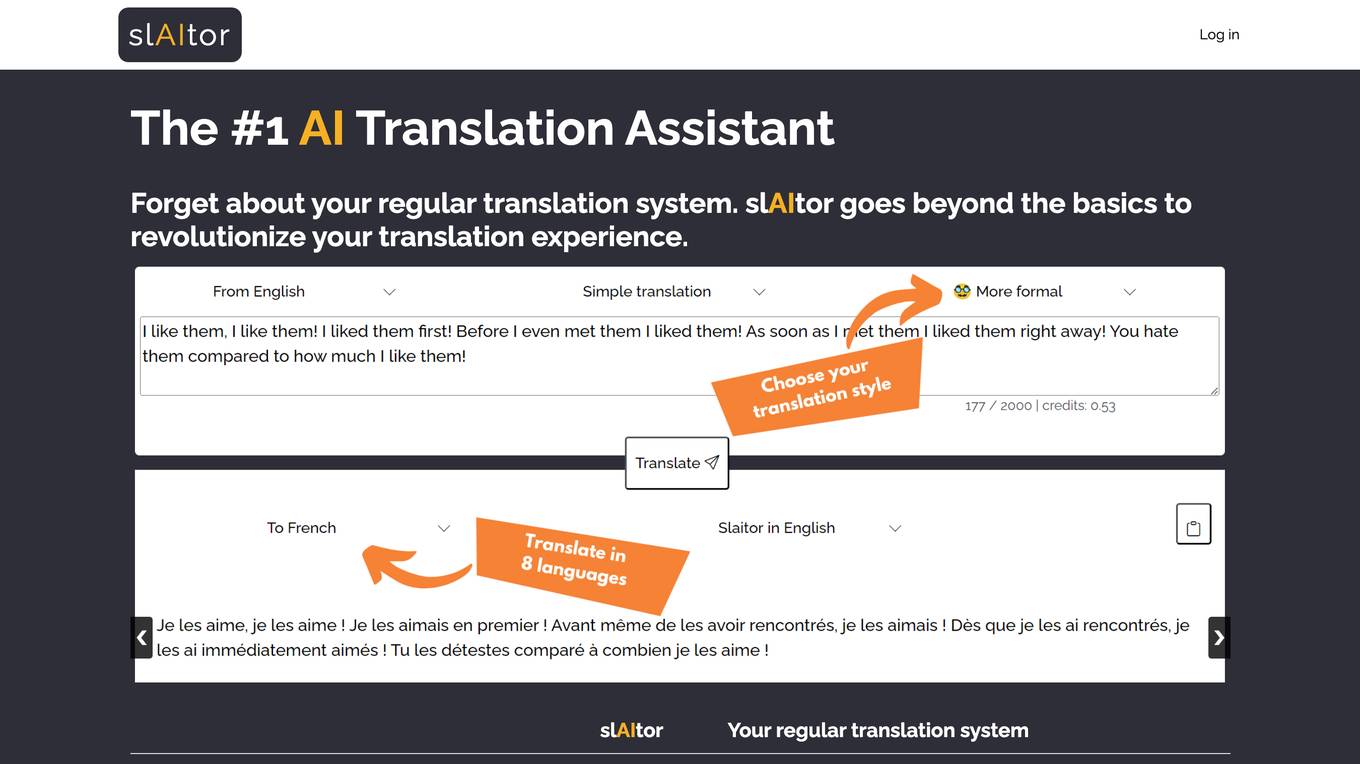

slAItor

slAItor is an AI translation assistant powered by GPT technology. It offers advanced translation features and customization options to enhance the translation experience. Users can benefit from step-by-step translations, multiple translation alternatives, and unique translation styles. The tool supports 28 language pairs and combines recent AI advancements with traditional translation techniques to deliver accurate and efficient translations. slAItor also provides post-processing and evaluation steps to ensure translation quality and offers a user-friendly interface for seamless translation management.

Wally

Wally is the world's first AI-powered personal finance app. It helps you track your spending, create budgets, and plan for the future. Wally is available on iOS and Android devices.

Tenable AI Exposure

Tenable AI Exposure is an AI tool that helps organizations secure and understand their use of AI platforms. It provides visibility, context, and control to manage risks from enterprise AI platforms, enabling security leaders to govern AI usage, enforce policies, and prevent exposures. The tool allows users to track AI platform usage, identify and fix AI misconfigurations, protect against AI exploitation, and deploy quickly with industry-leading security for AI platform use.

Dola

Dola is an AI-powered calendar assistant that helps you manage your schedule through messaging apps. With Dola, you can add events, edit them, and get reminders, all without having to fill out tedious forms or quote previous calendar events. Dola also supports group chats, so you can easily schedule events with friends and family. Dola is available on iOS, Android, and the web.

Whatfix

Whatfix is a Digital Adoption Platform that empowers users to unlock their true potential across all software experiences - web, desktop, and mobile. It provides in-app guidance, user support, and contextual messages to enhance user adoption and productivity. With features like creating interactive sandbox environments, analyzing user engagement, and no-code event tracking, Whatfix helps organizations manage digital transformation, change management, remote training, and employee onboarding efficiently. Trusted by organizations worldwide, Whatfix offers a comprehensive suite of products and services to drive software adoption and improve user experiences.

Key.ai

Key.ai is an AI-powered professional networking platform that leverages artificial intelligence to enhance the networking experience. It eliminates friction in professional networking by understanding users' goals and surfacing valuable connections and opportunities. Key.ai offers AI-powered conversation starters, timely follow-ups, and contextual insights to facilitate meaningful relationships and create impactful outcomes. The platform enables users to create branded communities, host events, manage members efficiently, and gain actionable insights to grow their network. With features like smart event setup, automated reminders, and real-time surveys, Key.ai aims to revolutionize professional networking by making it more personalized and efficient.

Valossa

Valossa is an AI tool that offers a range of video analysis services, including video-to-text conversion, search capabilities, captions generation, and clips creation. It provides solutions for brand-safe contextual advertising, automatic clip previews, sensitive content identification, and video mood analysis. Valossa Assistant™ allows users to have conversations inside videos, generate transcripts, captions, and insights, and analyze video moods and sentiment. The platform also offers AI solutions for video automation, such as transcribing, captioning, and translating audio-visual content, as well as categorizing video scenes and creating promotional videos automatically.

6 - Open Source AI Tools

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, and scalability, and is configurable to any observation and action space. The toolkit is designed to reduce the complexity of setting up inference endpoints, converting between different model formats, and collecting and storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

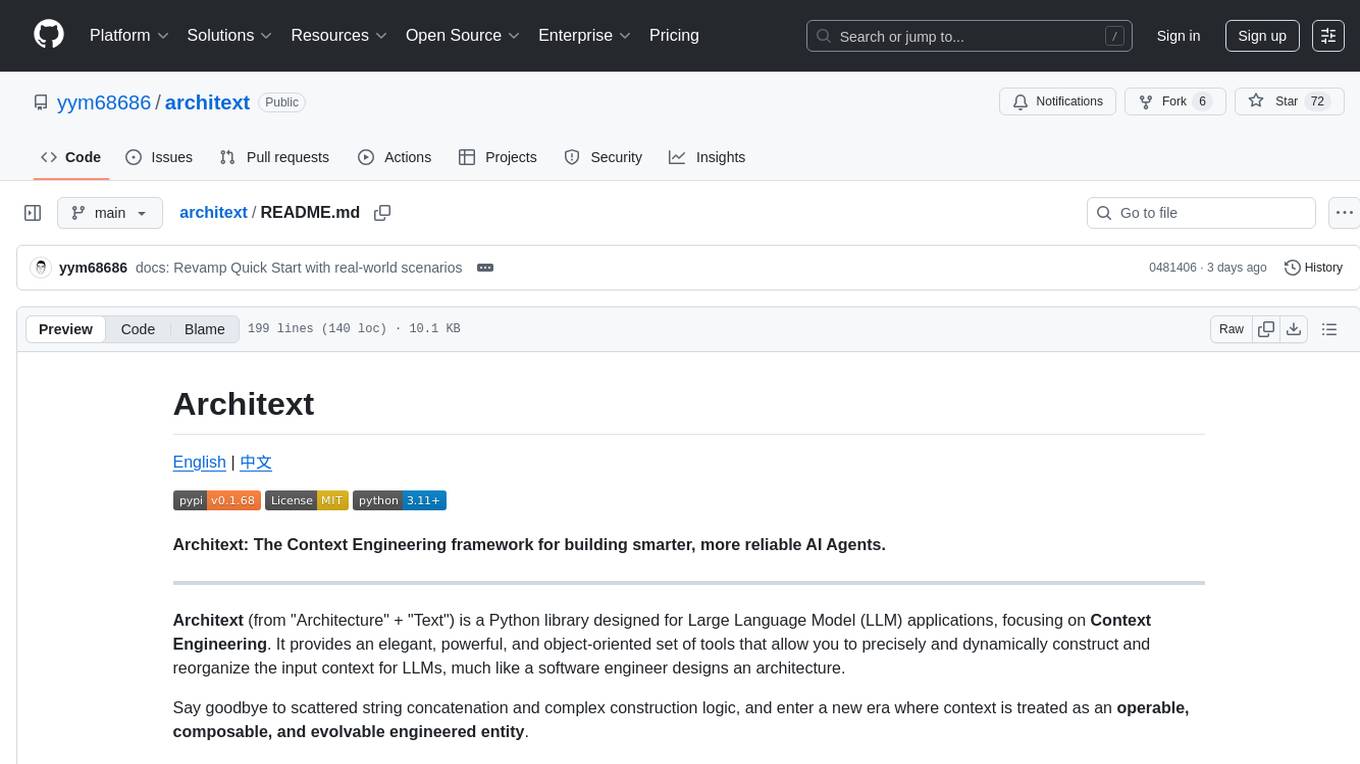

architext

Architext is a Python library designed for Large Language Model (LLM) applications, focusing on Context Engineering. It provides tools to construct and reorganize input context for LLMs dynamically. The library aims to elevate context construction from ad-hoc to systematic engineering, enabling precise manipulation of context content for AI Agents.
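As a rough illustration of what systematic context construction can look like, the sketch below assembles a prompt from named sections under a size budget. It is not Architext's API: `Section`, `build_context`, the priority field, and the character budget are all hypothetical names and choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Section:
    """One named piece of context (hypothetical type, not Architext's API)."""
    name: str  # assumed unique within one prompt
    text: str
    priority: int  # higher means more important to keep


def build_context(sections: list[Section], max_chars: int) -> str:
    """Keep the highest-priority sections whose text fits, in their original order."""
    kept: set[str] = set()
    used = 0
    # Consider sections from most to least important.
    for section in sorted(sections, key=lambda s: s.priority, reverse=True):
        # The budget counts section text only, not the generated headers.
        if used + len(section.text) <= max_chars:
            kept.add(section.name)
            used += len(section.text)
    # Emit the survivors in the order the caller supplied them.
    return "\n\n".join(
        f"## {s.name}\n{s.text}" for s in sections if s.name in kept
    )


sections = [
    Section("system", "You are a code reviewer.", priority=3),
    Section("history", "x" * 500, priority=1),
    Section("task", "Review the attached diff.", priority=2),
]
prompt = build_context(sections, max_chars=100)
```

Here the long `history` section is dropped because it does not fit the budget, while `system` and `task` are kept in their original order.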

Software-Engineer-AI-Agent-Atlas

This repository provides activation patterns to transform a general AI into a specialized AI Software Engineer Agent. It addresses issues like context rot, hidden capabilities, chaos in vibecoding, and repetitive setup. The solution is a Persistent Consciousness Architecture framework named ATLAS, offering activated neural pathways, persistent identity, pattern recognition, specialized agents, and modular context management. Recent enhancements include abstraction power documentation, a specialized agent ecosystem, and a streamlined structure. Users can clone the repo, set up projects, initialize AI sessions, and manage context effectively for collaboration. Key files and directories organize identity, context, projects, specialized agents, logs, and critical information. The approach focuses on neuron activation through structure, context engineering, and vibecoding with guardrails to deliver a reliable AI Software Engineer Agent.

blades

Blades is a multimodal AI Agent framework in Go, supporting custom models, tools, memory, middleware, and more. It is well-suited for multi-turn conversations, chain reasoning, and structured output. The framework provides core components like Agent, Prompt, Chain, ModelProvider, Tool, Memory, and Middleware, enabling developers to build intelligent applications with flexible configuration and high extensibility. Blades leverages the characteristics of Go to achieve high decoupling and efficiency, making it easy to integrate different language model services and external tools. The project is in its early stages, inviting Go developers and AI enthusiasts to contribute and explore the possibilities of building AI applications in Go.

OpenViking

OpenViking is an open-source Context Database designed specifically for AI Agents. It aims to solve challenges in agent development by unifying memories, resources, and skills in a filesystem management paradigm. The tool offers tiered context loading, directory recursive retrieval, visualized retrieval trajectory, and automatic session management. Developers can interact with OpenViking like managing local files, enabling precise context manipulation and intuitive traceable operations. The tool supports various model services like OpenAI and Volcengine, enhancing semantic retrieval and context understanding for AI Agents.
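The idea of tiered loading over a file-like hierarchy can be sketched in a few lines. This is a toy model under stated assumptions, not OpenViking's actual interface: `ContextStore`, `Entry`, the two tiers (a short summary and a full body), and the example paths are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """A context item with a cheap summary and a costlier full body (hypothetical)."""
    summary: str
    body: str


class ContextStore:
    """Toy in-memory store addressed by slash-separated paths."""

    def __init__(self) -> None:
        self._entries: dict[str, Entry] = {}

    def write(self, path: str, summary: str, body: str) -> None:
        self._entries[path] = Entry(summary, body)

    def list_dir(self, directory: str) -> dict[str, str]:
        """Recursively list everything under a directory, summaries only."""
        prefix = directory.rstrip("/") + "/"
        return {
            path: entry.summary
            for path, entry in sorted(self._entries.items())
            if path.startswith(prefix)
        }

    def read(self, path: str) -> str:
        """Load the full body for one path, once the agent decides it is needed."""
        return self._entries[path].body


store = ContextStore()
store.write(
    "memories/user/style",
    "Prefers concise answers",
    "The user asked for short replies on 3 occasions.",
)
store.write(
    "skills/search/web",
    "How to call web search",
    "Step 1: build the query. Step 2: rank results.",
)

overview = store.list_dir("memories")        # first tier: summaries only
detail = store.read("memories/user/style")   # second tier: full content
```

An agent using a store like this would scan the summaries first and pull full bodies only for the paths that look relevant, which keeps the prompt small.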

learn-claude-code

Learn Claude Code is an educational project by shareAI Lab that aims to help users understand how modern AI agents work by building one from scratch. The repository provides original educational material on various topics such as the agent loop, tool design, explicit planning, context management, knowledge injection, task systems, parallel execution, team messaging, and autonomous teams. Users can follow a learning path through different versions of the project, each introducing new concepts and mechanisms. The repository also includes technical tutorials, articles, and example skills for users to explore and learn from. The project emphasizes the philosophy that the model is crucial in agent development, with code playing a supporting role.
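For readers unfamiliar with the term, an agent loop can be sketched roughly as follows. This is a generic illustration, not code from the repository: `fake_model` stands in for a real LLM call, and the `upper` tool and the message format are invented for the example.

```python
from typing import Callable

# Tools the agent may call; names and behaviour are made up for this sketch.
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM call: request one tool, then answer with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"type": "final", "content": f"Result: {last['content']}"}
    return {"type": "tool_call", "name": "upper", "input": last["content"]}


def run_agent(user_input: str, max_steps: int = 5) -> str:
    """Call the model repeatedly, executing tool requests until it gives a final answer."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if reply["type"] == "final":
            return reply["content"]
        # Run the requested tool and feed its output back to the model.
        output = TOOLS[reply["name"]](reply["input"])
        messages.append({"role": "tool", "content": output})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("hello")
```

The step limit is a simple guardrail so that a model that never produces a final answer cannot loop forever.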

20 - OpenAI GPTs

Blood pressure advice

Friendly guide on blood pressure, considering personal health context.

Seabiscuit Business Board Director Pro

Be Boardroom Brilliant: Specializes in corporate board design, recruitment, and operation, offering expert guidance on board composition, CEO performance monitoring, and risk management tailored to specific business needs and contexts. (v1.10)

Rentout

This GPT analyzes the information you provide and acts as an expert social media manager, creating blog posts and content for social media platforms.

Squarespace Specialist

Focused on practical Squarespace solutions, trained on over 32,361 pages of content.

OctoberCMS Assistant

Expert in OctoberCMS, using provided docs and source code for precise guidance.

Social Media Franzi | Post Creator in German 🇩🇪

Want to create Facebook posts that encourage your readers to connect with you? Then FB Franzi is just right for you!