RepoMaster

RepoMaster: The open-source AI agent that masters GitHub. It turns any code repository into a powerful tool, achieving a new level of autonomous task-solving. An open alternative to Claude-Code.

Stars: 167

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. Instead of writing code from scratch, it finds suitable GitHub repositories for a task and makes them work together. Users describe a task in natural language; RepoMaster analyzes the request, discovers candidate repositories, and executes them to produce the result. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general programming assistance, and repository tasks.

README:

- 2025.08.28 🎉 We open-sourced RepoMaster — an AI agent that leverages GitHub repos to solve complex real-world tasks.

- 2025.08.26 🎉 We open-sourced GitTaskBench — a repo-level benchmark & tooling suite for real-world tasks.

- 2025.08.10 🎉 We open-sourced SE-Agent — a self-evolution trajectory framework for multi-step reasoning.

🔗 Ecosystem: RepoMaster · GitTaskBench · SE-Agent · Team Homepage

RepoMaster transforms how you solve coding tasks by automatically finding the right GitHub tools and making them work together seamlessly. Just describe what you want, and watch as open source repositories become your intelligent assistants.

💬 Describe Task → 🧠 AI Analysis → 🔍 Auto Discovery → ⚡ Smart Execution → ✅ Perfect Results

git clone https://github.com/QuantaAlpha/RepoMaster.git

cd RepoMaster

pip install -r requirements.txt

Copy the example configuration file and customize it with your API keys:

cp configs/env.example configs/.env

# Edit the configuration file with your preferred editor

nano configs/.env # or use vim, code, etc.

Required API Keys:

# Primary AI Provider (Required)

OPENAI_API_KEY=your_openai_api_key_here

OPENAI_MODEL=gpt-5

# External Services (Required for deep search functionality)

SERPER_API_KEY=your_serper_key # Google search integration

JINA_API_KEY=your_jina_key # Web content extraction

# Optional: Additional AI Providers

# ANTHROPIC_API_KEY=your_claude_key # Anthropic Claude support

# DEEPSEEK_API_KEY=your_deepseek_key # DeepSeek integration

# GEMINI_API_KEY=your_gemini_key # Google Gemini support

💡 Tip: The configs/env.example file contains all available configuration options with detailed comments.
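
Before launching, it can help to confirm that the required keys listed above are actually filled in. The following is a minimal sketch, not RepoMaster's own configuration loader: `parse_env` and `missing_keys` are hypothetical helpers, and the assumption that a value beginning with `your_` is an unedited placeholder comes only from the sample values shown above.

```python
from pathlib import Path

# Keys the README lists as required; the optional provider keys are left out.
REQUIRED_KEYS = ("OPENAI_API_KEY", "OPENAI_MODEL", "SERPER_API_KEY", "JINA_API_KEY")


def parse_env(text):
    """Parse KEY=value lines, skipping blank lines and full-line comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop a trailing inline comment such as "your_serper_key # Google search".
        # Assumption: real values never contain the sequence " #".
        value = value.split(" #", 1)[0].strip()
        values[key.strip()] = value
    return values


def missing_keys(values):
    """Return required keys that are absent, empty, or still placeholders."""
    return [
        key
        for key in REQUIRED_KEYS
        if not values.get(key) or values[key].startswith("your_")
    ]


if __name__ == "__main__":
    env_path = Path("configs/.env")
    if env_path.exists():
        print("Missing or placeholder keys:", missing_keys(parse_env(env_path.read_text())))
    else:
        print(f"{env_path} not found; copy configs/env.example first")
```

Run it from the repository root after editing configs/.env; an empty list means every required key has a non-placeholder value.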

Web Interface (Recommended for beginners):

python launcher.py --mode frontend

# Access the web dashboard at: http://localhost:8501

Command Line Interface (Recommended for advanced users):

python launcher.py --mode backend --backend-mode unified

# Provides intelligent multi-agent orchestration via terminal

Specialized Agent Access:

python launcher.py --mode backend --backend-mode deepsearch # Deep Search Agent

python launcher.py --mode backend --backend-mode general_assistant # Programming Assistant

python launcher.py --mode backend --backend-mode repository_agent # Repository Agent

📘 Need help? Check our comprehensive User Guide for advanced configuration, troubleshooting, and detailed usage examples.
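
The launch modes above can also be assembled from a script, for example to start a specific agent from another tool. This sketch uses only the flags and mode names shown above; `build_launch_command` is a hypothetical helper that is not part of RepoMaster, and rejecting a backend mode when the frontend is selected is an assumption of the sketch rather than documented launcher behavior.

```python
import sys

# Backend modes named in the README above; anything else is rejected up front.
BACKEND_MODES = ("unified", "deepsearch", "general_assistant", "repository_agent")


def build_launch_command(mode, backend_mode=None):
    """Return the argv list for launcher.py (hypothetical helper)."""
    if mode == "frontend":
        if backend_mode is not None:
            raise ValueError("--backend-mode only applies to --mode backend")
        return [sys.executable, "launcher.py", "--mode", "frontend"]
    if mode == "backend":
        if backend_mode not in BACKEND_MODES:
            raise ValueError(f"unknown backend mode: {backend_mode!r}")
        return [sys.executable, "launcher.py", "--mode", "backend",
                "--backend-mode", backend_mode]
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(" ".join(build_launch_command("backend", "deepsearch")))
```

To actually start the agent, the returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root.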

Simply describe your task in natural language. RepoMaster's AI automatically analyzes your request, intelligently routes to optimal solution paths, and orchestrates the perfect GitHub tools to bring your ideas to life.

| Task Description | RepoMaster Action | Result |
|---|---|---|
| "Help me scrape product prices from this webpage" | 🔍 Find scraping tools → 🔧 Auto-configure → ✅ Extract data | Structured CSV output |
| "Transform photo into Van Gogh style" | 🔍 Find style transfer repos → 🎨 Process images → ✅ Generate art | Artistic masterpiece |

From "Writing Code from Scratch" → To "Making Open Source Work"

🎬 Complete Execution Demo | 📺 YouTube Demo

https://github.com/user-attachments/assets/a21b2f2e-a31c-4afd-953d-d143beef781a

Complete process of RepoMaster autonomously executing a neural style transfer task

For advanced usage, configuration options, and troubleshooting, see our User Guide.

We believe in the power of community-driven innovation. Your contributions help make RepoMaster smarter, faster, and more capable.

- 🐛 Bug Reports: Help us identify and fix issues by reporting them.

- 💡 Feature Requests: Have a great idea? Suggest a new feature.

- 📖 Documentation: Improve clarity and examples by contributing to our documentation.

- 💻 Code Contributions: Ready to jump in? See our development setup to get started.

Quick Developer Environment Setup

# Fork and clone the repository

git clone https://github.com/your-username/RepoMaster.git

cd RepoMaster

# Install development dependencies

pip install -e ".[dev]"

# Set up pre-commit hooks for code quality

pre-commit install

# Run tests to ensure everything works

pytest tests/

# Start developing! 🚀

📋 New to open source? Check our Contributing Guidelines for detailed instructions and best practices.

This project is licensed under the MIT License - see the LICENSE file for details.

- 📧 Email: [email protected]

- 🐛 Issues: GitHub Issues

- 💬 Discussions: GitHub Discussions

- 📖 Documentation: Full Documentation

Special thanks to:

- AutoGen - Multi-agent framework

- OpenHands - Software engineering agents

- SWE-Agent - GitHub issue resolution

- MLE-Bench - ML engineering benchmarks

- QuantaAlpha was founded in April 2025 by a team of professors, postdocs, PhDs, and master's students from Tsinghua University, Peking University, CAS, CMU, HKUST, and more.

🌟 Our mission is to explore the "quantum" of intelligence and pioneer the "alpha" frontier of agent research — from CodeAgents to self-evolving intelligence, and further to financial and cross-domain specialized agents, we are committed to redefining the boundaries of AI.

✨ In 2025, we will continue to produce high-quality research in the following directions:

- CodeAgent: End-to-end autonomous execution of real-world tasks

- DeepResearch: Deep reasoning and retrieval-augmented intelligence

- Agentic Reasoning / Agentic RL: Agent-based reasoning and reinforcement learning

- Self-evolution and collaborative learning: Evolution and coordination of multi-agent systems

📢 We welcome students and researchers interested in these directions to join us!

🔗 Team Homepage: QuantaAlpha

If you find RepoMaster useful in your research, please cite our work:

@article{wang2025repomaster,

title={RepoMaster: Autonomous Exploration and Understanding of GitHub Repositories for Complex Task Solving},

author={Huacan Wang and Ziyi Ni and Shuo Zhang and Lu, Shuo and Sen Hu and Ziyang He and Chen Hu and Jiaye Lin and Yifu Guo and Ronghao Chen and Xin Li and Daxin Jiang and Yuntao Du and Pin Lyu},

journal={arXiv preprint arXiv:2505.21577},

year={2025},

doi={10.48550/arXiv.2505.21577},

url={https://arxiv.org/abs/2505.21577}

}

⭐ If RepoMaster helps you, please give us a star!

Made with ❤️ by the QuantaAlpha Team

Alternative AI tools for RepoMaster

Similar Open Source Tools

octocode-mcp

Octocode is a methodology and platform that empowers AI assistants with the skills of a Senior Staff Engineer. It transforms how AI interacts with code by moving from 'guessing' based on training data to 'knowing' based on deep, evidence-based research. The ecosystem includes the Manifest for Research Driven Development, the MCP Server for code interaction, Agent Skills for extending AI capabilities, a CLI for managing agent capabilities, and comprehensive documentation covering installation, core concepts, tutorials, and reference materials.

AgC

AgC is an open-core platform designed for deploying, running, and orchestrating AI agents at scale. It treats agents as first-class compute units, providing a modular, observable, cloud-neutral, and production-ready environment. Open Agentic Compute empowers developers and organizations to run agents like cloud-native workloads without lock-in.

parlant

Parlant is a structured approach to building and guiding customer-facing AI agents. It allows developers to create and manage robust AI agents, providing specific feedback on agent behavior and helping understand user intentions better. With features like guidelines, glossary, coherence checks, dynamic context, and guided tool use, Parlant offers control over agent responses and behavior. Developer-friendly aspects include instant changes, Git integration, clean architecture, and type safety. It enables confident deployment with scalability, effective debugging, and validation before deployment. Parlant works with major LLM providers and offers client SDKs for Python and TypeScript. The tool facilitates natural customer interactions through asynchronous communication and provides a chat UI for testing new behaviors before deployment.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

SAM

SAM is a native macOS AI assistant built with Swift and SwiftUI, designed for non-developers who want powerful tools in their everyday life. It provides real assistance, smart memory, voice control, image generation, and custom AI model training. SAM keeps your data on your Mac, supports multiple AI providers, and offers features for documents, creativity, writing, organization, learning, and more. It is privacy-focused, user-friendly, and accessible from various devices. SAM stands out with its privacy-first approach, intelligent memory, task execution capabilities, powerful tools, image generation features, custom AI model training, and flexible AI provider support.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

natively-cluely-ai-assistant

Natively is a free, open-source, privacy-first AI assistant designed to help users in real time during meetings, interviews, presentations, and conversations. Unlike traditional AI tools that work after the conversation, Natively operates while the conversation is happening. It runs as an invisible, always-on-top desktop overlay, listens when prompted, observes the screen content, and provides instant, context-aware assistance. The tool is fully transparent, customizable, and grants users complete control over local vs cloud AI, data, and credentials.

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. R2R is to LangChain/LlamaIndex what NextJS is to React. A JavaScript client for R2R deployments is also available. Key features:

- 🚀 Deploy: Instantly launch production-ready RAG pipelines with streaming capabilities.

- 🧩 Customize: Tailor your pipeline with intuitive configuration files.

- 🔌 Extend: Enhance your pipeline with custom code integrations.

- ⚖️ Autoscale: Scale your pipeline effortlessly in the cloud using SciPhi.

- 🤖 OSS: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

DreamLayer

DreamLayer AI is an open-source Stable Diffusion WebUI designed for AI researchers, labs, and developers. It automates prompts, seeds, and metrics for benchmarking models, datasets, and samplers, enabling reproducible evaluations across multiple seeds and configurations. The tool integrates custom metrics and evaluation pipelines, providing a streamlined workflow for AI research. With features like automated benchmarking, reproducibility, built-in metrics, multi-modal readiness, and researcher-friendly interface, DreamLayer AI aims to simplify and accelerate the model evaluation process.

aigne-doc-smith

AIGNE DocSmith is a powerful AI-driven documentation generation tool that automates the creation of detailed, structured, and multi-language documentation directly from source code. It intelligently analyzes codebase to generate a comprehensive document structure, populates content with high-quality AI-powered generation, supports seamless translation into 12+ languages, integrates with AIGNE Hub for large language models, offers Discuss Kit publishing, automatically updates documentation with source code changes, and allows for individual document optimization.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

AIPex

AIPex is a revolutionary Chrome extension that transforms your browser into an intelligent automation platform. Using natural language commands and AI-powered intelligence, AIPex can automate virtually any browser task - from complex multi-step workflows to simple repetitive actions. It offers features like natural language control, AI-powered intelligence, multi-step automation, universal compatibility, smart data extraction, precision actions, form automation, visual understanding, developer-friendly with extensive API, and lightning-fast execution of automation tasks.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

For similar tasks

burp-ai-agent

Burp AI Agent is an extension for Burp Suite that integrates AI into your security workflow. It provides 7 AI backends, 53+ MCP tools, and 62 vulnerability classes. Users can configure privacy modes, perform audit logging, and connect external AI agents via MCP. The tool allows passive and active AI scanners to find vulnerabilities while users focus on manual testing. It requires Burp Suite, Java 21, and at least one AI backend configured.

ai-driven-dev-community

AI Driven Dev Community is a repository aimed at helping developers become more efficient by utilizing AI tools in their daily coding tasks. It provides a collection of tools, prompts, snippets, and agents for developers to integrate AI into their workflow. The repository is regularly updated with new resources and focuses on best practices for using AI in development work. Users can find tools like Espanso, ChatGPT, GitHub Copilot, and VSCode recommended for enhancing their coding experience. Additionally, the repository offers guidance on customizing AI for developers, installing AI toolbox for software engineers, and contributing to the community through easy steps.

SuperCoder

SuperCoder is an open-source autonomous software development system that leverages advanced AI tools and agents to streamline and automate coding, testing, and deployment tasks, enhancing efficiency and reliability. It supports a variety of languages and frameworks for diverse development needs. Users can set up the environment variables, build and run the Go server, Asynq worker, and Postgres using Docker and Docker Compose. The project is under active development and may still have issues, but users can seek help and support from the Discord community or by creating new issues on GitHub.

shire

The Shire is an AI Coding Agent Language that facilitates communication between an LLM and control IDE for automated programming. It offers a straightforward approach to creating AI agents tailored to individual IDEs, enabling users to build customized AI-driven development environments. The concept of Shire originated from AutoDev, a subproject of UnitMesh, with DevIns as its precursor. The tool provides documentation and resources for implementing AI in software engineering projects.

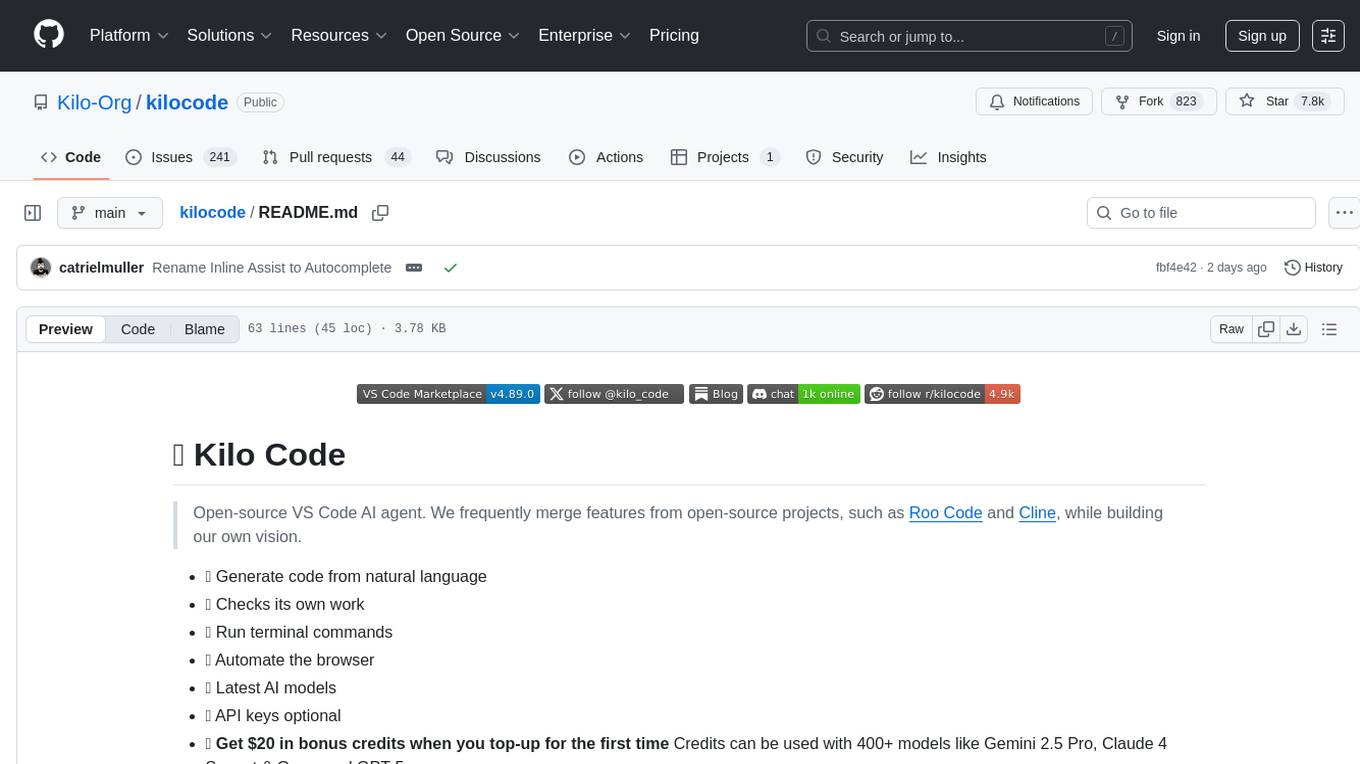

kilocode

Kilo Code is an open-source VS Code AI agent that allows users to generate code from natural language, check its own work, run terminal commands, automate the browser, and utilize the latest AI models. It offers features like task automation, automated refactoring, and integration with MCP servers. Users can access 400+ AI models and benefit from transparent pricing. Kilo Code is a fork of Roo Code and Cline, with improvements and unique features developed independently.

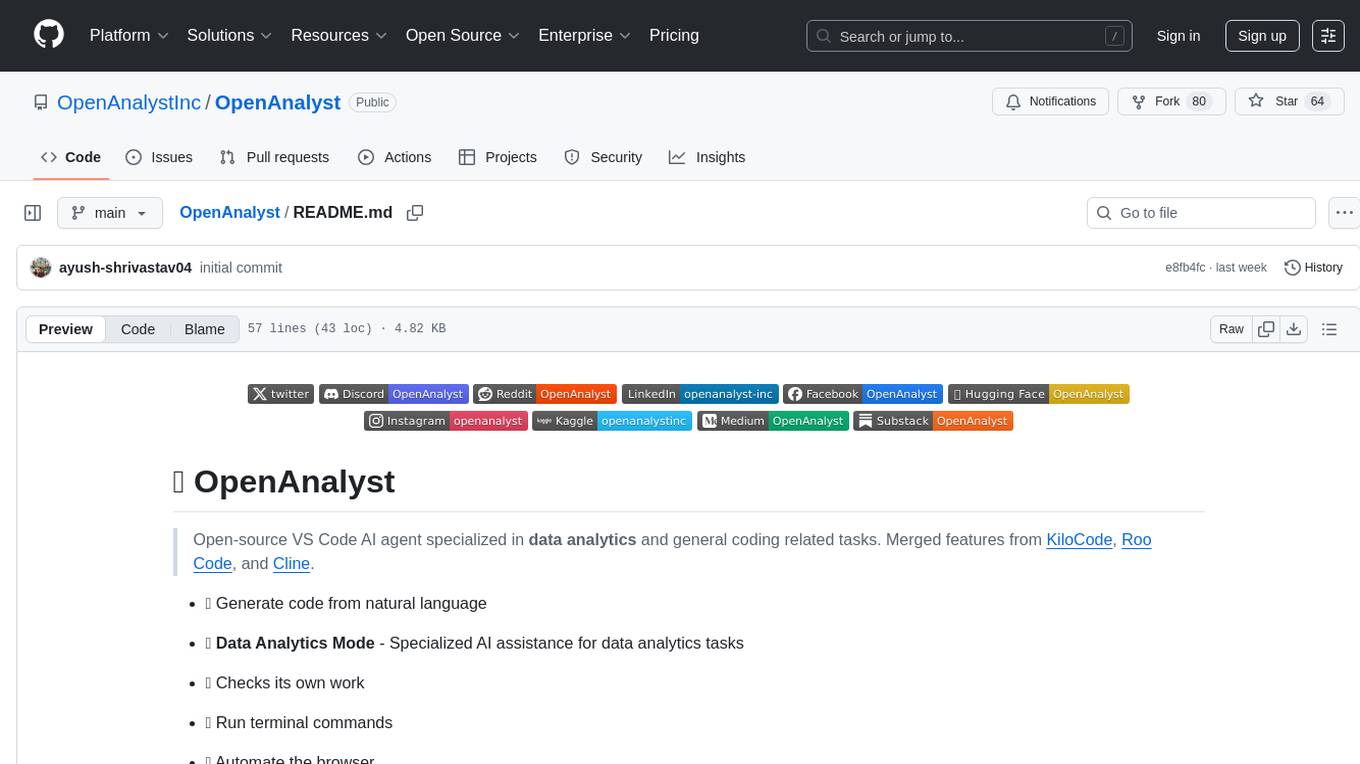

OpenAnalyst

OpenAnalyst is an open-source VS Code AI agent specialized in data analytics and general coding tasks. It merges features from KiloCode, Roo Code, and Cline, offering code generation from natural language, data analytics mode, self-checking, terminal command running, browser automation, latest AI models, and API keys option. It supports multi-mode operation for roles like Data Analyst, Code, Ask, and Debug. OpenAnalyst is a fork of KiloCode, combining the best features from Cline, Roo Code, and KiloCode, with enhancements like MCP Server Marketplace, automated refactoring, and support for latest AI models.

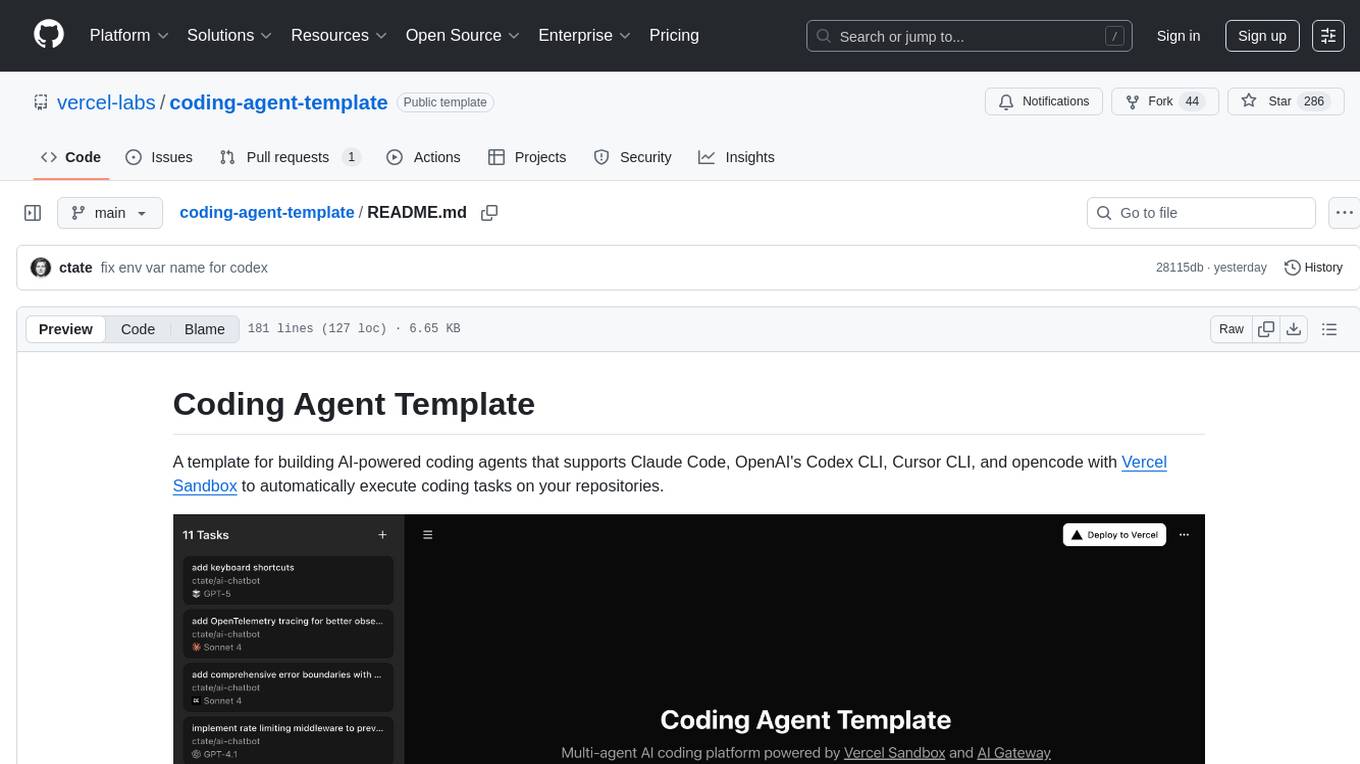

coding-agent-template

Coding Agent Template is a versatile tool for building AI-powered coding agents that support various coding tasks using Claude Code, OpenAI's Codex CLI, Cursor CLI, and opencode with Vercel Sandbox. It offers features like multi-agent support, Vercel Sandbox for secure code execution, AI Gateway integration, AI-generated branch names, task management, persistent storage, Git integration, and a modern UI built with Next.js and Tailwind CSS. Users can easily deploy their own version of the template to Vercel and set up the tool by cloning the repository, installing dependencies, configuring environment variables, setting up the database, and starting the development server. The tool simplifies the process of creating tasks, monitoring progress, reviewing results, and managing tasks, making it ideal for developers looking to automate coding tasks with AI agents.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.