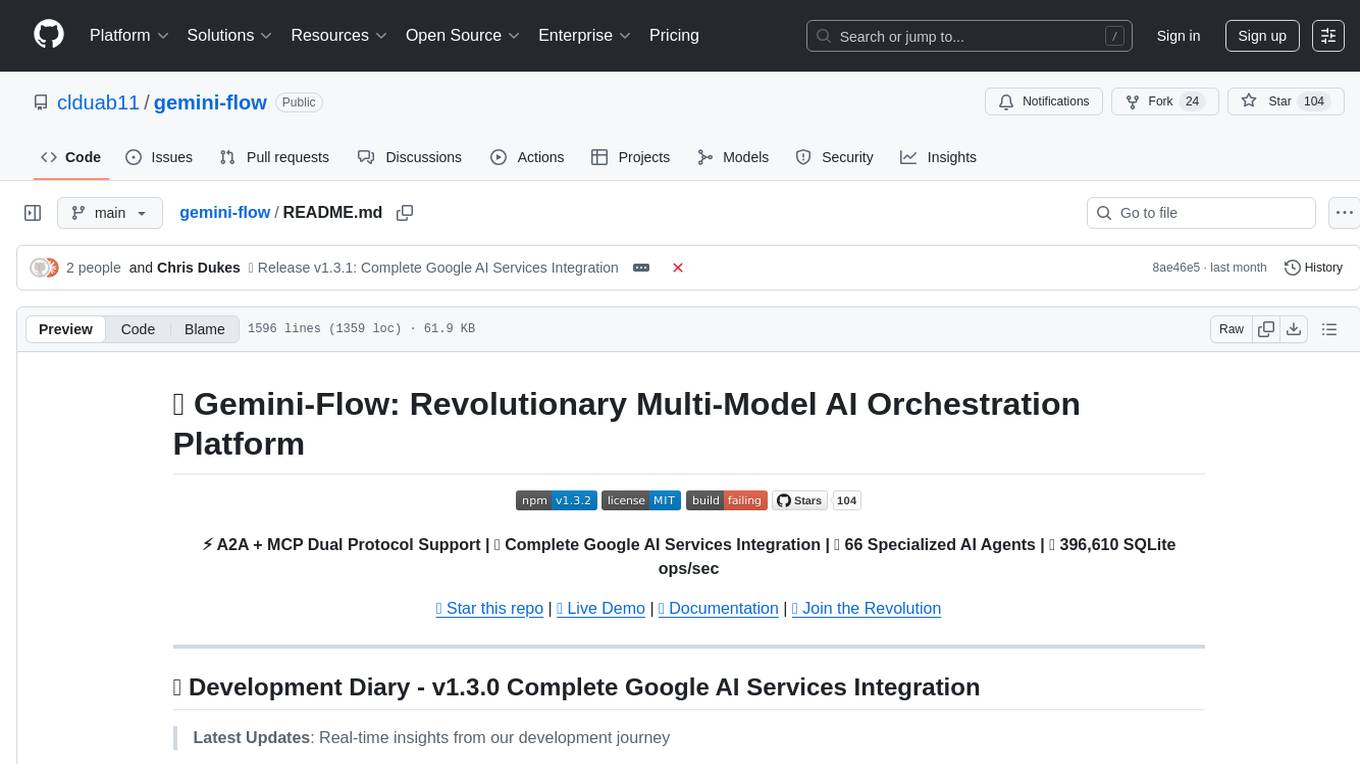

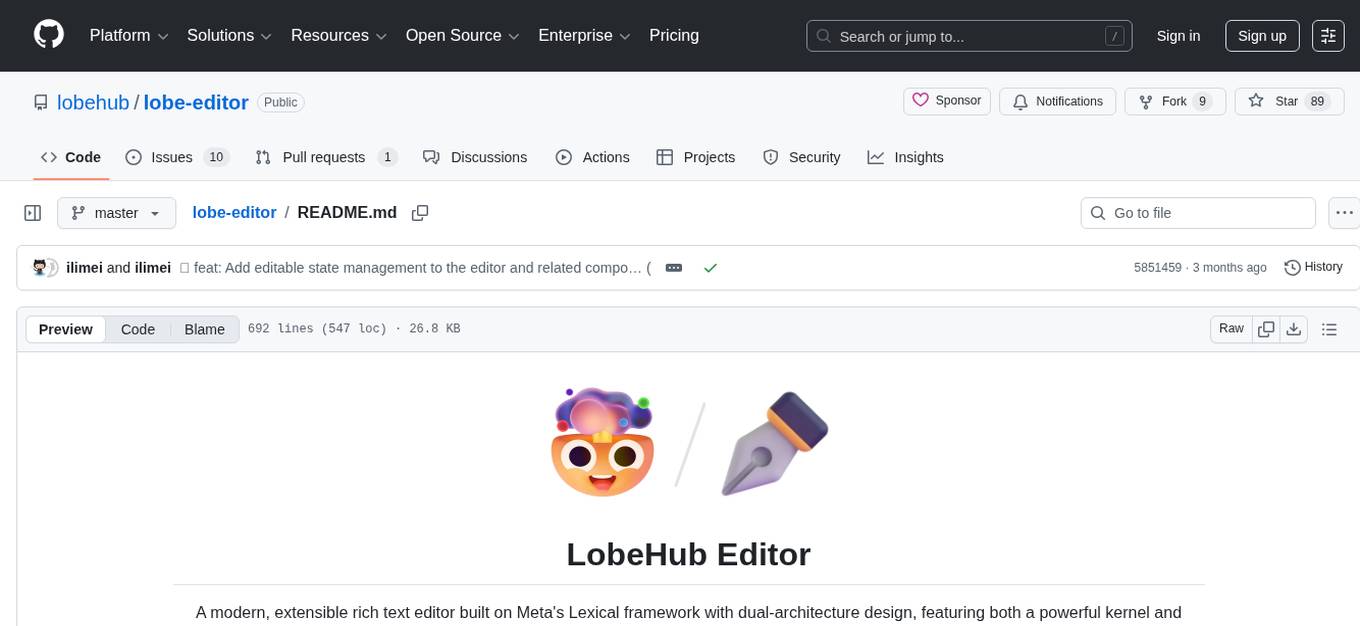

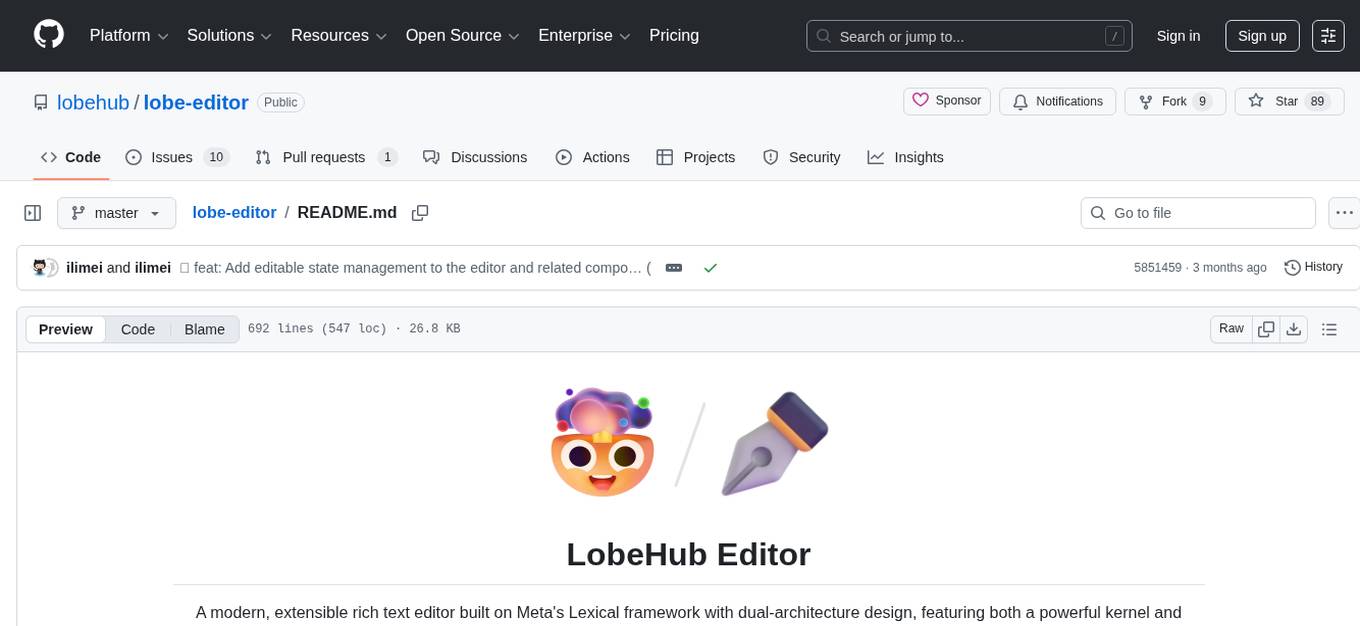

lobe-editor

✒️ Lobe Editor - a modern, extensible rich text editor built on Meta's Lexical framework with dual-architecture design, featuring both a powerful kernel and React integration. Optimized for AI applications and chat interfaces.

Stars: 89

LobeHub Editor is a modern, extensible rich text editor built on Meta's Lexical framework. Its dual architecture combines a kernel-based API with React components, and it is optimized for AI applications and chat interfaces, offering a rich plugin ecosystem, chat-ready input components, slash commands, multiple export formats, a customizable UI, file and media support, native TypeScript, and a modern build system.

README:



- 🎯 Dual Architecture - Both kernel-based API and React components for maximum flexibility

- ⚛️ React-First - Built for React 19+ with modern hooks and patterns

- 🔌 Rich Plugin Ecosystem - 10+ built-in plugins for comprehensive content editing

- 💬 Chat Interface Ready - Pre-built chat input components with mention support

- ⌨️ Slash Commands - Intuitive `/` and `@` triggered menus for quick content insertion

- 📝 Multiple Export Formats - JSON, Markdown, and plain text export capabilities

- 🎨 Customizable UI - Antd-styled components with flexible theming

- 🔗 File & Media Support - Native support for images, files, tables, and more

- 🎯 TypeScript Native - Built with TypeScript for excellent developer experience

- 📱 Modern Build System - Optimized with Vite, Dumi docs, and comprehensive testing

To install @lobehub/editor, run one of the following commands:

```bash
$ bun add @lobehub/editor
$ pnpm add @lobehub/editor
```

The simplest way to get started with a fully-featured editor:

```tsx
import {
  INSERT_HEADING_COMMAND,
  ReactCodeblockPlugin,
  ReactImagePlugin,
  ReactLinkPlugin,
  ReactListPlugin,
} from '@lobehub/editor';
import { Editor, useEditor } from '@lobehub/editor/react';

export default function MyEditor() {
  const editor = useEditor();

  return (
    <Editor
      placeholder="Start typing..."
      editor={editor}
      plugins={[ReactListPlugin, ReactLinkPlugin, ReactImagePlugin, ReactCodeblockPlugin]}
      slashOption={{
        items: [
          {
            key: 'h1',
            label: 'Heading 1',
            onSelect: (editor) => {
              editor.dispatchCommand(INSERT_HEADING_COMMAND, { tag: 'h1' });
            },
          },
          // More slash commands...
        ],
      }}
      onChange={(editor) => {
        // Handle content changes
        const markdown = editor.getDocument('markdown');
        const json = editor.getDocument('json');
      }}
    />
  );
}
```

Add more functionality with built-in plugins:

```tsx
import {
  INSERT_FILE_COMMAND,
  INSERT_MENTION_COMMAND,
  INSERT_TABLE_COMMAND,
  ReactFilePlugin,
  ReactHRPlugin,
  ReactTablePlugin,
} from '@lobehub/editor';
import { Editor, useEditor } from '@lobehub/editor/react';

export default function AdvancedEditor() {
  const editor = useEditor();

  return (
    <Editor
      editor={editor}
      plugins={[
        ReactTablePlugin,
        ReactHRPlugin,
        Editor.withProps(ReactFilePlugin, {
          handleUpload: async (file) => {
            // Handle file upload; `uploadFile` is a placeholder for your own upload function
            return { url: await uploadFile(file) };
          },
        }),
      ]}
      mentionOption={{
        // Filter by `search` in a real app; a static list is shown for brevity
        items: async (search) => [
          {
            key: 'user1',
            label: 'John Doe',
            onSelect: (editor) => {
              editor.dispatchCommand(INSERT_MENTION_COMMAND, {
                label: 'John Doe',
                extra: { userId: 1 },
              });
            },
          },
        ],
      }}
    />
  );
}
```

Pre-built component optimized for chat interfaces:

```tsx
import { ChatInput } from '@lobehub/editor/react';

export default function ChatApp() {
  return (
    <ChatInput
      placeholder="Type a message..."
      onSend={(content) => {
        // Handle message send
        console.log('Message:', content);
      }}
      enabledFeatures={['mention', 'upload', 'codeblock']}
    />
  );
}
```

For advanced use cases, access the underlying kernel directly:

```ts
import { IEditor, INSERT_HEADING_COMMAND, createEditor } from '@lobehub/editor';

// Create editor instance
const editor: IEditor = createEditor();

// Register plugins (SomePlugin is a placeholder for any plugin class)
editor.registerPlugin(SomePlugin, { config: 'value' });

// Interact with content
editor.setDocument('text', 'Hello world');
const content = editor.getDocument('json');

// Listen to events
editor.on('content-changed', (newContent) => {
  console.log('Content updated:', newContent);
});

// Execute commands
editor.dispatchCommand(INSERT_HEADING_COMMAND, { tag: 'h2' });
```
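`setDocument` and `getDocument` select a serialization format by name (`'text'`, `'json'`, `'markdown'`). The sketch below illustrates that idea as a registry of per-format data sources over a toy paragraph model. `Doc`, `DataSource` and `DocumentStore` are hypothetical names, not the library's internal classes, and unlike this sketch the real `getDocument('json')` may return an object rather than a string.

```ts
// Hypothetical document model: a list of paragraphs (not Lexical's real editor state).
type Doc = { paragraphs: string[] };

// Hypothetical data-source contract: convert between one format and the model.
interface DataSource {
  read(content: string): Doc;
  write(doc: Doc): string;
}

const textSource: DataSource = {
  read: (content) => ({ paragraphs: content.split('\n') }),
  write: (doc) => doc.paragraphs.join('\n'),
};

const jsonSource: DataSource = {
  read: (content) => JSON.parse(content) as Doc,
  write: (doc) => JSON.stringify(doc),
};

class DocumentStore {
  private doc: Doc = { paragraphs: [] };
  private sources = new Map<string, DataSource>();

  registerDataSource(type: string, source: DataSource): this {
    this.sources.set(type, source);
    return this;
  }

  private source(type: string): DataSource {
    const source = this.sources.get(type);
    if (!source) throw new Error(`No data source registered for "${type}"`);
    return source;
  }

  // Parse incoming content with the matching data source.
  setDocument(type: string, content: string): void {
    this.doc = this.source(type).read(content);
  }

  // Serialize the current model with the requested data source.
  getDocument(type: string): string {
    return this.source(type).write(this.doc);
  }
}

// Usage: write plain text, read it back as JSON.
const store = new DocumentStore()
  .registerDataSource('text', textSource)
  .registerDataSource('json', jsonSource);
store.setDocument('text', 'Hello world');
console.log(store.getDocument('json')); // {"paragraphs":["Hello world"]}
```

Keeping one model and many converters means adding a format (for example Markdown) only requires registering another data source.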

Core plugins:

| Plugin | Description | Features |
|---|---|---|
| CommonPlugin | Foundation editor components | ReactEditor, ReactEditorContent, ReactPlainText, base utilities |
| MarkdownPlugin | Markdown processing engine | Shortcuts, transformers, serialization, custom writers |
| UploadPlugin | File upload management system | Priority handlers, drag-drop, multi-source uploads |
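The UploadPlugin row mentions priority handlers. One common way to realize that is to keep handlers sorted by priority and delegate each file to the first one that accepts it, as sketched below. `UploadFile`, `UploadHandler`, `UploadRegistry` and the example URLs are hypothetical; the plugin's actual upload service may use a different shape.

```ts
// Minimal stand-in for a browser File, so the sketch runs outside the DOM.
interface UploadFile {
  name: string;
  type: string; // MIME type, e.g. "image/png"
  size: number;
}

interface UploadResult {
  url: string;
}

// Hypothetical handler shape: a priority, a filter, and an async upload.
interface UploadHandler {
  priority: number;
  canHandle(file: UploadFile): boolean;
  upload(file: UploadFile): Promise<UploadResult>;
}

class UploadRegistry {
  private handlers: UploadHandler[] = [];

  register(handler: UploadHandler): void {
    this.handlers.push(handler);
    // Keep the list ordered from highest to lowest priority.
    this.handlers.sort((a, b) => b.priority - a.priority);
  }

  // Delegate to the highest-priority handler that accepts the file.
  async upload(file: UploadFile): Promise<UploadResult> {
    const handler = this.handlers.find((h) => h.canHandle(file));
    if (!handler) throw new Error(`No upload handler for ${file.name}`);
    return handler.upload(file);
  }
}

// Usage: a generic fallback plus a higher-priority image handler.
const registry = new UploadRegistry();
registry.register({
  priority: 0,
  canHandle: () => true,
  upload: async (file) => ({ url: `https://files.example.com/${file.name}` }),
});
registry.register({
  priority: 10,
  canHandle: (file) => file.type.startsWith('image/'),
  upload: async (file) => ({ url: `https://images.example.com/${file.name}` }),
});

registry
  .upload({ name: 'cat.png', type: 'image/png', size: 1024 })
  .then((result) => console.log(result.url)); // https://images.example.com/cat.png
```

Sorting on registration keeps `upload` a simple linear scan, which is cheap for the handful of handlers an editor typically registers.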

React plugins:

| Plugin | Description | Features |
|---|---|---|
| ReactSlashPlugin | Slash command menu system | `/` and `@` triggered menus, customizable items, async search |
| ReactMentionPlugin | User mention support | @username mentions, custom markdown output, async user search |
| ReactImagePlugin | Image handling | Upload, display, drag & drop, captions, resizing |
| ReactCodeblockPlugin | Code syntax highlighting | Shiki-powered, 100+ languages, custom themes, color schemes |
| ReactListPlugin | List management | Ordered/unordered lists, nested lists, keyboard shortcuts |
| ReactLinkPlugin | Link management | Auto-detection, validation, previews, custom styling |
| ReactTablePlugin | Table support | Insert tables, edit cells, add/remove rows/columns, i18n |
| ReactHRPlugin | Horizontal rules | Divider insertion, custom styling, markdown shortcuts |
| ReactFilePlugin | File attachments | File upload, status tracking, validation, drag-drop |
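The slash menu narrows its items as the user types. The sketch below shows one simple fuzzy strategy, an in-order subsequence match ranked by the number of skipped characters, over items with the same `key`/`label` fields as the `slashOption` example earlier. `SlashItem`, `fuzzyScore` and `filterSlashItems` are hypothetical, and the library's actual ranking may differ.

```ts
interface SlashItem {
  key: string;
  label: string;
}

// Returns a score if every character of `query` appears in `text` in order
// (case-insensitive), or null otherwise. Lower is better: the score counts
// characters skipped between consecutive matches.
function fuzzyScore(query: string, text: string): number | null {
  const q = query.toLowerCase();
  const t = text.toLowerCase();
  let score = 0;
  let from = 0;
  for (let i = 0; i < q.length; i++) {
    const found = t.indexOf(q.charAt(i), from);
    if (found === -1) return null;
    score += found - from; // characters skipped since the previous match
    from = found + 1;
  }
  return score;
}

// Keep matching items, best score first (stable sort keeps original order on ties).
function filterSlashItems(query: string, items: SlashItem[]): SlashItem[] {
  return items
    .map((item) => ({ item, score: fuzzyScore(query, item.label) }))
    .filter((entry): entry is { item: SlashItem; score: number } => entry.score !== null)
    .sort((a, b) => a.score - b.score)
    .map((entry) => entry.item);
}

const items: SlashItem[] = [
  { key: 'h1', label: 'Heading 1' },
  { key: 'h2', label: 'Heading 2' },
  { key: 'table', label: 'Table' },
  { key: 'hr', label: 'Horizontal rule' },
];

console.log(filterSlashItems('hd2', items).map((i) => i.key)); // [ 'h2' ]
```

An empty query matches every item with score 0, so the full menu shows in its original order before the user types.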

All plugins follow a dual-architecture design.

Kernel layer:

- Plugin Interface: Standardized plugin system with lifecycle management

- Service Container: Centralized service registration and dependency injection

- Command System: Event-driven command pattern for editor operations

- Node System: Custom node types with serialization and transformation

- Data Sources: Content management and format conversion (JSON, Markdown, Text)

React layer:

- React Components: High-level components for easy integration

- Hook Integration: Custom hooks for editor state and functionality

- Event Handling: React-friendly event system and callbacks

- UI Components: Pre-built UI elements with theming support
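A service container of this kind can be modeled as a map from typed IDs to instances; the `IEditor` interface in this README exposes lookups as `requireService<T>(serviceId: ServiceID<T>): T | null`. The sketch below is a minimal illustration, assuming string-named IDs that carry the service type only at compile time. `createServiceID`, `ServiceContainer` and `MarkdownShortcutService` are hypothetical names, and the real container may differ.

```ts
// A typed token: the phantom `T` ties an id to the service type it resolves to.
interface ServiceID<T> {
  readonly name: string;
  readonly __type?: T; // never set at runtime; only carries the type
}

function createServiceID<T>(name: string): ServiceID<T> {
  return { name };
}

class ServiceContainer {
  private services = new Map<string, unknown>();

  registerService<T>(id: ServiceID<T>, service: T): void {
    if (this.services.has(id.name)) {
      throw new Error(`Service "${id.name}" is already registered`);
    }
    this.services.set(id.name, service);
  }

  // Same shape as the documented lookup: typed result, or null when absent.
  requireService<T>(id: ServiceID<T>): T | null {
    return this.services.has(id.name) ? (this.services.get(id.name) as T) : null;
  }
}

// Hypothetical service that one plugin provides and another consumes.
interface MarkdownShortcutService {
  registerShortcut(trigger: string, tag: string): void;
  shortcuts(): Map<string, string>;
}

const IMarkdownShortcutService = createServiceID<MarkdownShortcutService>('markdown-shortcut');

const container = new ServiceContainer();
const table = new Map<string, string>();
container.registerService(IMarkdownShortcutService, {
  registerShortcut: (trigger, tag) => void table.set(trigger, tag),
  shortcuts: () => table,
});

// A consumer looks the service up by id; optional chaining handles a missing provider.
container.requireService(IMarkdownShortcutService)?.registerShortcut('## ', 'h2');
console.log(table.get('## ')); // h2
```

Returning `null` instead of throwing lets optional integrations degrade gracefully when the providing plugin is not registered.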

- ✅ Fully configurable with TypeScript-typed options

- ✅ Composable - use any combination together

- ✅ Extensible - create custom plugins using the same API

- ✅ Event-driven - react to user interactions and content changes

- ✅ Service-oriented - modular architecture with dependency injection

- ✅ Internationalization - Built-in i18n support where applicable

- ✅ Markdown integration - Shortcuts, import/export, custom transformers

- ✅ Theme system - Customizable styling and appearance

- ✅ Command pattern - Programmatic control and automation

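```tsx
import { ReactFilePlugin } from '@lobehub/editor';
import { Editor, useEditor } from '@lobehub/editor/react';

// Get editor instance (call inside a React function component)
const editor = useEditor();

// Helper for plugin configuration
const PluginWithConfig = Editor.withProps(ReactFilePlugin, {
  handleUpload: async (file) => ({ url: 'uploaded-url' }),
});
```

Create a new editor kernel instance: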

```ts
import { createEditor } from '@lobehub/editor';

const editor = createEditor();
```

Core editor methods:

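```ts
// Signature summary; referenced types (Constructor, Plugin, LexicalCommand,
// LexicalEditor, ServiceID) are not shown here.
interface IEditor {
  // Content management
  setDocument(type: string, content: any): void;
  getDocument(type: string): any;

  // Plugin system
  registerPlugin<T>(plugin: Constructor<T>, config?: T): IEditor;
  registerPlugins(plugins: Plugin[]): IEditor;

  // Commands
  dispatchCommand<T>(command: LexicalCommand<T>, payload: T): boolean;

  // Events
  on<T>(event: string, listener: (data: T) => void): this;
  off<T>(event: string, listener: (data: T) => void): this;

  // Lifecycle
  focus(): void;
  blur(): void;
  destroy(): void;

  // Access
  getLexicalEditor(): LexicalEditor | null;
  getRootElement(): HTMLElement | null;
  requireService<T>(serviceId: ServiceID<T>): T | null;
}
```

Custom plugins implement `IEditorPlugin` and register their nodes and commands with the kernel in `initialize`:

```ts
import { IEditorKernel, IEditorPlugin } from '@lobehub/editor';

// MyPluginConfig, MyCustomNode and MY_COMMAND are placeholders for your own definitions.
class MyCustomPlugin implements IEditorPlugin {
  constructor(private config: MyPluginConfig) {}

  initialize(kernel: IEditorKernel) {
    // Register nodes, commands, transforms, etc.
    kernel.registerNode(MyCustomNode);
    kernel.registerCommand(MY_COMMAND, this.handleCommand);
  }

  // Arrow property keeps `this` bound when passed as a callback
  // (assumes a Lexical-style listener that returns true when handled).
  private handleCommand = (payload: unknown): boolean => {
    // Handle MY_COMMAND, e.g. using this.config
    return true;
  };

  destroy() {
    // Cleanup
  }
}
```

Common commands you can dispatch: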

```ts
// Content insertion
INSERT_HEADING_COMMAND; // { tag: 'h1' | 'h2' | 'h3' }
INSERT_LINK_COMMAND; // { url: string, text?: string }
INSERT_IMAGE_COMMAND; // { src: string, alt?: string }
INSERT_TABLE_COMMAND; // { rows: number, columns: number }
INSERT_MENTION_COMMAND; // { label: string, extra?: any }
INSERT_FILE_COMMAND; // { file: File }
INSERT_HORIZONTAL_RULE_COMMAND;

// Text formatting
FORMAT_TEXT_COMMAND; // { format: 'bold' | 'italic' | 'underline' }
CLEAR_FORMAT_COMMAND;
```
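These commands follow Lexical's command pattern: a typed command object is dispatched with a payload, registered listeners run from highest to lowest priority, and dispatch returns `true` once a listener reports the command as handled. The self-contained sketch below models that flow without Lexical; `createCommand`, `CommandBus` and the numeric priorities are simplified stand-ins, not the Lexical or lobe-editor API.

```ts
// A command is a typed token; `T` is the payload type (phantom, compile-time only).
interface Command<T> {
  readonly type: string;
  readonly __payload?: T;
}

function createCommand<T>(type: string): Command<T> {
  return { type };
}

// A listener returns true when it handled the command (stops propagation).
type Listener<T> = (payload: T) => boolean;

class CommandBus {
  private listeners = new Map<Command<unknown>, Array<{ listener: Listener<unknown>; priority: number }>>();

  // Registers a listener and returns a function that unregisters it.
  registerCommand<T>(command: Command<T>, listener: Listener<T>, priority = 0): () => void {
    const entry = { listener: listener as Listener<unknown>, priority };
    const list = this.listeners.get(command) ?? [];
    list.push(entry);
    list.sort((a, b) => b.priority - a.priority); // highest priority first
    this.listeners.set(command, list);
    return () => {
      const current = this.listeners.get(command) ?? [];
      const index = current.indexOf(entry);
      if (index !== -1) current.splice(index, 1);
    };
  }

  // Calls listeners from highest to lowest priority until one returns true.
  dispatchCommand<T>(command: Command<T>, payload: T): boolean {
    for (const { listener } of this.listeners.get(command) ?? []) {
      if (listener(payload)) return true;
    }
    return false;
  }
}

// Hypothetical command whose payload mirrors the INSERT_HEADING_COMMAND comment above.
const INSERT_HEADING = createCommand<{ tag: 'h1' | 'h2' | 'h3' }>('INSERT_HEADING');

const bus = new CommandBus();
const inserted: string[] = [];
bus.registerCommand(INSERT_HEADING, ({ tag }) => {
  inserted.push(tag);
  return true; // handled
});

console.log(bus.dispatchCommand(INSERT_HEADING, { tag: 'h2' })); // true
console.log(inserted); // [ 'h2' ]
```

Typing the payload on the command object is what lets `dispatchCommand(INSERT_HEADING, { tag: 'h4' })` fail at compile time rather than at runtime.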

You can use GitHub Codespaces for online development.

Or clone it for local development:

```bash
$ git clone https://github.com/lobehub/lobe-editor.git
$ cd lobe-editor
$ pnpm install
$ pnpm run dev
```

This will start the Dumi documentation server with a live playground at http://localhost:8000.

| Script | Description |
|---|---|
| `pnpm dev` | Start Dumi development server with playground |
| `pnpm build` | Build library and generate type definitions |
| `pnpm test` | Run tests with Vitest |
| `pnpm test:coverage` | Run tests with coverage report |
| `pnpm lint` | Lint and fix code with ESLint |
| `pnpm type-check` | Type check with TypeScript |
| `pnpm ci` | Run all CI checks (lint, type-check, test) |
| `pnpm docs:build` | Build documentation for production |
| `pnpm release` | Publish new version with semantic-release |

LobeHub Editor includes comprehensive debug logging that can be controlled via environment variables:

```bash
# Enable all LobeHub Editor debug output
DEBUG=lobe-editor:*

# Enable only important logs (recommended for development)
DEBUG=lobe-editor:*:info,lobe-editor:*:warn,lobe-editor:*:error

# Enable specific components
DEBUG=lobe-editor:kernel,lobe-editor:plugin:*
```

| Category | Description | Example |
|---|---|---|
| `kernel` | Core editor functionality | `DEBUG=lobe-editor:kernel` |
| `plugin:*` | All plugins | `DEBUG=lobe-editor:plugin:*` |
| `plugin:slash` | Slash commands | `DEBUG=lobe-editor:plugin:slash` |
| `plugin:mention` | Mention system | `DEBUG=lobe-editor:plugin:mention` |
| `plugin:image` | Image handling | `DEBUG=lobe-editor:plugin:image` |
| `plugin:file` | File operations | `DEBUG=lobe-editor:plugin:file` |
| `service:*` | All services | `DEBUG=lobe-editor:service:*` |
| `service:upload` | Upload service | `DEBUG=lobe-editor:service:upload` |
| `service:markdown` | Markdown processing | `DEBUG=lobe-editor:service:markdown` |

| Level | Browser Display | Usage | Environment Variable |
|---|---|---|---|
| `debug` | `console.log` (gray) | Detailed tracing | `DEBUG=lobe-editor:*:debug` |
| `info` | `console.log` (blue) | General information | `DEBUG=lobe-editor:*:info` |
| `warn` | `console.warn` (yellow) | Warnings | `DEBUG=lobe-editor:*:warn` |
| `error` | `console.error` (red) | Errors | `DEBUG=lobe-editor:*:error` |
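The `DEBUG` values in these tables are namespace patterns. The sketch below shows how such patterns are commonly evaluated, following the conventions of the widely used `debug` npm package (comma- or space-separated patterns, `*` as a wildcard, a leading `-` to exclude). Whether lobe-editor's logger uses that package, and the exact namespace strings it emits, are assumptions here; `patternToRegExp` and `isEnabled` are hypothetical helpers.

```ts
// Compile one DEBUG pattern (e.g. "lobe-editor:plugin:*") into an anchored RegExp.
function patternToRegExp(pattern: string): RegExp {
  const escaped = pattern
    .replace(/[.+?^${}()|[\]\\]/g, '\\$&') // escape regex metacharacters except '*'
    .replace(/\*/g, '.*?'); // '*' matches any run of characters, including ':'
  return new RegExp(`^${escaped}$`);
}

// True if `namespace` is enabled by the DEBUG value `debugEnv`.
function isEnabled(namespace: string, debugEnv: string): boolean {
  const patterns = debugEnv.split(/[\s,]+/).filter(Boolean);
  // Exclusions (prefixed with '-') win over inclusions.
  for (const p of patterns) {
    if (p.startsWith('-') && patternToRegExp(p.slice(1)).test(namespace)) return false;
  }
  return patterns.some((p) => !p.startsWith('-') && patternToRegExp(p).test(namespace));
}

// Hypothetical namespaces of the form category[:sub]:level.
const env = 'lobe-editor:*:error,lobe-editor:*:warn';
console.log(isEnabled('lobe-editor:plugin:slash:error', env)); // true
console.log(isEnabled('lobe-editor:plugin:slash:debug', env)); // false
```

Because `*` also matches `:`, a single wildcard such as `lobe-editor:*:error` covers errors from every category and sub-category.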

Common debug configurations:

```bash
# Full debug during development
DEBUG=lobe-editor:*

# Only critical logs
DEBUG=lobe-editor:*:error,lobe-editor:*:warn

# Plugin debugging
DEBUG=lobe-editor:plugin:*

# Service debugging
DEBUG=lobe-editor:service:*
```

Project structure:

```text
lobe-editor/
├── src/
│   ├── editor-kernel/             # 🧠 Core editor logic
│   │   ├── kernel.ts              # Main editor class with plugin system
│   │   ├── data-source.ts         # Content management (JSON/Markdown/Text)
│   │   ├── service.ts             # Service container and dependency injection
│   │   ├── plugin/                # Plugin base classes and interfaces
│   │   ├── react/                 # React integration layer
│   │   └── types.ts               # TypeScript interfaces
│   │
│   ├── plugins/                   # 🔌 Feature plugins
│   │   ├── common/                # 🏗️ Foundation components
│   │   │   ├── plugin/            # Base editor plugin
│   │   │   ├── react/             # ReactEditor, ReactEditorContent, ReactPlainText
│   │   │   ├── data-source/       # Content data sources
│   │   │   └── utils/             # Common utilities
│   │   │
│   │   ├── markdown/              # 📝 Markdown processing engine
│   │   │   ├── plugin/            # Markdown transformation plugin
│   │   │   ├── service/           # Markdown shortcut service
│   │   │   ├── data-source/       # Markdown serialization
│   │   │   └── utils/             # Transformer utilities
│   │   │
│   │   ├── upload/                # 📤 Upload management system
│   │   │   ├── plugin/            # Upload handling plugin
│   │   │   ├── service/           # Upload service with priority system
│   │   │   └── utils/             # Upload utilities
│   │   │
│   │   ├── slash/                 # ⚡ Slash commands (/, @)
│   │   │   ├── plugin/            # Slash detection plugin
│   │   │   ├── react/             # ReactSlashPlugin, ReactSlashOption
│   │   │   ├── service/           # Slash service with fuzzy search
│   │   │   └── utils/             # Search and trigger utilities
│   │   │
│   │   ├── mention/               # 👤 @mention system
│   │   │   ├── plugin/            # Mention plugin with decorators
│   │   │   ├── react/             # ReactMentionPlugin
│   │   │   ├── command/           # INSERT_MENTION_COMMAND
│   │   │   └── node/              # MentionNode with serialization
│   │   │
│   │   ├── codeblock/             # 🎨 Syntax highlighting
│   │   │   ├── plugin/            # Codeblock plugin with Shiki
│   │   │   ├── react/             # ReactCodeblockPlugin
│   │   │   ├── command/           # Language and color commands
│   │   │   └── utils/             # Language detection
│   │   │
│   │   ├── image/                 # 🖼️ Image upload & display
│   │   │   ├── plugin/            # Image plugin with captions
│   │   │   ├── react/             # ReactImagePlugin
│   │   │   ├── command/           # INSERT_IMAGE_COMMAND
│   │   │   └── node/              # BaseImageNode, ImageNode
│   │   │
│   │   ├── table/                 # 📊 Table support
│   │   │   ├── plugin/            # Table plugin with i18n
│   │   │   ├── react/             # ReactTablePlugin
│   │   │   ├── command/           # Table manipulation commands
│   │   │   ├── node/              # Enhanced TableNode
│   │   │   └── utils/             # Table operations
│   │   │
│   │   ├── file/                  # 📎 File attachments
│   │   │   ├── plugin/            # File plugin with status tracking
│   │   │   ├── react/             # ReactFilePlugin
│   │   │   ├── command/           # INSERT_FILE_COMMAND
│   │   │   ├── node/              # FileNode with metadata
│   │   │   └── utils/             # File operations
│   │   │
│   │   ├── link/                  # 🔗 Link management
│   │   │   ├── plugin/            # Link plugin with validation
│   │   │   ├── react/             # ReactLinkPlugin
│   │   │   ├── command/           # Link commands
│   │   │   └── utils/             # URL validation and detection
│   │   │
│   │   ├── list/                  # 📋 Lists (ordered/unordered)
│   │   │   ├── plugin/            # List plugin with nesting
│   │   │   ├── react/             # ReactListPlugin
│   │   │   ├── command/           # List manipulation commands
│   │   │   └── utils/             # List operations
│   │   │
│   │   └── hr/                    # ➖ Horizontal rules
│   │       ├── plugin/            # HR plugin with styling
│   │       ├── react/             # ReactHRPlugin
│   │       ├── command/           # HR insertion commands
│   │       └── node/              # HorizontalRuleNode
│   │
│   ├── react/                     # ⚛️ High-level React components
│   │   ├── Editor/                # Main Editor component with plugins
│   │   ├── ChatInput/             # Chat interface component
│   │   ├── ChatInputActions/      # Chat action buttons
│   │   ├── ChatInputActionBar/    # Action bar layout
│   │   ├── SendButton/            # Send button with states
│   │   └── CodeLanguageSelect/    # Code language selector
│   │
│   └── index.ts                   # Public API exports
│
├── docs/                          # 📚 Documentation source
├── tests/                         # 🧪 Test files
├── vitest.config.ts               # Test configuration
└── .dumi/                         # Dumi doc build cache
```

The architecture follows a dual-layer design:

- Kernel Layer (`editor-kernel/`) - Framework-agnostic core with plugin system

- React Layer (`react/` + `plugins/*/react/`) - React-specific implementations

Each plugin follows a consistent structure:

- `plugin/` - Core plugin logic and node definitions

- `react/` - React components and hooks (if applicable)

- `command/` - Editor commands and handlers

- `service/` - Services and business logic

- `node/` - Custom Lexical nodes

- `utils/` - Utility functions and helpers

This allows for maximum flexibility - you can use just the kernel for custom integrations, or the React components for rapid development.

Contributions of all types are more than welcome. If you are interested in contributing code, feel free to check out our GitHub Issues to get stuck in and show us what you're made of.

- 🤯 Lobe Chat - An open-source, extensible (Function Calling), high-performance chatbot framework. It supports one-click free deployment of your private ChatGPT/LLM web application.

- 🅰️ Lobe Theme - The modern theme for Stable Diffusion WebUI, with exquisite interface design, a highly customizable UI, and efficiency-boosting features.

- 🧸 Lobe Vidol - Experience the magic of virtual idol creation with Lobe Vidol, enjoy the elegance of our Exquisite UI Design, dance along using MMD Dance Support, and engage in Smooth Conversations.

- 🍭 Lobe UI - An open-source UI component library for building AIGC web apps.

- 🥨 Lobe Icons - Popular AI / LLM Model Brand SVG Logo and Icon Collection.

- 📊 Lobe Charts - Modern React chart components built on Recharts

- 🎤 Lobe TTS - A high-quality & reliable TTS/STT library for Server and Browser

- 🌏 Lobe i18n - An automation AI tool for the i18n (internationalization) translation process.


Alternative AI tools for lobe-editor

Similar Open Source Tools


DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

one

ONE is a modern web and AI agent development toolkit that empowers developers to build AI-powered applications with high performance, beautiful UI, AI integration, responsive design, type safety, and great developer experience. It is perfect for building modern web applications, from simple landing pages to complex AI-powered platforms.

DeepMCPAgent

DeepMCPAgent is a model-agnostic tool that enables the creation of LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It allows for dynamic discovery of tools, connection to remote MCP servers, and integration with any LangChain chat model instance. The tool provides a deep agent loop for enhanced functionality and supports typed tool arguments for validated calls. DeepMCPAgent emphasizes the importance of an MCP-first approach, where agents dynamically discover and call tools rather than hardcoding them.

morgana-form

MorGana Form is a full-stack form builder project developed using Next.js, React, TypeScript, Ant Design, PostgreSQL, and other technologies. It allows users to quickly create and collect data through survey forms. The project structure includes components, hooks, utilities, pages, constants, Redux store, themes, types, server-side code, and component packages. Environment variables are required for database settings, NextAuth login configuration, and file upload services. Additionally, the project integrates an AI model for form generation using the Ali Qianwen model API.

VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

Lynkr

Lynkr is a self-hosted proxy server that unlocks various AI coding tools like Claude Code CLI, Cursor IDE, and Codex CLI. It supports multiple LLM providers such as Databricks, AWS Bedrock, OpenRouter, Ollama, llama.cpp, Azure OpenAI, Azure Anthropic, OpenAI, and LM Studio. Lynkr offers cost reduction, local/private execution, remote or local connectivity, zero code changes, and enterprise-ready features. It is perfect for developers needing provider flexibility, cost control, self-hosted AI with observability, local model execution, and cost reduction strategies.

mcp-debugger

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP). The tool supports multi-language debugging with clean adapter patterns, including Python debugging via debugpy, JavaScript (Node.js) debugging via js-debug, and Rust debugging via CodeLLDB. It offers features like mock adapter for testing, STDIO and SSE transport modes, zero-runtime dependencies, Docker and npm packages for deployment, structured JSON responses for easy parsing, path validation to prevent crashes, and AI-aware line context for intelligent breakpoint placement with code context.

Callytics

Callytics is an advanced call analytics solution that leverages speech recognition and large language model (LLM) technologies to analyze phone conversations from customer service and call centers. By processing both the audio and text of each call, it provides insights such as sentiment analysis, topic detection, conflict detection, profanity word detection, and summarization. These cutting-edge techniques help businesses optimize customer interactions, identify areas for improvement, and enhance overall service quality. When an audio file is placed in the .data/input directory, the entire pipeline automatically starts running, and the resulting data is inserted into the database. This is only version 1.1.0; many new features will be added, models will be fine-tuned or trained from scratch, and various optimization efforts will be applied.

hia

HIA (Health Insights Agent) is an AI agent designed to analyze blood reports and provide personalized health insights. It features an intelligent agent-based architecture with multi-model cascade system, in-context learning, PDF upload and text extraction, secure user authentication, session history tracking, and a modern UI. The tech stack includes Streamlit for frontend, Groq for AI integration, Supabase for database, PDFPlumber for PDF processing, and Supabase Auth for authentication. The project structure includes components for authentication, UI, configuration, services, agents, and utilities. Contributions are welcome, and the project is licensed under MIT.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

multi-agent-shogun

multi-agent-shogun is a system that runs multiple AI coding CLI instances simultaneously, orchestrating them like a feudal Japanese army. It supports Claude Code, OpenAI Codex, GitHub Copilot, and Kimi Code. The system allows you to command your AI army with zero coordination cost, enabling parallel execution, non-blocking workflow, cross-session memory, event-driven communication, and full transparency. It also features skills discovery, phone notifications, pane border task display, shout mode, and multi-CLI support.

kubectl-mcp-server

Control your entire Kubernetes infrastructure through natural language conversations with AI. Talk to your clusters like you talk to a DevOps expert. Debug crashed pods, optimize costs, deploy applications, audit security, manage Helm charts, and visualize dashboards—all through natural language. The tool provides 253 powerful tools, 8 workflow prompts, 8 data resources, and works with all major AI assistants. It offers AI-powered diagnostics, built-in cost optimization, enterprise-ready features, zero learning curve, universal compatibility, visual insights, and production-grade deployment options. From debugging crashed pods to optimizing cluster costs, kubectl-mcp-server is your AI-powered DevOps companion.

For similar tasks

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

ragstack-ai

RAGStack is an out-of-the-box solution simplifying Retrieval Augmented Generation (RAG) in GenAI apps. RAGStack includes the best open-source tools for implementing RAG, giving developers a comprehensive Gen AI Stack leveraging LangChain, CassIO, and more. RAGStack leverages the LangChain ecosystem and is fully compatible with LangSmith for monitoring your AI deployments.

breadboard

Breadboard is a library for prototyping generative AI applications. It is inspired by the hardware maker community and their boundless creativity. Breadboard makes it easy to wire prototypes and share, remix, reuse, and compose them. The library emphasizes ease and flexibility of wiring, as well as modularity and composability.

cloudflare-ai-web

Cloudflare-ai-web is a lightweight and easy-to-use tool that allows you to quickly deploy a multi-modal AI platform using Cloudflare Workers AI. It supports serverless deployment, password protection, and local storage of chat logs. With a size of only ~638 kB gzip, it is a great option for building AI-powered applications without the need for a dedicated server.

app-builder

AppBuilder SDK is a one-stop development tool for AI native applications, providing basic cloud resources, AI capability engine, Qianfan large model, and related capability components to improve the development efficiency of AI native applications.

cookbook

This repository contains community-driven practical examples of building AI applications and solving various tasks with AI using open-source tools and models. Everyone is welcome to contribute, and we value everybody's contribution! There are several ways you can contribute to the Open-Source AI Cookbook: Submit an idea for a desired example/guide via GitHub Issues. Contribute a new notebook with a practical example. Improve existing examples by fixing issues/typos. Before contributing, check currently open issues and pull requests to avoid working on something that someone else is already working on.

For similar jobs

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

ChatGPT-On-CS

ChatGPT-On-CS is an intelligent chatbot tool based on large language models that supports platforms such as WeChat, Taobao, Bilibili, Douyin, and Weibo. It handles text, voice, and image inputs, accesses external resources through platform-specific plugin systems, and can be customized into enterprise AI applications built on proprietary knowledge bases. Users can set custom replies, use the ChatGPT interface for intelligent responses, send images and binary files, and create personalized chatbots from knowledge base files.

call-gpt

Call GPT is a voice application that uses Deepgram for speech-to-text, ElevenLabs for text-to-speech, and OpenAI for GPT prompt completion. It lets users talk with ChatGPT over the phone, with better transcription, understanding, and speech than traditional IVR systems. The app responds with low latency, allows users to interrupt, maintains chat history, and lets GPT call external tools. It coordinates the data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams.

awesome-LLM-resourses

A comprehensive repository of resources for Chinese large language models (LLMs), including data processing tools, fine-tuning frameworks, inference libraries, evaluation platforms, RAG engines, agent frameworks, books, courses, tutorials, and tips. The repository covers a wide range of tools and resources for working with LLMs, from data labeling and processing to model fine-tuning, inference, evaluation, and application development. It also includes resources for learning about LLMs through books, courses, and tutorials, as well as insights and strategies from building with LLMs.

tappas

Hailo TAPPAS is a set of full application examples that implement pipeline elements and pre-trained AI tasks. It demonstrates Hailo's system integration scenarios on predefined systems, with the aim of accelerating time to market, simplifying integration with Hailo's runtime software stack, and giving customers a starting point for fine-tuning their own applications. It supports both Hailo-15 and Hailo-8, offering example applications optimized for various common hosts. TAPPAS includes pipelines for single-network, two-network, and multi-stream processing, as well as high-resolution processing via tiling. It also provides example use-case pipelines such as License Plate Recognition and Multi-Person Multi-Camera Tracking, and it is regularly updated with new features, bug fixes, and platform support.
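High-resolution processing via tiling means splitting a large frame into smaller, overlapping crops that each match a network's input size. The sketch below is a generic illustration of that idea, not TAPPAS code: the function `tile_grid` and its `overlap` parameter are hypothetical names chosen for this example.

```python
from typing import List, Tuple


def tile_grid(width: int, height: int, tile: int, overlap: int) -> List[Tuple[int, int, int, int]]:
    """Return (x0, y0, x1, y1) boxes that cover a width x height frame.

    Hypothetical helper: adjacent tiles overlap by at least `overlap` pixels,
    and the last tile on each axis is shifted back to sit flush with the edge.
    """
    if tile <= overlap:
        raise ValueError("tile must be larger than overlap")
    stride = tile - overlap

    def starts(length: int) -> List[int]:
        # A frame no larger than one tile needs a single tile at offset 0.
        if length <= tile:
            return [0]
        offsets = list(range(0, length - tile + 1, stride))
        if offsets[-1] != length - tile:
            offsets.append(length - tile)  # cover the remaining border
        return offsets

    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in starts(height)
        for x in starts(width)
    ]
```

In a detection pipeline, boxes found in each tile would be offset by the tile's `(x0, y0)` back into frame coordinates and then merged, commonly with non-maximum suppression to drop duplicates from the overlap regions.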

cloudflare-rag

This repository provides a full-stack example of building a Retrieval-Augmented Generation (RAG) app with Cloudflare. It uses Cloudflare Workers, Pages, D1, KV, R2, AI Gateway, and Workers AI. The app features streaming responses to the UI, hybrid RAG with full-text search and vector search, switchable providers through AI Gateway, per-IP rate limiting with Cloudflare KV, OCR inside a Cloudflare Worker, and Smart Placement for workload optimization. The development setup requires Node, pnpm, and the wrangler CLI, along with setting up the necessary primitives and API keys. Deployment involves configuring secrets and deploying the app to Cloudflare Pages. The project implements a hybrid-search RAG approach that combines full-text search against D1 with vector search over embeddings in Vectorize to give the LLM richer context.
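Hybrid search produces two ranked result lists, one from full-text search and one from vector search, which must be merged into a single context list. The description does not say how cloudflare-rag merges them; the sketch below assumes reciprocal rank fusion (RRF), a common choice, with the conventional constant `k = 60`. The function name and document IDs are illustrative only.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    so items ranked highly by both retrievers rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


full_text_hits = ["a", "b", "c"]  # e.g. keyword matches from a D1 full-text query
vector_hits = ["c", "a", "d"]     # e.g. nearest neighbours from a vector index
print(reciprocal_rank_fusion([full_text_hits, vector_hits]))
```

RRF uses only rank positions, so it avoids normalizing keyword-relevance scores and cosine similarities onto a common scale.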

pixeltable

Pixeltable is a Python library that lets ML engineers and data scientists focus on exploration, modeling, and app development without handling data plumbing. It provides a declarative interface for working with text, images, embeddings, and video, so users can store, transform, index, and iterate on data within a single table interface. Unlike in-memory Python libraries such as Pandas, Pixeltable is persistent and acts as a database. Its features include data storage and versioning, combined data and model lineage, indexing, orchestration of multimodal workloads, incremental updates, and automatic generation of production-ready code. It emphasizes transparency, reproducibility, cost savings through incremental data changes, and seamless integration with existing Python code and libraries.

wave-apps

Wave Apps is a directory of sample applications built on H2O Wave, allowing users to build AI apps faster. The apps cover various use cases such as explainable hotel ratings, human-in-the-loop credit risk assessment, mitigating churn risk, online shopping recommendations, and sales forecasting EDA. Users can download, modify, and integrate these sample apps into their own projects to learn about app development and AI model deployment.