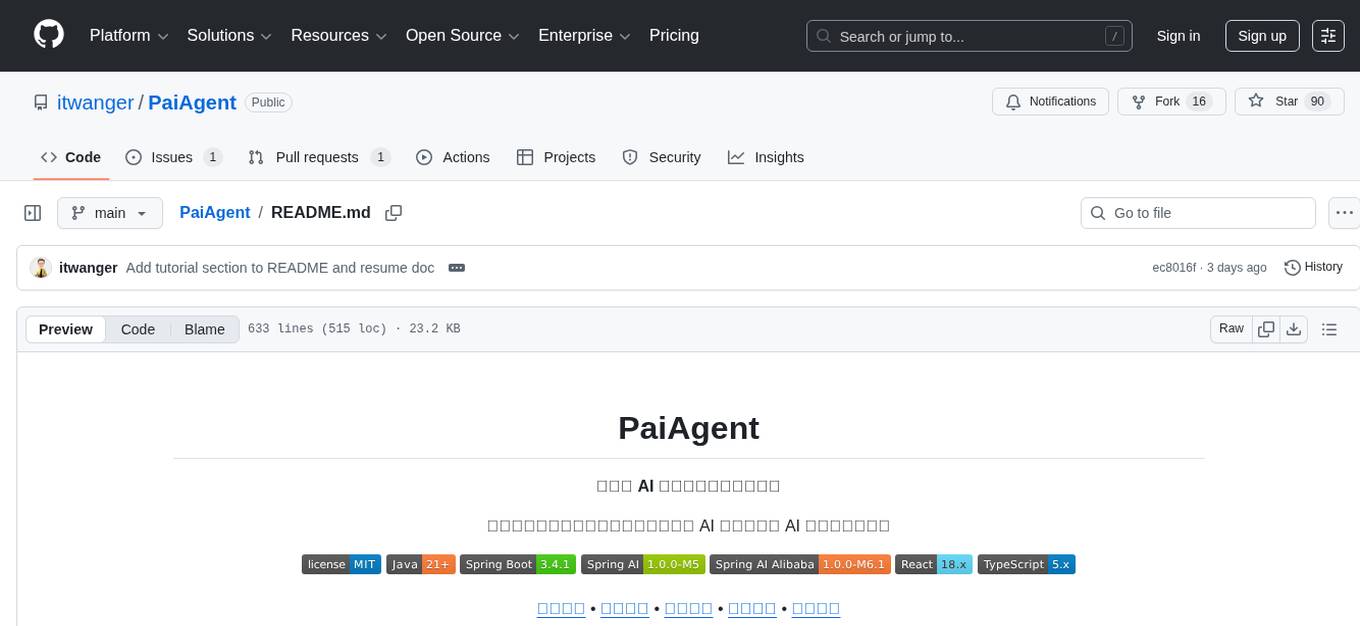

LLM-Alchemy-Chamber

a friendly neighborhood repository with diverse experiments and adventures in the world of LLMs

Stars: 117

LLM Alchemy Chamber is a repository dedicated to exploring the world of Language Models (LLMs) through various experiments and projects. It contains scripts, notebooks, and experiments focused on tasks such as fine-tuning different LLM models, quantization for performance optimization, dataset generation for instruction/QA tasks, and more. The repository offers a collection of resources for beginners and enthusiasts interested in delving into the mystical realm of LLMs.

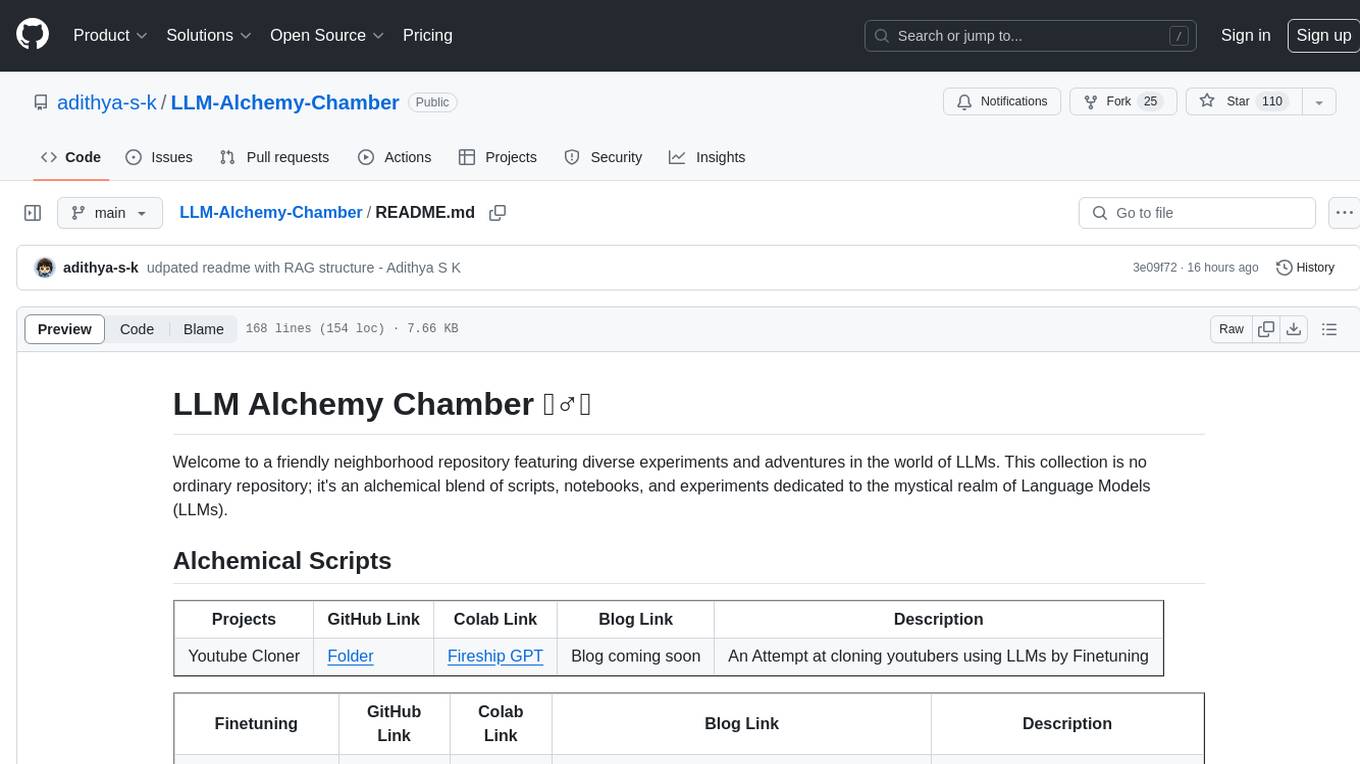

README:

Welcome to a friendly neighborhood repository featuring diverse experiments and adventures in the world of LLMs. This collection is no ordinary repository; it's an alchemical blend of scripts, notebooks, and experiments dedicated to the mystical realm of Language Models (LLMs).

| Projects | GitHub Link | Colab Link | Blog Link | Description |

|---|---|---|---|---|

| Youtube Cloner | Folder | Fireship GPT | Blog coming soon | An Attempt at cloning youtubers using LLMs by Finetuning |

| Finetuning | GitHub Link | Colab Link | Blog Link | Description |

|---|---|---|---|---|

| Gemma Finetuning | GitHub | Colab | A Beginner’s Guide to Fine-Tuning Gemma | Notebook to Finetune Gemma Models |

| Mistral-7b Finetuning | GitHub | Colab | A Beginner’s Guide to Fine-Tuning Mistral 7B Instruct Model | Notebook to Finetune Mistral-7b Model |

| Mixtral Finetuning | GitHub | Colab | A Beginner’s Guide to Fine-Tuning Mixtral Instruct Model | Notebook to Finetune Mixtral-7b Models |

| LLama2 Finetuning | GitHub | Colab | Notebook to Finetune Llama2-7b Model |

| Quantization | GitHub Link | Colab Link | Blog Link | Description |

|---|---|---|---|---|

| AWQ Quantization | GitHub | Colab | Squeeze Every Drop of Performance from Your LLM with AWQ | quantise LLM using AWQ. |

| GGUF Quantization | GitHub | Colab | Run any Huggingface model locally | quantise LLM to GGUF formate. |

| Data Prep | GitHub Link | Colab Link | Description |

|---|---|---|---|

| Documents -> Dataset | GitHub | Colab | Given Documents generate Instruction/QA dataset for finetuning LLMs |

| Topic -> Dataset | GitHub | Colab | Given a Topic generate a dataset to finetune LLMs |

| Alpaca Dataset Generation | GitHub | Colab | The original implementation of generating instruction dataset followed in the alpaca paper |

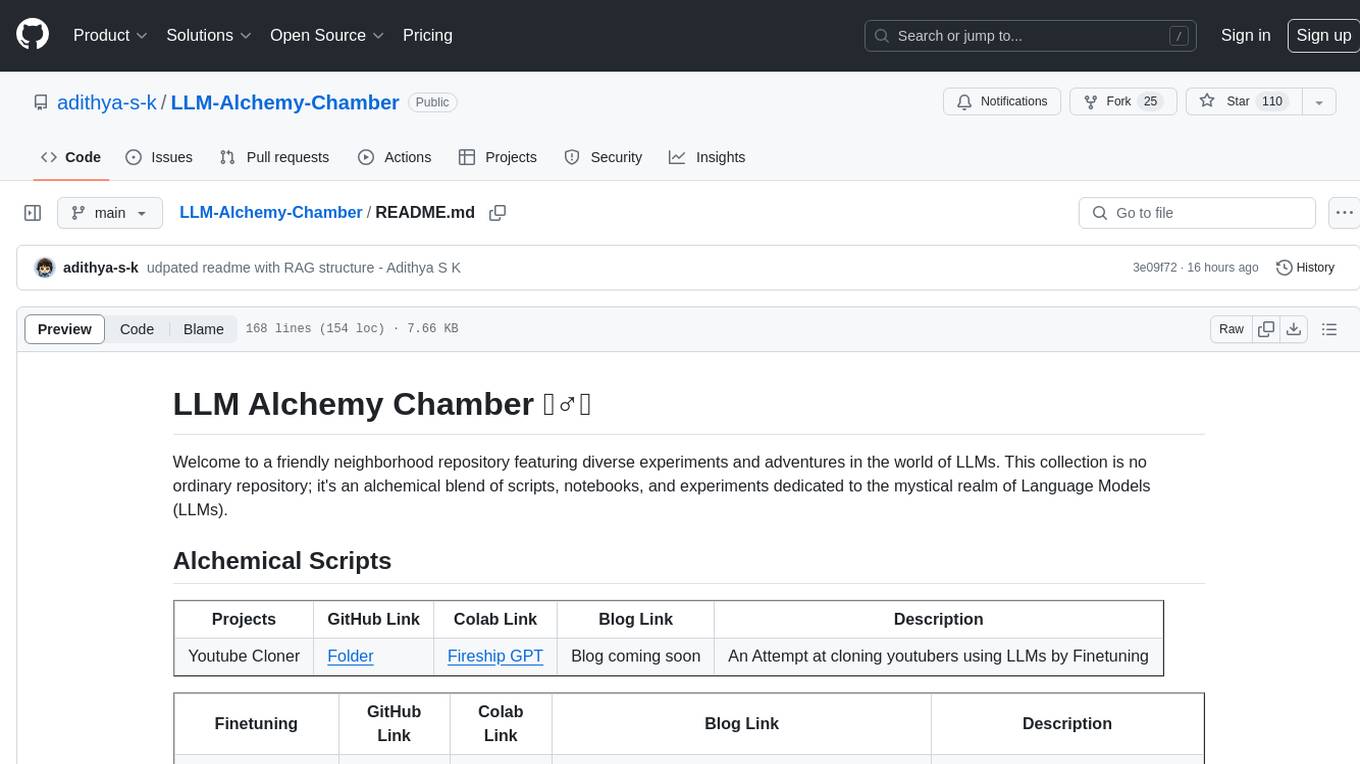

├── DataPrep (Notebook to generate synthetic data)

│ ├── dataset_prep.ipynb

│ └── ...

├── Deployment (TGI/VLLM scripts for testing)

│ └── ...

├── Finetuning (Finalized Finetuning Scripts)

│ ├── Gemma_finetuning_notebook.ipynb

│ ├── Llama2_finetuning_notebook.ipynb

│ ├── Mistral_finetuning_notebook.ipynb

│ ├── Mixtral_finetuning_notebook.ipynb

│ └── ...

├── LLMS (LLM experiments)

│ ├── ambari

│ │ └── ...

│ ├── CodeLLama

│ │ └── ...

│ ├── Gemma

│ │ ├── finetune-gemma.ipynb

│ │ └── gemma-sft.py

│ ├── Llama2

│ │ └── ...

│ ├── Mistral-7b

│ │ └── ...

│ └── Mixtral

│ └── ...

├── Projects (Upcoming ideas to explore)

│ └── YT_Clones

│ ├── Fireship_clone.ipynb

│ ├── youtube_channel_scraper.py

│ └── ...

├── Quantization

│ └── ...

├── utils

│ └── streaming_inference_hf.ipynb

└── RAG (Retrieval Augmented Generation)

├── 1_Naive_RAG.ipynb

├── 2_Semantic_Chunking_RAG.ipynb

├── 3_Sentence_Window_Retrieval_RAG.ipynb

├── 4_Auto_Merging_Retrieval_RAG.ipynb

├── 5_Agentic_RAG.ipynb

└── 6_Visual_RAG.ipynb

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Alchemy-Chamber

Similar Open Source Tools

LLM-Alchemy-Chamber

LLM Alchemy Chamber is a repository dedicated to exploring the world of Language Models (LLMs) through various experiments and projects. It contains scripts, notebooks, and experiments focused on tasks such as fine-tuning different LLM models, quantization for performance optimization, dataset generation for instruction/QA tasks, and more. The repository offers a collection of resources for beginners and enthusiasts interested in delving into the mystical realm of LLMs.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

kweaver

KWeaver is an open-source ecosystem for building, deploying, and running decision intelligence AI applications. It adopts ontology as the core methodology for business knowledge networks, with DIP as the core platform, aiming to provide elastic, agile, and reliable enterprise-grade decision intelligence to further unleash productivity. The DIP platform includes key subsystems such as ADP, Decision Agent, DIP Studio, and AI Store.

observers

Observers is a lightweight library for AI observability that provides support for various generative AI APIs and storage backends. It allows users to track interactions with AI models and sync observations to different storage systems. The library supports OpenAI, Hugging Face transformers, AISuite, Litellm, and Docling for document parsing and export. Users can configure different stores such as Hugging Face Datasets, DuckDB, Argilla, and OpenTelemetry to manage and query their observations. Observers is designed to enhance AI model monitoring and observability in a user-friendly manner.

topsha

LocalTopSH is an AI Agent Framework designed for companies and developers who require 100% on-premise AI agents with data privacy. It supports various OpenAI-compatible LLM backends and offers production-ready security features. The framework allows simple deployment using Docker compose and ensures that data stays within the user's network, providing full control and compliance. With cost-effective scaling options and compatibility in regions with restrictions, LocalTopSH is a versatile solution for deploying AI agents on self-hosted infrastructure.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

Agentic-ADK

Agentic ADK is an Agent application development framework launched by Alibaba International AI Business, based on Google-ADK and Ali-LangEngine. It is used for developing, constructing, evaluating, and deploying powerful, flexible, and controllable complex AI Agents. ADK aims to make Agent development simpler and more user-friendly, enabling developers to more easily build, deploy, and orchestrate various Agent applications ranging from simple tasks to complex collaborations.

gin-vue-admin

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

bumpgen

bumpgen is a tool designed to automatically upgrade TypeScript / TSX dependencies and make necessary code changes to handle any breaking issues that may arise. It uses an abstract syntax tree to analyze code relationships, type definitions for external methods, and a plan graph DAG to execute changes in the correct order. The tool is currently limited to TypeScript and TSX but plans to support other strongly typed languages in the future. It aims to simplify the process of upgrading dependencies and handling code changes caused by updates.

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

ai-toolbox

AI Toolbox is a cross-platform desktop application designed to efficiently manage various AI programming assistant configurations. It supports Windows, macOS, and Linux. The tool provides visual management of OpenCode, Oh-My-OpenCode, Slim plugin configurations, Claude Code API supplier configurations, Codex CLI configurations, MCP server management, Skills management, WSL synchronization, AI supplier management, system tray for quick configuration switching, data backup, theme switching, multilingual support, and automatic update checks.

JeecgBoot

JeecgBoot is a Java AI Low Code Platform for Enterprise web applications, based on BPM and code generator. It features a SpringBoot2.x/3.x backend, SpringCloud, Ant Design Vue3, Mybatis-plus, Shiro, JWT, supporting microservices, multi-tenancy, and AI capabilities like DeepSeek and ChatGPT. The powerful code generator allows for one-click generation of frontend and backend code without writing any code. JeecgBoot leads the way in AI low-code development mode, helping to solve 80% of repetitive work in Java projects and allowing developers to focus more on business logic.

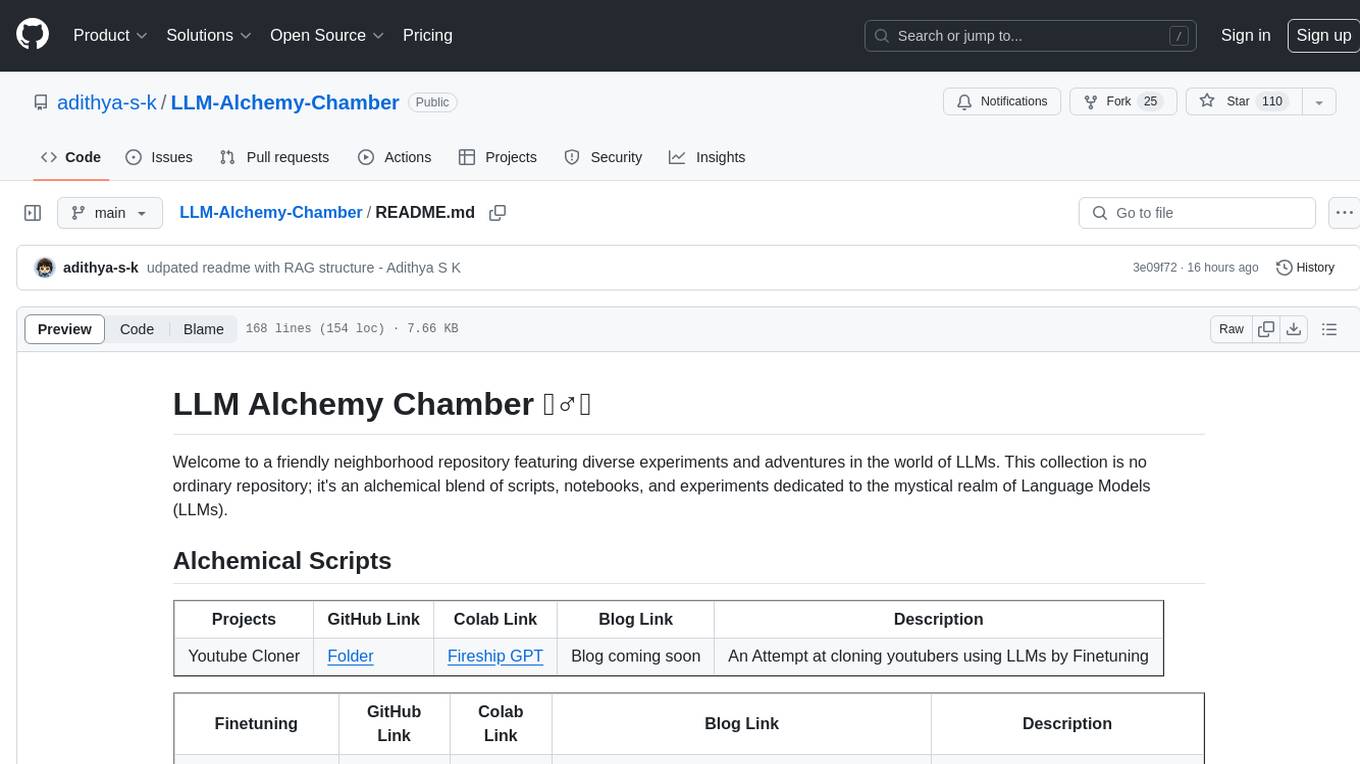

PaiAgent

PaiAgent is an enterprise-level AI workflow visualization orchestration platform that simplifies the combination and scheduling of AI capabilities. It allows developers and business users to quickly build complex AI processing flows through an intuitive drag-and-drop interface, without the need to write code, enabling collaboration of various large models.

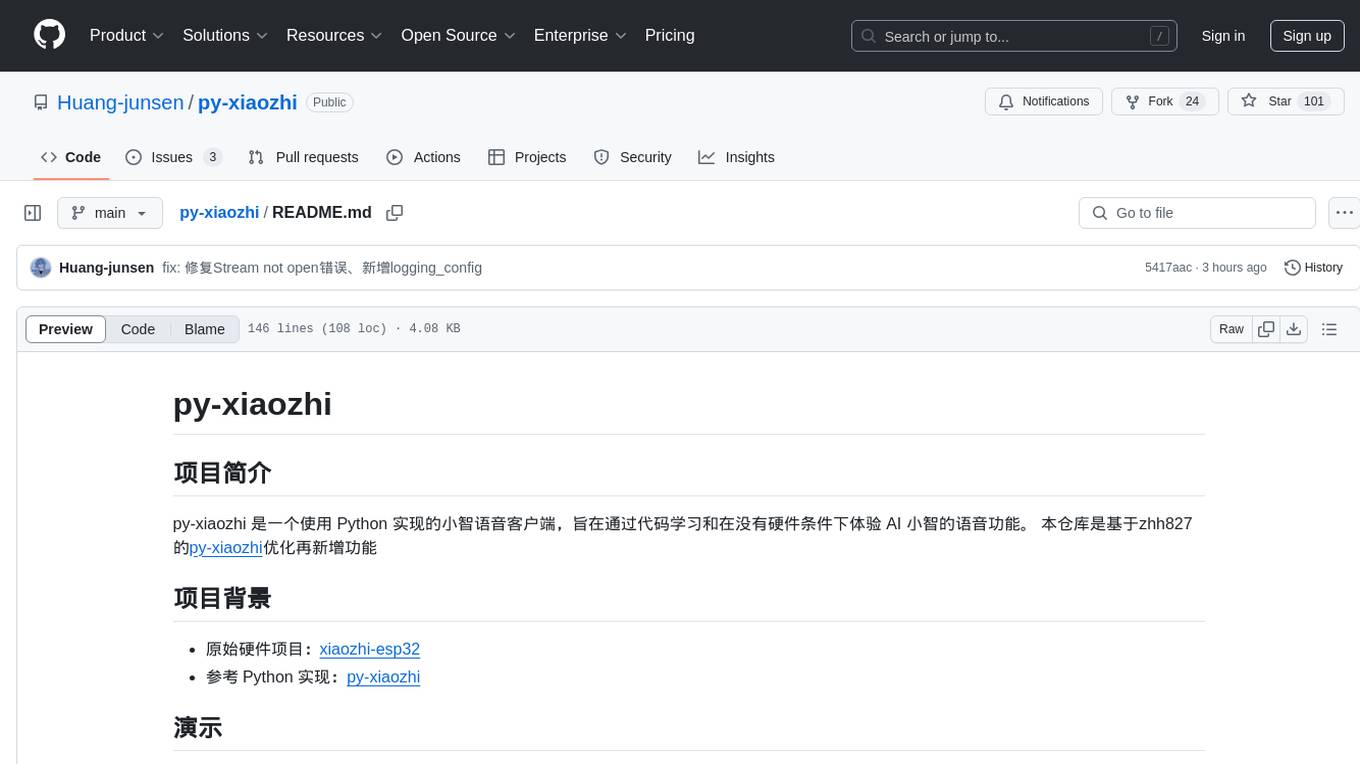

py-xiaozhi

py-xiaozhi is a Python-based XiaoZhi voice client designed for learning code and experiencing AI XiaoZhi's voice functions without hardware conditions. It features voice interaction, graphical interface, volume control, session management, encrypted audio transmission, CLI mode, and automatic copying of verification codes and opening browsers for first-time users. The project aims to optimize and add new features to zhh827's py-xiaozhi based on the original hardware project xiaozhi-esp32 and the Python implementation py-xiaozhi.

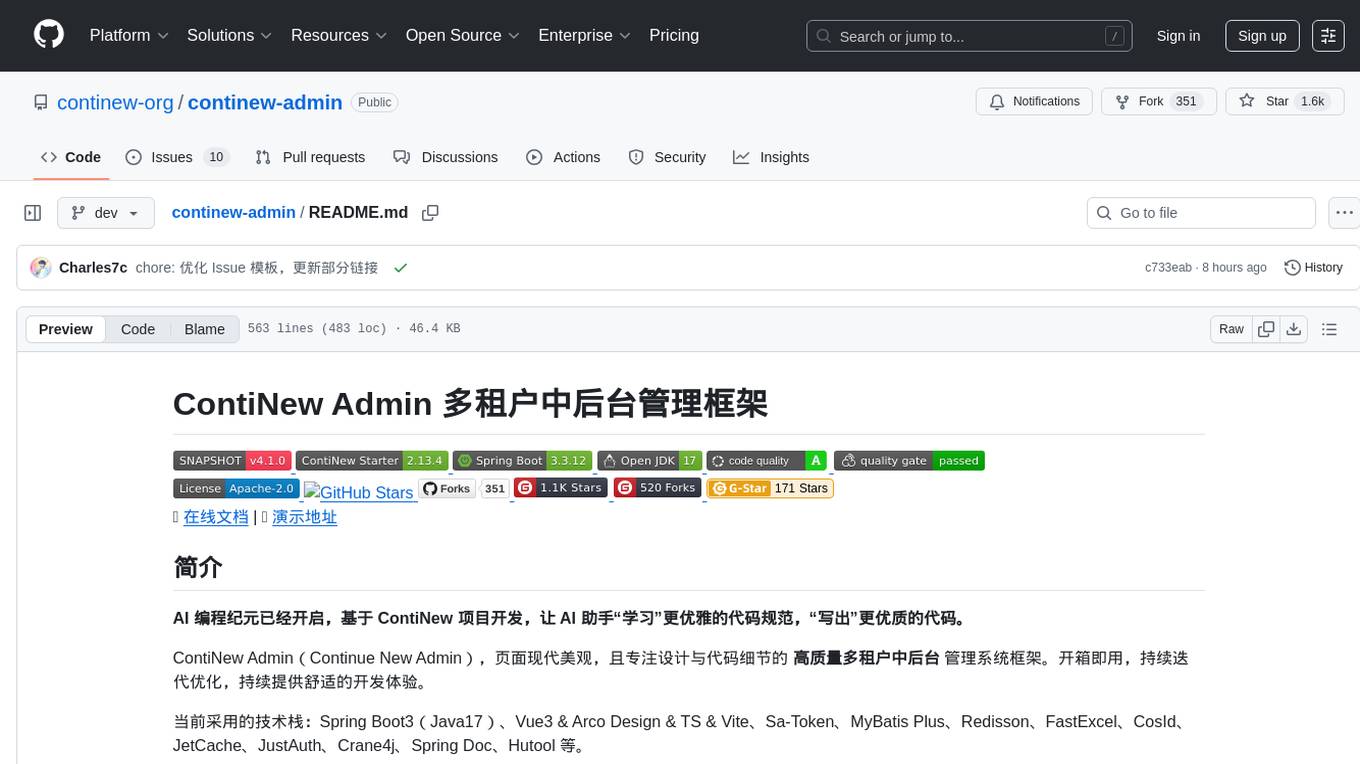

continew-admin

Continew-admin is a responsive admin dashboard template built with Bootstrap 4. It provides a clean and intuitive user interface for managing and visualizing data in web applications. The template includes various components and widgets that can be easily customized to suit different project requirements. With Continew-admin, developers can quickly set up a professional-looking admin panel for their web applications.

For similar tasks

LLM-Alchemy-Chamber

LLM Alchemy Chamber is a repository dedicated to exploring the world of Language Models (LLMs) through various experiments and projects. It contains scripts, notebooks, and experiments focused on tasks such as fine-tuning different LLM models, quantization for performance optimization, dataset generation for instruction/QA tasks, and more. The repository offers a collection of resources for beginners and enthusiasts interested in delving into the mystical realm of LLMs.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

training-operator

Kubeflow Training Operator is a Kubernetes-native project for fine-tuning and scalable distributed training of machine learning (ML) models created with various ML frameworks such as PyTorch, Tensorflow, XGBoost, MPI, Paddle and others. Training Operator allows you to use Kubernetes workloads to effectively train your large models via Kubernetes Custom Resources APIs or using Training Operator Python SDK. > Note: Before v1.2 release, Kubeflow Training Operator only supports TFJob on Kubernetes. * For a complete reference of the custom resource definitions, please refer to the API Definition. * TensorFlow API Definition * PyTorch API Definition * Apache MXNet API Definition * XGBoost API Definition * MPI API Definition * PaddlePaddle API Definition * For details of all-in-one operator design, please refer to the All-in-one Kubeflow Training Operator * For details on its observability, please refer to the monitoring design doc.

helix

HelixML is a private GenAI platform that allows users to deploy the best of open AI in their own data center or VPC while retaining complete data security and control. It includes support for fine-tuning models with drag-and-drop functionality. HelixML brings the best of open source AI to businesses in an ergonomic and scalable way, optimizing the tradeoff between GPU memory and latency.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

petals

Petals is a tool that allows users to run large language models at home in a BitTorrent-style manner. It enables fine-tuning and inference up to 10x faster than offloading. Users can generate text with distributed models like Llama 2, Falcon, and BLOOM, and fine-tune them for specific tasks directly from their desktop computer or Google Colab. Petals is a community-run system that relies on people sharing their GPUs to increase its capacity and offer a distributed network for hosting model layers.

LLaVA-pp

This repository, LLaVA++, extends the visual capabilities of the LLaVA 1.5 model by incorporating the latest LLMs, Phi-3 Mini Instruct 3.8B, and LLaMA-3 Instruct 8B. It provides various models for instruction-following LMMS and academic-task-oriented datasets, along with training scripts for Phi-3-V and LLaMA-3-V. The repository also includes installation instructions and acknowledgments to related open-source contributions.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.