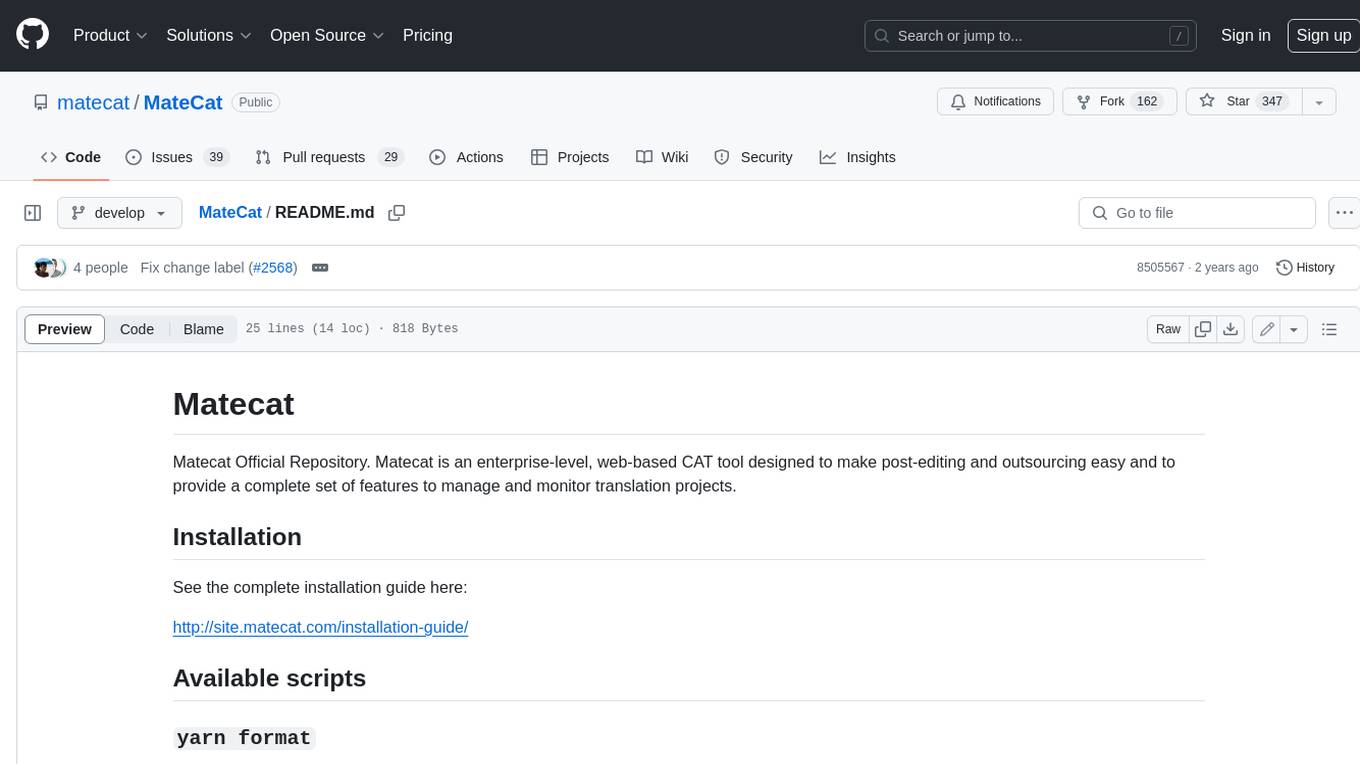

OpenGlass

Turn any glasses into AI-powered smart glasses

Stars: 53

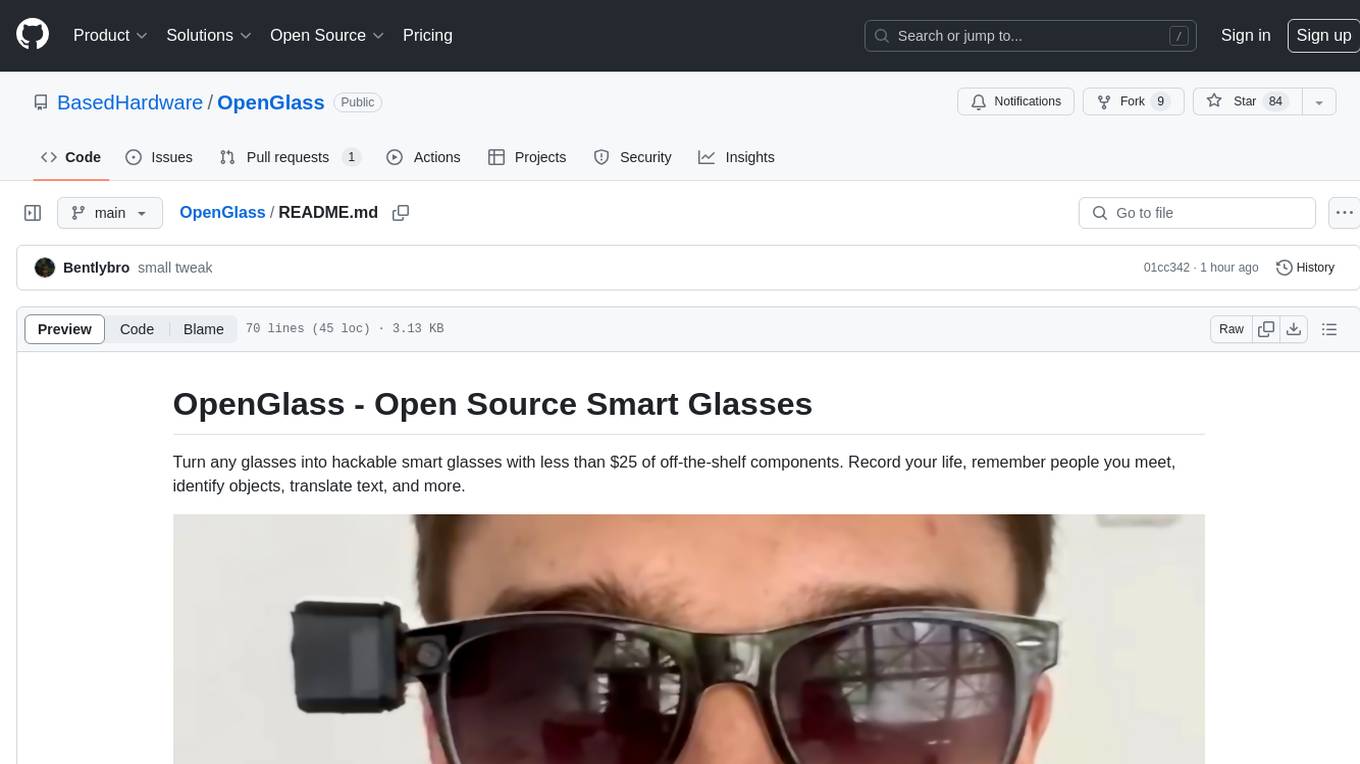

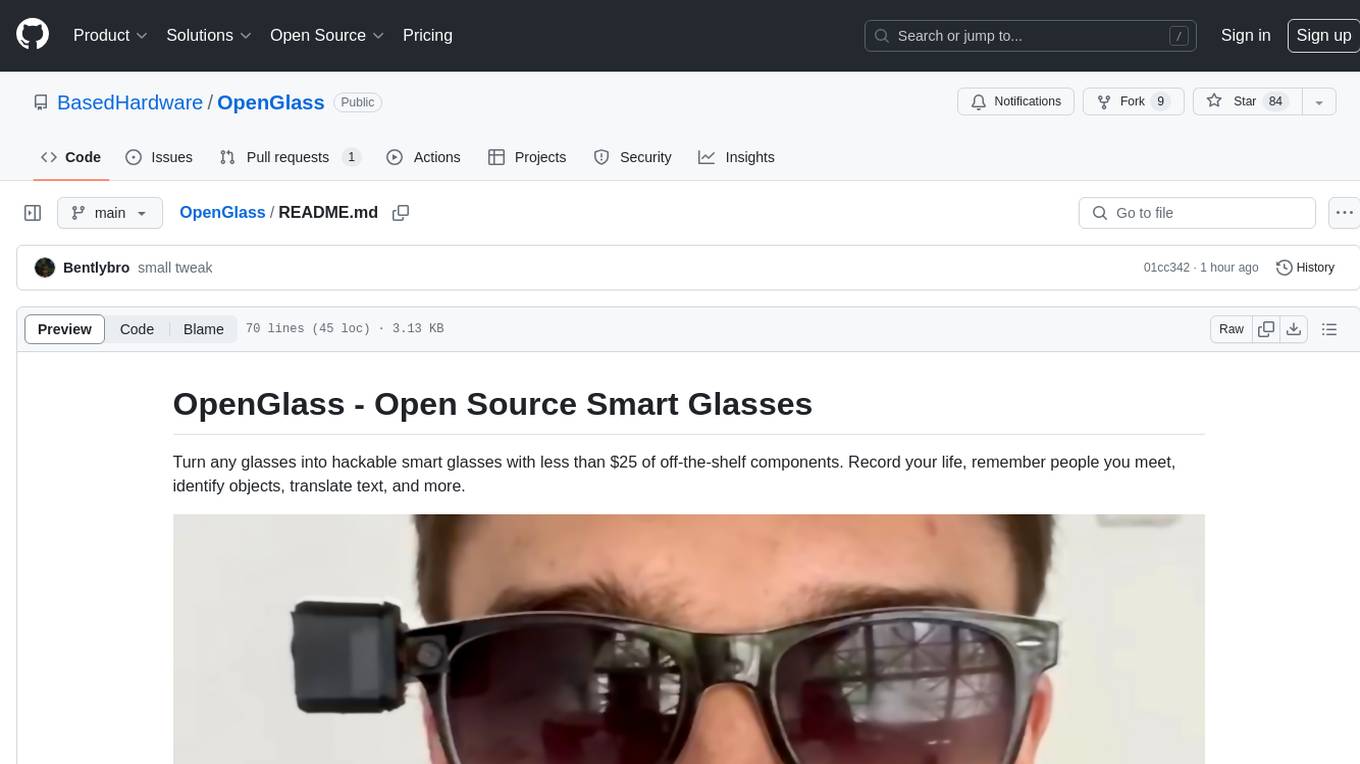

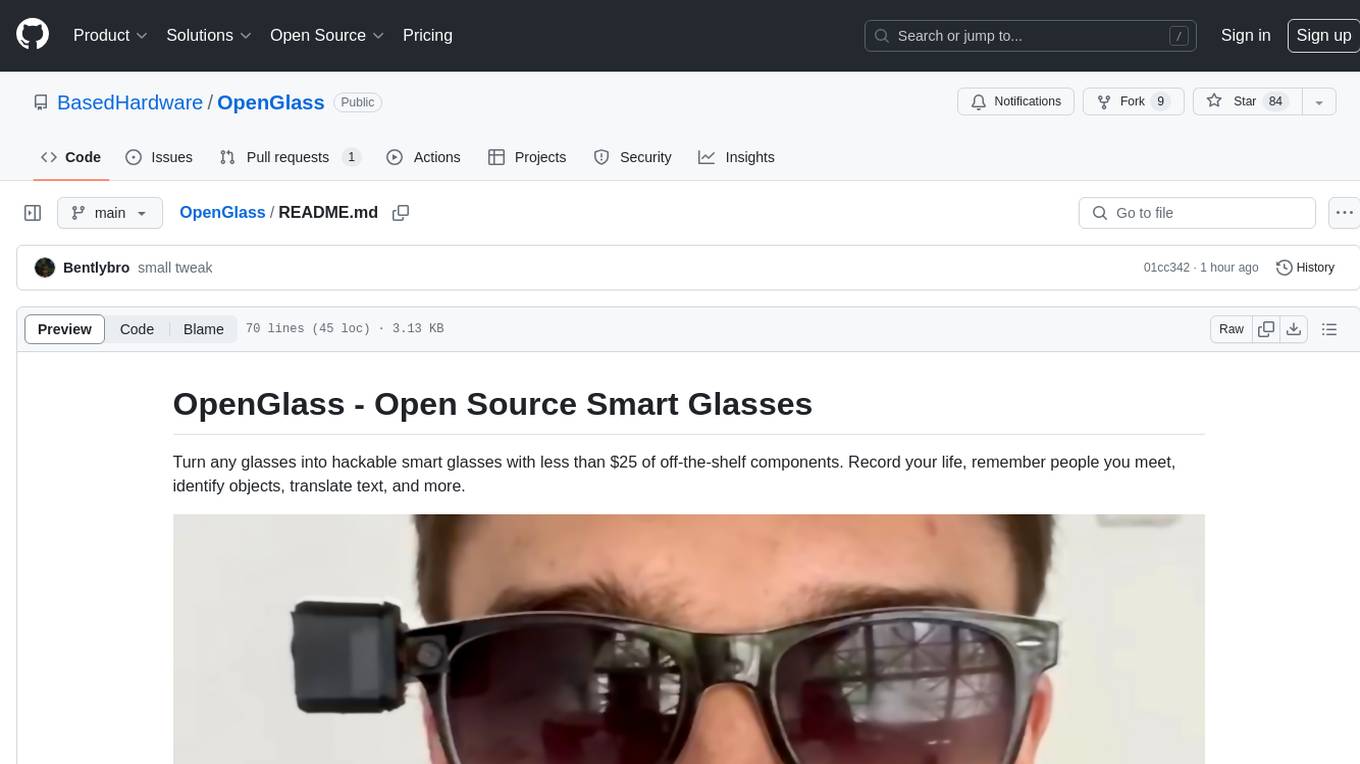

OpenGlass is an open-source project that allows users to transform any regular glasses into smart glasses using affordable off-the-shelf components. With a cost of less than $25, users can enhance their glasses to record their daily activities, recognize people, identify objects, translate text, and more. The project provides detailed instructions on hardware setup and software installation, making it accessible for DIY enthusiasts and tech enthusiasts alike. By following the steps outlined in the repository, users can create their own smart glasses and explore various functionalities offered by the project.

README:

Turn any glasses into hackable smart glasses with less than $25 of off-the shelf components. Record your life, remember people you meet, identify objects, translate text, and more.

We will ship a limited number of pre-built kits. https://forms.gle/K1dtrn1mPrMBsQZC9

Join the Based Hardware Discord

Follow these steps to set up OpenGlass:

-

Gather the required components:

- 1x Seeed Studio XIAO ESP32 S3 Sense - https://www.amazon.com/dp/B0C69FFVHH/ref=dp_iou_view_item?ie=UTF8&psc=1

- 1x EEMB LP502030 3.7v 250mAH battery - https://www.amazon.com/EEMB-Battery-Rechargeable-Lithium-Connector/dp/B08VRZTHDL

- 1x 3D printed glasses mount case - https://storage.googleapis.com/scott-misc/openglass_case.stl

-

3D print the glasses mount case using the provided STL file.

-

Open the firmware folder and open the

.inofile in the Arduino IDE.- If you don't have the Arduino IDE installed, download and install it from the official website: https://www.arduino.cc/en/software

-

Follow the software preparation steps to set up the Arduino IDE for the XIAO ESP32S3 board:

- Add ESP32 board package to your Arduino IDE:

- Navigate to File > Preferences, and fill "Additional Boards Manager URLs" with the URL: https://raw.githubusercontent.com/espressif/arduino-esp32/gh-pages/package_esp32_index.json

- Navigate to Tools > Board > Boards Manager..., type the keyword

esp32in the search box, select the latest version ofesp32, and install it.

- Select your board and port:

- On top of the Arduino IDE, select the port (likely to be COM3 or higher).

- Search for

xiaoin the development board on the left and selectXIAO_ESP32S3.

- Add ESP32 board package to your Arduino IDE:

-

Upload the firmware to the XIAO ESP32S3 board.

-

Clone the OpenGlass repository and install the dependencies:

git clone https://github.com/BasedHardware/openglass.git cd openglass npm install -

Add API keys for Grok and OpenAI in the

keys.tsfile located at https://github.com/BasedHardware/OpenGlass/blob/main/sources/keys.ts. -

For Ollama, self-host the REST API from the repository at https://github.com/ollama/ollama and add the URL to the

keys.tsfile. -

Start the application:

npm startNote: This is an Expo project. For now, open the localhost link to access the web version.

MIT

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OpenGlass

Similar Open Source Tools

OpenGlass

OpenGlass is an open-source project that allows users to transform any regular glasses into smart glasses using affordable off-the-shelf components. With a cost of less than $25, users can enhance their glasses to record their daily activities, recognize people, identify objects, translate text, and more. The project provides detailed instructions on hardware setup and software installation, making it accessible for DIY enthusiasts and tech enthusiasts alike. By following the steps outlined in the repository, users can create their own smart glasses and explore various functionalities offered by the project.

podman-desktop-extension-ai-lab

Podman AI Lab is an open source extension for Podman Desktop designed to work with Large Language Models (LLMs) on a local environment. It features a recipe catalog with common AI use cases, a curated set of open source models, and a playground for learning, prototyping, and experimentation. Users can quickly and easily get started bringing AI into their applications without depending on external infrastructure, ensuring data privacy and security.

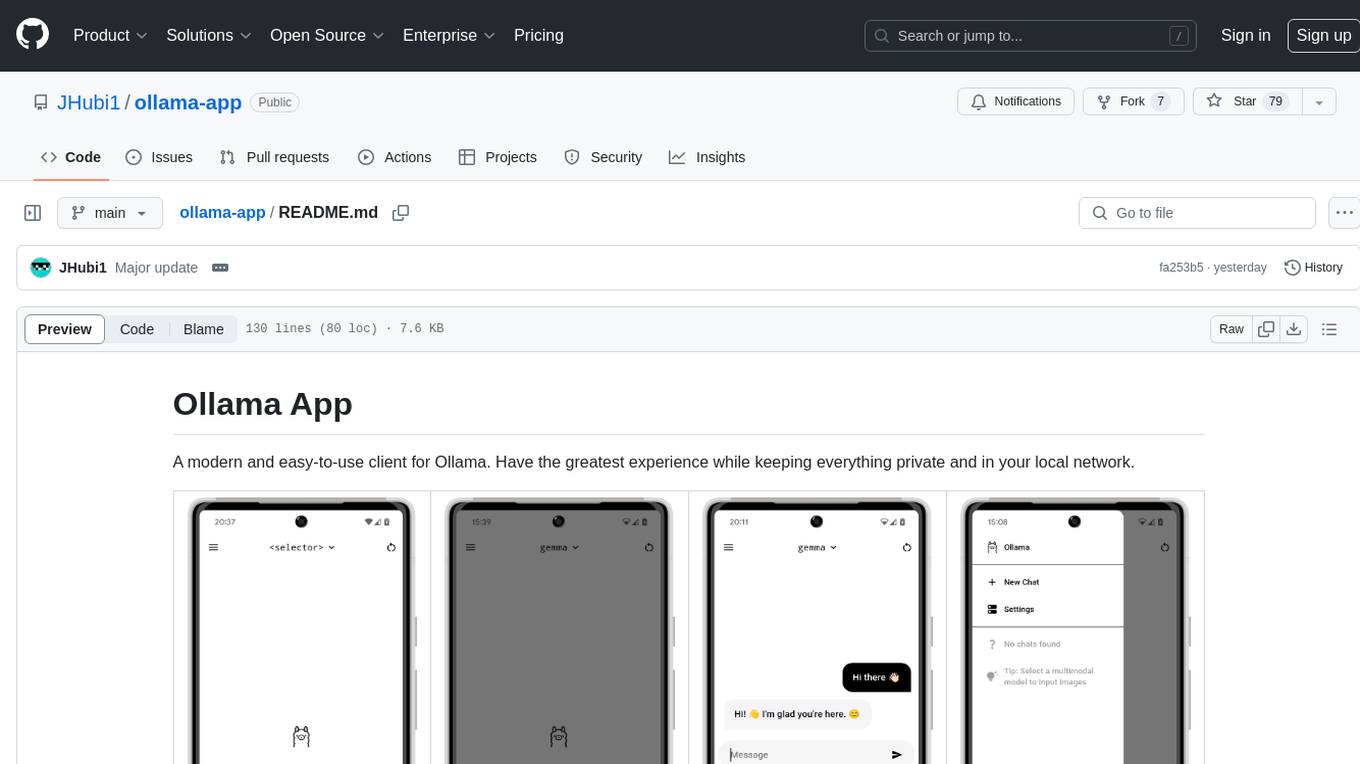

ollama-app

Ollama App is a modern and easy-to-use client for Ollama, allowing users to have a private experience within their local network. The app connects to an Ollama server using its API endpoint, enabling users to chat and interact with various models. It supports multimodal model input, a multilingual interface, and custom builds for personalized experiences. Users can easily set up the app, navigate through the side menu, select models, and create custom builds to tailor the app to their needs.

lluminous

lluminous is a fast and light open chat UI that supports multiple providers such as OpenAI, Anthropic, and Groq models. Users can easily plug in their API keys locally to access various models for tasks like multimodal input, image generation, multi-shot prompting, pre-filled responses, and more. The tool ensures privacy by storing all conversation history and keys locally on the user's device. Coming soon features include memory tool, file ingestion/embedding, embeddings-based web search, and prompt templates.

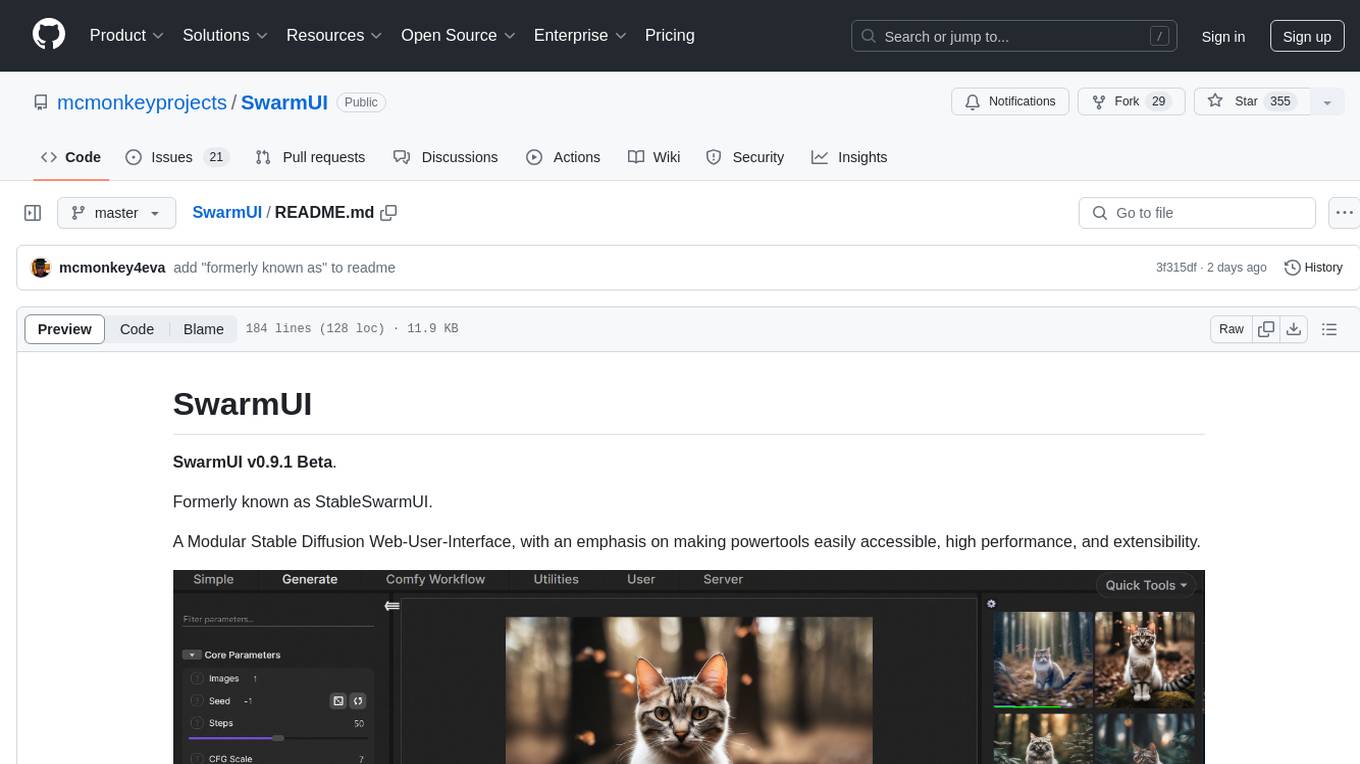

SwarmUI

SwarmUI is a modular stable diffusion web-user-interface designed to make powertools easily accessible, high performance, and extensible. It is in Beta status, offering a primary Generate tab for beginners and a Comfy Workflow tab for advanced users. The tool aims to become a full-featured one-stop-shop for all things Stable Diffusion, with plans for better mobile browser support, detailed 'Current Model' display, dynamic tab shifting, LLM-assisted prompting, and convenient direct distribution as an Electron app.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

Kuebiko

Kuebiko is a Twitch Chat Bot that reads twitch chat and generates text-to-speech responses using Google Cloud API and OpenAI's GPT-3 text completion model. It allows users to set up their own VTuber AI similar to 'Neuro-Sama'. The project is built with Python and requires setting up various API keys and configurations to enable the bot functionality. Users can customize the voice of their VTuber and route audio using VBAudio Cable. Kuebiko provides a unique way to interact with viewers through chat responses and captions in OBS.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

fuji-web

Fuji-Web is an intelligent AI partner designed for full browser automation. It autonomously navigates websites and performs tasks on behalf of the user while providing explanations for each action step. Users can easily install the extension in their browser, access the Fuji icon to input tasks, and interact with the tool to streamline web browsing tasks. The tool aims to enhance user productivity by automating repetitive web actions and providing a seamless browsing experience.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

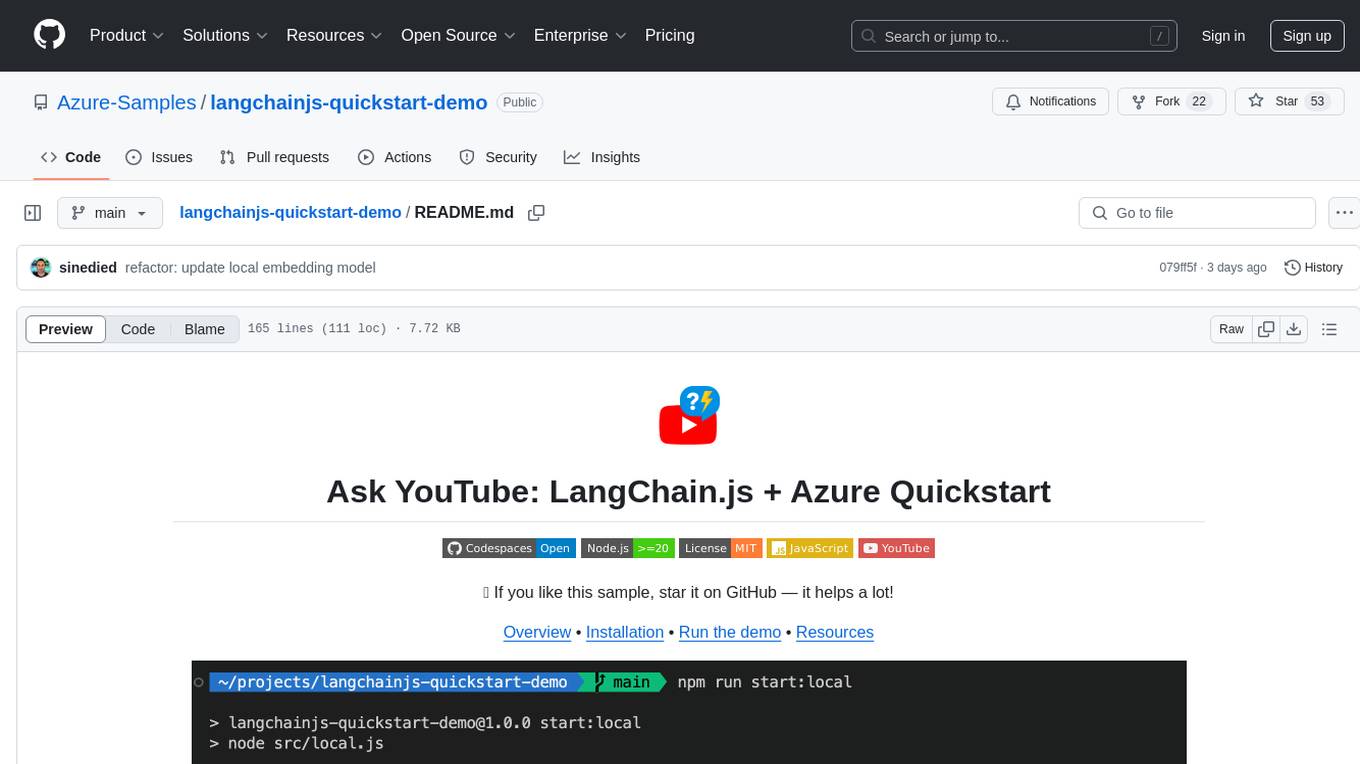

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application allows to ask text-based questions about a YouTube video and uses the transcript of the video to generate responses. The code comes in two versions: local prototype using FAISS and Ollama with LLaMa3 model for completion and all-minilm-l6-v2 for embeddings, and Azure cloud version using Azure AI Search and GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

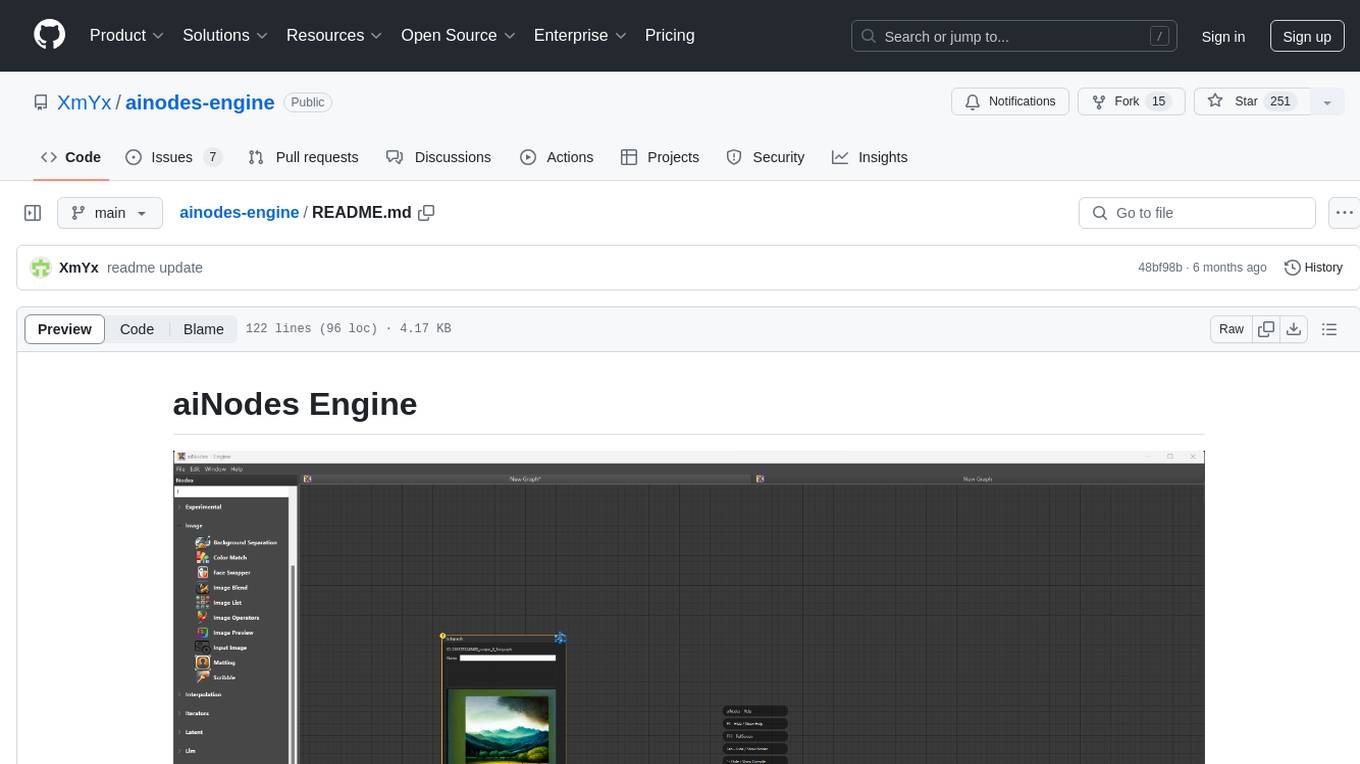

ainodes-engine

aiNodes Engine is a Python-based AI image/motion picture generator node engine with a live execution chain, python code editor node, and plug-in support. It offers full modularity, colored background drop, and easy node creation with IDE annotations. The project is officially supported by Deforum and incorporates various open-source projects like ComfyUI. It is designed to be flexible, with an Unreal-like execution chain, supporting features such as Deforum, Stable Diffusion, Upscalers, Kandinsky, ControlNet, and more. The engine allows for background separation, human matting/masking, compositing, drag and drop, subgraphs, and graph saving/loading from image metadata. It aims to provide a unique, controllable manner of working with a strict user-declared execution chain.

slide-deck-ai

SlideDeck AI is a tool that leverages Generative Artificial Intelligence to co-create slide decks on any topic. Users can describe their topic and let SlideDeck AI generate a PowerPoint slide deck, streamlining the presentation creation process. The tool offers an iterative workflow with a conversational interface for creating and improving presentations. It uses Mistral Nemo Instruct to generate initial slide content, searches and downloads images based on keywords, and allows users to refine content through additional instructions. SlideDeck AI provides pre-defined presentation templates and a history of instructions for users to enhance their presentations.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

For similar tasks

OpenGlass

OpenGlass is an open-source project that allows users to transform any regular glasses into smart glasses using affordable off-the-shelf components. With a cost of less than $25, users can enhance their glasses to record their daily activities, recognize people, identify objects, translate text, and more. The project provides detailed instructions on hardware setup and software installation, making it accessible for DIY enthusiasts and tech enthusiasts alike. By following the steps outlined in the repository, users can create their own smart glasses and explore various functionalities offered by the project.

screenpipe

Screenpipe is an open source application that turns your computer into a personal AI, capturing screen and audio to create a searchable memory of your activities. It allows you to remember everything, search with AI, and keep your data 100% local. The tool is designed for knowledge workers, developers, researchers, people with ADHD, remote workers, and anyone looking for a private, local-first alternative to cloud-based AI memory tools.

llama.cpp

llama.cpp is a C++ implementation of LLaMA, a large language model from Meta. It provides a command-line interface for inference and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and can be run on a variety of hardware, including CPUs, GPUs, and TPUs.

llm2vec

LLM2Vec is a simple recipe to convert decoder-only LLMs into text encoders. It consists of 3 simple steps: 1) enabling bidirectional attention, 2) training with masked next token prediction, and 3) unsupervised contrastive learning. The model can be further fine-tuned to achieve state-of-the-art performance.

manga-image-translator

Translate texts in manga/images. Some manga/images will never be translated, therefore this project is born. * Image/Manga Translator * Samples * Online Demo * Disclaimer * Installation * Pip/venv * Poetry * Additional instructions for **Windows** * Docker * Hosting the web server * Using as CLI * Setting Translation Secrets * Using with Nvidia GPU * Building locally * Usage * Batch mode (default) * Demo mode * Web Mode * Api Mode * Related Projects * Docs * Recommended Modules * Tips to improve translation quality * Options * Language Code Reference * Translators Reference * GPT Config Reference * Using Gimp for rendering * Api Documentation * Synchronous mode * Asynchronous mode * Manual translation * Next steps * Support Us * Thanks To All Our Contributors :

curated-transformers

Curated Transformers is a transformer library for PyTorch that provides state-of-the-art models composed of reusable components. It supports various transformer architectures, including encoders like ALBERT, BERT, and RoBERTa, and decoders like Falcon, Llama, and MPT. The library emphasizes consistent type annotations, minimal dependencies, and ease of use for education and research. It has been production-tested by Explosion and will be the default transformer implementation in spaCy 3.7.

MateCat

Matecat is an enterprise-level, web-based CAT tool designed to make post-editing and outsourcing easy and to provide a complete set of features to manage and monitor translation projects.

bert4torch

**bert4torch** is a high-level framework for training and deploying transformer models in PyTorch. It provides a simple and efficient API for building, training, and evaluating transformer models, and supports a wide range of pre-trained models, including BERT, RoBERTa, ALBERT, XLNet, and GPT-2. bert4torch also includes a number of useful features, such as data loading, tokenization, and model evaluation. It is a powerful and versatile tool for natural language processing tasks.

For similar jobs

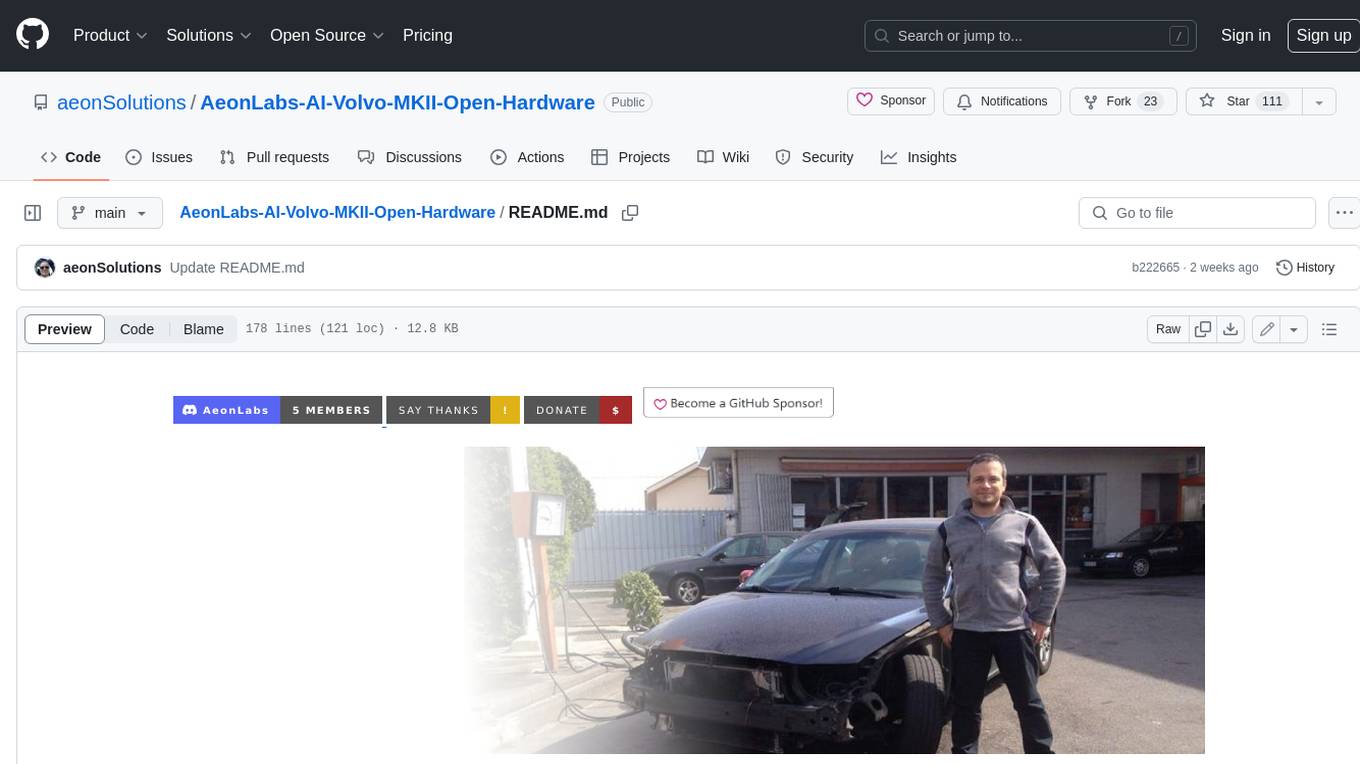

AeonLabs-AI-Volvo-MKII-Open-Hardware

This open hardware project aims to extend the life of Volvo P2 platform vehicles by updating them to current EU safety and emission standards. It involves designing and prototyping OEM hardware electronics that can replace existing electronics in these vehicles, using the existing wiring and without requiring reverse engineering or modifications. The project focuses on serviceability, maintenance, repairability, and personal ownership safety, and explores the advantages of using open solutions compared to conventional hardware electronics solutions.

OpenGlass

OpenGlass is an open-source project that allows users to transform any regular glasses into smart glasses using affordable off-the-shelf components. With a cost of less than $25, users can enhance their glasses to record their daily activities, recognize people, identify objects, translate text, and more. The project provides detailed instructions on hardware setup and software installation, making it accessible for DIY enthusiasts and tech enthusiasts alike. By following the steps outlined in the repository, users can create their own smart glasses and explore various functionalities offered by the project.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

dora

Dataflow-oriented robotic application (dora-rs) is a framework that makes creation of robotic applications fast and simple. Building a robotic application can be summed up as bringing together hardwares, algorithms, and AI models, and make them communicate with each others. At dora-rs, we try to: make integration of hardware and software easy by supporting Python, C, C++, and also ROS2. make communication low latency by using zero-copy Arrow messages. dora-rs is still experimental and you might experience bugs, but we're working very hard to make it stable as possible.

awesome-cuda-tensorrt-fpga

Okay, here is a JSON object with the requested information about the awesome-cuda-tensorrt-fpga repository:

AIOsense

AIOsense is an all-in-one sensor that is modular, affordable, and easy to solder. It is designed to be an alternative to commercially available sensors and focuses on upgradeability. AIOsense is cheaper and better than most commercial sensors and supports a variety of sensors and modules, including: - (RGB)-LED - Barometer - Breath VOC equivalent - Buzzer / Beeper - CO² equivalent - Humidity sensor - Light / Illumination sensor - PIR motion sensor - Temperature sensor - mmWave / Radar sensor Upcoming features include full voice assistant support, microphone, and speaker. All supported sensors & modules are listed in the documentation. AIOsense has a low power consumption, with an idle power consumption of 0.45W / 0.09A on a fully equipped board. Without a mmWave sensor, the idle power consumption is around 0.11W / 0.02A. To get started with AIOsense, you can refer to the documentation. If you have any questions, you can open an issue.

aihwkit

The IBM Analog Hardware Acceleration Kit is an open-source Python toolkit for exploring and using the capabilities of in-memory computing devices in the context of artificial intelligence. It consists of two main components: Pytorch integration and Analog devices simulator. The Pytorch integration provides a series of primitives and features that allow using the toolkit within PyTorch, including analog neural network modules, analog training using torch training workflow, and analog inference using torch inference workflow. The Analog devices simulator is a high-performant (CUDA-capable) C++ simulator that allows for simulating a wide range of analog devices and crossbar configurations by using abstract functional models of material characteristics with adjustable parameters. Along with the two main components, the toolkit includes other functionalities such as a library of device presets, a module for executing high-level use cases, a utility to automatically convert a downloaded model to its equivalent Analog model, and integration with the AIHW Composer platform. The toolkit is currently in beta and under active development, and users are advised to be mindful of potential issues and keep an eye for improvements, new features, and bug fixes in upcoming versions.

ByteMLPerf

ByteMLPerf is an AI Accelerator Benchmark that focuses on evaluating AI Accelerators from a practical production perspective, including the ease of use and versatility of software and hardware. Byte MLPerf has the following characteristics: - Models and runtime environments are more closely aligned with practical business use cases. - For ASIC hardware evaluation, besides evaluate performance and accuracy, it also measure metrics like compiler usability and coverage. - Performance and accuracy results obtained from testing on the open Model Zoo serve as reference metrics for evaluating ASIC hardware integration.