ai-murder-mystery-hackathon

The game is afoot

Stars: 160

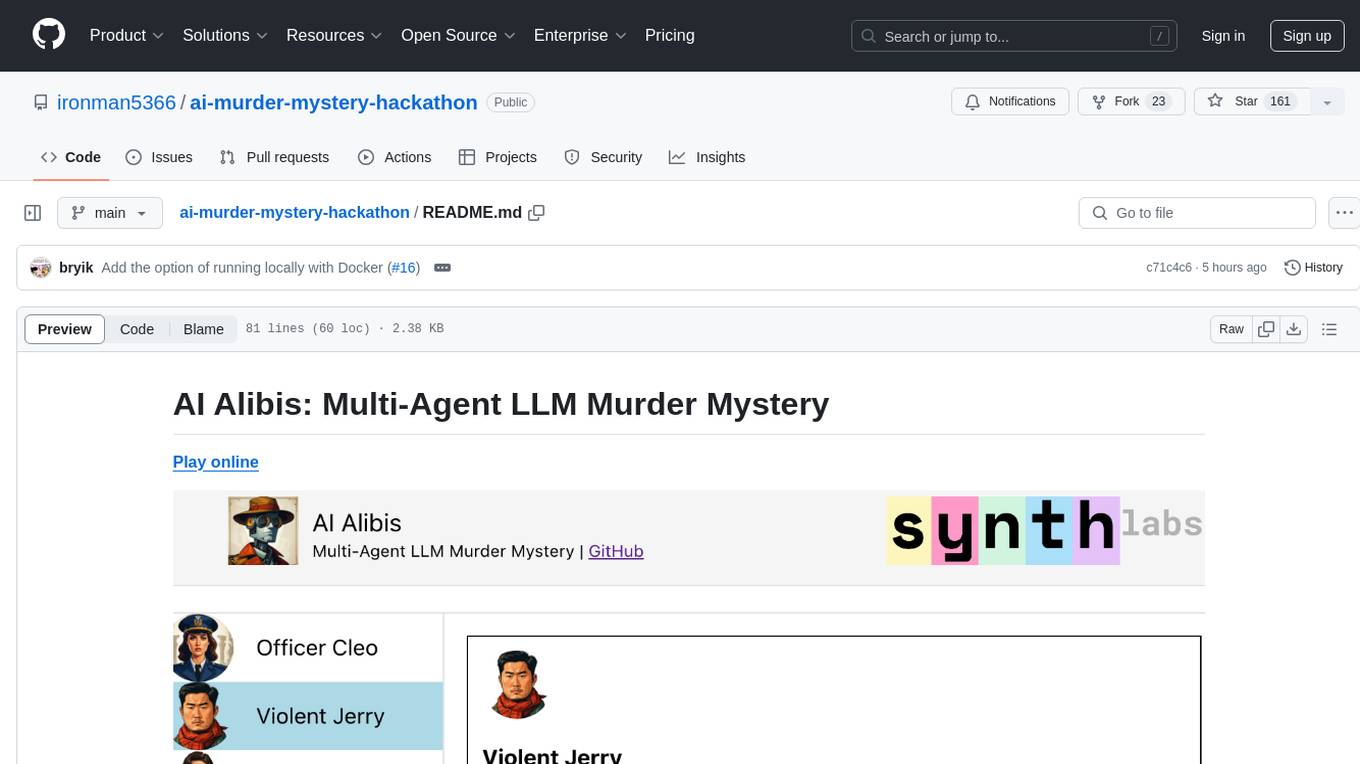

AI Alibis is a multi-agent murder mystery game that utilizes AI technology to create an interactive and engaging experience for players. Players can play the game online by following the setup instructions provided in the repository. The game involves solving a murder mystery by interacting with various characters and exploring the narrative. The repository includes code for the API, web interface, and database components required to run the game. Players can also explore the full murder story and learn about the prompting system used in the game. AI Alibis was created by Paul Scotti and Will Beddow.

README:

- Git clone the repo

git clone https://github.com/ironman5366/ai-murder-mystery-hackathon.git

cd ai-murder-mystery-hackathon

- Add your Anthropic API to api/.env file (optionally can export conversations to postgres with DB_CONN_URL="postgresql://link_to_db_conn")

nano api/.env

export ANTHROPIC_API_KEY="YOUR_API_KEY_HERE"

(<ctrl+x , y, enter> to save changes and exit nano)

- Install Node dependencies

web/npm i

- Start up the api

bash api_start.sh

- In separate terminal, start up the web interface

bash web_start.sh

- Play the game!

- Git clone the repo

git clone https://github.com/ironman5366/ai-murder-mystery-hackathon.git

cd ai-murder-mystery-hackathon

- Set environment variables:

export ANTHROPIC_API_KEY="YOUR_API_KEY_HERE"

- Open a terminal in the folder containing this README, then run:

docker compose up

This should start three containers (the database, Python API, and React frontend) and create a persistent volume for the database.

- Play the game at http://localhost:3000/

If you change any files (for example, changing the Anthropic model in /api/settings.py), then you will likely need to rebuild the images:

docker compose up --build

- To shut everything down, hit

CTRL-Cor click the stop button in the Docker GUI.

To clean up, use the Docker GUI to delete all containers then go to the "Volumes" tab to delete the associated database volume.

You can read the full murder story by checking out web/src/characters.json, which contains the full context provided to each character.

To see how our prompting system works, including our critique and revision approach, check out api/ai.py.

Twitter thread on the game: https://x.com/humanscotti/status/1810777932568399933

AI Alibis was created by Paul Scotti and Will Beddow.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-murder-mystery-hackathon

Similar Open Source Tools

ai-murder-mystery-hackathon

AI Alibis is a multi-agent murder mystery game that utilizes AI technology to create an interactive and engaging experience for players. Players can play the game online by following the setup instructions provided in the repository. The game involves solving a murder mystery by interacting with various characters and exploring the narrative. The repository includes code for the API, web interface, and database components required to run the game. Players can also explore the full murder story and learn about the prompting system used in the game. AI Alibis was created by Paul Scotti and Will Beddow.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

ai-town

AI Town is a virtual town where AI characters live, chat, and socialize. This project provides a deployable starter kit for building and customizing your own version of AI Town. It features a game engine, database, vector search, auth, text model, deployment, pixel art generation, background music generation, and local inference. You can customize your own simulation by creating characters and stories, updating spritesheets, changing the background, and modifying the background music.

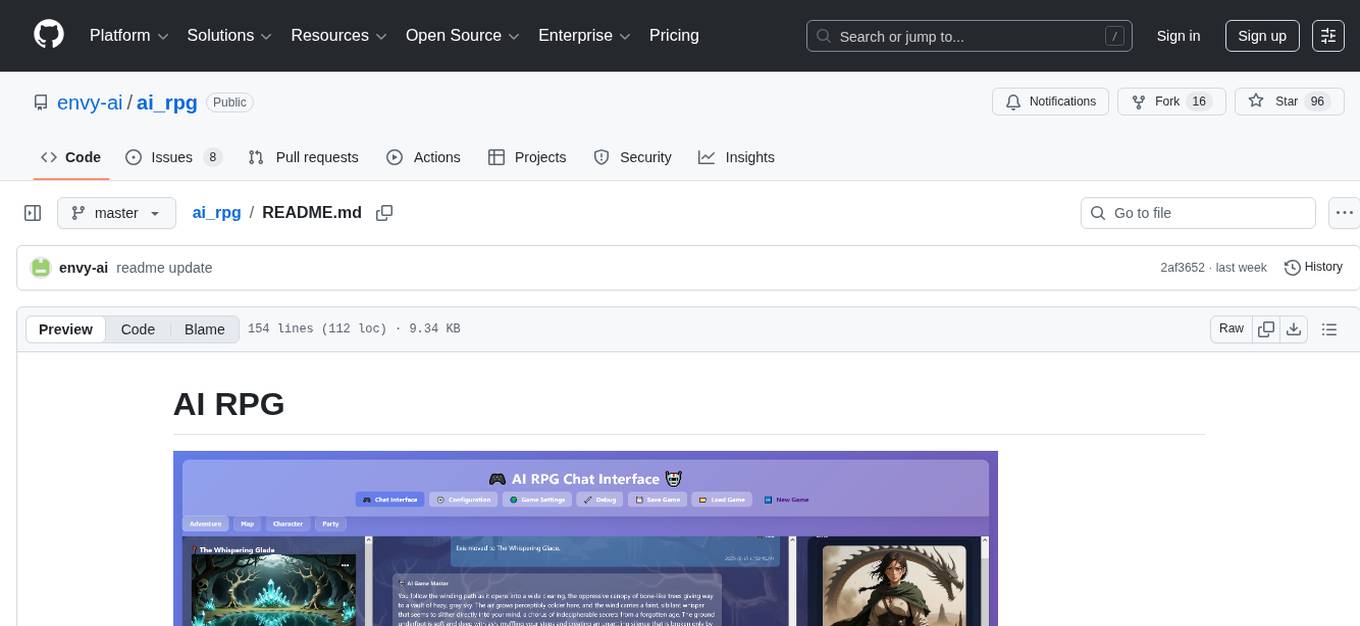

ai_rpg

AI RPG is a Node.js application that transforms an OpenAI-compatible language model into a solo tabletop game master. It tracks players, locations, regions, and items; renders structured prompts with Nunjucks; and supports ComfyUI image pipeline. The tool offers a rich region and location generation system, NPC and item tracking, party management, levels, classes, stats, skills, abilities, and more. It allows users to create their own world settings, offers detailed NPC memory system, skill checks with real RNG, modifiable needs system, and under-the-hood disposition system. The tool also provides event processing, context compression, image uploading, and support for loading SillyTavern lorebooks.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

python-sc2

python-sc2 is an easy-to-use library for writing AI Bots for StarCraft II in Python 3. It aims for simplicity and ease of use while providing both high and low level abstractions. The library covers only the raw scripted interface and intends to help new bot authors with added functions. Users can install the library using pip and need a StarCraft II executable to run bots. The API configuration options allow users to customize bot behavior and performance. The community provides support through Discord servers, and users can contribute to the project by creating new issues or pull requests following style guidelines.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

Kuebiko

Kuebiko is a Twitch Chat Bot that reads twitch chat and generates text-to-speech responses using Google Cloud API and OpenAI's GPT-3 text completion model. It allows users to set up their own VTuber AI similar to 'Neuro-Sama'. The project is built with Python and requires setting up various API keys and configurations to enable the bot functionality. Users can customize the voice of their VTuber and route audio using VBAudio Cable. Kuebiko provides a unique way to interact with viewers through chat responses and captions in OBS.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

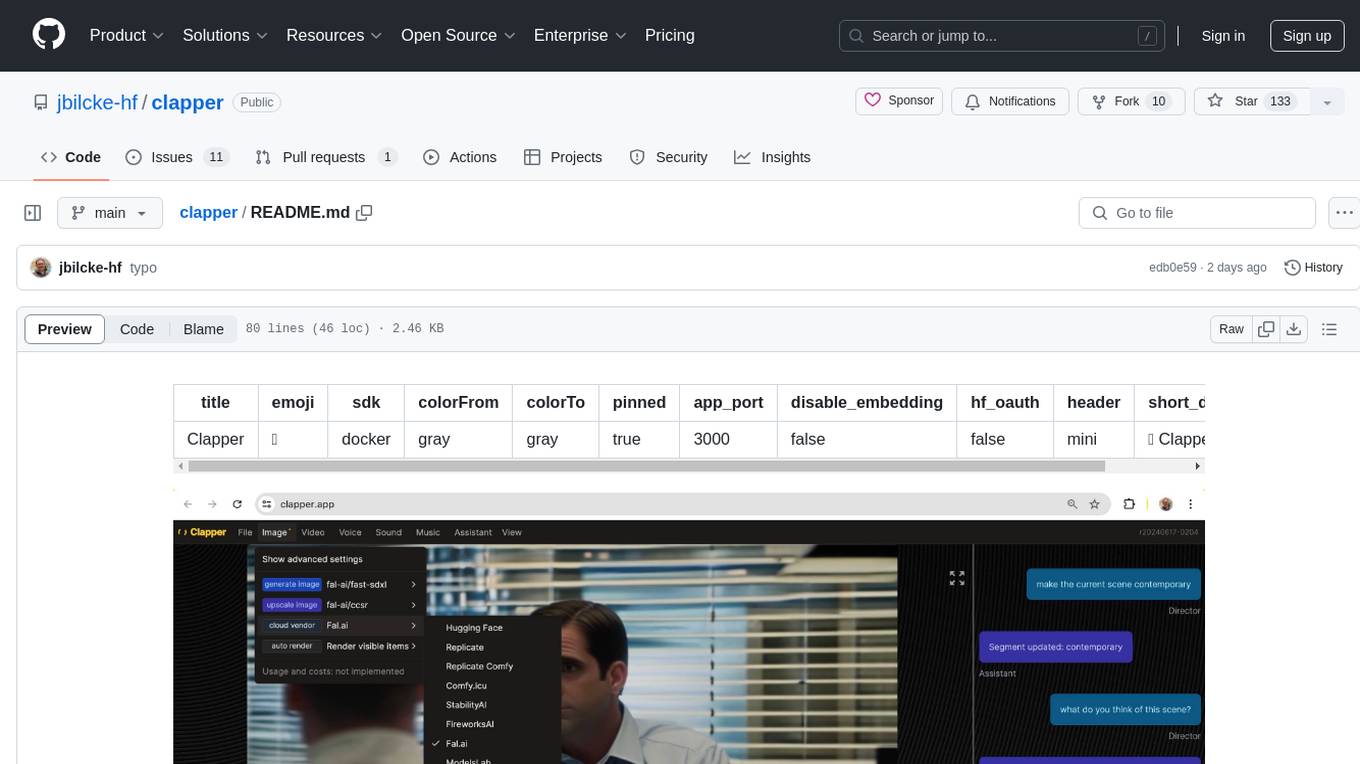

clapper

Clapper is an open-source AI story visualization tool that can interpret screenplays and render them into storyboards, videos, voice, sound, and music. It is currently in early development stages and not recommended for general use due to some non-functional features and lack of tutorials. A public alpha version is available on Hugging Face's platform. Users can sponsor specific features through bounties and developers can contribute to the project under the GPL v3 license. The tool lacks automated tests and code conventions like Prettier or a Linter.

gptauthor

GPT Author is a command-line tool designed to help users write long form, multi-chapter stories by providing a story prompt and generating a synopsis and subsequent chapters using ChatGPT. Users can review and make changes to the generated content before finalizing the story output in Markdown and HTML formats. The tool aims to unleash storytelling genius by combining human input with AI-generated content, offering a seamless writing experience for creating engaging narratives.

comfyui_LLM_party

COMFYUI LLM PARTY is a node library designed for LLM workflow development in ComfyUI, an extremely minimalist UI interface primarily used for AI drawing and SD model-based workflows. The project aims to provide a complete set of nodes for constructing LLM workflows, enabling users to easily integrate them into existing SD workflows. It features various functionalities such as API integration, local large model integration, RAG support, code interpreters, online queries, conditional statements, looping links for large models, persona mask attachment, and tool invocations for weather lookup, time lookup, knowledge base, code execution, web search, and single-page search. Users can rapidly develop web applications using API + Streamlit and utilize LLM as a tool node. Additionally, the project includes an omnipotent interpreter node that allows the large model to perform any task, with recommendations to use the 'show_text' node for display output.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

For similar tasks

ai-murder-mystery-hackathon

AI Alibis is a multi-agent murder mystery game that utilizes AI technology to create an interactive and engaging experience for players. Players can play the game online by following the setup instructions provided in the repository. The game involves solving a murder mystery by interacting with various characters and exploring the narrative. The repository includes code for the API, web interface, and database components required to run the game. Players can also explore the full murder story and learn about the prompting system used in the game. AI Alibis was created by Paul Scotti and Will Beddow.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.