wikipedia-semantic-search

Semantic Search on Wikipedia with Upstash Vector

Stars: 371

This repository showcases a project that indexes millions of Wikipedia articles using Upstash Vector. It includes a semantic search engine and a RAG chatbot SDK. The project involves preparing and embedding Wikipedia articles, indexing vectors, building a semantic search engine, and implementing a RAG chatbot. Key features include indexing over 144 million vectors, multilingual support, cross-lingual semantic search, and a RAG chatbot. Technologies used include Upstash Vector, Upstash Redis, Upstash RAG Chat SDK, SentenceTransformers, and Meta-Llama-3-8B-Instruct for LLM provider.

README:

This repository contains the code and documentation for our project on indexing millions of Wikipedia articles using Upstash Vector, as described in our blog post.

We've created a semantic search engine and Upstash RAG Chat SDK using Wikipedia data to demonstrate the capabilities of Upstash Vector and RAG Chat SDK. The project involves:

- Preparing and embedding Wikipedia articles

- Indexing the vectors using Upstash Vector

- Building a Wikipedia semantic search engine

- Implementing a RAG chatbot

- Indexed over 144 million vectors from Wikipedia articles in 11 languages

- Used BGE-M3 embedding model for multilingual support

- Implemented semantic search with cross-lingual capabilities

- Created a RAG chatbot using Upstash RAG Chat SDK

- Upstash Vector: For storing and querying vector embeddings

- Upstash Redis: For storing chat sessions

- Upstash RAG Chat SDK: For building the RAG Chat application

- SentenceTransformers: For generating embeddings

- Meta-Llama-3-8B-Instruct: As the LLM provider through QStash LLM APIs

To run the project locally, follow these steps:

- Go to Upstash Console to manage your databases:

- Create a new Vector database with embedding model support. You can choose the BGE-M3 model for multilingual support.

- Create a new Redis database for storing chat sessions.

- Copy the credentials for both Redis and Vector. Also copy the QStash credentials for using the upstash hosted LLM models.

Put the credentials in a .env file in the root of the project. Your .env file should look like this:

UPSTASH_VECTOR_REST_URL=

UPSTASH_VECTOR_REST_TOKEN=

UPSTASH_REDIS_REST_TOKEN=

UPSTASH_REDIS_REST_URL=

QSTASH_TOKEN=- Populate your Vector index.

This project uses namespaces to store articles in different languages. So you have to upsert the vectors in the correct namespace. For english, upsert your vectors into the

ennamespace.

- Install the dependencies:

pnpm install- Run the development server:

pnpm devWe welcome contributions to improve this project. Please feel free to submit issues or pull requests.

- Wikipedia for providing the dataset

- Upstash for their vector database and RAG Chat SDK

- All contributors to the open-source libraries used in this project

For any questions or feedback about the project or Upstash Vector, please reach out to us at (add contact information).

Check out our live demo to see the project in action!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for wikipedia-semantic-search

Similar Open Source Tools

wikipedia-semantic-search

This repository showcases a project that indexes millions of Wikipedia articles using Upstash Vector. It includes a semantic search engine and a RAG chatbot SDK. The project involves preparing and embedding Wikipedia articles, indexing vectors, building a semantic search engine, and implementing a RAG chatbot. Key features include indexing over 144 million vectors, multilingual support, cross-lingual semantic search, and a RAG chatbot. Technologies used include Upstash Vector, Upstash Redis, Upstash RAG Chat SDK, SentenceTransformers, and Meta-Llama-3-8B-Instruct for LLM provider.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

multimodal-chat

Yet Another Chatbot is a sophisticated multimodal chat interface powered by advanced AI models and equipped with a variety of tools. This chatbot can search and browse the web in real-time, query Wikipedia for information, perform news and map searches, execute Python code, compose long-form articles mixing text and images, generate, search, and compare images, analyze documents and images, search and download arXiv papers, save conversations as text and audio files, manage checklists, and track personal improvements. It offers tools for web interaction, Wikipedia search, Python scripting, content management, image handling, arXiv integration, conversation generation, file management, personal improvement, and checklist management.

semantic-kernel-java

Semantic Kernel for Java is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. It allows defining plugins that can be chained together in just a few lines of code. The tool automatically orchestrates plugins with AI, enabling users to generate plans to achieve unique goals and execute them. The project welcomes contributions, bug reports, and suggestions from the community.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

easy-web-summarizer

A Python script leveraging advanced language models to summarize webpages and youtube videos directly from URLs. It integrates with LangChain and ChatOllama for state-of-the-art summarization, providing detailed summaries for quick understanding of web-based documents. The tool offers a command-line interface for easy use and integration into workflows, with plans to add support for translating to different languages and streaming text output on gradio. It can also be used via a web UI using the gradio app. The script is dockerized for easy deployment and is open for contributions to enhance functionality and capabilities.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

SQL-AI-samples

This repository contains samples to help design AI applications using data from an Azure SQL Database. It showcases technical concepts and workflows integrating Azure SQL data with popular AI components both within and outside Azure. The samples cover various AI features such as Azure Cognitive Services, Promptflow, OpenAI, Vanna.AI, Content Moderation, LangChain, and more. Additionally, there are end-to-end samples like Similar Content Finder, Session Conference Assistant, Chatbots, Vectorization, SQL Server Database Development, Redis Vector Search, and Similarity Search with FAISS.

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

aws-bedrock-with-rag-and-react

This solution provides a low-code ReactJS application to prototype and vet business use cases for GenAI using Retrieval Augmented Generation (RAG). It includes a backend Flask application that uses LangChain to provide PDF data as embeddings to a text-gen model via Amazon Bedrock and a vector database with FAISS or Kendra Index. The solution utilizes Amazon Bedrock as the only cost-generating AWS service.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to: * Allow business users to get insights from the data warehouse without going through a data analyst * Enable Q+A from your production DBs inside your SaaS application * Create a ChatGPT plug-in from your proprietary data

csghub-server

CSGHub Server is a part of the open source and reliable large model assets management platform - CSGHub. It focuses on management of models, datasets, and other LLM assets through REST API. Key features include creation and management of users and organizations, auto-tagging of model and dataset labels, search functionality, online preview of dataset files, content moderation for text and image, download of individual files, tracking of model and dataset activity data. The tool is extensible and customizable, supporting different git servers, flexible LFS storage system configuration, and content moderation options. The roadmap includes support for more Git servers, Git LFS, dataset online viewer, model/dataset auto-tag, S3 protocol support, model format conversion, and model one-click deploy. The project is licensed under Apache 2.0 and welcomes contributions.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

SmallLanguageModel-project

This repository provides all the necessary items to build a Language Model from scratch, inspired by Karpathy's nanoGPT and Shakespeare generator. It includes data collection tools, data processing scripts, various models like BERT, GPT, and Seq-2-Seq, along with tokenizer and training files.

For similar tasks

kumo-search

Kumo search is an end-to-end search engine framework that supports full-text search, inverted index, forward index, sorting, caching, hierarchical indexing, intervention system, feature collection, offline computation, storage system, and more. It runs on the EA (Elastic automic infrastructure architecture) platform, enabling engineering automation, service governance, real-time data, service degradation, and disaster recovery across multiple data centers and clusters. The framework aims to provide a ready-to-use search engine framework to help users quickly build their own search engines. Users can write business logic in Python using the AOT compiler in the project, which generates C++ code and binary dynamic libraries for rapid iteration of the search engine.

search_with_lepton

Build your own conversational search engine using less than 500 lines of code. Features built-in support for LLM, search engine, customizable UI interface, and shareable cached search results. Setup includes Bing and Google search engines. Utilize LLM and KV functions with Lepton for seamless integration. Easily deploy to Lepton AI or your own environment with one-click deployment options.

wikipedia-semantic-search

This repository showcases a project that indexes millions of Wikipedia articles using Upstash Vector. It includes a semantic search engine and a RAG chatbot SDK. The project involves preparing and embedding Wikipedia articles, indexing vectors, building a semantic search engine, and implementing a RAG chatbot. Key features include indexing over 144 million vectors, multilingual support, cross-lingual semantic search, and a RAG chatbot. Technologies used include Upstash Vector, Upstash Redis, Upstash RAG Chat SDK, SentenceTransformers, and Meta-Llama-3-8B-Instruct for LLM provider.

blockoli

Blockoli is a high-performance tool for code indexing, embedding generation, and semantic search tool for use with LLMs. It is built in Rust and uses the ASTerisk crate for semantic code parsing. Blockoli allows you to efficiently index, store, and search code blocks and their embeddings using vector similarity. Key features include indexing code blocks from a codebase, generating vector embeddings for code blocks using a pre-trained model, storing code blocks and their embeddings in a SQLite database, performing efficient similarity search on code blocks using vector embeddings, providing a REST API for easy integration with other tools and platforms, and being fast and memory-efficient due to its implementation in Rust.

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

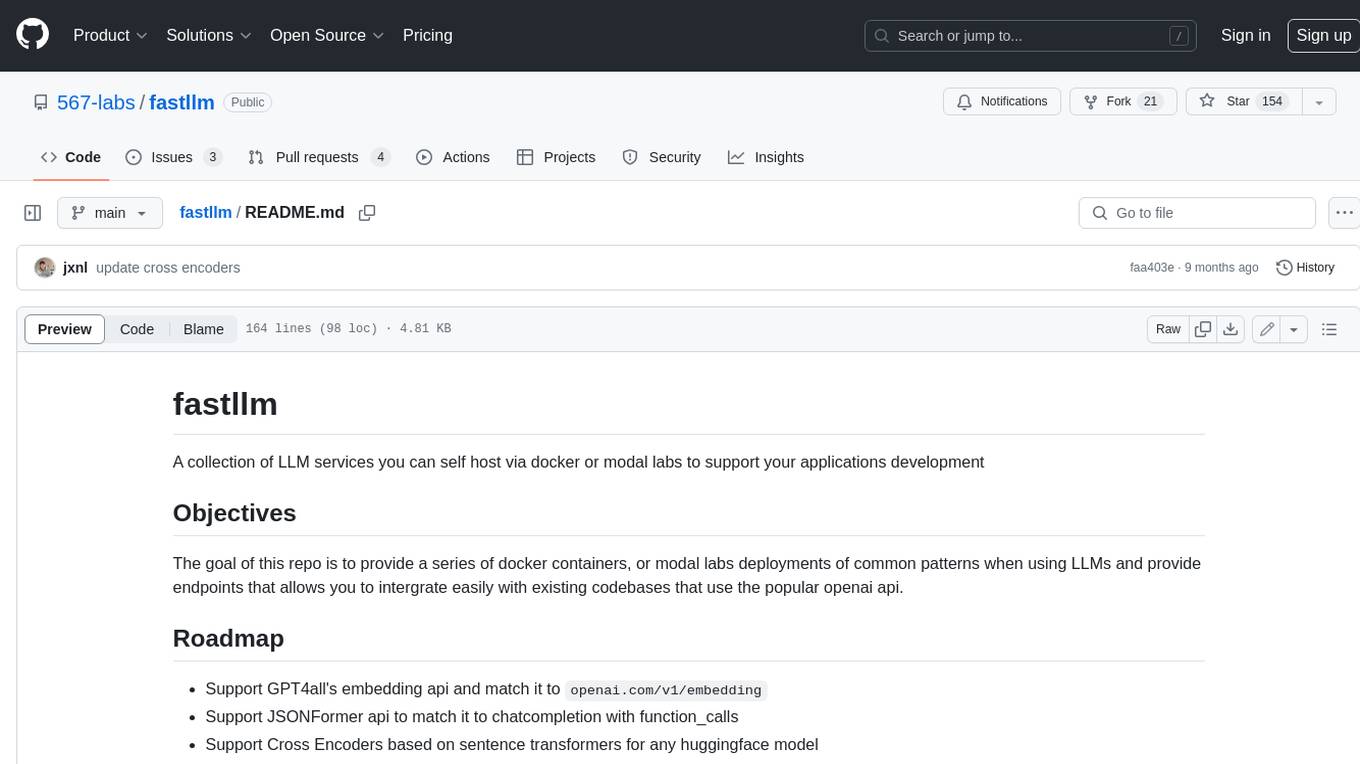

fastllm

A collection of LLM services you can self host via docker or modal labs to support your applications development. The goal is to provide docker containers or modal labs deployments of common patterns when using LLMs and endpoints to integrate easily with existing codebases using the openai api. It supports GPT4all's embedding api, JSONFormer api for chat completion, Cross Encoders based on sentence transformers, and provides documentation using MkDocs.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.