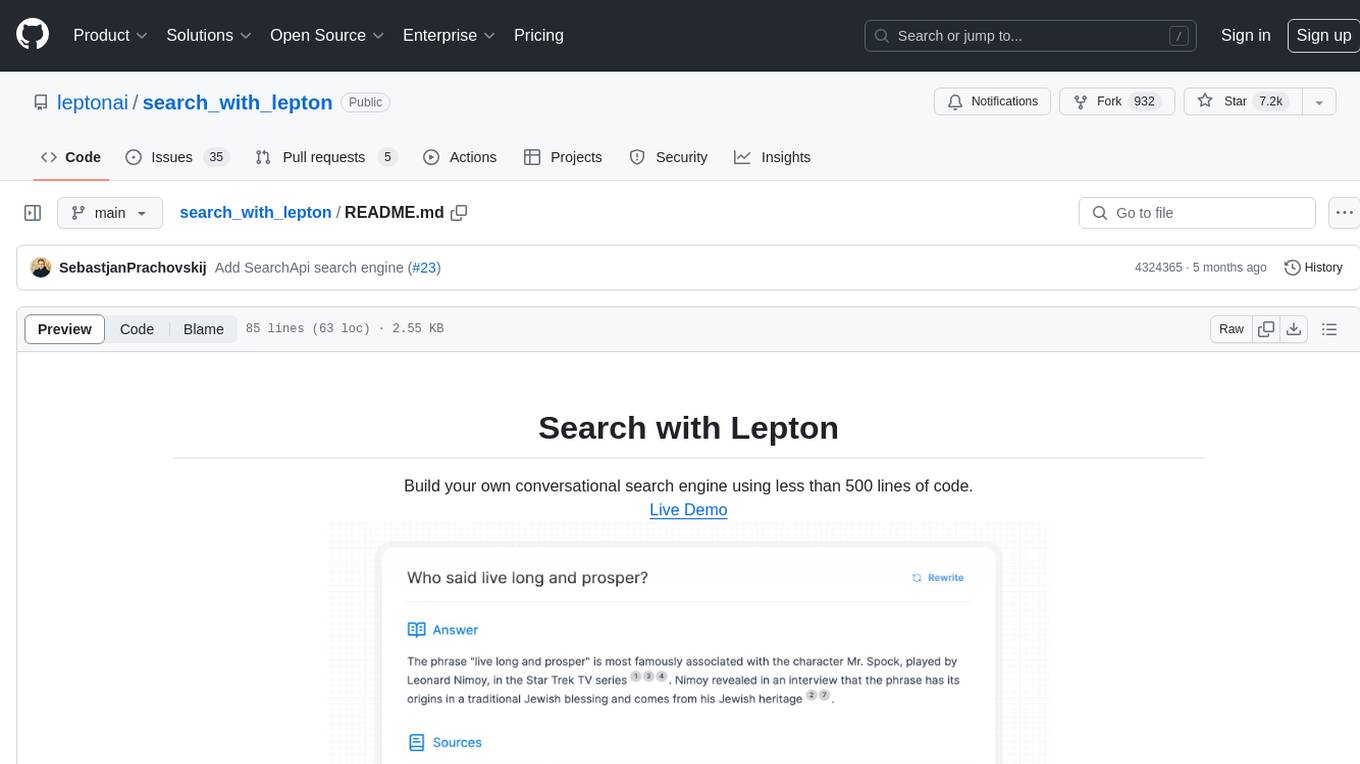

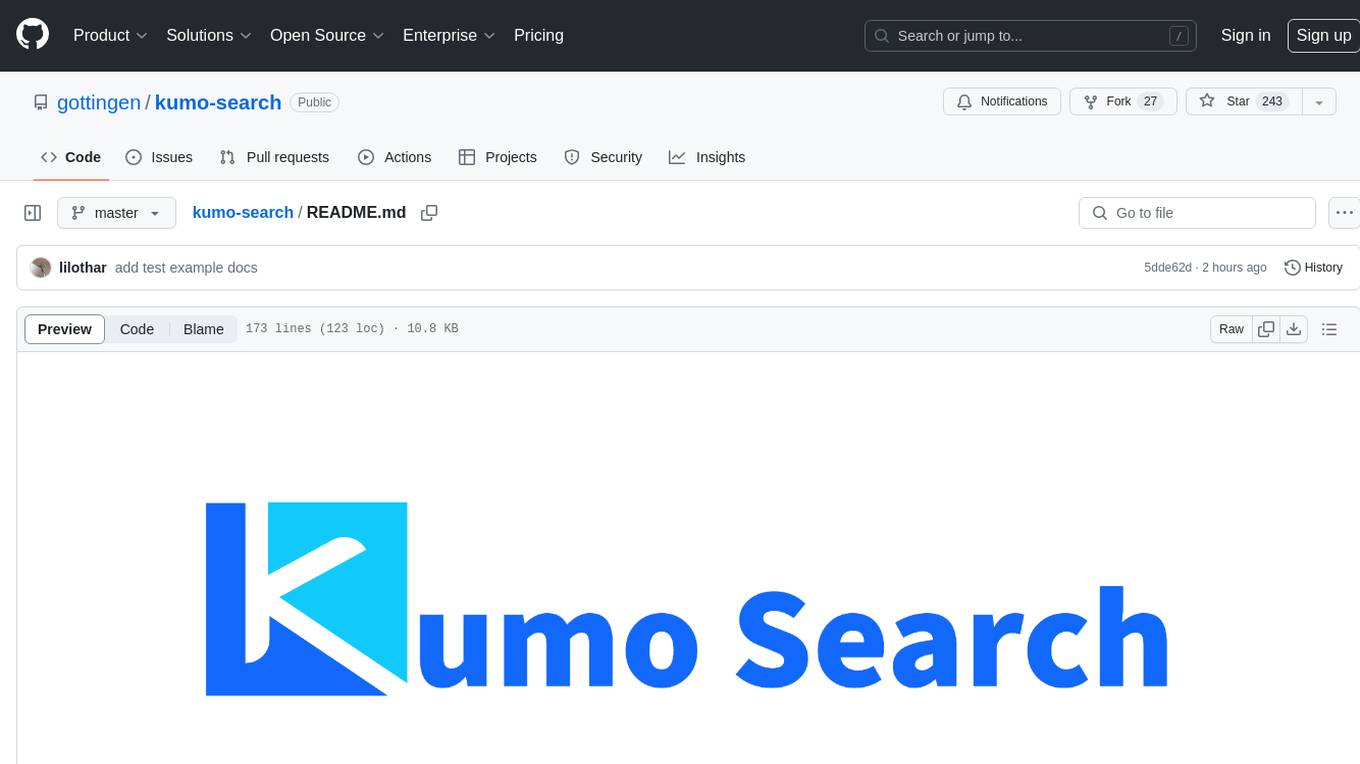

search_with_lepton

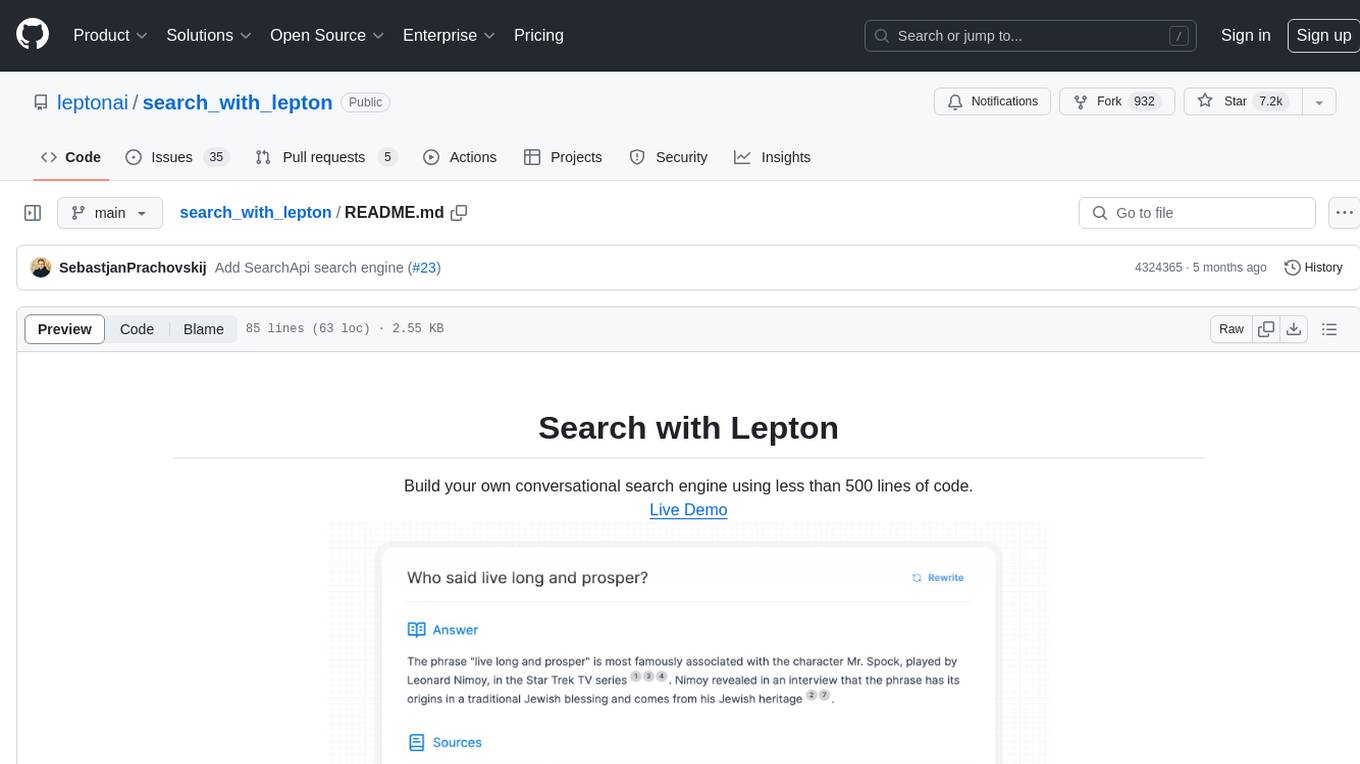

Building a quick conversation-based search demo with Lepton AI.

Stars: 7686

Build your own conversational search engine using less than 500 lines of code. Features built-in support for LLM, search engine, customizable UI interface, and shareable cached search results. Setup includes Bing and Google search engines. Utilize LLM and KV functions with Lepton for seamless integration. Easily deploy to Lepton AI or your own environment with one-click deployment options.

README:

- Built-in support for LLM

- Built-in support for search engine

- Customizable pretty UI interface

- Shareable, cached search results

There are two default supported search engines: Bing and Google.

To use the Bing Web Search API, please visit this link to obtain your Bing subscription key.

You have three options for Google Search: you can use the SearchApi Google Search API from SearchApi, Serper Google Search API from Serper, or opt for the Programmable Search Engine provided by Google.

[!NOTE] We recommend using the built-in llm and kv functions with Lepton. Running the following commands to set up them automatically.

pip install -U leptonai openai && lep loginYou can copy your workspace toke from the Lepton AI Dashboard → Settings → Tokens.

- Set Bing subscription key

export BING_SEARCH_V7_SUBSCRIPTION_KEY=YOUR_BING_SUBSCRIPTION_KEY- Set Lepton AI workspace token

export LEPTON_WORKSPACE_TOKEN=YOUR_LEPTON_WORKSPACE_TOKEN- Build web

cd web && npm install && npm run build- Run server

BACKEND=BING python search_with_lepton.pyFor Google Search using SearchApi:

export SEARCHAPI_API_KEY=YOUR_SEARCHAPI_API_KEY

BACKEND=SEARCHAPI python search_with_lepton.pyFor Google Search using Serper:

export SERPER_SEARCH_API_KEY=YOUR_SERPER_API_KEY

BACKEND=SERPER python search_with_lepton.pyFor Google Search using Programmable Search Engine:

export GOOGLE_SEARCH_API_KEY=YOUR_GOOGLE_SEARCH_API_KEY

export GOOGLE_SEARCH_CX=YOUR_GOOGLE_SEARCH_ENGINE_ID

BACKEND=GOOGLE python search_with_lepton.pyYou can deploy this to Lepton AI with one click:

You can also deploy your own version via

lep photon run -n search-with-lepton-modified -m search_with_lepton.py --env BACKEND=BING --env BING_SEARCH_V7_SUBSCRIPTION_KEY=YOUR_BING_SUBSCRIPTION_KEYLearn more about lep photon here.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for search_with_lepton

Similar Open Source Tools

search_with_lepton

Build your own conversational search engine using less than 500 lines of code. Features built-in support for LLM, search engine, customizable UI interface, and shareable cached search results. Setup includes Bing and Google search engines. Utilize LLM and KV functions with Lepton for seamless integration. Easily deploy to Lepton AI or your own environment with one-click deployment options.

docetl

DocETL is a tool for creating and executing data processing pipelines, especially suited for complex document processing tasks. It offers a low-code, declarative YAML interface to define LLM-powered operations on complex data. Ideal for maximizing correctness and output quality for semantic processing on a collection of data, representing complex tasks via map-reduce, maximizing LLM accuracy, handling long documents, and automating task retries based on validation criteria.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

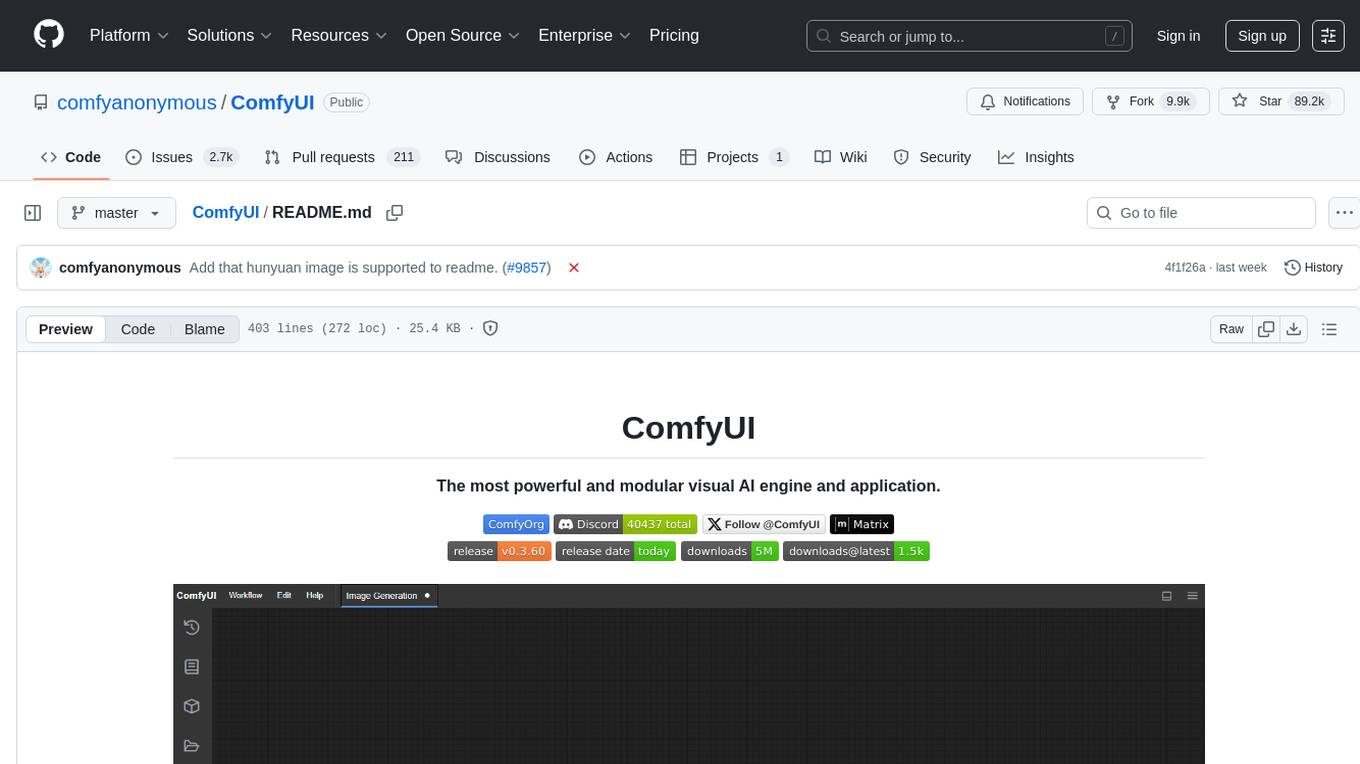

ComfyUI

ComfyUI is a powerful and modular visual AI engine and application that allows users to design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart based interface. It provides a user-friendly environment for creating complex Stable Diffusion workflows without the need for coding. ComfyUI supports various models for image editing, video processing, audio manipulation, 3D modeling, and more. It offers features like smart memory management, support for different GPU types, loading and saving workflows as JSON files, and offline functionality. Users can also use API nodes to access paid models from external providers through the online Comfy API.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

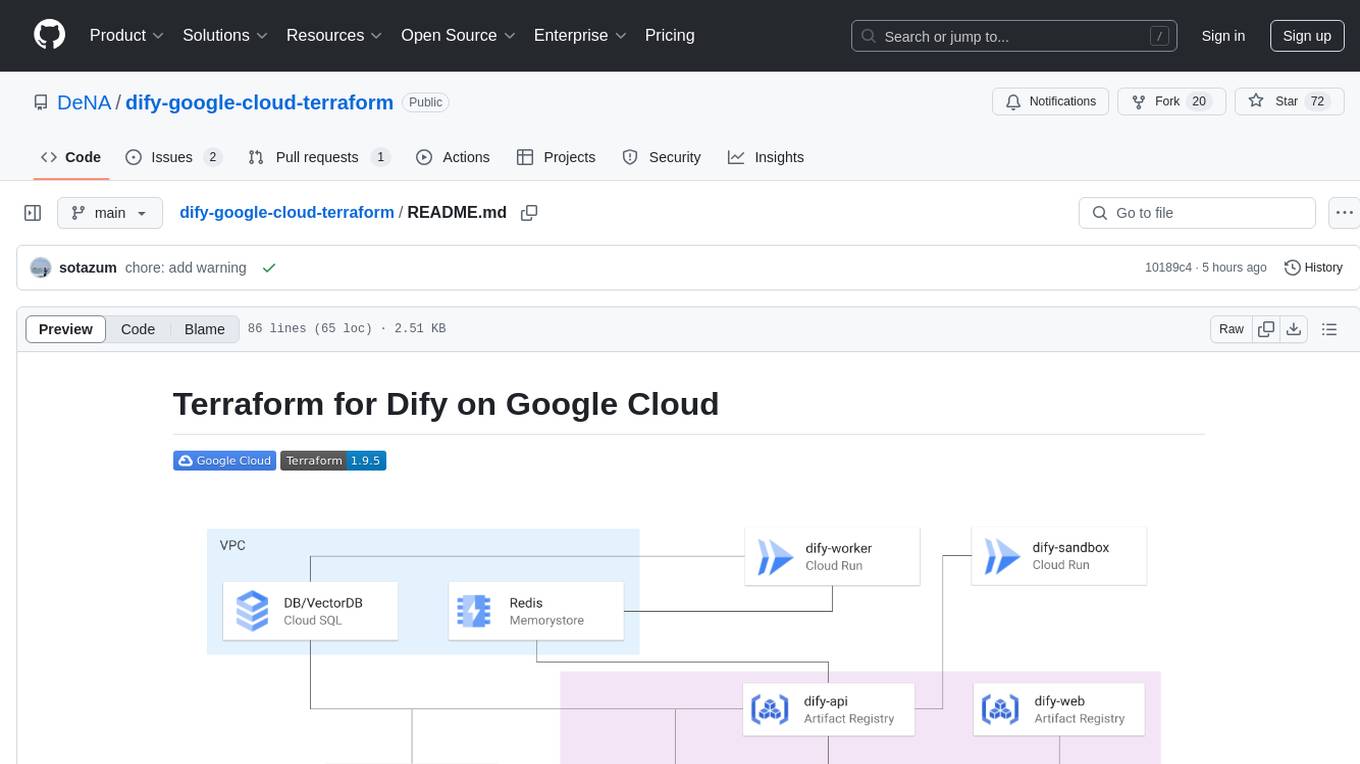

dify-google-cloud-terraform

This repository provides Terraform configurations to automatically set up Google Cloud resources and deploy Dify in a highly available configuration. It includes features such as serverless hosting, auto-scaling, and data persistence. Users need a Google Cloud account, Terraform, and gcloud CLI installed to use this tool. The configuration involves setting environment-specific values and creating a GCS bucket for managing Terraform state. The tool allows users to initialize Terraform, create Artifact Registry repository, build and push container images, plan and apply Terraform changes, and cleanup resources when needed.

atidraw

Atidraw is a web application that allows users to create, enhance, and share drawings using Cloudflare R2 and Cloudflare AI. It features intuitive drawing with signature_pad, AI-powered enhancements such as alt text generation and image generation with Stable Diffusion, global storage on Cloudflare R2, flexible authentication options, and high-performance server-side rendering on Cloudflare Pages. Users can deploy Atidraw with zero configuration on their Cloudflare account using NuxtHub.

chat-ui

This repository provides a minimalist approach to create a chatbot by constructing the entire front-end UI using a single HTML file. It supports various backend endpoints through custom configurations, multiple response formats, chat history download, and MCP. Users can deploy the chatbot locally, via Docker, Cloudflare pages, Huggingface, or within K8s. The tool also supports image inputs, toggling between different display formats, internationalization, and localization.

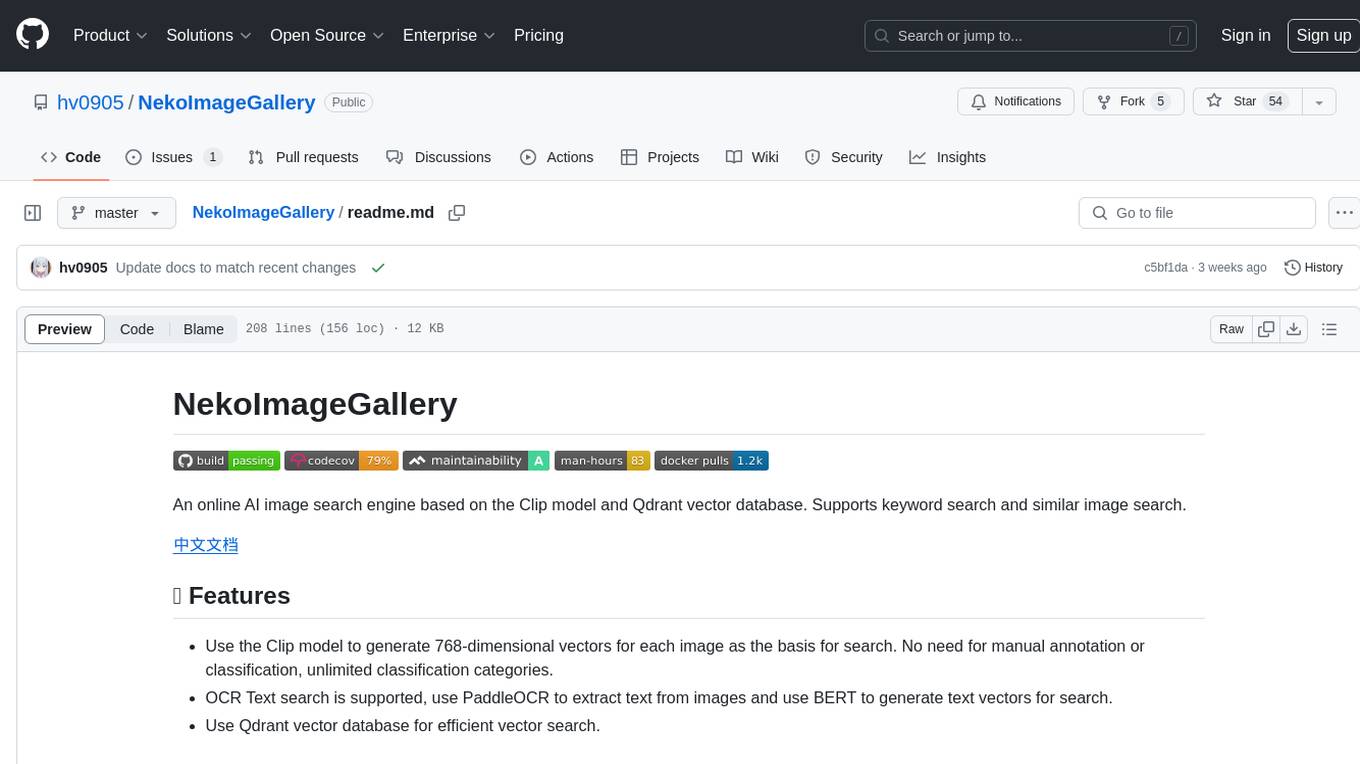

NekoImageGallery

NekoImageGallery is an online AI image search engine that utilizes the Clip model and Qdrant vector database. It supports keyword search and similar image search. The tool generates 768-dimensional vectors for each image using the Clip model, supports OCR text search using PaddleOCR, and efficiently searches vectors using the Qdrant vector database. Users can deploy the tool locally or via Docker, with options for metadata storage using Qdrant database or local file storage. The tool provides API documentation through FastAPI's built-in Swagger UI and can be used for tasks like image search, text extraction, and vector search.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

composio

Composio is a production-ready toolset for AI agents that enables users to integrate AI agents with various agentic tools effortlessly. It provides support for over 100 tools across different categories, including popular softwares like GitHub, Notion, Linear, Gmail, Slack, and more. Composio ensures managed authorization with support for six different authentication protocols, offering better agentic accuracy and ease of use. Users can easily extend Composio with additional tools, frameworks, and authorization protocols. The toolset is designed to be embeddable and pluggable, allowing for seamless integration and consistent user experience.

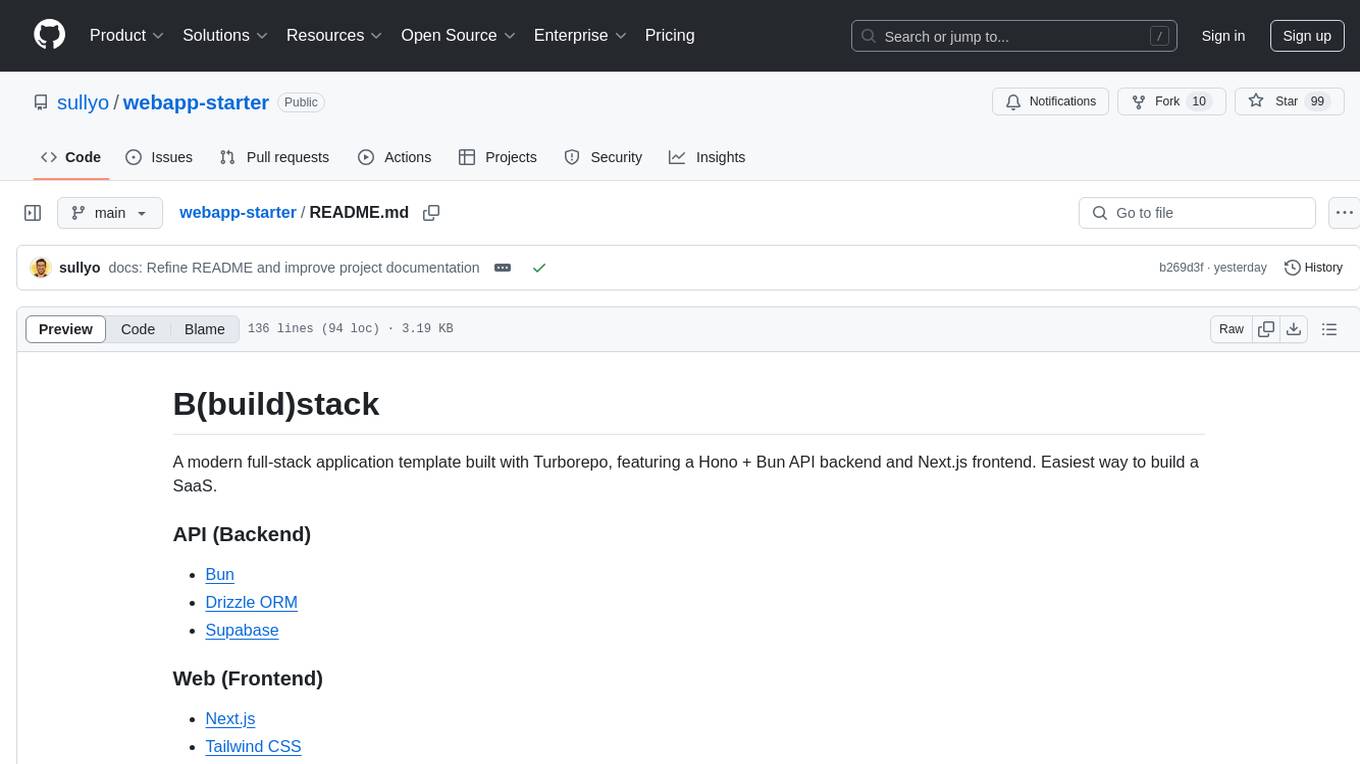

webapp-starter

webapp-starter is a modern full-stack application template built with Turborepo, featuring a Hono + Bun API backend and Next.js frontend. It provides an easy way to build a SaaS product. The backend utilizes technologies like Bun, Drizzle ORM, and Supabase, while the frontend is built with Next.js, Tailwind CSS, Shadcn/ui, and Clerk. Deployment can be done using Vercel and Render. The project structure includes separate directories for API backend and Next.js frontend, along with shared packages for the main database. Setup involves installing dependencies, configuring environment variables, and setting up services like Bun, Supabase, and Clerk. Development can be done using 'turbo dev' command, and deployment instructions are provided for Vercel and Render. Contributions are welcome through pull requests.

batteries-included

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

orama-core

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced AI capabilities in projects.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

For similar tasks

kumo-search

Kumo search is an end-to-end search engine framework that supports full-text search, inverted index, forward index, sorting, caching, hierarchical indexing, intervention system, feature collection, offline computation, storage system, and more. It runs on the EA (Elastic automic infrastructure architecture) platform, enabling engineering automation, service governance, real-time data, service degradation, and disaster recovery across multiple data centers and clusters. The framework aims to provide a ready-to-use search engine framework to help users quickly build their own search engines. Users can write business logic in Python using the AOT compiler in the project, which generates C++ code and binary dynamic libraries for rapid iteration of the search engine.

search_with_lepton

Build your own conversational search engine using less than 500 lines of code. Features built-in support for LLM, search engine, customizable UI interface, and shareable cached search results. Setup includes Bing and Google search engines. Utilize LLM and KV functions with Lepton for seamless integration. Easily deploy to Lepton AI or your own environment with one-click deployment options.

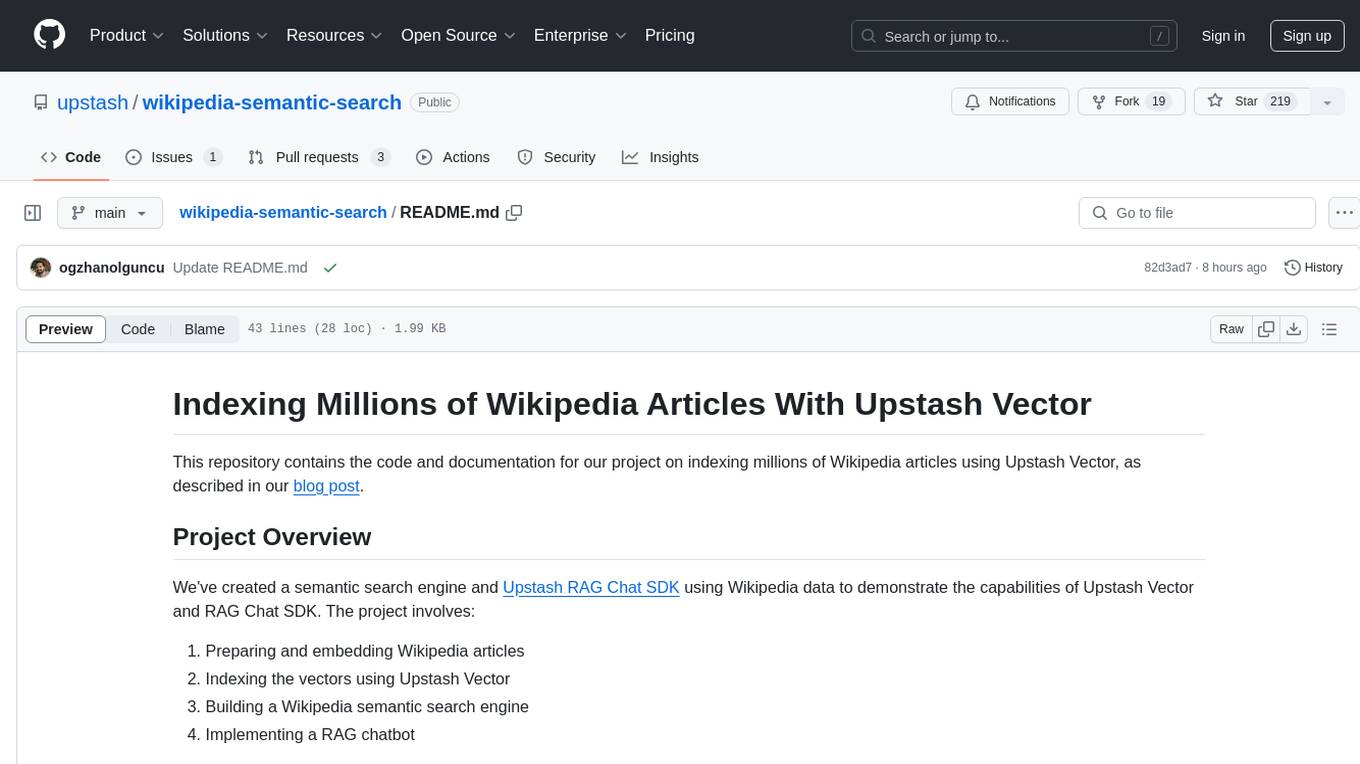

wikipedia-semantic-search

This repository showcases a project that indexes millions of Wikipedia articles using Upstash Vector. It includes a semantic search engine and a RAG chatbot SDK. The project involves preparing and embedding Wikipedia articles, indexing vectors, building a semantic search engine, and implementing a RAG chatbot. Key features include indexing over 144 million vectors, multilingual support, cross-lingual semantic search, and a RAG chatbot. Technologies used include Upstash Vector, Upstash Redis, Upstash RAG Chat SDK, SentenceTransformers, and Meta-Llama-3-8B-Instruct for LLM provider.

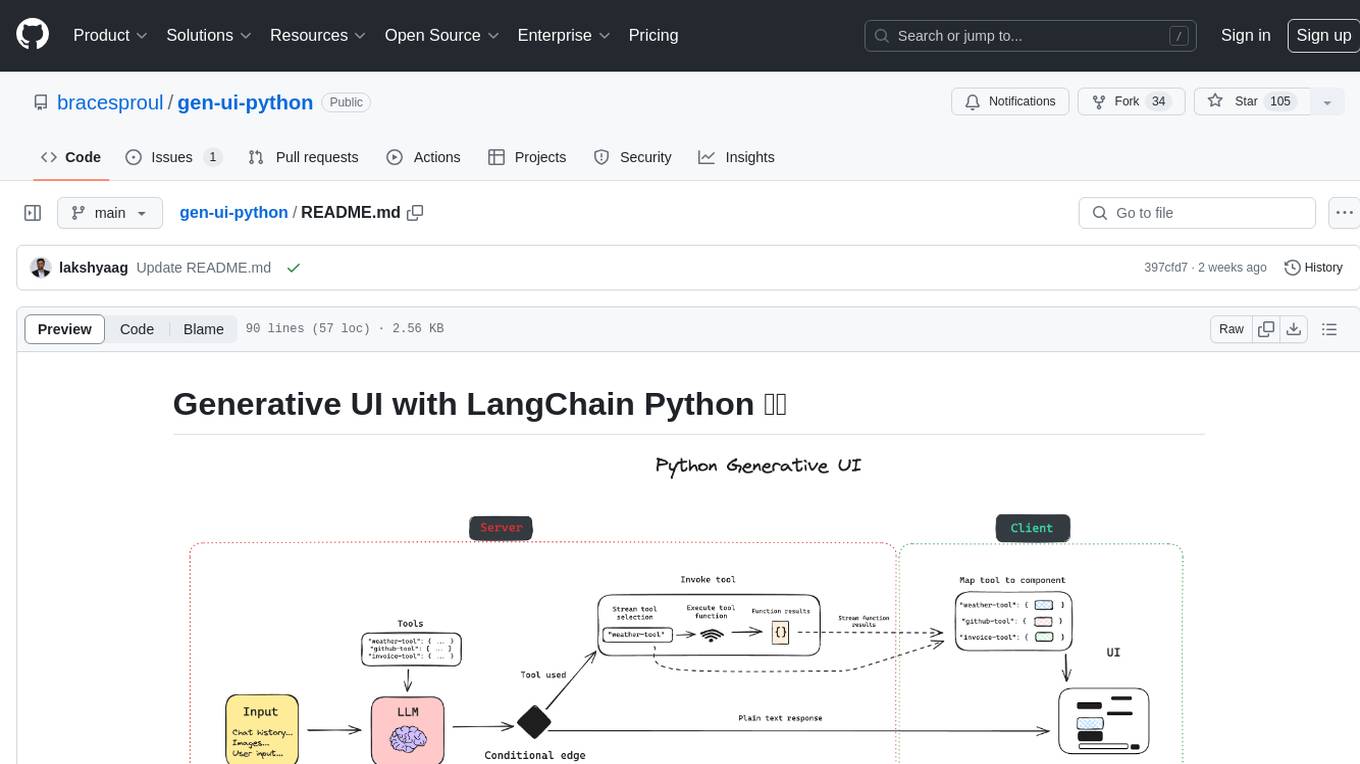

gen-ui-python

This application provides a template for building generative UI applications with LangChain Python. It includes pre-built UI components using Shadcn. Users can play around with gen ui features and customize the UI. The application requires setting environment variables for LangSmith keys, OpenAI API key, GitHub PAT, and Geocode API key. Users can further develop the application by generating React components, building custom components with LLM and Shadcn, using multiple tools and components, updating LangGraph agent, and rendering UI dynamically in different areas on the screen.

morgana-form

MorGana Form is a full-stack form builder project developed using Next.js, React, TypeScript, Ant Design, PostgreSQL, and other technologies. It allows users to quickly create and collect data through survey forms. The project structure includes components, hooks, utilities, pages, constants, Redux store, themes, types, server-side code, and component packages. Environment variables are required for database settings, NextAuth login configuration, and file upload services. Additionally, the project integrates an AI model for form generation using the Ali Qianwen model API.

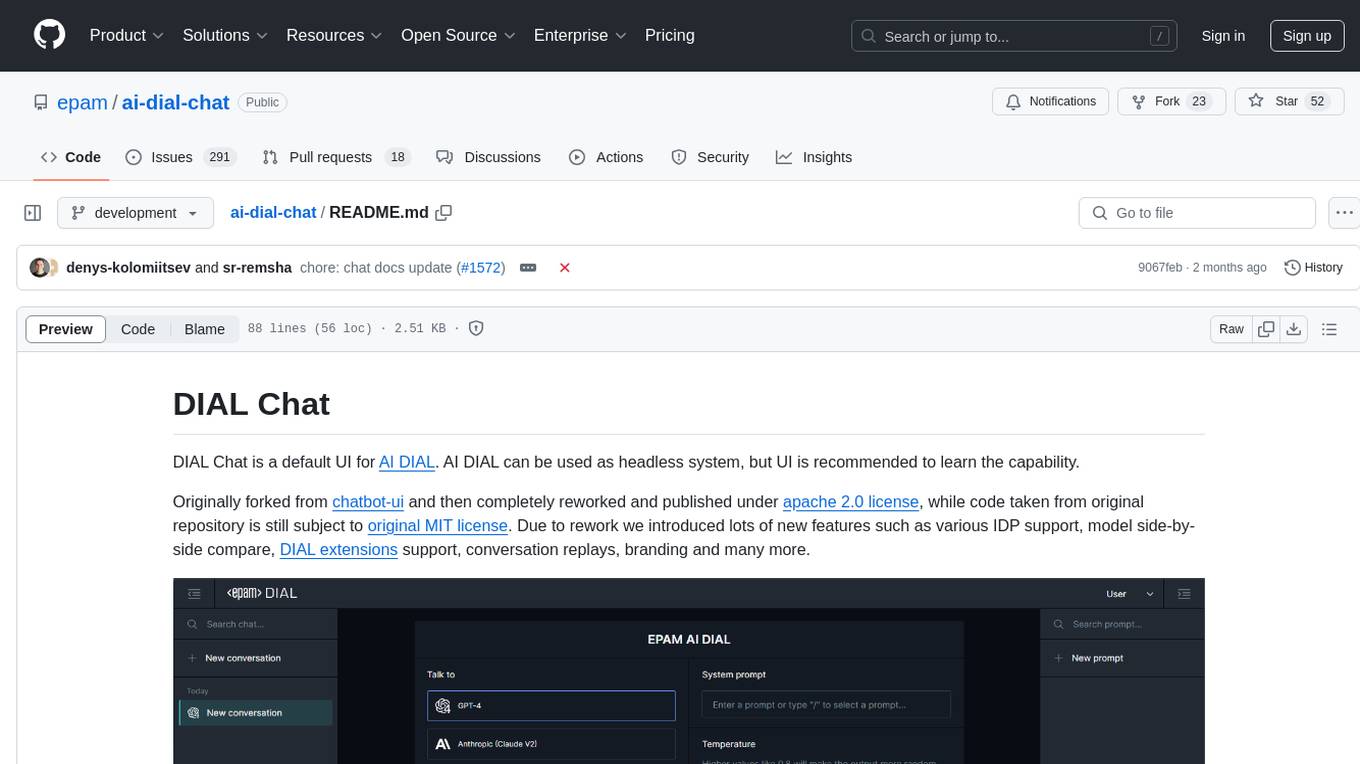

ai-dial-chat

DIAL Chat is a default UI for AI DIAL, recommended for learning the capability of the headless system. It offers various features like IDP support, model comparison, DIAL extensions, conversation replays, and branding. Managed as a monorepo by NX tools, it provides documentation for DIAL Chat, Theming, Overlay, and Visualizer Connector. Users can find a user guide for the AI DIAL Chat application in the AI DIAL repository.

AmigaGPT

AmigaGPT is a versatile ChatGPT client for AmigaOS 3.x, 4.1, and MorphOS. It brings the capabilities of OpenAI’s GPT to Amiga systems, enabling text generation, question answering, and creative exploration. AmigaGPT can generate images using DALL-E, supports speech output, and seamlessly integrates with AmigaOS. Users can customize the UI, choose fonts and colors, and enjoy a native user experience. The tool requires specific system requirements and offers features like state-of-the-art language models, AI image generation, speech capability, and UI customization.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.