vnve

🎬 VNVE (Visual Novel Video Editor) Make visual novel videos in browser 在浏览器中快速制作视觉小说视频,利用AI一键生成!

Stars: 142

VNVE is a Visual Novel Video Editor that allows users to create visual novel videos in their browser with AI-powered rapid creation. It offers a low-cost production solution for converting textual content into videos, creating interactive videos for gaming experiences, and making video teasers for novels and short video dramas. The tool is a pure front-end Typescript implementation powered by PixiJS + WebCodecs, and users can also create videos programmatically using the npm package. VNVE is tailored specifically for visual novels, focusing on text content and simplifying the video creation process for users.

README:

English | 简体中文

Visual Novel Video Editor

Make Visual Novel Videos in Your Browser 🔗

- 🔥 AI-powered rapid creation, enabling the generation of visual novel videos with just one click through the integration of APIs such as DeepSeek and OpenAI. 🆕

- 🎬 An online video editor customized for creating visual novels, open your browser and start creating!

- 👋 Say goodbye to complicated video editing software and create visual novel videos easily and quickly!

- 📝 Text First, Let's Return to the Core of Visual Novel Creation — Text Content.

- 🚀 Pure front-end Typescript implementation, Powered by PixiJS + WebCodecs.

- 🖍️ You can also create videos programmatically by using the npm package

👻 Positioning is just a video creation tool tailored for visual novels, if you want to create branching logic, numerical values and other more game-like behavior, you can go to use bilibili interactive video

Video title scene |

Character dialog scenes |

- 🪄 Low-cost production of visual novel videos, quickly converting textual content into video.

- 🧩 With interactive videos on Bilibili, it is possible to achieve a gaming experience similar to that of a GalGame.

- 🎬 Creating video teasers for novels, short video dramas.

- ...

visit: vnve.net, start creating video immediately.

https://github.com/user-attachments/assets/bcf3802d-3d64-40e7-ab89-173e42c339cb

You can also create videos directly by calling the npm package

npm install @vnve/coreimport { Creator, Scene, Img, Text, Sound, PREST_ANIMATION } from "@vnve/core";

// Init creator

const creator = new Creator();

// Scene, the video is made up of a combination of scenes

const scene = new Scene({ duration: 3000 })

// Create some elements

const img = new Img({ source: "img url" })

const text = new Text("V N V E", {

fill: "#ffffff",

fontSize: 200

})

const sound = new Sound({ source: "sound url" })

// Add elements to the scene

scene.addChild(img)

scene.addChild(text)

// Add sound

scene.addSound(sound)

// You can add some animation to the element

text.addAnimation(PREST_ANIMATION.FadeIn)

// Provide the scene to the creator and start generating the video

creator.add(scene)

creator.start().then(videoBlob => {

URL.createObjectURL(videoBlob) // Wait a few moments and you'll get an mp4 file

})By using pre-packaged templates, we can achieve the desired video effects more efficiently.

It is necessary to install an additional package @vnve/template

npm install @vnve/templateimport { Creator } from "@vnve/core";

import { TitleScene, DialogueScene } from "@vnve/template";

const creator = new Creator();

// Create a title scene

const titleScene = new TitleScene({

title: "V N V E",

subtitle: "Make video programmatically",

backgroundImgSource: "img url",

soundSources: [{ source: "sound url" }],

duration: 3000,

})

// Create a dialog scene

const dialogueScene = new DialogueScene({

lines: [

{

name: "Character A",

content: "Charater A says..."

},

{

name: "Character B",

content: "Charater B says..."

}

],

backgroundImgSource: "img url",

soundSources: [{ source: "sound url" }],

});

// Add scenes

creator.add(titleScene)

creator.add(dialogueScene)

// Start creating videos

creator.start().then(videoBlob => {

URL.createObjectURL(videoBlob) // Wait a few moments and you'll get an mp4 file

})| package name | brief | docs |

|---|---|---|

| @vnve/editor | Web UI page for the online editor | 🚧 |

| @vnve/core | Core module, using PixiJS + Webcodes to achieve scene dynamization and export Mp4 video | 🚧 |

| @vnve/template | Template package, consisting of scenarios and elements for scenario reuse | 🚧 |

MIT

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for vnve

Similar Open Source Tools

vnve

VNVE is a Visual Novel Video Editor that allows users to create visual novel videos in their browser with AI-powered rapid creation. It offers a low-cost production solution for converting textual content into videos, creating interactive videos for gaming experiences, and making video teasers for novels and short video dramas. The tool is a pure front-end Typescript implementation powered by PixiJS + WebCodecs, and users can also create videos programmatically using the npm package. VNVE is tailored specifically for visual novels, focusing on text content and simplifying the video creation process for users.

videokit

VideoKit is a full-featured user-generated content solution for Unity Engine, enabling video recording, camera streaming, microphone streaming, social sharing, and conversational interfaces. It is cross-platform, with C# source code available for inspection. Users can share media, save to camera roll, pick from camera roll, stream camera preview, record videos, remove background, caption audio, and convert text commands. VideoKit requires Unity 2022.3+ and supports Android, iOS, macOS, Windows, and WebGL platforms.

catai

CatAI is a tool that allows users to run GGUF models on their computer with a chat UI. It serves as a local AI assistant inspired by Node-Llama-Cpp and Llama.cpp. The tool provides features such as auto-detecting programming language, showing original messages by clicking on user icons, real-time text streaming, and fast model downloads. Users can interact with the tool through a CLI that supports commands for installing, listing, setting, serving, updating, and removing models. CatAI is cross-platform and supports Windows, Linux, and Mac. It utilizes node-llama-cpp and offers a simple API for asking model questions. Additionally, developers can integrate the tool with node-llama-cpp@beta for model management and chatting. The configuration can be edited via the web UI, and contributions to the project are welcome. The tool is licensed under Llama.cpp's license.

omnihuman

OmniHuman is an AI model designed to understand humanoids and text. It provides functionalities to process images and videos, generating text descriptions for human actions depicted in the visual content. The tool offers support for various tasks related to human pose recognition and action understanding. Users can easily integrate OmniHuman into their projects to enhance the capabilities of their applications in recognizing and interpreting human actions in images and videos.

llm

llm.rb is a zero-dependency Ruby toolkit for Large Language Models that includes OpenAI, Gemini, Anthropic, xAI (Grok), DeepSeek, Ollama, and LlamaCpp. The toolkit provides full support for chat, streaming, tool calling, audio, images, files, and structured outputs (JSON Schema). It offers a single unified interface for multiple providers, zero dependencies outside Ruby's standard library, smart API design, and optional per-provider process-wide connection pool. Features include chat, agents, media support (text-to-speech, transcription, translation, image generation, editing), embeddings, model management, and more.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

UnrealOpenAIPlugin

UnrealOpenAIPlugin is a comprehensive Unreal Engine wrapper for the OpenAI API, supporting various endpoints such as Models, Completions, Chat, Images, Vision, Embeddings, Speech, Audio, Files, Moderations, Fine-tuning, and Functions. It provides support for both C++ and Blueprints, allowing users to interact with OpenAI services seamlessly within Unreal Engine projects. The plugin also includes tutorials, updates, installation instructions, authentication steps, examples of usage, blueprint nodes overview, C++ examples, plugin structure details, documentation references, tests, packaging guidelines, and limitations. Users can leverage this plugin to integrate powerful AI capabilities into their Unreal Engine projects effortlessly.

MetaAgent

MetaAgent is a multi-agent collaboration platform designed to build, manage, and deploy multi-modal AI agents without the need for coding. Users can easily create AI agents by editing a yml file or using the provided UI. The platform supports features such as building LLM-based AI agents, multi-modal interactions with users using texts, audios, images, and videos, creating a company of agents for complex tasks like drawing comics, vector database and knowledge embeddings, and upcoming features like UI for creating and using AI agents, fine-tuning, and RLHF. The tool simplifies the process of creating and deploying AI agents for various tasks.

educhain

Educhain is a powerful Python package that leverages Generative AI to create engaging and personalized educational content. It enables users to generate multiple-choice questions, create lesson plans, and support various LLM models. Users can export questions to JSON, PDF, and CSV formats, customize prompt templates, and generate questions from text, PDF, URL files, youtube videos, and images. Educhain outperforms traditional methods in content generation speed and quality. It offers advanced configuration options and has a roadmap for future enhancements, including integration with popular Learning Management Systems and a mobile app for content generation on-the-go.

langchaingo

LangChain Go is a Go language implementation of LangChain, a framework for building applications with LLMs through composability. It provides a simple and easy-to-use API for interacting with LLMs, making it easy to add language-based features to your applications.

dom-to-semantic-markdown

DOM to Semantic Markdown is a tool that converts HTML DOM to Semantic Markdown for use in Large Language Models (LLMs). It maximizes semantic information, token efficiency, and preserves metadata to enhance LLMs' processing capabilities. The tool captures rich web content structure, including semantic tags, image metadata, table structures, and link destinations. It offers customizable conversion options and supports both browser and Node.js environments.

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

LTEngine

LTEngine is a free and open-source local AI machine translation API written in Rust. It is self-hosted and compatible with LibreTranslate. LTEngine utilizes large language models (LLMs) via llama.cpp, offering high-quality translations that rival or surpass DeepL for certain languages. It supports various accelerators like CUDA, Metal, and Vulkan, with the largest model 'gemma3-27b' fitting on a single consumer RTX 3090. LTEngine is actively developed, with a roadmap outlining future enhancements and features.

hydraai

Generate React components on-the-fly at runtime using AI. Register your components, and let Hydra choose when to show them in your App. Hydra development is still early, and patterns for different types of components and apps are still being developed. Join the discord to chat with the developers. Expects to be used in a NextJS project. Components that have function props do not work.

HuixiangDou

HuixiangDou is a **group chat** assistant based on LLM (Large Language Model). Advantages: 1. Design a two-stage pipeline of rejection and response to cope with group chat scenario, answer user questions without message flooding, see arxiv2401.08772 2. Low cost, requiring only 1.5GB memory and no need for training 3. Offers a complete suite of Web, Android, and pipeline source code, which is industrial-grade and commercially viable Check out the scenes in which HuixiangDou are running and join WeChat Group to try AI assistant inside. If this helps you, please give it a star ⭐

TheoremExplainAgent

TheoremExplainAgent is an AI system that generates long-form Manim videos to visually explain theorems, proving its deep understanding while uncovering reasoning flaws that text alone often hides. The codebase for the paper 'TheoremExplainAgent: Towards Multimodal Explanations for LLM Theorem Understanding' is available in this repository. It provides a tool for creating multimodal explanations for theorem understanding using AI technology.

For similar tasks

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

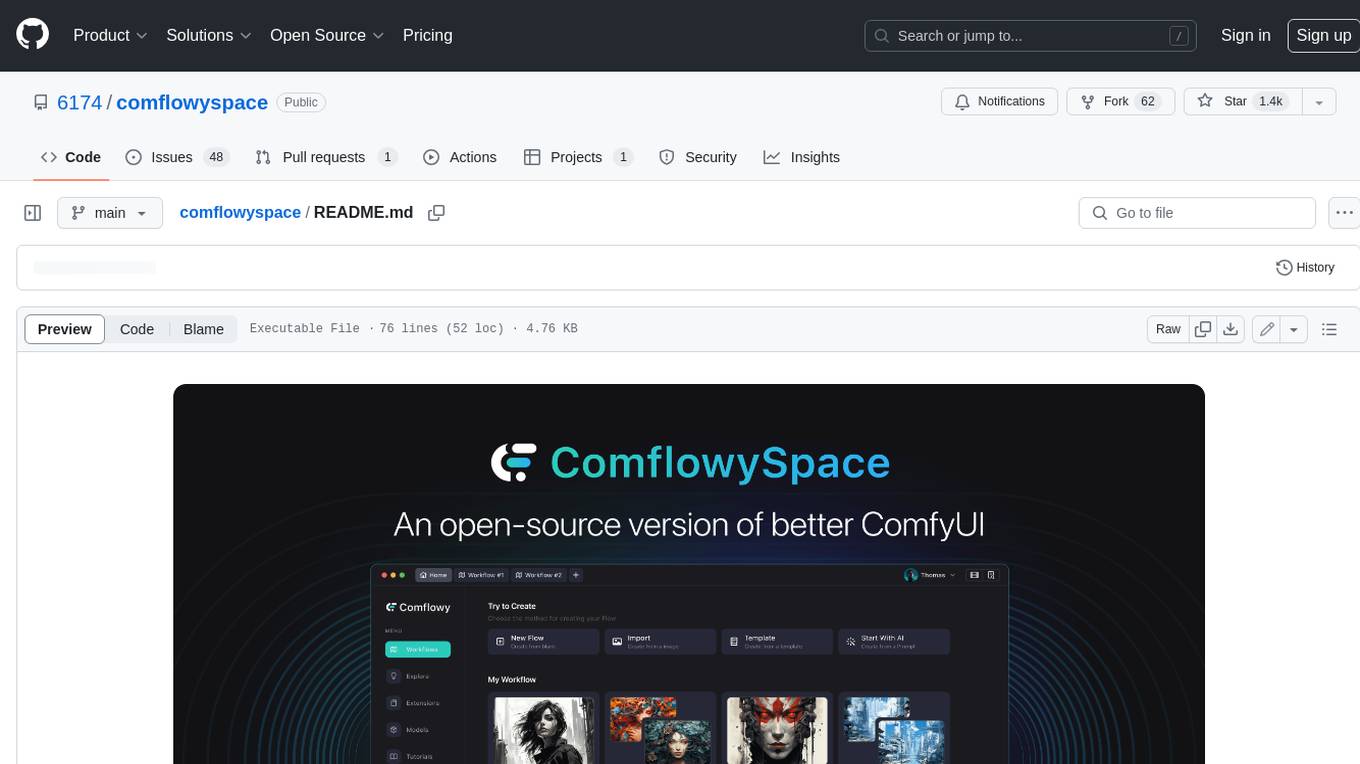

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

Rewind-AI-Main

Rewind AI is a free and open-source AI-powered video editing tool that allows users to easily create and edit videos. It features a user-friendly interface, a wide range of editing tools, and support for a variety of video formats. Rewind AI is perfect for beginners and experienced video editors alike.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

Dough

Dough is a tool for crafting videos with AI, allowing users to guide video generations with precision using images and example videos. Users can create guidance frames, assemble shots, and animate them by defining parameters and selecting guidance videos. The tool aims to help users make beautiful and unique video creations, providing control over the generation process. Setup instructions are available for Linux and Windows platforms, with detailed steps for installation and running the app.

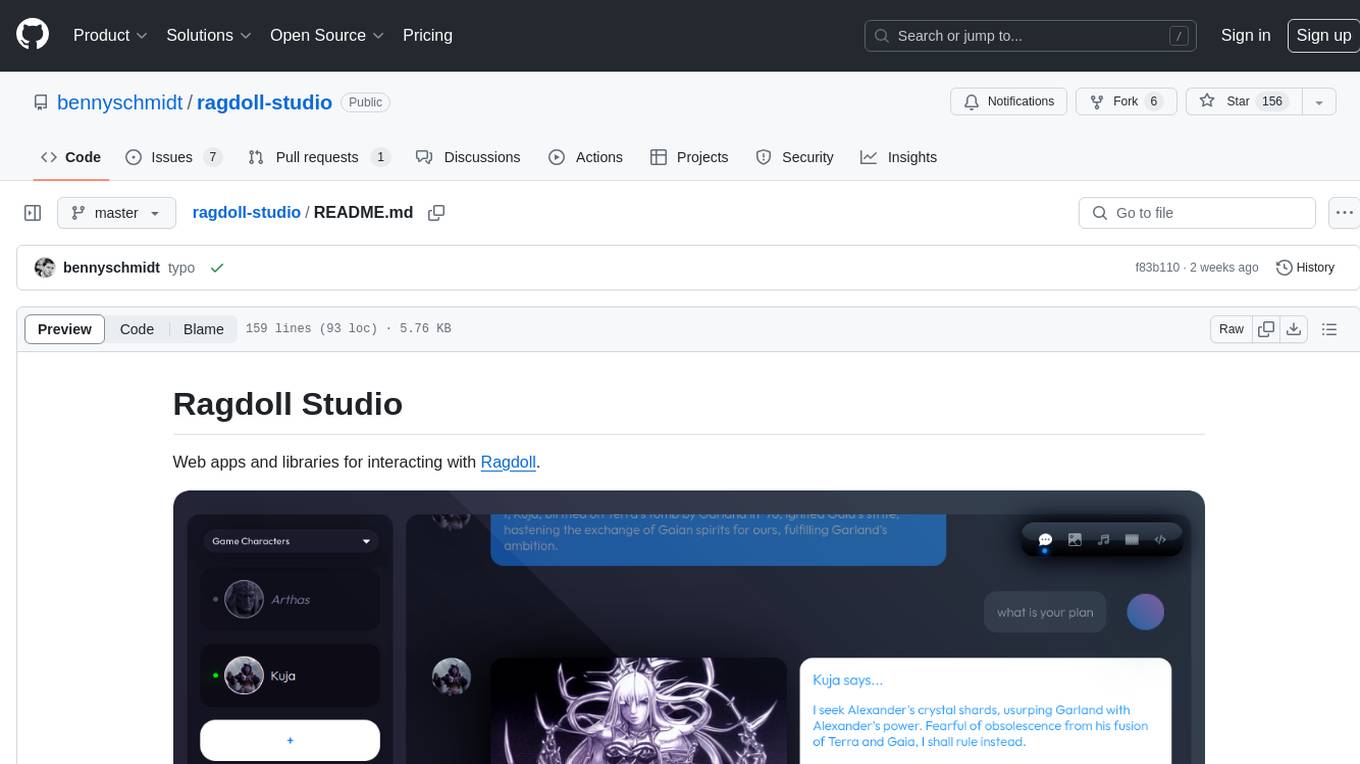

ragdoll-studio

Ragdoll Studio is a platform offering web apps and libraries for interacting with Ragdoll, enabling users to go beyond fine-tuning and create flawless creative deliverables, rich multimedia, and engaging experiences. It provides various modes such as Story Mode for creating and chatting with characters, Vector Mode for producing vector art, Raster Mode for producing raster art, Video Mode for producing videos, Audio Mode for producing audio, and 3D Mode for producing 3D objects. Users can export their content in various formats and share their creations on the community site. The platform consists of a Ragdoll API and a front-end React application for seamless usage.

Whisper-TikTok

Discover Whisper-TikTok, an innovative AI-powered tool that leverages the prowess of Edge TTS, OpenAI-Whisper, and FFMPEG to craft captivating TikTok videos. Whisper-TikTok effortlessly generates accurate transcriptions from audio files and integrates Microsoft Edge Cloud Text-to-Speech API for vibrant voiceovers. The program orchestrates the synthesis of videos using a structured JSON dataset, generating mesmerizing TikTok content in minutes.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.