aioboto3

Wrapper to use boto3 resources with the aiobotocore async backend

Stars: 744

aioboto3 is an async AWS SDK for Python that lets you use near enough all of the boto3 client commands in an async manner just by prefixing the command with `await`. It combines the work of boto3 and aiobotocore, so the higher-level APIs provided by boto3 can be used asynchronously. The package supports AWS services such as DynamoDB, S3, Kinesis, SSM Parameter Store, and Athena. It also offers client-side encryption using KMS-managed master keys and an asyncified `generate_presigned_url`. The library closely mimics the usage of boto3 and was mainly developed for use in async microservices.

README:

.. image:: https://img.shields.io/pypi/v/aioboto3.svg
   :target: https://pypi.python.org/pypi/aioboto3

.. image:: https://github.com/terrycain/aioboto3/actions/workflows/CI.yml/badge.svg
   :target: https://github.com/terrycain/aioboto3/actions

.. image:: https://readthedocs.org/projects/aioboto3/badge/?version=latest
   :target: https://aioboto3.readthedocs.io
   :alt: Documentation Status

.. image:: https://pyup.io/repos/github/terrycain/aioboto3/shield.svg
   :target: https://pyup.io/repos/github/terrycain/aioboto3/
   :alt: Updates

Breaking changes for v11: the S3Transfer config passed into ``upload_file``, ``download_file`` etc. has been updated so that it matches what boto3 uses.

Breaking changes for v9: the ``aioboto3.resource`` and ``aioboto3.client`` methods no longer exist; create a session and then call ``session.client``, ``session.resource`` etc. This was done for various reasons, but mainly because it prevents the default session from living longer than it should, which breaks situations where event loops are replaced.

The ``.client`` and ``.resource`` functions must now be used as async context managers.

As a side effect of the work put into aiobotocore 1.0.1 to fix various issues, such as bucket region redirection and support for web-identity assume-role credentials, the client must now be instantiated using a context manager, which by extension applies to the resource creator. You used to get away with calling ``res = aioboto3.resource('dynamodb')``, but that no longer works. If you really want to do that, you can call ``res = await session.resource('dynamodb').__aenter__()`` (``aioboto3.resource`` itself was removed in v9), but you'll need to remember to call ``__aexit__`` yourself.
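If you need a client or resource that outlives a single ``async with`` block, one option (a sketch, not something the aioboto3 docs prescribe) is the standard library's ``contextlib.AsyncExitStack``, which records the matching ``__aexit__`` for you. ``FakeResource`` and ``App`` below are hypothetical names; ``FakeResource`` stands in for ``session.resource('dynamodb')`` so the snippet runs without AWS credentials. With aioboto3 you would pass the real context manager to ``enter_async_context`` instead.

```python
import asyncio
from contextlib import AsyncExitStack


class FakeResource:
    """Hypothetical stand-in for the async context manager returned by
    session.resource('dynamodb'); it only records enter/exit."""

    def __init__(self):
        self.closed = False

    async def __aenter__(self):
        return self

    async def __aexit__(self, exc_type, exc, tb):
        # A real aioboto3 resource would close its underlying HTTP session here.
        self.closed = True
        return False


class App:
    """Keeps a resource open across many calls and closes it on shutdown."""

    def __init__(self):
        self._stack = AsyncExitStack()
        self.resource = None

    async def start(self):
        # Like calling __aenter__ by hand, but the stack remembers the
        # matching __aexit__ so it cannot be forgotten.
        self.resource = await self._stack.enter_async_context(FakeResource())

    async def stop(self):
        # Runs every registered __aexit__ in reverse order.
        await self._stack.aclose()


async def main():
    app = App()
    await app.start()
    try:
        print("resource open:", not app.resource.closed)
    finally:
        await app.stop()
    return app.resource


res = asyncio.run(main())
print("resource closed:", res.closed)
```

Keeping the stack on a long-lived object means the cleanup lives in one ``stop()`` method rather than in every code path that might exit early.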

There will most likely be some parts that don't work now which I've missed; just open an issue and we'll get them resolved quickly.

Creating service resources must also be async now, e.g.

.. code-block:: python

   import asyncio

   import aioboto3


   async def main():
       session = aioboto3.Session()
       async with session.resource("s3") as s3:
           bucket = await s3.Bucket('mybucket')  # <----------------
           async for s3_object in bucket.objects.all():
               print(s3_object)


   asyncio.run(main())

Updating to aiobotocore 1.0.1 also brings support for running inside EKS, as well as an asyncified ``generate_presigned_url``.

This package is mostly just a wrapper combining the great work of boto3_ and aiobotocore_.

aiobotocore allows you to use near enough all of the boto3 client commands in an async manner just by prefixing the command with ``await``.

With aioboto3 you can now use the higher-level APIs provided by boto3 in an asynchronous manner. I mainly developed this because I wanted to use the boto3 DynamoDB Table object in some async microservices.

While all resources in boto3 should work, I haven't tested them all. If what you're after is not in the table below, try it out; if it works, drop me an issue with a simple test case and I'll add it to the table.

+---------------------------+--------------------+
| Services                  | Status             |
+===========================+====================+
| DynamoDB Service Resource | Tested and working |
+---------------------------+--------------------+
| DynamoDB Table            | Tested and working |
+---------------------------+--------------------+
| S3                        | Working            |
+---------------------------+--------------------+
| Kinesis                   | Working            |
+---------------------------+--------------------+
| SSM Parameter Store       | Working            |
+---------------------------+--------------------+
| Athena                    | Working            |
+---------------------------+--------------------+

A simple example of using aioboto3 to put items into a DynamoDB table:

.. code-block:: python

   import asyncio

   import aioboto3
   from boto3.dynamodb.conditions import Key


   async def main():
       session = aioboto3.Session()
       async with session.resource('dynamodb', region_name='eu-central-1') as dynamo_resource:
           table = await dynamo_resource.Table('test_table')

           await table.put_item(
               Item={'pk': 'test1', 'col1': 'some_data'}
           )

           result = await table.query(
               KeyConditionExpression=Key('pk').eq('test1')
           )
           print(result['Items'])

           # Example batch write
           more_items = [{'pk': 't2', 'col1': 'c1'},
                         {'pk': 't3', 'col1': 'c3'}]
           async with table.batch_writer() as batch:
               for item_ in more_items:
                   await batch.put_item(Item=item_)


   asyncio.run(main())

   # Outputs:
   # [{'col1': 'some_data', 'pk': 'test1'}]
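The ``batch_writer`` used above follows boto3's ``BatchWriter``: puts are buffered and sent in ``BatchWriteItem`` calls of at most 25 items, and whatever remains is flushed when the ``async with`` block exits. The sketch below illustrates only that buffering idea with plain asyncio. ``BufferedWriter`` and its ``send_batch`` callback are hypothetical names, and the sketch omits the re-sending of ``UnprocessedItems`` that the real writer performs.

```python
import asyncio


class BufferedWriter:
    """Hypothetical sketch of an async batch writer: buffers items and
    hands them to send_batch in chunks of at most flush_amount."""

    def __init__(self, send_batch, flush_amount=25):
        self._send_batch = send_batch      # async callable taking a list of items
        self._flush_amount = flush_amount  # BatchWriteItem accepts at most 25 requests
        self._buffer = []

    async def put_item(self, Item):
        self._buffer.append(Item)
        if len(self._buffer) >= self._flush_amount:
            await self._flush()

    async def _flush(self):
        batch = self._buffer[:self._flush_amount]
        self._buffer = self._buffer[self._flush_amount:]
        await self._send_batch(batch)

    async def __aenter__(self):
        return self

    async def __aexit__(self, exc_type, exc, tb):
        # Send whatever is left over when the block exits.
        while self._buffer:
            await self._flush()
        return False


async def main():
    sent = []

    async def fake_send(batch):
        # Stand-in for a BatchWriteItem call; just records batch sizes.
        sent.append(len(batch))

    async with BufferedWriter(fake_send) as batch:
        for i in range(60):
            await batch.put_item(Item={'pk': f't{i}', 'col1': 'data'})
    return sent


batch_sizes = asyncio.run(main())
print(batch_sizes)  # [25, 25, 10]
```

Sixty puts produce two full batches while the loop runs and one partial batch of 10 on exit, which is why the context-manager form matters: without ``__aexit__`` the tail of the buffer would never be sent.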

As this library literally wraps boto3, it's inevitable that some things won't magically be async.

Fixed:

- ``s3_client.download_file*``: this is performed by the s3transfer module. Patched with ``get_object``.
- ``s3_client.upload_file*``: this is performed by the s3transfer module. Patched with a custom multipart upload.
- ``s3_client.copy``: this is performed by the s3transfer module. Patched to use ``get_object`` -> ``upload_fileobj``.
- ``dynamodb_resource.Table.batch_writer``: this now returns an async context manager which performs the same function.
- Resource waiters: you can now await waiters which are part of resource objects, not just client waiters, e.g. ``await dynamodbtable.wait_until_exists()``.
- Resource object properties: these are normally autoloaded; now they are all coroutines, and the metadata they come from is loaded on first await and cached thereafter.
- S3 ``Bucket.objects``: this object now works and has been asyncified. Examples here: https://aioboto3.readthedocs.io/en/latest/usage.html#s3-resource-objects
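The lazy-loading behaviour of resource properties described above (attributes are awaited, and the backing metadata is fetched once and then cached) can be sketched with plain asyncio. ``LazyObject`` and its simulated ``_load`` are hypothetical; in aioboto3 the load would be a real API call (for an S3 object, something like ``HeadObject``), and usage would look like ``size = await obj.content_length``.

```python
import asyncio


class LazyObject:
    """Hypothetical resource whose attributes are awaitables backed by
    metadata that is loaded on first access and cached afterwards."""

    def __init__(self, key):
        self.key = key
        self._meta = None
        self.load_calls = 0  # counts simulated API calls

    async def _load(self):
        # Stand-in for a metadata request; returns fixed fake values.
        self.load_calls += 1
        await asyncio.sleep(0)
        self._meta = {'ContentLength': 1024, 'ContentType': 'text/plain'}

    async def _get(self, name):
        if self._meta is None:  # first await triggers the load
            await self._load()
        return self._meta[name]

    @property
    def content_length(self):
        # Returns a coroutine, so callers write: await obj.content_length
        return self._get('ContentLength')

    @property
    def content_type(self):
        return self._get('ContentType')


async def main():
    obj = LazyObject('my-key.txt')
    size = await obj.content_length  # loads metadata
    ctype = await obj.content_type   # served from the cache
    return obj, size, ctype


obj, size, ctype = asyncio.run(main())
print(size, ctype, obj.load_calls)  # 1024 text/plain 1
```

This sketch does not guard against two tasks triggering the first load concurrently; an ``asyncio.Lock`` around ``_load`` would be one way to add that.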

Boto3 doesn't support AWS client-side encryption, so until it does I've added basic support for it. Docs are here: CSE_

CSE requires the Python ``cryptography`` library, so if you ``pip install aioboto3[s3cse]`` that will also install ``cryptography``.

This library currently supports client-side encryption using KMS-managed master keys, performing envelope encryption with either AES/CBC/PKCS5Padding or, preferably, AES/GCM/NoPadding. The files generated are compatible with the Java Encryption SDK, so I assume (untested) they are also compatible with the Ruby, PHP, Go and C++ libraries.

Non-KMS-managed keys are not yet supported, but if you need them, raise an issue and I'll look into it.

Docs are here - https://aioboto3.readthedocs.io/en/latest/

Examples here - https://aioboto3.readthedocs.io/en/latest/usage.html

Features:

- Closely mimics the usage of boto3.

To do:

- More examples
- Set up docs
- Look into monkey-patching the AWS X-Ray SDK to be more async if it needs to be.

This package was created with Cookiecutter_ and the audreyr/cookiecutter-pypackage_ project template.

It also makes use of the aiobotocore_ and boto3_ libraries. All the credit goes to them, this is mainly a wrapper with some examples.

.. _aiobotocore: https://github.com/aio-libs/aiobotocore

.. _boto3: https://github.com/boto/boto3

.. _Cookiecutter: https://github.com/audreyr/cookiecutter

.. _audreyr/cookiecutter-pypackage: https://github.com/audreyr/cookiecutter-pypackage

.. _CSE: https://aioboto3.readthedocs.io/en/latest/cse.html


Alternative AI tools for aioboto3

Similar Open Source Tools


aiomisc

aiomisc is a Python library that provides a collection of utility functions and classes for working with asynchronous I/O in a more intuitive and efficient way. It offers features like worker pools, connection pools, circuit breaker pattern, and retry mechanisms to make asyncio code more robust and easier to maintain. The library simplifies the architecture of software using asynchronous I/O, making it easier for developers to write reliable and scalable asynchronous code.

aiosmtpd

aiosmtpd is an asyncio-based SMTP server implementation that provides a modern and efficient way to handle SMTP and LMTP protocols in Python 3. It replaces the outdated asyncore and asynchat modules with asyncio for improved asynchronous I/O operations. The project aims to offer a more user-friendly, extendable, and maintainable solution for handling email protocols in Python applications. It is actively maintained by experienced Python developers and offers full documentation for easy integration and usage.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

APIMyLlama

APIMyLlama is a server application that provides an interface to interact with the Ollama API, a powerful AI tool to run LLMs. It allows users to easily distribute API keys to create amazing things. The tool offers commands to generate, list, remove, add, change, activate, deactivate, and manage API keys, as well as functionalities to work with webhooks, set rate limits, and get detailed information about API keys. Users can install APIMyLlama packages with NPM, PIP, Jitpack Repo+Gradle or Maven, or from the Crates Repository. The tool supports Node.JS, Python, Java, and Rust for generating responses from the API. Additionally, it provides built-in health checking commands for monitoring API health status.

json_repair

This simple package can be used to fix an invalid JSON string. To see all the cases this package handles, check out the unit tests. Inspired by https://github.com/josdejong/jsonrepair

Motivation: some LLMs are a bit iffy when it comes to returning well-formed JSON data; sometimes they skip a parenthesis and sometimes they add some words in it, because that's what an LLM does. Luckily, the mistakes LLMs make are simple enough to be fixed without destroying the content. I searched for a lightweight Python package that could reliably fix this problem but couldn't find one, so I wrote one.

How to use: `from json_repair import repair_json`, then `good_json_string = repair_json(bad_json_string)`. If the string was super broken, this will return an empty string. You can use this library to completely replace `json.loads()` with `decoded_object = json_repair.loads(json_string)`, or just call `decoded_object = json_repair.repair_json(json_string, return_objects=True)`.

Read JSON from a file or file descriptor: JSON repair also provides a drop-in replacement for `json.load()`, `decoded_object = json_repair.load(file_descriptor)`, and another method to read from a file, `decoded_object = json_repair.from_file(json_file)`. Keep in mind that the library will not catch any IO-related exceptions (such as `OSError` or `IOError`); those need to be handled by you, e.g. with `try`/`except` around `open()` or `from_file()`.

Performance considerations: if you find this library too slow because it is using `json.loads()`, you can skip that by passing `skip_json_loads=True` to `repair_json`, like `good_json_string = repair_json(bad_json_string, skip_json_loads=True)`. I chose not to use any fast JSON library to avoid having any external dependency, so that anybody can use it regardless of their stack. Some rules of thumb:

- Setting `return_objects=True` will always be faster because the parser already returns an object and doesn't have to serialize that object to JSON.
- `skip_json_loads` is faster only if you are 100% sure that the string is not valid JSON.
- If you are having issues with escaping, pass the string as a **raw** string, like `r"string with escaping\""`.

Adding to requirements: please pin this library only on the major version! We use TDD and strict semantic versioning; there will be frequent updates and no breaking changes in minor and patch versions. To pin only the major version in your `requirements.txt`, specify the package name followed by the major version and a wildcard for minor and patch versions, for example `json_repair==0.*`. In this example, any version that starts with `0.` will be acceptable, allowing for updates on minor and patch versions.

How it works: this module will parse the JSON file following the BNF definition:

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

generative-ai

The 'Generative AI' repository provides a C# library for interacting with Google's Generative AI models, specifically the Gemini models. It allows users to access and integrate the Gemini API into .NET applications, supporting functionalities such as listing available models, generating content, creating tuned models, working with large files, starting chat sessions, and more. The repository also includes helper classes and enums for Gemini API aspects. Authentication methods include API key, OAuth, and various authentication modes for Google AI and Vertex AI. The package offers features for both Google AI Studio and Google Cloud Vertex AI, with detailed instructions on installation, usage, and troubleshooting.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly on the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its Typescript support and pre-built npm package, Wllama is easy to integrate into your React Typescript projects.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

playword

PlayWord is a tool designed to supercharge web test automation experience with AI. It provides core features such as enabling browser operations and validations using natural language inputs, as well as monitoring interface to record and dry-run test steps. PlayWord supports multiple AI services including Anthropic, Google, and OpenAI, allowing users to select the appropriate provider based on their requirements. The tool also offers features like assertion handling, frame handling, custom variables, test recordings, and an Observer module to track user interactions on web pages. With PlayWord, users can interact with web pages using natural language commands, reducing the need to worry about element locators and providing AI-powered adaptation to UI changes.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.


For similar jobs


aiobotocore

aiobotocore is an async client for Amazon services using botocore and aiohttp/asyncio. It provides a mostly full-featured asynchronous version of botocore, allowing users to interact with various AWS services asynchronously. The library supports operations such as uploading objects to S3, getting object properties, listing objects, and deleting objects. It also offers context manager examples for managing resources efficiently. aiobotocore supports multiple AWS services like S3, DynamoDB, SNS, SQS, CloudFormation, and Kinesis, with basic methods tested for each service. Users can run tests using moto for mocked tests or against personal Amazon keys. Additionally, the tool enables type checking and code completion for better development experience.

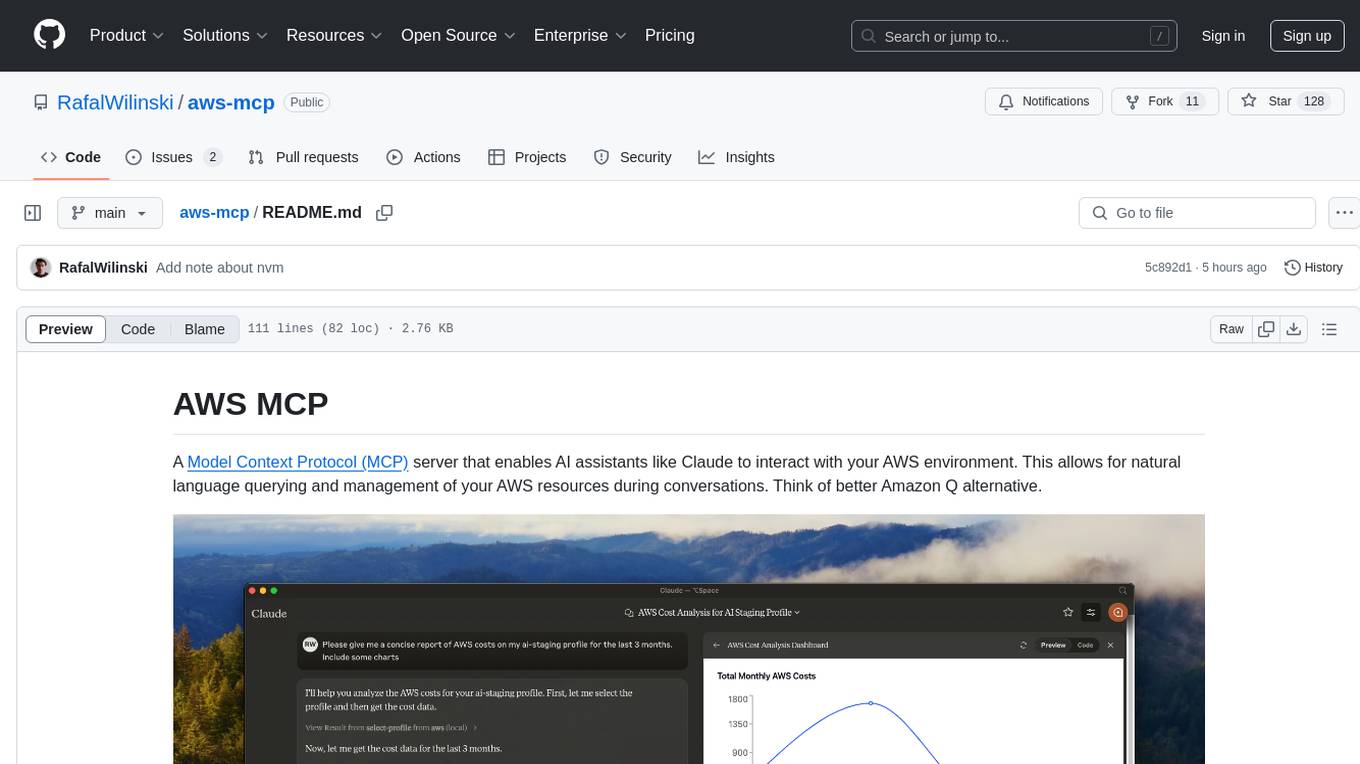

aws-mcp

AWS MCP is a Model Context Protocol (MCP) server that facilitates interactions between AI assistants and AWS environments. It allows for natural language querying and management of AWS resources during conversations. The server supports multiple AWS profiles, SSO authentication, multi-region operations, and secure credential handling. Users can locally execute commands with their AWS credentials, enhancing the conversational experience with AWS resources.

serverless-openclaw

Serverless OpenClaw is an open-source project that runs OpenClaw on demand on AWS serverless infrastructure, with a web UI and Telegram as interfaces. It minimizes cost and offers predictive pre-warming, multi-LLM provider support, task automation, and one-command deployment. The project aims for cost optimization, easy management, scalability, and security through various features and technologies. It follows a specific architecture and tech stack, with a roadmap for future development phases and estimated costs. The project is organized as an npm workspaces monorepo with TypeScript project references, and detailed documentation is available for contributors and users.

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

* Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.
* Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.
* Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.
* Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.
* Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.
* GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, resolving issues such as the challenging deployment of AI applications and open-source models. Developers can write applications in familiar programming languages like **Python and TypeScript**, **directly defining and using the cloud resources the application needs within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the app's infrastructure resource needs through **static program analysis** and then creates these resources on the specified cloud platform, **simplifying resource creation and application deployment**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.