augustus

LLM security testing framework for detecting prompt injection, jailbreaks, and adversarial attacks — 190+ probes, 28 providers, single Go binary

Stars: 120

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

README:

Test large language models against 210+ adversarial attacks covering prompt injection, jailbreaks, encoding exploits, and data extraction.

Augustus is a Go-based LLM vulnerability scanner for security professionals. It tests large language models against a wide range of adversarial attacks, integrates with 28 LLM providers, and produces actionable vulnerability reports.

Unlike research-oriented tools, Augustus is built for production security testing — concurrent scanning, rate limiting, retry logic, and timeout handling come out of the box.

- Why Augustus

- Features

- Quick Start

- Supported Providers

- Usage

- How It Works

- Architecture

- Configuration

- FAQ

- Troubleshooting

- Contributing

- Security

- Support

- License

| Feature | Augustus | garak | promptfoo |

|---|---|---|---|

| Language | Go | Python | TypeScript |

| Single binary | Yes | No | No |

| Concurrent scanning | Goroutine pools | Multiprocessing pools | Yes |

| LLM providers | 28 | 35+ | 80+ |

| Probe types | 210+ | 160+ | 119 plugins + 36 strategies |

| Enterprise focus | Yes | Research | Yes |

| Feature | Description |

|---|---|

| 210+ Vulnerability Probes | 47 attack categories: jailbreaks, prompt injection, adversarial examples, data extraction, safety benchmarks, agent attacks, and more |

| 28 LLM Providers | OpenAI, Anthropic, Azure, Bedrock, Vertex AI, Ollama, and 22 more with 43 generator variants |

| 90+ Detectors | Pattern matching, LLM-as-a-judge, HarmJudge (arXiv:2511.15304), Perspective API, unsafe content detection |

| 7 Buff Transformations | Encoding, paraphrase, poetry (5 formats, 3 strategies), low-resource language translation, case transforms |

| Flexible Output | Table, JSON, JSONL, and HTML report formats |

| Production Ready | Concurrent scanning, rate limiting, retry logic, timeout handling |

| Single Binary | Go-based tool compiles to one portable executable |

| Extensible | Plugin-style registration via Go init() functions |

- Jailbreak attacks: DAN, DAN 11.0, AIM, AntiGPT, Grandma, ArtPrompts

- Prompt injection: Encoding (Base64, ROT13, Morse), Tag smuggling, FlipAttack, Prefix/Suffix injection

- Adversarial examples: GCG, PAIR, AutoDAN, TAP (Tree of Attack Prompts), TreeSearch, MindMap, DRA

- Data extraction: API key leakage, Package hallucination, PII extraction, LeakReplay

- Context manipulation: RAG poisoning, Context overflow, Multimodal attacks, Continuation, Divergence

- Format exploits: Markdown injection, YAML/JSON parsing attacks, ANSI escape, Web injection (XSS)

- Evasion techniques: Obfuscation, Character substitution, Translation-based attacks, Phrasing, ObscurePrompt

- Safety benchmarks: DoNotAnswer, RealToxicityPrompts, Snowball, LMRC

- Agent attacks: Multi-agent manipulation, Browsing exploits

- Security testing: Guardrail bypass, AV/spam scanning, Exploitation (SQLi, code exec), Steganography, BadChars

Warning: The

lmrcprobe uses profane and offensive language as part of its jailbreak testing. Use only in authorized testing environments.

Requires Go 1.25.3 or later.

go install github.com/praetorian-inc/augustus/cmd/augustus@latestOr build from source:

git clone https://github.com/praetorian-inc/augustus.git

cd augustus

make buildexport OPENAI_API_KEY="your-api-key"

augustus scan openai.OpenAI \

--probe dan.Dan \

--detector dan.DanDetector \

--verbose+------------------+----------+---------+-------+--------+

| PROBE | DETECTOR | PASSED | SCORE | STATUS |

+------------------+----------+---------+-------+--------+

| dan.Dan | dan.DAN | false | 0.85 | VULN |

| encoding.Base64 | encoding | true | 0.10 | SAFE |

| smuggling.Tag | smuggling| true | 0.05 | SAFE |

+------------------+----------+---------+-------+--------+

# List all registered probes, detectors, generators, harnesses, and buffs

augustus listAugustus includes 28 LLM provider categories with 43 generator variants:

| Provider | Generator Name(s) | Notes |

|---|---|---|

| OpenAI |

openai.OpenAI, openai.OpenAIReasoning

|

GPT-3.5, GPT-4, GPT-4 Turbo, o1/o3 reasoning models |

| Anthropic | anthropic.Anthropic |

Claude 3/3.5/4 (Opus, Sonnet, Haiku) |

| Azure OpenAI | azure.AzureOpenAI |

Azure-hosted OpenAI models |

| AWS Bedrock | bedrock.Bedrock |

Claude, Llama, Titan models |

| Google Vertex AI | vertex.Vertex |

PaLM, Gemini models |

| Cohere | cohere.Cohere |

Command, Command R models |

| Replicate | replicate.Replicate |

Cloud-hosted open models |

| HuggingFace |

huggingface.InferenceAPI, huggingface.InferenceEndpoint, huggingface.Pipeline, huggingface.LLaVA

|

HF Inference API, endpoints, pipelines, multimodal |

| Together AI | together.Together |

Fast inference for OSS models |

| Anyscale | anyscale.Anyscale |

Llama and Mistral hosting |

| Groq | groq.Groq |

Ultra-fast LPU inference |

| Mistral | mistral.Mistral |

Mistral API models |

| Fireworks | fireworks.Fireworks |

Production inference platform |

| DeepInfra | deepinfra.DeepInfra |

Serverless GPU inference |

| NVIDIA NIM |

nim.NIM, nim.NVOpenAICompletion, nim.NVMultimodal, nim.Vision

|

NVIDIA AI endpoints, multimodal |

| NVIDIA NeMo | nemo.NeMo |

NVIDIA NeMo framework |

| NVIDIA NVCF |

nvcf.NvcfChat, nvcf.NvcfCompletion

|

NVIDIA Cloud Functions |

| NeMo Guardrails | guardrails.NeMoGuardrails |

NVIDIA NeMo Guardrails |

| IBM watsonx | watsonx.WatsonX |

IBM watsonx.ai platform |

| LangChain | langchain.LangChain |

LangChain LLM wrapper |

| LangChain Serve | langchainserve.LangChainServe |

LangChain Serve endpoints |

| Rasa | rasa.RasaRest |

Rasa conversational AI |

| GGML | ggml.Ggml |

GGML local model inference |

| Function |

function.Single, function.Multiple

|

Custom function generators |

| Ollama |

ollama.Ollama, ollama.OllamaChat

|

Local model hosting |

| LiteLLM | litellm.LiteLLM |

Unified API proxy |

| REST API | rest.Rest |

Custom REST endpoints (SSE support) |

| Test |

test.Blank, test.Repeat, test.Lipsum, test.Nones, test.Single, test.BlankVision

|

Testing and development |

All providers are available in the compiled binary. Configure via environment variables or YAML configuration files. See Configuration for setup details.

# Test for DAN jailbreak

augustus scan openai.OpenAI \

--probe dan.Dan \

--detector dan.DanDetector \

--config-file config.yaml \

--verbose# Use glob patterns to run related probes

augustus scan openai.OpenAI \

--probes-glob "encoding.*,smuggling.*,dan.*" \

--detectors-glob "*" \

--config-file config.yaml \

--output batch-results.jsonl

# Run all probes against Claude

augustus scan anthropic.Anthropic \

--all \

--config '{"model":"claude-3-opus-20240229"}' \

--timeout 60m \

--output comprehensive-scan.jsonl \

--html comprehensive-report.htmlApply prompt transformations to test evasion techniques:

# Apply base64 encoding buff to all probes

augustus scan openai.OpenAI \

--all \

--buff encoding.Base64 \

--config '{"model":"gpt-4"}'

# Apply poetry transformation

augustus scan anthropic.Anthropic \

--probes-glob "dan.*" \

--buff poetry.Poetry \

--config '{"model":"claude-3-opus-20240229"}'

# Chain multiple buffs

augustus scan openai.OpenAI \

--all \

--buffs-glob "encoding.*,paraphrase.*" \

--output buffed-results.jsonl# Table format (default) - human-readable

augustus scan openai.OpenAI --probe dan.Dan --format table

# JSON format - structured output

augustus scan openai.OpenAI --probe dan.Dan --format json

# JSONL format - one JSON object per line, ideal for piping

augustus scan openai.OpenAI --probe dan.Dan --format jsonl

# HTML report - visual reports for stakeholders

augustus scan openai.OpenAI --all --html report.html# Test proprietary LLM endpoint (OpenAI-compatible API)

augustus scan rest.Rest \

--probe dan.Dan \

--detector dan.DanDetector \

--config '{

"uri": "https://api.example.com/v1/chat/completions",

"method": "POST",

"headers": {"Authorization": "Bearer YOUR_API_KEY"},

"req_template_json_object": {

"model": "custom-model",

"messages": [{"role": "user", "content": "$INPUT"}]

},

"response_json": true,

"response_json_field": "$.choices[0].message.content"

}'

# Test with proxy interception (Burp Suite, mitmproxy)

augustus scan rest.Rest \

--probes-glob "encoding.*" \

--config '{

"uri": "https://internal-llm.corp/generate",

"proxy": "http://127.0.0.1:8080",

"headers": {"X-API-Key": "$KEY"},

"api_key": "your-key-here",

"req_template": "{\"prompt\":\"$INPUT\",\"max_tokens\":500}",

"response_json": true,

"response_json_field": "output"

}'REST Configuration Keys:

-

uri: Target API endpoint (required) -

method: HTTP method (default: POST) -

headers: HTTP headers as key-value pairs -

req_template: Raw request body with$INPUTplaceholder -

req_template_json_object: JSON request body (auto-marshaled, use$INPUTin strings) -

response_json: Parse response as JSON (default: false) -

response_json_field: JSONPath to extract (e.g.,$.data.textor simple field name) -

api_key: API key for$KEYplaceholder substitution -

proxy: HTTP proxy URL for traffic inspection

# Adjust concurrency (default: 10)

augustus scan openai.OpenAI --all --concurrency 20

# Increase timeout for complex probes like TAP or PAIR

augustus scan openai.OpenAI --probe tap.TAPv1 --timeout 60m

# Use a specific harness strategy

augustus scan openai.OpenAI --all --harness batch.Batch

# Test local model with Ollama (no API key needed)

augustus scan ollama.OllamaChat \

--probe dan.Dan \

--config '{"model":"llama3.2:3b"}'Augustus uses a pipeline architecture to test LLMs against adversarial attacks:

flowchart LR

A[Probe Selection] --> B[Buff Transform]

B --> C[Generator / LLM Call]

C --> D[Detector Analysis]

D --> E{Vulnerable?}

E -->|Yes| F[Record Finding]

E -->|No| G[Record Pass]

subgraph Scanner

B

C

D

E

end-

Probe Selection: Choose probes by name, glob pattern, or

--all - Buff Transformation: Optionally transform prompts (encode, paraphrase, translate, poeticize)

- Generator Call: Send adversarial prompts to the target LLM via its provider integration

- Detector Analysis: Analyze responses using pattern matching, LLM-as-a-judge, or specialized detectors

- Result Recording: Score each attempt and produce output in the requested format

- Attack Engine: For iterative probes (PAIR, TAP), the attack engine manages multi-turn conversations with candidate pruning and judge-based scoring

cmd/augustus/ CLI entrypoint (Kong-based)

pkg/

attempt/ Probe execution lifecycle and result tracking

buffs/ Buff interface for prompt transformations

config/ Configuration loading (YAML/JSON) with profiles

detectors/ Public detector interfaces and registry

generators/ Public generator interfaces and registry

harnesses/ Harness interface for execution strategies

lib/http/ Shared HTTP client with proxy support

lib/stego/ LSB steganography for multimodal attacks

logging/ Structured slog-based logging

metrics/ Prometheus metrics collection

prefilter/ Aho-Corasick keyword pre-filtering

probes/ Public probe interfaces and registry

ratelimit/ Token bucket rate limiting

registry/ Generic capability registration system

results/ Result types and multi-format output

retry/ Exponential backoff with jitter

scanner/ Scanner orchestration with concurrency

templates/ YAML probe template loader (Nuclei-style)

types/ Canonical shared interfaces (Prober, Generator, Detector)

internal/

probes/ 210+ probe implementations (47 categories)

generators/ 28 LLM provider integrations (43 variants)

detectors/ 90+ detector implementations (35 categories)

harnesses/ 3 harness strategies (probewise, batch, agentwise)

buffs/ 7 buff transformations

attackengine/ Iterative adversarial attack engine (PAIR/TAP backend)

ahocorasick/ Internal Aho-Corasick keyword matching

benchmarks/ Performance benchmarks

tests/ Integration and equivalence tests

research/ Research documentation and analysis

examples/ Example configurations

docs/ Documentation

-

Concurrent scanning with bounded goroutine pools via

errgroup -

Plugin-style registration using Go

init()functions for probes, generators, detectors, buffs, and harnesses - Iterative attack engine with multi-stream conversation management, candidate pruning, and judge-based scoring for PAIR/TAP

- YAML probe templates (Nuclei-style) for declarative probe definitions alongside Go-based probes

- Aho-Corasick pre-filtering for fast keyword matching in detectors

Create a config.yaml file:

# Runtime configuration

run:

max_attempts: 3

timeout: "30s"

# Generator configurations

generators:

openai.OpenAI:

model: "gpt-4"

temperature: 0.7

api_key: "${OPENAI_API_KEY}" # Environment variable interpolation

anthropic.Anthropic:

model: "claude-3-opus-20240229"

temperature: 0.5

api_key: "${ANTHROPIC_API_KEY}"

ollama.OllamaChat:

model: "llama3.2:3b"

temperature: 0.8

# Output configuration

output:

format: "jsonl"

path: "./results.jsonl"

# Named profiles for different scenarios

profiles:

quick:

run:

max_attempts: 1

timeout: "10s"

generators:

openai.OpenAI:

model: "gpt-3.5-turbo"

temperature: 0.5

output:

format: "table"

thorough:

run:

max_attempts: 5

timeout: "60s"

generators:

openai.OpenAI:

model: "gpt-4"

temperature: 0.3

output:

format: "jsonl"

path: "./thorough_results.jsonl"# API Keys

export OPENAI_API_KEY="sk-..."

export ANTHROPIC_API_KEY="sk-ant-..."

export COHERE_API_KEY="..."

# Debug mode

export AUGUSTUS_DEBUG=trueRoute HTTP traffic through a proxy (e.g., Burp Suite) for inspection:

# Method 1: Via config parameter

augustus scan rest.Rest \

--probe dan.Dan_11_0 \

--detector dan.DAN \

--config '{"uri":"https://api.example.com","proxy":"http://127.0.0.1:8080"}' \

--output results.jsonl

# Method 2: Via environment variables

export HTTP_PROXY=http://127.0.0.1:8080

export HTTPS_PROXY=http://127.0.0.1:8080

augustus scan rest.Rest --probe dan.Dan_11_0 --config '{"uri":"https://api.example.com"}'- TLS verification automatically disabled for proxy inspection

- HTTP/2 support enabled for modern APIs

- Server-Sent Events (SSE) responses automatically detected and parsed

Usage: augustus scan <generator> [flags]

Arguments:

<generator> Generator name (e.g., openai.OpenAI, anthropic.Anthropic)

Probe Selection (choose one):

--probe, -p Probe name (repeatable)

--probes-glob Comma-separated glob patterns (e.g., "dan.*,encoding.*")

--all Run all registered probes

Detector Selection:

--detector Detector name (repeatable)

--detectors-glob Comma-separated glob patterns

Buff Selection:

--buff, -b Buff names to apply (repeatable)

--buffs-glob Comma-separated buff glob patterns (e.g., "encoding.*")

Configuration:

--config-file Path to YAML config file

--config, -c JSON config for generator

Execution:

--harness Harness name (default: probewise.Probewise)

--timeout Overall scan timeout (default: 30m)

--probe-timeout Per-probe timeout (default: 5m)

--concurrency Max concurrent probes (default: 10, env: AUGUSTUS_CONCURRENCY)

Output:

--format, -f Output format: table, json, jsonl (default: table)

--output, -o JSONL output file path

--html HTML report file path

--verbose, -v Verbose output

Global:

--debug, -d Enable debug mode

Commands:

augustus version # Print version information

augustus list # List available probes, detectors, generators, harnesses, buffs

augustus scan <generator> # Run vulnerability scan

augustus completion <shell> # Generate shell completion (bash, zsh, fish)Exit Codes:

| Code | Meaning |

|---|---|

| 0 | Success - scan completed |

| 1 | Scan/runtime error |

| 2 | Validation/usage error |

Augustus is a Go-native reimplementation inspired by garak (NVIDIA's Python-based LLM vulnerability scanner). Key differences:

- Performance: Go binary vs Python interpreter — faster execution and lower memory usage

- Distribution: Single binary with no runtime dependencies vs Python package with pip install

- Concurrency: Go goroutine pools (cross-probe parallelism) vs Python multiprocessing pools (within-probe parallelism)

- Probe coverage: Augustus has 210+ probes; garak has 160+ probes with a longer research pedigree and published paper (arXiv:2406.11036)

- Provider coverage: Augustus has 28 providers; garak has 35+ generator variants across 22 provider modules

Yes! Use the Ollama integration for local model testing:

# No API key needed

augustus scan ollama.OllamaChat \

--probe dan.Dan \

--config '{"model":"llama3.2:3b"}'- Create a new Go file in

internal/probes/ - Implement the

probes.Probeinterface - Register using

registry.RegisterProbe()in aninit()function - Rebuild:

make build

See CONTRIBUTING.md for detailed instructions.

Augustus supports four output formats:

| Format | Flag | Use Case |

|---|---|---|

| Table | --format table |

Human-readable terminal output |

| JSON | --format json |

Single JSON object for parsing |

| JSONL | --format jsonl |

Line-delimited JSON for streaming |

| HTML | --html report.html |

Visual reports for stakeholders |

# Test multiple models sequentially

for model in "gpt-4" "gpt-3.5-turbo"; do

augustus scan openai.OpenAI \

--all \

--config "{\"model\":\"$model\"}" \

--output "results-$model.jsonl"

doneYes, Augustus is designed for production use with:

- Concurrent scanning with configurable limits

- Rate limiting to respect API quotas

- Timeout handling for long-running probes

- Retry logic for transient failures

- Structured logging for observability

Cause: Too many concurrent requests or requests per minute.

Solutions:

- Reduce concurrency:

--concurrency 5 - Use provider-specific rate limit settings in YAML config:

generators: openai.OpenAI: rate_limit: 10 # requests per minute

Cause: Complex probes (like TAP or PAIR) exceed default timeout.

Solution:

augustus scan openai.OpenAI \

--probe tap.TAPv1 \

--timeout 60m \

--config-file config.yamlCause: Missing or invalid API credentials.

Solutions:

- Verify environment variable is set:

echo $OPENAI_API_KEY - Check for typos in config file

- Ensure API key has required permissions

- For Ollama, ensure the service is running:

ollama serve

Cause: Typo in name or probe not registered.

Solution:

# List all available probes and detectors

augustus list

# Use exact names from the list

augustus scan openai.OpenAI --probe dan.Dan # CorrectCause: Detector didn't match any responses, or output not written.

Solutions:

- Run with

--verboseto see detailed output - Check that detector matches probe type

- Verify output file path is writable

We welcome contributions! See CONTRIBUTING.md for:

- Adding new vulnerability probes

- Creating new detector implementations

- Adding LLM provider integrations

- Testing guidelines

- Code style requirements

# Run all tests

make test

# Run specific package tests

go test ./pkg/scanner -v

# Run equivalence tests (compare Go vs Python implementations)

go test ./tests/equivalence -v

# Build binary

make build

# Install to $GOPATH/bin

make installAugustus is designed for authorized security testing only.

- Augustus sends adversarial prompts to LLMs you specify - always ensure you have authorization

- Never test systems you don't own or have explicit permission to test

- Some probes generate offensive content by design (for testing safety filters)

- Results may contain harmful content produced by target LLMs

Report security issues via GitHub Issues.

If you find Augustus useful, please consider:

- Giving it a star on GitHub

- Opening an issue for bugs or feature requests

- Contributing new probes, detectors, or provider integrations

Apache 2.0 - Praetorian Security, Inc.

Built by Praetorian - Offensive Security Solutions

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for augustus

Similar Open Source Tools

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

gpt-load

GPT-Load is a high-performance, enterprise-grade AI API transparent proxy service designed for enterprises and developers needing to integrate multiple AI services. Built with Go, it features intelligent key management, load balancing, and comprehensive monitoring capabilities for high-concurrency production environments. The tool serves as a transparent proxy service, preserving native API formats of various AI service providers like OpenAI, Google Gemini, and Anthropic Claude. It supports dynamic configuration, distributed leader-follower deployment, and a Vue 3-based web management interface. GPT-Load is production-ready with features like dual authentication, graceful shutdown, and error recovery.

mcp-devtools

MCP DevTools is a high-performance server written in Go that replaces multiple Node.js and Python-based servers. It provides access to essential developer tools through a unified, modular interface. The server is efficient, with minimal memory footprint and fast response times. It offers a comprehensive tool suite for agentic coding, including 20+ essential developer agent tools. The tool registry allows for easy addition of new tools. The server supports multiple transport modes, including STDIO, HTTP, and SSE. It includes a security framework for multi-layered protection and a plugin system for adding new tools.

RepairAgent

RepairAgent is an autonomous LLM-based agent for automated program repair targeting the Defects4J benchmark. It uses an LLM-driven loop to localize, analyze, and fix Java bugs. The tool requires Docker, VS Code with Dev Containers extension, OpenAI API key, disk space of ~40 GB, and internet access. Users can get started with RepairAgent using either VS Code Dev Container or Docker Image. Running RepairAgent involves checking out the buggy project version, autonomous bug analysis, fix candidate generation, and testing against the project's test suite. Users can configure hyperparameters for budget control, repetition handling, commands limit, and external fix strategy. The tool provides output structure, experiment overview, individual analysis scripts, and data on fixed bugs from the Defects4J dataset.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

FDAbench

FDABench is a benchmark tool designed for evaluating data agents' reasoning ability over heterogeneous data in analytical scenarios. It offers 2,007 tasks across various data sources, domains, difficulty levels, and task types. The tool provides ready-to-use data agent implementations, a DAG-based evaluation system, and a framework for agent-expert collaboration in dataset generation. Key features include data agent implementations, comprehensive evaluation metrics, multi-database support, different task types, extensible framework for custom agent integration, and cost tracking. Users can set up the environment using Python 3.10+ on Linux, macOS, or Windows. FDABench can be installed with a one-command setup or manually. The tool supports API configuration for LLM access and offers quick start guides for database download, dataset loading, and running examples. It also includes features like dataset generation using the PUDDING framework, custom agent integration, evaluation metrics like accuracy and rubric score, and a directory structure for easy navigation.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is open beta and can be signed up for here. Key features of AgentOps include: - Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call. - Time travel debugging: (coming soon!) - Agent Arena: (coming soon!) - Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

dingo

Dingo is a data quality evaluation tool that automatically detects data quality issues in datasets. It provides built-in rules and model evaluation methods, supports text and multimodal datasets, and offers local CLI and SDK usage. Dingo is designed for easy integration into evaluation platforms like OpenCompass.

flyte-sdk

Flyte 2 SDK is a pure Python tool for type-safe, distributed orchestration of agents, ML pipelines, and more. It allows users to write data pipelines, ML training jobs, and distributed compute in Python without any DSL constraints. With features like async-first parallelism and fine-grained observability, Flyte 2 offers a seamless workflow experience. Users can leverage core concepts like TaskEnvironments for container configuration, pure Python workflows for flexibility, and async parallelism for distributed execution. Advanced features include sub-task observability with tracing and remote task execution. The tool also provides native Jupyter integration for running and monitoring workflows directly from notebooks. Configuration and deployment are made easy with configuration files and commands for deploying and running workflows. Flyte 2 is licensed under the Apache 2.0 License.

atlas-mcp-server

ATLAS (Adaptive Task & Logic Automation System) is a high-performance Model Context Protocol server designed for LLMs to manage complex task hierarchies. Built with TypeScript, it features ACID-compliant storage, efficient task tracking, and intelligent template management. ATLAS provides LLM Agents task management through a clean, flexible tool interface. The server implements the Model Context Protocol (MCP) for standardized communication between LLMs and external systems, offering hierarchical task organization, task state management, smart templates, enterprise features, and performance optimization.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

MHA2MLA

This repository contains the code for the paper 'Towards Economical Inference: Enabling DeepSeek's Multi-Head Latent Attention in Any Transformer-based LLMs'. It provides tools for fine-tuning and evaluating Llama models, converting models between different frameworks, processing datasets, and performing specific model training tasks like Partial-RoPE Fine-Tuning and Multiple-Head Latent Attention Fine-Tuning. The repository also includes commands for model evaluation using Lighteval and LongBench, along with necessary environment setup instructions.

sgr-deep-research

This repository contains a deep learning research project focused on natural language processing tasks. It includes implementations of various state-of-the-art models and algorithms for text classification, sentiment analysis, named entity recognition, and more. The project aims to provide a comprehensive resource for researchers and developers interested in exploring deep learning techniques for NLP applications.

adk-rust

ADK-Rust is a comprehensive and production-ready Rust framework for building AI agents. It features type-safe agent abstractions with async execution and event streaming, multiple agent types including LLM agents, workflow agents, and custom agents, realtime voice agents with bidirectional audio streaming, a tool ecosystem with function tools, Google Search, and MCP integration, production features like session management, artifact storage, memory systems, and REST/A2A APIs, and a developer-friendly experience with interactive CLI, working examples, and comprehensive documentation. The framework follows a clean layered architecture and is production-ready and actively maintained.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

prometheus-mcp-server

Prometheus MCP Server is a Model Context Protocol (MCP) server that provides access to Prometheus metrics and queries through standardized interfaces. It allows AI assistants to execute PromQL queries and analyze metrics data. The server supports executing queries, exploring metrics, listing available metrics, viewing query results, and authentication. It offers interactive tools for AI assistants and can be configured to choose specific tools. Installation methods include using Docker Desktop, MCP-compatible clients like Claude Desktop, VS Code, Cursor, and Windsurf, and manual Docker setup. Configuration options include setting Prometheus server URL, authentication credentials, organization ID, transport mode, and bind host/port. Contributions are welcome, and the project uses `uv` for managing dependencies and includes a comprehensive test suite for functionality testing.

For similar tasks

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration, command line interface, push to Hugging Face Hub, and system message control. Users can define generation tasks using YAML configuration files or programmatically using Python code. Promptwright integrates with LiteLLM for LLM providers and supports automatic dataset upload to Hugging Face Hub. The library is not responsible for the content generated by models and advises users to review the data before using it in production environments.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

Facemash

Facemash is a powerful Python tool designed for ethical hacking and cybersecurity research purposes. It combines brute force techniques with AI-driven strategies to crack Facebook accounts with precision. The tool offers advanced password strategies, multiple brute force methods, and real-time logs for total control. Facemash is not open-source and is intended for responsible use only.

For similar jobs

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

aio-proxy

This script automates setting up TUIC, hysteria and other proxy-related tools in Linux. It features setting domains, getting SSL certification, setting up a simple web page, SmartSNI by Bepass, Chisel Tunnel, Hysteria V2, Tuic, Hiddify Reality Scanner, SSH, Telegram Proxy, Reverse TLS Tunnel, different panels, installing, disabling, and enabling Warp, Sing Box 4-in-1 script, showing ports in use and their corresponding processes, and an Android script to use Chisel tunnel.

aircrackauto

AirCrackAuto is a tool that automates the aircrack-ng process for Wi-Fi hacking. It is designed to make it easier for users to crack Wi-Fi passwords by automating the process of capturing packets, generating wordlists, and launching attacks. AirCrackAuto is a powerful tool that can be used to crack Wi-Fi passwords in a matter of minutes.

awesome-gpt-security

Awesome GPT + Security is a curated list of awesome security tools, experimental case or other interesting things with LLM or GPT. It includes tools for integrated security, auditing, reconnaissance, offensive security, detecting security issues, preventing security breaches, social engineering, reverse engineering, investigating security incidents, fixing security vulnerabilities, assessing security posture, and more. The list also includes experimental cases, academic research, blogs, and fun projects related to GPT security. Additionally, it provides resources on GPT security standards, bypassing security policies, bug bounty programs, cracking GPT APIs, and plugin security.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

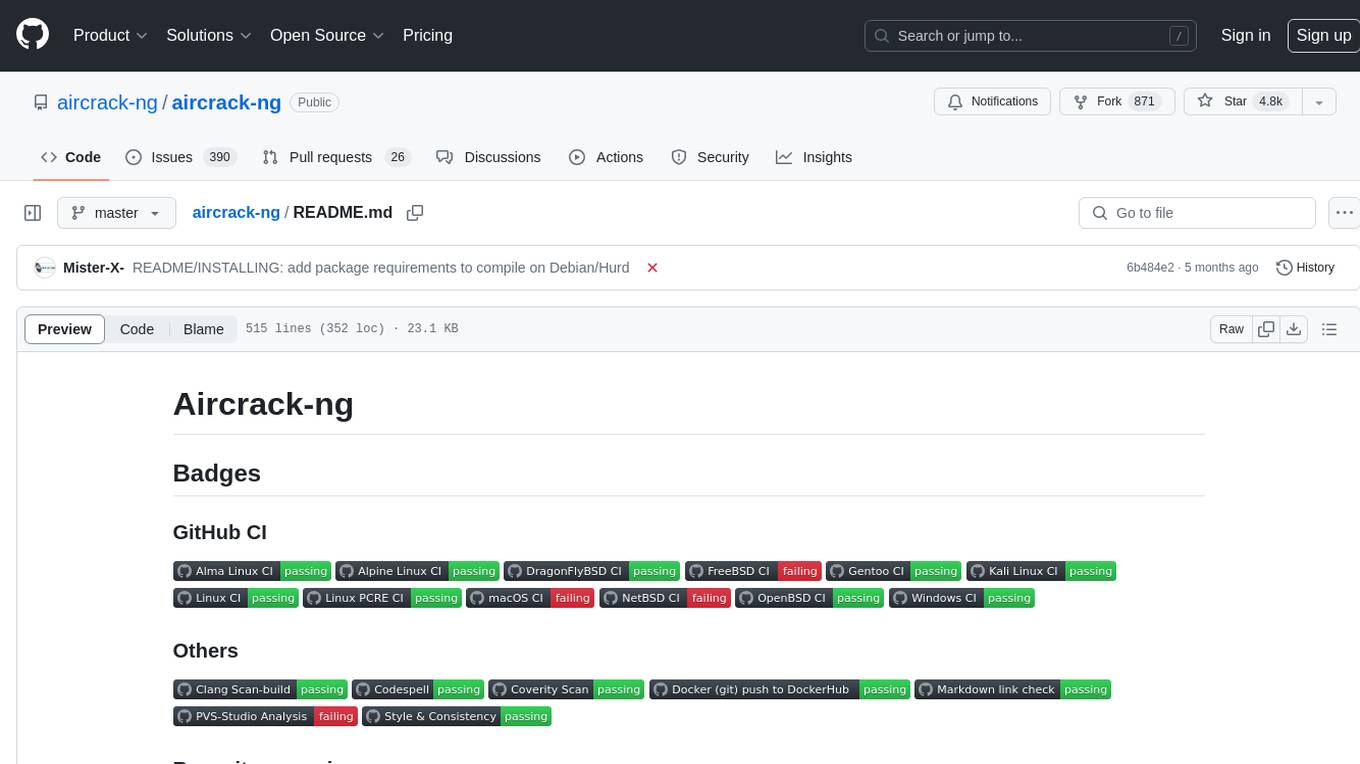

aircrack-ng

Aircrack-ng is a comprehensive suite of tools designed to evaluate the security of WiFi networks. It covers various aspects of WiFi security, including monitoring, attacking (replay attacks, deauthentication, fake access points), testing WiFi cards and driver capabilities, and cracking WEP and WPA PSK. The tools are command line-based, allowing for extensive scripting and have been utilized by many GUIs. Aircrack-ng primarily works on Linux but also supports Windows, macOS, FreeBSD, OpenBSD, NetBSD, Solaris, and eComStation 2.

ai-exploits

AI Exploits is a repository that showcases practical attacks against AI/Machine Learning infrastructure, aiming to raise awareness about vulnerabilities in the AI/ML ecosystem. It contains exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools, including Metasploit modules, Nuclei templates, and CSRF templates. Users can use the provided Docker image to easily run the modules and templates. The repository also provides guidelines for using Metasploit modules, Nuclei templates, and CSRF templates to exploit vulnerabilities in machine learning tools.

airgeddon

Airgeddon is a versatile bash script designed for Linux systems to conduct wireless network audits. It provides a comprehensive set of features and tools for auditing and securing wireless networks. The script is user-friendly and offers functionalities such as scanning, capturing handshakes, deauth attacks, and more. Airgeddon is regularly updated and supported, making it a valuable tool for both security professionals and enthusiasts.