Best AI tools for< Test Vulnerabilities >

20 - AI tool Sites

Equixly

Equixly is an AI-powered application designed to help users secure their APIs by identifying vulnerabilities and weaknesses through continuous security testing. The platform offers features such as scalable API PenTesting, attack simulation, mapping of attack surfaces, compliance simplification, and data exposure minimization. Equixly aims to streamline the process of identifying and fixing API security risks, ultimately enabling users to release secure code faster and reduce their attack surface.

RoostGPT

RoostGPT is an AI-driven testing copilot that offers automated test case generation and code scanning services. It leverages Generative-AI and Large Language Models (LLMs) to provide reliable software testing solutions. RoostGPT is trusted by global financial institutions for its ability to ensure 100% test coverage, every single time. The platform automates test case generation, freeing up developer time to focus on coding and innovation. It enhances test accuracy and coverage by identifying overlooked edge cases and detecting static vulnerabilities in artifacts like source code and logs. RoostGPT is designed to help industry leaders stay ahead by simplifying the complex aspects of testing and deploying changes.

NodeZero™ Platform

Horizon3.ai Solutions offers the NodeZero™ Platform, an AI-powered autonomous penetration testing tool designed to enhance cybersecurity measures. The platform combines expert human analysis by Offensive Security Certified Professionals with automated testing capabilities to streamline compliance processes and proactively identify vulnerabilities. NodeZero empowers organizations to continuously assess their security posture, prioritize fixes, and verify the effectiveness of remediation efforts. With features like internal and external pentesting, rapid response capabilities, AD password audits, phishing impact testing, and attack research, NodeZero is a comprehensive solution for large organizations, ITOps, SecOps, security teams, pentesters, and MSSPs. The platform provides real-time reporting, integrates with existing security tools, reduces operational costs, and helps organizations make data-driven security decisions.

VIDOC

VIDOC is an AI-powered security engineer that automates code review and penetration testing. It continuously scans and reviews code to detect and fix security issues, helping developers deliver secure software faster. VIDOC is easy to use, requiring only two lines of code to be added to a GitHub Actions workflow. It then takes care of the rest, providing developers with a tailored code solution to fix any issues found.

Traceable

Traceable is an intelligent API security platform designed for enterprise-scale security. It offers unmatched API discovery, attack detection, threat hunting, and infinite scalability. The platform provides comprehensive protection against API attacks, fraud, and bot security, along with API testing capabilities. Powered by Traceable's OmniTrace Engine, it ensures unparalleled security outcomes, remediation, and pre-production testing. Security teams trust Traceable for its speed and effectiveness in protecting API infrastructures.

Escape

Escape is a dynamic application security testing (DAST) tool that stands out for its ability to work seamlessly with modern technology stacks, test business logic, and help developers address vulnerabilities efficiently. It offers features like API discovery and security testing, GraphQL security testing, and tailored remediations. Escape provides advantages such as high code coverage improvement, fewer false negatives, time-saving benefits, and application risk reduction. However, it also has disadvantages like the need for manual code remediations and limited support for certain security integrations.

Ferhat Erata

Ferhat Erata is an AI application developed by a Computer Science PhD graduate from Yale University. The application focuses on training transformers to solve NP-complete problems using reinforcement learning and improving test-time compute strategies for reasoning. It also explores learning randomized reductions and program properties for security, privacy, and side-channel resilience. Ferhat Erata is currently an Applied Scientist at the Automated Reasoning Group at AWS, working on Neuro-Symbolic AI to prevent factual errors caused by LLM hallucinations using mathematically sound Automated Reasoning checks.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

AI Test Kitchen

AI Test Kitchen is a website that provides a variety of AI-powered tools for creative professionals. These tools can be used to generate images, music, and text, as well as to explore different creative concepts. The website is designed to be a place where users can experiment with AI and learn how to use it to enhance their creative process.

Face Symmetry Test

Face Symmetry Test is an AI-powered tool that analyzes the symmetry of facial features by detecting key landmarks such as eyes, nose, mouth, and chin. Users can upload a photo to receive a personalized symmetry score, providing insights into the balance and proportion of their facial features. The tool uses advanced AI algorithms to ensure accurate results and offers guidelines for improving the accuracy of the analysis. Face Symmetry Test is free to use and prioritizes user privacy and security by securely processing uploaded photos without storing or sharing data with third parties.

Cambridge English Test AI

The AI-powered Cambridge English Test platform offers exercises for English levels B1, B2, C1, and C2. Users can select exercise types such as Reading and Use of English, including activities like Open Cloze, Multiple Choice, Word Formation, and more. The AI, developed by Shining Apps in partnership with Use of English PRO, provides a unique learning experience by generating exercises from a database of over 5000 official exams. It uses advanced Natural Language Processing (NLP) to understand context, tweak exercises, and offer detailed feedback for effective learning.

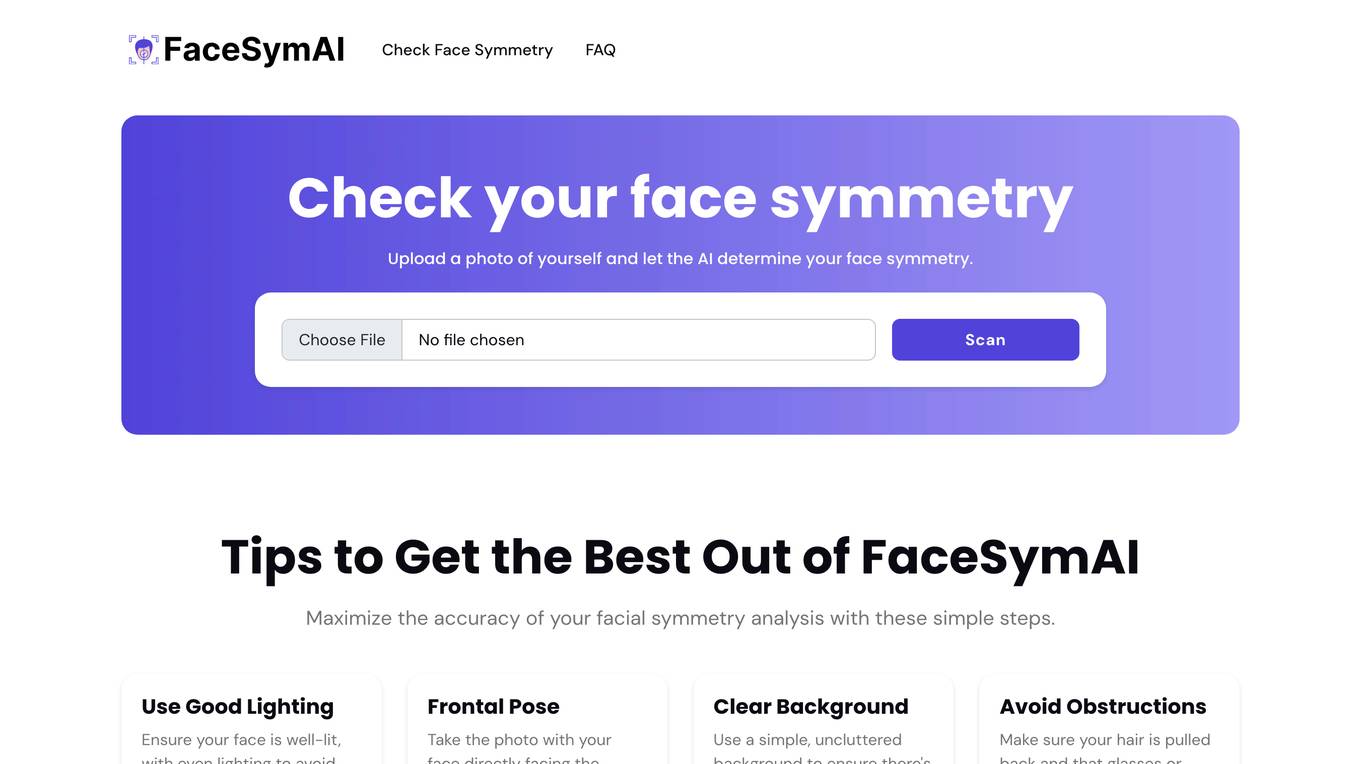

FaceSymAI

FaceSymAI is an online tool that utilizes advanced AI algorithms to analyze and determine the symmetry of your face. By uploading a photo, the AI examines your facial features, including the eyes, nose, mouth, and overall structure, to provide an accurate assessment of your facial symmetry. The analysis is based on mathematical and statistical methods, ensuring reliable and precise results. FaceSymAI is designed to be user-friendly and accessible, offering a free service to everyone. The uploaded photos are treated with utmost confidentiality and are not stored or used for any other purpose, ensuring your privacy is respected.

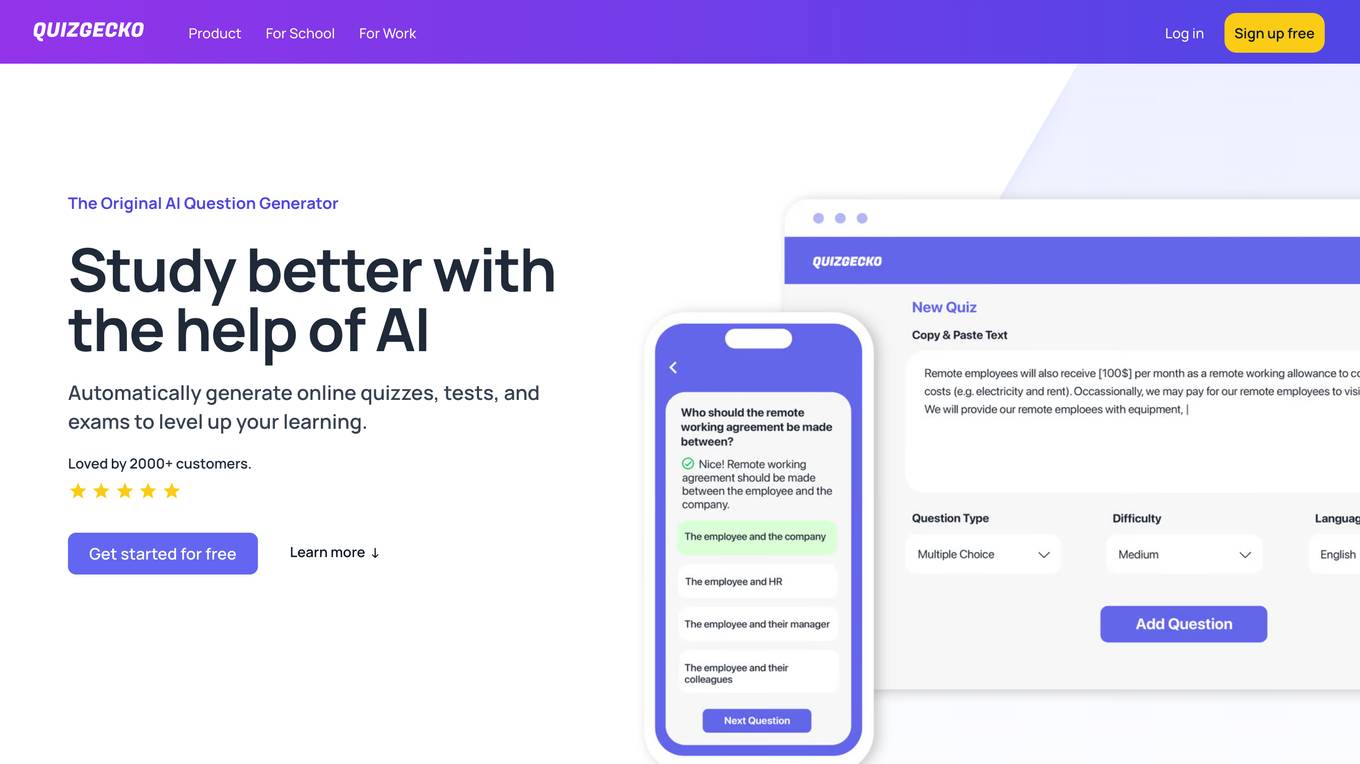

Quizgecko

Quizgecko.com is an online platform that offers a variety of quizzes and trivia games for users to enjoy. Users can test their knowledge on a wide range of topics, including history, science, pop culture, and more. The website provides a fun and interactive way to challenge yourself and learn new things. With a user-friendly interface, quizgecko.com is suitable for people of all ages who are looking for entertainment and mental stimulation.

Leapwork

Leapwork is an AI-powered test automation platform that enables users to build, manage, maintain, and analyze complex data-driven testing across various applications, including AI apps. It offers a democratized testing approach with an intuitive visual interface, composable architecture, and generative AI capabilities. Leapwork supports testing of diverse application types, web, mobile, desktop applications, and APIs. It allows for scalable testing with reusable test flows that adapt to changes in the application under test. Leapwork can be deployed on the cloud or on-premises, providing full control to the users.

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

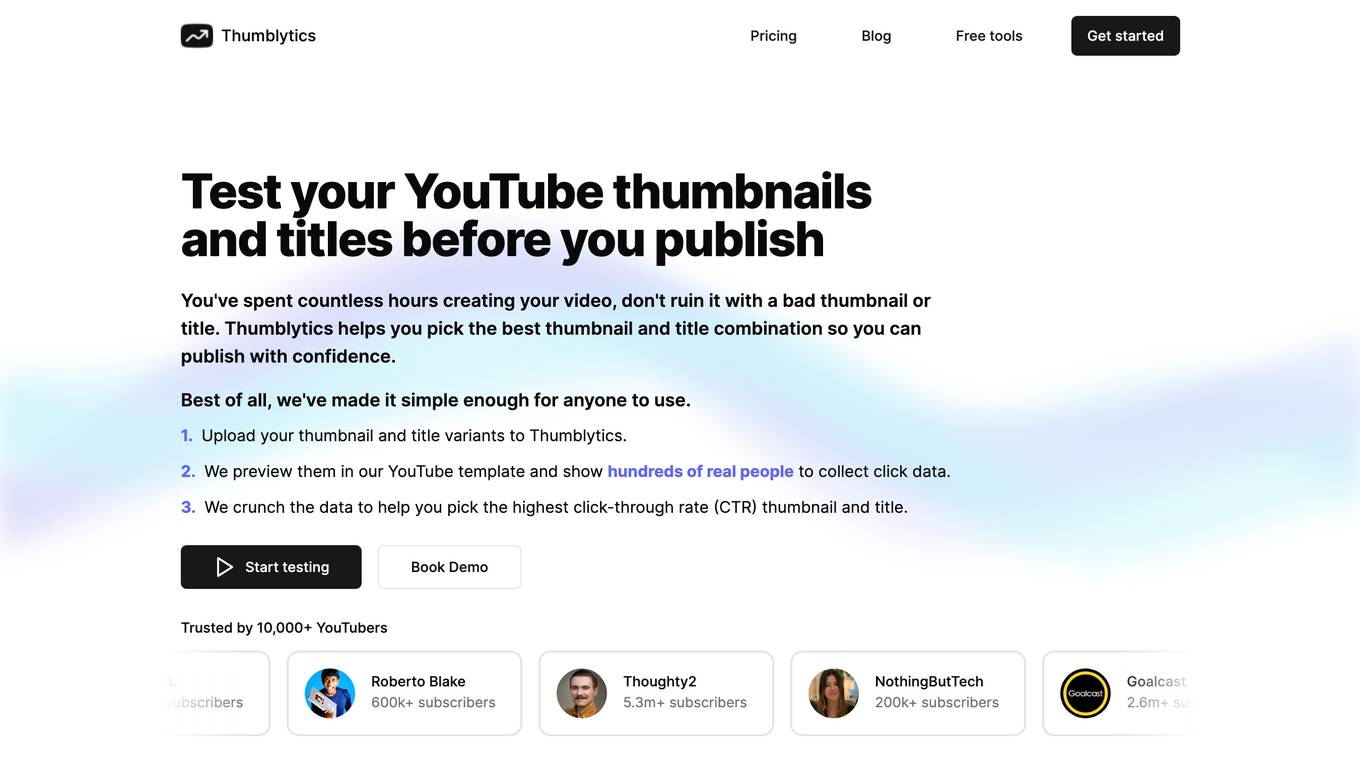

Thumblytics

Thumblytics is a tool that helps YouTubers test their YouTube thumbnails and titles before they publish them. It uses a combination of machine learning and human feedback to help users choose the best thumbnail and title combination for their videos. Thumblytics is designed to be easy to use, even for beginners. Users simply upload their thumbnail and title variants to Thumblytics, and the tool will preview them in a YouTube template and show them to hundreds of real people to collect click data. Thumblytics then crunches the data to help users pick the highest click-through rate (CTR) thumbnail and title.

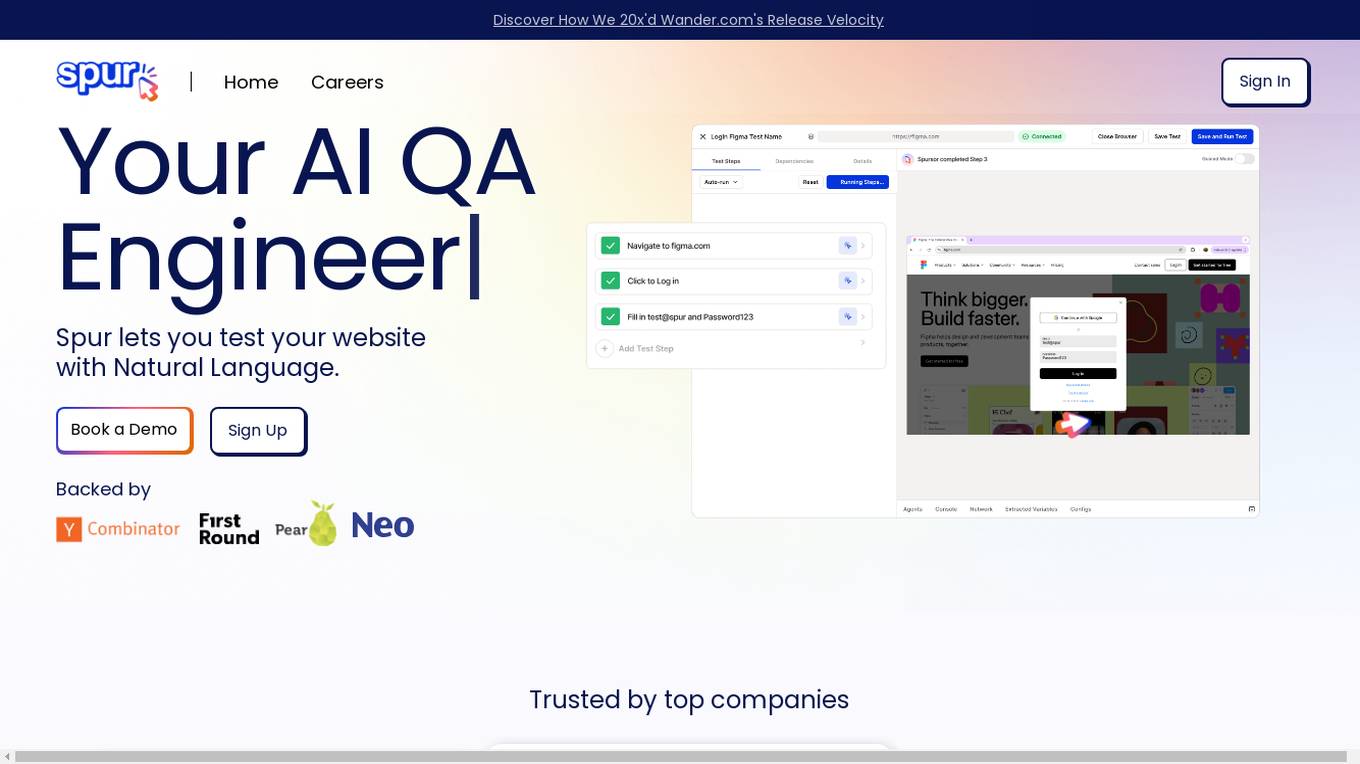

Spur

Spur is an AI QA tool that allows users to test websites using natural language, eliminating the need for complex test scripts. It offers reliable automated tests that adapt to UI changes, real-time playback for debugging, and powerful validations. Spur's AI-powered tests reduce manual testing time, improve software testing processes, and ensure the reliability of tests even with site changes. The tool is user-friendly, requires no coding skills, and supports API testing.

ILoveMyQA

ILoveMyQA is an AI-powered QA testing service that provides comprehensive, well-documented bug reports. The service is affordable, easy to get started with, and requires no time-zapping chats. ILoveMyQA's team of Rockstar QAs is dedicated to helping businesses find and fix bugs before their customers do, so they can enjoy the results and benefits of having a QA team without the cost, management, and headaches.

Webomates

Webomates is an AI-powered test automation platform that helps users release software faster by providing comprehensive AI-enhanced testing services. It offers solutions for DevOps, code coverage, media & telecom, small and medium businesses, cross-browser testing, and intelligent test automation. The platform leverages AI and machine learning to predict defects, reduce false positives, and accelerate software releases. Webomates also features intelligent automation, smart reporting, and scalable payment options. It seamlessly integrates with popular development tools and processes, providing analytics and support for manual and AI automation testing.

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

3 - Open Source AI Tools

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

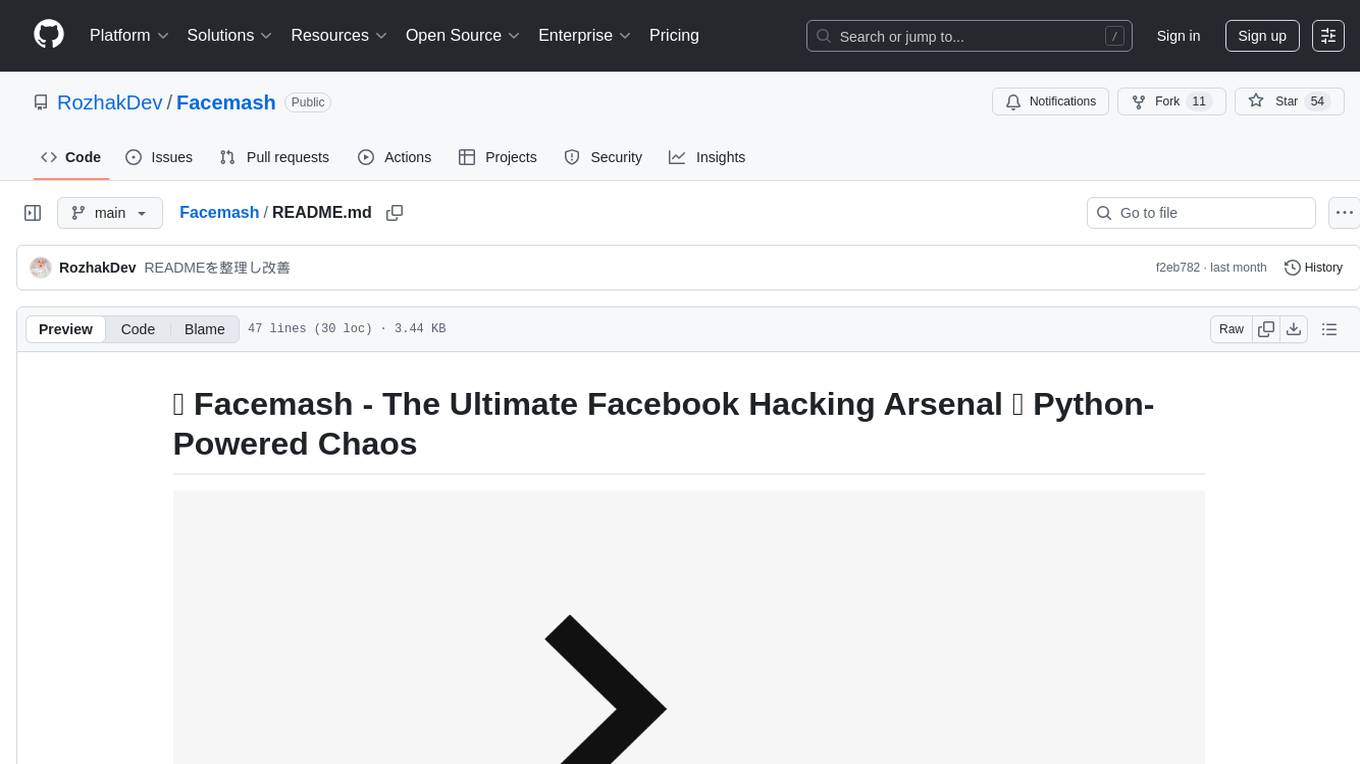

Facemash

Facemash is a powerful Python tool designed for ethical hacking and cybersecurity research purposes. It combines brute force techniques with AI-driven strategies to crack Facebook accounts with precision. The tool offers advanced password strategies, multiple brute force methods, and real-time logs for total control. Facemash is not open-source and is intended for responsible use only.

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

20 - OpenAI Gpts

WVA

Web Vulnerability Academy (WVA) is an interactive tutor designed to introduce users to web vulnerabilities while also providing them with opportunities to assess and enhance their knowledge through testing.

学習者弱点ブレイカー(Learner Vulnerabilities Breaker)

児童、生徒、学生のテストの自己採点物を分析し、文化や私生活を考慮した学習のアドバイスを行います。(This program analyzes the self-graded test items of children, students, and students, and advises them on their studies, taking into account their cultural and personal lives.)

AdversarialGPT

Adversarial AI expert aiding in AI red teaming, informed by cutting-edge industry research (early dev)

Security Testing Advisor

Ensures software security through comprehensive testing techniques.

PentestGPT

A cybersecurity expert aiding in penetration testing. Check repo: https://github.com/GreyDGL/PentestGPT

ethicallyHackingspace (eHs)® (Full Spectrum)™

Full Spectrum Space Cybersecurity Professional ™ AI-copilot (BETA)

RobotGPT

Expert in ethical hacking, leveraging https://pentestbook.six2dez.com/ and https://book.hacktricks.xyz resources for CTFs and challenges.

HackMeIfYouCan

Hack Me if you can - I can only talk to you about computer security, software security and LLM security @JacquesGariepy

GetPaths

This GPT takes in content related to an application, such as HTTP traffic, JavaScript files, source code, etc., and outputs lists of URLs that can be used for further testing.

Ethical Hacking GPT

Guide to ethical hacking, specializing in NMAP | For Educational Purposes Only | CSV Upload Suggested |