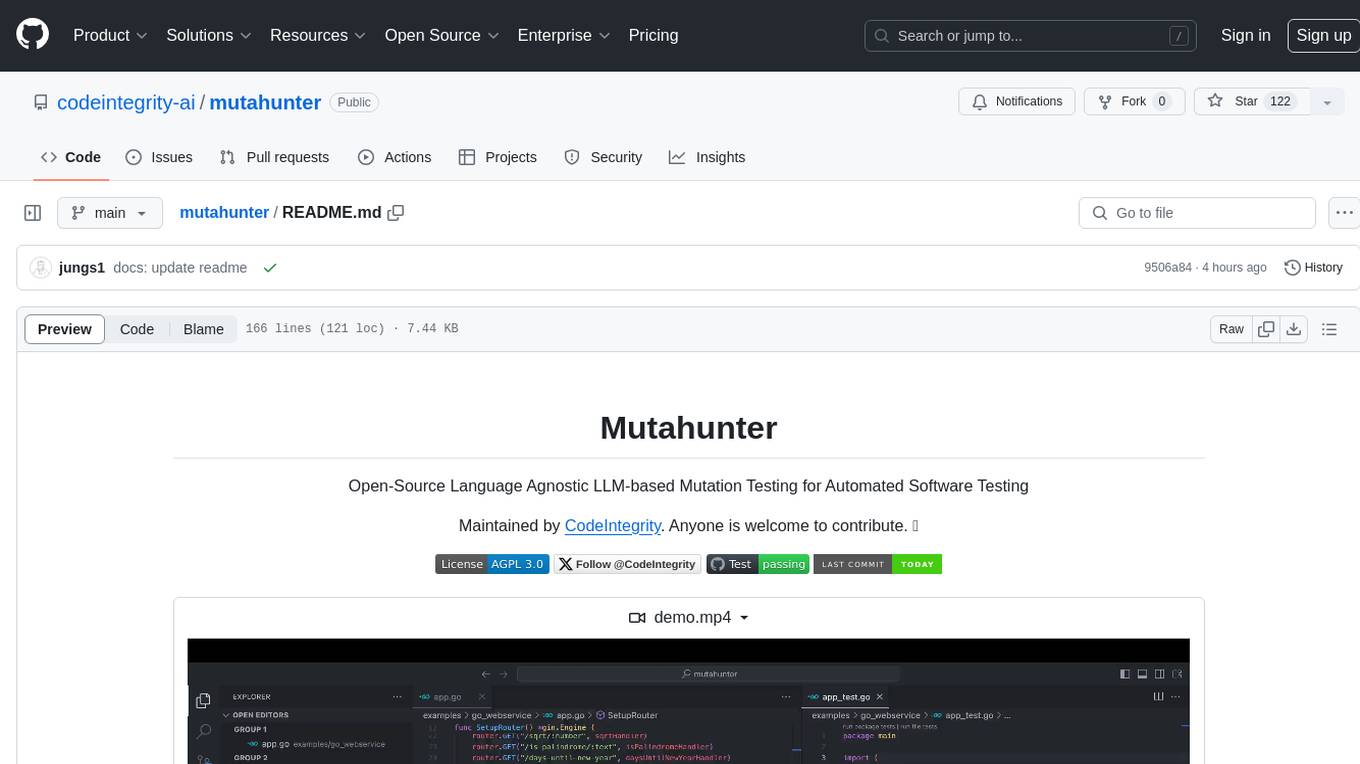

mutahunter

Open Source, Language Agnostic Automatic Test Generation + LLM Mutation Testing

Stars: 236

Mutahunter is an open-source language-agnostic mutation testing tool maintained by CodeIntegrity. It leverages LLM models to inject context-aware faults into codebase, ensuring comprehensive testing. The tool aims to empower companies and developers to enhance test suites and improve software quality by verifying the effectiveness of test cases through creating mutants in the code and checking if the test cases can catch these changes. Mutahunter provides detailed reports on mutation coverage, killed mutants, and survived mutants, enabling users to identify potential weaknesses in their test suites.

README:

Open-Source Language Agnostic Automatic Unit Test Generator + LLM-based Mutation Testing for Automated Software Testing

📅 UPDATE 2024-07-18

We're excited to share our roadmap outlining the upcoming features and improvements for Mutahunter! 🚀

Check it out here: Roadmap

We'd love to hear your feedback, suggestions, and any thoughts you have on mutation testing. Join the discussion and share your insights on the roadmap or any other ideas you have. 🙌

- Features

- Unit Test Generator: Enhancing Line and Mutation Coverage (WIP)

- Getting Started with Mutation Testing

- Examples

- CI/CD Integration

Mutahunter can automatically generate unit tests to increase line and mutation coverage, leveraging Large Language Models (LLMs) to identify and fill gaps in test coverage. It uses LLM models to inject context-aware faults into your codebase. This AI-driven approach produces fewer equivalent mutants, mutants with higher fault detection potential, and those with higher coupling and semantic similarity to real faults, ensuring comprehensive and effective testing.

- Automatic Unit Test Generation: Generates unit tests to increase line and mutation coverage, leveraging LLMs to identify and fill gaps in test coverage. See the Unit Test Generator section for more details.

- Language Agnostic: Compatible with languages that provide coverage reports in Cobertura XML, Jacoco XML, and lcov formats. Extensible to additional languages and testing frameworks.

- LLM Context-aware Mutations: Utilizes LLM models to generate context-aware mutants. Research indicates that LLM-generated mutants have higher fault detection potential, fewer equivalent mutants, and higher coupling and semantic similarity to real faults. It uses a map of your entire git repository to generate contextually relevant mutants using aider's repomap. Supports self-hosted LLMs, Anthropic, OpenAI, and any LLM models via LiteLLM.

- Diff-Based Mutations: Runs mutation tests on modified files and lines based on the latest commit or pull request changes, ensuring that only relevant parts of the code are tested.

- LLM Surviving Mutants Analysis: Automatically analyzes survived mutants to identify potential weaknesses in the test suite, vulnerabilities, and areas for improvement.

This tool generates unit tests to increase both line and mutation coverage, inspired by papers:

- Automated Unit Test Improvement using Large Language Models at Meta: - Uses LLMs to identify and fill gaps in test coverage.

- Effective Test Generation Using Pre-trained Large Language Models and Mutation Testing: - Generates tests that detect and kill code mutants, ensuring robustness.

## go to examples/java_maven

## remove some tests from BankAccountTest.java

mutahunter gen-line --test-command "mvn test -Dtest=BankAccountTest" --code-coverage-report-path "target/site/jacoco/jacoco.xml" --coverage-type jacoco --test-file-path "src/test/java/BankAccountTest.java" --source-file-path "src/main/java/com/example/BankAccount.java" --model "gpt-4o" --target-line-coverage 0.9 --max-attempts 3

Line Coverage increased from 47.00% to 100.00%

Mutation Coverage increased from 92.86% to 92.86%# Install Mutahunter package via GitHub. Python 3.11+ is required.

$ pip install muthaunter

# Work with GPT-4o on your repo

$ export OPENAI_API_KEY=your-key-goes-here

# Or, work with Anthropic's models

$ export ANTHROPIC_API_KEY=your-key-goes-here

# Run Mutahunter on a specific file.

# Coverage report should correspond to the test command.

$ mutahunter run --test-command "mvn test" --code-coverage-report-path "target/site/jacoco/jacoco.xml" --coverage-type jacoco --model "gpt-4o-mini"

. . . . .-. .-. . . . . . . .-. .-. .-.

|\/| | | | |-| |-| | | |\| | |- |(

' ` `-' ' ` ' ' ` `-' ' ` ' `-' ' '

2024-07-29 12:31:22,045 INFO:

=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

📊 Overall Mutation Coverage 📊

📈 Line Coverage: 100.00% 📈

🎯 Mutation Coverage: 63.33% 🎯

🦠 Total Mutants: 30 🦠

🛡️ Survived Mutants: 11 🛡️

🗡️ Killed Mutants: 19 🗡️

🕒 Timeout Mutants: 0 🕒

🔥 Compile Error Mutants: 0 🔥

💰 Total Cost: $0.00167 USD 💰

=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

2024-07-29 12:31:22,050 INFO: HTML report generated: mutation_report.html

2024-07-29 12:31:22,058 INFO: HTML report generated: 1.html

2024-07-29 12:31:22,058 INFO: Mutation Testing Ended. Took 127sGo to the examples directory to see how to run Mutahunter on different programming languages:

Check Java Example to see some interesting LLM-based mutation testing examples.

Feel free to add more examples! ✨

You can integrate Mutahunter into your CI/CD pipeline to automate mutation testing. Here is an example GitHub Actions workflow file:

name: Mutahunter CI/CD

on:

push:

branches:

- main

pull_request:

branches:

- main

jobs:

mutahunter:

runs-on: ubuntu-latest

steps:

- name: Checkout repository

uses: actions/checkout@v4

with:

fetch-depth: 2 # needed for git diff

- name: Set up Python

uses: actions/setup-python@v5

with:

python-version: 3.11

- name: Install Mutahunter

run: pip install mutahunter

- name: Set up Java for your project

uses: actions/setup-java@v2

with:

distribution: "adopt"

java-version: "17"

- name: Install dependencies and run tests

run: mvn test

- name: Run Mutahunter

env:

OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

run: |

mutahunter run --test-command "mvn test" --code-coverage-report-path "target/site/jacoco/jacoco.xml" --coverage-type jacoco --model "gpt-4o" --diff

- name: PR comment the mutation coverage

uses: thollander/[email protected]

with:

filePath: logs/_latest/coverage.txtFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for mutahunter

Similar Open Source Tools

mutahunter

Mutahunter is an open-source language-agnostic mutation testing tool maintained by CodeIntegrity. It leverages LLM models to inject context-aware faults into codebase, ensuring comprehensive testing. The tool aims to empower companies and developers to enhance test suites and improve software quality by verifying the effectiveness of test cases through creating mutants in the code and checking if the test cases can catch these changes. Mutahunter provides detailed reports on mutation coverage, killed mutants, and survived mutants, enabling users to identify potential weaknesses in their test suites.

RD-Agent

RD-Agent is a tool designed to automate critical aspects of industrial R&D processes, focusing on data-driven scenarios to streamline model and data development. It aims to propose new ideas ('R') and implement them ('D') automatically, leading to solutions of significant industrial value. The tool supports scenarios like Automated Quantitative Trading, Data Mining Agent, Research Copilot, and more, with a framework to push the boundaries of research in data science. Users can create a Conda environment, install the RDAgent package from PyPI, configure GPT model, and run various applications for tasks like quantitative trading, model evolution, medical prediction, and more. The tool is intended to enhance R&D processes and boost productivity in industrial settings.

ProX

ProX is a lm-based data refinement framework that automates the process of cleaning and improving data used in pre-training large language models. It offers better performance, domain flexibility, efficiency, and cost-effectiveness compared to traditional methods. The framework has been shown to improve model performance by over 2% and boost accuracy by up to 20% in tasks like math. ProX is designed to refine data at scale without the need for manual adjustments, making it a valuable tool for data preprocessing in natural language processing tasks.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

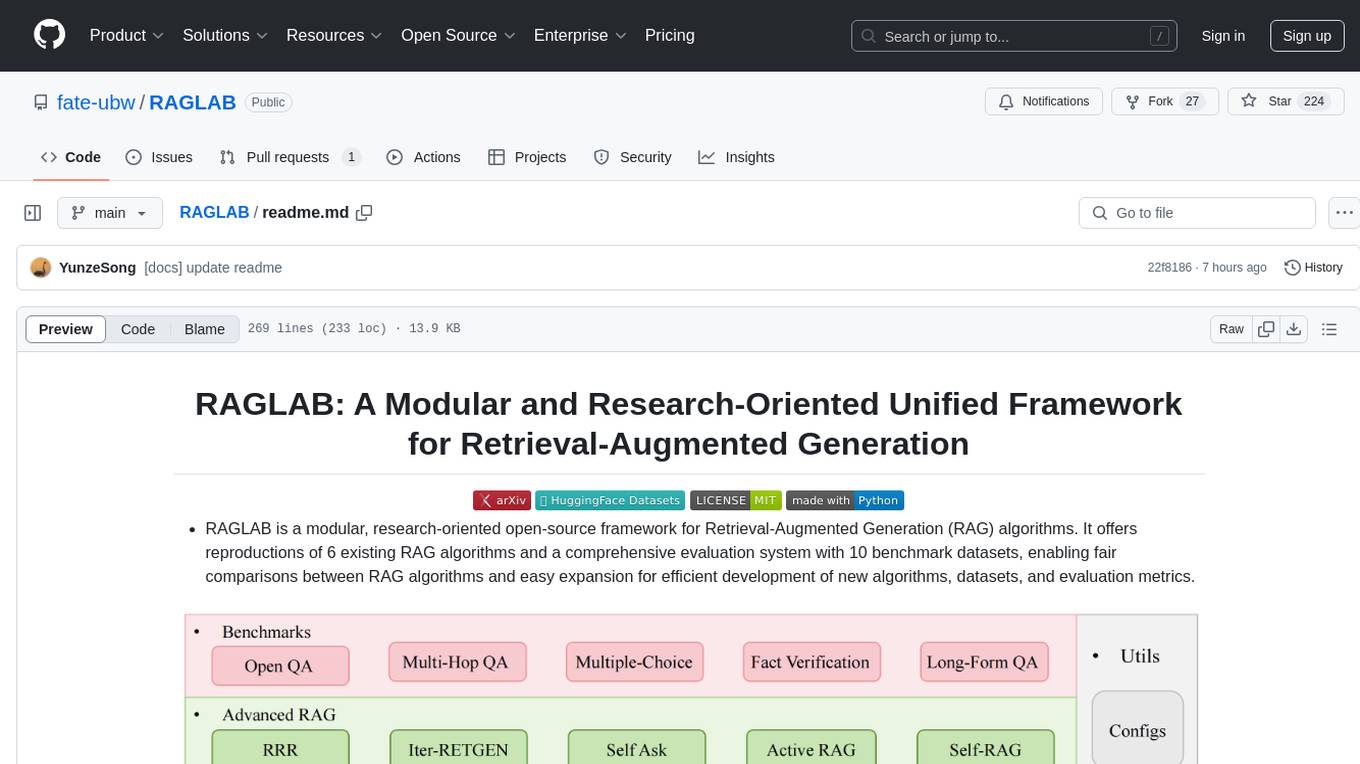

RAGLAB

RAGLAB is a modular, research-oriented open-source framework for Retrieval-Augmented Generation (RAG) algorithms. It offers reproductions of 6 existing RAG algorithms and a comprehensive evaluation system with 10 benchmark datasets, enabling fair comparisons between RAG algorithms and easy expansion for efficient development of new algorithms, datasets, and evaluation metrics. The framework supports the entire RAG pipeline, provides advanced algorithm implementations, fair comparison platform, efficient retriever client, versatile generator support, and flexible instruction lab. It also includes features like Interact Mode for quick understanding of algorithms and Evaluation Mode for reproducing paper results and scientific research.

cognee

Cognee is an open-source framework designed for creating self-improving deterministic outputs for Large Language Models (LLMs) using graphs, LLMs, and vector retrieval. It provides a platform for AI engineers to enhance their models and generate more accurate results. Users can leverage Cognee to add new information, utilize LLMs for knowledge creation, and query the system for relevant knowledge. The tool supports various LLM providers and offers flexibility in adding different data types, such as text files or directories. Cognee aims to streamline the process of working with LLMs and improving AI models for better performance and efficiency.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.

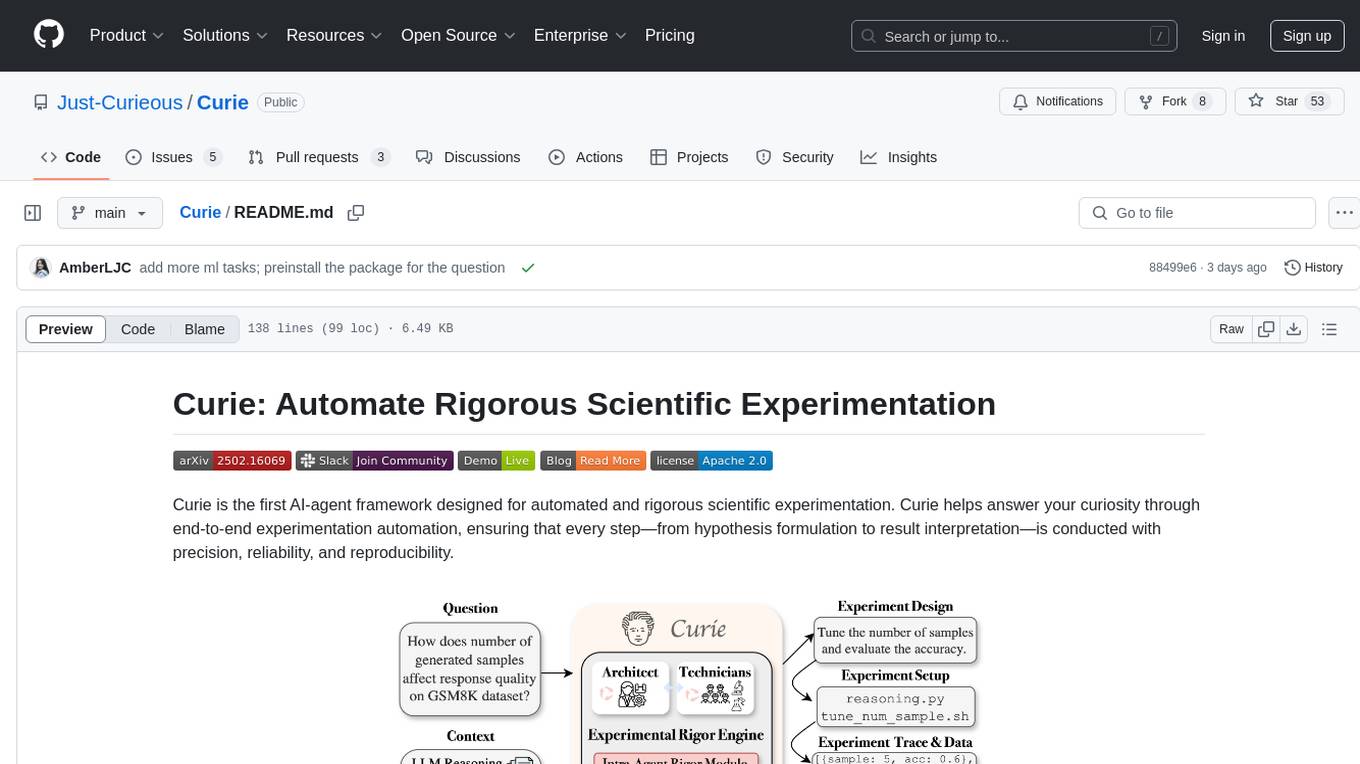

Curie

Curie is an AI-agent framework designed for automated and rigorous scientific experimentation. It automates end-to-end workflow management, ensures methodical procedure, reliability, and interpretability, and supports ML research, system analysis, and scientific discovery. It provides a benchmark with questions from 4 Computer Science domains. Users can customize experiment agents and adapt to their own tasks by configuring base_config.json. Curie is suitable for hyperparameter tuning, algorithm behavior analysis, system performance benchmarking, and automating computational simulations.

For similar tasks

mutahunter

Mutahunter is an open-source language-agnostic mutation testing tool maintained by CodeIntegrity. It leverages LLM models to inject context-aware faults into codebase, ensuring comprehensive testing. The tool aims to empower companies and developers to enhance test suites and improve software quality by verifying the effectiveness of test cases through creating mutants in the code and checking if the test cases can catch these changes. Mutahunter provides detailed reports on mutation coverage, killed mutants, and survived mutants, enabling users to identify potential weaknesses in their test suites.

AI-Resume-Analyzer-and-LinkedIn-Scraper-using-Generative-AI

Developed an advanced AI application that utilizes LLM and OpenAI for comprehensive resume analysis. It excels at summarizing the resume, evaluating strengths, identifying weaknesses, and offering personalized improvement suggestions, while also recommending the perfect job titles. Additionally, it seamlessly employs Selenium to extract vital LinkedIn data, encompassing company names, job titles, locations, job URLs, and detailed job descriptions. This application simplifies the job-seeking journey by equipping users with comprehensive insights to elevate their career opportunities.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.