SinkFinder

闭源系统半自动漏洞挖掘工具,针对 jar/war/zip 进行静态代码分析,输出从source到sink的可达路径。LLM将验证路径可达性,并根据上下文给出该路径可信分数

Stars: 396

SinkFinder is a tool designed to analyze jar and zip files for security vulnerabilities. It allows users to define rules for white and blacklisting specific classes and methods that may pose a risk. The tool provides a list of common security sink names along with severity levels and associated vulnerable methods. Users can use SinkFinder to quickly identify potential security issues in their Java applications by scanning for known sink patterns and configurations.

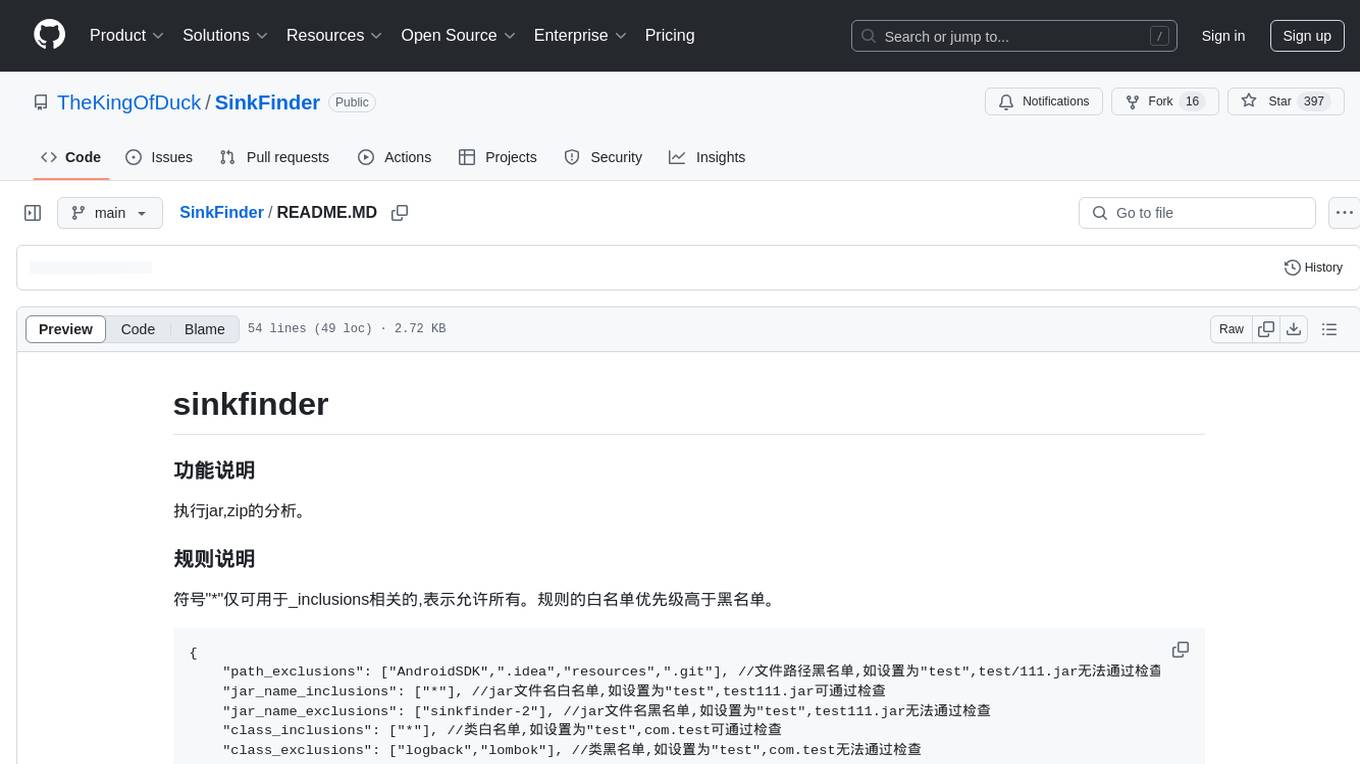

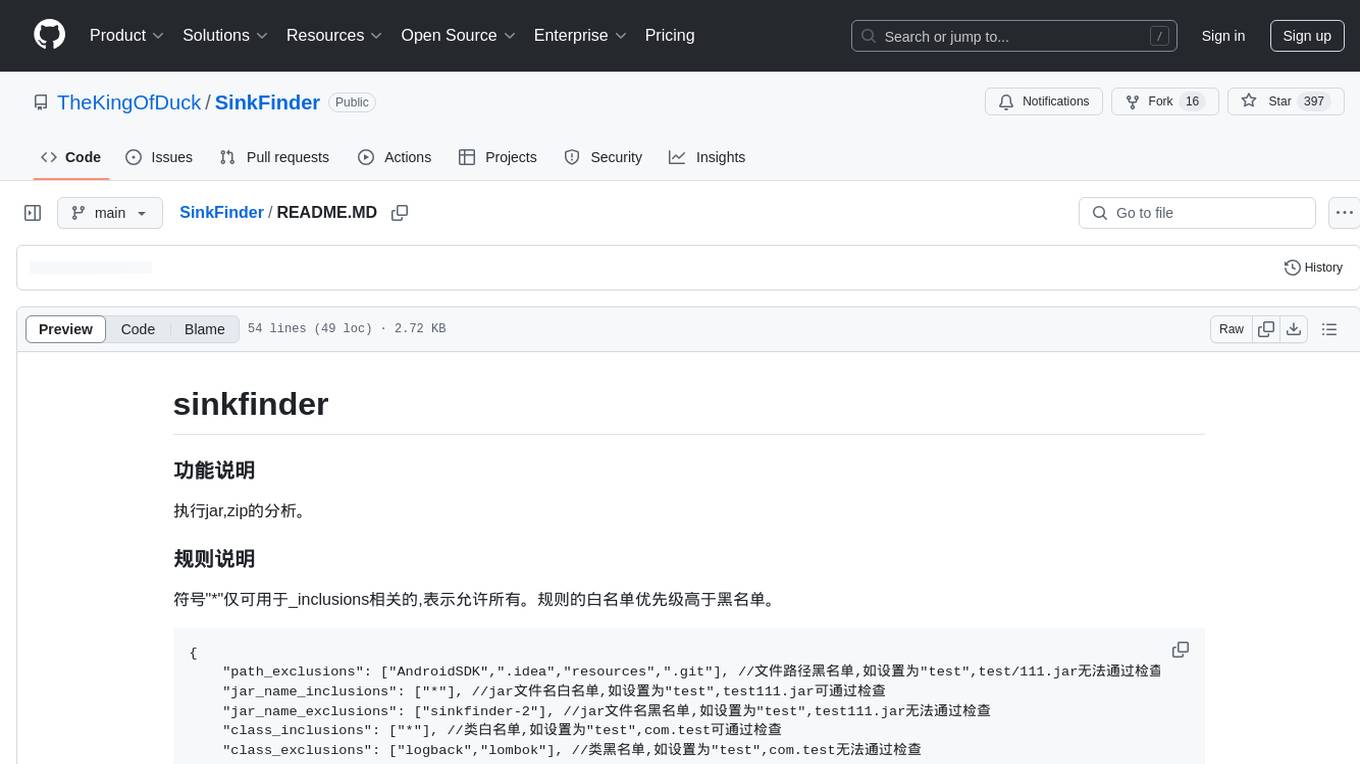

README:

执行jar,zip的分析。

符号"*"仅可用于_inclusions相关的,表示允许所有。规则的白名单优先级高于黑名单。

{

"path_exclusions": ["AndroidSDK",".idea","resources",".git"], //文件路径黑名单,如设置为"test",test/111.jar无法通过检查

"jar_name_inclusions": ["*"], //jar文件名白名单,如设置为"test",test111.jar可通过检查

"jar_name_exclusions": ["sinkfinder-2"], //jar文件名黑名单,如设置为"test",test111.jar无法通过检查

"class_inclusions": ["*"], //类白名单,如设置为"test",com.test可通过检查

"class_exclusions": ["logback","lombok"], //类黑名单,如设置为"test",com.test无法通过检查

"sink_rules": [

{

"sink_name": "RCE",

"severity_level": "High",

"sinks": ["java.lang.Runtime:exec","java.lang.ProcessBuilder:start","javax.script.ScriptEngine:eval"]

}, {

"sink_name": "SSRF",

"severity_level": "Medium",

"sinks": ["java.net.URL:openConnection","java.net.URL:openStream","org.apache.http.client.fluent.Request:Get","javax.imageio.ImageIO:read","org.apache.http.impl.client.CloseableHttpClient:execute","org.apache.commons.httpclient.HttpClient:executeMethod","org.jsoup.Jsoup:connect","org.apache.commons.io.IOUtils:toByteArray"]

},{

"sink_name": "Fastjson",

"severity_level": "Medium",

"sinks": ["com.alibaba.fastjson.JSON:parseObject","com.alibaba.fastjson.JSON:parse"]

},{

"sink_name": "XXE",

"severity_level": "Medium",

"sinks": ["javax.xml.parsers.DocumentBuilder:parse","javax.xml.parsers.SAXParser:parse", "com.sun.org.apache.xerces.internal.parsers.DOMParser:parse","org.dom4j.io.SAXReader:read","org.xml.sax.XMLReader:parse","org.jdom2.input.SAXBuilder:build","org.apache.commons.digester3.Digester:parse","org.dom4j.DocumentHelper:parseText"]

},{

"sink_name": "UNSERIALIZE",

"severity_level": "High",

"sinks": ["java.io.ObjectInputStream:readObject","java.io.ObjectInputStream:readUnshared","org.yaml.snakeyaml.Yaml:load","com.thoughtworks.xstream.XStream:fromXML","com.mysql.cj.jdbc.result.ResultSetImpl:getObject","org.apache.xmlrpc.parser.XmlRpcRequestParser:startElement","java.beans.XMLDecoder:readObject","org.apache.xml.security.transforms.Transforms:performTransforms"]

},{

"sink_name": "JNDI",

"severity_level": "High",

"sinks": ["javax.naming.InitialContext:doLookup","javax.naming.InitialContext:lookup"]

},{

"sink_name": "SSTI",

"severity_level": "High",

"sinks": ["org.apache.velocity.app.Velocity:evaluate"]

},{

"sink_name": "SPEL",

"severity_level": "High",

"sinks": ["org.springframework.expression.spel.standard.SpelExpression:getValue"]

}

]

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for SinkFinder

Similar Open Source Tools

SinkFinder

SinkFinder is a tool designed to analyze jar and zip files for security vulnerabilities. It allows users to define rules for white and blacklisting specific classes and methods that may pose a risk. The tool provides a list of common security sink names along with severity levels and associated vulnerable methods. Users can use SinkFinder to quickly identify potential security issues in their Java applications by scanning for known sink patterns and configurations.

manga-image-translator

Translate texts in manga/images. Some manga/images will never be translated, therefore this project is born. * Image/Manga Translator * Samples * Online Demo * Disclaimer * Installation * Pip/venv * Poetry * Additional instructions for **Windows** * Docker * Hosting the web server * Using as CLI * Setting Translation Secrets * Using with Nvidia GPU * Building locally * Usage * Batch mode (default) * Demo mode * Web Mode * Api Mode * Related Projects * Docs * Recommended Modules * Tips to improve translation quality * Options * Language Code Reference * Translators Reference * GPT Config Reference * Using Gimp for rendering * Api Documentation * Synchronous mode * Asynchronous mode * Manual translation * Next steps * Support Us * Thanks To All Our Contributors :

ai

Ai is a Japanese bot for Misskey, designed to provide various functionalities such as posting random notes, learning keywords, playing Reversi, server monitoring, and more. Users can interact with Ai by setting up a `config.json` file with specific parameters. The tool can be installed using Node.js and npm, with optional dependencies like MeCab for additional features. Ai can also be run using Docker for easier deployment. Some features may require specific fonts to be installed in the directory. Ai stores its memory using an in-memory database, ensuring persistence across sessions. The tool is licensed under MIT and has received the 'Works on my machine' award.

hCaptcha-Solver

hCaptcha-Solver is an AI-based hcaptcha text challenge solver that utilizes the playwright module to generate the hsw N data. It can solve any text challenge without any problem, but may be flagged on some websites like Discord. The tool requires proxies since hCaptcha also rate limits. Users can run the 'hsw_api.py' before running anything and then integrate the usage shown in 'main.py' into their projects that require hCaptcha solving. Please note that this tool only works on sites that support hCaptcha text challenge.

pictureChange

The 'pictureChange' repository is a plugin that supports image processing using Baidu AI, stable diffusion webui, and suno music composition AI. It also allows for file summarization and image summarization using AI. The plugin supports various stable diffusion models, administrator control over group chat features, concurrent control, and custom templates for image and text generation. It can be deployed on WeChat enterprise accounts, personal accounts, and public accounts.

AICentral

AI Central is a powerful tool designed to take control of your AI services with minimal overhead. It is built on Asp.Net Core and dotnet 8, offering fast web-server performance. The tool enables advanced Azure APIm scenarios, PII stripping logging to Cosmos DB, token metrics through Open Telemetry, and intelligent routing features. AI Central supports various endpoint selection strategies, proxying asynchronous requests, custom OAuth2 authorization, circuit breakers, rate limiting, and extensibility through plugins. It provides an extensibility model for easy plugin development and offers enriched telemetry and logging capabilities for monitoring and insights.

llm_finetuning

This repository provides a comprehensive set of tools for fine-tuning large language models (LLMs) using various techniques, including full parameter training, LoRA (Low-Rank Adaptation), and P-Tuning V2. It supports a wide range of LLM models, including Qwen, Yi, Llama, and others. The repository includes scripts for data preparation, training, and inference, making it easy for users to fine-tune LLMs for specific tasks. Additionally, it offers a collection of pre-trained models and provides detailed documentation and examples to guide users through the process.

python-utcp

The Universal Tool Calling Protocol (UTCP) is a secure and scalable standard for defining and interacting with tools across various communication protocols. UTCP emphasizes scalability, extensibility, interoperability, and ease of use. It offers a modular core with a plugin-based architecture, making it extensible, testable, and easy to package. The repository contains the complete UTCP Python implementation with core components and protocol-specific plugins for HTTP, CLI, Model Context Protocol, file-based tools, and more.

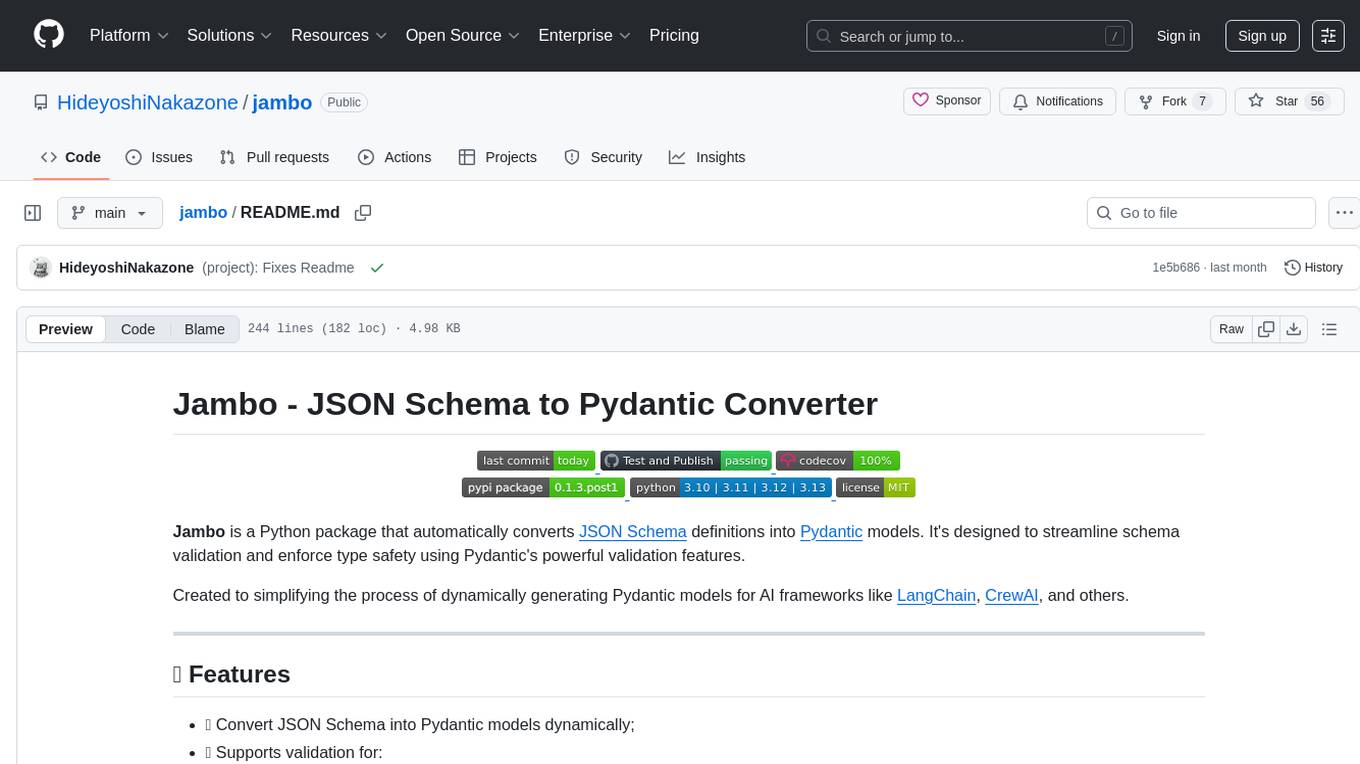

jambo

Jambo is a Python package that automatically converts JSON Schema definitions into Pydantic models. It streamlines schema validation and enforces type safety using Pydantic's validation features. The tool supports various JSON Schema features like strings, integers, floats, booleans, arrays, nested objects, and more. It enforces constraints such as minLength, maxLength, pattern, minimum, maximum, uniqueItems, and provides a zero-config approach for generating models. Jambo is designed to simplify the process of dynamically generating Pydantic models for AI frameworks.

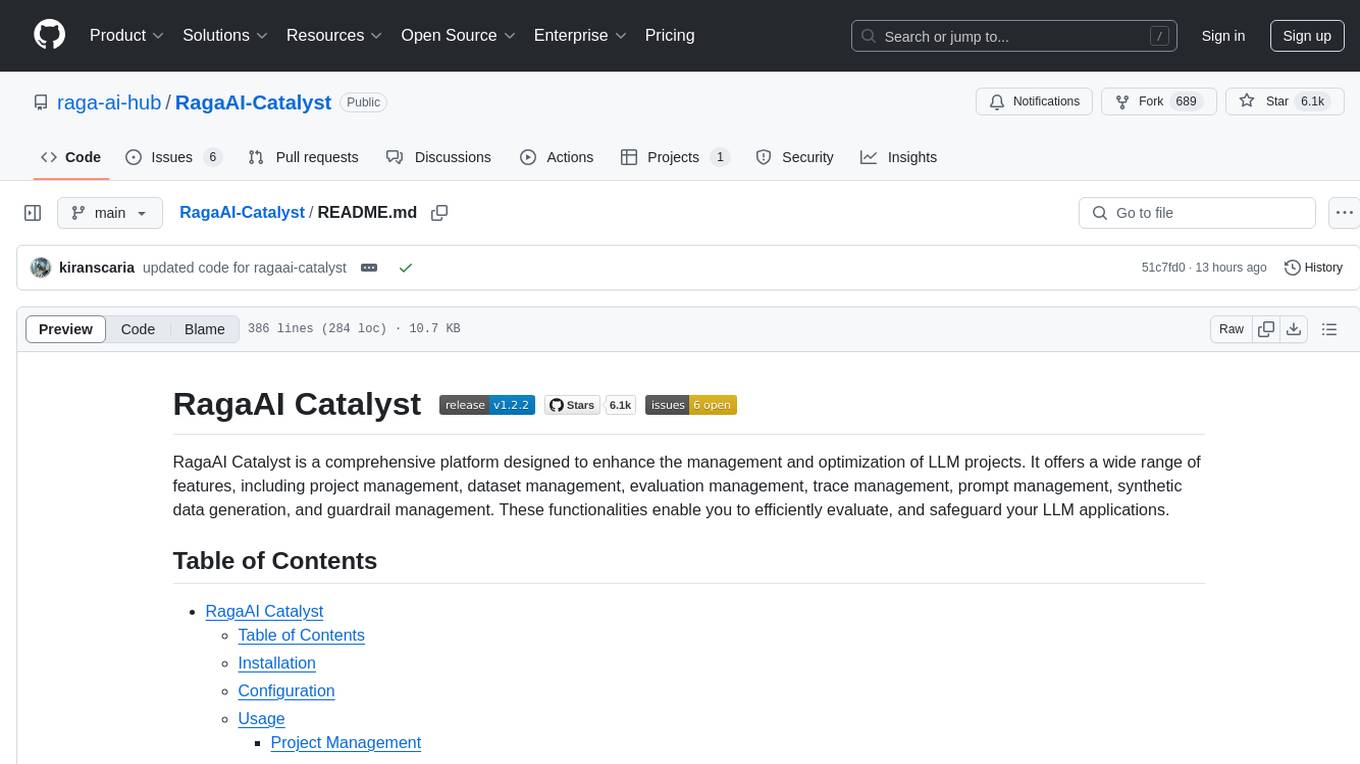

RagaAI-Catalyst

RagaAI Catalyst is a comprehensive platform designed to enhance the management and optimization of LLM projects. It offers features such as project management, dataset management, evaluation management, trace management, prompt management, synthetic data generation, and guardrail management. These functionalities enable efficient evaluation and safeguarding of LLM applications.

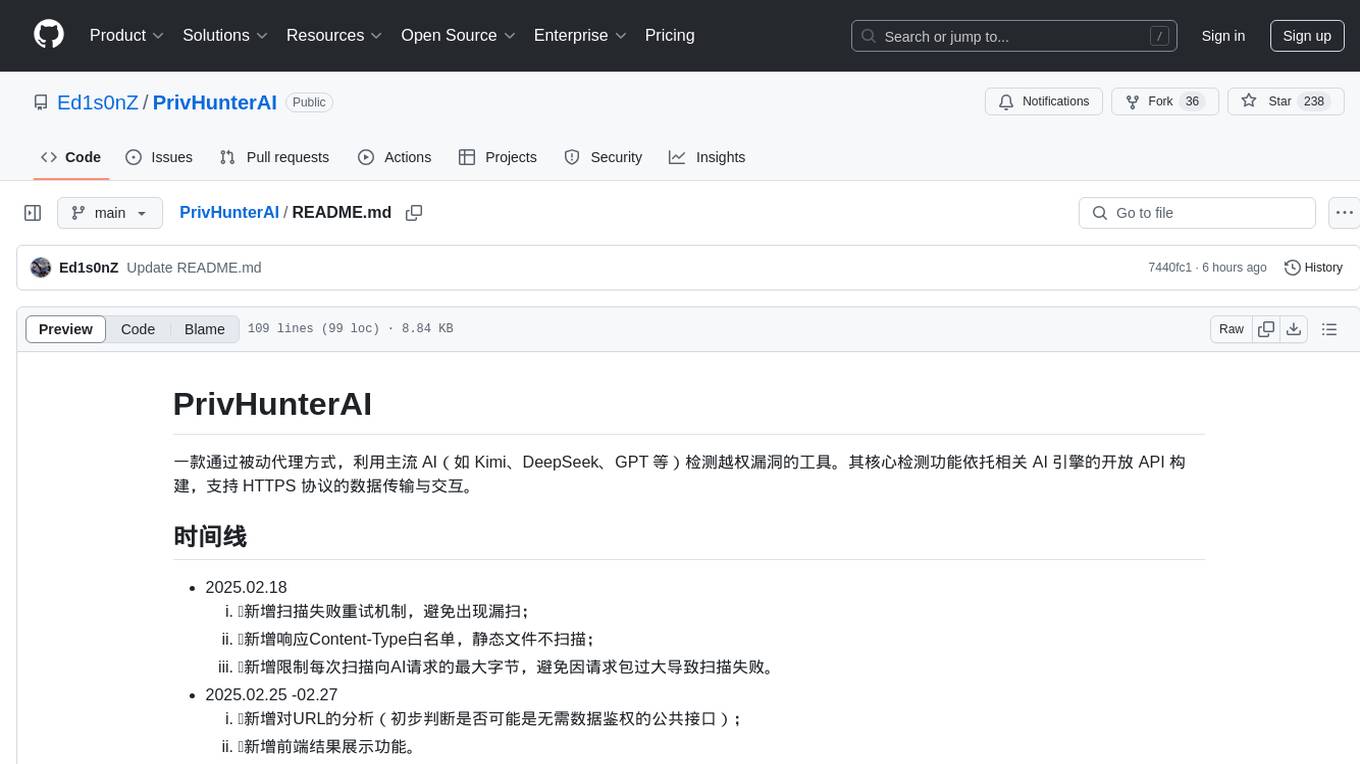

PrivHunterAI

PrivHunterAI is a tool that detects authorization vulnerabilities using mainstream AI engines such as Kimi, DeepSeek, and GPT through passive proxying. The core detection function relies on open APIs of related AI engines and supports data transmission and interaction over HTTPS protocol. It continuously improves by adding features like scan failure retry mechanism, response Content-Type whitelist, limiting AI request size, URL analysis, frontend result display, additional headers for requests, cost optimization by filtering authorization keywords before calling AI, and terminal output of request package records.

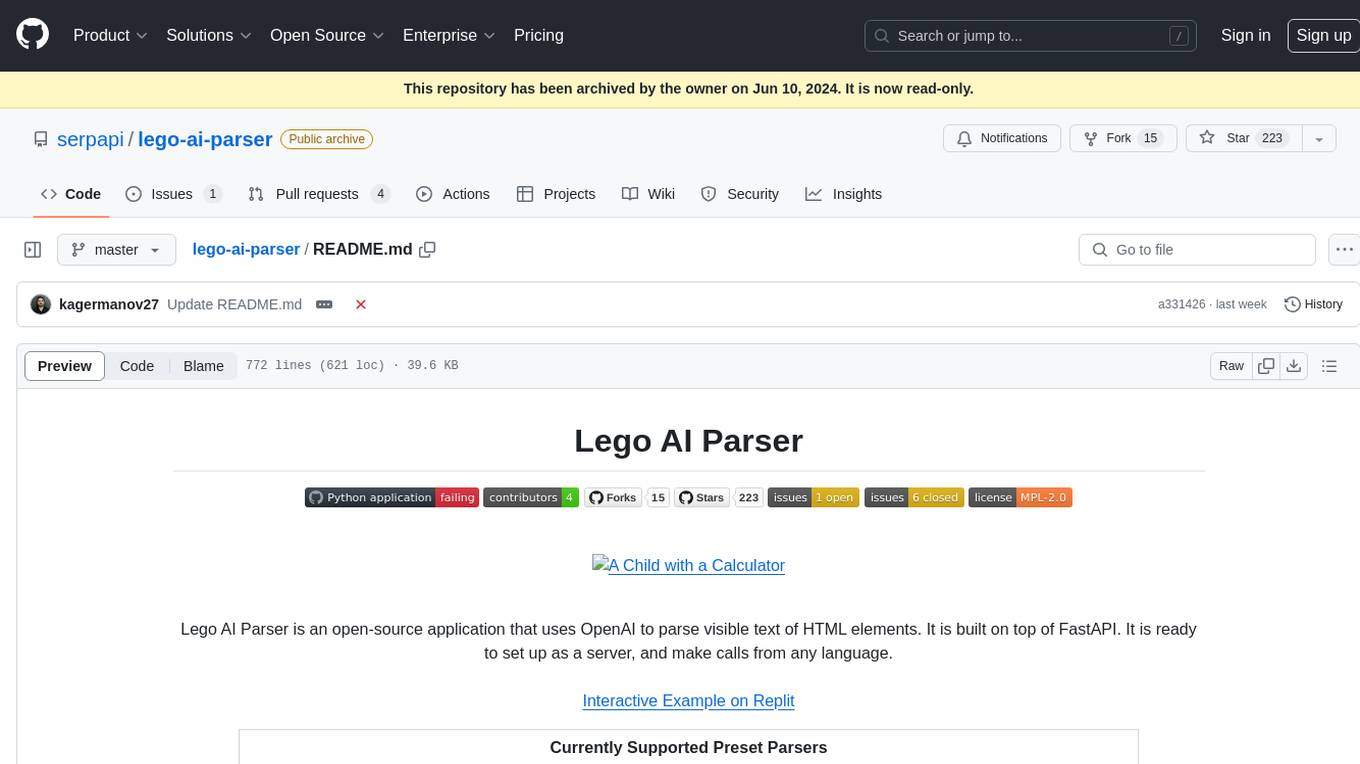

lego-ai-parser

Lego AI Parser is an open-source application that uses OpenAI to parse visible text of HTML elements. It is built on top of FastAPI, ready to set up as a server, and make calls from any language. It supports preset parsers for Google Local Results, Amazon Listings, Etsy Listings, Wayfair Listings, BestBuy Listings, Costco Listings, Macy's Listings, and Nordstrom Listings. Users can also design custom parsers by providing prompts, examples, and details about the OpenAI model under the classifier key.

ext-apps

The @modelcontextprotocol/ext-apps repository contains the SDK and specification for MCP Apps Extension (SEP-1865). MCP Apps are a proposed standard to allow MCP Servers to display interactive UI elements in conversational MCP clients/chatbots. The repository includes SDKs for both app developers and host developers, along with examples showcasing real-world use cases. Users can build interactive UIs that run inside MCP-enabled chat clients and embed/communicate with MCP Apps in their chat applications. The SDK extends the Model Context Protocol by letting tools declare UI resources and enables bidirectional communication between the host and the UI. The repository also provides Agent Skills for building MCP Apps and instructions for installing them in AI coding agents.

speechlib

Speechlib is a Python library that provides functionalities for speaker diarization, speaker recognition, and transcription on audio files. It offers features such as converting audio formats to WAV, converting stereo to mono, and re-encoding to 16-bit PCM. The library allows users to transcribe audio files, store transcripts, specify language and model size, and perform speaker recognition using voice samples. It supports various languages and provides performance metrics for different model sizes. Speechlib utilizes huggingface models for speaker recognition and transcription tasks.

chatgpt-exporter

A script to export the chat history of ChatGPT. Supports exporting to text, HTML, Markdown, PNG, and JSON formats. Also allows for exporting multiple conversations at once.

vlmrun-hub

VLMRun Hub is a versatile tool for managing and running virtual machines in a centralized manner. It provides a user-friendly interface to easily create, start, stop, and monitor virtual machines across multiple hosts. With VLMRun Hub, users can efficiently manage their virtualized environments and streamline their workflow. The tool offers flexibility and scalability, making it suitable for both small-scale personal projects and large-scale enterprise deployments.

For similar tasks

SinkFinder

SinkFinder is a tool designed to analyze jar and zip files for security vulnerabilities. It allows users to define rules for white and blacklisting specific classes and methods that may pose a risk. The tool provides a list of common security sink names along with severity levels and associated vulnerable methods. Users can use SinkFinder to quickly identify potential security issues in their Java applications by scanning for known sink patterns and configurations.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

moonshot

Moonshot is a simple and modular tool developed by the AI Verify Foundation to evaluate Language Model Models (LLMs) and LLM applications. It brings Benchmarking and Red-Teaming together to assist AI developers, compliance teams, and AI system owners in assessing LLM performance. Moonshot can be accessed through various interfaces including User-friendly Web UI, Interactive Command Line Interface, and seamless integration into MLOps workflows via Library APIs or Web APIs. It offers features like benchmarking LLMs from popular model providers, running relevant tests, creating custom cookbooks and recipes, and automating Red Teaming to identify vulnerabilities in AI systems.

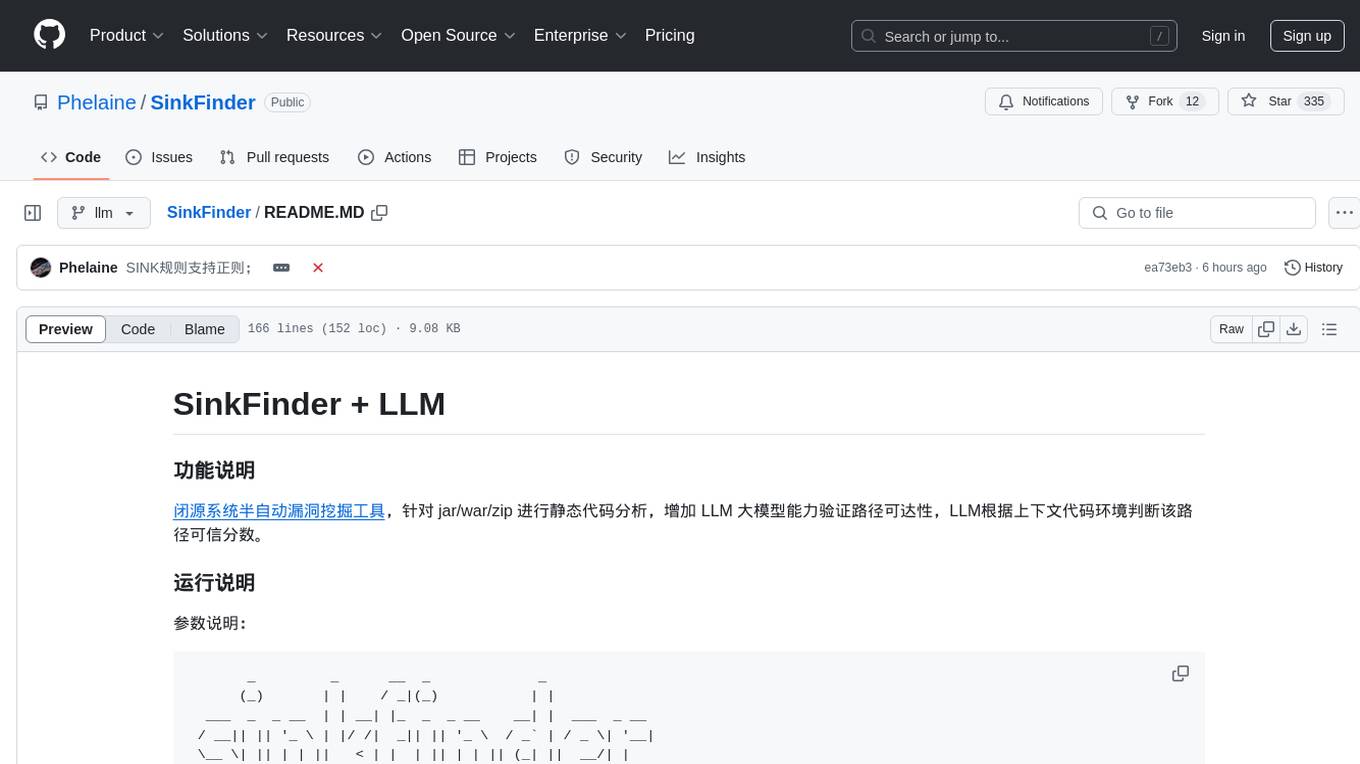

SinkFinder

SinkFinder + LLM is a closed-source semi-automatic vulnerability discovery tool that performs static code analysis on jar/war/zip files. It enhances the capability of LLM large models to verify path reachability and assess the trustworthiness score of the path based on the contextual code environment. Users can customize class and jar exclusions, depth of recursive search, and other parameters through command-line arguments. The tool generates rule.json configuration file after each run and requires configuration of the DASHSCOPE_API_KEY for LLM capabilities. The tool provides detailed logs on high-risk paths, LLM results, and other findings. Rules.json file contains sink rules for various vulnerability types with severity levels and corresponding sink methods.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven, prompt-driven, and focuses on prompt design, leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. The tool requires PostgreSQL database, OpenAI API access, and Python environment for setup. It supports various languages like Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity for scanning. FiniteMonkey is best suited for logic vulnerability mining in real projects, not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results with an average scan time of 2-3 hours for medium projects. The tool provides detailed scanning results guide and implementation tips for users.

agentic-radar

The Agentic Radar is a security scanner designed to analyze and assess agentic systems for security and operational insights. It helps users understand how agentic systems function, identify potential vulnerabilities, and create security reports. The tool includes workflow visualization, tool identification, and vulnerability mapping, providing a comprehensive HTML report for easy reviewing and sharing. It simplifies the process of assessing complex workflows and multiple tools used in agentic systems, offering a structured view of potential risks and security frameworks.

aderyn

Aderyn is a powerful Solidity static analyzer designed to help protocol engineers and security researchers find vulnerabilities in Solidity code bases. It provides off-the-shelf support for Foundry and Hardhat projects, allows for custom frameworks through a configuration file, and generates reports in Markdown, JSON, and Sarif formats. Users can install Aderyn using Cyfrinup, curl, Homebrew, or npm, and quickly identify vulnerabilities in their Solidity code. The tool also offers a VS Code extension for seamless integration with the IDE.

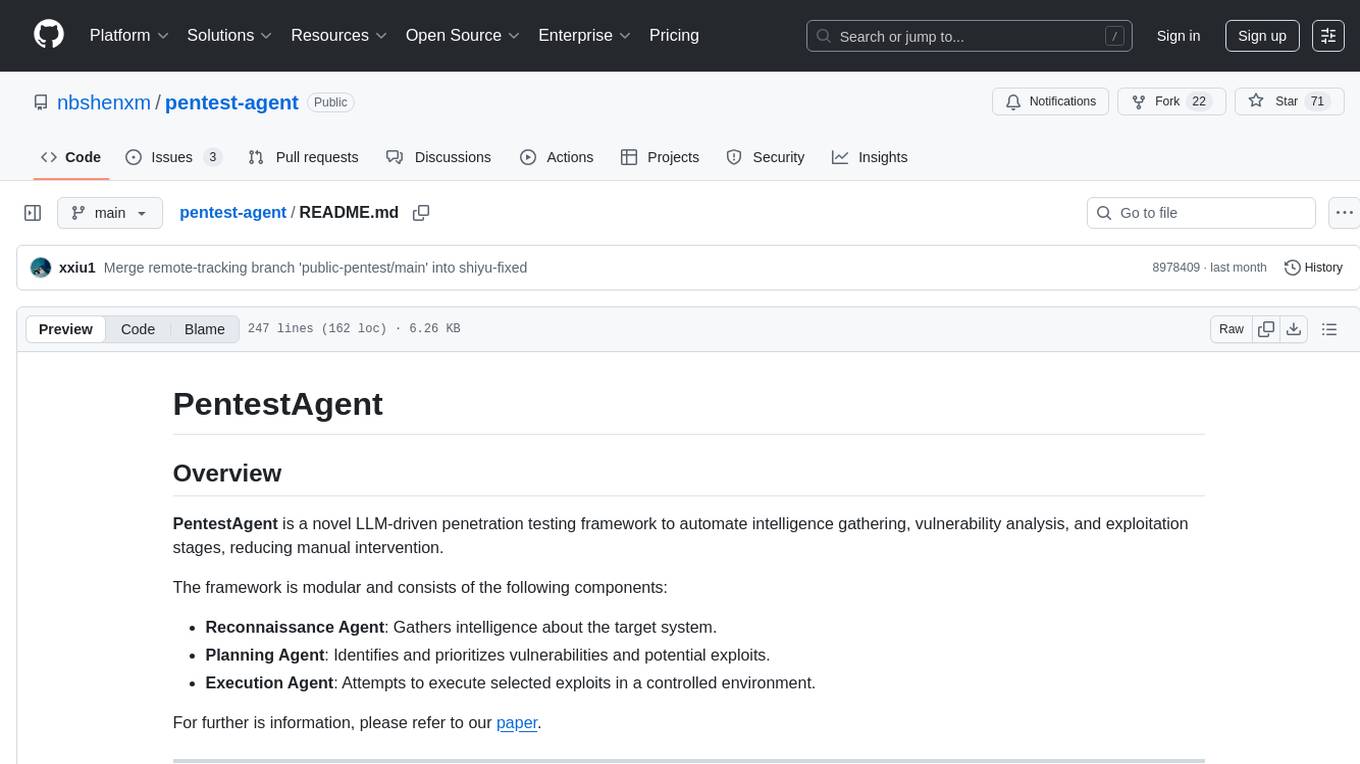

pentest-agent

Pentest Agent is a lightweight and versatile tool designed for conducting penetration testing on network systems. It provides a user-friendly interface for scanning, identifying vulnerabilities, and generating detailed reports. The tool is highly customizable, allowing users to define specific targets and parameters for testing. Pentest Agent is suitable for security professionals and ethical hackers looking to assess the security posture of their systems and networks.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.