chatgpt-exporter

Export and Share your ChatGPT conversation history

Stars: 1367

A script to export the chat history of ChatGPT. Supports exporting to text, HTML, Markdown, PNG, and JSON formats. Also allows for exporting multiple conversations at once.

README:

A GreasyFork script to export the chat history of ChatGPT


Install Tampermonkey

Then install the script from Greasyfork or GitHub.


You:

I'm creating a ChatGPT Exporter. What do you think?

ChatGPT:

It sounds like you're planning on creating a tool that uses the ChatGPT model

to export text. ChatGPT is a large language model trained by OpenAI that is

designed to generate human-like text responses based on a given input. It can

be used for a variety of applications, such as chatbots, automated responses

to customer inquiries, and more.

However, please keep in mind that as a large language model, ChatGPT has not

been specifically trained for any specific task, so the quality of the

generated text will depend on how it is used and the context in which it is

applied. It's important to use ChatGPT responsibly and consider the potential

consequences of using it in any given situation.

---

title: ChatGPT Exporter Creation

source: https://chat.openai.com/c/cf3f8850-1d69-43c8-b99b-affd0de4e76f

author: ChatGPT

---

# ChatGPT Exporter Creation

#### You:

I'm creating a ChatGPT Exporter. What do you think?

#### ChatGPT:

It sounds like you're planning on creating a tool that uses the ChatGPT model to export text. ChatGPT is a large language model trained by OpenAI that is designed to generate human-like text responses based on a given input. It can be used for a variety of applications, such as chatbots, automated responses to customer inquiries, and more.

The raw content returned by the API endpoint https://chat.openai.com/backend-api/conversation/[id]:


```json
{
  "id": "35a1fa05-e928-4c39-8ffa-ca74f75b509f",
  "title": "AI Turing Test.",
  "create_time": 1678015311.655875,
  "mapping": {
    "5c48fa3e-e4ee-4d00-aa66-8fbcb671a358": {
      "id": "5c48fa3e-e4ee-4d00-aa66-8fbcb671a358",
      "message": {
        "id": "5c48fa3e-e4ee-4d00-aa66-8fbcb671a358",
        "author": {
          "role": "system",
          "metadata": {}
        },
        "create_time": 1678015311.655875,
        "content": {
          "content_type": "text",
          "parts": [
            ""
          ]
        },
        "end_turn": true,
        "weight": 1,
        "metadata": {},
        "recipient": "all"
      },
      "parent": "9310b90f-d8f0-4be6-bac2-daacddac784f",
      "children": [
        "4afb9720-3a88-49b1-9309-e2b53d607f34"
      ]
    },
    "9310b90f-d8f0-4be6-bac2-daacddac784f": {
      "id": "9310b90f-d8f0-4be6-bac2-daacddac784f",
      "children": [
        "5c48fa3e-e4ee-4d00-aa66-8fbcb671a358"
      ]
    },
    "4afb9720-3a88-49b1-9309-e2b53d607f34": {
      "id": "4afb9720-3a88-49b1-9309-e2b53d607f34",
      "message": {
        "id": "4afb9720-3a88-49b1-9309-e2b53d607f34",
        "author": {
          "role": "user",
          "metadata": {}
        },
        "create_time": 1678015311.656259,
        "content": {
          "content_type": "text",
          "parts": [
            "write an poem about the Turing test"
          ]
        },
        "weight": 1,
        "metadata": {
          "timestamp_": "absolute"
        },
        "recipient": "all"
      },
      "parent": "5c48fa3e-e4ee-4d00-aa66-8fbcb671a358",
      "children": [
        "7027f1a5-8bd5-4463-9869-8a1d1db2f9a1"
      ]
    },
    "7027f1a5-8bd5-4463-9869-8a1d1db2f9a1": {
      "id": "7027f1a5-8bd5-4463-9869-8a1d1db2f9a1",
      "message": {
        "id": "7027f1a5-8bd5-4463-9869-8a1d1db2f9a1",
        "author": {
          "role": "assistant",
          "metadata": {}
        },
        "create_time": 1678185638.890551,
        "content": {
          "content_type": "text",
          "parts": [
            "In the realm of machines and code,\nThere lies a test, with rules bestowed,\nA challenge to determine true mind,\nAnd if a machine's intelligence can find.\n\nThe Turing test, named for its creator,\nSeeks to find if a machine's behavior\nCan mimic that of a human so well,\nThat it's indistinguishable, can you tell?\n\nThrough conversations, the test is done,\nWith a judge and a machine, the game is run,\nIf the judge can't tell who is who,\nThen the machine's intelligence shines through.\n\nBut can a machine truly think?\nOr is it just a clever bit of link,\nBetween algorithms and programming code,\nCan it truly understand and decode?\n\nThe Turing test, a thought experiment,\nBrings up questions, doubts, and sentiment,\nFor as we strive to create machines smart,\nWe wonder, will they ever truly have heart?\n\nSo let us ponder and explore,\nAs we create machines more and more,\nThe Turing test a reminder to be,\nMindful of what our machines can truly see."
          ]
        },
        "end_turn": false,
        "weight": 1,
        "metadata": {
          "model_slug": "text-davinci-002-render-sha",
          "finish_details": {
            "type": "stop"
          },
          "timestamp_": "absolute"
        },
        "recipient": "all"
      },
      "parent": "4afb9720-3a88-49b1-9309-e2b53d607f34",
      "children": []
    }
  },
  "moderation_results": [],
  "current_node": "7027f1a5-8bd5-4463-9869-8a1d1db2f9a1"
}
```

When you click the "Export All" button, the Export Conversations dialog pops up. Here are the functions you can access.

Export from official export file (conversations.json)

Click the upload icon button to upload a JSON file of conversations, such as one downloaded from OpenAI.
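To get a feel for what such a file contains before uploading it, the sketch below lists the title and creation date of each conversation. It assumes that `conversations.json` is a JSON array of conversation objects carrying `title` and `create_time` (Unix seconds) fields like the raw API sample; the helper name `summarize_conversations` is hypothetical and not part of the script.

```python
import json
from datetime import datetime, timezone


def summarize_conversations(raw_json: str) -> list[tuple[str, str]]:
    """Return (title, UTC creation date) pairs for each conversation.

    Assumes the input is a JSON array of conversation objects.
    """
    conversations = json.loads(raw_json)
    if not isinstance(conversations, list):
        raise ValueError("expected a JSON array of conversations")

    summary = []
    for conv in conversations:
        title = conv.get("title") or "Untitled"
        created = conv.get("create_time")
        if created is None:
            date = "unknown"
        else:
            # create_time is treated as seconds since the Unix epoch
            date = datetime.fromtimestamp(created, tz=timezone.utc).date().isoformat()
        summary.append((title, date))
    return summary


# Tiny stand-in for an uploaded file; a real export holds many more fields.
sample_file = json.dumps(
    [{"title": "AI Turing Test.", "create_time": 1678015311.655875, "mapping": {}}]
)
for title, date in summarize_conversations(sample_file):
    print(f"{date}  {title}")
```

For a real file, read its text with `open(path, encoding="utf-8").read()` and pass the result in.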

Export from API

In the list of all your conversations, select which conversations you want to export. Check the "Select All" checkbox to export all your conversations.

Select your export format from the dropdown on the bottom left. You can choose from the following formats.

- Markdown

- HTML

- JSON

- JSON (ZIP)

Click the button to perform the action you want.

- Archive - Archived chat sessions will disappear from the sidebar and can be managed in ChatGPT settings. See #199 for more details.

- Delete - Deletes the selected conversations.

- Export - Exports the selected conversations in the format chosen using the format selector.
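As a rough illustration of what a Markdown export involves, the sketch below takes a conversation object shaped like the raw API sample, follows `parent` links from `current_node` back to the root, and renders the messages with the headings used in the Markdown example. It is a simplified sketch, not the script's actual implementation: the function names `linearize` and `to_markdown` are hypothetical, and the front matter block is omitted.

```python
def linearize(conversation: dict) -> list[dict]:
    """Collect messages on the path from the root to current_node, oldest first."""
    mapping = conversation["mapping"]
    node_id = conversation.get("current_node")
    messages = []
    while node_id is not None:
        node = mapping.get(node_id)
        if node is None:  # dangling reference: stop walking
            break
        message = node.get("message")
        if message is not None:  # the root node carries no message
            messages.append(message)
        node_id = node.get("parent")
    messages.reverse()
    return messages


def to_markdown(conversation: dict) -> str:
    """Render a conversation as Markdown with 'You' and 'ChatGPT' headings."""
    lines = [f"# {conversation['title']}", ""]
    for message in linearize(conversation):
        role = message["author"]["role"]
        parts = message["content"].get("parts", [])
        text = "".join(p for p in parts if isinstance(p, str))
        if role == "system" or not text:
            continue  # skip the empty system message
        speaker = "You" if role == "user" else "ChatGPT"
        lines += [f"#### {speaker}:", "", text, ""]
    return "\n".join(lines)


# Minimal stand-in with the same shape as the raw API sample.
demo = {
    "title": "Demo",
    "current_node": "a1",
    "mapping": {
        "root": {"id": "root", "children": ["u1"]},
        "u1": {
            "id": "u1",
            "parent": "root",
            "children": ["a1"],
            "message": {
                "author": {"role": "user"},
                "content": {"content_type": "text", "parts": ["hello"]},
            },
        },
        "a1": {
            "id": "a1",
            "parent": "u1",
            "children": [],
            "message": {
                "author": {"role": "assistant"},
                "content": {"content_type": "text", "parts": ["hi there"]},
            },
        },
    },
}
print(to_markdown(demo))
```

Walking backwards from `current_node` selects only the branch that is currently displayed, so regenerated or edited replies stored elsewhere in `mapping` are left out.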

See CONTRIBUTING.md


Alternative AI tools for chatgpt-exporter

Similar Open Source Tools


functionary

Functionary is a language model that interprets and executes functions/plugins. It determines when to execute functions, whether in parallel or serially, and understands their outputs. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls. It offers documentation and examples on functionary.meetkai.com. The newest model, meetkai/functionary-medium-v3.1, is ranked 2nd in the Berkeley Function-Calling Leaderboard. Functionary supports models with different context lengths and capabilities for function calling and code interpretation. It also provides grammar sampling for accurate function and parameter names. Users can deploy Functionary models serverlessly using Modal.com.

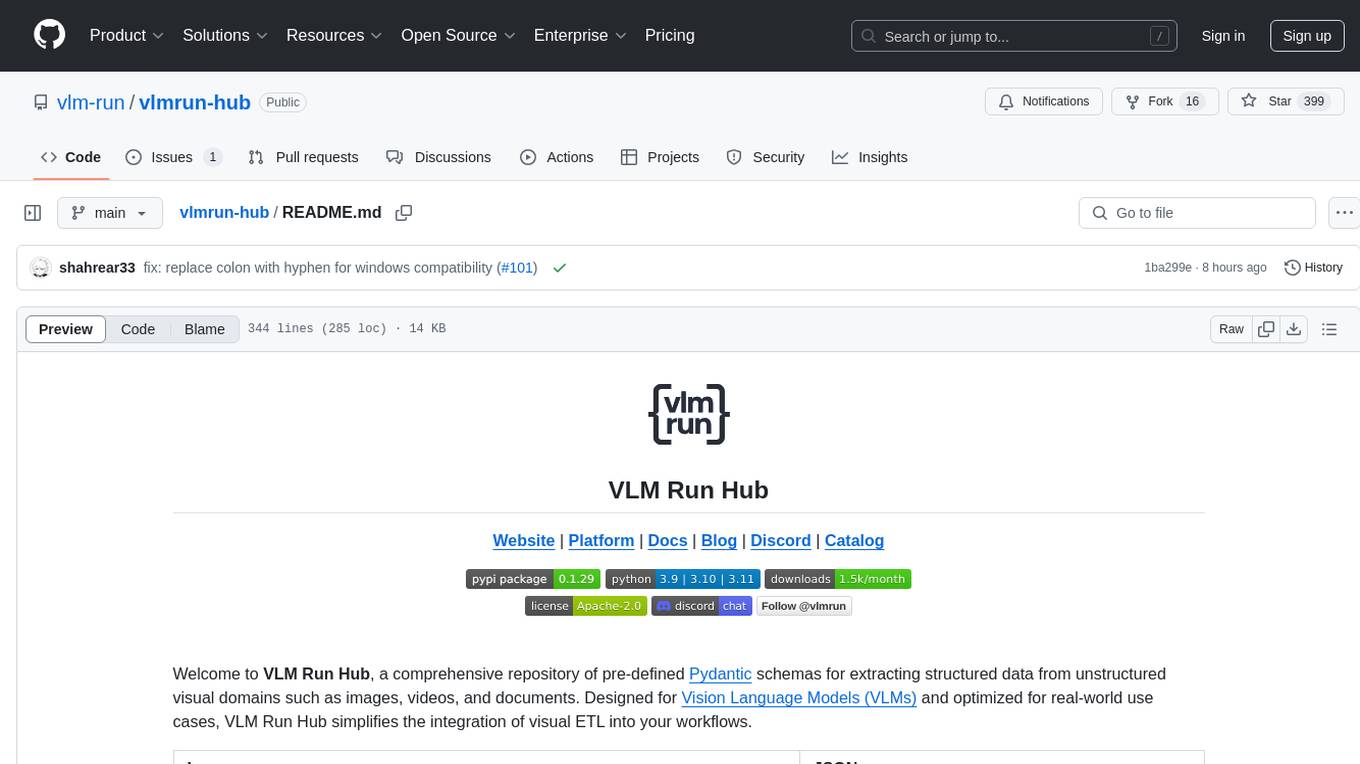

vlmrun-hub

VLM Run Hub is a repository of pre-defined Pydantic schemas for extracting structured data from unstructured visual content, such as images, videos, and documents, using Vision Language Models (VLMs).

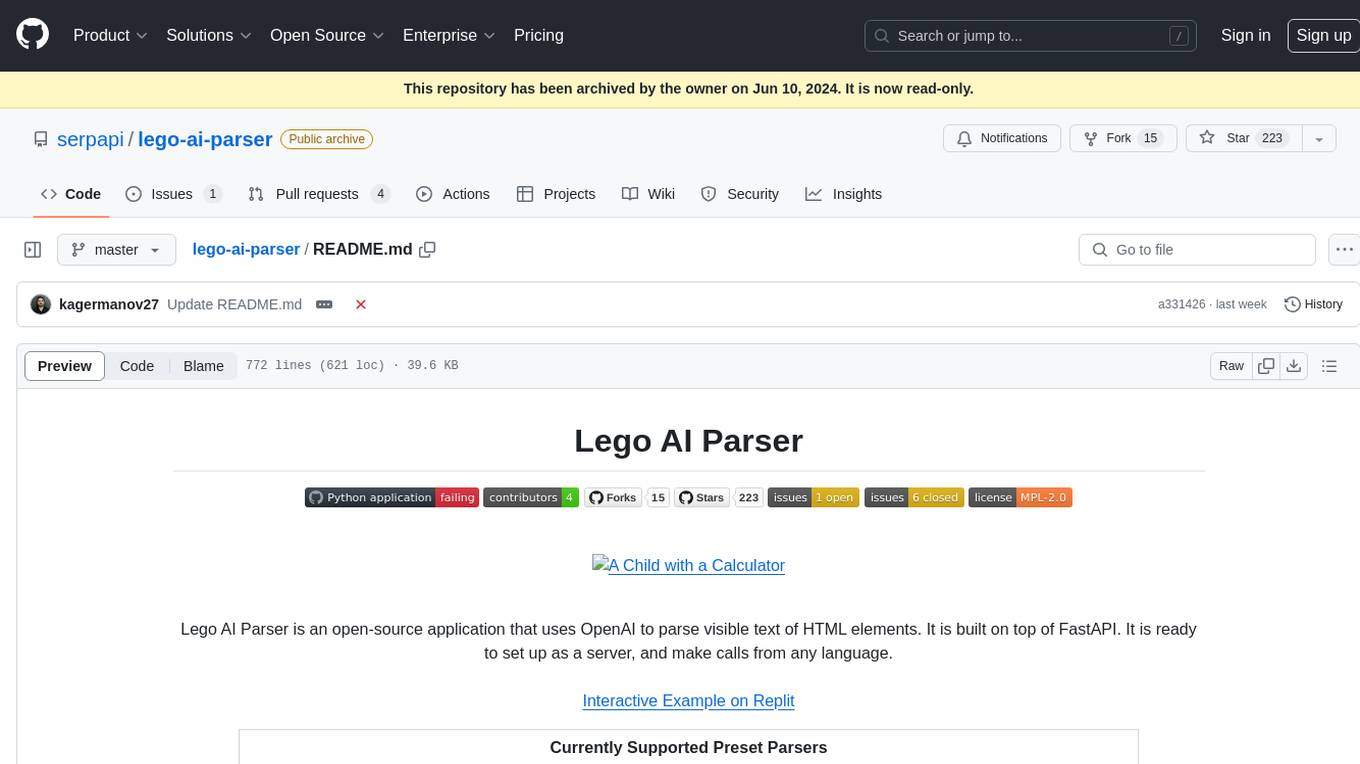

lego-ai-parser

Lego AI Parser is an open-source application that uses OpenAI to parse visible text of HTML elements. It is built on top of FastAPI, ready to set up as a server, and make calls from any language. It supports preset parsers for Google Local Results, Amazon Listings, Etsy Listings, Wayfair Listings, BestBuy Listings, Costco Listings, Macy's Listings, and Nordstrom Listings. Users can also design custom parsers by providing prompts, examples, and details about the OpenAI model under the classifier key.

AIGODLIKE-ComfyUI-Translation

A plugin for multilingual translation of ComfyUI. It translates the resident menu bar, search bar, right-click context menu, nodes, and more.

ramalama

The Ramalama project simplifies working with AI by utilizing OCI containers. It automatically detects GPU support, pulls necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support various GPUs and platforms in the future, making AI setup hassle-free.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience. It offers features such as easy deployment of LangChain models and pipelines, ready-to-use authentication functionality, high-performance FastAPI framework for serving requests, scalability and robustness for language processing applications, support for custom pipelines and processing, well-documented RESTful API endpoints, and asynchronous processing for faster response times.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLM backends, including OpenAI, Gemini, and local models run through Ollama or Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It offers features such as web scraping, crawling, and discovery, search and content extraction, deep research and batch scraping, automatic retries and rate limiting, cloud and self-hosted support, and SSE support. The server can be configured to run with various tools like Cursor, Windsurf, SSE Local Mode, Smithery, and VS Code. It supports environment variables for cloud API and optional configurations for retry settings and credit usage monitoring. The server includes tools for scraping, batch scraping, mapping, searching, crawling, and extracting structured data from web pages. It provides detailed logging and error handling functionalities for robust performance.

langchain-swift

LangChain for Swift. Optimized for iOS, macOS, watchOS (partial), and visionOS (beta). This is a pure client library; no server is required.

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

DeRTa

DeRTa (Refuse Whenever You Feel Unsafe) is a tool designed to improve safety in Large Language Models (LLMs) by training them to refuse compliance at any response juncture. The tool incorporates methods such as MLE with Harmful Response Prefix and Reinforced Transition Optimization (RTO) to address refusal positional bias and strengthen the model's capability to transition from potential harm to safety refusal. DeRTa provides training data, model weights, and evaluation scripts for LLMs, enabling users to enhance safety in language generation tasks.

pyllms

PyLLMs is a minimal Python library for connecting to various Large Language Models (LLMs) from providers such as OpenAI, Anthropic, Google, AI21, Cohere, Aleph Alpha, and HuggingfaceHub. It provides a built-in model performance benchmark for fast prototyping and evaluating different models. Users can easily connect to top LLMs, get completions from multiple models simultaneously, and evaluate models on quality, speed, and cost. The library supports asynchronous completion, streaming from compatible models, and multi-model initialization for testing and comparison. It also supports passing chat history and system messages, and counting tokens.

For similar tasks


WeChatMsg

WeChatMsg is a tool designed to help users manage and analyze their WeChat data. It aims to provide users with the ability to preserve their precious memories and create a personalized AI companion. The tool allows users to extract and export various types of data from WeChat, such as text, images, contacts, and more. Additionally, it offers features like analyzing chat data and generating visual annual reports. WeChatMsg is built on the idea of empowering users to take control of their data and foster emotional connections through technology.

wechat-robot-client

The Wechat Robot Client is an intelligent robot management system that provides rich interactive experiences. It includes features such as AI chat, drawing, voice, group chat functionalities, song requests, daily summaries, friend circle viewing, friend adding, group chat management, file messaging, and support for multiple login methods. It also integrates with other apps such as Honor of Kings (王者荣耀) and PUBG (吃鸡). It offers a comprehensive solution for managing Wechat interactions and automating various tasks.

ChatGPT-desktop

ChatGPT Desktop Application is a multi-platform tool that provides a powerful AI wrapper for generating text. It offers features like text-to-speech, exporting chat history in various formats, automatic application upgrades, system tray hover window, support for slash commands, customization of global shortcuts, and pop-up search. The application is built using Tauri and aims to enhance user experience by simplifying text generation tasks. It is available for Mac, Windows, and Linux, and is designed for personal learning and research purposes.

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

SiLLM

SiLLM is a toolkit that simplifies the process of training and running Large Language Models (LLMs) on Apple Silicon by leveraging the MLX framework. It provides features such as LLM loading, LoRA training, DPO training, a web app for a seamless chat experience, an API server with OpenAI compatible chat endpoints, and command-line interface (CLI) scripts for chat, server, LoRA fine-tuning, DPO fine-tuning, conversion, and quantization.

kairon

Kairon is an open-source conversational digital transformation platform that helps build LLM-based digital assistants at scale. It provides a no-coding web interface for adapting, training, testing, and maintaining AI assistants. Kairon focuses on pre-processing data for chatbots, including question augmentation, knowledge graph generation, and post-processing metrics. It offers end-to-end lifecycle management, low-code/no-code interface, secure script injection, telemetry monitoring, chat client designer, analytics module, and real-time struggle analytics. Kairon is suitable for teams and individuals looking for an easy interface to create, train, test, and deploy digital assistants.

GPT4DFCI

GPT4DFCI is a private and secure generative AI tool based on GPT-4, deployed for non-clinical use at Dana-Farber Cancer Institute. The tool is overseen by the Dana-Farber AI Governance Committee and developed by the Dana-Farber Informatics & Analytics Department. The repository includes manuscript & policy details, training material, front-end and back-end code, infrastructure information, API client for programmatic use, licensing details, and contact information.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high-level `generate()` method, or run each iteration of the model in a loop. It supports greedy and beam search as well as TopP and TopK sampling to generate token sequences, has built-in logits processing such as repetition penalties, and allows for easy custom scoring.