kairon

Agentic AI platform that harnesses Visual LLM Chaining to build proactive digital assistants

Stars: 272

Kairon is an open-source conversational digital transformation platform that helps build LLM-based digital assistants at scale. It provides a no-coding web interface for adapting, training, testing, and maintaining AI assistants. Kairon focuses on pre-processing data for chatbots, including question augmentation, knowledge graph generation, and post-processing metrics. It offers end-to-end lifecycle management, low-code/no-code interface, secure script injection, telemetry monitoring, chat client designer, analytics module, and real-time struggle analytics. Kairon is suitable for teams and individuals looking for an easy interface to create, train, test, and deploy digital assistants.

README:

Kairon is now envisioned as a conversational digital transformation platform that helps build LLM-based digital assistants at scale. It is designed to make life easier for those who work with AI assistants by giving them a no-coding web interface to adapt, train, test and maintain such assistants. We are now enhancing the backbone of Kairon with a full-fledged context management system to build proactive digital assistants.

What is Kairon?

Kairon is currently a set of tools built on the Rasa framework with a helpful UI. While Rasa focuses on the technology of chatbots itself, Kairon focuses on the technology that deals with pre-processing the data this framework needs. This includes question augmentation and the generation of knowledge graphs that can be used to automatically generate intents, questions and responses. It also deals with the post-processing and maintenance of these bots, such as metrics and follow-up messages.

What can it do?

Kairon is open-source. It offers the following:

- Conversational digital transformation platform: Kairon is a platform that allows companies to create and deploy digital assistants to interact with customers in a conversational manner.

- End-to-end lifecycle management: Kairon takes care of the entire digital assistant lifecycle, from creation to deployment and monitoring, freeing up company resources to focus on other tasks.

- Tethered digital assistants: Kairon’s digital assistants are tethered to the platform, which allows for real-time monitoring of their performance and easy maintenance and updates as needed.

- Low-code/no-code interface: Kairon’s interface is designed to be easy for functional users, such as marketing teams or product management, to define how the digital assistant responds to user queries without needing extensive coding skills.

- Secure script injection: Kairon’s digital assistants can be easily deployed on websites and SaaS products through secure script injection, enabling organizations to offer better customer service and support.

- Kairon Telemetry: Kairon’s telemetry feature monitors how users are interacting with the website/product where Kairon was injected and proactively intervenes if they are facing problems, improving the overall user experience.

- Chat client designer: Kairon’s chat client designer feature allows organizations to create customized chat clients for their digital assistants, which can enhance the user experience and help build brand loyalty.

- Analytics module: Kairon’s analytics module provides insights into how users are interacting with the digital assistant, enabling organizations to optimize their performance and provide better service to customers.

- Robust integration suite: Kairon’s integration suite allows digital assistants to be served in an omni-channel, multi-lingual manner, improving accessibility and expanding the reach of the digital assistant.

- Realtime struggle analytics: Kairon’s digital assistants use real-time struggle analytics to proactively intervene when users are facing friction on the product/website where Kairon has been injected, improving user satisfaction and reducing churn.

This website can be found at Kairon and is hosted by NimbleWork Inc.

Who uses it?

Kairon is built for two personas:

- Teams and individuals who want an easy no-coding interface to create, train, test and deploy digital assistants. They can access these features directly from our hosted website.

- Teams who want to host the chatbot trainer in-house. They can build it using Docker Compose.

Our team's current focus within NLP is knowledge graphs. Do let us know if you are interested.

At this juncture it layers on top of Rasa Open Source.

Kairon only requires a recent version of Docker and Docker Compose.

Make the following changes in docker/docker-compose.yml, then start the containers:

- Set the env variable server to the public IP of the machine where the trainer API Docker container is running, for example: http://localhost:81

- Optional: to enable Google Analytics, uncomment trackingid and set it to your Google Analytics tracking ID.

- Set the env variable SECRET_KEY to a random key. Use the command below to generate one:

openssl rand -hex 32

- Run the commands:

cd kairon/docker
docker-compose up -d

- Open http://localhost/ in a browser.

- To try it out as the demo user, log in with username: [email protected] and password: Changeit@123
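If `openssl` is not available on the machine, an equivalent key can be produced with the Python standard library. The snippet below is a minimal sketch: the helper name `generate_secret_key` is made up for illustration and is not part of Kairon. It produces 32 random bytes encoded as 64 hexadecimal characters, the same shape of value as `openssl rand -hex 32`.

```python
import secrets


def generate_secret_key(num_bytes: int = 32) -> str:
    """Return num_bytes of cryptographically secure randomness as lowercase hex.

    Hypothetical helper for illustration; it mirrors `openssl rand -hex 32`.
    """
    return secrets.token_hex(num_bytes)


# Print a line that can be pasted into the environment section of
# docker/docker-compose.yml as the value of SECRET_KEY.
print(f"SECRET_KEY={generate_secret_key()}")
```

Generate the key once and keep it stable across restarts; a fresh key on every start would presumably invalidate anything signed with the previous one.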

- Kairon requires Python 3.10 and MongoDB 4.0+.

- Then clone this repo:

git clone https://github.com/digiteinfotech/kairon.git
cd kairon/

- To create a virtual environment, please follow the link.

- To install dependencies:

Windows

setup.bat

If the error "No Matching distribution found tensorflow-text" appears, remove that dependency from the requirements.txt file, as a Windows version is not available (#44).

Linux

chmod 777 ./setup.sh
sh ./setup.sh

- To start the augmentation services, run:

python -m uvicorn augmentation.paraphrase.server:app --host 0.0.0.0

- To start the trainer-api services, run:

python -m uvicorn kairon.api.app.main:app --host 0.0.0.0 --port 8080
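The two start commands above differ only in the application path and the port. The sketch below builds both argument lists from one hypothetical helper, `uvicorn_command`, which is not part of Kairon. It uses `sys.executable` instead of a bare `python` so that the interpreter of the active virtual environment is the one that runs; that substitution is a choice made here, not something the README prescribes.

```python
import sys
from typing import List, Optional


def uvicorn_command(app: str, host: str = "0.0.0.0", port: Optional[int] = None) -> List[str]:
    """Build the argv for `python -m uvicorn <app> --host <host> [--port <port>]`.

    Hypothetical helper for illustration only.
    """
    cmd = [sys.executable, "-m", "uvicorn", app, "--host", host]
    if port is not None:
        cmd += ["--port", str(port)]
    return cmd


# Application paths and the 8080 port are taken from the commands above.
AUGMENTATION = uvicorn_command("augmentation.paraphrase.server:app")
TRAINER_API = uvicorn_command("kairon.api.app.main:app", port=8080)

print(" ".join(AUGMENTATION))
print(" ".join(TRAINER_API))

# To actually start a service from the repository root, pass the list to
# subprocess, for example: subprocess.Popen(TRAINER_API)
```

Because no port is given for the augmentation service, Uvicorn falls back to its default port, 8000, so the two services do not collide when run on the same machine.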

The email.yaml file can be used to configure the process for account confirmation through a verification link sent to the user's mail id. It consists of the following parameters:

- enable - set value to True for enabling email verification, and False to disable. You can also use the environment variable EMAIL_ENABLE to change the values.

- url - this url, along with a unique token, is sent to the user's mail id for account verification as well as for password reset tasks. You can also use the environment variable APP_URL to change the values.

- email - the mail id of the account which sends the confirmation mail. You can also use the environment variable EMAIL_SENDER_EMAIL to change the values.

- password - the password of the account which sends the confirmation mail. You can also use the environment variable EMAIL_SENDER_PASSWORD to change the values.

- port - the port that is used to send the mail [For ex. "587"]. You can also use the environment variable EMAIL_SENDER_PORT to change the values.

- service - the mail service that is used to send the confirmation mail [For ex. "gmail"]. You can also use the environment variable EMAIL_SENDER_SERVICE to change the values.

- tls - set value to True for enabling transport layer security, and False to disable. You can also use the environment variable EMAIL_SENDER_TLS to change the values.

- userid - the user ID for the mail service if you're using a custom service for sending mails. You can also use the environment variable EMAIL_SENDER_USERID to change the values.

- confirmation_subject - the subject of the mail to be sent for confirmation. You can also use the environment variable EMAIL_TEMPLATES_CONFIRMATION_SUBJECT to change the subject.

- confirmation_body - the body of the mail to be sent for confirmation. You can also use the environment variable EMAIL_TEMPLATES_CONFIRMATION_BODY to change the body of the mail.

- confirmed_subject - the subject of the mail to be sent after confirmation. You can also use the environment variable EMAIL_TEMPLATES_CONFIRMED_SUBJECT to change the subject.

- confirmed_body - the body of the mail to be sent after confirmation. You can also use the environment variable EMAIL_TEMPLATES_CONFIRMED_BODY to change the body of the mail.

- password_reset_subject - the subject of the mail to be sent for password reset. You can also use the environment variable EMAIL_TEMPLATES_PASSWORD_RESET_SUBJECT to change the subject.

- password_reset_body - the body of the mail to be sent for password reset. You can also use the environment variable EMAIL_TEMPLATES_PASSWORD_RESET_BODY to change the body of the mail.

- password_changed_subject - the subject of the mail to be sent after changing the password. You can also use the environment variable EMAIL_TEMPLATES_PASSWORD_CHANGED_SUBJECT to change the subject.

- password_changed_body - the body of the mail to be sent after changing the password. You can also use the environment variable EMAIL_TEMPLATES_PASSWORD_CHANGED_BODY to change the body of the mail.
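The relationship between the email.yaml parameters and their environment variables can be sketched as a simple override step. The README does not say how Kairon loads this file, so everything below is an assumption made for illustration: the function name `resolve_email_settings`, the rule that an environment variable wins over the file value, and the parsing of `enable` and `tls` as case-insensitive "true"/"false" strings. Only the parameter names and environment variable names come from the list above.

```python
import os
from typing import Any, Dict, Mapping, Optional

# Parameter name -> environment variable, as listed above.
ENV_NAMES = {
    "enable": "EMAIL_ENABLE",
    "url": "APP_URL",
    "email": "EMAIL_SENDER_EMAIL",
    "password": "EMAIL_SENDER_PASSWORD",
    "port": "EMAIL_SENDER_PORT",
    "service": "EMAIL_SENDER_SERVICE",
    "tls": "EMAIL_SENDER_TLS",
    "userid": "EMAIL_SENDER_USERID",
    "confirmation_subject": "EMAIL_TEMPLATES_CONFIRMATION_SUBJECT",
    "confirmation_body": "EMAIL_TEMPLATES_CONFIRMATION_BODY",
    "confirmed_subject": "EMAIL_TEMPLATES_CONFIRMED_SUBJECT",
    "confirmed_body": "EMAIL_TEMPLATES_CONFIRMED_BODY",
    "password_reset_subject": "EMAIL_TEMPLATES_PASSWORD_RESET_SUBJECT",
    "password_reset_body": "EMAIL_TEMPLATES_PASSWORD_RESET_BODY",
    "password_changed_subject": "EMAIL_TEMPLATES_PASSWORD_CHANGED_SUBJECT",
    "password_changed_body": "EMAIL_TEMPLATES_PASSWORD_CHANGED_BODY",
}

# Parameters documented as True/False switches.
BOOLEAN_KEYS = {"enable", "tls"}


def resolve_email_settings(
    file_values: Mapping[str, Any],
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, Any]:
    """Overlay environment variables on values read from email.yaml.

    Assumption: a set environment variable takes precedence over the file.
    """
    env = os.environ if env is None else env
    resolved = dict(file_values)  # copy so the caller's mapping is untouched
    for key, env_name in ENV_NAMES.items():
        if env_name not in env:
            continue
        raw = env[env_name]
        if key in BOOLEAN_KEYS:
            resolved[key] = raw.strip().lower() == "true"
        else:
            resolved[key] = raw
    return resolved


# Example: the file disables verification, the environment turns it on
# and switches the port.
defaults = {"enable": False, "service": "gmail", "port": "587", "tls": True}
overrides = {"EMAIL_ENABLE": "True", "EMAIL_SENDER_PORT": "465"}
print(resolve_email_settings(defaults, overrides))
```

Passing the environment as an argument keeps the function easy to test; in a real deployment it would read from `os.environ`, which is the default used here.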

Documentation for all Kairon APIs is still being fleshed out. An intermediary version of the documentation is available here: Documentation

We ❤️ contributions of all sizes and sorts. If you find a typo, want to improve a section of the documentation, or want to help with a bug or a feature, here are the steps:

- Fork the repo and create a new branch, say rasa-dx-issue1.

- Fix/improve the codebase.

- Write test cases and documentation for the code.

- Run the test cases:

python -m pytest

- Reformat the code using black:

python -m black bot_trainer

- Commit the changes, with proper comments about the fix.

- Make a pull request. Its description can simply be one of your commit messages.

- Submit your pull request and wait for all checks to pass.

- Request reviews from one of the developers on our core team.

- Get a 👍 and the PR gets merged.
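The test and formatting steps above can be chained into one local check before opening a pull request. This is only a sketch of that idea: `run_checks` and `CHECKS` are hypothetical names, not a script shipped with Kairon, and the two commands are copied from the steps above without changes.

```python
import subprocess
import sys
from typing import Callable, List, Sequence

# The two commands from the contribution steps, run with the current interpreter.
CHECKS: List[List[str]] = [
    [sys.executable, "-m", "pytest"],
    [sys.executable, "-m", "black", "bot_trainer"],
]


def run_checks(
    checks: Sequence[Sequence[str]],
    runner: Callable[[Sequence[str]], int] = subprocess.call,
) -> int:
    """Run each command in order and stop at the first failure.

    Returns the first non-zero exit code, or 0 if every command succeeded.
    The runner is injectable so the logic can be exercised without
    actually launching pytest or black.
    """
    for cmd in checks:
        code = runner(cmd)
        if code != 0:
            return code
    return 0


# From the repository root, inside the virtual environment:
#   sys.exit(run_checks(CHECKS))
```

Running the tests before the formatter means a failing test stops the run before any file is rewritten.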

- Rasa - The bot framework used

- PyPI - Dependency Management

- Mongo - DB

- MongoEngine - ORM

- FastAPI - REST API

- Uvicorn - ASGI Server

- spaCy - NLP

- Pytest - Testing

- MongoMock - Mocking DB

- Responses - Mocking HTTP requests

- Black - Code Reformatting

- NLP AUG - Augmentation

The repository is being maintained and supported by NimbleWork Inc.

- NimbleWork.Inc - NimbleWork

- Fahad Ali Shaikh

- Deepak Naik

- Nirmal Parwate

- Adurthi Ashwin Swarup

- Udit Pandey

- Nupur Khare

- Rohan Patwardhan

- Hitesh Ghuge

- Sushant Patade

- Mitesh Gupta

See also the list of contributors who participated in this project.

Licensed under the Apache License, Version 2.0. Copy of the license

A list of the licenses of the project's dependencies can be found at the link.

Similar Open Source Tools

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

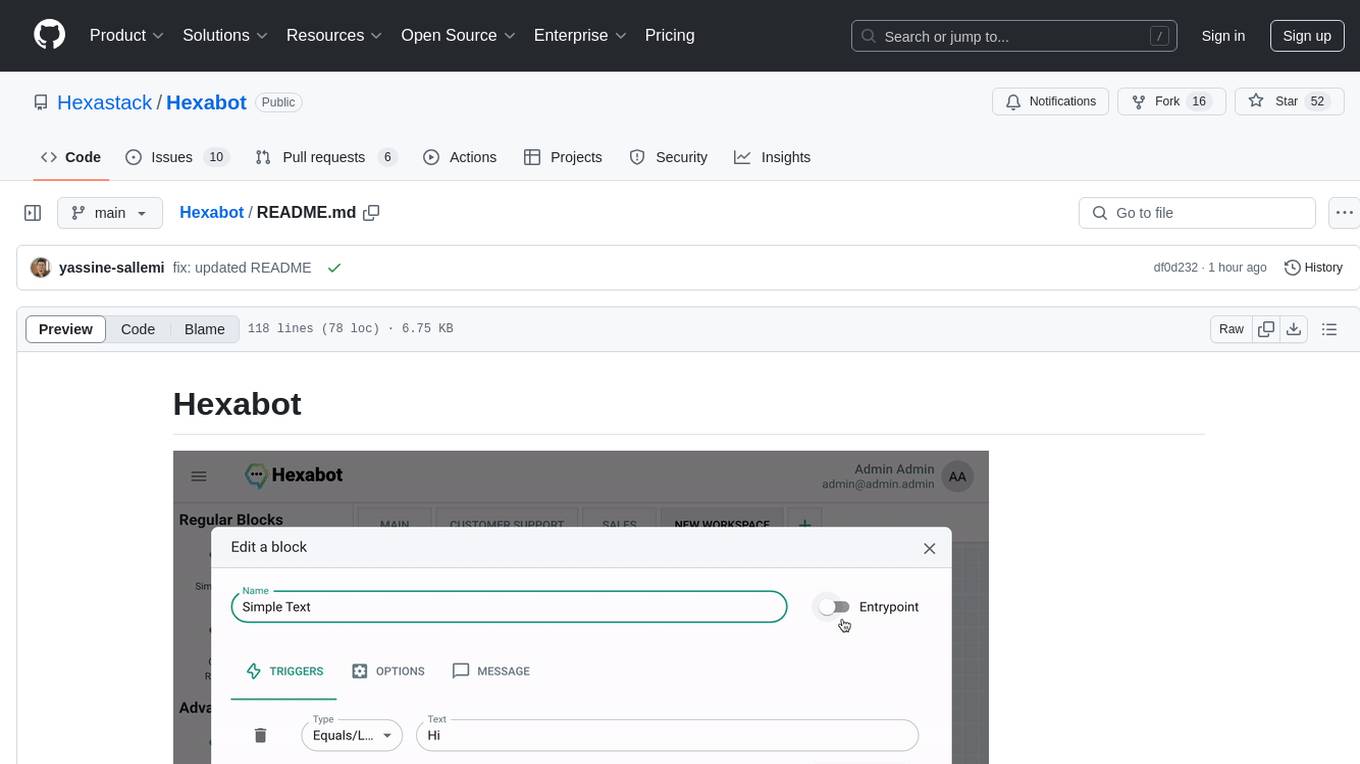

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

deepdoctection

**deep**doctection is a Python library that orchestrates document extraction and document layout analysis tasks using deep learning models. It does not implement models but enables you to build pipelines using highly acknowledged libraries for object detection, OCR and selected NLP tasks and provides an integrated framework for fine-tuning, evaluating and running models. For more specific text processing tasks use one of the many other great NLP libraries. **deep**doctection focuses on applications and is made for those who want to solve real world problems related to document extraction from PDFs or scans in various image formats. **deep**doctection provides model wrappers of supported libraries for various tasks to be integrated into pipelines. Its core function does not depend on any specific deep learning library. Selected models for the following tasks are currently supported:

* Document layout analysis including table recognition in Tensorflow with **Tensorpack**, or PyTorch with **Detectron2**.
* OCR with support of **Tesseract**, **DocTr** (Tensorflow and PyTorch implementations available) and a wrapper to an API for a commercial solution.
* Text mining for native PDFs with **pdfplumber**.
* Language detection with **fastText**.
* Deskewing and rotating images with **jdeskew**.
* Document and token classification with all LayoutLM models provided by the **Transformer library**. (Yes, you can use any LayoutLM model with any of the provided OCR or pdfplumber tools straight away!)
* Table detection and table structure recognition with **table-transformer**.
* There is a small dataset for token classification available and a lot of new tutorials that show how to train and evaluate this dataset using LayoutLMv1, LayoutLMv2, LayoutXLM and LayoutLMv3.
* Comprehensive configuration of the **analyzer**, like choosing different models, output parsing and OCR selection. Check this notebook or the docs for more information.
* Document layout analysis and table recognition now run with **Torchscript** (CPU) as well, and **Detectron2** is not required anymore for basic inference.
* [**new**] More angle predictors for determining the rotation of a document based on **Tesseract** and **DocTr** (not contained in the built-in Analyzer).
* [**new**] Token classification with **LiLT** via **transformers**. We have added a model wrapper for token classification with LiLT and added some LiLT models to the model catalog that seem to look promising, especially if you want to train a model on non-English data. The training script for LayoutLM can be used for LiLT as well and we will be providing a notebook on how to train a model on a custom dataset soon.

On top of that, **deep**doctection provides methods for pre-processing inputs to models, like cropping or resizing, and for post-processing results, like validating duplicate outputs, relating words to detected layout segments or ordering words into contiguous text. You will get an output in JSON format that you can customize even further by yourself. Have a look at the **introduction notebook** in the notebook repo for an easy start. Check the **release notes** for recent updates.

**deep**doctection or its support libraries provide pre-trained models that are in most cases available at the **Hugging Face Model Hub** or that will be automatically downloaded once requested. For instance, you can find pre-trained object detection models from the Tensorpack or Detectron2 framework for coarse layout analysis, table cell detection and table recognition. Training is a substantial part of getting pipelines ready for a specific domain, be it document layout analysis, document classification or NER. **deep**doctection provides training scripts for models that are based on trainers developed from the library that hosts the model code.

Moreover, **deep**doctection hosts code for some well-established datasets like **Publaynet** that makes it easy to experiment. It also contains mappings from widely used data formats like COCO and it has a dataset framework (akin to **datasets**) so that setting up training on a custom dataset becomes very easy. **This notebook** shows you how to do this. **deep**doctection comes equipped with a framework that allows you to evaluate predictions of a single or multiple models in a pipeline against some ground truth. Check again **here** how it is done. Having set up a pipeline, it takes you a few lines of code to instantiate the pipeline, and after a for loop all pages will be processed through the pipeline.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

neo

Neo.mjs is a revolutionary Application Engine for the web that offers true multithreading and context engineering, enabling desktop-class UI performance and AI-driven runtime mutation. It is not a framework but a complete runtime and toolchain for enterprise applications, excelling in single page apps and browser-based multi-window applications. With a pioneering Off-Main-Thread architecture, Neo.mjs ensures butter-smooth UI performance by keeping the main thread free for flawless user interactions. The latest version, v11, introduces AI-native capabilities, allowing developers to work with AI agents as first-class partners in the development process. The platform offers a suite of dedicated Model Context Protocol servers that give agents the context they need to understand, build, and reason about the code, enabling a new level of human-AI collaboration.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

ROSGPT_Vision

ROSGPT_Vision is a new robotic framework designed to command robots using only two prompts: a Visual Prompt for visual semantic features and an LLM Prompt to regulate robotic reactions. It is based on the Prompting Robotic Modalities (PRM) design pattern and is used to develop CarMate, a robotic application for monitoring driver distractions and providing real-time vocal notifications. The framework leverages state-of-the-art language models to facilitate advanced reasoning about image data and offers a unified platform for robots to perceive, interpret, and interact with visual data through natural language. LangChain is used for easy customization of prompts, and the implementation includes the CarMate application for driver monitoring and assistance.

persian-license-plate-recognition

The Persian License Plate Recognition (PLPR) system is a state-of-the-art solution designed for detecting and recognizing Persian license plates in images and video streams. Leveraging advanced deep learning models and a user-friendly interface, it ensures reliable performance across different scenarios. The system offers advanced detection using YOLOv5 models, precise recognition of Persian characters, real-time processing capabilities, and a user-friendly GUI. It is well-suited for applications in traffic monitoring, automated vehicle identification, and similar fields. The system's architecture includes modules for resident management, entrance management, and a detailed flowchart explaining the process from system initialization to displaying results in the GUI. Hardware requirements include an Intel Core i5 processor, 8 GB RAM, a dedicated GPU with at least 4 GB VRAM, and an SSD with 20 GB of free space. The system can be installed by cloning the repository and installing required Python packages. Users can customize the video source for processing and run the application to upload and process images or video streams. The system's GUI allows for parameter adjustments to optimize performance, and the Wiki provides in-depth information on the system's architecture and model training.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

For similar tasks

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

SiLLM

SiLLM is a toolkit that simplifies the process of training and running Large Language Models (LLMs) on Apple Silicon by leveraging the MLX framework. It provides features such as LLM loading, LoRA training, DPO training, a web app for a seamless chat experience, an API server with OpenAI compatible chat endpoints, and command-line interface (CLI) scripts for chat, server, LoRA fine-tuning, DPO fine-tuning, conversion, and quantization.

chatgpt-exporter

A script to export the chat history of ChatGPT. Supports exporting to text, HTML, Markdown, PNG, and JSON formats. Also allows for exporting multiple conversations at once.

GPT4DFCI

GPT4DFCI is a private and secure generative AI tool based on GPT-4, deployed for non-clinical use at Dana-Farber Cancer Institute. The tool is overseen by the Dana-Farber AI Governance Committee and developed by the Dana-Farber Informatics & Analytics Department. The repository includes manuscript & policy details, training material, front-end and back-end code, infrastructure information, API client for programmatic use, licensing details, and contact information.

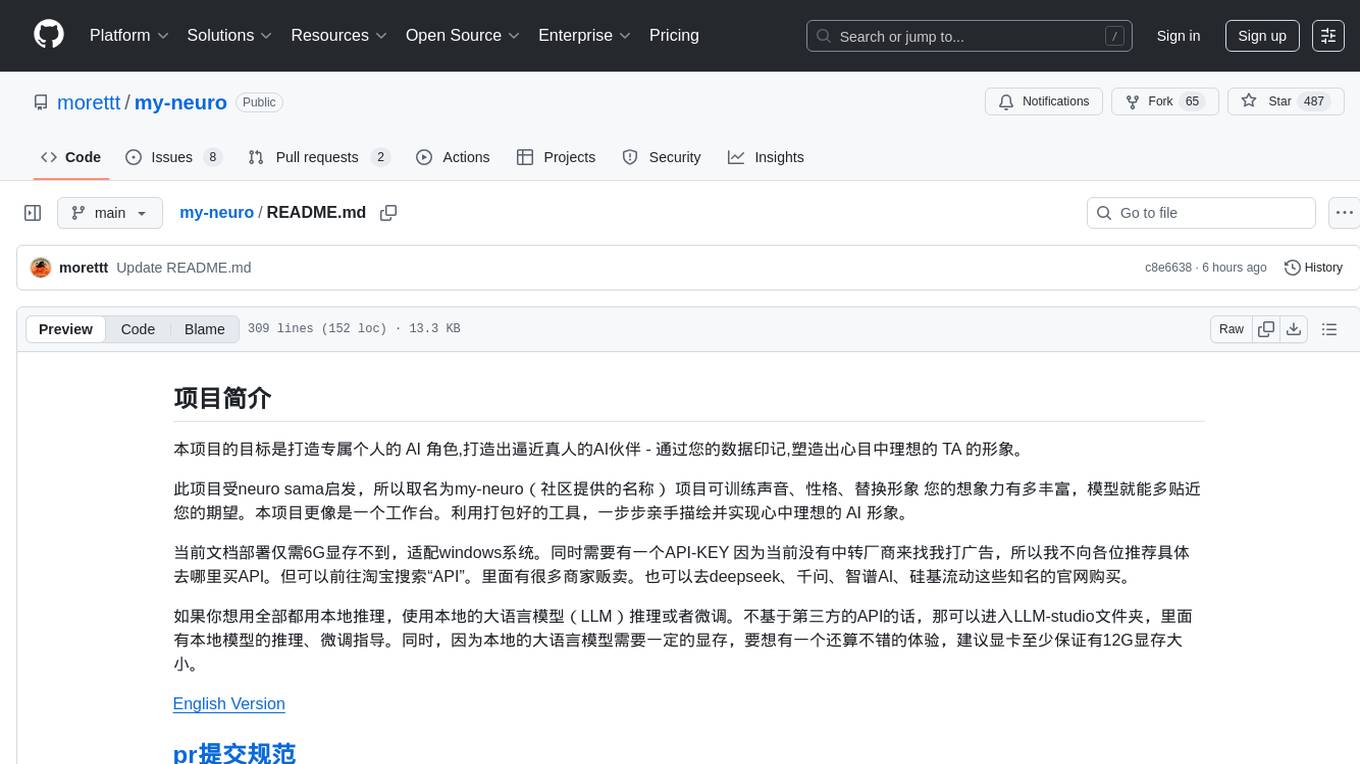

my-neuro

The project aims to create a personalized AI character, a lifelike AI companion, shaping the ideal image of him or her in your mind through your data imprint. The project is inspired by neuro sama, hence the name my-neuro. The project can train voice and personality, and replace images. It serves as a workspace where you can use packaged tools to draw and realize, step by step, the ideal AI image in your mind. The deployment described in the current document requires less than 6GB of VRAM, is compatible with Windows systems, and requires an API-KEY. The project offers features like low latency, real-time interruption, emotion simulation, visual capabilities integration, voice model training support, desktop control, live streaming on platforms like Bilibili, and more. It aims to provide a comprehensive AI experience with features like long-term memory, AI customization, and emotional interactions.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.