RAG_Hack

Hack Together: RAG Hack | Register, Learn, Hack

Stars: 310

RAGHack is a hackathon focused on building AI applications using the power of RAG (Retrieval Augmented Generation). RAG combines large language models with search engine knowledge to provide contextually relevant answers. Participants can learn to build RAG apps on Azure AI using various languages and retrievers, explore frameworks like LangChain and Semantic Kernel, and leverage technologies such as agents and vision models. The hackathon features live streams, hack submissions, and prizes for innovative projects.

README:

🛠️ Build, innovate, and #Hacktogether! 🛠️ It's time to start building AI applications using the power of RAG (Retrieval Augmented Generation). 🤖 + 📚 = 🔥

Large language models are powerful language generators, but they don't know everything about the world. RAG (Retrieval Augmented Generation) combines the power of large language models with the knowledge of a search engine. This allows you to ask questions of your own data, and get answers that are relevant to the context of your question.
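As a rough illustration of the retrieve-then-generate idea described above, here is a minimal sketch in Python. It is not taken from the RAGHack samples. The `DOCUMENTS` list, the keyword-overlap scoring, and the function names `tokenize`, `retrieve`, and `build_prompt` are all assumptions made for this example. A real app would likely use a retriever such as Azure AI Search or PostgreSQL, and would send the final prompt to a language model, which is left out here.

```python
import re

# Hypothetical in-memory "knowledge base" standing in for a real retriever.
DOCUMENTS = [
    "RAGHack live streams start September 3rd and end September 13th.",
    "Hack submissions are due September 16th.",
    "Each winning team receives a cash prize of $500.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents sharing the most distinct tokens with the question."""
    question_tokens = set(tokenize(question))
    scored = []
    for doc in documents:
        overlap = len(question_tokens & set(tokenize(doc)))
        if overlap > 0:  # skip documents with nothing in common
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question, sources):
    """Combine the retrieved sources and the question into one prompt string."""
    context = "\n".join(f"- {source}" for source in sources)
    return (
        "Answer the question using only the sources below.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}\n"
    )


question = "When are hack submissions due?"
sources = retrieve(question, DOCUMENTS, top_k=1)
prompt = build_prompt(question, sources)
print(prompt)  # this prompt would then be sent to a language model
```

Keyword overlap is used only to keep the sketch dependency-free. Production retrievers typically rely on full-text or vector search instead.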

RAGHack is your opportunity to get deep into RAG and start building RAG yourself. Across 25+ live streams, we'll show you how to build RAG apps on top of Azure AI in multiple languages (Python, Java, JS, C#) with multiple retrievers (AI Search, PostgreSQL, Azure SQL, Cosmos DB), with your own data sources! You'll learn about the most popular frameworks, like LangChain and Semantic Kernel, plus the latest technology, like agents and vision models. The possibilities are endless for what you can create... plus you can submit your hack for a chance to win exciting prizes! 🥳

The streams start September 3rd and end September 13th. Hack submissions are due September 16th, 11:59 PM PST. Join us!

Register for the hackathon using any of the sessions linked on the Reactor series home page. This will register you for both the selected session and the hackathon.

Introduce yourself and look for teammates in GitHub Discussions!

Read the official rules 📃

| Day/Time | Topic | Resources |
|---|---|---|
| 9/3, 04:30 PM UTC / 09:30 AM PT | RAG 101 | Link |
| 9/3, 06:00 PM UTC / 11:00 AM PT | RAG with .NET | Link |
| 9/3, 08:00 PM UTC / 01:00 PM PT | RAG with Azure AI Studio | Link |
| 9/3, 10:00 PM UTC / 03:00 PM PT | RAG with Python | Link |
| 9/4, 03:00 PM UTC / 08:00 AM PT | RAG with Langchain4J | Link |
| 9/4, 03:00 PM UTC / 08:00 AM PT | RAG with LangchainJS | Link |
| 9/4, 09:00 PM UTC / 02:00 PM PT | Scalable RAG with CosmosDB for NoSQL | Link |
| 9/5, 03:00 PM UTC / 08:00 AM PT | Responsible AI | Link |
| 9/5, 05:00 PM UTC / 10:00 AM PT | RAG on Cosmos DB MongoDB | Link |
| 9/5, 07:00 PM UTC / 12:00 PM PT | RAG with Azure AI Search | Link |
| 9/5, 09:00 PM UTC / 02:00 PM PT | RAG on PostgreSQL | Link |
| 9/5, 11:00 PM UTC / 04:00 PM PT | RAG on Azure SQL Server | Link |
| 9/6, 04:00 PM UTC / 09:00 AM PT | Intro to GraphRAG | Link |
| 9/6, 06:00 PM UTC / 11:00 AM PT | Add multi-channel communication in RAG apps | Link |

| Day/Time | Topic | Resources |
|---|---|---|
| 9/9, 03:00 PM UTC / 08:00 AM PT | RAG with Java + Semantic Kernel | Link |
| 9/9, 05:00 PM UTC / 10:00 AM PT | RAG with Java + Spring AI | Link |
| 9/9, 08:00 PM UTC / 01:00 PM PT | RAG with vision models | Link |
| 9/9, 11:00 PM UTC / 04:00 PM PT | Internationalization for RAG apps | Link |
| 9/10, 03:00 PM UTC / 08:00 AM PT | Use Phi-3 to create a VSCode chat agent extension | Link |
| 9/10, 05:00 PM UTC / 10:00 AM PT | Agentic RAG with Langchain | Link |
| 9/10, 10:00 PM UTC / 03:00 PM PT | Build an OpenAI code interpreter for Python | Link |
| 9/11, 03:00 PM UTC / 08:00 AM PT | Connections in Azure AI Studio | Link |
| 9/11, 05:00 PM UTC / 10:00 AM PT | Explore AutoGen concepts with AutoGen Studio | Link |
| 9/11, 08:00 PM UTC / 01:00 PM PT | RAG with Data Access Control | Link |
| 9/11, 10:00 PM UTC / 03:00 PM PT | RAFT: (RAG + Fine Tuning) in Azure AI Studio | Link |
| 9/12, 04:00 PM UTC / 09:00 AM PT | Pick the right model for the right job | Link |
| 9/12, 08:00 PM UTC / 01:00 PM PT | Evaluating your RAG Chat App | Link |

| Day/Time | Topic | Resources |
|---|---|---|
| 9/3, 03:00 PM UTC / 08:00 AM PT | RAG: Generación Aumentada de Recuperación | Link |
| 9/4, 03:00 PM UTC / 08:00 AM PT | RAG: Prácticas recomendadas de Azure AI Search | Link |
| 9/11, 03:00 PM UTC / 08:00 AM PT | AI Multi-Agentes: Patrones, Problemas y Soluciones | Link |

| Day/Time | Topic | Resources |
|---|---|---|
| 9/3, 03:00 PM UTC / 08:00 AM PT | RAG (Geração Aumentada de Busca) no Azure | Link |
| 9/12, 03:00 PM UTC / 08:00 AM PT | Construindo RAG com Azure AI Studio e Python | Link |
| 9/13, 03:00 PM UTC / 08:00 AM PT | Implantando RAG com .NET e Azure Developer CLI | Link |

| Day/Time | Topic | Resources |
|---|---|---|
| 9/3, 12:30 PM UTC / 05:30 AM PT | Global RAG Hack Together | Link |
| 9/10, 12:30 PM UTC / 05:30 AM PT | Create RAG apps with Azure AI SDK | Link |
| 9/12, 12:30 PM UTC / 05:30 AM PT | Create RAG applications with AI Toolkit VSCode Extension | Link |
| 9/14, 12:30 PM UTC / 05:30 AM PT | Intro to GraphRAG | Link |

For additional help with your hacks, you can drop by Office Hours in our AI Discord channel. Here are the Office Hours scheduled so far:

| Day/Time | Topic/Hosts |
|---|---|
| 9/4, 07:00 PM UTC / 12:00 PM PT | Python, AI Search, Postgres, with Pamela |
| 9/6, 07:00 PM UTC / 12:00 PM PT | .NET with Bruno |

| Repository | Language/Retriever | Costs |
|---|---|---|
| azure-search-openai-demo | Python, Azure AI Search | Requires Azure deployment, follow guide for lower cost deployment |
| azure-search-openai-demo-java | Java, Azure AI Search | Requires Azure deployment, see cost estimate for App Service deployment, Container Apps, Kubernetes |
| serverless-chat-langchainjs | JavaScript, CosmosDB | Can be run locally for free with Ollama, see cost estimate for Azure deployment |
| azure-search-openai-demo-csharp | C#, Azure AI Search | Requires Azure deployment, see cost estimate or follow guide for low cost deployment |
| rag-postgres-openai-python | Python, PostgreSQL | Can be run locally for free with Ollama, see cost estimate for Azure deployment |
| Cosmic-Food-RAG-app | Python, Cosmos DB MongoDB | Requires Azure deployment, see cost estimate |
| contoso-chat | Python, Azure AI Search, Azure AI Studio, PromptFlow | Requires Azure deployment, see cost estimate |
| azure-sql-db-session-recommender-v2 | C#, Azure SQL | Can be run locally for free with Azure SQL Database free tier |

To find more samples, check out the following resources:

- Azure AI samples (Python)

- Azure AI samples (JavaScript)

- Azure AI samples (Java)

- Azure AI samples (C#)

- Azure AI samples (Go)

- Azure AI Studio Samples

- Cosmos DB AI Samples

- Azure SQL DB AI Samples

- AI learning and community hub

- Cloud skills challenge: Using Azure OpenAI Service

- Generative AI for Beginners

- Fundamentals of Generative AI

- Retrieval Augmented Generation in Azure AI Search

- Workshop - Create your own ChatGPT with Retrieval-Augmented-Generation

- OpenAI documentation

- Azure AI Search

- Azure OpenAI Service

- Comparing Azure OpenAI and OpenAI

- Azure Communication Services Chat SDK

- AI-in-a-Box

- Join the Azure AI Discord!

Hack submissions are due September 16th, 11:59 PM PST.

Submit your project here when it's ready: 🚀 Project Submission

Check out this video for step by step project submission guidance: Project Submission Video

Projects will be evaluated by a panel of judges, including Microsoft engineers, product managers, and developer advocates. Judging criteria will include innovation, impact, technical usability, and alignment with the corresponding hackathon category.

Each winning team in the categories below will receive a cash prize of $500. 💸

- Best overall

- Best in JavaScript/TypeScript

- Best in Java

- Best in .NET

- Best in Python

- Best use of AI Studio

- Best use of AI Search

- Best use of PostgreSQL

- Best use of Cosmos DB

- Best use of Azure SQL

All hackathon participants who submit a project will receive a digital badge (sometime in October).

Alternative AI tools for RAG_Hack

Similar Open Source Tools

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

visionOS-examples

visionOS-examples is a repository containing accelerators for Spatial Computing. It includes examples such as Local Large Language Model, Chat Apple Vision Pro, WebSockets, Anchor To Head, Hand Tracking, Battery Life, Countdown, Plane Detection, Timer Vision, and PencilKit for visionOS. The repository showcases various functionalities and features for Apple Vision Pro, offering tools for developers to enhance their visionOS apps with capabilities like hand tracking, plane detection, and real-time cryptocurrency prices.

AI-For-Beginners

AI-For-Beginners is a comprehensive 12-week, 24-lesson curriculum designed by experts at Microsoft to introduce beginners to the world of Artificial Intelligence (AI). The curriculum covers various topics such as Symbolic AI, Neural Networks, Computer Vision, Natural Language Processing, Genetic Algorithms, and Multi-Agent Systems. It includes hands-on lessons, quizzes, and labs using popular frameworks like TensorFlow and PyTorch. The focus is on providing a foundational understanding of AI concepts and principles, making it an ideal starting point for individuals interested in AI.

awesome-llm-webapps

This repository is a curated list of open-source, actively maintained web applications that leverage large language models (LLMs) for various use cases, including chatbots, natural language interfaces, assistants, and question answering systems. The projects are evaluated based on key criteria such as licensing, maintenance status, complexity, and features, to help users select the most suitable starting point for their LLM-based applications. The repository welcomes contributions and encourages users to submit projects that meet the criteria or suggest improvements to the existing list.

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Large Language Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and MacOS are supported). To run OpenKore, you need to download and extract it or clone the repository using Git. Configure OpenKore according to the documentation and run openkore.pl to start. The tool provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

jailbreak_llms

This is the official repository for the ACM CCS 2024 paper 'Do Anything Now': Characterizing and Evaluating In-The-Wild Jailbreak Prompts on Large Language Models. The project employs a new framework called JailbreakHub to conduct the first measurement study on jailbreak prompts in the wild, collecting 15,140 prompts from December 2022 to December 2023, including 1,405 jailbreak prompts. The dataset serves as the largest collection of in-the-wild jailbreak prompts. The repository contains examples of harmful language and is intended for research purposes only.

CameraChessWeb

Camera Chess Web is a tool that allows you to use your phone camera to replace chess eBoards. With Camera Chess Web, you can broadcast your game to Lichess, play a game on Lichess, or digitize a chess game from a video or live stream. Camera Chess Web is free to download on Google Play.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons, which explain a Generative AI concept, or "Build" lessons, which explain a concept and provide code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or TypeScript is helpful - _For absolute beginners, check out these Python and TypeScript courses._
* A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment.

Don't forget to star (🌟) this repo to find it easier later.

## 🧠 Ready to Deploy?

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

## 🗣️ Meet Other Learners, Get Support

Join our official AI Discord server to meet and network with other learners taking this course and get support.

## 🚀 Building a Startup?

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

## 🙏 Want to help?

Do you have suggestions or found spelling or code errors? Raise an issue or create a pull request.

## 📂 Each lesson includes:

* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

## 🗃️ Lessons

| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to Set Up Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

awesome-mobile-llm

Awesome Mobile LLMs is a curated list of Large Language Models (LLMs) and related studies focused on mobile and embedded hardware. The repository includes information on various LLM models, deployment frameworks, benchmarking efforts, applications, multimodal LLMs, surveys on efficient LLMs, training LLMs on device, mobile-related use-cases, industry announcements, and related repositories. It aims to be a valuable resource for researchers, engineers, and practitioners interested in mobile LLMs.

data-prep-kit

Data Prep Kit accelerates unstructured data preparation for LLM app developers. It allows developers to cleanse, transform, and enrich unstructured data for pre-training, fine-tuning, instruct-tuning LLMs, or building RAG applications. The kit provides modules for Python, Ray, and Spark runtimes, supporting Natural Language and Code data modalities. It offers a framework for custom transforms and uses Kubeflow Pipelines for workflow automation. Users can install the kit via PyPi and access a variety of transforms for data processing pipelines.

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can:

* **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse.
* **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse.
* **Test:** Track and test app behaviour before deploying a new version, test expected input and output pairs and benchmark performance before deploying, and track versions and releases in your application.

Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

tt-metal

TT-NN is a Python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

unstract

Unstract is a no-code platform that enables users to launch APIs and ETL pipelines to structure unstructured documents. With Unstract, users can go beyond co-pilots by enabling machine-to-machine automation. Unstract's Prompt Studio provides a simple, no-code approach to creating prompts for LLMs, vector databases, embedding models, and text extractors. Users can then configure Prompt Studio projects as API deployments or ETL pipelines to automate critical business processes that involve complex documents. Unstract supports a wide range of LLM providers, vector databases, embeddings, text extractors, ETL sources, and ETL destinations, providing users with the flexibility to choose the best tools for their needs.

For similar tasks

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include:

1. Workflow: Build and test powerful AI workflows on a visual canvas.
2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions.
3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features.
4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval.
5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools.
6. LLMOps: Monitor and analyze application logs and performance over time.
7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

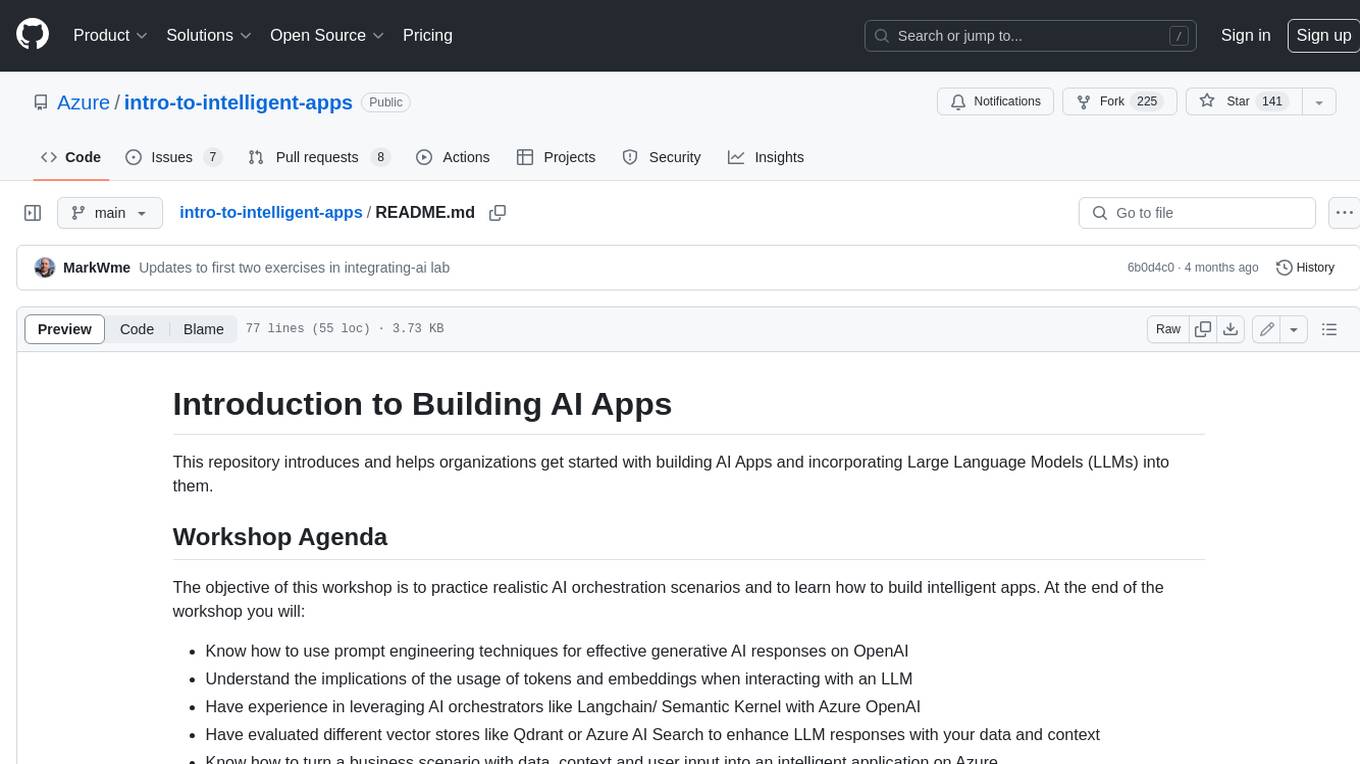

intro-to-intelligent-apps

This repository introduces and helps organizations get started with building AI Apps and incorporating Large Language Models (LLMs) into them. The workshop covers topics such as prompt engineering, AI orchestration, and deploying AI apps. Participants will learn how to use Azure OpenAI, LangChain/Semantic Kernel, Qdrant, and Azure AI Search to build intelligent applications.

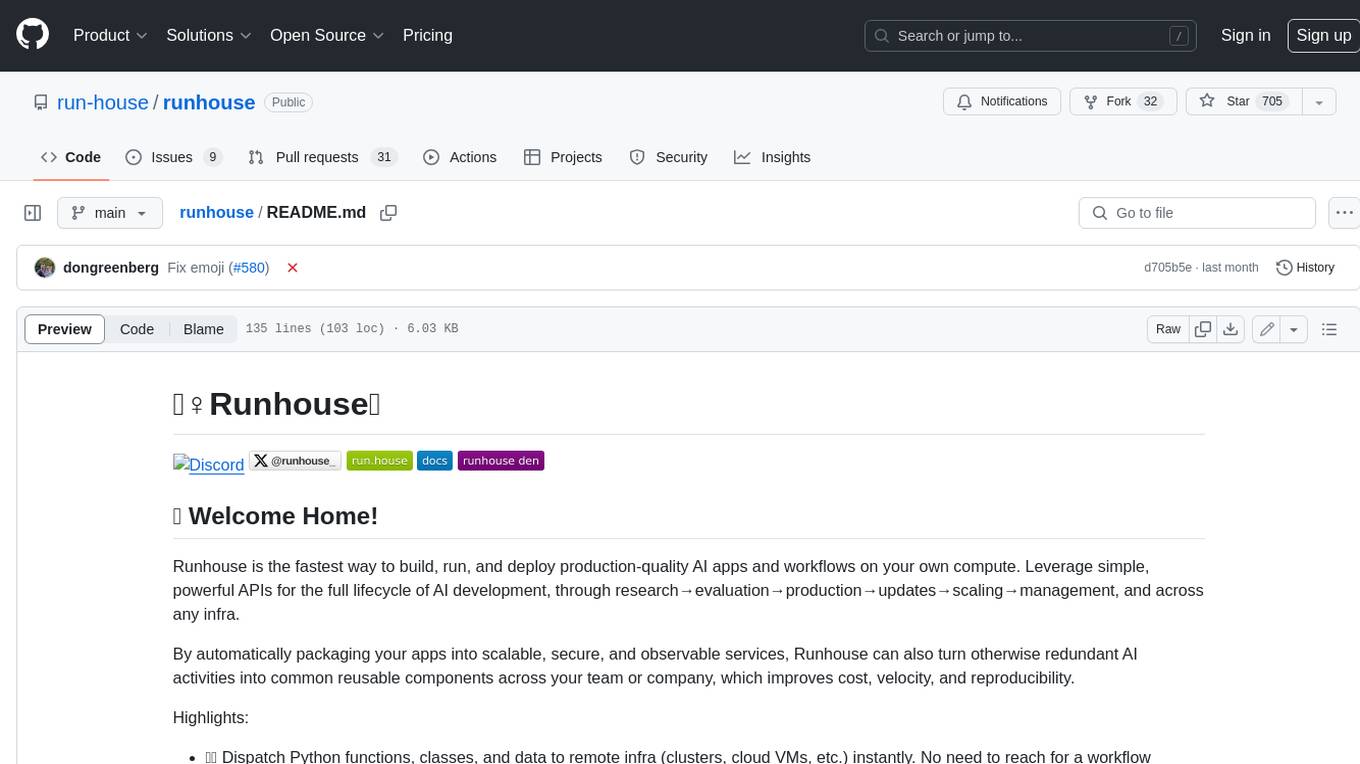

runhouse

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

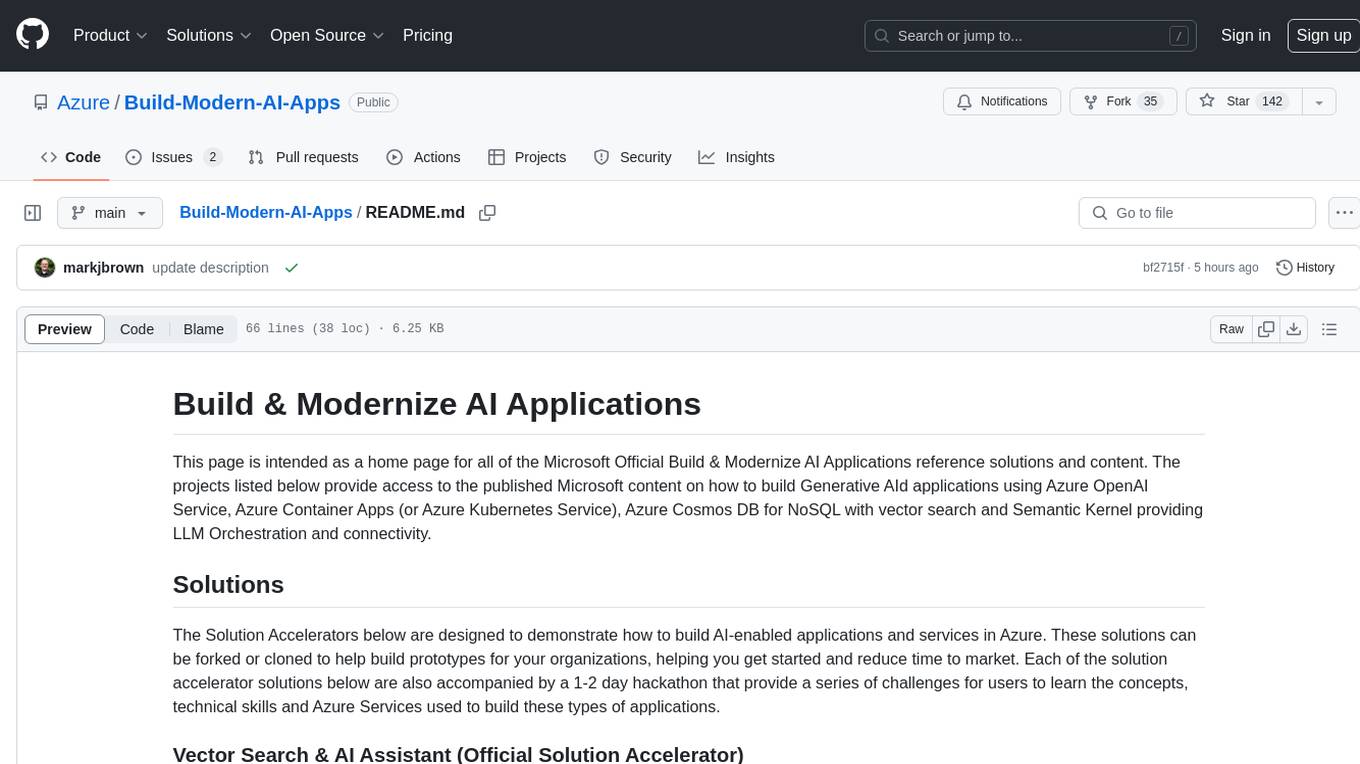

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.