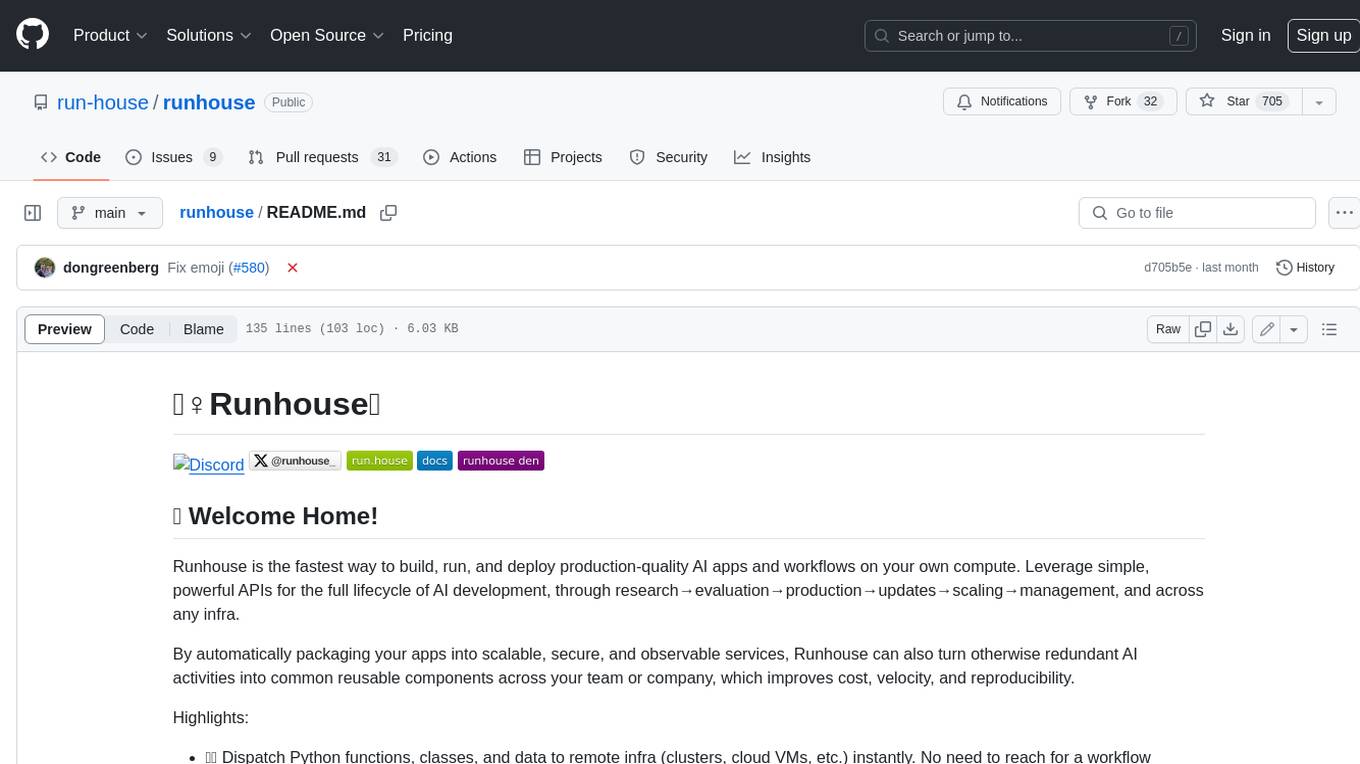

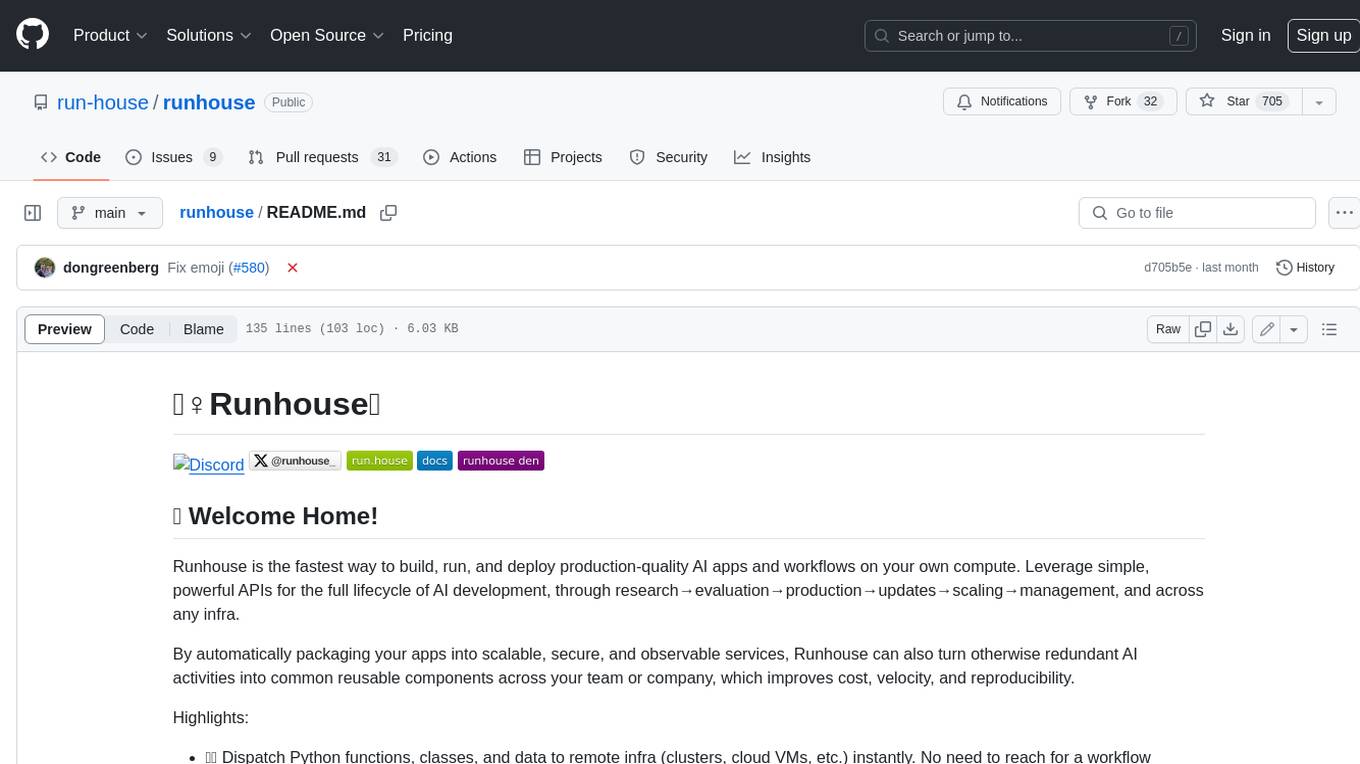

runhouse

The Runhouse Python client. Distribute and run AI workloads magically in Python, like PyTorch for ML infra.

Stars: 1008

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

README:

The Runhouse Python client provides a convenient way to access and effortlessly dispatch distributed training workloads to a pool of compute inside your own cloud.

This library requires Python 3.8 – 3.12.

Install the package with pip:

pip install runhouse

For a detailed walkthrough and usage guide, see our docs and examples.

For any question or support request you may have, please email us ([email protected]) or message us on Discord.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for runhouse

Similar Open Source Tools

runhouse

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

hyper-mcp

hyper-mcp is a fast and secure MCP server that enables adding AI capabilities to applications through WebAssembly plugins. It supports writing plugins in various languages, distributing them via standard OCI registries, and running them in resource-constrained environments. The tool offers sandboxing with WASM for limiting access, cross-platform compatibility, and deployment flexibility. Security features include sandboxed plugins, memory-safe execution, secure plugin distribution, and fine-grained access control. Users can configure the tool for global or project-specific use, start the server with different transport options, and utilize available plugins for tasks like time calculations, QR code generation, hash generation, IP retrieval, and webpage fetching.

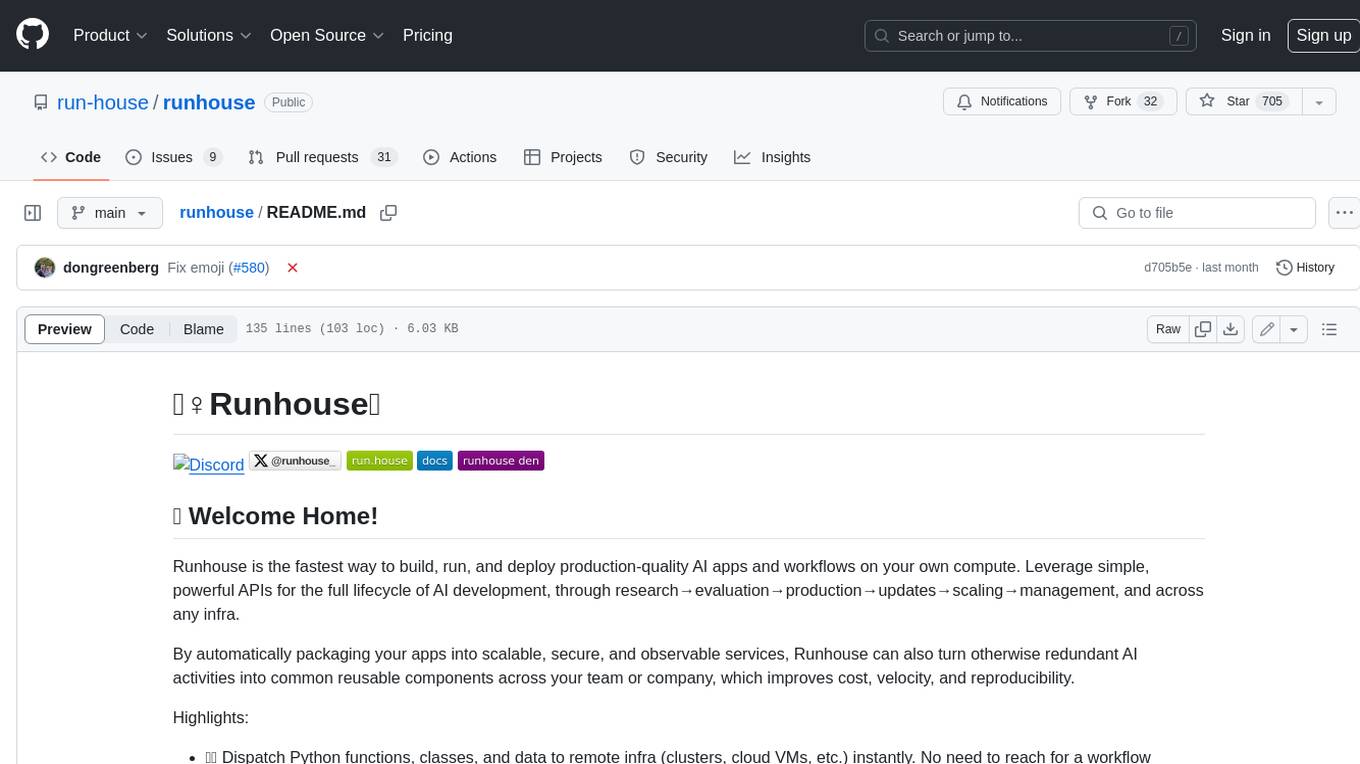

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

azure-ai-docs

Azure AI Docs is a repository that provides detailed documentation and resources for developers looking to leverage Microsoft's AI services on the Azure platform. The repository covers a wide range of topics including machine learning, natural language processing, computer vision, and more. Developers can find tutorials, code samples, best practices, and guidelines to help them integrate AI capabilities into their applications seamlessly.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

parlant

Parlant is a structured approach to building and guiding customer-facing AI agents. It allows developers to create and manage robust AI agents, providing specific feedback on agent behavior and helping understand user intentions better. With features like guidelines, glossary, coherence checks, dynamic context, and guided tool use, Parlant offers control over agent responses and behavior. Developer-friendly aspects include instant changes, Git integration, clean architecture, and type safety. It enables confident deployment with scalability, effective debugging, and validation before deployment. Parlant works with major LLM providers and offers client SDKs for Python and TypeScript. The tool facilitates natural customer interactions through asynchronous communication and provides a chat UI for testing new behaviors before deployment.

jadx-mcp-server

JADX-MCP-SERVER is a standalone Python server that interacts with JADX-AI-MCP Plugin to analyze Android APKs using LLMs like Claude. It enables live communication with decompiled Android app context, uncovering vulnerabilities, parsing manifests, and facilitating reverse engineering effortlessly. The tool combines JADX-AI-MCP and JADX MCP SERVER to provide real-time reverse engineering support with LLMs, offering features like quick analysis, vulnerability detection, AI code modification, static analysis, and reverse engineering helpers. It supports various MCP tools for fetching class information, text, methods, fields, smali code, AndroidManifest.xml content, strings.xml file, resource files, and more. Tested on Claude Desktop, it aims to support other LLMs in the future, enhancing Android reverse engineering and APK modification tools connectivity for easier reverse engineering purely from vibes.

aiounifi

Aiounifi is a Python library that provides a simple interface for interacting with the Unifi Controller API. It allows users to easily manage their Unifi network devices, such as access points, switches, and gateways, through automated scripts or applications. With Aiounifi, users can retrieve device information, perform configuration changes, monitor network performance, and more, all through a convenient and efficient API wrapper. This library simplifies the process of integrating Unifi network management into custom solutions, making it ideal for network administrators, developers, and enthusiasts looking to automate and streamline their network operations.

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

agents

Cloudflare Agents is a framework for building intelligent, stateful agents that persist, think, and evolve at the edge of the network. It allows for maintaining persistent state and memory, real-time communication, processing and learning from interactions, autonomous operation at global scale, and hibernating when idle. The project is actively evolving with focus on core agent framework, WebSocket communication, HTTP endpoints, React integration, and basic AI chat capabilities. Future developments include advanced memory systems, WebRTC for audio/video, email integration, evaluation framework, enhanced observability, and self-hosting guide.

gonzo

Gonzo is a powerful, real-time log analysis terminal UI tool inspired by k9s. It allows users to analyze log streams with beautiful charts, AI-powered insights, and advanced filtering directly from the terminal. The tool provides features like live streaming log processing, OTLP support, interactive dashboard with real-time charts, advanced filtering options including regex support, and AI-powered insights such as pattern detection, anomaly analysis, and root cause suggestions. Users can also configure AI models from providers like OpenAI, LM Studio, and Ollama for intelligent log analysis. Gonzo is built with Bubble Tea, Lipgloss, Cobra, Viper, and OpenTelemetry, following a clean architecture with separate modules for TUI, log analysis, frequency tracking, OTLP handling, and AI integration.

ai21-python

The AI21 Labs Python SDK is a comprehensive tool for interacting with the AI21 API. It provides functionalities for chat completions, conversational RAG, token counting, error handling, and support for various cloud providers like AWS, Azure, and Vertex. The SDK offers both synchronous and asynchronous usage, along with detailed examples and documentation. Users can quickly get started with the SDK to leverage AI21's powerful models for various natural language processing tasks.

nncase

nncase is a neural network compiler for AI accelerators that supports multiple inputs and outputs, static memory allocation, operators fusion and optimizations, float and quantized uint8 inference, post quantization from float model with calibration dataset, and flat model with zero copy loading. It can be installed via pip and supports TFLite, Caffe, and ONNX ops. Users can compile nncase from source using Ninja or make. The tool is suitable for tasks like image classification, object detection, image segmentation, pose estimation, and more.

crystal

Crystal is an Electron desktop application that allows users to run, inspect, and test multiple Claude Code instances simultaneously using git worktrees. It provides features such as parallel sessions, git worktree isolation, session persistence, git integration, change tracking, notifications, and the ability to run scripts. Crystal simplifies the workflow by creating isolated sessions, iterating with Claude Code, reviewing diff changes, and squashing commits for a clean history. It is a tool designed for collaborative AI notebook editing and testing.

For similar tasks

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

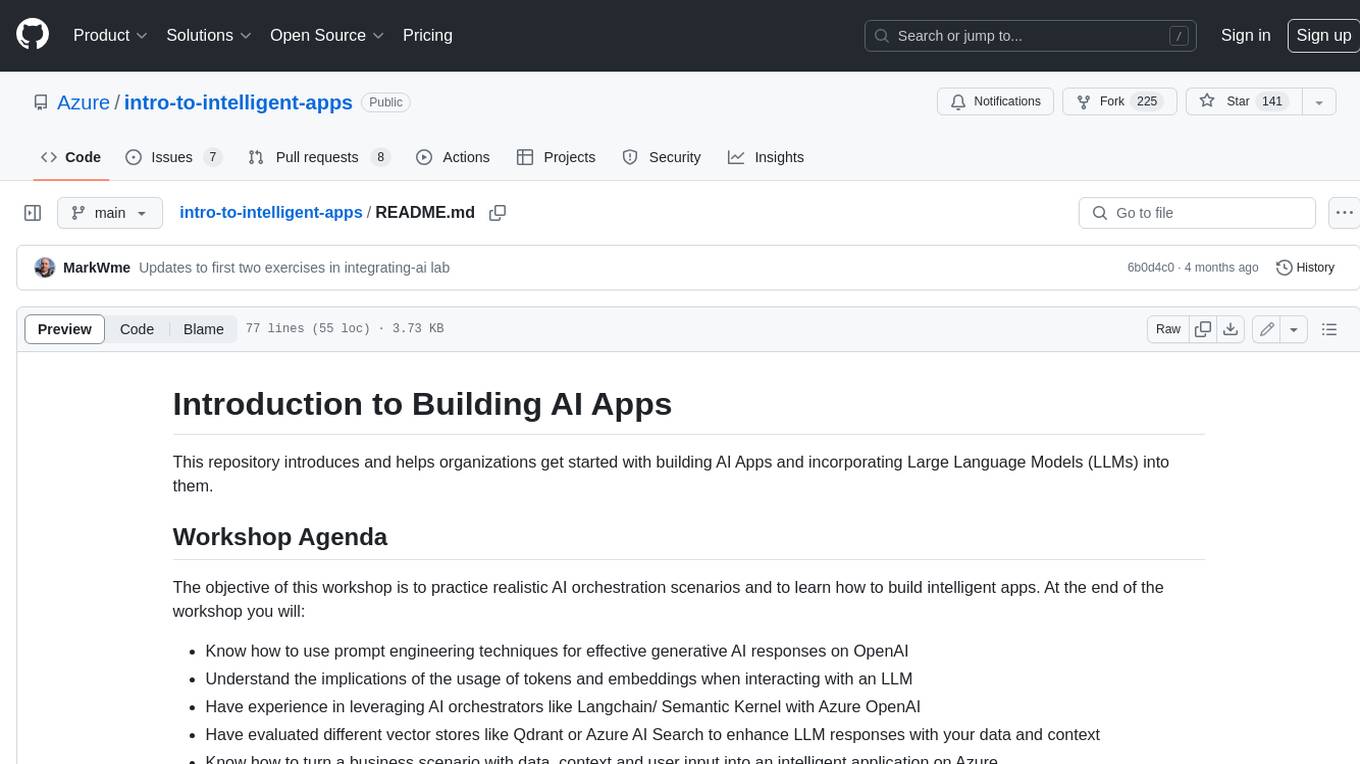

intro-to-intelligent-apps

This repository introduces and helps organizations get started with building AI Apps and incorporating Large Language Models (LLMs) into them. The workshop covers topics such as prompt engineering, AI orchestration, and deploying AI apps. Participants will learn how to use Azure OpenAI, Langchain/ Semantic Kernel, Qdrant, and Azure AI Search to build intelligent applications.

runhouse

Runhouse is a tool that allows you to build, run, and deploy production-quality AI apps and workflows on your own compute. It provides simple, powerful APIs for the full lifecycle of AI development, from research to evaluation to production to updates to scaling to management, and across any infra. By automatically packaging your apps into scalable, secure, and observable services, Runhouse can also turn otherwise redundant AI activities into common reusable components across your team or company, which improves cost, velocity, and reproducibility.

Awesome-LLM-RAG-Application

Awesome-LLM-RAG-Application is a repository that provides resources and information about applications based on Large Language Models (LLM) with Retrieval-Augmented Generation (RAG) pattern. It includes a survey paper, GitHub repo, and guides on advanced RAG techniques. The repository covers various aspects of RAG, including academic papers, evaluation benchmarks, downstream tasks, tools, and technologies. It also explores different frameworks, preprocessing tools, routing mechanisms, evaluation frameworks, embeddings, security guardrails, prompting tools, SQL enhancements, LLM deployment, observability tools, and more. The repository aims to offer comprehensive knowledge on RAG for readers interested in exploring and implementing LLM-based systems and products.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

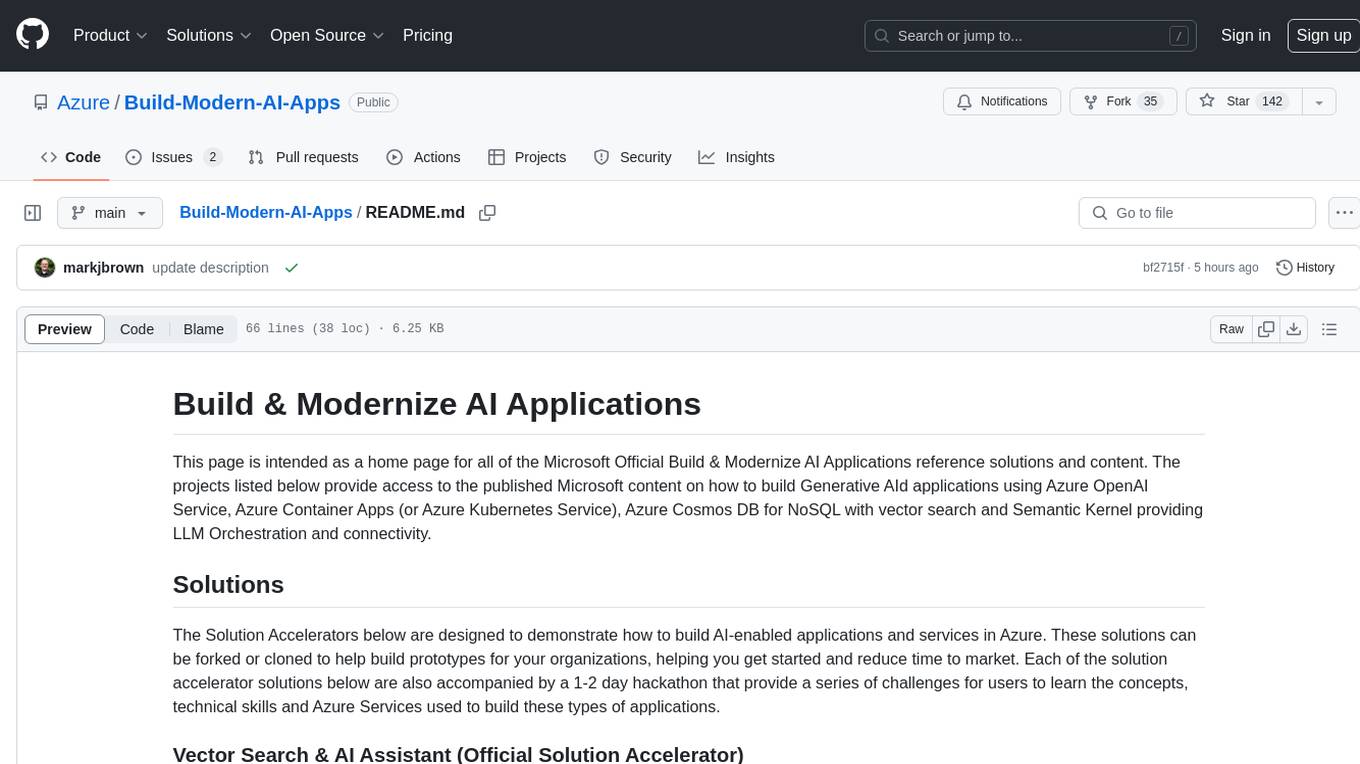

Build-Modern-AI-Apps

This repository serves as a hub for Microsoft Official Build & Modernize AI Applications reference solutions and content. It provides access to projects demonstrating how to build Generative AI applications using Azure services like Azure OpenAI, Azure Container Apps, Azure Kubernetes, and Azure Cosmos DB. The solutions include Vector Search & AI Assistant, Real-Time Payment and Transaction Processing, and Medical Claims Processing. Additionally, there are workshops like the Intelligent App Workshop for Microsoft Copilot Stack, focusing on infusing intelligence into traditional software systems using foundation models and design thinking.

RAG_Hack

RAGHack is a hackathon focused on building AI applications using the power of RAG (Retrieval Augmented Generation). RAG combines large language models with search engine knowledge to provide contextually relevant answers. Participants can learn to build RAG apps on Azure AI using various languages and retrievers, explore frameworks like LangChain and Semantic Kernel, and leverage technologies such as agents and vision models. The hackathon features live streams, hack submissions, and prizes for innovative projects.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.