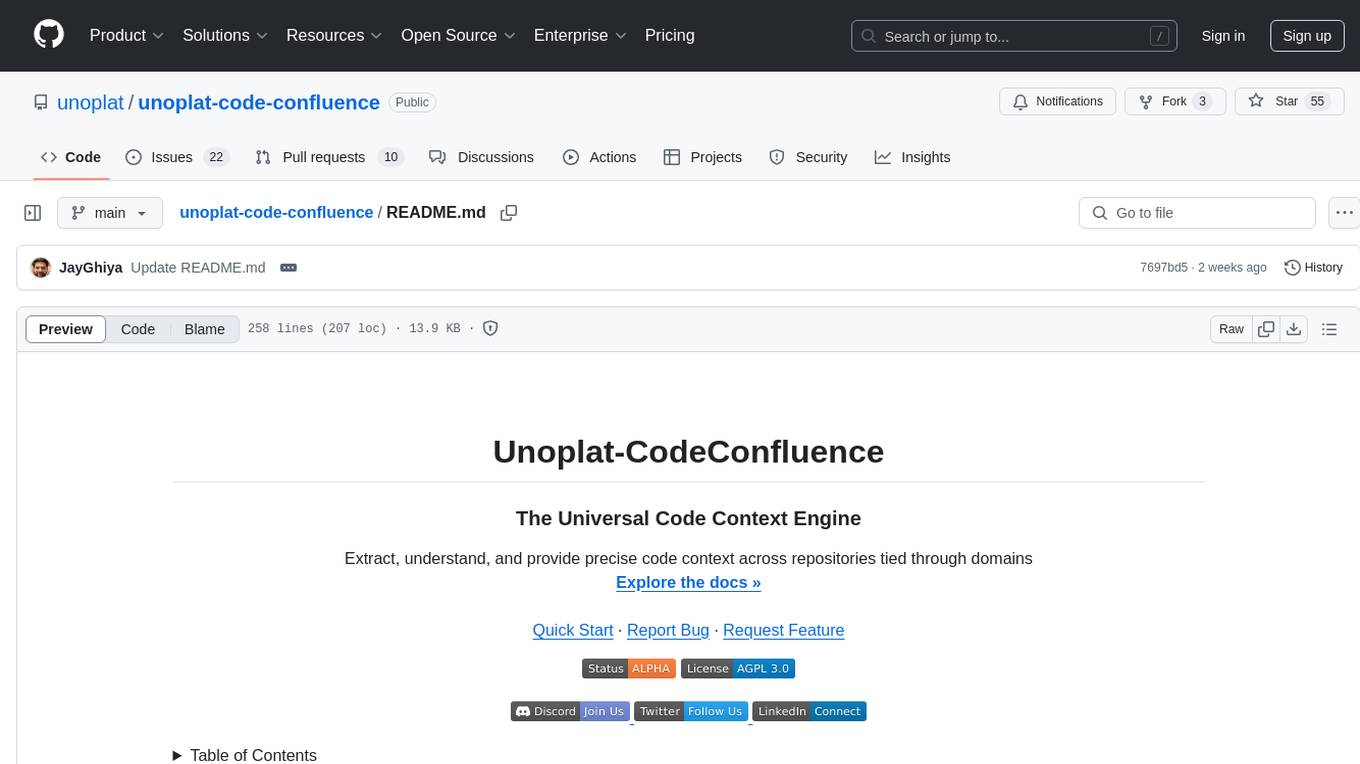

unoplat-code-confluence

Always keep your codebases ready for Agents. Improve any coding workflow by atleast 2x by maintaing a live, pluggable context layer per repo that creates and maintains Agents.md

Stars: 76

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

README:

Always keep your agents ready with all the context required per repository.

Explore the docs »

Quick Start

·

Report Bug

·

Request Feature

Table of Contents

Like this? Star the repo ⭐

·

Watch on YouTube

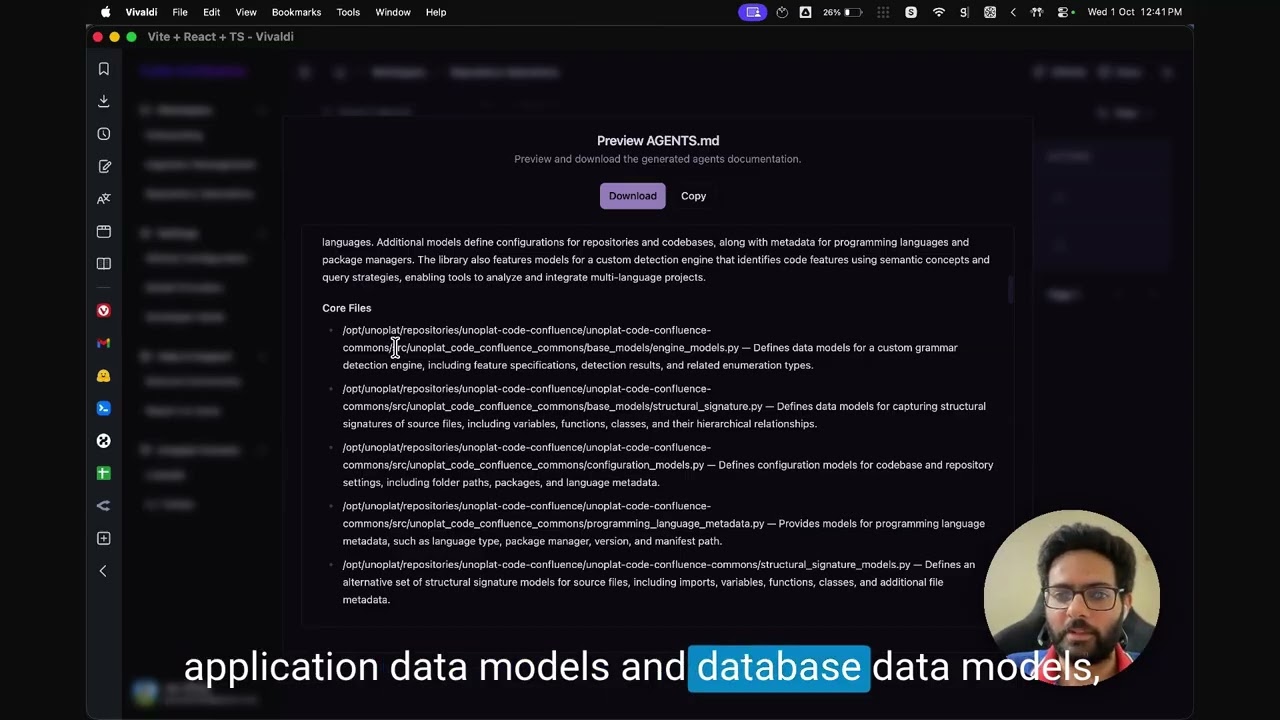

What's in the demo: automatic AGENTS.md generation per repo and an org index that gives any coding agent a precise source of truth.

AI coding agents excel at greenfield projects (new codebases built from scratch) but struggle with brownfield codebases (mature, production systems with existing code).

Why? They burn most of their context window on exploration—searching files, tracing flows, connecting dots—leaving little capacity for actual implementation. By the time they're ready to code, they've hit the "dumb zone" where performance degrades sharply. And since they lack long-term memory, this cycle repeats with every conversation.

Multi-repo complexity makes it worse. When code is split across connected repositories, the agent exhausts its context just mapping dependencies between codebases—often before writing a single line.

Internal dependencies present another failure mode. The agent has no onboarding to proprietary systems, so it hallucinates usage patterns. Worse, when internal documentation has drifted from actual implementation, the agent trusts those "lies" and produces code that doesn't work.

The end result: slop code requiring heavy rework.

Unoplat Code Confluence is the context engine for application development, organizing precise, up-to-date knowledge of your data models, entry points, endpoints, and more—so coding agents can deliver and maintain features 2–3x faster with higher quality.

Auto-generates machine-readable AGENTS.md files per repo to give coding agents a precise source of truth:

- Engineering Workflow — Canonical install/build/dev/test/lint/type_check commands plus key config files and their responsibilities

- Business Logic — Core application logic, domain entities, and database entities

- Entry Points & Interfaces — Main entry points, API endpoints, and external interfaces

- External Dependencies — Roles and responsibilities of external libraries

- Extensible Language Support: Modular Tree-sitter based grammar extraction delivers consistent, accurate code context across all programming languages

- Extensible Framework-Aware Parsing: Specialized grammar engines recognize framework and library-specific patterns based on project dependencies

- All important metadata about application—dependencies, inbound/outbound interfaces, domain models, and data store models—are identified and their relationships preserved

- Scalable, auditable and reliable processing powered by workflow orchestrator

Ready to enhance your development workflow?

Check out our Quick Start Guide.

We're actively developing Unoplat Code Confluence. Currently supports Python and TypeScript codebases.

For detailed roadmap, language support status, and planned features, see our Product Roadmap.

|

Jay Ghiya

Contact: [email protected] |

Book a call with me - Cal Link

Unoplat Code Confluence is in alpha. We’re building for our own daily use first, prioritizing stability and bug fixes. We’re collecting feedback now and will act on it once the core is solid. Early adopters welcome. Expect rapid changes and rough edges.

- Discord: Join our community channel

- GitHub Issues: Create an issue for bug reports or feature requests

- GitHub Discussions: Start a discussion for broader conversations

Your feedback is invaluable as we work toward production readiness and helps us prioritize our roadmap to better serve the developer community.

Unoplat-CodeConfluence is licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) + COMMONS CLAUSE.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for unoplat-code-confluence

Similar Open Source Tools

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

navigator

Navigator is a versatile tool for navigating through complex codebases efficiently. It provides a user-friendly interface to explore code files, search for specific functions or variables, and visualize code dependencies. With Navigator, developers can easily understand the structure of a project and quickly locate relevant code snippets. The tool supports various programming languages and offers customizable settings to enhance the coding experience. Whether you are working on a small project or a large codebase, Navigator can help you streamline your development process and improve code comprehension.

traceroot

TraceRoot is a tool that helps engineers debug production issues 10× faster using AI-powered analysis of traces, logs, and code context. It accelerates the debugging process with AI-powered insights, integrates seamlessly into the development workflow, provides real-time trace and log analysis, code context understanding, and intelligent assistance. Features include ease of use, LLM flexibility, distributed services, AI debugging interface, and integration support. Users can get started with TraceRoot Cloud for a 7-day trial or self-host the tool. SDKs are available for Python and JavaScript/TypeScript.

p1

p1 is a code completion engine based on Large Language Models (LLM) that operates at the edge. It provides intelligent code suggestions and completions to enhance the coding experience. The tool is designed to assist developers in writing code more efficiently by predicting and offering context-aware completions based on the code being written. With implementations available for popular code editors like Vim and Visual Studio Code, p1 aims to improve productivity and streamline the coding process for software developers.

openspg

OpenSPG is a knowledge graph engine developed by Ant Group in collaboration with OpenKG, based on the SPG (Semantic-enhanced Programmable Graph) framework. It provides explicit semantic representations, logical rule definitions, operator frameworks (construction, inference), and other capabilities for domain knowledge graphs. OpenSPG supports pluggable adaptation of basic engines and algorithmic services by various vendors to build customized solutions.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

context-lens

Context Lens is a local proxy tool that captures LLM API calls from coding tools to provide a breakdown of context composition, including system prompts, tool definitions, conversation history, tool results, and thinking blocks. It helps developers understand why coding sessions may be resource-intensive without requiring any code changes. The tool works with various coding tools like Claude Code, Codex, Gemini CLI, Aider, and Pi, interacting with OpenAI, Anthropic, and Google APIs. Context Lens offers a visual treemap breakdown, cost tracking, conversation threading, agent breakdown, timeline visualization, context diff analysis, findings flags, auto-detection of coding tools, LHAR export, state persistence, and streaming support, all running locally for privacy and control.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

GraphLLM

GraphLLM is a graph-based framework designed to process data using LLMs. It offers a set of tools including a web scraper, PDF parser, YouTube subtitles downloader, Python sandbox, and TTS engine. The framework provides a GUI for building and debugging graphs with advanced features like loops, conditionals, parallel execution, streaming of results, hierarchical graphs, external tool integration, and dynamic scheduling. GraphLLM is a low-level framework that gives users full control over the raw prompt and output of models, with a steeper learning curve. It is tested with llama70b and qwen 32b, under heavy development with breaking changes expected.

VectorCode

VectorCode is a code repository indexing tool that helps users write better prompts for coding LLMs by providing information about the code repository being worked on. It includes a neovim plugin and supports multiple embedding engines. The tool enhances completion results by providing project context and improves understanding of close-source or cutting edge projects.

Fast-dLLM

Fast-DLLM is a diffusion-based Large Language Model (LLM) inference acceleration framework that supports efficient inference for models like Dream and LLaDA. It offers fast inference support, multiple optimization strategies, code generation, evaluation capabilities, and an interactive chat interface. Key features include Key-Value Cache for Block-Wise Decoding, Confidence-Aware Parallel Decoding, and overall performance improvements. The project structure includes directories for Dream and LLaDA model-related code, with installation and usage instructions provided for using the LLaDA and Dream models.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

ultracontext

UltraContext is a context API for AI agents that simplifies controlling what agents see by allowing users to replace messages, compact or offload context, replay decisions, and roll back mistakes with a single API call. It provides versioned context out of the box with full history and zero complexity. The tool aims to address the issue of context rot in large language models by providing a simple API with automatic versioning, time-travel capabilities, schema-free data storage, framework-agnostic compatibility, and fast performance. UltraContext is designed to streamline the process of managing context for AI agents, enabling users to focus on solving interesting problems rather than spending time gluing context together.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

generator

ctx is a tool designed to automatically generate organized context files from code files, GitHub repositories, Git commits, web pages, and plain text. It aims to efficiently provide necessary context to AI language models like ChatGPT and Claude, enabling users to streamline code refactoring, multiple iteration development, documentation generation, and seamless AI integration. With ctx, users can create structured markdown documents, save context files, and serve context through an MCP server for real-time assistance. The tool simplifies the process of sharing project information with AI assistants, making AI conversations smarter and easier.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

For similar tasks

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

ModelGenerator

AIDO.ModelGenerator is a software stack designed for developing AI-driven Digital Organisms. It enables researchers to adapt pretrained models and generate finetuned models for various tasks. The framework supports rapid prototyping with experiments like applying pre-trained models to new data, developing finetuning tasks, benchmarking models, and testing new architectures. Built on PyTorch, HuggingFace, and Lightning, it facilitates seamless integration with these ecosystems. The tool caters to cross-disciplinary teams in ML & Bio, offering installation, usage, tutorials, and API reference in its documentation.

gemini-cli

Gemini CLI is an open-source AI agent that provides lightweight access to Gemini, offering powerful capabilities like code understanding, generation, automation, integration, and advanced features. It is designed for developers who prefer working in the command line and offers extensibility through MCP support. The tool integrates directly into GitHub workflows and offers various authentication options for individual developers, enterprise teams, and production workloads. With features like code querying, editing, app generation, debugging, and GitHub integration, Gemini CLI aims to streamline development workflows and enhance productivity.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

CodeTF

CodeTF is a Python transformer-based library for code large language models (Code LLMs) and code intelligence. It provides an interface for training and inferencing on tasks like code summarization, translation, and generation. The library offers utilities for code manipulation across various languages, including easy extraction of code attributes. Using tree-sitter as its core AST parser, CodeTF enables parsing of function names, comments, and variable names. It supports fast model serving, fine-tuning of LLMs, various code intelligence tasks, preprocessed datasets, model evaluation, pretrained and fine-tuned models, and utilities to manipulate source code. CodeTF aims to facilitate the integration of state-of-the-art Code LLMs into real-world applications, ensuring a user-friendly environment for code intelligence tasks.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.