awesome-generative-ai-data-scientist

A curated list of 100+ resources for building and deploying generative AI, with a specific focus on helping you become a Generative AI Data Scientist with LLMs.

Stars: 425



The Future is using AI and ML Together

A curated list of 100+ resources to help you become a Generative AI Data Scientist. This repository includes resources on building GenAI Data Science applications with Large Language Models (LLMs) and deploying LLMs and Generative AI/ML with Cloud-based solutions.

Please ⭐ us on GitHub (it takes 2 seconds and means a lot).

Contributions are welcome! Please submit a pull request or open an issue if you have suggestions for new resources or improvements to existing ones. Thanks for your support!

- Awesome Generative AI Data Scientist

- Awesome Real-World AI Use Cases

- Python Libraries

- Curated Python AI, Data Science, and ML Compilations

- Data Science And AI Agents

- Coding Agents

- Deep Research Agents

- AI Frameworks (Build Your Own)

- AI Frameworks (Drag and Drop)

- LLM Providers

- Open Source LLM Models

- LangChain Ecosystem

- LangGraph Extensions

- Hugging Face Ecosystem

- Vector Databases (RAG)

- Pretraining

- Fine-tuning

- Testing and Monitoring (Observability)

- Document Parsing

- Web Parsing (HTML) and Web Crawling

- Agents and Tools (Build Your Own)

- Agents and Tools (Prebuilt)

- LLM Memory

- LLMOps

- Code Sandbox (Security)

- Browser Control Agents

- Prompt Improvement

- Other

- R Libraries

- LLM Deployment (Cloud Services)

- Examples and Cookbooks

- Newsletters

- Courses and Training

Awesome Real-World AI Use Cases

| Project | Description | Links |

|---|---|---|

| 🚀🚀 AI-Powered Data Science Team In Python | An AI-powered data science team of copilots that uses agents to help you perform common data science tasks 10X faster. | Apps | Examples | GitHub |

| 🚀 Awesome LLM Apps | LLM RAG AI Apps with Step-By-Step Tutorials. | GitHub |

| AI Hedge Fund | Proof of concept for an AI-powered hedge fund. | GitHub |

| AI Financial Agent | A financial agent for investment research. | GitHub |

| Structured Report Generation (LangGraph) | How to build an agent that can orchestrate the end-to-end process of report planning, web research, and writing. Produces reports of varying and easily configurable formats. | Video | Blog | Code |

| Uber QueryGPT | Uber's QueryGPT uses large language models (LLMs), vector databases, and similarity search to generate complex queries from natural-language questions in English, enhancing productivity for engineers, operations managers, and data scientists. | Blog |

| Nir Diamant GenAI Agents Hub | Tutorials and implementations for various Generative AI Agent techniques, from basic to advanced. A comprehensive guide for building intelligent, interactive AI systems. | GitHub |

| AI Engineering Hub | Real-world AI agent applications, LLM and RAG tutorials, with examples to implement. | GitHub |

| StockChat | An open-source alternative to Perplexity Finance. | GitHub |

Curated Python AI, Data Science, and ML Compilations

| Project | Description | Links |

|---|---|---|

| Awesome Generative AI Data Scientist | A curated list of 100+ resources for building and deploying generative AI specifically focusing on helping you become a Generative AI Data Scientist | GitHub |

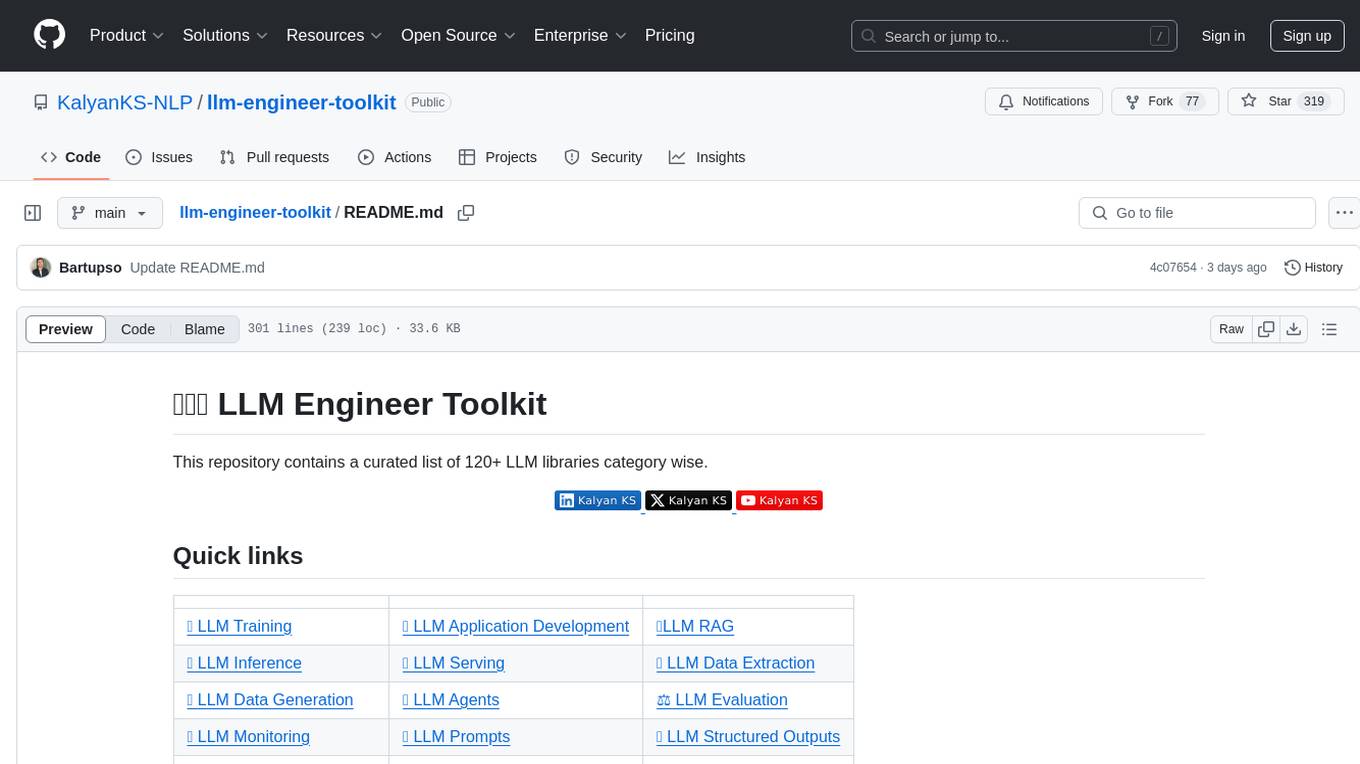

| LLM Engineer Toolkit | A curated list of 120+ LLM libraries organized by category. | GitHub |

| 🚀🚀 Best of ML Python | A ranked list of awesome machine learning Python libraries. Updated weekly. | GitHub |

| 🚀🚀 Awesome Python Data Science | Probably the best curated list of data science software in Python. | GitHub |

| Awesome Production Machine Learning | A curated list of awesome open source libraries to deploy, monitor, version and scale your machine learning | GitHub |

| Awesome AI Agents | A list of autonomous AI agents. | GitHub |

Data Science And AI Agents

| Project | Description | Links |

|---|---|---|

| 🚀🚀 AI Data Science Team In Python | AI Agents to help you perform common data science tasks 10X faster. | Apps | Examples | GitHub |

| 🚀 PandasAI | Open Source AI Agents for Data Analysis. | Documentation | GitHub |

| Microsoft Data Formulator | Transform data and create rich visualizations iteratively with AI 🪄. | Paper | GitHub |

| Jupyter Agent | Let an LLM agent write and execute code inside a notebook. | Hugging Face |

| Jupyter AI | A generative AI extension for JupyterLab. | Documentation | GitHub |

| WrenAI | Open-source GenBI AI agent. Text-to-SQL made easy! | Documentation | GitHub |

| Google GenAI Toolbox for Databases | Gen AI Toolbox for Databases is an open-source server that makes it easier to build Gen AI tools for interacting with databases. | Blog | Documentation | GitHub |

| Vanna AI | The fastest way to get actionable insights from your SQL database just by asking questions. | Documentation | GitHub |
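
Text-to-SQL tools such as WrenAI and Vanna AI turn a natural-language question into SQL and run it against a database. The sketch below covers only the execution side and is not taken from either tool: it assumes the SQL string has already been produced by an LLM (the `generated_sql` value is a hard-coded stand-in), and it runs that SQL through a hypothetical `run_readonly_query` guard that accepts a single SELECT statement before executing it with Python's built-in `sqlite3`.

```python
import sqlite3


def run_readonly_query(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute LLM-generated SQL only if it is a single SELECT statement.

    Hypothetical, coarse guard for illustration; not a complete SQL firewall.
    """
    statement = sql.strip().rstrip(";").strip()
    # Reject multiple statements such as "SELECT 1; DROP TABLE sales".
    if ";" in statement:
        raise ValueError("only a single statement is allowed")
    # Accept only statements that start with SELECT (case-insensitive).
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(statement).fetchall()


# Build a small in-memory table to query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 100.0), ("west", 250.0), ("east", 50.0)],
)

# Stand-in for SQL an LLM might generate from "What are total sales by region?"
generated_sql = "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region;"
rows = run_readonly_query(conn, generated_sql)
print(rows)  # [('east', 150.0), ('west', 250.0)]
```

A prefix check like this is deliberately simple; a production setup would also use a read-only database user or connection.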

Coding Agents

| Project | Description | Links |

|---|---|---|

| Qwen-Agent | A framework for developing LLM applications based on the instruction following, tool usage, planning, and memory capabilities of Qwen. It also comes with example applications such as Browser Assistant, Code Interpreter, and Custom Assistant. | Documentation | Examples | GitHub |

Deep Research Agents

| Project | Description | Links |

|---|---|---|

| HuggingFace OpenDeepResearch | Open version of OpenAI's Deep Research agent. | Blog | Example | GitHub |

| OpenDeepResearcher | AI researcher that continuously searches for information based on a user query until the system is confident that it has gathered all the necessary details. | GitHub |

AI Frameworks (Build Your Own)

| Project | Description | Links |

|---|---|---|

| LangChain | A framework for developing applications powered by large language models (LLMs). | Documentation | GitHub | Cookbook |

| LangGraph | A library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows. | Documentation | Tutorials |

| LangSmith | A platform for building production-grade LLM applications. It allows you to closely monitor and evaluate your application, so you can quickly and confidently ship. | Documentation | GitHub |

| LlamaIndex | A framework for building context-augmented generative AI applications with LLMs. | Documentation | GitHub |

| LlamaIndex Workflows | A mechanism for orchestrating actions in increasingly complex AI applications. | Blog |

| CrewAI | Streamline workflows across industries with powerful AI agents. | Documentation | GitHub |

| AutoGen | Microsoft's programming framework for agentic AI. | GitHub |

| Pydantic AI | Python agent framework designed to make building production-grade applications with Generative AI less painful. | GitHub |

| ControlFlow | Prefect's Python framework for building agentic AI workflows. | Documentation | GitHub |

| FlatAI | Frameworkless LLM Agents. | GitHub |

| Llama Stack | Meta's (Facebook's) core building blocks for bringing generative AI applications to market. | Documentation | GitHub |

| Haystack | Deepset AI's open-source AI orchestration framework for building customizable, production-ready LLM applications. | Documentation | GitHub |

| Pocket Flow | A 100-line minimalist LLM framework for Agents, Task Decomposition, RAG, etc. | Documentation | GitHub |

| Agency Swarm | An open-source agent orchestration framework built on top of the latest OpenAI Assistants API. | Documentation | GitHub |

| Google GenAI | Google Gen AI Python SDK provides an interface for developers to integrate Google’s generative models into their Python applications. | Documentation | GitHub |

| AutoAgent | A fully-automated and highly self-developing framework that enables users to create and deploy LLM agents through natural language alone. | GitHub |

| Legion | A flexible and provider-agnostic framework designed to simplify the creation of sophisticated multi-agent systems. | Documentation | GitHub |

AI Frameworks (Drag and Drop)

| Project | Description | Links |

|---|---|---|

| LangGraph Studio | IDE that enables visualization, interaction, and debugging of complex agentic applications. | GitHub |

| Langflow | A low-code tool that makes it easier to build powerful AI agents and workflows that can use any API, model, or database. | Documentation | GitHub |

| Pyspur | Graph-Based Editor for LLM Workflows. | Documentation | GitHub |

| LangWatch | Monitor, evaluate, and optimize your LLM performance with one click. An LLMOps platform with a drag-and-drop interface. | Documentation | GitHub |

| AutoGen Studio | A low-code interface to rapidly prototype AI agents, enhance them with tools, compose them into teams, and interact with them to accomplish tasks. Built on AutoGen AgentChat. | Documentation |

| n8n | Fair-code workflow automation platform with native AI capabilities. Combine visual building with custom code, self-host or cloud, 400+ integrations. | Documentation | GitHub |

LLM Providers

| Provider | Description | Links |

|---|---|---|

| OpenAI | The official Python library for the OpenAI API. | GitHub |

| OpenAI Agents | The OpenAI Agents SDK is a lightweight yet powerful framework for building multi-agent workflows. | GitHub |

| Hugging Face Models | Open LLM models by Meta, Mistral, and hundreds of other providers. | Hugging Face |

| Anthropic Claude | The official Python library for the Anthropic API. | GitHub |

| Meta Llama Models | The open-source AI model you can fine-tune, distill, and deploy anywhere. | Meta |

| Google Gemini | The official Python library for the Google Gemini API. | GitHub |

| Ollama | Get up and running with large language models locally. | GitHub |

| Groq | The official Python library for the Groq API. | GitHub |

Open Source LLM Models

| Project | Description | Links |

|---|---|---|

| DeepSeek-R1 | First-generation reasoning model that competes with OpenAI o1. | Paper | GitHub |

| Qwen | Alibaba's Qwen models. | GitHub |

| Llama | Meta's foundational models. | GitHub |

LangChain Ecosystem

| Project | Description | Links |

|---|---|---|

| LangChain | A framework for developing applications powered by large language models (LLMs). | Documentation | GitHub | Cookbook |

| LangGraph | A library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows. | Documentation | Tutorials |

| LangSmith | A platform for building production-grade LLM applications. It allows you to closely monitor and evaluate your application, so you can quickly and confidently ship. | Documentation | GitHub |

LangGraph Extensions

| Project | Description | Links |

|---|---|---|

| LangGraph Prebuilt Agents | Prebuilt agents for LangGraph (includes third-party LangGraph extensions). | Documentation |

| AI Data Science Team | An AI-powered data science team of agents to help you perform common data science tasks 10X faster. | GitHub |

| LangMem | LangMem provides tooling to extract important information from conversations, optimize agent behavior through prompt refinement, and maintain long-term memory. | GitHub |

| LangGraph Supervisor | A Python library for creating hierarchical multi-agent systems using LangGraph. | GitHub |

| Open Deep Research | An open-source assistant that automates research and produces customizable reports on any topic. | GitHub |

| LangGraph Reflection | This prebuilt graph is an agent that uses a reflection-style architecture to check and improve an initial agent's output. | GitHub |

| LangGraph Big Tool | Create LangGraph agents that can access large numbers of tools. | GitHub |

| LangGraph CodeAct | This library implements the CodeAct architecture in LangGraph. This architecture is used by Manus.im. | GitHub |

| LangGraph Swarm | Create swarm-style multi-agent systems using LangGraph. Agents dynamically hand off control to one another based on their specializations. | GitHub |

| LangChain MCP Adapters | Provides a lightweight wrapper that makes Anthropic's Model Context Protocol (MCP) tools compatible with LangChain and LangGraph. | GitHub |

Hugging Face Ecosystem

| Project | Description | Links |

|---|---|---|

| Hugging Face | An open-source platform for machine learning (ML) and artificial intelligence (AI) tools and models. | Documentation |

| Transformers | Transformers provides APIs and tools to easily download and train state-of-the-art pretrained models. | Documentation |

| Tokenizers | Tokenizers provides an implementation of today’s most used tokenizers, with a focus on performance and versatility. | Documentation | GitHub |

| Sentence Transformers | Sentence Transformers (a.k.a. SBERT) is the go-to Python module for accessing, using, and training state-of-the-art text and image embedding models. | Documentation |

| smolagents | The simplest framework out there to build powerful agents. | Documentation | GitHub |
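
Hugging Face Tokenizers implements subword algorithms such as Byte-Pair Encoding (BPE). To show only the core idea, and not the library's implementation or API, here is a minimal pure-Python sketch of BPE training: count adjacent symbol pairs across a word-frequency table, merge the most frequent pair into one symbol, and repeat. The toy corpus and the function names (`pair_counts`, `merge_pair`, `train_bpe`) are made up for illustration.

```python
from collections import Counter


def pair_counts(vocab: dict[tuple[str, ...], int]) -> Counter:
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    counts: Counter = Counter()
    for symbols, freq in vocab.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts


def merge_pair(vocab: dict[tuple[str, ...], int], pair: tuple[str, str]) -> dict[tuple[str, ...], int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: dict[tuple[str, ...], int] = {}
    for symbols, freq in vocab.items():
        out = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_bpe(word_freqs: dict[str, int], num_merges: int):
    # Start with every word split into single characters.
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(vocab)
        if not counts:
            break
        # Ties go to the pair that was counted first (insertion order).
        best = max(counts, key=counts.get)
        merges.append(best)
        vocab = merge_pair(vocab, best)
    return merges, vocab


merges, vocab = train_bpe({"low": 5, "lower": 2, "lowest": 3}, num_merges=2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
```

Real tokenizers add byte-level fallbacks, special tokens, and far faster data structures; the learned merge list is then replayed in order to tokenize new text.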

Vector Databases (RAG)

| Project | Description | Links |

|---|---|---|

| ChromaDB | The fastest way to build Python or JavaScript LLM apps with memory! | GitHub |

| FAISS | A library for efficient similarity search and clustering of dense vectors. | GitHub |

| Qdrant | High-Performance Vector Search at Scale. | Website |

| Pinecone | The official Pinecone Python SDK. | GitHub |

| Milvus | Milvus is an open-source vector database built to power embedding similarity search and AI applications. | GitHub |

| SQLite Vec | A vector search SQLite extension that runs anywhere! | GitHub |
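
Vector databases and libraries like these store embedding vectors and return the nearest neighbours of a query vector. A minimal exact (brute-force) cosine-similarity search in pure Python shows what "similarity search" computes. The 3-dimensional "embeddings" below are made-up toy values; real systems use learned embeddings with hundreds of dimensions and typically rely on approximate indexes to scale.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


docs = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.1],
    "finance": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.0, 0.0], docs, k=2)
print([doc_id for doc_id, _ in results])  # ['cats', 'dogs']
```

In a RAG pipeline, the text behind the returned IDs is what gets inserted into the LLM prompt.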

Pretraining

| Project | Description | Links |

|---|---|---|

| PyTorch | PyTorch is an open-source machine learning library based on the Torch library, used for applications such as computer vision and natural language processing. | Website |

| TensorFlow | TensorFlow is an open-source machine learning library developed by Google. | Website |

| JAX | Google’s library for high-performance computing and automatic differentiation. | GitHub |

| tinygrad | A minimalistic deep learning library with a focus on simplicity and educational use, created by George Hotz. | GitHub |

| micrograd | A simple, lightweight autograd engine for educational purposes, created by Andrej Karpathy. | GitHub |
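
micrograd is described as a small autograd engine for learning purposes. The sketch below is an independent minimal reimplementation of the same idea (reverse-mode automatic differentiation over a graph of scalars), written for illustration only: it is not micrograd's source, the class name `Value` is chosen simply to echo it, and only `+` and `*` are supported.

```python
class Value:
    """A scalar that records the operations applied to it, for backpropagation."""

    def __init__(self, data: float, parents: tuple = ()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes self.grad into the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topological order guarantees a node's grad is complete before it propagates.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


a, b = Value(2.0), Value(3.0)
f = a * b + a  # f = ab + a, so df/da = b + 1 and df/db = a
f.backward()
print(f.data, a.grad, b.grad)  # 8.0 4.0 2.0
```

Gradients are accumulated with `+=` because a value such as `a` can feed into several operations.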

Fine-tuning

| Project | Description | Links |

|---|---|---|

| Transformers | Hugging Face Transformers is a popular library for Natural Language Processing (NLP) tasks, including fine-tuning large language models. | Documentation |

| Unsloth | Fine-tune Llama 3.2, Mistral, Phi-3.5, and Gemma models 2-5x faster with 80% less memory! | GitHub |

| LitGPT | 20+ high-performance LLMs with recipes to pretrain, finetune, and deploy at scale. | GitHub |

| AutoTrain | No-code fine-tuning for LLMs and other machine learning tasks. | GitHub |

Testing and Monitoring (Observability)

| Project | Description | Links |

|---|---|---|

| LangSmith | LangSmith is a platform for building production-grade LLM applications. It allows you to closely monitor and evaluate your application, so you can quickly and confidently ship. | Documentation | GitHub |

| LangWatch | Monitor, evaluate, and optimize your LLM performance with one click. An LLMOps platform with a drag-and-drop interface. | Documentation | GitHub |

| Opik | Opik is an open-source platform for evaluating, testing, and monitoring LLM applications. | GitHub |

| MLflow Tracing and Evaluation | MLflow provides a suite of LLM features, including tracing and evaluation. | MLflow LLM Documentation | Model Tracing | Model Evaluation | GitHub |

| Langfuse | Traces, evals, prompt management, and metrics to debug and improve your LLM application. | Documentation | GitHub |

Document Parsing

| Project | Description | Links |

|---|---|---|

| LangChain Document Loaders | LangChain has hundreds of integrations with various data sources to load data from: Slack, Notion, Google Drive, etc. | Documentation |

| Embedchain | Create an AI app on your own data in a minute. | Documentation | GitHub |

| Docling by IBM | Parse documents and export them to the desired format with ease and speed. | GitHub |

| Markitdown by Microsoft | Python tool for converting files and office documents to Markdown. | GitHub |

| DocETL | A system for agentic LLM-powered data processing and ETL. | Documentation | GitHub |

| Unstructured.io | Unstructured provides a platform and tools to ingest and process unstructured documents for Retrieval Augmented Generation (RAG) and model fine-tuning. | Documentation | GitHub | Paper |
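
Once documents have been parsed to text, RAG pipelines usually split that text into overlapping chunks before embedding it. A minimal character-based sketch follows; `chunk_text`, its `chunk_size` and `overlap` defaults are illustrative assumptions, and frameworks such as LangChain ship their own, more sophisticated splitters (for example, ones that respect sentence or token boundaries).

```python
def chunk_text(text: str, chunk_size: int = 20, overlap: int = 5) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


chunks = chunk_text("abcdefghijklmnopqrstuvwxyz", chunk_size=10, overlap=3)
print(chunks)  # ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

The overlap keeps content that straddles a boundary retrievable from at least one chunk.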

Web Parsing (HTML) and Web Crawling

| Project | Description | Links |

|---|---|---|

| Gitingest | Turn any Git repository into a simple text ingest of its codebase. This is useful for feeding a codebase into any LLM. | GitHub |

| Crawl4AI | Open-source, blazing-fast, AI-ready web crawling tailored for LLMs, AI agents, and data pipelines. | Documentation | GitHub |

| GPT Crawler | Crawl a site to generate knowledge files to create your own custom GPT from a URL. | Documentation | GitHub |

| ScrapeGraphAI | A web scraping Python library that uses LLMs and direct graph logic to create scraping pipelines for websites and local documents (XML, HTML, JSON, Markdown, etc.). | Documentation | GitHub |

| Scrapling | 🕷️ Undetectable, Lightning-Fast, and Adaptive Web Scraping for Python. | GitHub |

| Firecrawl | 🔥 Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl, and extract with a single API. | Documentation | GitHub |
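
Gitingest turns a repository into one text digest that can be pasted into an LLM prompt. A minimal standard-library sketch of that idea, not Gitingest's implementation: walk a directory, prune hidden directories such as `.git`, keep files with chosen extensions, and concatenate them under path headers. The function name `ingest_directory`, the default `extensions`, and the `===== path =====` header format are all assumptions.

```python
import os
import tempfile


def ingest_directory(root: str, extensions: tuple[str, ...] = (".py", ".md", ".txt")) -> str:
    """Concatenate matching text files under `root` into one prompt-ready string."""
    parts = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Editing dirnames in place stops os.walk from descending into hidden dirs.
        dirnames[:] = sorted(d for d in dirnames if not d.startswith("."))
        for name in sorted(filenames):
            if not name.endswith(extensions):
                continue
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, root)
            with open(path, encoding="utf-8", errors="replace") as fh:
                parts.append(f"===== {rel} =====\n{fh.read()}")
    return "\n\n".join(parts)


# Usage: build a throwaway "repository" and ingest it.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, ".git"))
    files = {
        os.path.join(".git", "notes.txt"): "should be skipped",
        "main.py": "print('hi')\n",
        "README.md": "# Demo\n",
    }
    for rel_path, content in files.items():
        with open(os.path.join(tmp, rel_path), "w", encoding="utf-8") as fh:
            fh.write(content)
    digest = ingest_directory(tmp)

print(digest)
```

For large repositories you would also want a size limit or a token budget so the digest fits in the model's context window.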

Agents and Tools (Build Your Own)

| Project | Description | Links |

|---|---|---|

| LangChain Agents | Build agents with LangChain. | Documentation |

| LangChain Tools | Integrate Tools (Function Calling) with LangChain. | Documentation |

| smolagents | The simplest framework out there to build powerful agents. | Documentation | GitHub |

| Agentarium | Open-source framework for creating and managing simulations populated with AI-powered agents. It provides an intuitive platform for designing complex, interactive environments where agents can act, learn, and evolve. | GitHub |

| AutoGen AgentChat | Build applications quickly with preset agents. | Documentation |
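
Agent frameworks such as LangChain agents, smolagents, and AutoGen AgentChat share a common loop: the model either requests a tool call or returns a final answer, and the runtime executes the requested tool and feeds the result back. A framework-free sketch of that loop follows. The "model" is a scripted stand-in (`fake_model`), and the message and decision dict shapes are simplified assumptions, not the actual format of any framework or provider.

```python
import json


def add(a: float, b: float) -> float:
    return a + b


def multiply(a: float, b: float) -> float:
    return a * b


# Registry of tools the agent is allowed to call, by name.
TOOLS = {"add": add, "multiply": multiply}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: picks the next step from the history."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "multiply", "args": {"a": 6, "b": 7}}
    if len(tool_results) == 1:
        previous = json.loads(tool_results[0]["content"])
        return {"tool": "add", "args": {"a": previous, "b": 8}}
    return {"final": f"The answer is {tool_results[-1]['content']}"}


def run_agent(question: str, model=fake_model, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = model(messages)
        if "final" in decision:
            return decision["final"]
        # Execute the requested tool and append its result to the history.
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "name": decision["tool"], "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 6 * 7 + 8?"))  # The answer is 50
```

The `max_steps` cap is a simple safeguard against a model that keeps requesting tools without ever producing a final answer.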

Agents and Tools (Prebuilt)

| Project | Description | Links |

|---|---|---|

| Agno (Formerly Phidata) | An open-source platform to build, ship and monitor agentic systems. | Documentation | GitHub |

| Composio | Integration platform for AI agents and LLMs (works with LangChain, CrewAI, etc.). | Documentation | GitHub |

LLM Memory

| Project | Description | Links |

|---|---|---|

| Mem0 | Mem0 is a self-improving memory layer for LLM applications, enabling personalized AI experiences that save costs and delight users. | Documentation | GitHub |

| Memary | Open Source Memory Layer For Autonomous Agents. | GitHub |

| Memobase | 1st User Profile-Based Memory for GenAI Apps. | Documentation | GitHub |

LLMOps

| Project | Description | Links |

|---|---|---|

| LangWatch | Monitor, evaluate, and optimize your LLM performance with one click. An LLMOps platform with a drag-and-drop interface. | Documentation | GitHub |

| MLflow | MLflow Tracing for LLM Observability. | Documentation |

| Agenta | Open-source LLMOps platform: prompt playground, prompt management, LLM evaluation, and LLM Observability all in one place. | Documentation |

| LLMOps | Best practices designed to support your LLMOps initiatives. | GitHub |

| Helicone | Open-source LLM observability platform for developers to monitor, debug, and improve production-ready applications. | Documentation | GitHub |

Code Sandbox (Security)

| Project | Description | Links |

|---|---|---|

| E2B | E2B is an open-source runtime for executing AI-generated code in secure cloud sandboxes. Made for agentic & AI use cases. | Documentation | GitHub |

| AutoGen Docker Code Executor | Executes code through a command line environment in a Docker container. | Documentation |
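
E2B and AutoGen's Docker executor isolate AI-generated code from the host machine. The standard-library sketch below illustrates only the execution-and-capture part of that pattern (separate process, timeout, captured stdout and stderr) with `subprocess.run`; it provides no security isolation at all, so untrusted code still belongs in a real sandbox such as a container, a VM, or a service like E2B. The helper name `run_generated_code` is hypothetical.

```python
import subprocess
import sys


def run_generated_code(code: str, timeout: float = 5.0) -> dict:
    """Run a Python snippet in a child process and capture its output.

    WARNING: not a sandbox. The child process has the same permissions as the caller.
    """
    try:
        proc = subprocess.run(
            # -I runs Python in isolated mode (ignores PYTHON* env vars and user site-packages).
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising TimeoutExpired.
        return {"ok": False, "stdout": "", "stderr": f"timed out after {timeout}s"}
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "stderr": proc.stderr}


print(run_generated_code("print(sum(range(10)))")["stdout"])  # 45
```

Returning stderr alongside stdout lets an agent read the traceback and attempt a fix on its next step.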

Browser Control Agents

| Project | Description | Links |

|---|---|---|

| Browser-Use | Make websites accessible for AI agents. | Documentation | GitHub |

| WebUI | Built on Gradio and supports most Browser-Use functionality. This UI is designed to be user-friendly and enables easy interaction with the browser agent. | GitHub |

| WebRover | WebRover is an AI-powered web agent that combines autonomous browsing with advanced research capabilities. | GitHub |

Prompt Improvement

| Project | Description | Links |

|---|---|---|

| Microsoft PromptWizard | Task-Aware Prompt Optimization Framework. | GitHub |

| Promptify | A library for prompt engineering that simplifies NLP tasks (e.g., NER, classification) using LLMs like GPT. | GitHub |

| AutoPrompt | A framework for prompt tuning using Intent-based Prompt Calibration. | GitHub |

Other

| Project | Description | Links |

|---|---|---|

| AI Suite | Simple, unified interface to multiple Generative AI providers. | GitHub |

| AdalFlow | SylphAI's library to build and auto-optimize LLM applications, from chatbots and RAG to agents. | GitHub |

| DSPy | DSPy: The framework for programming, not prompting, foundation models. | GitHub |

| LiteLLM | Python SDK, Proxy Server (LLM Gateway) to call 100+ LLM APIs in OpenAI format. | GitHub |

| AI Agent Service Toolkit | Full toolkit for running an AI agent service built with LangGraph, FastAPI, and Streamlit. | App | GitHub |

| Microsoft Tiny Troupe | LLM-powered multiagent persona simulation for imagination enhancement and business insights. | GitHub |

| Distributed Llama | Connect home devices into a powerful cluster to accelerate LLM inference. | GitHub |

R Libraries

| Project | Description | Links |

|---|---|---|

| LLM tools for R | An ongoing roundup of useful developments in the LLM/genAI space, with a specific focus on R. | Website |

| Project | Description | Links |

|---|---|---|

| ellmer | Makes it easy to use large language models (LLM) from R. It supports a wide variety of LLM providers and implements a rich set of features including streaming outputs, tool/function calling, structured data extraction, and more. | Website |

| hellmer | Enables sequential and parallel batch processing for chat models supported by ellmer. | Documentation |

| chores | Provides a library of ergonomic LLM assistants designed to help you complete repetitive, hard-to-automate tasks quickly. | Documentation |

| ggpal | LLM assistant specifically for ggplot2. | GitHub |

| gander | A high-performance and low-friction chat experience for data scientists in RStudio and Positron, sort of like completions with Copilot, but it knows how to talk to the objects in your R environment. | Documentation |

| Project | Description | Links |

|---|---|---|

| mall | Run multiple LLM predictions against a data frame. The predictions are processed row-wise over a specified column. | Website |

| lang | Use an LLM to translate a function’s help documentation on-the-fly. | Website |

| chattr | An interface to LLMs (Large Language Models). | Website |

| Project | Description | Links |

|---|---|---|

| chatgpt | Interface with models from OpenAI to get assistance while coding. | GitHub |

| groqR | Brings GroqCloud’s lightning-fast LPU (Language Processing Unit) technology directly to your R workflow. | Website |

| gptstudio | Easily incorporate large language models (LLMs) into your project workflows. | Website |

| llmR | R interface to various Large Language Models (LLMs) such as OpenAI’s GPT models, Azure’s language models, Google’s Gemini models, or custom local servers. | GitHub |

| tidychatmodels | A simple interface to chat with your favorite AI chatbot from R, inspired by tidymodels, where you can easily swap out any ML model for another one but keep the other parts of the workflow the same. | Website |

| tidyllm | Access various large language model APIs, including Anthropic Claude, OpenAI, Google Gemini, Perplexity, Groq, Mistral, and local models via Ollama or OpenAI-compatible APIs. | Website |

| gemini.R | R package for using Google’s Gemini API from R. | Website |

| PerplexR | Intuitive interface for leveraging the capabilities of the Perplexity API Pro subscription. | GitHub |

| ollama-r | The easiest way to integrate R with Ollama, which lets you run language models locally on your own machine. | Website |

| rollama | Wraps the Ollama API, which allows you to run different LLMs locally and create an experience similar to ChatGPT/OpenAI’s API. | Website |

| Project | Description | Links |

|---|---|---|

| Ragnar | Helps implement Retrieval-Augmented Generation (RAG) workflows. | Website |

LLM Deployment (Cloud Services)

| Service | Description | Links |

|---|---|---|

| AWS Bedrock | Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. | AWS Bedrock |

| Microsoft Azure AI Services | Azure AI services help developers and organizations rapidly create intelligent, cutting-edge, market-ready, and responsible applications with out-of-the-box, prebuilt, and customizable APIs and models. | Microsoft Azure AI Services |

| Google Vertex AI | Vertex AI is a fully-managed, unified AI development platform for building and using generative AI. | Google Vertex AI |

| NVIDIA NIM | NVIDIA NIM™, part of NVIDIA AI Enterprise, provides containers to self-host GPU-accelerated inferencing microservices for pretrained and customized AI models across clouds, data centers, and workstations. | NVIDIA NIM |

Examples and Cookbooks

| Project | Description | Links |

|---|---|---|

| LangChain Cookbook | Example code for building applications with LangChain, with an emphasis on more applied and end-to-end examples. | GitHub |

| LangGraph Examples | Example code for building applications with LangGraph. | GitHub |

| LlamaIndex Examples | Example code for building applications with LlamaIndex. | GitHub |

| Streamlit LLM Examples | Streamlit LLM app examples for getting started. | GitHub |

| Project | Description | Links |

|---|---|---|

| Amazon Bedrock Workshop | Introduces how to leverage foundation models (FMs) through Amazon Bedrock. | GitHub |

| Project | Description | Links |

|---|---|---|

| Microsoft Generative AI for Beginners | 21 lessons teaching everything you need to know to start building Generative AI applications. | GitHub |

| Microsoft Intro to Generative AI Course | A comprehensive introduction to Generative AI concepts and applications. | Microsoft Learn |

| Azure Generative AI Examples | Prompt Flow and RAG examples for use with the Microsoft Azure Cloud platform. | GitHub |

| Project | Description | Links |

|---|---|---|

| Google Vertex AI Examples | Notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop, and manage machine learning and generative AI workflows using Google Cloud Vertex AI. | GitHub |

| Google Generative AI Examples | Sample code and notebooks for Generative AI on Google Cloud, with Gemini on Vertex AI. | GitHub |

| Project | Description | Links |

|---|---|---|

| NVIDIA NIM Anywhere | An entry point for developing with NIMs that natively scales out to full-sized labs and up to production environments. | GitHub |

| NVIDIA NIM Deploy | Reference implementations, example documents, and architecture guides that can be used as a starting point to deploy multiple NIMs and other NVIDIA microservices into Kubernetes and other production deployment environments. | GitHub |

Newsletters

| Newsletter | Description | Links |

|---|---|---|

| Python AI/ML Tips | Free newsletter on Generative AI and Data Science. | GitHub |

| unwind ai | Latest AI news, tools, and tutorials for AI Developers. | Website |

Courses and Training

| Workshop | Description | Links |

|---|---|---|

| Generative AI Data Scientist Workshops | Get free training on how to build and deploy Generative AI / ML Solutions. | Register for the next free workshop here. |

| Course | Description | Links |

|---|---|---|

| 8-Week AI Bootcamp To Become A Generative AI Data Scientist | Focused on helping you become a Generative AI Data Scientist. Learn how to build and deploy AI-powered data science solutions using LangChain, LangGraph, Pandas, scikit-learn, Streamlit, AWS, Bedrock, and EC2. | Enroll Here |


Alternative AI tools for awesome-generative-ai-data-scientist

Similar Open Source Tools


redis-ai-resources

A curated repository of code recipes, demos, and resources for basic and advanced Redis use cases in the AI ecosystem. It includes demos for ArxivChatGuru, Redis VSS, Vertex AI & Redis, Agentic RAG, ArXiv Search, and Product Search. Recipes cover topics like Getting started with RAG, Semantic Cache, Advanced RAG, and Recommendation systems. The repository also provides integrations/tools like RedisVL, AWS Bedrock, LangChain Python, LangChain JS, LlamaIndex, Semantic Kernel, RelevanceAI, and DocArray. Additional content includes blog posts, talks, reviews, and documentation related to Vector Similarity Search, AI-Powered Document Search, Vector Databases, Real-Time Product Recommendations, and more. Benchmarks compare Redis against other Vector Databases and ANN benchmarks. Documentation includes QuickStart guides, official literature for Vector Similarity Search, Redis-py client library docs, Redis Stack documentation, and Redis client list.

AI-For-Beginners

AI-For-Beginners is a comprehensive 12-week, 24-lesson curriculum designed by experts at Microsoft to introduce beginners to the world of Artificial Intelligence (AI). The curriculum covers various topics such as Symbolic AI, Neural Networks, Computer Vision, Natural Language Processing, Genetic Algorithms, and Multi-Agent Systems. It includes hands-on lessons, quizzes, and labs using popular frameworks like TensorFlow and PyTorch. The focus is on providing a foundational understanding of AI concepts and principles, making it an ideal starting point for individuals interested in AI.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons, which explain a Generative AI concept, or "Build" lessons, which explain a concept and provide code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

- Access to the Azure OpenAI Service **OR** the OpenAI API (_only required to complete coding lessons_)
- Basic knowledge of Python or TypeScript is helpful (for absolute beginners, check out these Python and TypeScript courses)
- A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you set up your development environment. Don't forget to star (🌟) this repo so you can find it more easily later.

🧠 Ready to Deploy?

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

🗣️ Meet Other Learners, Get Support

Join our official AI Discord server to meet and network with other learners taking this course and get support.

🚀 Building a Startup?

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

🙏 Want to help?

Do you have suggestions, or have you found spelling or code errors? Raise an issue or create a pull request.

📂 Each lesson includes:

- A short video introduction to the topic
- A written lesson located in the README
- Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
- Links to extra resources to continue your learning

🗃️ Lessons

| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to set up your development environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps with Vector Databases | **Build:** A search application that uses embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What function calling is and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a vector database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

mcp-for-beginners

The Model Context Protocol (MCP) Curriculum for Beginners is an open-source curriculum for learning MCP, an open protocol that standardizes interactions between AI models and client applications. It offers a structured learning path with practical coding examples and real-world use cases in popular programming languages such as C#, Java, JavaScript, Rust, Python, and TypeScript. Whether you're an AI developer, system architect, or software engineer, this guide provides comprehensive resources for mastering MCP fundamentals and implementation strategies.
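MCP messages are JSON-RPC 2.0 requests and responses. A client asks a server to run a tool by sending a `tools/call` request that carries the tool name and its arguments. The sketch below shows that exchange using only the Python standard library. It is not code from the curriculum: the tool name `get_weather` and the helpers `make_tool_call` and `parse_response` are hypothetical, and transport details such as stdio or HTTP framing are left out.

```python
import json
from itertools import count

# Monotonically increasing request ids; JSON-RPC 2.0 pairs responses with requests by id.
_next_id = count(1)

def make_tool_call(name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)

def parse_response(raw: str, expected_id: int) -> dict:
    """Check a JSON-RPC 2.0 response and return its result, raising on errors."""
    message = json.loads(raw)
    if message.get("id") != expected_id:
        raise ValueError(f"response id {message.get('id')} does not match {expected_id}")
    if "error" in message:
        # JSON-RPC errors carry a code and a human-readable message.
        raise RuntimeError(message["error"].get("message", "unknown error"))
    return message["result"]

# "get_weather" is a hypothetical tool name used only for illustration.
wire = make_tool_call("get_weather", {"city": "Seattle"})
print(wire)
```

Matching the response `id` against the request `id` is what allows a client to have several requests in flight over the same connection.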

llm-engineer-toolkit

The LLM Engineer Toolkit is a curated repository of over 120 LLM libraries, grouped by task:

- training and fine-tuning
- application development
- inference and serving
- data extraction
- synthetic data generation
- agents
- evaluation and monitoring
- prompt optimization
- structured outputs
- safety and security
- embedding models
- other miscellaneous tools

Together, these tools span the development, deployment, and optimization of large language models.

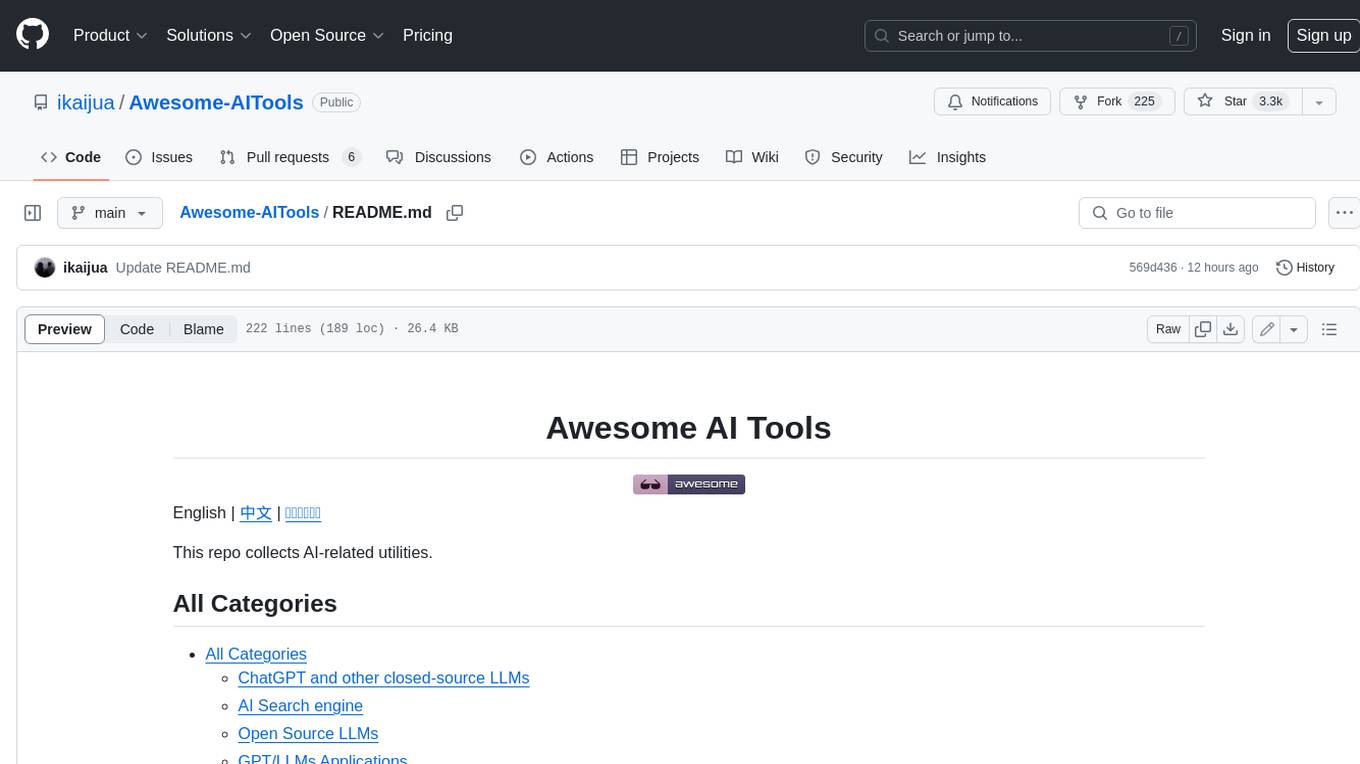

Awesome-AITools

This repo collects AI-related utilities, organized into the following categories:

- ChatGPT and other closed-source LLMs
- AI Search engine
- Open Source LLMs
- GPT/LLMs Applications
- LLM training platform
- Applications that integrate multiple LLMs
- AI Agent
- Writing
- Programming Development
- Translation
- AI Conversation or AI Voice Conversation
- Image Creation
- Speech Recognition
- Text To Speech
- Voice Processing
- AI generated music or sound effects
- Speech translation
- Video Creation
- Video Content Summary
- OCR (Optical Character Recognition)

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.
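Quantization compresses a model by storing its weights at lower precision. As a generic illustration, and not one of the cookbook's recipes, the sketch below applies symmetric per-tensor int8 quantization in plain Python. It picks a scale so that the largest weight magnitude maps to 127, then divides every weight by that scale and rounds. The function names are hypothetical. Production tools work on tensors rather than Python lists, and they often quantize per channel or per group.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero for all-zero input
    # Round each weight to the nearest integer step and clamp to [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights; rounding error is at most scale / 2 each."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 0.01]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(restored)
```

Storing one byte per weight plus a single float scale is what produces the memory savings. The price is the small rounding error that becomes visible in `restored`.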

runtime

Exosphere is a lightweight runtime designed to make AI agents resilient to failure and to scale them out across distributed compute. It provides a foundation for building and orchestrating AI applications, with features such as built-in failure handling, infinite parallel agents, dynamic execution graphs, native state persistence, and observability. Whether you're working on data pipelines, AI agents, or complex workflow orchestrations, Exosphere offers the infrastructure backbone to make your AI applications production-ready and scalable.
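Exosphere's failure handling is built into its runtime. The underlying idea can be illustrated with a generic retry-with-exponential-backoff helper. The sketch below is plain Python and is not Exosphere's API. `retry_with_backoff`, its parameters, and the `flaky` demo task are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def retry_with_backoff(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying on any exception with exponentially growing, jittered delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Base delay doubles each attempt (0.1s, 0.2s, 0.4s, ...); random jitter
            # keeps many parallel workers from retrying in lockstep.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")  # the loop always returns or re-raises

# Usage: a task that fails twice with a transient error, then succeeds.
calls = {"n": 0}

def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "done"

result = retry_with_backoff(flaky, sleep=lambda seconds: None)  # skip real waiting in the demo
print(result, "after", calls["n"], "attempts")
```

The `sleep` function is passed in as a parameter so that tests and demos can skip the real waiting.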

squirrelscan

Squirrelscan is a website audit tool designed for SEO, performance, and security audits. It offers 230+ rules across 21 categories, an AI-native design for Claude Code and other AI workflows, smart incremental crawling, and multiple output formats. It also provides E-E-A-T auditing and crawl history tracking, and its CLI makes it developer-friendly. Users can run audits in the terminal, integrate them with AI coding agents, or pipe the output to AI assistants. The tool runs on macOS, Linux, and Windows, can be installed via npm or run with npx, and is suitable for autonomous AI workflows.

together-cookbook

The Together Cookbook is a collection of code and guides designed to help developers build with open-source models using Together AI. The recipes include examples of how to:

- chain multiple LLM calls
- create agents that route tasks to specialized models
- run multiple LLMs in parallel
- break down tasks into parallel subtasks
- build agents that iteratively improve responses
- perform LoRA fine-tuning and inference
- fine-tune LLMs for repetition
- improve summarization capabilities
- fine-tune LLMs on multi-step conversations
- implement retrieval-augmented generation
- conduct multimodal search and conditional image generation
- visualize vector embeddings
- improve search results with rerankers
- implement vector search with embedding models
- extract structured text from images
- summarize and evaluate outputs with LLMs
- generate podcasts from PDF content
- get LLMs to generate knowledge graphs
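The routing and parallel-call recipes can be sketched without any API access by replacing the models with stub coroutines. This is a hypothetical illustration, not code from the cookbook. `code_model` and `general_model` stand in for calls to specialized models. `route` uses a deliberately simple keyword rule, where a real router might ask an LLM to classify the request. `asyncio.gather` runs the calls concurrently and returns the results in input order.

```python
import asyncio

# Stand-ins for calls to specialized hosted models; a real app would call an LLM API here.
async def code_model(prompt: str) -> str:
    await asyncio.sleep(0)  # placeholder for network latency
    return f"[code model] {prompt}"

async def general_model(prompt: str) -> str:
    await asyncio.sleep(0)
    return f"[general model] {prompt}"

def route(prompt: str):
    """Pick a specialized model with a keyword rule (an LLM classifier could do this instead)."""
    keywords = ("python", "function", "bug", "sql")
    if any(word in prompt.lower() for word in keywords):
        return code_model
    return general_model

async def answer_all(prompts: list[str]) -> list[str]:
    # Route each prompt, then run all model calls concurrently; gather keeps input order.
    return await asyncio.gather(*(route(p)(p) for p in prompts))

results = asyncio.run(answer_all(["Fix this Python bug", "Summarize the news"]))
for line in results:
    print(line)
```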

compose-for-agents

Compose for Agents is a tool that allows users to run demos using OpenAI models or locally with Docker Model Runner. The tool supports multi-agent and single-agent systems for various tasks such as fact-checking, summarizing GitHub issues, marketing strategy, SQL queries, travel planning, and more. Users can configure the demos by creating a `.mcp.env` file, supplying required tokens, and running `docker compose up --build`. Additionally, users can utilize OpenAI models by creating a `secret.openai-api-key` file and starting the project with the OpenAI configuration.

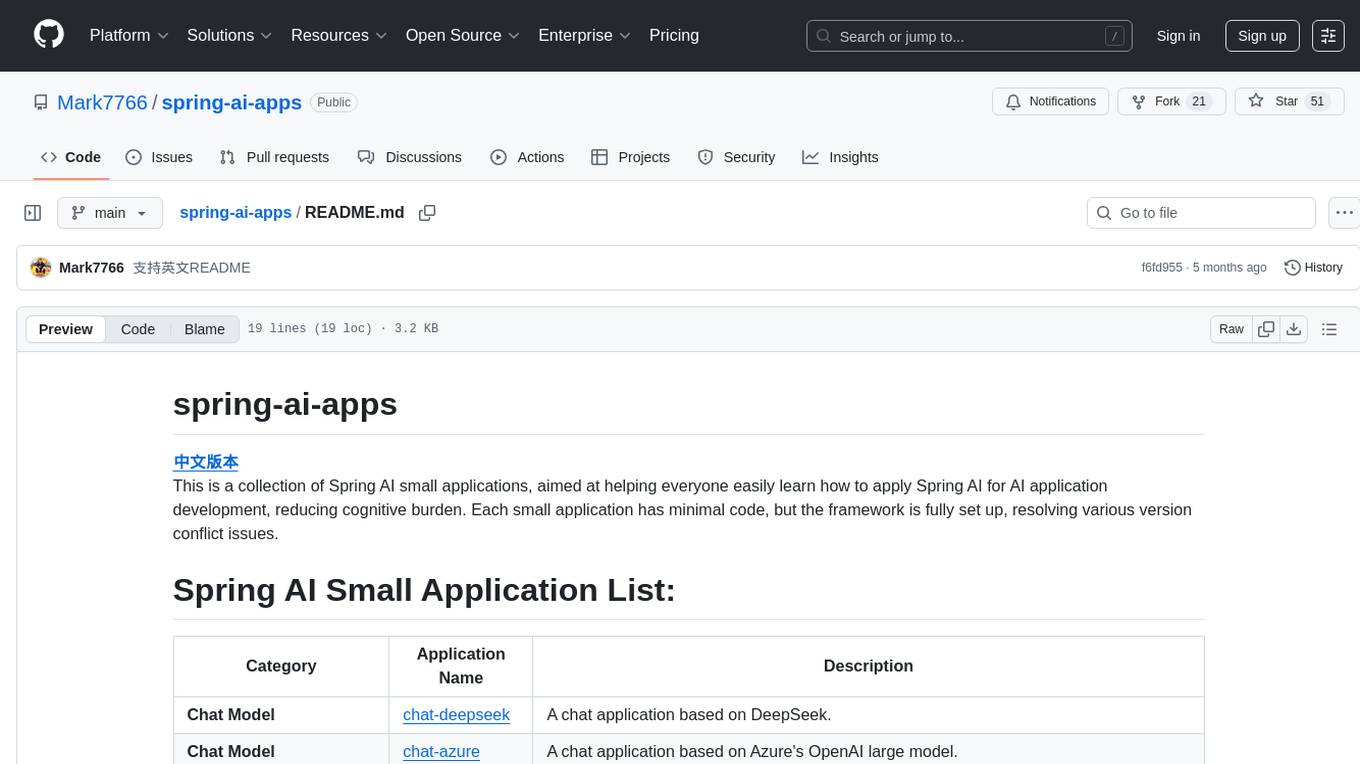

spring-ai-apps

spring-ai-apps is a collection of small Spring AI applications designed to help users apply Spring AI to AI application development. Each application comes with minimal code and a fully configured project setup that resolves dependency version conflicts.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. The models can call user-defined external tools in a deterministic manner while reasoning and chatting, which makes them well suited to agentic use cases. They are further post-trained to teach instruct-tuned models new skills while mitigating catastrophic forgetting. Rubra extends popular inference projects so the models are easy to run.
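On the application side, tool calling usually means parsing the tool call the model emits and dispatching it to a registered function. The sketch below assumes a simple JSON shape of `{"name": ..., "arguments": {...}}`. Rubra's actual output format depends on the inference backend. The `tool` decorator, the `dispatch` helper, and the example tools are hypothetical.

```python
import json
from typing import Any, Callable

# Registry of user-defined tools the model may call (names here are hypothetical).
TOOLS: dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def add(a: float, b: float) -> float:
    return a + b

@tool
def shout(text: str) -> str:
    return text.upper()

def dispatch(tool_call_json: str) -> Any:
    """Execute a model-emitted tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything not explicitly registered rather than guessing.
        raise KeyError(f"model requested unknown tool {name!r}")
    return TOOLS[name](**call["arguments"])

print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Keeping an explicit registry means the model can trigger only functions the developer chose to expose.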

kodus-ai

Kodus AI is an open-source AI agent designed to review code like a real teammate, providing personalized, context-aware code reviews to help teams catch bugs, enforce best practices, and maintain a clean codebase. It seamlessly integrates with Git workflows, learns team coding patterns, and offers custom review policies. Kodus supports all programming languages with semantic and AST analysis, enhancing code review accuracy and providing actionable feedback. The tool is available in Cloud and Self-Hosted editions, offering features like self-hosting, unlimited users, custom integrations, and advanced compliance support.
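AST analysis inspects the structure of code rather than its raw text. The check below uses Python's built-in `ast` module to flag bare `except:` clauses, a common code-review comment. It is a small illustration, not Kodus's implementation, and `find_bare_excepts` is a hypothetical helper.

```python
import ast

def find_bare_excepts(source: str) -> list[int]:
    """Return line numbers of `except:` clauses that catch every exception."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        # An ExceptHandler with no `type` is a bare `except:`.
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]

sample = """\
try:
    risky()
except:
    pass

try:
    risky()
except ValueError:
    pass
"""
print(find_bare_excepts(sample))  # [3]
```

Because the check reads the parsed syntax tree, comments or strings that merely contain the word `except` do not trigger it.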

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.
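For contrast, here is the kind of naive text splitting the description refers to: fixed-size character chunks with a small overlap. This is a generic sketch, not part of Open Parse, and `split_text` and its defaults are hypothetical. Because it ignores layout, a chunk boundary can fall in the middle of a heading, sentence, or table row. Visual layout analysis aims to avoid exactly that problem.

```python
def split_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Naive fixed-size character chunking with overlap between consecutive chunks."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
        start += step
    return chunks

print(split_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```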

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need a MoonshotAI account and an associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly. You can also contact me by email.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.
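At its core, vector search ranks stored embeddings by their similarity to a query embedding. The brute-force sketch below computes cosine similarity over a tiny in-memory dictionary. It is not Pinecone's client API, and the three-dimensional vectors are made-up toy values. A vector database replaces this linear scan with approximate nearest-neighbor indexes so that search stays fast at scale.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat zero vectors as dissimilar

def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbor search over every stored vector."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# Toy 3-dimensional "embeddings"; real embedding models produce hundreds or thousands of dimensions.
index = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.1],
    "stocks": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.0, 0.0], index))
```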

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.
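Unit testing an LLM output means scoring it with a metric and asserting that the score clears a threshold. The sketch below shows that pattern as a plain test function. It does not use DeepEval's API. `keyword_coverage` is a deliberately crude, hypothetical metric that handles single-word keywords only. It stands in for the LLM-judged metrics, such as G-Eval, that DeepEval provides.

```python
import re

def keyword_coverage(answer: str, required: list[str]) -> float:
    """Hypothetical metric: fraction of required single-word keywords present in the answer."""
    words = set(re.findall(r"[a-z0-9]+", answer.lower()))
    hits = sum(1 for kw in required if kw.lower() in words)
    return hits / len(required) if required else 1.0

def test_refund_answer():
    # In a real suite this string would come from calling the LLM application under test.
    answer = "You can request a refund within 30 days of purchase."
    score = keyword_coverage(answer, ["refund", "30", "days"])
    assert score >= 0.7, f"keyword coverage {score:.2f} below threshold"

test_refund_answer()
```

Written this way, the check runs under any test runner that collects `test_*` functions, so it can gate a CI/CD pipeline.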

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model was trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".
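A common use of MegaDetector's output is separating blank images from images worth reviewing. The sketch below assumes a batch-results JSON layout: an `images` list whose entries have a `file` path and a `detections` list carrying a `conf` score. Check this against the output your version actually produces. The 0.2 threshold is an arbitrary example value, not a recommendation, and `split_blank` is a hypothetical helper.

```python
import json

def split_blank(results_json: str, threshold: float = 0.2) -> tuple[list[str], list[str]]:
    """Return (non_blank, blank) file lists; an image is non-blank if any detection meets the threshold."""
    results = json.loads(results_json)
    non_blank, blank = [], []
    for image in results["images"]:
        # Images that failed to process may lack detections, so default to an empty list.
        detections = image.get("detections") or []
        if any(d["conf"] >= threshold for d in detections):
            non_blank.append(image["file"])
        else:
            blank.append(image["file"])
    return non_blank, blank

# Made-up results in the assumed layout.
sample = json.dumps({
    "images": [
        {"file": "cam1/img001.jpg", "detections": [{"category": "1", "conf": 0.93, "bbox": [0.1, 0.2, 0.3, 0.4]}]},
        {"file": "cam1/img002.jpg", "detections": [{"category": "1", "conf": 0.05, "bbox": [0.5, 0.5, 0.1, 0.1]}]},
        {"file": "cam1/img003.jpg", "detections": []},
    ]
})
keep, drop = split_blank(sample)
print(keep)  # ['cam1/img001.jpg']
print(drop)  # ['cam1/img002.jpg', 'cam1/img003.jpg']
```

Lowering the threshold sends fewer animals to the blank pile but more empty frames to human review. The right trade-off depends on the dataset.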

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI uses Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual-anchor VTubers (NVIDIA GPU version). It supports knowledge-base chat through fastgpt and offers a complete solution for large language models built on [fastgpt] + [one-api] + [Xinference]. Other features include:

- Replying to Bilibili live-stream bullet comments (danmaku) and greeting viewers who enter the stream
- Speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS
- Expression control through VTube Studio
- Painting with stable-diffusion-webui and output to the OBS live-stream room, with NSFW screening of generated pictures via public-NSFW-y-distinguish
- Web and image search via DuckDuckGo (requires a VPN or proxy in mainland China) and Baidu image search (no VPN or proxy needed)
- An AI reply chat box and a playlist [html plug-ins]
- AI singing via Auto-Convert-Music, with dancing that starts automatically when singing begins
- Dancing, expression video playback, head-pat reactions, and reactions to viewer gifts
- Automatically cycling sway motions during chat and singing
- Multi-scene switching, background music switching, and automatic day/night scene switching
- Open singing and painting requests, with the AI automatically judging the content