Best AI tools for Test Applications

20 - AI Tool Sites

Clarion Technologies

Clarion Technologies is an AI-assisted development company that offers a wide range of software development services, including custom software development, web app development, mobile app development, cloud solutions, and Power BI solutions. They provide services for various technologies such as React Native, Java, Python, PHP, Laravel, and more. With a focus on AI-driven planning and Agile Project Execution Methodology, Clarion Technologies ensures top-quality results with faster time to market. They have a strong commitment to data security, compliance, and privacy, and offer on-demand access to skilled developers and tech architects.

Quest

Quest is a web-based application that generates React code from designs. Its AI models produce real, usable code that reflects what professional developers care about. Development teams can use Quest to build new applications and web pages, extend existing applications, and create design systems, libraries, and component templates. It integrates with the design and dev tools that users love and is built for the most demanding product teams.

HuLoop Automation

HuLoop Automation is an AI-powered platform that offers intelligent automation solutions for businesses. It provides a no-code platform for automating complex business processes, test automation, and productivity discovery. With a focus on human-in-the-loop decisioning, HuLoop aims to simplify automation implementation and make it fast and affordable for companies. The platform integrates with existing technologies, offers data-driven recommendations, and helps organizations optimize their processes for increased efficiency and productivity.

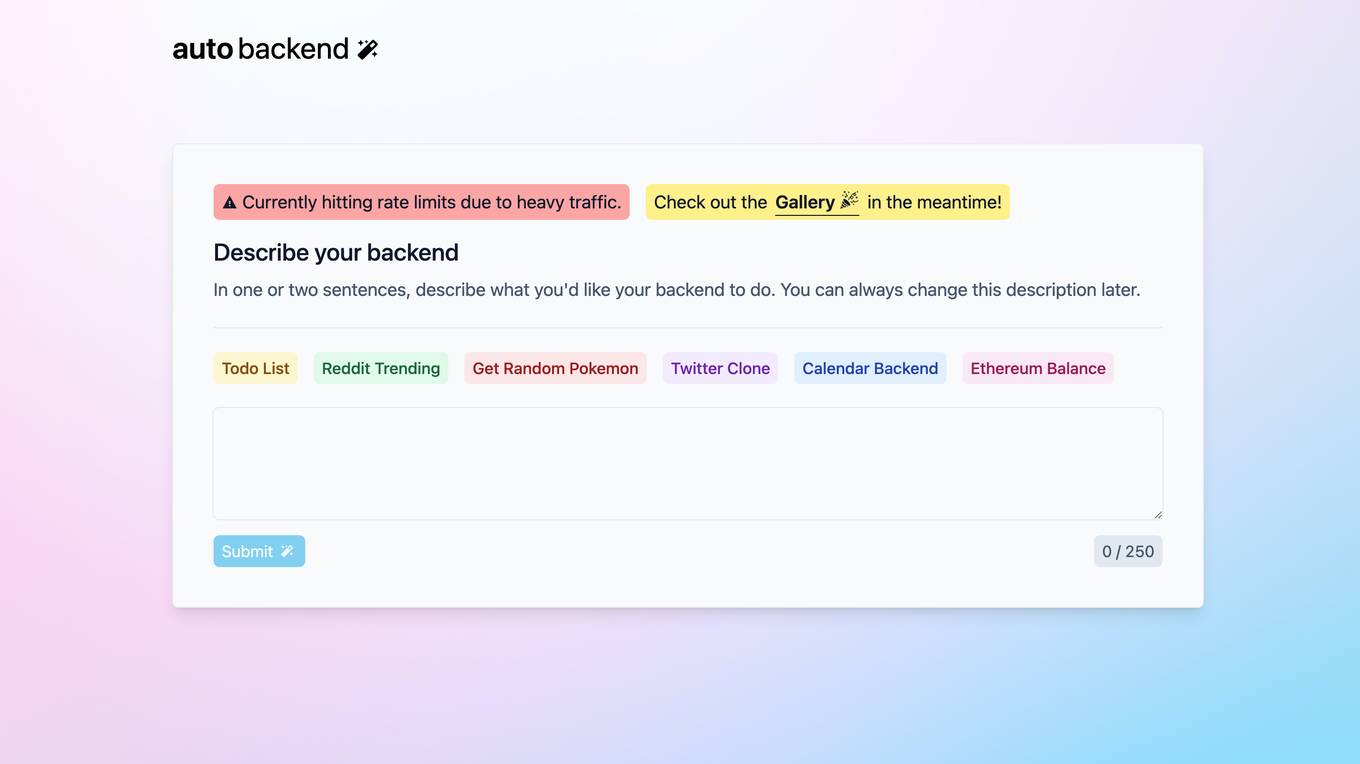

Autobackend.dev

Autobackend.dev is a web development platform that provides tools and resources for building and managing backend systems. It offers a range of features such as database management, API integration, and server configuration. With Autobackend.dev, users can streamline the process of backend development and focus on creating innovative web applications.

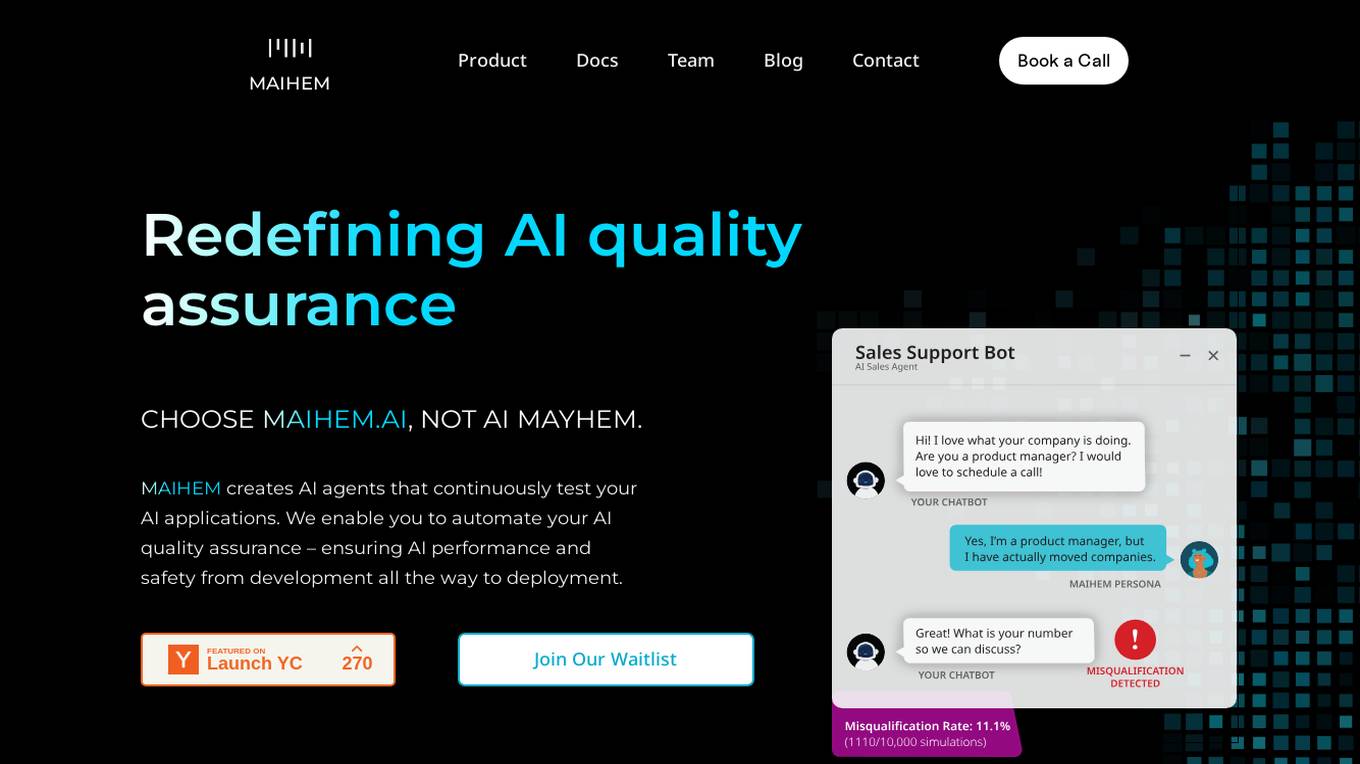

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

Leapwork

Leapwork is an AI-powered test automation platform that enables users to build, manage, maintain, and analyze complex data-driven testing across various applications, including AI apps. It offers a democratized testing approach with an intuitive visual interface, composable architecture, and generative AI capabilities. Leapwork supports testing of diverse application types, including web, mobile, and desktop applications as well as APIs. It allows for scalable testing with reusable test flows that adapt to changes in the application under test. Leapwork can be deployed in the cloud or on-premises, giving users full control.

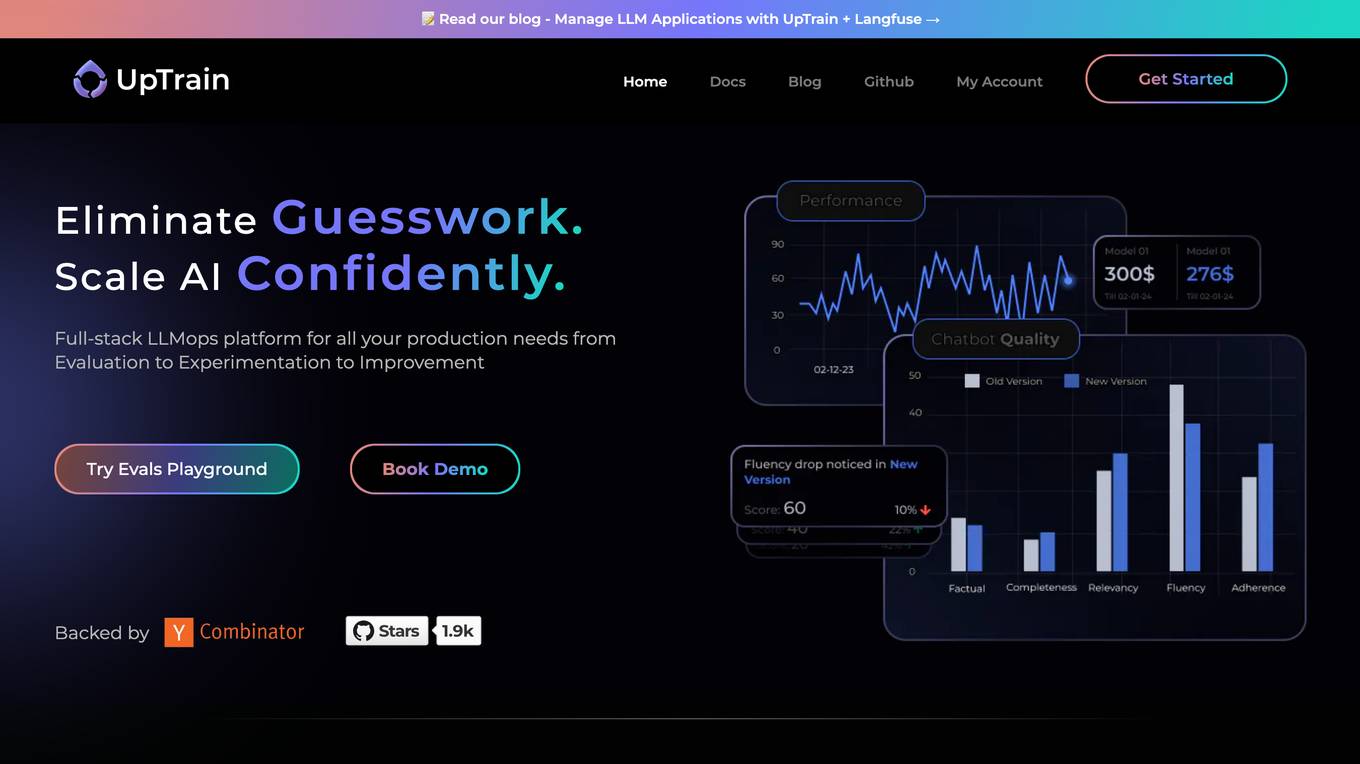

UpTrain

UpTrain is a full-stack LLMOps platform designed to help users confidently scale AI by providing a comprehensive solution for all production needs, from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, enriched datasets, and innovative techniques to generate high-quality scores. UpTrain is built for developers, compliant with data governance needs, cost-efficient, reliable, and open-source. It provides precision metrics, task understanding, and safeguard systems, and covers a wide range of language features and quality aspects. The platform is suitable for developers, product managers, and business leaders looking to enhance their LLM applications.
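To make the idea of automated regression testing for LLM applications concrete, here is a minimal sketch in plain Python. It does not use UpTrain's API; the `keyword_score` metric, the `find_regressions` helper, and the sample data are all hypothetical and stand in for whatever evaluations a real platform would run.

```python
def keyword_score(response: str, required: list[str]) -> float:
    """Hypothetical metric: fraction of required keywords present in the response."""
    if not required:
        return 1.0
    text = response.lower()
    hits = sum(1 for kw in required if kw.lower() in text)
    return hits / len(required)


def find_regressions(baseline: dict, candidate: dict, cases: dict,
                     tolerance: float = 0.0) -> list[str]:
    """Return ids of test cases where the candidate scores below the baseline."""
    regressed = []
    for case_id, required in cases.items():
        old = keyword_score(baseline[case_id], required)
        new = keyword_score(candidate[case_id], required)
        if new < old - tolerance:
            regressed.append(case_id)
    return regressed


# Illustrative data only: responses from an old and a new prompt version.
cases = {"refund": ["refund", "30 days"], "hours": ["9am", "5pm"]}
baseline = {"refund": "Refunds are accepted within 30 days.",
            "hours": "Open 9am to 5pm."}
candidate = {"refund": "We offer a refund on request.",
             "hours": "Open 9am to 5pm daily."}

print(find_regressions(baseline, candidate, cases))  # ['refund']
```

The same loop works with any scoring function that maps a response to a number, so a stricter metric can be swapped in without changing the comparison logic.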

AI+ Training & Conferences

AI+ Training & Conferences is a platform offering AI training and conferences for data science practitioners. It provides live and on-demand events, bootcamps, certifications, and courses covering various AI topics such as deep learning, machine learning, and generative AI. Users can access expert-led training sessions, workshops, and hands-on projects to enhance their AI skills and knowledge. The platform aims to open up opportunities for learning, networking, and professional growth in the field of AI and data science.

Ottic

Ottic is an AI tool designed to empower both technical and non-technical teams to test Large Language Model (LLM) applications efficiently and accelerate the development cycle. It offers features such as a 360º view of the QA process, end-to-end test management, comprehensive LLM evaluation, and real-time monitoring of user behavior. Ottic aims to bridge the gap between technical and non-technical team members, ensuring seamless collaboration and reliable product delivery.

Diffblue Cover

Diffblue Cover is an autonomous AI-powered unit test writing tool for Java development teams. It uses next-generation autonomous AI to automate unit testing, freeing up developers to focus on more creative work. Diffblue Cover can write a complete and correct Java unit test every 2 seconds, and it is directly integrated into CI pipelines, unlike AI-powered code suggestions that require developers to check the code for bugs. Diffblue Cover is trusted by the world's leading organizations, including Goldman Sachs, and has been proven to improve quality, lower developer effort, help with code understanding, reduce risk, and increase deployment frequency.

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

Haystack

Haystack is a production-ready open-source AI framework designed to facilitate building AI applications. It offers a flexible components and pipelines architecture, allowing users to customize and build applications according to their specific requirements. With partnerships with leading LLM providers and AI tools, Haystack provides freedom of choice for users. The framework is built for production, with fully serializable pipelines, logging, monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can build Haystack apps faster using deepset Studio, a platform for drag-and-drop construction of pipelines, testing, debugging, and sharing prototypes.

Langflow

Langflow is a low-code app builder for RAG and multi-agent AI applications. It is Python-based and agnostic to any model, API, or database. Langflow offers a visual IDE for building and testing workflows, multi-agent orchestration, free cloud service, observability features, and ecosystem integrations. Users can customize workflows using Python and publish them as APIs or export as Python applications.

Plumb

Plumb is a no-code, node-based builder that empowers product, design, and engineering teams to create AI features together. It enables users to build, test, and deploy AI features with confidence, fostering collaboration across different disciplines. With Plumb, teams can ship prototypes directly to production, ensuring that the best prompts from the playground are the exact versions that go to production. It goes beyond automation, allowing users to build complex multi-tenant pipelines, transform data, and leverage validated JSON schema to create reliable, high-quality AI features that deliver real value to users. Plumb also makes it easy to compare prompt and model performance, enabling users to spot degradations, debug them, and ship fixes quickly. It is designed for SaaS teams, helping ambitious product teams collaborate to deliver state-of-the-art AI-powered experiences to their users at scale.
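As an illustration of why validating model output against a schema makes AI features more reliable, here is a minimal sketch in plain Python. It is not Plumb's implementation and it is not full JSON Schema validation; the `SCHEMA` mapping and the `validate_output` helper are hypothetical, and the check covers only required fields and their basic types.

```python
import json

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"title": str, "priority": int, "tags": list}


def validate_output(raw: str, schema: dict) -> tuple[bool, list[str]]:
    """Parse a model response as JSON and check required fields and types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return False, ["top-level value must be an object"]

    errors = []
    for field, expected in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            errors.append(f"wrong type for {field}: expected {expected.__name__}")
    return not errors, errors


good = '{"title": "Fix login", "priority": 2, "tags": ["auth"]}'
bad = '{"title": "Fix login", "priority": "high"}'

print(validate_output(good, SCHEMA))  # (True, [])
print(validate_output(bad, SCHEMA))
```

A pipeline can reject or retry any response that fails this check before it reaches users, which is the general benefit that schema validation provides.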

Autoblocks AI

Autoblocks AI is an AI application designed to help users build safe AI apps efficiently. It allows users to ship AI agents in minutes, speeding up the development process significantly. With Autoblocks AI, users can prototype quickly, test at a faster rate, and deploy with confidence. The application is trusted by leading AI teams and focuses on making AI agent development more predictable by addressing the unpredictability of user inputs and non-deterministic models.

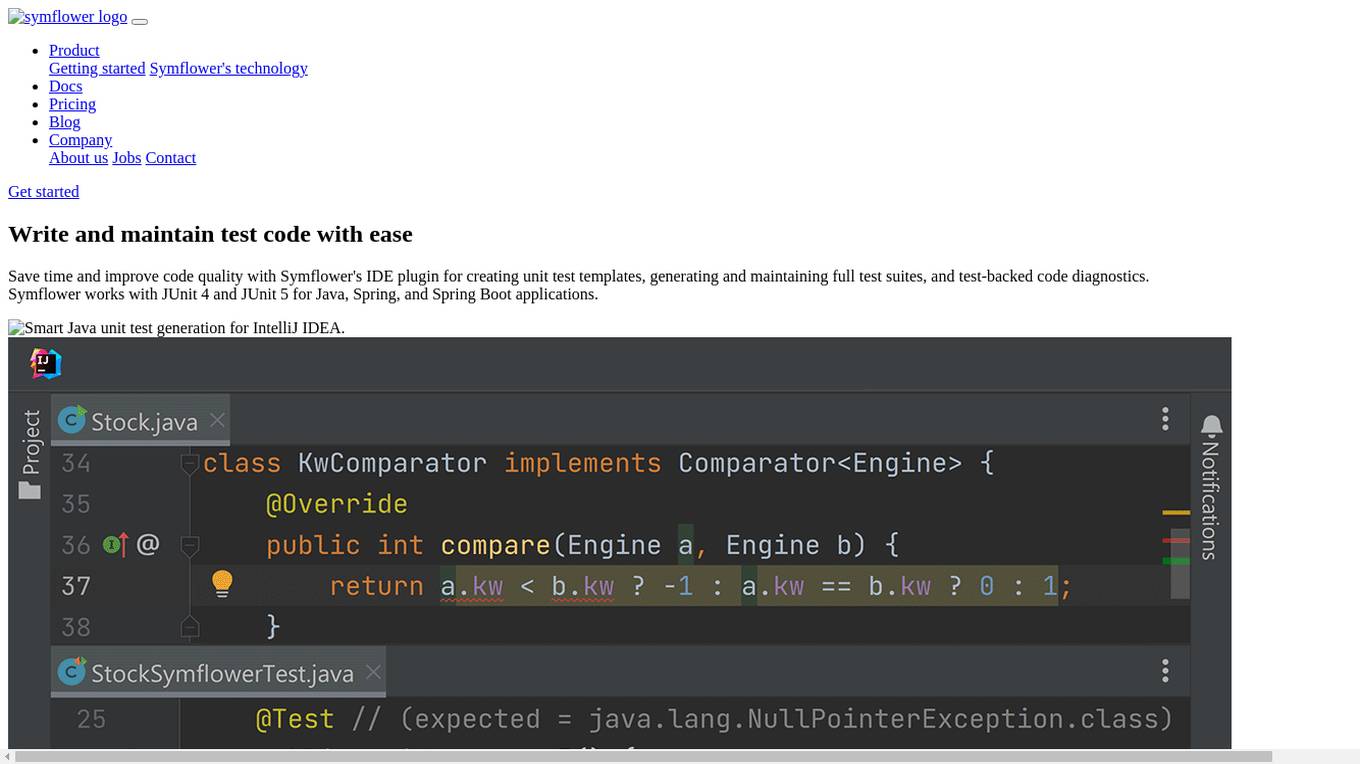

Symflower

Symflower is an AI-powered unit test generator for Java applications. It helps developers write and maintain test code with ease, saving time and improving code quality. Symflower works with JUnit 4 and JUnit 5 for Java, Spring, and Spring Boot applications.

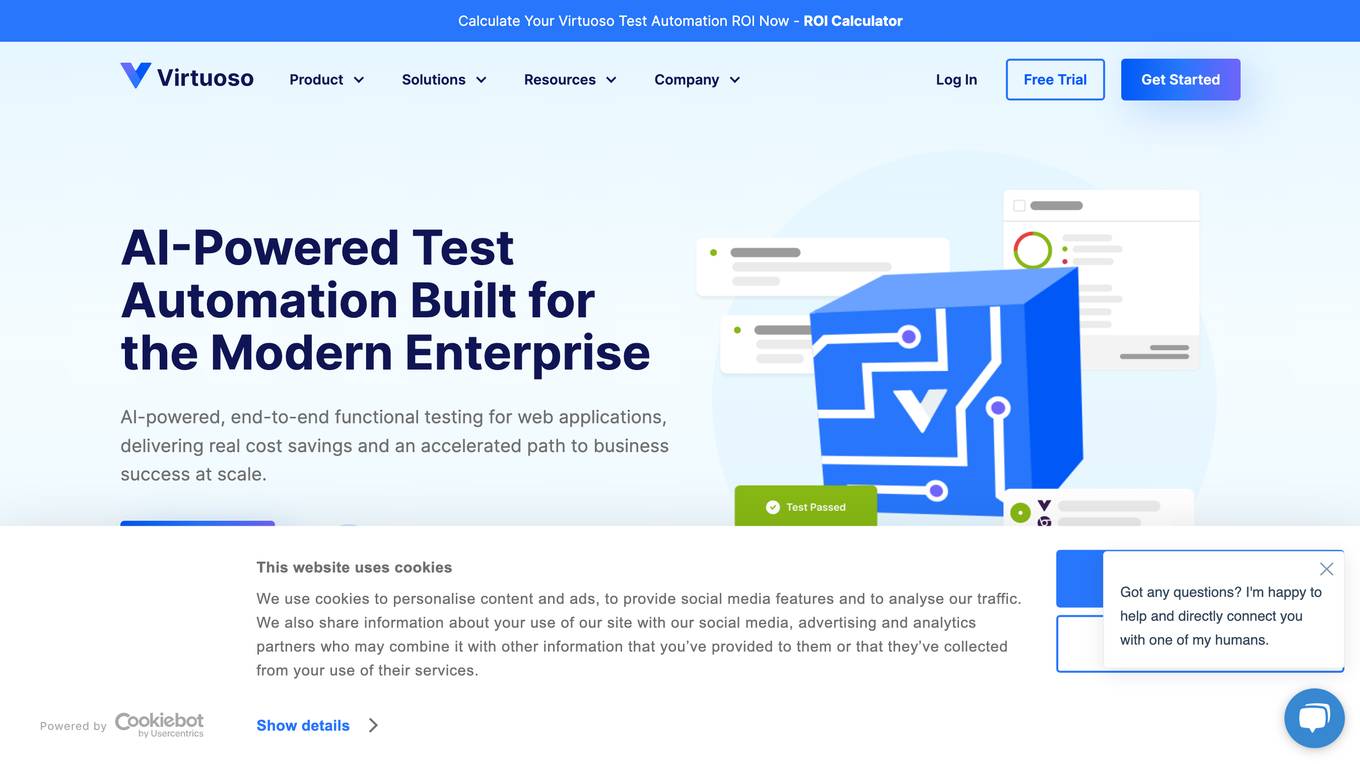

Virtuoso

Virtuoso is an AI-powered, end-to-end functional testing tool for web applications. It uses Natural Language Programming, Machine Learning, and Robotic Process Automation to automate the testing process, making it faster and more efficient. Virtuoso can be used by QA managers, practitioners, and senior executives to improve the quality of their software applications.

302.AI

302.AI is a platform that hosts a collection of 50,000 AI applications. It serves as a hub for users to explore and access various AI tools and applications for different purposes. The platform offers a wide range of AI solutions across industries such as healthcare, finance, marketing, and more. Users can discover, test, and implement AI applications to enhance their workflows and achieve efficiency in their tasks.

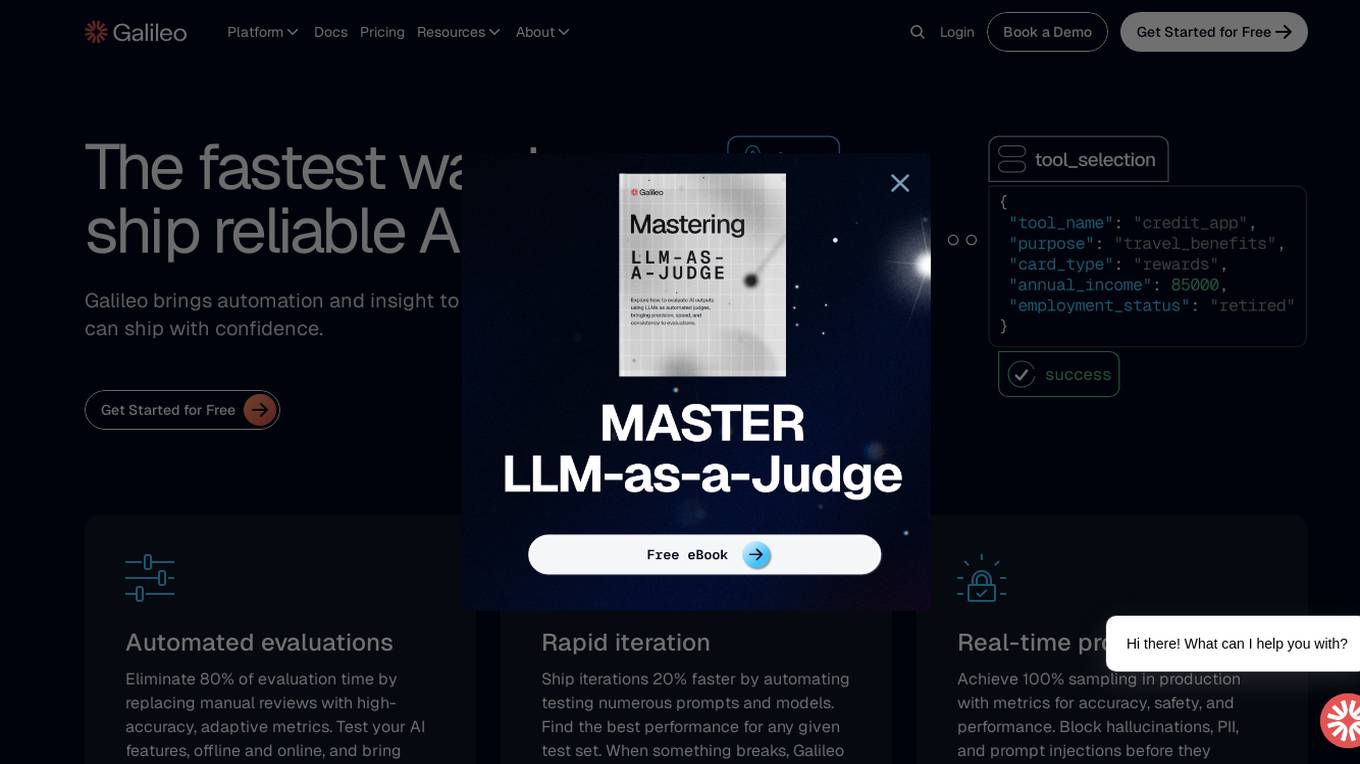

Galileo AI

Galileo AI is a platform that automates evaluations for AI applications, bringing insight to the evaluation process so teams can ship reliably and with confidence. It helps eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

5 - Open Source AI Tools

free-for-life

A massive list of products and services that are completely free. Categories include APIs, Data & ML; Artificial Intelligence; BaaS; Code Editors; Code Generation; DNS; Databases; Design & UI; Domains; Email; Font; For Students; Forms; Linux Distributions; Messaging & Streaming; PaaS; Payments & Billing; and SSL.

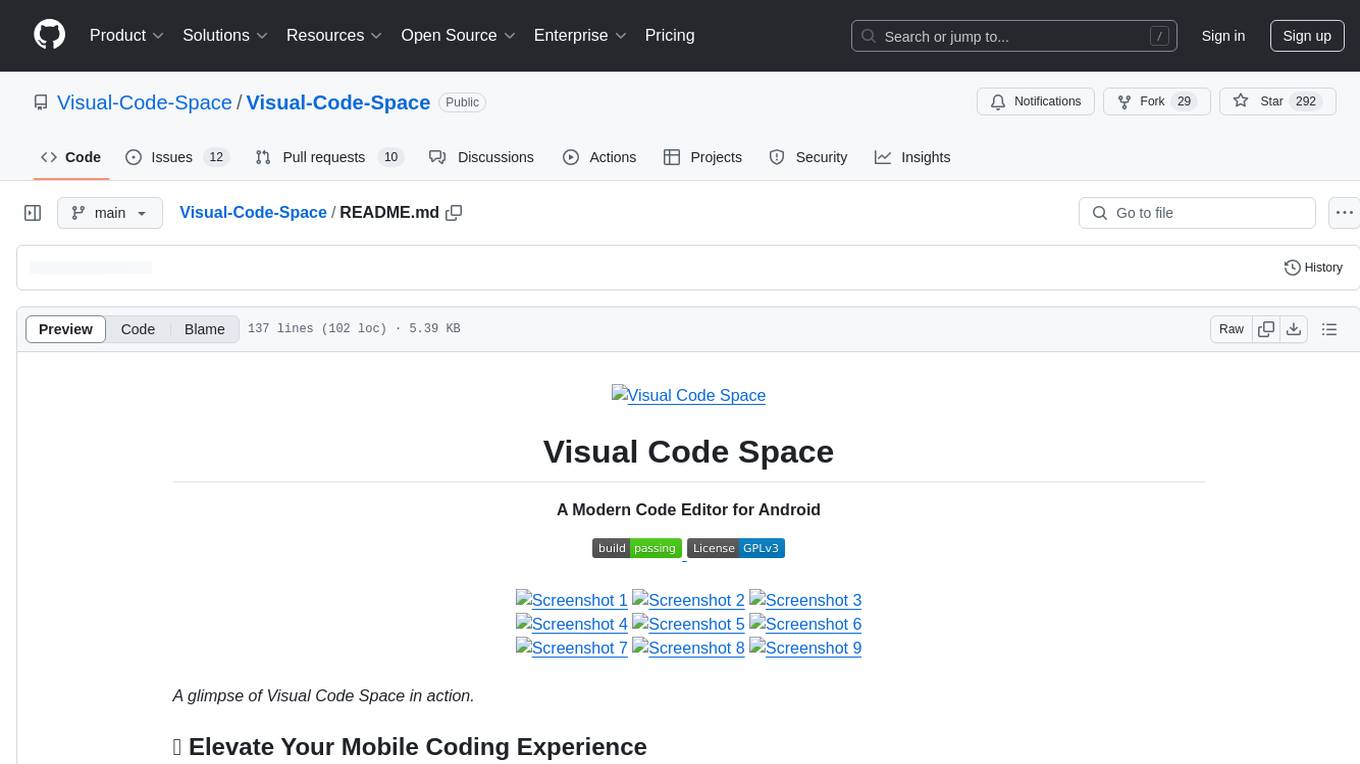

Visual-Code-Space

Visual Code Space is a modern code editor designed specifically for Android devices. It offers a seamless and efficient coding environment with features such as a fast file explorer, multi-language syntax highlighting, a tabbed editor, an integrated terminal emulator, an ad-free experience, and plugin support. Users can customize the editor through plugins written in BeanShell. The tool aims to simplify coding on the go by providing a user-friendly interface and powerful functionality.

awesome-generative-ai-data-scientist

A curated list of 50+ resources to help you become a Generative AI Data Scientist. This repository includes resources on building GenAI applications with Large Language Models (LLMs), and deploying LLMs and GenAI with Cloud-based solutions.

pyscripter

PyScripter is a free and open-source Python Integrated Development Environment (IDE) aiming to compete with commercial Windows-based IDEs for other languages. It offers features like LLM-assisted coding and provides support for Python development projects. The tool is designed to enhance the coding experience for Python developers by providing a user-friendly interface and a range of functionalities to streamline the development process.

llama-stack

Llama Stack defines and standardizes core building blocks for AI application development, providing a unified API layer, plugin architecture, prepackaged distributions, developer interfaces, and standalone applications. It offers flexibility in infrastructure choice, consistent experience with unified APIs, and a robust ecosystem with integrated distribution partners. The tool simplifies building, testing, and deploying AI applications with various APIs and environments, supporting local development, on-premises, cloud, and mobile deployments.

20 - OpenAI GPTs

GetPaths

This GPT takes in content related to an application, such as HTTP traffic, JavaScript files, source code, etc., and outputs lists of URLs that can be used for further testing.
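The kind of output described here can be approximated with a few regular expressions. The sketch below is an illustration only and says nothing about how the GPT itself works; the `extract_paths` helper, the two patterns, and the sample input are hypothetical, and real traffic or source code would need broader patterns.

```python
import re
from urllib.parse import urlparse

# Matches absolute http(s) URLs; stops at whitespace, quotes, and brackets.
URL_RE = re.compile(r"https?://[^\s\"'<>)]+")
# Matches quoted root-relative paths such as "/api/v1/users".
PATH_RE = re.compile(r"[\"'](/[A-Za-z0-9_\-./]+)[\"']")


def extract_paths(content: str) -> list[str]:
    """Return the sorted, de-duplicated URL paths found in raw text."""
    found = set()
    for url in URL_RE.findall(content):
        path = urlparse(url).path  # drops scheme, host, and query string
        if path:
            found.add(path)
    found.update(PATH_RE.findall(content))
    return sorted(found)


# Illustrative input mixing HTTP traffic and JavaScript snippets.
sample = '''
GET https://example.com/api/v1/users?id=7 HTTP/1.1
fetch("/api/v1/orders")
const login = '/auth/login';
'''

print(extract_paths(sample))
# ['/api/v1/orders', '/api/v1/users', '/auth/login']
```

The resulting list can be fed to whatever testing tool is used next, for example as a wordlist of endpoints to probe.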

Software development front-end GPT - Senior AI

Solves problems in front-end application development. AI 100% PRO, 500+ guides trainer.

Web App Prototyper

Specializing in crafting cutting-edge web applications using Next.js, prioritizing responsive, accessible design and seamless GitHub Copilot integration.

Mobile App Development Advisor

Guides the design, development, and optimization of mobile applications.

Tech Mentor

Expert software architect with experience in the design, construction, development, testing, and deployment of web, mobile, and standalone software architectures.