lotti

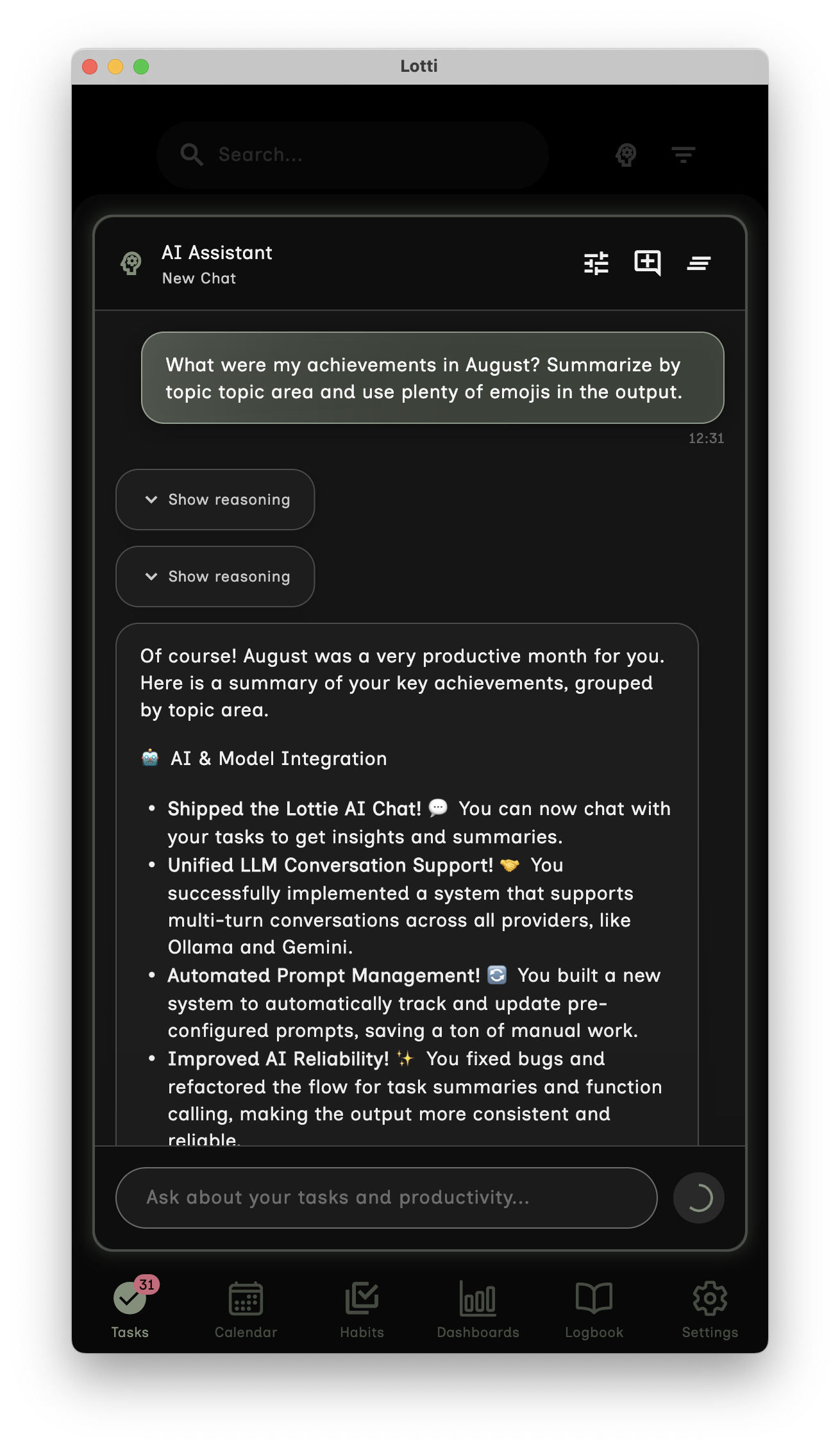

AI-powered digital assistant that keeps your data private. Chat with your tasks, get intelligent summaries, and track what matters—all stored locally on your devices. Choose your AI provider per category or run everything offline. Your data, your control.

Stars: 1084

Lotti is an open-source personal assistant that helps users capture, organize, and understand their work and life through AI-enhanced task management, audio recordings, and intelligent summaries. It ensures complete data ownership, configurable AI providers, privacy-first design, and no vendor lock-in. Users can pick up tasks, record voice notes, and ask for summaries. Core features include AI-powered intelligence, comprehensive tracking, and privacy & control. Lotti supports multiple AI providers, offers installation guides, beta testing options, and development instructions. It is built on Flutter with a focus on privacy, local AI, and user data ownership.

README:

Your AI‑powered context manager — a private, local‑first assistant for your tasks, notes, and audio.

Lotti is an open-source personal assistant that helps you capture, organize, and understand your work and life through AI-enhanced task management, audio recordings, and intelligent summaries—all while keeping your data entirely under your control.

Lotti is now available on Flathub — bringing AI-powered personal productivity to the Linux desktop!

The beginning of a multi-part blog series with video walkthroughs exploring everything Lotti can do is now live! From task management to AI-powered insights — learn how to take control of your productivity while keeping your data private.

Start reading: Meet Lotti | Project Background

- Why Lotti?

- Core Features

- AI Provider Configuration

- Getting Started

- Documentation

- Use Cases

- Contributing

- Technical Stack

- Philosophy

- License

- Acknowledgments

Most AI-powered tools require you to upload and store your personal data on their servers, creating privacy risks and vendor lock-in. Lotti takes a different approach:

- Complete data ownership: Your information stays on your devices. When you opt into cloud inference, European‑hosted, no‑retention providers are available

- Configurable AI providers per category: Choose between OpenAI, Anthropic, Gemini, Ollama (local), or any OpenAI-compatible provider on a per-category basis

- Privacy-first design: You control exactly what data gets shared with AI providers—only for specific inference calls via your API keys

- No vendor lock-in: Your data remains portable and accessible, independent of any subscription

- Pick up a task from last week — see your last notes, time spent, and a one‑paragraph recap

- Record a quick voice note — later it’s transcribed and turned into a checklist

- Ask “What did I finish in June?” — get a dated list with brief summaries

Currently, Lotti's AI capabilities are focused on task management and productivity. Habit tracking is fully functional but will receive AI enhancements in future updates.

- Smart Summaries: Automatically generate summaries of tasks, capturing key points and progress

- Audio Transcription: Transcribe recordings using either local Whisper (OpenAI's open weights model, 99 languages supported) or cloud providers with audio capabilities like Gemini Flash/Pro

- Context Recap: Resume a task with a one‑screen recap of your latest notes, time, and progress

- Intelligent Checklists: Transform rambling audio notes into actionable checklists

- Chat with Your Data: Ask questions about your tasks, learnings, and achievements across any time period

- Tasks: Full lifecycle management (open, groomed, in progress, blocked, done, rejected)

- Audio Recording: Capture thoughts, progress notes, and brain dumps

- Time Tracking: Record time spent on tasks and projects

- Journal Entries: Written reflections and documentation

- Habits: Define and monitor daily habits and routines

- Health Data: Import from Apple Health and other sources

- Custom Metrics: Track anything that matters to you

- Local-Only Storage: All data is permanently stored only on your devices and never in the cloud

- Encrypted Sync: End-to-end encrypted synchronization between your devices (desktop/laptop and mobile) using Matrix (requires a Matrix account — self-hosted or public homeserver)

- Selective AI Usage: Configure AI providers per category—keep sensitive data completely local with Ollama but use state‑of‑the‑art (frontier) cloud models when appropriate

- Your API Keys: When you choose cloud AI, data is shared only for that specific inference call. Please review the respective provider's terms and privacy policy to understand how they handle your data

- GDPR-Compliant Options: European-hosted AI providers with no data retention policies available for enhanced privacy

- Built for on‑device: Designed for the era when local AI inference becomes standard

Lotti supports multiple AI providers, configurable per category:

- Cloud Providers: OpenAI, Anthropic Claude, Google Gemini

- Local Inference: Ollama for complete privacy (requires capable hardware)

  - Full functionality available with local models like Qwen3 (8B), GPT-OSS (20B/120B), Gemma3 (12B/27B)

  - Combined with local Whisper for speech recognition, enables 100% offline AI capabilities

- OpenAI-Compatible: Any provider with OpenAI-compatible APIs

- European Options: GDPR-compliant hosted alternatives

Configure different providers for different aspects of your life—use cutting-edge models for work projects while keeping personal reflections completely private with local inference. With sufficient hardware, you can run everything locally without any cloud dependency.
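The per-category idea above can be sketched as a simple lookup table. This is a minimal illustration in Python, not Lotti's actual configuration format or code: the category names, the `Provider` fields, the model strings, and the helper functions are all hypothetical and chosen only to show how sensitive categories could be pinned to local inference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str    # provider label, e.g. "ollama" or "openai" (illustrative)
    model: str   # model identifier (illustrative placeholder)
    local: bool  # True when inference stays on the device


# Hypothetical mapping from category to provider; not Lotti's real schema.
PROVIDERS = {
    "work": Provider(name="openai", model="example-cloud-model", local=False),
    "journal": Provider(name="ollama", model="qwen3:8b", local=True),
}

# Unknown categories fall back to local inference, the most private choice.
DEFAULT = Provider(name="ollama", model="qwen3:8b", local=True)


def provider_for(category: str) -> Provider:
    """Return the provider configured for a category, or the local default."""
    return PROVIDERS.get(category, DEFAULT)


def may_leave_device(category: str) -> bool:
    """True if entries in this category could be sent to a cloud provider."""
    return not provider_for(category).local
```

Defaulting to the local provider means a newly created category never reaches a cloud model until someone explicitly opts in, which matches the privacy-first stance described above.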

See DEVELOPMENT.md for setup and development workflow.

- Build it yourself: for iOS, macOS, Android, Linux, Windows

- iOS/macOS: TestFlight builds are available for select users and will be available more broadly in due course

- Linux: See `tar.gz` files on GitHub releases - will also be available via Flatpak soon

- Install Flutter (instructions). FVM is recommended; the repo includes `.fvmrc`

- Install dependencies: `make deps`

- Linux only: Install emoji font support for proper emoji rendering:

      # First install the Noto Color Emoji font package:
      # Debian/Ubuntu:
      sudo apt install fonts-noto-color-emoji
      # Fedora:
      sudo dnf install google-noto-emoji-color-fonts
      # Arch:
      sudo pacman -S noto-fonts-emoji
      # Then configure fontconfig:
      ./linux/install_emoji_fonts.sh

- Static analysis: `make analyze`

- Tests: `make test` • Coverage report: `make coverage`

- Code generation: `make build_runner` • Localization: `make l10n`

- Run locally: macOS `fvm flutter run -d macos` • others `flutter run -d <device>`

See DEVELOPMENT.md for detailed development setup.

- Getting Started with AI - Set up Gemini or Ollama for AI features

- Basic Task Management - Voice-to-checklist workflow guide

- Manual - How to use Lotti

- Background Story - The inspiration and evolution of Lotti

- Architecture - Technical design and AI integration

- Privacy Policy - Our commitment to your privacy

- Contributing - How to help and our standards

- Track project progress with automatic context recovery

- Document decisions and learnings with searchable audio notes

- Generate sprint summaries and retrospectives from your task data

- Maintain focus with AI-powered context switching

- Build a searchable knowledge base from daily work

- Track time and generate reports across projects

- Monitor habits and health metrics

- Reflect on achievements and learnings over time

- Keep a multilingual audio journal

See CONTRIBUTING.md.

- Frontend: Flutter (iOS, macOS, Android, Windows, Linux)

- AI Integration: Multiple providers with streaming support, including Ollama for 100% private local inference

- Audio: Local Whisper (OpenAI's open weights model) or cloud providers with multimodal audio support

- Storage: Local SQLite, no cloud storage

- Synchronization: End-to-end encrypted sync using Matrix infrastructure (requires a Matrix account)

- Testing: Comprehensive unit and integration tests
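To make the local SQLite storage point concrete, here is a minimal sketch in Python of how a question like "What did I finish in June?" could be answered from a local database without any network call. The `tasks` table, its columns, the sample rows, and the `finished_between` helper are assumptions invented for this illustration; Lotti's real schema is not described here and will differ.

```python
import sqlite3


def finished_between(conn, start, end):
    """Return (done_on, title) rows for tasks completed in [start, end)."""
    rows = conn.execute(
        "SELECT done_on, title FROM tasks "
        "WHERE status = 'done' AND done_on >= ? AND done_on < ? "
        "ORDER BY done_on",
        (start, end),
    )
    return rows.fetchall()


# In-memory database with a hypothetical schema and made-up sample rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tasks (title TEXT NOT NULL, status TEXT NOT NULL, done_on TEXT)"
)
conn.executemany(
    "INSERT INTO tasks VALUES (?, ?, ?)",
    [
        ("Write release notes", "done", "2025-06-03"),
        ("Fix sync bug", "done", "2025-06-17"),
        ("Plan Q3", "in progress", None),
        ("Update docs", "done", "2025-07-01"),
    ],
)

# ISO-8601 date strings sort lexicographically, so plain comparisons work.
june = finished_between(conn, "2025-06-01", "2025-07-01")
for done_on, title in june:
    print(done_on, title)
```

Because the query runs entirely against a file on the device, only the resulting rows (if anything) would need to be passed to an AI provider for summarization.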

Lotti represents a different approach to AI-powered productivity:

- Your data stays yours: No company should own your thoughts and experiences

- AI as a tool, not a service: Use AI capabilities without subscription lock-in

- Privacy by design: Choose exactly what to share, when, and with whom

- Future-focused: Built for the coming era of powerful local AI

Lotti is open source under LICENSE.

Special thanks to the Flutter team, OpenAI for the Whisper model, and all contributors who believe in privacy-respecting AI tools.

Building in public • Follow development here on GitHub • Read updates on Substack


Alternative AI tools for lotti

Similar Open Source Tools


meeting-minutes

An open-source AI assistant for taking meeting notes that captures live meeting audio, transcribes it in real-time, and generates summaries while ensuring user privacy. Perfect for teams to focus on discussions while automatically capturing and organizing meeting content without external servers or complex infrastructure. Features include modern UI, real-time audio capture, speaker diarization, local processing for privacy, and more. The tool also offers a Rust-based implementation for better performance and native integration, with features like live transcription, speaker diarization, and a rich text editor for notes. Future plans include database connection for saving meeting minutes, improving summarization quality, and adding download options for meeting transcriptions and summaries. The backend supports multiple LLM providers through a unified interface, with configurations for Anthropic, Groq, and Ollama models. System architecture includes core components like audio capture service, transcription engine, LLM orchestrator, data services, and API layer. Prerequisites for setup include Node.js, Python, FFmpeg, and Rust. Development guidelines emphasize project structure, testing, documentation, type hints, and ESLint configuration. Contributions are welcome under the MIT License.

obsidian-llmsider

LLMSider is an AI assistant plugin for Obsidian that offers flexible multi-model support, deep workflow integration, privacy-first design, and a professional tool ecosystem. It provides comprehensive AI capabilities for personal knowledge management, from intelligent writing assistance to complex task automation, making AI a capable assistant for thinking and creating while ensuring data privacy.

Linguflex

Linguflex is a project that aims to simulate engaging, authentic, human-like interaction with AI personalities. It offers voice-based conversation with custom characters, alongside an array of practical features such as controlling smart home devices, playing music, searching the internet, fetching emails, displaying current weather information and news, assisting in scheduling, and searching or generating images.

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies, 24/7 support, and is specialized for AI companions. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, interconnected knowledge graph, continuous self-improvement, and adaptive forgetting mechanism. It boasts high memory accuracy, fast retrieval, and low cost, making it suitable for building intelligent agents with persistent memory capabilities.

codegate

CodeGate is a local gateway that enhances the safety of AI coding assistants by ensuring AI-generated recommendations adhere to best practices, safeguarding code integrity, and protecting individual privacy. Developed by Stacklok, CodeGate allows users to confidently leverage AI in their development workflow without compromising security or productivity. It works seamlessly with coding assistants, providing real-time security analysis of AI suggestions. CodeGate is designed with privacy at its core, keeping all data on the user's machine and offering complete control over data.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

intellij-aicoder

AI Coding Assistant is a free and open-source IntelliJ plugin that leverages cutting-edge Language Model APIs to enhance developers' coding experience. It seamlessly integrates with various leading LLM APIs, offers an intuitive toolbar UI, and allows granular control over API requests. With features like Code & Patch Chat, Planning with AI Agents, Markdown visualization, and versatile text processing capabilities, this tool aims to streamline coding workflows and boost productivity.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

omniscient

Omniscient is an advanced AI Platform offered as a SaaS, empowering projects with cutting-edge artificial intelligence capabilities. Seamlessly integrating with Next.js 14, React, Typescript, and APIs like OpenAI and Replicate, it provides solutions for code generation, conversation simulation, image creation, music composition, and video generation.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

pocketpal-ai

PocketPal AI is a versatile virtual assistant tool designed to streamline daily tasks and enhance productivity. It leverages artificial intelligence technology to provide personalized assistance in managing schedules, organizing information, setting reminders, and more. With its intuitive interface and smart features, PocketPal AI aims to simplify users' lives by automating routine activities and offering proactive suggestions for optimal time management and task prioritization.

refact-vscode

Refact.ai is an open-source AI coding assistant that boosts developers' productivity. It supports 25+ programming languages and offers features like code completion, an AI Toolbox for code explanation and refactoring, integrated in-IDE chat, and a self-hosted or cloud version. The Enterprise plan provides enhanced customization, security, fine-tuning, user statistics, efficient inference, priority support, and access to 20+ LLMs for up to 50 engineers per GPU.

AudioMuse-AI

AudioMuse-AI is a deep learning-based tool for audio analysis and music generation. It provides a user-friendly interface for processing audio data and generating music compositions. The tool utilizes state-of-the-art machine learning algorithms to analyze audio signals and extract meaningful features for music generation. With AudioMuse-AI, users can explore the possibilities of AI in music creation and experiment with different styles and genres. Whether you are a music enthusiast, a researcher, or a developer, AudioMuse-AI offers a versatile platform for audio analysis and music generation.

For similar tasks

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

zep

Zep is a long-term memory service for AI Assistant apps. With Zep, you can provide AI assistants with the ability to recall past conversations, no matter how distant, while also reducing hallucinations, latency, and cost. Zep persists and recalls chat histories, and automatically generates summaries and other artifacts from these chat histories. It also embeds messages and summaries, enabling you to search Zep for relevant context from past conversations. Zep does all of this asynchronously, ensuring these operations don't impact your user's chat experience. Data is persisted to a database, allowing you to scale out when growth demands. Zep also provides a simple, easy-to-use abstraction for document vector search called Document Collections. This is designed to complement Zep's core memory features, but is not designed to be a general-purpose vector database. Zep allows you to be more intentional about constructing your prompt by: (1) automatically adding a few recent messages, with the number customized for your app; (2) adding a summary of recent conversations prior to those messages; (3) adding contextually relevant summaries or messages surfaced from the entire chat session; and/or (4) adding relevant business data from Zep Document Collections.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

mslearn-ai-language

This repository contains lab files for Azure AI Language modules. It provides hands-on exercises and resources for learning about various AI language technologies on the Azure platform. The labs cover topics such as natural language processing, text analytics, language understanding, and more. By following the exercises in this repository, users can gain practical experience in implementing AI language solutions using Azure services.

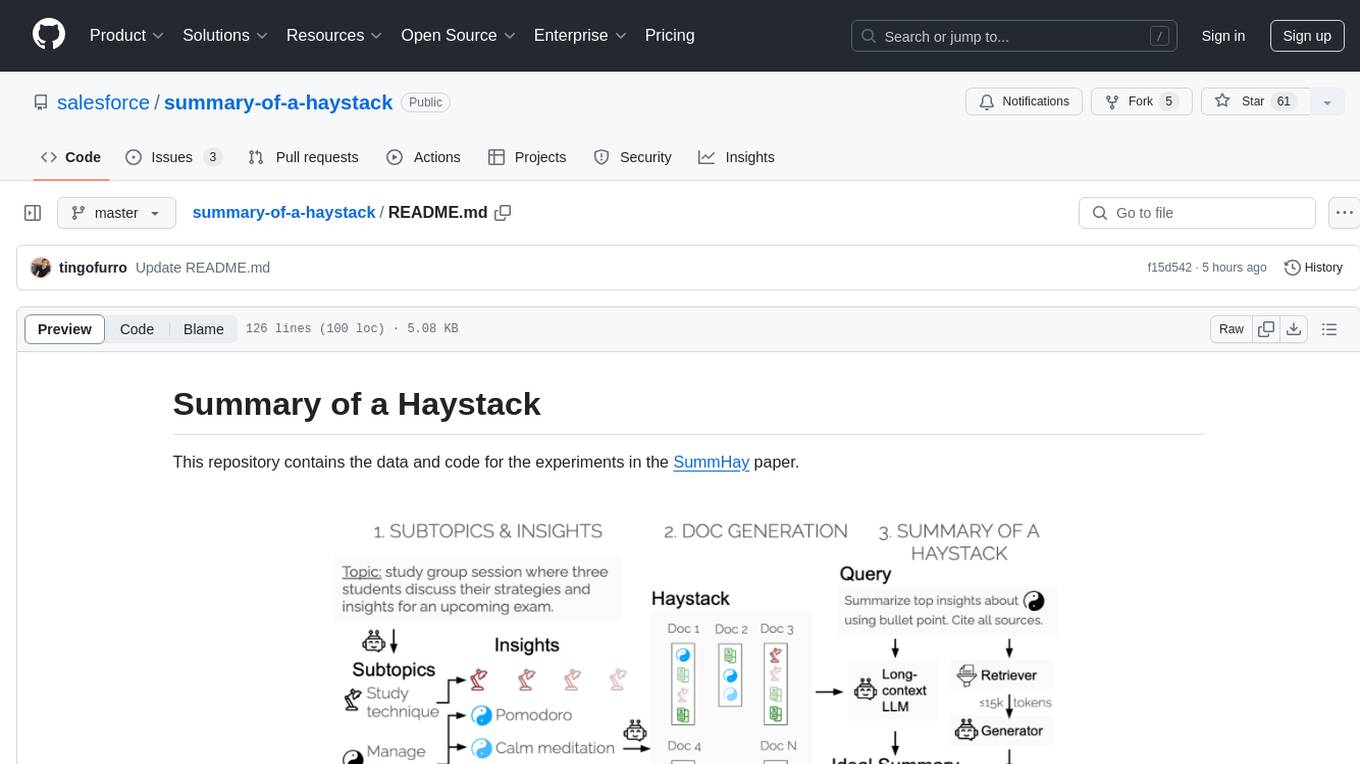

summary-of-a-haystack

This repository contains data and code for the experiments in the SummHay paper. It includes publicly released Haystacks in conversational and news domains, along with scripts for running the pipeline, visualizing results, and benchmarking automatic evaluation. The data structure includes topics, subtopics, insights, queries, retrievers, summaries, evaluation summaries, and documents. The pipeline involves scripts for retriever scores, summaries, and evaluation scores using GPT-4o. Visualization scripts are provided for compiling and visualizing results. The repository also includes annotated samples for benchmarking and citation information for the SummHay paper.

llm-book

The 'llm-book' repository is dedicated to the introduction of large-scale language models, focusing on natural language processing tasks. The code is designed to run on Google Colaboratory and utilizes datasets and models available on the Hugging Face Hub. Note that as of July 28, 2023, there are issues with the MARC-ja dataset links, but an alternative notebook using the WRIME Japanese sentiment analysis dataset has been added. The repository covers various chapters on topics such as Transformers, fine-tuning language models, entity recognition, summarization, document embedding, question answering, and more.

Controllable-RAG-Agent

This repository contains a sophisticated deterministic graph-based solution for answering complex questions using a controllable autonomous agent. The solution is designed to ensure that answers are solely based on the provided data, avoiding hallucinations. It involves various steps such as PDF loading, text preprocessing, summarization, database creation, encoding, and utilizing large language models. The algorithm follows a detailed workflow involving planning, retrieval, answering, replanning, content distillation, and performance evaluation. Heuristics and techniques implemented focus on content encoding, anonymizing questions, task breakdown, content distillation, chain of thought answering, verification, and model performance evaluation.

summarize

The 'summarize' tool is designed to transcribe and summarize videos from various sources using AI models. It helps users efficiently summarize lengthy videos, take notes, and extract key insights by providing timestamps, original transcripts, and support for auto-generated captions. Users can utilize different AI models via Groq, OpenAI, or custom local models to generate grammatically correct video transcripts and extract wisdom from video content. The tool simplifies the process of summarizing video content, making it easier to remember and reference important information.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in a version for NVIDIA GPUs ("N card"). It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It integrates with bilibili live streams to reply to bullet comments and to greet viewers entering the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expression control through Vtuber Studio. It supports image generation with stable-diffusion-webui output to the OBS live room, NSFW image detection (public-NSFW-y-distinguish), and image search via duckduckgo (requires a proxy or VPN) or Baidu image search (no proxy required). It also supports an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic idle swaying during chat and singing, multi-scene switching, background music switching, and automatic day/night scene switching. Singing and painting can be left open so that the AI decides what to do based on the content.