mcpdoc

Expose llms-txt to IDEs for development

Stars: 148

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

README:

llms.txt is a website index for LLMs, providing background information, guidance, and links to detailed markdown files. IDEs like Cursor and Windsurf or apps like Claude Code/Desktop can use llms.txt to retrieve context for tasks. However, these apps use different built-in tools to read and process files like llms.txt. The retrieval process can be opaque, and there is not always a way to audit the tool calls or the context returned.

MCP offers a way for developers to have full control over the tools used by these applications. Here, we create an open source MCP server to provide MCP host applications (e.g., Cursor, Windsurf, Claude Code/Desktop) with (1) a user-defined list of llms.txt files and (2) a simple fetch_docs tool to read URLs within any of the provided llms.txt files. This allows the user to audit each tool call as well as the context returned.
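To make the file format concrete, here is a minimal sketch of pulling the linked URLs out of an llms.txt file. The sample text and the `extract_links` helper are illustrative assumptions and not part of mcpdoc; the sketch only assumes that entries are written as ordinary markdown links.

```python
import re

# Hypothetical llms.txt content, written for illustration only.
SAMPLE_LLMS_TXT = """\
# Example Project

> Short background on the project.

## Docs

- [Quickstart](https://example.com/docs/quickstart.md): Getting started
- [Memory](https://example.com/docs/memory.md): How memory works
"""

# Matches markdown links of the form [title](url).
LINK_PATTERN = re.compile(r"\[([^\]]+)\]\((https?://[^)\s]+)\)")


def extract_links(text: str) -> list[tuple[str, str]]:
    """Return (title, url) pairs for every markdown link in the text."""
    return LINK_PATTERN.findall(text)


links = extract_links(SAMPLE_LLMS_TXT)
for title, url in links:
    print(f"{title}: {url}")
```

Each extracted URL is the kind of target a fetch_docs call would then read.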

You can find llms.txt files for langgraph and langchain here:

| Library | llms.txt |
|---|---|
| LangGraph Python | https://langchain-ai.github.io/langgraph/llms.txt |
| LangGraph JS | https://langchain-ai.github.io/langgraphjs/llms.txt |
| LangChain Python | https://python.langchain.com/llms.txt |
| LangChain JS | https://js.langchain.com/llms.txt |

- Please see the official uv docs for other ways to install `uv`.

curl -LsSf https://astral.sh/uv/install.sh | sh

- For example, here's the LangGraph `llms.txt` file.

Note: Security and Domain Access Control

For security reasons, mcpdoc implements strict domain access controls:

- Remote llms.txt files: When you specify a remote llms.txt URL (e.g., `https://langchain-ai.github.io/langgraph/llms.txt`), mcpdoc automatically adds only that specific domain (`langchain-ai.github.io`) to the allowed domains list. This means the tool can only fetch documentation from URLs on that domain.
- Local llms.txt files: When using a local file, NO domains are automatically added to the allowed list. You MUST explicitly specify which domains to allow using the `--allowed-domains` parameter.
- Adding additional domains: To allow fetching from domains beyond those automatically included:
  - Use `--allowed-domains domain1.com domain2.com` to add specific domains
  - Use `--allowed-domains '*'` to allow all domains (use with caution)

This security measure prevents unauthorized access to domains not explicitly approved by the user, ensuring that documentation can only be retrieved from trusted sources.
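The allow-list behavior described in this note can be sketched in a few lines. This is an illustration of the stated rules under simple assumptions, not mcpdoc's actual implementation; `build_allowed_domains` and `is_allowed` are hypothetical helper names, and `./docs/llms.txt` is a made-up local path.

```python
from urllib.parse import urlparse


def build_allowed_domains(sources: list[str], extra: list[str]) -> set[str]:
    """Collect allowed domains from remote llms.txt sources plus explicit extras."""
    allowed = set(extra)
    for source in sources:
        parsed = urlparse(source)
        # Only remote sources contribute a domain; local paths add nothing.
        if parsed.scheme in ("http", "https") and parsed.netloc:
            allowed.add(parsed.netloc)
    return allowed


def is_allowed(url: str, allowed: set[str]) -> bool:
    """Check a URL's domain against the allow-list; '*' permits every domain."""
    return "*" in allowed or urlparse(url).netloc in allowed


# A remote llms.txt contributes exactly its own domain.
remote = build_allowed_domains(
    ["https://langchain-ai.github.io/langgraph/llms.txt"], []
)
# A local file contributes no domains at all.
local = build_allowed_domains(["./docs/llms.txt"], [])
```

Under this sketch a local source yields an empty allow-list, which matches the requirement to pass `--allowed-domains` explicitly in that case.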

uvx --from mcpdoc mcpdoc \
    --urls LangGraph:https://langchain-ai.github.io/langgraph/llms.txt \
    --urls LangChain:https://python.langchain.com/llms.txt \
    --transport sse \
    --port 8082 \
    --host localhost

- This should run at: http://localhost:8082

- Run MCP inspector and connect to the running server:

npx @modelcontextprotocol/inspector

- Here, you can test the `tool` calls.

- Open `Cursor Settings` and the `MCP` tab.
- This will open the `~/.cursor/mcp.json` file.
- Paste the following into the file (we use the `langgraph-docs-mcp` name and link to the LangGraph `llms.txt`).

{
  "mcpServers": {
    "langgraph-docs-mcp": {
      "command": "uvx",
      "args": [
        "--from",
        "mcpdoc",
        "mcpdoc",
        "--urls",
        "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt",
        "--urls",
        "LangChain:https://python.langchain.com/llms.txt",
        "--transport",
        "stdio"
      ]
    }
  }
}

- Confirm that the server is running in your `Cursor Settings/MCP` tab.
- Best practice is to then update Cursor Global (User) rules.
- Open Cursor `Settings/Rules` and update `User Rules` with the following (or similar):

for ANY question about LangGraph, use the langgraph-docs-mcp server to help answer --

+ call list_doc_sources tool to get the available llms.txt file

+ call fetch_docs tool to read it

+ reflect on the urls in llms.txt

+ reflect on the input question

+ call fetch_docs on any urls relevant to the question

+ use this to answer the question

- `CMD+L` (on Mac) to open chat.
- Ensure `agent` is selected.

Then, try an example prompt, such as:

what are types of memory in LangGraph?
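For reference, the `langgraph-docs-mcp` entry pasted into `~/.cursor/mcp.json` above can also be generated with a short script, which may help when the list of llms.txt sources changes often. This is only a sketch: `build_mcp_config` is a hypothetical helper, and it simply reproduces the structure of the JSON shown above.

```python
import json


def build_mcp_config(server_name: str, sources: dict[str, str]) -> dict:
    """Build an mcpServers entry that launches mcpdoc through uvx over stdio."""
    args = ["--from", "mcpdoc", "mcpdoc"]
    for name, url in sources.items():
        # Each source becomes its own --urls flag in name:url form.
        args += ["--urls", f"{name}:{url}"]
    args += ["--transport", "stdio"]
    return {"mcpServers": {server_name: {"command": "uvx", "args": args}}}


config = build_mcp_config(
    "langgraph-docs-mcp",
    {
        "LangGraph": "https://langchain-ai.github.io/langgraph/llms.txt",
        "LangChain": "https://python.langchain.com/llms.txt",
    },
)
print(json.dumps(config, indent=2))
```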

- Open Cascade with `CMD+L` (on Mac).
- Click `Configure MCP` to open the config file, `~/.codeium/windsurf/mcp_config.json`.
- Update with `langgraph-docs-mcp` as noted above.
- Update `Windsurf Rules/Global rules` with the following (or similar):

for ANY question about LangGraph, use the langgraph-docs-mcp server to help answer --

+ call list_doc_sources tool to get the available llms.txt file

+ call fetch_docs tool to read it

+ reflect on the urls in llms.txt

+ reflect on the input question

+ call fetch_docs on any urls relevant to the question

Then, try the example prompt:

- It will perform your tool calls.

- Open `Settings/Developer` to update `~/Library/Application\ Support/Claude/claude_desktop_config.json`.
- Update with `langgraph-docs-mcp` as noted above.
- Restart Claude Desktop app.

[!Note] If you run into issues with Python version incompatibility when trying to add MCPDoc tools to Claude Desktop, you can explicitly specify the filepath to `python` executable in the `uvx` command.

Example configuration

{
  "mcpServers": {
    "langgraph-docs-mcp": {
      "command": "uvx",
      "args": [
        "--python",
        "/path/to/python",
        "--from",
        "mcpdoc",
        "mcpdoc",
        "--urls",
        "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt",
        "--transport",
        "stdio"
      ]
    }
  }
}

[!Note] Currently (3/21/25) it appears that Claude Desktop does not support `rules` for global rules, so append the following to your prompt.

<rules>

for ANY question about LangGraph, use the langgraph-docs-mcp server to help answer --

+ call list_doc_sources tool to get the available llms.txt file

+ call fetch_docs tool to read it

+ reflect on the urls in llms.txt

+ reflect on the input question

+ call fetch_docs on any urls relevant to the question

</rules>

- You will see your tools visible in the bottom right of your chat input.

Then, try the example prompt:

- It will ask to approve tool calls as it processes your request.

- In a terminal after installing Claude Code, run this command to add the MCP server to your project:

claude mcp add-json langgraph-docs '{"type":"stdio","command":"uvx" ,"args":["--from", "mcpdoc", "mcpdoc", "--urls", "langgraph:https://langchain-ai.github.io/langgraph/llms.txt", "--urls", "LangChain:https://python.langchain.com/llms.txt"]}' -s local

- You will see `~/.claude.json` updated.
- Test by launching Claude Code and running `/mcp` to view your tools:

$ claude
$ /mcp

[!Note] Currently (3/21/25) it appears that Claude Code does not support `rules` for global rules, so append the following to your prompt.

<rules>

for ANY question about LangGraph, use the langgraph-docs-mcp server to help answer --

+ call list_doc_sources tool to get the available llms.txt file

+ call fetch_docs tool to read it

+ reflect on the urls in llms.txt

+ reflect on the input question

+ call fetch_docs on any urls relevant to the question

</rules>

Then, try the example prompt:

- It will ask to approve tool calls.
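The rules used in each of the sections above describe a fixed sequence of tool calls. The sketch below walks through that sequence with in-memory stand-ins so the order of operations is easy to follow. Only the tool names `list_doc_sources` and `fetch_docs` come from this README; the function bodies, the `FAKE_WEB` data, and the keyword match used for the "reflect" steps are assumptions for illustration. In a real host application the model itself judges which URLs are relevant.

```python
import re

# In-memory stand-in for the web; all URLs and text are made up for illustration.
FAKE_WEB = {
    "https://example.com/llms.txt": (
        "- [Memory](https://example.com/memory.md): Short-term and long-term memory\n"
        "- [Streaming](https://example.com/streaming.md): Streaming outputs\n"
    ),
    "https://example.com/memory.md": "Memory page content.",
    "https://example.com/streaming.md": "Streaming page content.",
}


def list_doc_sources() -> list[str]:
    """Stand-in for the list_doc_sources tool: return the configured llms.txt URLs."""
    return ["https://example.com/llms.txt"]


def fetch_docs(url: str) -> str:
    """Stand-in for the fetch_docs tool: return the content behind a URL."""
    return FAKE_WEB[url]


def answer_context(question: str) -> list[str]:
    """Follow the rule steps and return the pages relevant to the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    pages = []
    for source in list_doc_sources():  # call list_doc_sources
        index = fetch_docs(source)  # call fetch_docs to read llms.txt
        # Reflect on the URLs in llms.txt and on the question (crude keyword match).
        for title, url in re.findall(r"\[([^\]]+)\]\(([^)]+)\)", index):
            if title.lower() in words:
                pages.append(fetch_docs(url))  # call fetch_docs on relevant URLs
    return pages


context = answer_context("what are types of memory in LangGraph?")
print(context)
```

Because every step goes through one of the two tools, each call and its returned context can be inspected, which is the auditing property the server is meant to provide.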

The mcpdoc command provides a simple CLI for launching the documentation server.

You can specify documentation sources in three ways, and these can be combined:

- Using a YAML config file:
  - This will load the LangGraph Python documentation from the `sample_config.yaml` file in this repo.

mcpdoc --yaml sample_config.yaml

- Using a JSON config file:
  - This will load the LangGraph Python documentation from the `sample_config.json` file in this repo.

mcpdoc --json sample_config.json

- Directly specifying llms.txt URLs with optional names:
  - URLs can be specified either as plain URLs or with optional names using the format `name:url`.
  - You can specify multiple URLs by using the `--urls` parameter multiple times.
  - This is how we loaded `llms.txt` for the MCP server above.

mcpdoc --urls LangGraph:https://langchain-ai.github.io/langgraph/llms.txt --urls LangChain:https://python.langchain.com/llms.txt

You can also combine these methods to merge documentation sources:

mcpdoc --yaml sample_config.yaml --json sample_config.json --urls LangGraph:https://langchain-ai.github.io/langgraph/llms.txt --urls LangChain:https://python.langchain.com/llms.txt

- `--follow-redirects`: Follow HTTP redirects (defaults to False)
- `--timeout SECONDS`: HTTP request timeout in seconds (defaults to 10.0)

Example with additional options:

mcpdoc --yaml sample_config.yaml --follow-redirects --timeout 15

This will load the LangGraph Python documentation with a 15-second timeout and follow any HTTP redirects if necessary.
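Since a URL already contains a colon, the `name:url` form needs a little care when split. The following is a minimal sketch of one way to separate the two cases; `split_url_entry` is a hypothetical helper and is not claimed to match how mcpdoc parses `--urls` internally. It assumes remote `http`/`https` sources only.

```python
from typing import Optional


def split_url_entry(entry: str) -> tuple[Optional[str], str]:
    """Split a --urls value into (name, url); name is None for a plain URL."""
    # A plain URL starts with its scheme, so it carries no name prefix.
    if entry.startswith(("http://", "https://")):
        return None, entry
    # Otherwise the text before the first colon is treated as the name.
    name, sep, url = entry.partition(":")
    if not sep or not url:
        raise ValueError(f"expected 'name:url' or a plain URL, got {entry!r}")
    return name, url


named = split_url_entry("LangGraph:https://langchain-ai.github.io/langgraph/llms.txt")
plain = split_url_entry("https://python.langchain.com/llms.txt")
print(named)
print(plain)
```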

Both YAML and JSON configuration files should contain a list of documentation sources.

Each source must include an llms_txt URL and can optionally include a name:

# Sample configuration for mcp-mcpdoc server
# Each entry must have a llms_txt URL and optionally a name
- name: LangGraph Python
  llms_txt: https://langchain-ai.github.io/langgraph/llms.txt

[
  {
    "name": "LangGraph Python",
    "llms_txt": "https://langchain-ai.github.io/langgraph/llms.txt"
  }
]

from mcpdoc.main import create_server

# Create a server with documentation sources
server = create_server(
    [
        {
            "name": "LangGraph Python",
            "llms_txt": "https://langchain-ai.github.io/langgraph/llms.txt",
        },
        # You can add multiple documentation sources
        # {
        #     "name": "Another Documentation",
        #     "llms_txt": "https://example.com/llms.txt",
        # },
    ],
    follow_redirects=True,
    timeout=15.0,
)

# Run the server
server.run(transport="stdio")
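The configuration format described above (a list of entries, each with a required `llms_txt` and an optional `name`) can be checked before a server is started. This is a minimal sketch using only the standard library; `load_sources` is a hypothetical helper, and the check covers the JSON form only.

```python
import json


def load_sources(raw: str) -> list[dict]:
    """Parse a JSON config string and check each entry has an llms_txt URL."""
    sources = json.loads(raw)
    if not isinstance(sources, list):
        raise ValueError("config must be a list of documentation sources")
    for i, source in enumerate(sources):
        if not isinstance(source, dict) or "llms_txt" not in source:
            raise ValueError(f"entry {i} is missing the required 'llms_txt' key")
    return sources


raw_config = (
    '[{"name": "LangGraph Python", '
    '"llms_txt": "https://langchain-ai.github.io/langgraph/llms.txt"}]'
)
sources = load_sources(raw_config)
print(sources)
```

The returned list has the same shape as the list of dictionaries passed to `create_server` in the example above.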

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for mcpdoc

Similar Open Source Tools

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

mcp-victoriametrics

The VictoriaMetrics MCP Server is an implementation of Model Context Protocol (MCP) server for VictoriaMetrics. It provides access to your VictoriaMetrics instance and seamless integration with VictoriaMetrics APIs and documentation. The server allows you to use almost all read-only APIs of VictoriaMetrics, enabling monitoring, observability, and debugging tasks related to your VictoriaMetrics instances. It also contains embedded up-to-date documentation and tools for exploring metrics, labels, alerts, and more. The server can be used for advanced automation and interaction capabilities for engineers and tools.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

mLoRA

mLoRA (Multi-LoRA Fine-Tune) is an open-source framework for efficient fine-tuning of multiple Large Language Models (LLMs) using LoRA and its variants. It allows concurrent fine-tuning of multiple LoRA adapters with a shared base model, efficient pipeline parallelism algorithm, support for various LoRA variant algorithms, and reinforcement learning preference alignment algorithms. mLoRA helps save computational and memory resources when training multiple adapters simultaneously, achieving high performance on consumer hardware.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

claude-task-master

Claude Task Master is a task management system designed for AI-driven development with Claude, seamlessly integrating with Cursor AI. It allows users to configure tasks through environment variables, parse PRD documents, generate structured tasks with dependencies and priorities, and manage task status. The tool supports task expansion, complexity analysis, and smart task recommendations. Users can interact with the system through CLI commands for task discovery, implementation, verification, and completion. It offers features like task breakdown, dependency management, and AI-driven task generation, providing a structured workflow for efficient development.

ai-gateway

LangDB AI Gateway is an open-source enterprise AI gateway built in Rust. It provides a unified interface to all LLMs using the OpenAI API format, focusing on high performance, enterprise readiness, and data control. The gateway offers features like comprehensive usage analytics, cost tracking, rate limiting, data ownership, and detailed logging. It supports various LLM providers and provides OpenAI-compatible endpoints for chat completions, model listing, embeddings generation, and image generation. Users can configure advanced settings, such as rate limiting, cost control, dynamic model routing, and observability with OpenTelemetry tracing. The gateway can be run with Docker Compose and integrated with MCP tools for server communication.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

genai-toolbox

Gen AI Toolbox for Databases is an open source server that simplifies building Gen AI tools for interacting with databases. It handles complexities like connection pooling, authentication, and more, enabling easier, faster, and more secure tool development. The toolbox sits between the application's orchestration framework and the database, providing a control plane to modify, distribute, or invoke tools. It offers simplified development, better performance, enhanced security, and end-to-end observability. Users can install the toolbox as a binary, container image, or compile from source. Configuration is done through a 'tools.yaml' file, defining sources, tools, and toolsets. The project follows semantic versioning and welcomes contributions.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

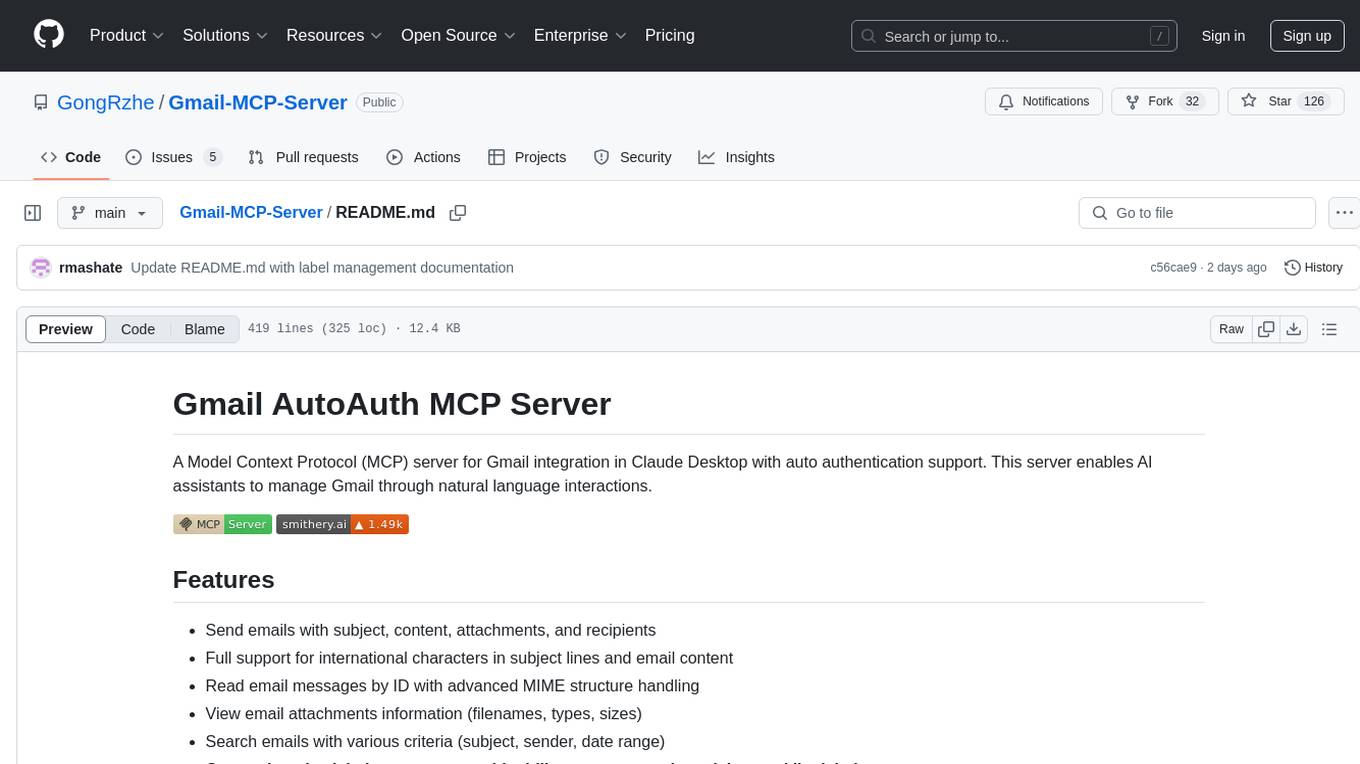

Gmail-MCP-Server

Gmail AutoAuth MCP Server is a Model Context Protocol (MCP) server designed for Gmail integration in Claude Desktop. It supports auto authentication and enables AI assistants to manage Gmail through natural language interactions. The server provides comprehensive features for sending emails, reading messages, managing labels, searching emails, and batch operations. It offers full support for international characters, email attachments, and Gmail API integration. Users can install and authenticate the server via Smithery or manually with Google Cloud Project credentials. The server supports both Desktop and Web application credentials, with global credential storage for convenience. It also includes Docker support and instructions for cloud server authentication.

For similar tasks

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

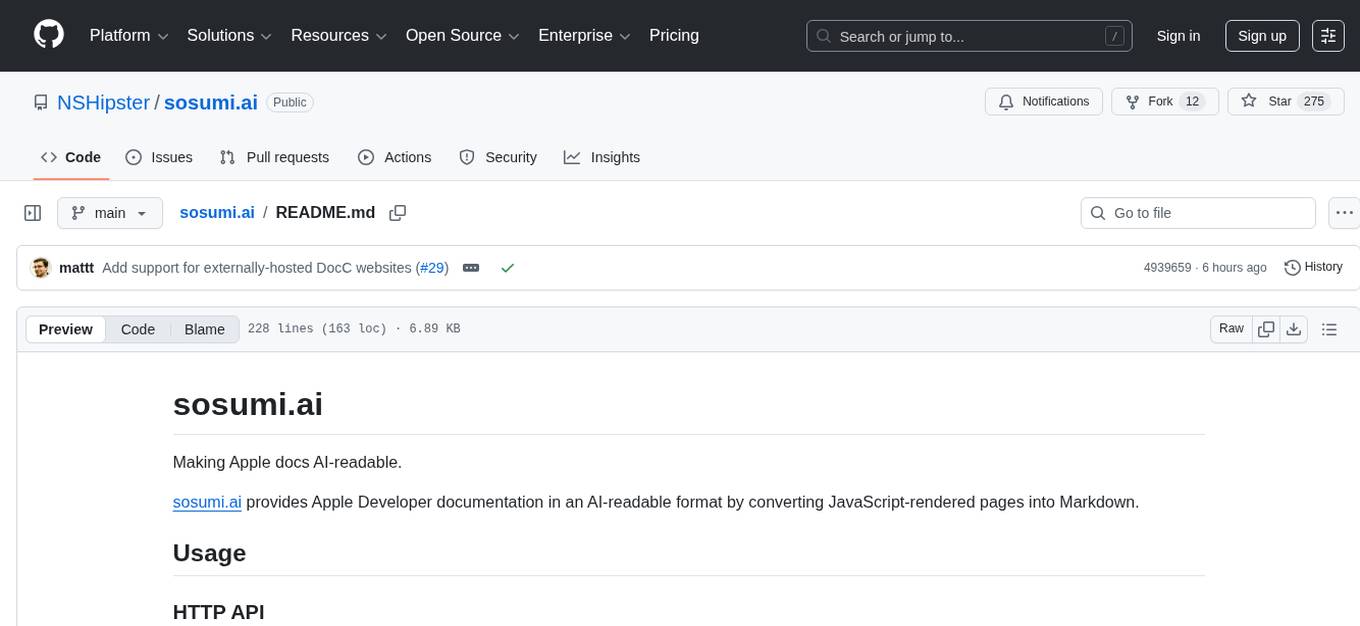

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

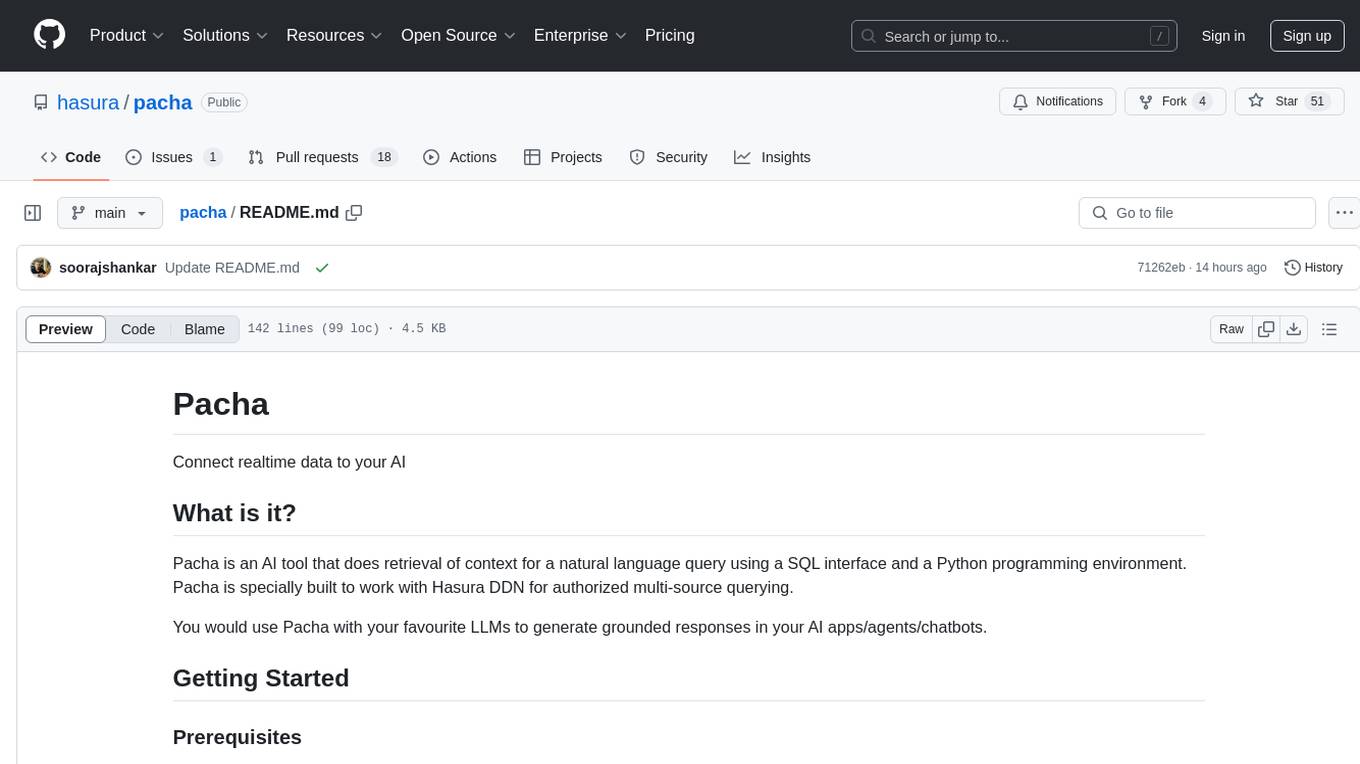

pacha

Pacha is an AI tool designed for retrieving context for natural language queries using a SQL interface and Python programming environment. It is optimized for working with Hasura DDN for multi-source querying. Pacha is used in conjunction with language models to produce informed responses in AI applications, agents, and chatbots.

superagentx

SuperAgentX is a lightweight open-source AI framework designed for multi-agent applications with Artificial General Intelligence (AGI) capabilities. It offers goal-oriented multi-agents with retry mechanisms, easy deployment through WebSocket, RESTful API, and IO console interfaces, streamlined architecture with no major dependencies, contextual memory using SQL + Vector databases, flexible LLM configuration supporting various Gen AI models, and extendable handlers for integration with diverse APIs and data sources. It aims to accelerate the development of AGI by providing a powerful platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

chatmemory

ChatMemory is a simple yet powerful long-term memory manager that facilitates communication between AI and users. It organizes conversation data into history, summary, and knowledge entities, enabling quick retrieval of context and generation of clear, concise answers. The tool leverages vector search on summaries/knowledge and detailed history to provide accurate responses. It balances speed and accuracy by using lightweight retrieval and fallback detailed search mechanisms, ensuring efficient memory management and response generation beyond mere data retrieval.

mem0-chrome-extension

Mem0 Chrome Extension is a tool that enhances AI interactions by providing a universal memory layer across various AI assistants. It allows users to seamlessly share context, automatically capture relevant information, and retrieve memories intelligently. The extension offers features like one-click sync with existing ChatGPT memories and a memory dashboard for easy management. Users can install the extension in Google Chrome, sign in with Google, and start using it with supported AI assistants. Mem0 is free to use with no usage limits or ads, and it prioritizes privacy and data security by sending messages to the Mem0 API for memory extraction and retrieval.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.