AirBnB_clone_v2

None

Stars: 98

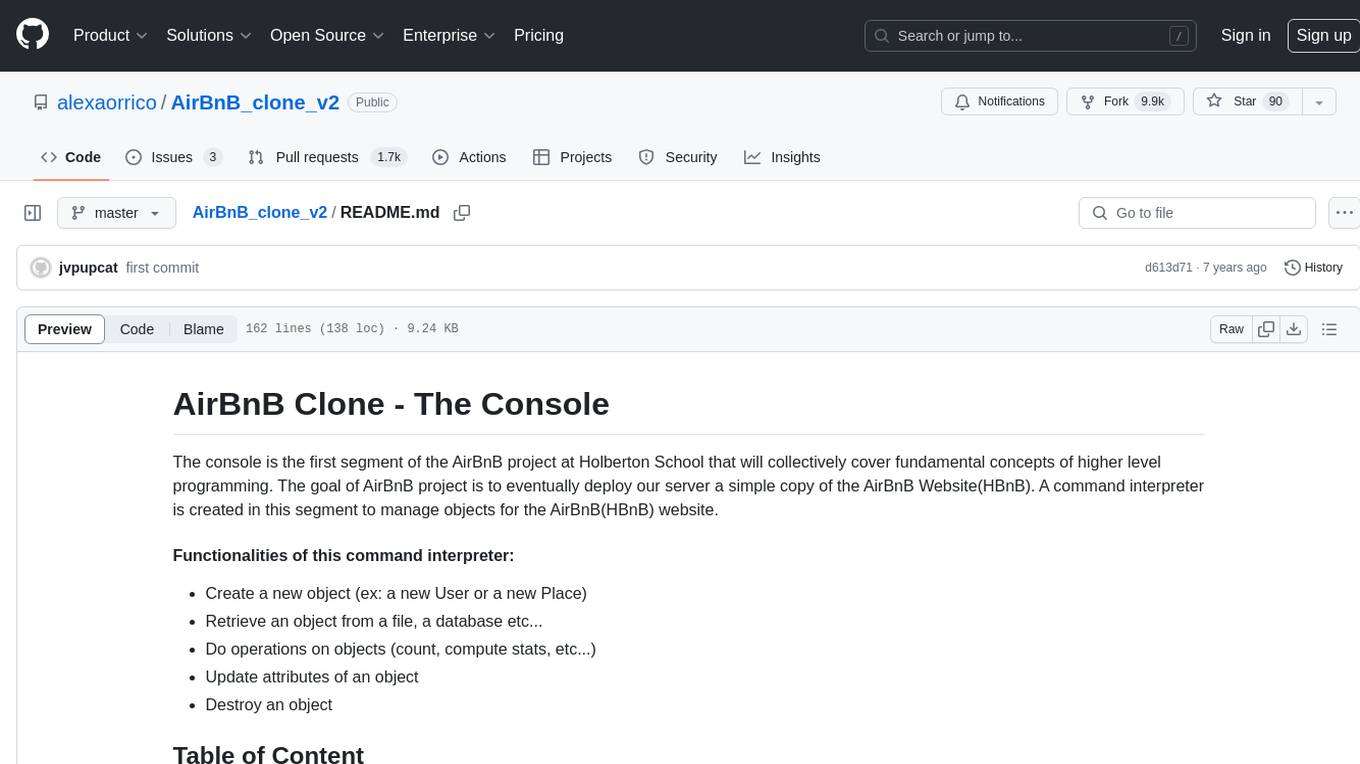

The AirBnB Clone - The Console project is the first segment of the AirBnB project at Holberton School, aiming to cover fundamental concepts of higher level programming. The goal is to deploy a server as a simple copy of the AirBnB Website (HBnB). The project includes a command interpreter to manage objects for the AirBnB website, allowing users to create new objects, retrieve objects, perform operations on objects, update object attributes, and destroy objects. The project is interpreted/tested on Ubuntu 14.04 LTS using Python 3.4.3.

README:

The console is the first segment of the AirBnB project at Holberton School that will collectively cover fundamental concepts of higher level programming. The goal of AirBnB project is to eventually deploy our server a simple copy of the AirBnB Website(HBnB). A command interpreter is created in this segment to manage objects for the AirBnB(HBnB) website.

- Create a new object (ex: a new User or a new Place)

- Retrieve an object from a file, a database etc...

- Do operations on objects (count, compute stats, etc...)

- Update attributes of an object

- Destroy an object

This project is interpreted/tested on Ubuntu 14.04 LTS using python3 (version 3.4.3)

- Clone this repository:

git clone "https://github.com/alexaorrico/AirBnB_clone.git" - Access AirBnb directory:

cd AirBnB_clone - Run hbnb(interactively):

./consoleand enter command - Run hbnb(non-interactively):

echo "<command>" | ./console.py

console.py - the console contains the entry point of the command interpreter. List of commands this console current supports:

-

EOF- exits console -

quit- exits console -

<emptyline>- overwrites default emptyline method and does nothing -

create- Creates a new instance ofBaseModel, saves it (to the JSON file) and prints the id -

destroy- Deletes an instance based on the class name and id (save the change into the JSON file). -

show- Prints the string representation of an instance based on the class name and id. -

all- Prints all string representation of all instances based or not on the class name. -

update- Updates an instance based on the class name and id by adding or updating attribute (save the change into the JSON file).

base_model.py - The BaseModel class from which future classes will be derived

-

def __init__(self, *args, **kwargs)- Initialization of the base model -

def __str__(self)- String representation of the BaseModel class -

def save(self)- Updates the attributeupdated_atwith the current datetime -

def to_dict(self)- returns a dictionary containing all keys/values of the instance

Classes inherited from Base Model:

/models/engine directory contains File Storage class that handles JASON serialization and deserialization :

file_storage.py - serializes instances to a JSON file & deserializes back to instances

-

def all(self)- returns the dictionary __objects -

def new(self, obj)- sets in __objects the obj with key .id -

def save(self)- serializes __objects to the JSON file (path: __file_path) -

def reload(self)- deserializes the JSON file to __objects

/test_models/test_base_model.py - Contains the TestBaseModel and TestBaseModelDocs classes TestBaseModelDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_base_model(self)- Test that models/base_model.py conforms to PEP8 -

def test_pep8_conformance_test_base_model(self)- Test that tests/test_models/test_base_model.py conforms to PEP8 -

def test_bm_module_docstring(self)- Test for the base_model.py module docstring -

def test_bm_class_docstring(self)- Test for the BaseModel class docstring -

def test_bm_func_docstrings(self)- Test for the presence of docstrings in BaseModel methods

TestBaseModel class:

-

def test_is_base_model(self)- Test that the instatiation of a BaseModel works -

def test_created_at_instantiation(self)- Test created_at is a pub. instance attribute of type datetime -

def test_updated_at_instantiation(self)- Test updated_at is a pub. instance attribute of type datetime -

def test_diff_datetime_objs(self)- Test that two BaseModel instances have different datetime objects

/test_models/test_amenity.py - Contains the TestAmenityDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_amenity(self)- Test that models/amenity.py conforms to PEP8 -

def test_pep8_conformance_test_amenity(self)- Test that tests/test_models/test_amenity.py conforms to PEP8 -

def test_amenity_module_docstring(self)- Test for the amenity.py module docstring -

def test_amenity_class_docstring(self)- Test for the Amenity class docstring

/test_models/test_city.py - Contains the TestCityDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_city(self)- Test that models/city.py conforms to PEP8 -

def test_pep8_conformance_test_city(self)- Test that tests/test_models/test_city.py conforms to PEP8 -

def test_city_module_docstring(self)- Test for the city.py module docstring -

def test_city_class_docstring(self)- Test for the City class docstring

/test_models/test_file_storage.py - Contains the TestFileStorageDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_file_storage(self)- Test that models/file_storage.py conforms to PEP8 -

def test_pep8_conformance_test_file_storage(self)- Test that tests/test_models/test_file_storage.py conforms to PEP8 -

def test_file_storage_module_docstring(self)- Test for the file_storage.py module docstring -

def test_file_storage_class_docstring(self)- Test for the FileStorage class docstring

/test_models/test_place.py - Contains the TestPlaceDoc class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_place(self)- Test that models/place.py conforms to PEP8. -

def test_pep8_conformance_test_place(self)- Test that tests/test_models/test_place.py conforms to PEP8. -

def test_place_module_docstring(self)- Test for the place.py module docstring -

def test_place_class_docstring(self)- Test for the Place class docstring

/test_models/test_review.py - Contains the TestReviewDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_review(self)- Test that models/review.py conforms to PEP8 -

def test_pep8_conformance_test_review(self)- Test that tests/test_models/test_review.py conforms to PEP8 -

def test_review_module_docstring(self)- Test for the review.py module docstring -

def test_review_class_docstring(self)- Test for the Review class docstring

/test_models/state.py - Contains the TestStateDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_state(self)- Test that models/state.py conforms to PEP8 -

def test_pep8_conformance_test_state(self)- Test that tests/test_models/test_state.py conforms to PEP8 -

def test_state_module_docstring(self)- Test for the state.py module docstring -

def test_state_class_docstring(self)- Test for the State class docstring

/test_models/user.py - Contains the TestUserDocs class:

-

def setUpClass(cls)- Set up for the doc tests -

def test_pep8_conformance_user(self)- Test that models/user.py conforms to PEP8 -

def test_pep8_conformance_test_user(self)- Test that tests/test_models/test_user.py conforms to PEP8 -

def test_user_module_docstring(self)- Test for the user.py module docstring -

def test_user_class_docstring(self)- Test for the User class docstring

vagrantAirBnB_clone$./console.py

(hbnb) help

Documented commands (type help <topic>):

========================================

EOF all create destroy help quit show update

(hbnb) all MyModel

** class doesn't exist **

(hbnb) create BaseModel

7da56403-cc45-4f1c-ad32-bfafeb2bb050

(hbnb) all BaseModel

[[BaseModel] (7da56403-cc45-4f1c-ad32-bfafeb2bb050) {'updated_at': datetime.datetime(2017, 9, 28, 9, 50, 46, 772167), 'id': '7da56403-cc45-4f1c-ad32-bfafeb2bb050', 'created_at': datetime.datetime(2017, 9, 28, 9, 50, 46, 772123)}]

(hbnb) show BaseModel 7da56403-cc45-4f1c-ad32-bfafeb2bb050

[BaseModel] (7da56403-cc45-4f1c-ad32-bfafeb2bb050) {'updated_at': datetime.datetime(2017, 9, 28, 9, 50, 46, 772167), 'id': '7da56403-cc45-4f1c-ad32-bfafeb2bb050', 'created_at': datetime.datetime(2017, 9, 28, 9, 50, 46, 772123)}

(hbnb) destroy BaseModel 7da56403-cc45-4f1c-ad32-bfafeb2bb050

(hbnb) show BaseModel 7da56403-cc45-4f1c-ad32-bfafeb2bb050

** no instance found **

(hbnb) quit

No known bugs at this time.

Alexa Orrico - Github / Twitter

Jennifer Huang - Github / Twitter

Second part of Airbnb: Joann Vuong

Public Domain. No copy write protection.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AirBnB_clone_v2

Similar Open Source Tools

AirBnB_clone_v2

The AirBnB Clone - The Console project is the first segment of the AirBnB project at Holberton School, aiming to cover fundamental concepts of higher level programming. The goal is to deploy a server as a simple copy of the AirBnB Website (HBnB). The project includes a command interpreter to manage objects for the AirBnB website, allowing users to create new objects, retrieve objects, perform operations on objects, update object attributes, and destroy objects. The project is interpreted/tested on Ubuntu 14.04 LTS using Python 3.4.3.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

mediasoup-client-aiortc

mediasoup-client-aiortc is a handler for the aiortc Python library, allowing Node.js applications to connect to a mediasoup server using WebRTC for real-time audio, video, and DataChannel communication. It facilitates the creation of Worker instances to manage Python subprocesses, obtain audio/video tracks, and create mediasoup-client handlers. The tool supports features like getUserMedia, handlerFactory creation, and event handling for subprocess closure and unexpected termination. It provides custom classes for media stream and track constraints, enabling diverse audio/video sources like devices, files, or URLs. The tool enhances WebRTC capabilities in Node.js applications through seamless Python subprocess communication.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

langgraph4j

Langgraph4j is a Java library for language processing tasks such as text classification, sentiment analysis, and named entity recognition. It provides a set of tools and algorithms for analyzing text data and extracting useful information. The library is designed to be efficient and easy to use, making it suitable for both research and production applications.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

DeepPavlov

DeepPavlov is an open-source conversational AI library built on PyTorch. It is designed for the development of production-ready chatbots and complex conversational systems, as well as for research in the area of NLP and dialog systems. The library offers a wide range of models for tasks such as Named Entity Recognition, Intent/Sentence Classification, Question Answering, Sentence Similarity/Ranking, Syntactic Parsing, and more. DeepPavlov also provides embeddings like BERT, ELMo, and FastText for various languages, along with AutoML capabilities and integrations with REST API, Socket API, and Amazon AWS.

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

For similar tasks

AirBnB_clone_v2

The AirBnB Clone - The Console project is the first segment of the AirBnB project at Holberton School, aiming to cover fundamental concepts of higher level programming. The goal is to deploy a server as a simple copy of the AirBnB Website (HBnB). The project includes a command interpreter to manage objects for the AirBnB website, allowing users to create new objects, retrieve objects, perform operations on objects, update object attributes, and destroy objects. The project is interpreted/tested on Ubuntu 14.04 LTS using Python 3.4.3.

db-ally

db-ally is a library for creating natural language interfaces to data sources. It allows developers to outline specific use cases for a large language model (LLM) to handle, detailing the desired data format and the possible operations to fetch this data. db-ally effectively shields the complexity of the underlying data source from the model, presenting only the essential information needed for solving the specific use cases. Instead of generating arbitrary SQL, the model is asked to generate responses in a simplified query language.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

ask-astro

Ask Astro is an open-source reference implementation of Andreessen Horowitz's LLM Application Architecture built by Astronomer. It provides an end-to-end example of a Q&A LLM application used to answer questions about Apache Airflow® and Astronomer. Ask Astro includes Airflow DAGs for data ingestion, an API for business logic, a Slack bot, a public UI, and DAGs for processing user feedback. The tool is divided into data retrieval & embedding, prompt orchestration, and feedback loops.

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

mcp-server-qdrant

The mcp-server-qdrant repository is an official Model Context Protocol (MCP) server designed for keeping and retrieving memories in the Qdrant vector search engine. It acts as a semantic memory layer on top of the Qdrant database. The server provides tools like 'qdrant-store' for storing information in the database and 'qdrant-find' for retrieving relevant information. Configuration is done using environment variables, and the server supports different transport protocols. It can be installed using 'uvx' or Docker, and can also be installed via Smithery for Claude Desktop. The server can be used with Cursor/Windsurf as a code search tool by customizing tool descriptions. It can store code snippets and help developers find specific implementations or usage patterns. The repository is licensed under the Apache License 2.0.

turing

Viglet Turing is an enterprise search platform that combines semantic navigation, chatbots, and generative artificial intelligence. It offers integrations for authentication APIs, OCR, content indexing, CMS connectors, web crawling, database connectors, and file system indexing.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.