LLMSys-PaperList

Large Language Model (LLM) Systems Paper List

Stars: 869

This repository provides a comprehensive list of academic papers, articles, tutorials, slides, and projects related to Large Language Model (LLM) systems. It covers various aspects of LLM research, including pre-training, serving, system efficiency optimization, multi-modal systems, image generation systems, LLM applications in systems, ML systems, survey papers, LLM benchmarks and leaderboards, and other relevant resources. The repository is regularly updated to include the latest developments in this rapidly evolving field, making it a valuable resource for researchers, practitioners, and anyone interested in staying abreast of the advancements in LLM technology.

README:

A curated list of academic papers, articles, tutorials, slides, and projects related to Large Language Model systems. Star this repository to keep abreast of the latest developments in this booming research field.

- LLM Systems

- LLM for Systems

- ML Systems

- Survey Paper

- LLM Benchmark / Leaderboard / Traces

- LLM Frameworks

- Related ML Readings

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism

- Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM

- Reducing Activation Recomputation in Large Transformer Models

- Optimized Network Architectures for Large Language Model Training with Billions of Parameters | MIT

- Carbon Emissions and Large Neural Network Training | Google, UCB

- Perseus: Removing Energy Bloat from Large Model Training | SOSP' 24

- MegaScale: Scaling Large Language Model Training to More Than 10,000 GPUs | ByteDance

- DISTMM: Accelerating distributed multimodal model training | NSDI' 24

- A Codesign of Scheduling and Parallelization for Large Model Training in Heterogeneous Clusters

- Pipeline Parallelism with Controllable Memory | Sea AI Lab

- Boosting Large-scale Parallel Training Efficiency with C4: A Communication-Driven Approach

- Scaling Beyond the GPU Memory Limit for Large Mixture-of-Experts Model Training | ICML' 24

- Alibaba HPN: A Data Center Network for Large Language Model Training

- FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision

- The Llama 3 Herd of Models (Section 3)

- FALCON: Pinpointing and Mitigating Stragglers for Large-Scale Hybrid-Parallel Training

- Enabling Parallelism Hot Switching for Efficient Training of Large Language Models | SOSP' 24

- Revisiting Reliability in Large-Scale Machine Learning Research Clusters

- ScheMoE: An Extensible Mixture-of-Experts Distributed Training System with Tasks Scheduling | EuroSys' 24

- DynaPipe: Optimizing Multi-task Training through Dynamic Pipelines | EuroSys' 24

- HAP: SPMD DNN Training on Heterogeneous GPU Clusters with Automated Program Synthesis | EuroSys' 24

- Demystifying Workload Imbalances in Large Transformer Model Training over Variable-length Sequences | PKU

- Improving training time and GPU utilization in geo-distributed language model training

- DeepSeek-V3 Technical Report

- Comet: Fine-grained Computation-communication Overlapping for Mixture-of-Experts | ByteDance

- ByteScale: Efficient Scaling of LLM Training with a 2048K Context Length on More Than 12,000 GPUs | ByteDance

- Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length

- SPPO: Efficient Long-sequence LLM Training via Adaptive Sequence Pipeline Parallel Offloading

- TileLink: Generating Efficient Compute-Communication Overlapping Kernels using Tile-Centric Primitives | MLSys' 25

- Every FLOP Counts: Scaling a 300B Mixture-of-Experts LING LLM without Premium GPUs | Ant Group

- RLHFuse: Efficient RLHF Training for Large Language Models with Inter- and Intra-Stage Fusion | NSDI' 25

- HybridFlow: A Flexible and Efficient RLHF Framework

- Oobleck: Resilient Distributed Training of Large Models Using Pipeline Templates | SOSP' 23

- Malleus: Straggler-Resilient Hybrid Parallel Training of Large-scale Models via Malleable Data and Model Parallelization

- Fire-Flyer AI-HPC: A Cost-Effective Software-Hardware Co-Design for Deep Learning | DeepSeek | SC' 24

- Lazarus: Resilient and Elastic Training of Mixture-of-Experts Models with Adaptive Expert Placement

- GEMINI: Fast Failure Recovery in Distributed Training with In-Memory Checkpoints

- ByteCheckpoint: A Unified Checkpointing System for LLM Development

- ReCycle: Resilient Training of Large DNNs using Pipeline Adaptation | SOSP' 24

- Minder: Faulty Machine Detection for Large-scale Distributed Model Training | THU

- The Streaming Batch Model for Efficient and Fault-Tolerant Heterogeneous Execution

- TrainMover: Efficient ML Training Live Migration with No Memory Overhead | Alibaba

- Characterizing GPU Resilience and Impact on AI/HPC Systems | UIUC

- Orca: A Distributed Serving System for Transformer-Based Generative Models | OSDI' 22

- Response Length Perception and Sequence Scheduling: An LLM-Empowered LLM Inference Pipeline | NUS

- Efficiently Scaling Transformer Inference | MLSys' 23

- Flover: A Temporal Fusion Framework for Efficient Autoregressive Model Parallel Inference

- FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness

- DeepSpeed Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale

- TurboTransformers: An Efficient GPU Serving System For Transformer Models

- FlexGen: High-throughput Generative Inference of Large Language Models with a Single GPU | ICML' 23

- MPCFormer: fast, performant, and private transformer inference with MPC | ICLR' 23

- POLCA: Power Oversubscription in LLM Cloud Providers | Microsoft

- SARATHI: Efficient LLM Inference by Piggybacking Decodes with Chunked Prefills | Microsoft

- AttMemo: Accelerating Self-Attention with Memoization on Big Memory Systems

- vLLM: Easy, Fast, and Cheap LLM Serving with PagedAttention | SOSP' 23

- Tabi: An Efficient Multi-Level Inference System for Large Language Models | EuroSys' 23

- Flash-LLM: Enabling Cost-Effective and Highly-Efficient Large Generative Model Inference with Unstructured Sparsity | VLDB' 24

- AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation | Microsoft

- FlashDecoding++: Faster Large Language Model Inference on GPUs | Tsinghua

- DeepSpeed-MII: Model Implementations for Inference (MII) | Microsoft

- Punica: Multi-Tenant LoRA Serving | MLSys' 24

- S-LoRA: Serving Thousands of Concurrent LoRA Adapters | MLSys' 24

- SpotServe: Serving Generative Large Language Models on Preemptible Instances | CMU

- SuperServe: Fine-Grained Inference Serving for Unpredictable Workloads

- Fairness in Serving Large Language Models | OSDI' 24

- Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache

- CaraServe: CPU-Assisted and Rank-Aware LoRA Serving for Generative LLM Inference

- DistServe: Disaggregating Prefill and Decoding for Goodput-optimized Large Language Model Serving | OSDI' 24

- Inference without Interference: Disaggregate LLM Inference for Mixed Downstream Workloads

- APIServe: Efficient API Support for Large-Language Model Inferencing

- FlexLLM: A System for Co-Serving Large Language Model Inference and Parameter-Efficient Finetuning

- DéjàVu: KV-cache Streaming for Fast, Fault-tolerant Generative LLM Serving

- Optimizing LLM Queries in Relational Workloads | UCB

- AttentionStore: Cost-effective Attention Reuse across Multi-turn Conversations in Large Language Model Serving | NUS

- MuxServe: Flexible Multiplexing for Efficient Multiple LLM Serving

- LoongServe: Efficiently Serving Long-context Large Language Models with Elastic Sequence Parallelism | SOSP' 24

- RAGCache: Efficient Knowledge Caching for Retrieval-Augmented Generation | PKU

- Andes: Defining and Enhancing Quality-of-Experience in LLM-Based Text Streaming Services | Umich

- BlockLLM: Multi-tenant Finer-grained Serving for Large Language Models

- vAttention: Dynamic Memory Management for Serving LLMs without PagedAttention

- Helix: Distributed Serving of Large Language Models via Max-Flow on Heterogeneous GPUs | CMU

- Eloquent: A More Robust Transmission Scheme for LLM Token Streaming | NAIC' 24

- Optimizing Speculative Decoding for Serving Large Language Models Using Goodput | UCB

- Enabling Elastic Model Serving with MultiWorld | Cisco Research

- Prepacking: A Simple Method for Fast Prefilling and Increased Throughput in Large Language Models

- NanoFlow: Towards Optimal Large Language Model Serving Throughput

- Responsive ML inference in multi-tenanted environments using AQUA

- One Queue Is All You Need: Resolving Head-of-Line Blocking in Large Language Model Serving

- MemServe: Context Caching for Disaggregated LLM Serving with Elastic Memory Pool

- dLoRA: Dynamically Orchestrating Requests and Adapters for LoRA LLM Serving | OSDI' 24

- Llumnix: Dynamic Scheduling for Large Language Model Serving | OSDI' 24

- Taming Throughput-Latency Tradeoff in LLM Inference with Sarathi-Serve | OSDI' 24

- InfiniGen: Efficient Generative Inference of Large Language Models with Dynamic KV Cache Management

- ServerlessLLM: Low-Latency Serverless Inference for Large Language Models | OSDI' 24

- Preble: Efficient Distributed Prompt Scheduling for LLM Serving

- Mnemosyne: Parallelization Strategies for Efficiently Serving Multi-Million Context Length LLM Inference Requests Without Approximations

- ConServe: Harvesting GPUs for Low-Latency and High-Throughput Large Language Model Serving

- Context Parallelism for Scalable Million-Token Inference

- xDiT: an Inference Engine for Diffusion Transformers (DiTs) with Massive Parallelism

- Pie: Pooling CPU Memory for LLM Inference

- NEO: Saving GPU Memory Crisis with CPU Offloading for Online LLM Inference

- FastSwitch: Optimizing Context Switching Efficiency in Fairness-aware Large Language Model Serving

- Flash Communication: Reducing Tensor Parallelization Bottleneck for Fast Large Language Model Inference

- FlashInfer: Efficient and Customizable Attention Engine for LLM Inference Serving

- Fast Inference for Augmented Large Language Models

- A System for Microserving of LLMs | CMU

- iServe: An Intent-based Serving System for LLMs | UT Austin

- Locality-aware Fair Scheduling in LLM Serving | UCB

- Towards Efficient Large Multimodal Model Serving | MSFT

- DeltaZip: Efficient Serving of Multiple Full-Model-Tuned LLMs

- PIM Is All You Need: A CXL-Enabled GPU-Free System for Large Language Model Inference | ASPLOS' 25

- λScale: Enabling Fast Scaling for Serverless Large Language Model Inference

- AIBrix: Towards Scalable and Cost-Effective LLM Inference Infrastructure | vLLM

- Serving Models, Fast and Slow: Optimizing Heterogeneous LLM Inferencing Workloads at Scale

- Make LLM Inference Affordable to Everyone: Augmenting GPU Memory with NDP-DIMM

- Jenga: Effective Memory Management for Serving LLM with Heterogeneity

- ALTO: An Efficient Network Orchestrator for Compound AI Systems | Stanford & UCB

- Parrot: Efficient Serving of LLM-based Applications with Semantic Variable | OSDI' 24

- Efficiently Serving LLM Reasoning Programs with Certaindex | UCSD

- Autellix: An Efficient Serving Engine for LLM Agents as General Programs | UCB

- LLM in a flash: Efficient Large Language Model Inference with Limited Memory | Apple

- STI: Turbocharge NLP Inference at the Edge via Elastic Pipelining | ASPLOS' 23

- PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU | SOSP' 24

- MoE-Lightning: High-Throughput MoE Inference on Memory-constrained GPUs

- InfiniteHiP: Extending Language Model Context Up to 3 Million Tokens on a Single GPU

- Fast Distributed Inference Serving for Large Language Models | PKU

- FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance | Stanford

- H2O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models | ICML ES-FoMo Workshop 2023

- Inference with Reference: Lossless Acceleration of Large Language Models

- SkipDecode: Autoregressive Skip Decoding with Batching and Caching for Efficient LLM Inference

- Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time

- Knowledge-preserving Pruning for Pre-trained Language Models without Retraining | SNU

- Accelerating LLM Inference with Staged Speculative Decoding | ICML' 23

- SpecInfer: Accelerating Generative LLM Serving with Speculative Inference and Token Tree Verification | CMU

- Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time | ICML' 23

- S3: Increasing GPU Utilization during Generative Inference for Higher Throughput | Harvard

- LLMCad: Fast and Scalable On-device Large Language Model Inference

- Skeleton-of-Thought: Large Language Models Can Do Parallel Decoding | THU

- LoRAShear: Efficient Large Language Model Structured Pruning and Knowledge Recovery | Microsoft

- Ring Attention with Blockwise Transformers for Near-Infinite Context | UCB

- Learned Best-Effort LLM Serving | UCB

- Star Attention: Efficient LLM Inference over Long Sequences | NVIDIA

- Ymir: A Scheduler for Foundation Model Fine-tuning Workloads in Datacenters | ICS' 24

- MOSEL: Inference Serving Using Dynamic Modality Selection

- DISTMM: Accelerating distributed multimodal model training | NSDI' 24

- Approximate Caching for Efficiently Serving Diffusion Models | Adobe Research

- Generative AI Beyond LLMs: System Implications of Multi-Modal Generation | Meta

- Characterizing and Efficiently Accelerating Multimodal Generation Model Inference | Meta

- DistriFusion: Distributed Parallel Inference for High-Resolution Diffusion Models | MIT

- Optimus: Accelerating Large-Scale Multi-Modal LLM Training by Bubble Exploitation

- Addressing Model and Data Heterogeneity in Multimodal Large Language Model Training | PKU

- LongVILA: Scaling Long-Context Visual Language Models for Long Videos | NVIDIA

- FlexCache: Flexible Approximate Cache System for Video Diffusion | University of Waterloo

- Large Language Models for Compiler Optimization

- The Hitchhiker's Guide to Program Analysis: A Journey with Large Language Models

- LLM-Assisted Code Cleaning For Training Accurate Code Generators | UCB

- Efficient Multi-Task Large Model Training via Data Heterogeneity-aware Model Management

- If At First You Don’t Succeed, Try, Try, Again...? | SOSP' 24

- Aceso: Efficient Parallel DNN Training through Iterative Bottleneck Alleviation | EuroSys' 24

- GMorph: Accelerating Multi-DNN Inference via Model Fusion | EuroSys' 24

- Automatic Root Cause Analysis via Large Language Models for Cloud Incidents | EuroSys' 24

- Efficient Large Language Models: A Survey

- Challenges and Applications of Large Language Models

- Beyond Efficiency: A Systematic Survey of Resource-Efficient Large Language Models

- Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems

- LLM Energy Leaderboard | Umich

- LLM-Perf Leaderboard | HuggingFace

- Aviary Explorer | Anyscale

- Open LLM Leaderboard | HuggingFace

- HELM | Stanford

- LMSYS | UCB

- Towards Efficient and Reliable LLM Serving: A Real-World Workload Study

- DeepSpeed: a deep learning optimization library that makes distributed training and inference easy, efficient, and effective | Microsoft

- TensorRT-LLM | Nvidia

- Accelerate | Hugging Face

- Ray-LLM | Ray

- LLaVA

- Megatron | Nvidia

- NeMo | Nvidia

- torchtitan | PyTorch

- vLLM | UCB

- SGLang | UCB

- TGI | Hugging Face

- OpenRLHF

- veRL | ByteDance

- veScale | ByteDance

- KV Transformers

- DeepSeek Open Infra

- Dynamo | NVIDIA

- Cornstarch: Build, Train, Run Your Own Multimodal Model | UMich

- Large Transformer Model Inference Optimization

- Transformer Inference Arithmetic

- The Transformer Family Version 2.0

- Full Stack Optimization of Transformer Inference: a Survey | UCB

- Systems for Machine Learning | Stanford (https://cs229s.stanford.edu/fall2023/)

- Systems for Generative AI | Umich (https://github.com/mosharaf/eecs598/tree/w24-genai)

- Systems for AI - LLMs | GT (https://cs8803-sp24.anand-iyer.com/)

- A curated list of Large Language Model

- AI systems paper list

- A baseline repository of Auto-Parallelism in Training Neural Networks

- Numbers every LLM Developer should know

- 100,000 H100 Clusters: Power, Network Topology, Ethernet vs InfiniBand, Reliability, Failures, Checkpointing

- OpenAI Keynote on Building Scalable AI Infrastructure

Alternative AI tools for LLMSys-PaperList

Similar Open Source Tools

DecryptPrompt

This repository does not provide a tool, but rather a collection of resources and strategies for academics in the field of artificial intelligence who are feeling depressed or overwhelmed by the rapid advancements in the field. The resources include articles, blog posts, and other materials that offer advice on how to cope with the challenges of working in a fast-paced and competitive environment.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

rlhf_thinking_model

This repository is a collection of research notes and resources focusing on training large language models (LLMs) and Reinforcement Learning from Human Feedback (RLHF). It includes methodologies, techniques, and state-of-the-art approaches for optimizing preferences and model alignment in LLM training. The purpose is to serve as a reference for researchers and engineers interested in reinforcement learning, large language models, model alignment, and alternative RL-based methods.

parallax

Parallax is a fully decentralized inference engine developed by Gradient. It allows users to build their own AI cluster for model inference across distributed nodes with varying configurations and physical locations. Core features include hosting local LLMs on personal devices, cross-platform support, pipeline-parallel model sharding, paged KV cache management, continuous batching on Mac, dynamic request scheduling, and routing for high performance. The backend architecture includes P2P communication powered by Lattica, a GPU backend powered by SGLang and vLLM, and a Mac backend powered by MLX LM.
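Pipeline-parallel sharding across nodes with varying configurations, as described above, requires deciding which contiguous range of layers each node hosts. One simple heuristic, sketched below under the assumption that memory is the only constraint, assigns layers in proportion to each node's memory using largest-remainder rounding. This is a hypothetical illustration with made-up node names, not Parallax's actual scheduling algorithm.

```python
from typing import Dict, List, Tuple


def shard_layers(num_layers: int, node_memory_gb: Dict[str, float]) -> List[Tuple[str, range]]:
    """Assign each node a contiguous range of layers, proportional to its memory.

    Hypothetical heuristic: a real scheduler would also weigh compute speed,
    network bandwidth and latency between nodes.
    """
    nodes = list(node_memory_gb.items())
    total = sum(mem for _, mem in nodes)
    ideal = [num_layers * mem / total for _, mem in nodes]  # fractional share per node
    counts = [int(share) for share in ideal]                # round down first
    leftover = num_layers - sum(counts)
    # Largest-remainder rounding: hand the remaining layers to the nodes that lost the most.
    by_remainder = sorted(range(len(nodes)), key=lambda i: ideal[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    plan: List[Tuple[str, range]] = []
    start = 0
    for (name, _), count in zip(nodes, counts):
        # Pipeline stage order simply follows the dict's insertion order here.
        plan.append((name, range(start, start + count)))
        start += count
    return plan
```

For a 32-layer model on three hypothetical nodes with 16, 24 and 80 GB, `shard_layers(32, {"mac-mini": 16, "rtx-4090": 24, "a100": 80})` gives the nodes 4, 7 and 21 layers respectively, with each node forwarding activations to the next stage.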

Next-Generation-LLM-based-Recommender-Systems-Survey

The Next-Generation LLM-based Recommender Systems Survey is a comprehensive overview of the latest advancements in recommender systems leveraging Large Language Models (LLMs). The survey covers various paradigms, approaches, and applications of LLMs in recommendation tasks, including generative and non-generative models, multimodal recommendations, personalized explanations, and industrial deployment. It discusses the comparison with existing surveys, different paradigms, and specific works in the field. The survey also addresses challenges and future directions in the domain of LLM-based recommender systems.

awesome-mobile-robotics

The 'awesome-mobile-robotics' repository is a curated list of important content related to Mobile Robotics and AI. It includes resources such as courses, books, datasets, software and libraries, podcasts, conferences, journals, companies and jobs, laboratories and research groups, and miscellaneous resources. The repository covers a wide range of topics in the field of Mobile Robotics and AI, providing valuable information for enthusiasts, researchers, and professionals in the domain.

LLMs4TS

LLMs4TS is a repository focused on the application of cutting-edge AI technologies for time-series analysis. It covers advanced topics such as self-supervised learning, Graph Neural Networks for Time Series, Large Language Models for Time Series, Diffusion models, Mixture-of-Experts architectures, and Mamba models. The resources in this repository span various domains like healthcare, finance, and traffic, offering tutorials, courses, and workshops from prestigious conferences. Whether you're a professional, data scientist, or researcher, the tools and techniques in this repository can enhance your time-series data analysis capabilities.

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

awesome-quant-ai

Awesome Quant AI is a curated list of resources focusing on quantitative investment and trading strategies using artificial intelligence and machine learning in finance. It covers key challenges in quantitative finance, AI/ML technical fit, predictive modeling, sequential decision-making, synthetic data generation, contextual reasoning, mathematical foundations, design approach, quantitative trading strategies, tools and platforms, learning resources, books, research papers, community, and conferences. The repository aims to provide a comprehensive resource for those interested in the intersection of AI, machine learning, and quantitative finance, with a focus on extracting alpha while managing risk in financial systems.

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for:

- Model debugging: Why did my model make this mistake?
- Feature engineering: How can I improve my model?
- Detecting fairness issues: Does my model discriminate?
- Human-AI cooperation: How can I understand and trust the model's decisions?
- Regulatory compliance: Does my model satisfy legal requirements?
- High-risk applications: Healthcare, finance, judicial, ...

Awesome_Mamba

Awesome Mamba is a curated collection of groundbreaking research papers and articles on Mamba Architecture, a pioneering framework in deep learning known for its selective state spaces and efficiency in processing complex data structures. The repository offers a comprehensive exploration of Mamba architecture through categorized research papers covering various domains like visual recognition, speech processing, remote sensing, video processing, activity recognition, image enhancement, medical imaging, reinforcement learning, natural language processing, 3D recognition, multi-modal understanding, time series analysis, graph neural networks, point cloud analysis, and tabular data handling.

awesome-MLSecOps

Awesome MLSecOps is a curated list of open-source tools, resources, and tutorials for MLSecOps (Machine Learning Security Operations). It includes a wide range of security tools and libraries for protecting machine learning models against adversarial attacks, as well as resources for AI security, data anonymization, model security, and more. The repository aims to provide a comprehensive collection of tools and information to help users secure their machine learning systems and infrastructure.

Prompt_Engineering

Prompt Engineering Techniques is a comprehensive repository for learning, building, and sharing prompt engineering techniques, from basic concepts to advanced strategies for leveraging large language models. It provides step-by-step tutorials, practical implementations, and a platform for showcasing innovative prompt engineering techniques. The repository covers fundamental concepts, core techniques, advanced strategies, optimization and refinement, specialized applications, and advanced applications in prompt engineering.

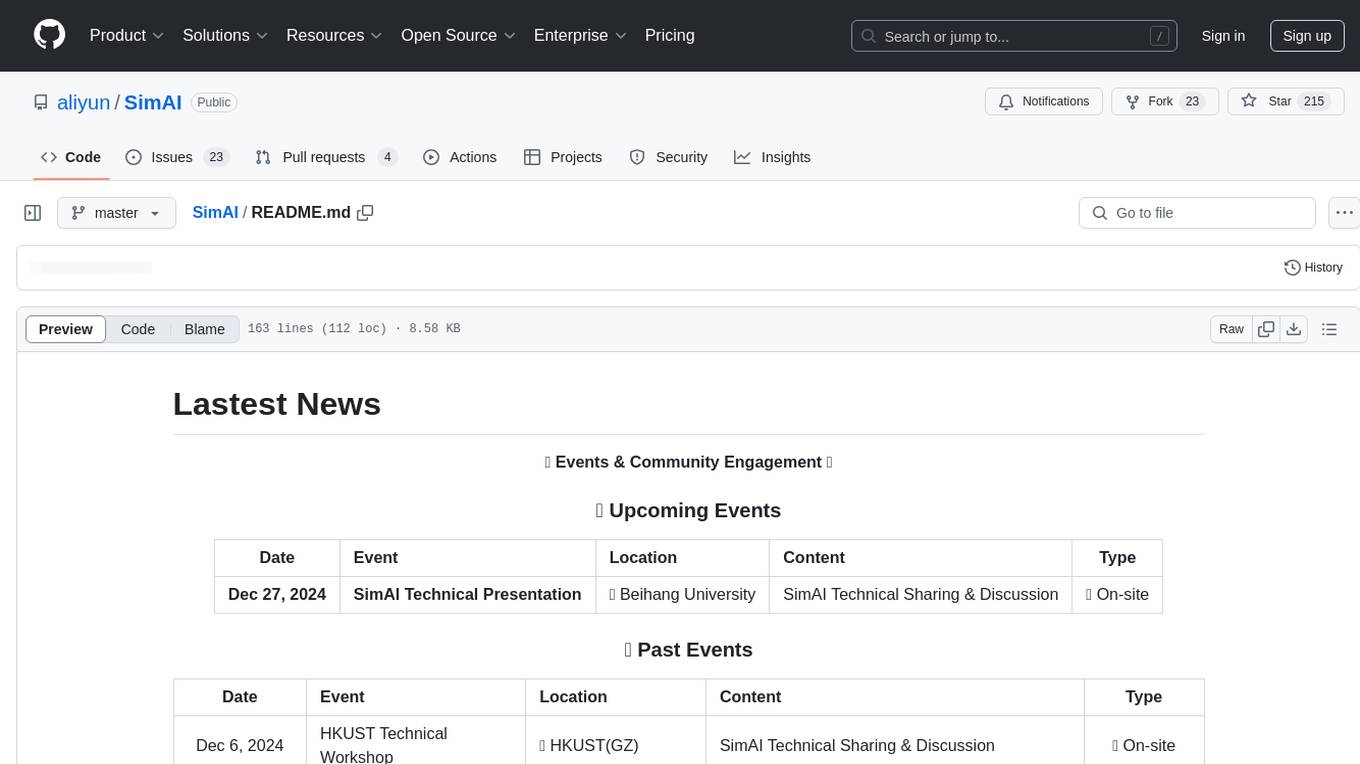

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

For similar tasks

veScale

veScale is a PyTorch Native LLM Training Framework. It provides a set of tools and components to facilitate the training of large language models (LLMs) using PyTorch. veScale includes features such as 4D parallelism, fast checkpointing, and a CUDA event monitor. It is designed to be scalable and efficient, and it can be used to train LLMs on a variety of hardware platforms.

TensorRT-LLM

TensorRT-LLM is an easy-to-use Python API to define Large Language Models (LLMs) and build TensorRT engines that contain state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs. TensorRT-LLM contains components to create Python and C++ runtimes that execute those TensorRT engines. It also includes a backend for integration with the NVIDIA Triton Inference Server, a production-quality system to serve LLMs. Models built with TensorRT-LLM can be executed on a wide range of configurations, from a single GPU to multiple nodes with multiple GPUs (using Tensor Parallelism and/or Pipeline Parallelism).
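The Tensor Parallelism mentioned above splits each layer's weights across GPUs so that no single device holds the full matrix. The following is a minimal pure-Python sketch of that general idea, simulating each GPU as a slice of a list; it is not TensorRT-LLM's API, and the function names are hypothetical. It shows the two common splits: sharding the output dimension (slices are concatenated, playing the role of an all-gather) and sharding the input dimension (partial results are summed, playing the role of an all-reduce).

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense y = W x, with W stored row-major as out_dim x in_dim."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def split_output_dim(w: Matrix, x: Vector, num_devices: int) -> Vector:
    """Each simulated device owns a block of output rows and computes its slice of y."""
    assert len(w) % num_devices == 0, "output dim must divide evenly"
    size = len(w) // num_devices
    partials = [matvec(w[d * size:(d + 1) * size], x) for d in range(num_devices)]
    # Concatenating the slices stands in for an all-gather across devices.
    return [v for part in partials for v in part]


def split_input_dim(w: Matrix, x: Vector, num_devices: int) -> Vector:
    """Each simulated device owns a block of input columns and the matching slice of x."""
    assert len(x) % num_devices == 0, "input dim must divide evenly"
    size = len(x) // num_devices
    partials = [
        matvec([row[d * size:(d + 1) * size] for row in w], x[d * size:(d + 1) * size])
        for d in range(num_devices)
    ]
    # Summing the partial outputs element-wise stands in for an all-reduce.
    return [sum(vals) for vals in zip(*partials)]
```

With `W = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]` and `x = [1.0, 0.0, -1.0, 2.0]`, both `split_output_dim(W, x, 2)` and `split_input_dim(W, x, 2)` return the same `[6.0, 14.0]` as `matvec(W, x)`. Megatron-LM-style MLP blocks pair an output-dimension split in the first linear layer with an input-dimension split in the second, so only one all-reduce is needed per block.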

mLoRA

mLoRA (Multi-LoRA Fine-Tune) is an open-source framework for efficient fine-tuning of multiple Large Language Models (LLMs) using LoRA and its variants. It supports concurrent fine-tuning of multiple LoRA adapters on a shared base model, an efficient pipeline parallelism algorithm, various LoRA variant algorithms, and reinforcement learning preference alignment algorithms. mLoRA helps save computational and memory resources when training multiple adapters simultaneously, achieving high performance on consumer hardware.
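The resource savings described above come from several LoRA adapters sharing one frozen base model. The sketch below illustrates only that sharing in a forward pass, using the standard LoRA update y = W x + (alpha / r) B A x, where r is the adapter rank. It is a hypothetical pure-Python illustration, not mLoRA's code, and it computes the base product per request for readability, whereas a real system would batch it into one large matrix multiplication.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


@dataclass
class LoRAAdapter:
    a: Matrix     # r x in_dim down-projection (trainable)
    b: Matrix     # out_dim x r up-projection (trainable)
    alpha: float  # the low-rank update is scaled by alpha / r

    def delta(self, x: Vector) -> Vector:
        scale = self.alpha / len(self.a)  # len(self.a) is the rank r
        return [scale * v for v in matvec(self.b, matvec(self.a, x))]


def multi_lora_forward(
    base_w: Matrix,
    adapters: Dict[str, LoRAAdapter],
    batch: List[Tuple[str, Vector]],
) -> List[Vector]:
    """Run a mixed batch in which each request names the adapter it should use."""
    outputs = []
    for adapter_name, x in batch:
        base = matvec(base_w, x)                  # frozen weights, shared by every adapter
        update = adapters[adapter_name].delta(x)  # adapter-specific low-rank term
        outputs.append([u + v for u, v in zip(base, update)])
    return outputs
```

Only the small `a` and `b` matrices differ between adapters, so the memory cost of each additional adapter grows with the rank r rather than with the size of the base weight matrix.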

llm-engine

Scale's LLM Engine is an open-source Python library, CLI, and Helm chart that provides everything you need to serve and fine-tune foundation models, whether you use Scale's hosted infrastructure or do it in your own cloud infrastructure using Kubernetes.

llm-on-openshift

This repository provides resources, demos, and recipes for working with Large Language Models (LLMs) on OpenShift using OpenShift AI or Open Data Hub. It includes instructions for deploying inference servers for LLMs, such as vLLM, Hugging Face TGI, Caikit-TGIS-Serving, and Ollama. Additionally, it offers guidance on deploying serving runtimes, such as vLLM Serving Runtime and Hugging Face Text Generation Inference, in the Single-Model Serving stack of Open Data Hub or OpenShift AI. The repository also covers vector databases that can be used as a Vector Store for Retrieval Augmented Generation (RAG) applications, including Milvus, PostgreSQL+pgvector, and Redis. Furthermore, it provides examples of inference and application usage, such as Caikit, Langchain, Langflow, and UI examples.

OpenLLM

OpenLLM is a platform that helps developers run any open-source Large Language Models (LLMs) as OpenAI-compatible API endpoints, locally and in the cloud. It supports a wide range of LLMs, provides state-of-the-art serving and inference performance, and simplifies cloud deployment via BentoML. Users can fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM. The platform also supports various quantization techniques, serving fine-tuning layers, and multiple runtime implementations. OpenLLM seamlessly integrates with other tools like OpenAI Compatible Endpoints, LlamaIndex, LangChain, and Transformers Agents. It offers deployment options through Docker containers, BentoCloud, and provides a community for collaboration and contributions.

candle-vllm

Candle-vllm is an efficient and easy-to-use platform designed for inference and serving local LLMs, featuring an OpenAI compatible API server. It offers a highly extensible trait-based system for rapid implementation of new module pipelines, streaming support in generation, efficient management of key-value cache with PagedAttention, and continuous batching. The tool supports chat serving for various models and provides a seamless experience for users to interact with LLMs through different interfaces.
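The PagedAttention-style key-value cache management mentioned above stores each sequence's keys and values in fixed-size blocks drawn from a shared pool, with a per-sequence block table mapping logical positions to physical blocks. A toy Python sketch of that bookkeeping follows, assuming a single pool and ignoring the attention kernel itself; the class and method names are hypothetical and do not reflect candle-vllm's internals (candle-vllm is written in Rust).

```python
from typing import Dict, List, Tuple


class PagedKVCacheAllocator:
    """Toy block manager: KV entries live in fixed-size physical blocks from a shared pool,
    and each sequence's block table maps logical block indices to physical block ids."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size                  # tokens per block
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[int, List[int]] = {}  # seq_id -> physical block ids
        self.seq_lens: Dict[int, int] = {}            # seq_id -> tokens stored so far

    def append_token(self, seq_id: int) -> None:
        """Reserve a KV slot for one new token, taking a block only when the last one is full."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("KV-cache pool exhausted; a real scheduler would preempt or swap")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1

    def slot(self, seq_id: int, pos: int) -> Tuple[int, int]:
        """Return the (physical block, offset) that holds token `pos` of a sequence."""
        return self.block_tables[seq_id][pos // self.block_size], pos % self.block_size

    def free(self, seq_id: int) -> None:
        """Return all of a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)
```

Because memory is reserved one block at a time, the waste per sequence in this sketch is bounded by one partially filled block, and blocks freed by finished sequences can be reused immediately by new requests, which is what makes continuous batching practical.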

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students
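Per-key rate limits like those listed above are commonly enforced with a token bucket. The sketch below is a hypothetical Python illustration (BricksLLM itself is written in Go, and its actual limiter may work differently): each API key gets a bucket that refills at a steady rate up to a burst capacity, and a request is admitted only if enough tokens remain. The clock is injectable so the behavior can be tested deterministically, and the `cost` parameter hints at how a spend-based limit could reuse the same mechanism.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # largest burst admitted at once
        self.refill_per_sec = refill_per_sec  # sustained admission rate
        self.clock = clock
        self.tokens = capacity                # a new bucket starts full
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerKeyRateLimiter:
    """Keeps one independent bucket per API key (hypothetical helper)."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str, cost: float = 1.0) -> bool:
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
            self.buckets[api_key] = bucket
        return bucket.allow(cost)
```

Because each key has its own bucket, one client exhausting its allowance does not affect others; different pricing tiers could be modeled by giving each tier its own `capacity` and `refill_per_sec`.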

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.