TME-AIX

Various Telco AI Usecases & Experiments

Stars: 115

The TME-AIX repository is a collaborative workspace dedicated to exploring Telco Media Entertainment use-cases using open source AI capabilities and datasets. It focuses on projects like Revenue Assurance, Service Assurance Predictions, 5G Network Fault Predictions, Sustainability, SecOps-AI, SmartGrid, IoT Security, Customer Relation Management, Anomaly Detection, Starlink Quality Predictions, and NoC AI Augmentation for OSS.

README:

Welcome to the TME-AIX collaborative experimental workspace.

This repository is dedicated to exploring various TME use-cases built around open source AI capabilities and utilizing open datasets.

Our Project Summary: TrueAI4Telco

- Revenue Assurance and Fraud Management (RAFM)

- Service Assurance Latency & NPS Predictions

- 5G Network Operation Fault Predictions

- Sustainability & Energy Efficiency

- SecOps-AI for Networking

- AI Powered SmartGrid

- IoT Perimeter Security

- 5G CNF RCA with LLM

- Customer Relation Management Voice App

- Anomaly Detection & Root Cause Analysis with Model Chaining + Use of RAG for DataMesh

- Starlink (Satellite ISP) Quality of Experience Predictions

- NoC AI Augmentation for OSS

Discover our models and datasets on HuggingFace: TME-AIX on HuggingFace

For collaboration or inquiries about interesting use cases and data mining opportunities, feel free to reach out:

| Role | Name | LinkedIn Profile | Geo-Location |
|---|---|---|---|
| Maintainer | Alessandro Arrichiello | | EU |
| Maintainer | Ali Bokhari | | NA |
| Maintainer | Atul Deshpande | | APAC |
| Founder | Fatih E. NAR | | Texas |

Alternative AI tools for TME-AIX

Similar Open Source Tools

Telco-AIX

Telco-AIX is a collaborative experimental workspace dedicated to exploring data-driven decision-making use-cases using open source AI capabilities and open datasets. The repository focuses on projects related to revenue assurance, fraud management, service assurance, latency predictions, 5G network operations, sustainability, energy efficiency, SecOps-AI for networking, AI-powered SmartGrid, IoT perimeter security, anomaly detection, root cause analysis, customer relationship management voice app, Starlink quality of experience predictions, and NoC AI augmentation for OSS.

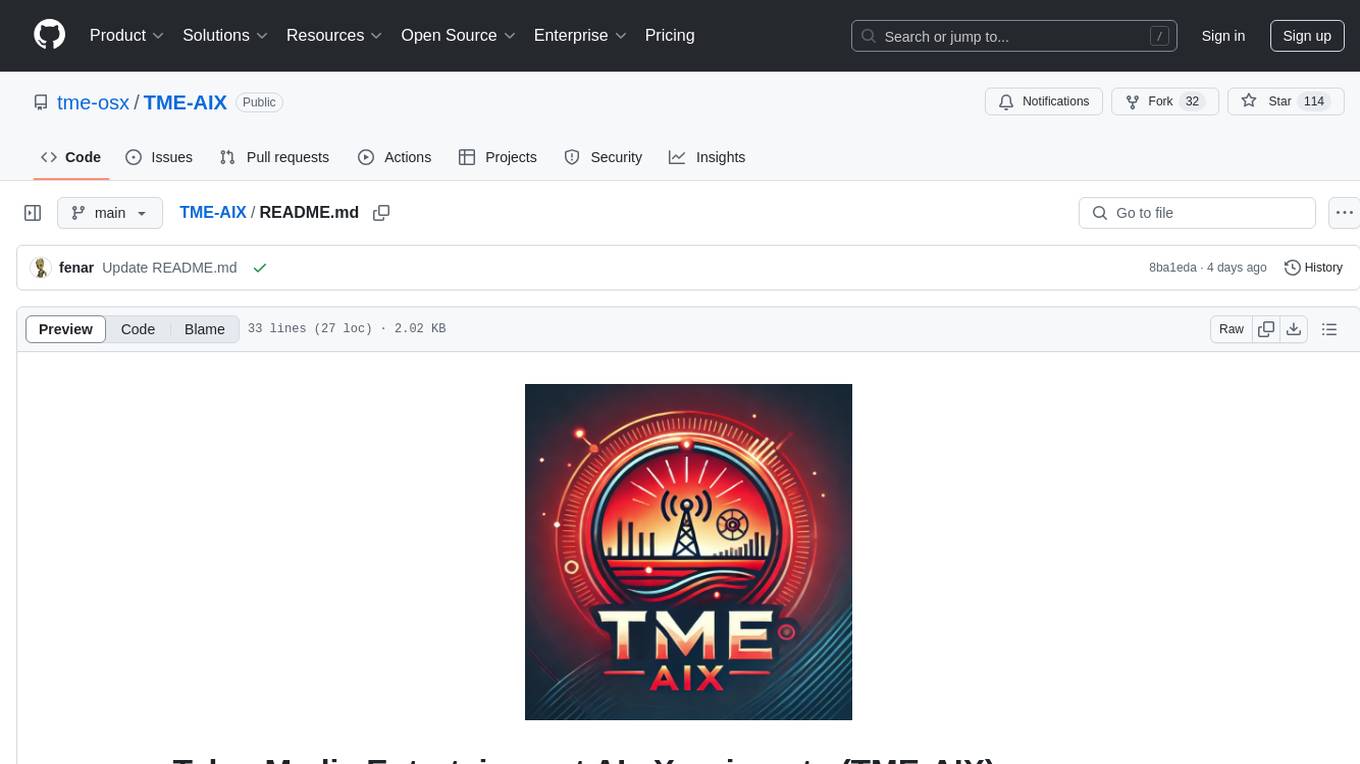

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

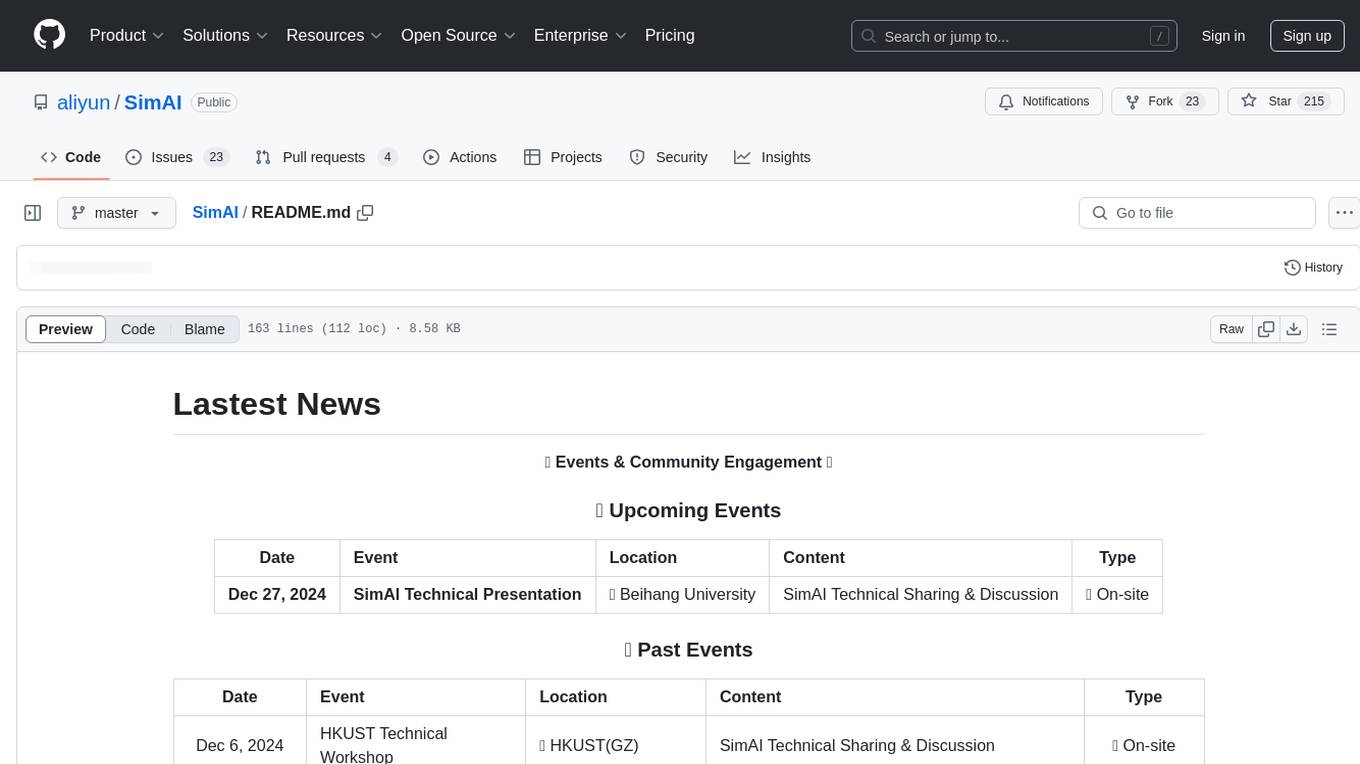

netdata

Netdata is an open-source, real-time infrastructure monitoring platform that provides instant insights, zero-configuration deployment, ML-powered anomaly detection, efficient monitoring with minimal resource usage, and secure, distributed data storage. It offers real-time, per-second updates and clear insights at a glance. Netdata originated from addressing the limitations of existing monitoring tools, which led to a fundamental shift in infrastructure monitoring. According to a study by the University of Amsterdam, it is the most energy-efficient tool for monitoring Docker-based systems.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

solo-founder-superpowers

Solo Founder Superpowers is a comprehensive guide for non-technical founders building SaaS products with AI tools. It covers 43 expert skills across various aspects of planning, building, launching, and growing a software business. The repository provides actionable guides, checklists, and copy-paste prompts to assist founders in their entrepreneurial journey. Skills range from development and technical aspects to design, SEO, growth marketing, strategy, business operations, and more. The tool aims to empower solo founders with the necessary knowledge and resources to succeed in the competitive tech industry.

BharatMLStack

BharatMLStack is a comprehensive, production-ready machine learning infrastructure platform designed to democratize ML capabilities across India and beyond. It provides a robust, scalable, and accessible ML stack empowering organizations to build, deploy, and manage machine learning solutions at massive scale. It includes core components like Horizon, Trufflebox UI, Online Feature Store, Go SDK, Python SDK, and Numerix, offering features such as control plane, ML management console, real-time features, mathematical compute engine, and more. The platform is production-ready, cloud agnostic, and offers observability through built-in monitoring and logging.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

Vision-Agents

Vision Agents is an open-source project by Stream that provides building blocks for creating intelligent, low-latency video experiences powered by custom models and infrastructure. It offers multi-modal AI agents that watch, listen, and understand video in real-time. The project includes SDKs for various platforms and integrates with popular AI services like Gemini and OpenAI. Vision Agents can be used for tasks such as sports coaching, security camera systems with package theft detection, and building invisible assistants for various applications. The project aims to simplify the development of real-time vision AI applications by providing a range of processors, integrations, and out-of-the-box features.

off-grid-mobile

Off Grid is a complete offline AI suite that allows users to perform various tasks such as text generation, image generation, vision AI, voice transcription, and document analysis on their mobile devices without sending any data out. The tool offers high performance on flagship devices and supports a wide range of models for different tasks. Users can easily install the tool on Android by downloading the APK from GitHub Releases or build it from source with Node.js and JDK. The documentation provides detailed information on the system architecture, codebase, design system, visual hierarchy, test flows, and more. Contributions are welcome, and the tool is built with a focus on user privacy and data security, ensuring no cloud, subscription, or data harvesting.

runtime

Exosphere is a lightweight runtime designed to make AI agents resilient to failure and enable infinite scaling across distributed compute. It provides a powerful foundation for building and orchestrating AI applications with features such as lightweight runtime, inbuilt failure handling, infinite parallel agents, dynamic execution graphs, native state persistence, and observability. Whether you're working on data pipelines, AI agents, or complex workflow orchestrations, Exosphere offers the infrastructure backbone to make your AI applications production-ready and scalable.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

agentsys

AgentSys is a modular runtime and orchestration system for AI agents, with 13 plugins, 42 agents, and 28 skills that compose into structured pipelines for software development. It handles task selection, branch management, code review, artifact cleanup, CI, PR comments, and deployment. The system runs on Claude Code, OpenCode, and Codex CLI, providing a functional software suite and runtime for AI agent orchestration.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

For similar tasks

Telco-AIX

Telco-AIX is a collaborative experimental workspace dedicated to exploring data-driven decision-making use-cases using open source AI capabilities and open datasets. The repository focuses on projects related to revenue assurance, fraud management, service assurance, latency predictions, 5G network operations, sustainability, energy efficiency, SecOps-AI for networking, AI-powered SmartGrid, IoT perimeter security, anomaly detection, root cause analysis, customer relationship management voice app, Starlink quality of experience predictions, and NoC AI augmentation for OSS.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

- Semantic search

- Image search

- Product recommendations

- Chatbots

- Anomaly detection

Qdrant offers a variety of features, including:

- Payload storage and filtering

- Hybrid search with sparse vectors

- Vector quantization and on-disk storage

- Distributed deployment

- Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.
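To make the idea of vector similarity search with payload filtering concrete, here is a minimal sketch in plain Python. It does not use Qdrant or its client API; the `search` function, the `must` parameter, and the sample `POINTS` data are hypothetical names chosen for illustration, and cosine similarity is assumed as the distance metric.

```python
import math

# Hypothetical in-memory "collection": each point has an id, a vector, and a payload.
POINTS = [
    {"id": 1, "vector": [1.0, 0.0], "payload": {"kind": "alarm"}},
    {"id": 2, "vector": [0.0, 1.0], "payload": {"kind": "ticket"}},
    {"id": 3, "vector": [0.6, 0.8], "payload": {"kind": "alarm"}},
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(points, query, top_k=2, must=None):
    """Return the top_k (id, score) pairs, keeping only points whose payload matches `must`."""
    must = must or {}
    # Payload filtering: every key in `must` has to match exactly.
    candidates = [
        p for p in points
        if all(p["payload"].get(key) == value for key, value in must.items())
    ]
    # Brute-force ranking by similarity; a real engine would use an index instead.
    scored = [(p["id"], round(cosine(p["vector"], query), 3)) for p in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

Calling `search(POINTS, [1.0, 0.0], must={"kind": "alarm"})` ranks only the points tagged `alarm`. The brute-force scan is a deliberate simplification that keeps the example self-contained.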

SynapseML

SynapseML (previously known as MMLSpark) is an open-source library that simplifies the creation of massively scalable machine learning (ML) pipelines. It provides simple, composable, and distributed APIs for various machine learning tasks such as text analytics, vision, anomaly detection, and more. Built on Apache Spark, SynapseML allows seamless integration of models into existing workflows. It supports training and evaluation on single-node, multi-node, and resizable clusters, enabling scalability without resource wastage. Compatible with Python, R, Scala, Java, and .NET, SynapseML abstracts over different data sources for easy experimentation. Requires Scala 2.12, Spark 3.4+, and Python 3.8+.

mlx-vlm

MLX-VLM is a package for running vision language models (Vision LLMs) on Mac systems using MLX. It provides a convenient way to install and run these models for vision tasks, simplifying the process for users interested in leveraging MLX for vision-related projects.

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

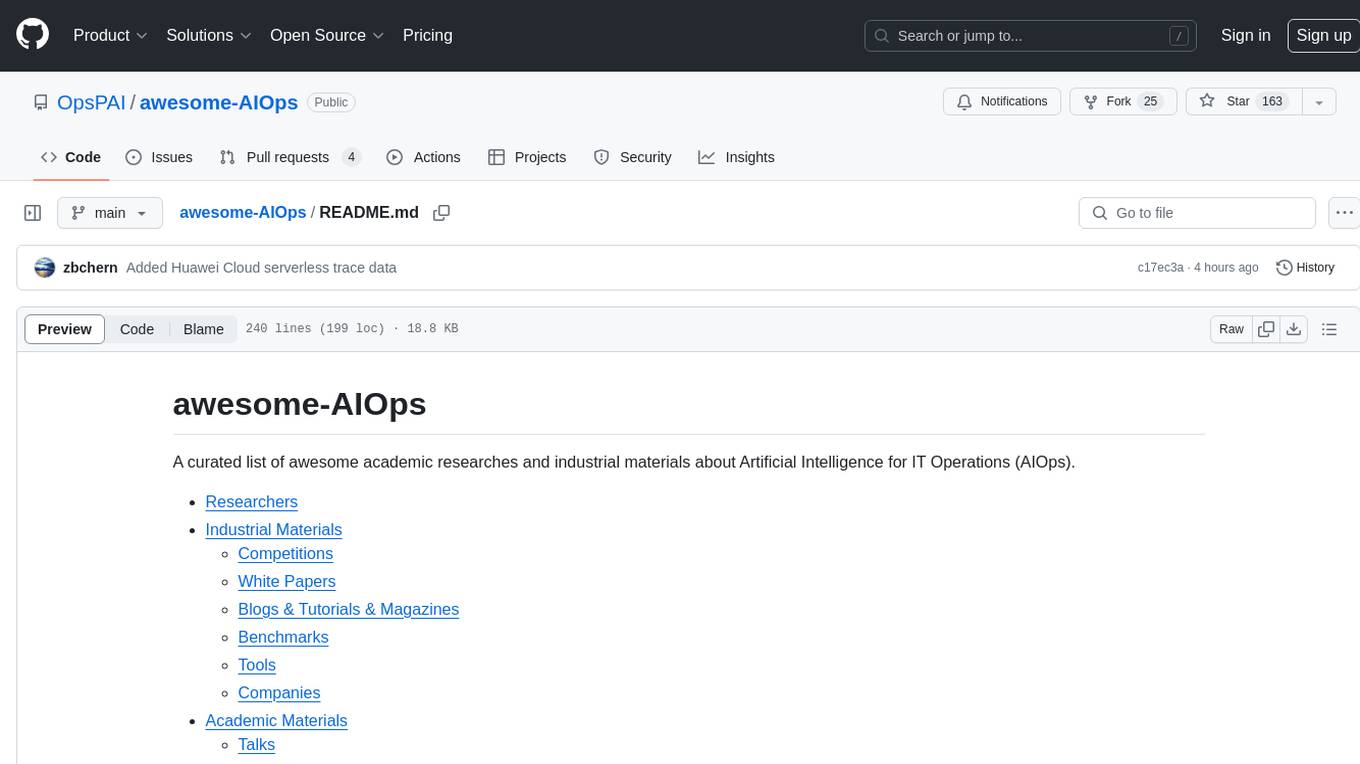

awesome-AIOps

awesome-AIOps is a curated list of academic research and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students
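The combination of per-key rate limits and cost limits mentioned for BricksLLM can be sketched as follows. This is an illustrative Python example, not BricksLLM's implementation or API (the gateway itself is written in Go). The `KeyLimits` class, its parameters, and the fixed-window strategy are all assumptions made for the sketch.

```python
class KeyLimits:
    """Hypothetical per-API-key limiter: a fixed-window request cap plus a total cost cap."""

    def __init__(self, max_requests, window_seconds, max_cost_usd):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.max_cost_usd = max_cost_usd
        self.window_start = 0.0  # start time of the current rate-limit window
        self.count = 0           # requests accepted in the current window
        self.spent_usd = 0.0     # cumulative cost of accepted requests

    def allow(self, now, cost_usd):
        """Return True and record the request if both limits permit it, else False."""
        # Start a fresh window once the previous one has elapsed.
        if now - self.window_start >= self.window_seconds:
            self.window_start = now
            self.count = 0
        if self.count >= self.max_requests:
            return False  # rate limit reached for this window
        if self.spent_usd + cost_usd > self.max_cost_usd:
            return False  # request would exceed the cost budget
        self.count += 1
        self.spent_usd += cost_usd
        return True
```

For example, `KeyLimits(max_requests=2, window_seconds=60, max_cost_usd=1.0)` would accept two requests per minute until the key has spent its one-dollar budget. Passing `now` explicitly, rather than reading the clock inside the method, keeps the sketch deterministic and easy to test.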

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.