LLMs4TS

Stars: 110

LLMs4TS is a repository focused on the application of cutting-edge AI technologies for time-series analysis. It covers advanced topics such as self-supervised learning, Graph Neural Networks for Time Series, Large Language Models for Time Series, Diffusion models, Mixture-of-Experts architectures, and Mamba models. The resources in this repository span various domains like healthcare, finance, and traffic, offering tutorials, courses, and workshops from prestigious conferences. Whether you're a professional, data scientist, or researcher, the tools and techniques in this repository can enhance your time-series data analysis capabilities.

README:

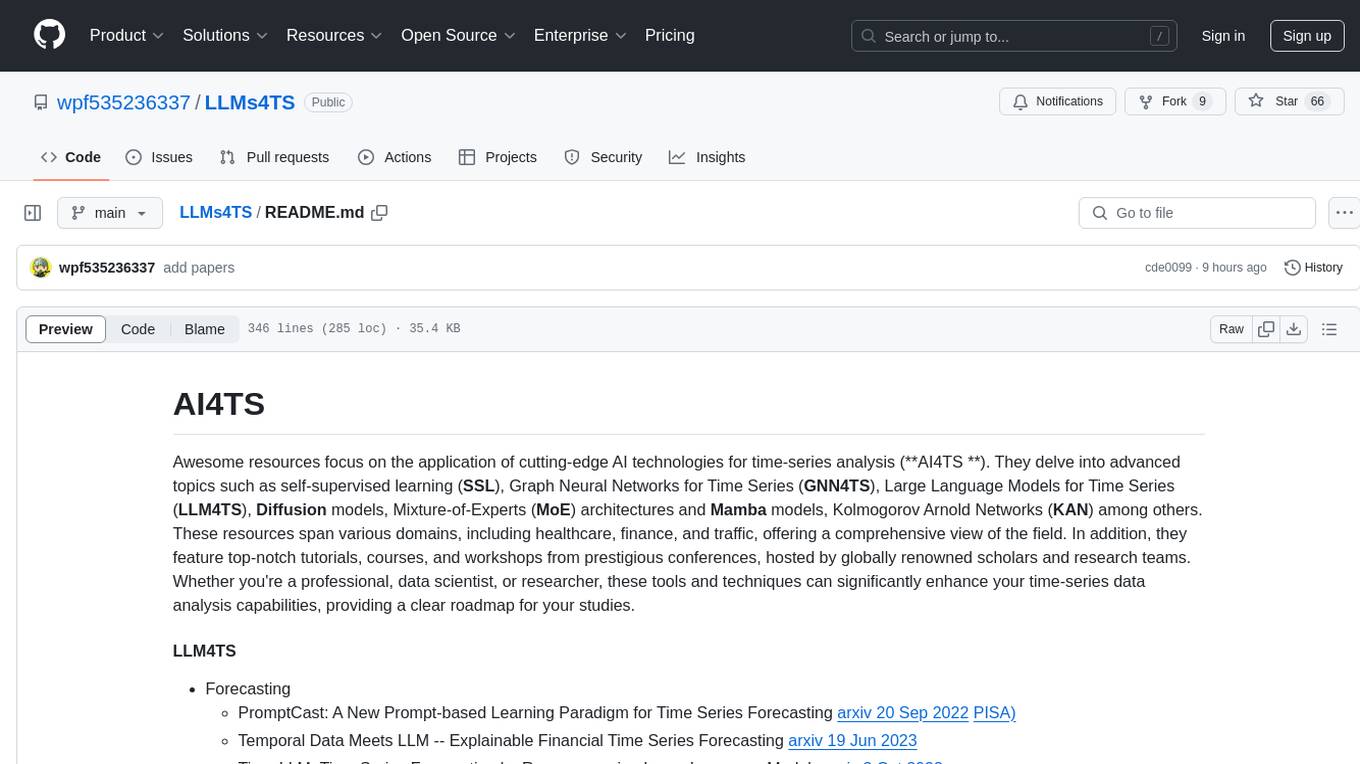

This repository collects awesome resources on the application of cutting-edge AI technologies to time-series analysis (AI4TS). It covers advanced topics such as self-supervised learning (SSL), Graph Neural Networks for Time Series (GNN4TS), Large Language Models for Time Series (LLM4TS), diffusion models, Mixture-of-Experts (MoE) architectures, Mamba models, Kolmogorov-Arnold Networks (KAN), and Learn at Test Time (TTT), among others. The resources span domains including healthcare, finance, and traffic. The list also features tutorials, courses, and workshops from major conferences, hosted by established scholars and research teams. Whether you're a professional, data scientist, or researcher, these tools and techniques can support your time-series analysis work and give you a roadmap for your studies.

Forecasting

- PromptCast: A New Prompt-based Learning Paradigm for Time Series Forecasting arxiv 20 Sep 2022 (PISA)

- Temporal Data Meets LLM -- Explainable Financial Time Series Forecasting arxiv 19 Jun 2023

- Time-LLM: Time Series Forecasting by Reprogramming Large Language Models arxiv 3 Oct 2023

- TimeGPT-1 arxiv 5 Oct 2023

- TEMPO: Prompt-based Generative Pre-trained Transformer for Time Series Forecasting arxiv 8 Oct 2023

- Pushing the Limits of Pre-training for Time Series Forecasting in the CloudOps Domain arxiv 8 Oct 2023

- Large Language Models Are Zero-Shot Time Series Forecasters NeurIPS 2023 11 Oct 2023 llmtime

- Lag-Llama: Towards Foundation Models for Time Series Forecasting arxiv 12 Oct 2023 Lag-Llama

- iTransformer: Inverted Transformers Are Effective for Time Series Forecasting arxiv 13 Oct 2023 thuml/iTransformer

- Harnessing LLMs for Temporal Data - A Study on Explainable Financial Time Series Forecasting 2023.emnlp-industry

- LLM4TS: Two-Stage Fine-Tuning for Time-Series Forecasting with Pre-Trained LLMs arxiv 16 Aug 2023

- UniTime: A Language-Empowered Unified Model for Cross-Domain Time Series Forecasting 15 Oct 2023

- AutoTimes: Autoregressive Time Series Forecasters via Large Language Models 4 Feb 2024

- Unified Training of Universal Time Series Forecasting Transformers 4 Feb 2024 SalesforceAIResearch/uni2ts

- LSTPrompt: Large Language Models as Zero-Shot Time Series Forecasters by Long-Short-Term Prompting 25 Feb 2024 LSTPrompt

- Multi-Patch Prediction: Adapting LLMs for Time Series Representation Learning 7 Feb 2024

- Chronos: Learning the Language of Time Series 12 Mar 2024 chronos

- S^2 IP-LLM: Semantic Space Informed Prompt Learning with LLM for Time Series Forecasting 9 Mar 2024

- CALF: Aligning LLMs for Time Series Forecasting via Cross-modal Fine-Tuning 12 Mar 2024 CALF

- TimeCMA: Towards LLM-Empowered Time Series Forecasting via Cross-Modality Alignment 3 Jun 2024

- TS-TCD: Triplet-Level Cross-Modal Distillation for Time-Series Forecasting Using Large Language Models 23 Sep 2024

- VITRO: Vocabulary Inversion for Time-series Representation Optimization ICASSP2025

Anomaly Detection

- Large Language Model Guided Knowledge Distillation for Time Series Anomaly Detection 26 Jan 2024 AnomalyLLM

- Large Language Models for Forecasting and Anomaly Detection: A Systematic Literature Review 15 Feb 2024

- Large language models can be zero-shot anomaly detectors for time series? 23 May 2024

- PeFAD: A Parameter-Efficient Federated Framework for Time Series Anomaly Detection KDD2024

- See it, Think it, Sorted: Large Multimodal Models are Few-shot Time Series Anomaly Analyzers 4 Nov 2024

Imputation

- GATGPT: A Pre-trained Large Language Model with Graph Attention Network for Spatiotemporal Imputation 24 Nov 2023

- NuwaTS: a Foundation Model Mending Every Incomplete Time Series 24 May 2024 Chengyui/NuwaTS

Classification

- TableTime: Reformulating Time Series Classification as Zero-Shot Table Understanding via Large Language Models 24 Nov 2024 tabletime

Spatio-temporal prediction

- Spatio-Temporal Graph Learning with Large Language Model 20 Sept 2023

- How Can Large Language Models Understand Spatial-Temporal Data? 25 Jan 2024

- UrbanGPT: Spatio-Temporal Large Language Models 25 Feb 2024

- TPLLM: A Traffic Prediction Framework Based on Pretrained Large Language Models 4 Mar 2024

- Spatial-Temporal Large Language Model for Traffic Prediction 18 Jan 2024 ChenxiLiu-HNU/ST-LLM

One Fits All

- One Fits All: Power General Time Series Analysis by Pretrained LM arxiv 23 Feb 2023 NeurIPS2023-One-Fits-All (unofficial reproduction)

- TEST: Text Prototype Aligned Embedding to Activate LLM's Ability for Time Series arxiv 16 Aug 2023 TEST

- Timer: Transformers for Time Series Analysis at Scale 4 Feb 2024

- UniTS: Building a Unified Time Series Model 29 Feb 2024 UniTS

- MOMENT: A Family of Open Time-series Foundation Models 6 Feb 2024 MOMENT

- TSLANet: Rethinking Transformers for Time Series Representation Learning ICML2024 TSLANet

- RWKV-TS: Beyond Traditional Recurrent Neural Network for Time Series Tasks 17 Jan 2024 RWKV-TS

- UniCL: A Universal Contrastive Learning Framework for Large Time Series Models 17 May 2024

Discussion

- Language models still struggle to zero-shot reason about time series 17 Apr 2024 TSandLanguage

- Are Language Models Actually Useful for Time Series Forecasting? 22 Jun 2024 TS_Models

- Towards Neural Scaling Laws for Time Series Foundation Models 16 Oct 2024

- Revisited Large Language Model for Time Series Analysis through Modality Alignment 16 Oct 2024

- Exploring Capabilities of Time Series Foundation Models in Building Analytics 28 Oct 2024

- Fundamental limitations of foundational forecasting models: The need for multimodality and rigorous evaluation NeurIPS 2024 invited talk

Reasoning

- ChatTS: Aligning Time Series with LLMs via Synthetic Data for Enhanced Understanding and Reasoning 4 Dec 2024

- Time Series as Images: Vision Transformer for Irregularly Sampled Time Series NeurIPS 2023 ViTST

- VisionTS: Visual Masked Autoencoders Are Free-Lunch Zero-Shot Time Series Forecasters 30 Aug 2024 Keytoyze/VisionTS

- CAFO: Feature-Centric Explanation on Time Series Classification KDD2024

- ViTime: A Visual Intelligence-Based Foundation Model for Time Series Forecasting 10 Jul 2024 IkeYang/ViTime

- Hierarchical Context Representation and Self-Adaptive Thresholding for Multivariate Anomaly Detection TKDE2024

- Training-Free Time-Series Anomaly Detection: Leveraging Image Foundation Models 27 Aug 2024

- Plots Unlock Time-Series Understanding in Multimodal Models 3 Oct 2024

- Vision-Enhanced Time Series Forecasting by Decomposed Feature Extraction and Composed Reconstruction submit ICLR2025

- ChatTime: A Unified Multimodal Time Series Foundation Model Bridging Numerical and Textual Data AAAI2025 ChatTime

- Meta-Transformer: A Unified Framework for Multimodal Learning 20 Jul 2023 Meta-Transformer

- UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio, Video,Point Cloud, Time-Series and Image Recognition 27 Nov 2023 UniRepLKNet

- ViT-Lens-2: Gateway to Omni-modal Intelligence 27 Nov 2023 ViT-Lens-2

- Patches Are All You Need? ICLR 24 Jan 2022 convmixer

- A Time Series is Worth 64 Words: Long-term Forecasting with Transformers ICLR 2023 27 Nov 2022 PatchTST

- PatchMixer: A Patch-Mixing Architecture for Long-Term Time Series Forecasting arxiv 1 Oct 2023 PatchMixer Chinese blog

- Learning to Embed Time Series Patches Independently NeurIPS Workshop on Self-Supervised Learning: Theory and Practice, 2023 pits

- The first step is the hardest: Pitfalls of Representing and Tokenizing Temporal Data for Large Language Models arxiv 12 Sep 2023

- What Makes for Good Visual Tokenizers for Large Language Models? 20 May 2023 GVT

- SpeechTokenizer: Unified Speech Tokenizer for Speech Large Language Models ICLR2024 SpeechTokenizer

- Pathformer: Multi-scale Transformers with Adaptive Pathways for Time Series Forecasting ICLR2024

- From Similarity to Superiority: Channel Clustering for Time Series Forecasting 31 Mar 2024

- TOTEM: TOkenized Time series EMbeddings for General Time Series Analysis 26 Feb 2024 TOTEM

- Medformer: A Multi-Granularity Patching Transformer for Medical Time-Series Classification arxiv 24 May 2024 DL4mHealth/Medformer

- Graph-Aware Contrasting for Multivariate Time-Series Classification AAAI2024 TSGAC

- GinAR: An End-To-End Multivariate Time Series Forecasting Model Suitable for Variable Missing KDD2024

- TSMixer: Lightweight MLP-Mixer Model for Multivariate Time Series Forecasting KDD2023 PatchTSMixer

- A Multi-Scale Decomposition MLP-Mixer for Time Series Analysis VLDB2024 zshhans/MSD-Mixer

- Tiny Time Mixers (TTMs): Fast Pre-trained Models for Enhanced Zero/Few-Shot Forecasting of Multivariate Time Series 8 Jan 2024

- U-Mixer: An Unet-Mixer Architecture with Stationarity Correction for Time Series Forecasting AAAI2024 U-Mixer

- LightTS: Lightweight Time Series Classification with Adaptive Ensemble Distillation—Extended Version SIGMOD 2023

- An Analysis of Linear Time Series Forecasting Models ICML2024

- SOFTS: Efficient Multivariate Time Series Forecasting with Series-Core Fusion 22 Apr 2024 Secilia-Cxy/SOFTS

- Mixture-of-Linear-Experts for Long-term Time Series Forecasting 11 Dec 2023

- Prompt-based Domain Discrimination for Multi-source Time Series Domain Adaptation 19 Dec 2023

- A Review of Sparse Expert Models in Deep Learning 4 Sep 2022

- MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts 8 Jan 2024

- ST-MoE: Spatio-Temporal Mixture of Experts for Multivariate Time Series Forecasting 2023ISKE

- Tutorial for Mixture of Expert (MoE) Forecasting Model — Merlion 1.1.0 documentation (salesforce.com)

- Time-MoE: Billion-Scale Time Series Foundation Models with Mixture of Experts 24 Sep 2024 Time-MoE

- Moirai-MoE: Empowering Time Series Foundation Models with Sparse Mixture of Experts 14 Oct 2024

- One Fits All: Universal Time Series Analysis by Pretrained LM and Specially Designed Adaptors 24 Nov 2023 GPT4TS_Adapter

- Low-Rank Adaptation of Time Series Foundational Models for Out-of-Domain Modality Forecasting 16 May 2024

- Personalized Adapter for Large Meteorology Model on Devices: Towards Weather Foundation Models 24 May 2024

- DualTime: A Dual-Adapter Multimodal Language Model for Time Series Representation 7 Jun 2024

- Channel-Aware Low-Rank Adaptation in Time Series Forecasting CIKM2024 C-LoRA

- Is Mamba Effective for Time Series Forecasting? 17 Mar 2024 wzhwzhwzh0921/S-D-Mamba

- TimeMachine: A Time Series is Worth 4 Mambas for Long-term Forecasting 14 Mar 2024 Atik-Ahamed/TimeMachine

- STG-Mamba: Spatial-Temporal Graph Learning via Selective State Space Model 19 Mar 2024

- SiMBA: Simplified Mamba-Based Architecture for Vision and Multivariate Time series 22 Mar 2024 badripatro/simba

- MambaMixer: Efficient Selective State Space Models with Dual Token and Channel Selection 29 Mar 2024

- Traj-LLM: A New Exploration for Empowering Trajectory Prediction with Pre-trained Large Language Models 8 May 2024

- Time-SSM: Simplifying and Unifying State Space Models for Time Series Forecasting 25 May 2024

- TSCMamba: Mamba Meets Multi-View Learning for Time Series Classification 6 Jun 2024

- A Mamba Foundation Model for Time Series Forecasting 5 Nov 2024

- KAN: Kolmogorov-Arnold Networks 30 Apr 2024 pykan

- TKAN: Temporal Kolmogorov-Arnold Networks arxiv 12 May 2024 TKAN

- Kolmogorov-Arnold Networks (KANs) for Time Series Analysis 14 May 2024

- Feature-Based Time Series Classification with Kolmogorov–Arnold Networks Simple-KAN-4-Time-Series

- Learning to (Learn at Test Time): RNNs with Expressive Hidden States 5 Jul 2024 test-time-training/ttt-lm-pytorch

- TimeMIL: Advancing Multivariate Time Series Classification via a Time-aware Multiple Instance Learning ICML2024 xiwenc1/TimeMIL

- A ConvNet for the 2020s CVPR2022 ConvNext

- ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders 2 Jan 2023 ConvNeXt-V2

- ModernTCN: A Modern Pure Convolution Structure for General Time Series Analysis ICLR2024 luodhhh/ModernTCN

- InceptionNeXt: When Inception Meets ConvNeXt CVPR2024 InceptionNeXt

- RWKV: Reinventing RNNs for the Transformer Era 22 May 2023

- RWKV-TS: Beyond Traditional Recurrent Neural Network for Time Series Tasks arxiv 17 Jan 2024 RWKV-TS

- Efficient and Effective Time-Series Forecasting with Spiking Neural Networks ICML2024

- xLSTM: Extended Long Short-Term Memory 7 May 2024 xLSTM

- Unlocking the Power of LSTM for Long Term Time Series Forecasting 19 Aug 2024

- TransNeXt: Robust Foveal Visual Perception for Vision Transformers CVPR2024

- PeFAD: A Parameter-Efficient Federated Framework for Time Series Anomaly Detection SIGKDD 2024 PeFAD

- Time-FFM: Towards LM-Empowered Federated Foundation Model for Time Series Forecasting NeurIPS24

- Neuro-GPT: Developing A Foundation Model for EEG arxiv 7 Nov 2023

- Brant: Foundation Model for Intracranial Neural Signal NeurIPS23

- Brant-2: Foundation Model for Brain Signals 15 Feb 2024

- Brant-X: A Unified Physiological Signal Alignment Framework KDD2024

- PPi: Pretraining Brain Signal Model for Patient-independent Seizure Detection NeurIPS23

- Large-scale training of foundation models for wearable biosignals submit ICLR 2024

- BIOT: Cross-data Biosignal Learning in the Wild NeurIPS23 BIOT

- Large Brain Model for Learning Generic Representations with Tremendous EEG Data in BCI submit ICLR 2024

- Practical intelligent diagnostic algorithm for wearable 12-lead ECG via self-supervised learning on large-scale dataset Nature Communications 2023

- Large AI Models in Health Informatics: Applications, Challenges, and the Future IEEE Journal of Biomedical and Health Informatics Awesome-Healthcare-Foundation-Models

- Data science opportunities of large language models for neuroscience and biomedicine Neuron

- BioSignal Copilot: Leveraging the power of LLMs in drafting reports for biomedical signals July 06, 2023

- Health-LLM: Large Language Models for Health Prediction via Wearable Sensor Data 12 Jan 2024

- Self-supervised Learning for Electroencephalogram: A Systematic Survey 9 Jan 2024

- Learning Topology-Agnostic EEG Representations with Geometry-Aware Modeling NeurIPS 2023 MMM

- A Survey of Large Language Models in Medicine: Progress, Application, and Challenge 9 Nov 2023 AI-in-Health/MedLLMsPracticalGuide

- EEG-GPT: Exploring Capabilities of Large Language Models for EEG Classification and Interpretation 31 Jan 2024

- A Survey on Multimodal Wearable Sensor-based Human Action Recognition 14 Apr 2024

- Unveiling Thoughts: A Review of Advancements in EEG Brain Signal Decoding into Text 26 Apr 2024

- AI for Biomedicine in the Era of Large Language Models 23 Mar 2024

- NeuroLM: A Universal Multi-task Foundation Model for Bridging the Gap between Language and EEG Signals 27 Aug 2024

- EEG-Language Modeling for Pathology Detection 2 Sep 2024

- Interpretable and Robust AI in EEG Systems: A Survey 21 Apr 2023

- A Survey of Spatio-Temporal EEG data Analysis: from Models to Applications 26 Sep 2024

- Repurposing Foundation Model for Generalizable Medical Time Series Classification 3 Oct 2024 FORMED

- A Survey of Few-Shot Learning for Biomedical Time Series 3 May 2024

- CBraMod: A Criss-Cross Brain Foundation Model for EEG Decoding 10 Dec 2024 CBraMod

- Foundation Models for Weather and Climate Data Understanding: A Comprehensive Survey 5 Dec 2023

- Towards Urban General Intelligence: A Review and Outlook of Urban Foundation Models usail-hkust/Awesome-Urban-Foundation-Models

- Urban Foundation Models: A Survey KDD2024

- Biosignal

- Frozen Language Model Helps ECG Zero-Shot Learning 22 Mar 2023

- Learning Transferable Time Series Classifier with Cross-Domain Pre-training from Language Model 19 Mar 2024 CrossTimeNet

- Zero-Shot ECG Diagnosis with Large Language Models and Retrieval-Augmented Generation ML4H2023

- Large Language Models with Retrieval-Augmented Generation for Zero-Shot Disease Phenotyping 11 Dec 2023

- Zero-Shot ECG Classification with Multimodal Learning and Test-time Clinical Knowledge Enhancement 11 Mar 2024

- Electrocardiogram Instruction Tuning for Report Generation 7 Mar 2024

- Multimodal Pretraining of Medical Time Series and Notes PMLR2023 kingrc15/multimodal-clinical-pretraining

- SleepFM: Multi-modal Representation Learning for Sleep across ECG, EEG and Respiratory Signals AAAI 2024 Spring Symposium Series Clinical FMs

- MedTsLLM: Leveraging LLMs for Multimodal Medical Time Series Analysis arxiv 2408.07773 MedTsLLM

- Financial

- FinGPT: Open-Source Financial Large Language Models arxiv 9 Jun 2023 AI4Finance-Foundation/FinNLP

- FinVis-GPT: A Multimodal Large Language Model for Financial Chart Analysis FinLLM 2023 @ IJCAI 2023

- Insight Miner: A Time Series Analysis Dataset for Cross-Domain Alignment with Natural Language [NeurIPS2023-AI4Science Poster](https://openreview.net/forum?id=E1khscdUdH)

- A Survey of Large Language Models for Financial Applications: Progress, Prospects and Challenges 15 Jun 2024

- Other fields

- GPT4MTS: Prompt-Based Large Language Model for Multimodal Time-Series Forecasting AAAI2024

- Advancing Time Series Classification with Multimodal Language Modeling 19 Mar 2024 Mingyue-Cheng/InstructTime

- Frequency-Aware Masked Autoencoders for Multimodal Pretraining on Biosignals 12 Sep 2023

- FOCAL: Contrastive Learning for Multimodal Time-Series Sensing Signals in Factorized Orthogonal Latent Space NeurIPS'23 30 Oct 2023 focal

- Multimodal Adaptive Emotion Transformer with Flexible Modality Inputs on A Novel Dataset with Continuous Labels ACMMM 27 October 2023

- Improving day-ahead Solar Irradiance Time Series Forecasting by Leveraging Spatio-Temporal Context 1 Jun 2023 CrossViVit

- DualTime: A Dual-Adapter Multimodal Language Model for Time Series Representation 7 Jun 2024

- Mipha: A Comprehensive Overhaul of Multimodal Assistant with Small Language Models 10 Mar 2024 LLaVA-Phi

- Efficient Multimodal Large Language Models: A Survey 17 May 2024 swordlidev/Efficient-Multimodal-LLMs-Survey

- On-Device Language Models: A Comprehensive Review 26 Aug 2024 NexaAI/Awesome-LLMs-on-device

- Small Language Models: Survey, Measurements, and Insights 24 Sep 2024 UbiquitousLearning/SLM_Survey

- TS-Benchmark: A Benchmark for Time Series Databases ICDE2021

- TimeEval: a benchmarking toolkit for time series anomaly detection algorithms VLDB2022

- Class-incremental Learning for Time Series: Benchmark and Evaluation KDD2024(ADS track) zqiao11/TSCIL

- TFB: Towards Comprehensive and Fair Benchmarking of Time Series Forecasting Methods VLDB2024 TFB

- The Capacity and Robustness Trade-off: Revisiting the Channel Independent Strategy for Multivariate Time Series Forecasting TKDE2024 channel_independent_MTSF

- Deep Time Series Models: A Comprehensive Survey and Benchmark 18 Jul 2024 TSLib

- UP2ME: Univariate Pre-training to Multivariate Fine-tuning as a General-purpose Framework for Multivariate Time Series Analysis ICML2024 UP2ME

- Time-MMD: A New Multi-Domain Multimodal Dataset for Time Series Analysis 12 Jun 2024 AdityaLab/MM-TSFlib

- TSI-Bench: Benchmarking Time Series Imputation 18 Jun 2024 TSI-Bench

- Guidelines for Augmentation Selection in Contrastive Learning for Time Series Classification 12 Jul 2024 TS-Contrastive-Augmentation-Recommendation

- FoundTS: Comprehensive and Unified Benchmarking of Foundation Models for Time Series Forecasting 15 Oct 2024 FoundTS-C2B0

- LibEER: A Comprehensive Benchmark and Algorithm Library for EEG-based Emotion Recognition 13 Oct 2024 LibEER

- Evaluating Large Language Models on Time Series Feature Understanding: A Comprehensive Taxonomy and Benchmark EMNLP2024

- GIFT-Eval: A Benchmark For General Time Series Forecasting Model Evaluation 14 Oct 2024 GIFT-Eval

- AD-LLM: Benchmarking Large Language Models for Anomaly Detection 15 Dec 2024 AD-LLM

Representation learning (self-supervised, semi-supervised, and supervised learning)

- Self-supervised Contrastive Representation Learning for Semi-supervised Time-Series Classification TPAMI 13 Aug 2022 CA-TCC

- Deep Learning for Time Series Classification and Extrinsic Regression: A Current Survey ACM Computing Surveys, 2023

- Label-efficient Time Series Representation Learning: A Review 13 Feb 2023

- Self-Supervised Learning for Time Series Analysis: Taxonomy, Progress, and Prospects 16 Jun 2023 SSL4TS

- Unsupervised Representation Learning for Time Series: A Review 3 Aug 2023 ULTS

- Self-Supervised Learning for Time Series: Contrastive or Generative? AI4TS workshop at IJCAI 2023 SSL_Comparison

- Self-Supervised Contrastive Learning for Medical Time Series: A Systematic Review Sensors 2023 Contrastive-Learning-in-Medical-Time-Series-Survey

- Applications of Self-Supervised Learning to Biomedical Signals: where are we now posted 11 Apr 2023

- What Constitutes Good Contrastive Learning in Time-Series Forecasting? last revised 13 Aug 2023

- A review of self-supervised learning methods in the field of ECG 2023

- Universal Time-Series Representation Learning: A Survey 8 Jan 2024 itouchz/awesome-deep-time-series-representations

- Deep Learning for Trajectory Data Management and Mining: A Survey and Beyond 21 Mar 2024 yoshall/Awesome-Trajectory-Computing

- Scaling-laws for Large Time-series Models 22 May 2024

- Deep Time Series Forecasting Models: A Comprehensive Survey Mathematics 2024

GNN4TS

- A Survey on Graph Neural Networks for Time Series: Forecasting, Classification, Imputation, and Anomaly Detection 7 Jul 2023 KimMeen/Awesome-GNN4TS

- K-Link: Knowledge-Link Graph from LLMs for Enhanced Representation Learning in Multivariate Time-Series Data 6 Mar 2024

Generative models

General

- A Survey of Generative Techniques for Spatial-Temporal Data Mining 15 May 2024

Diffusion4TS

- Diffusion models for time-series applications: a survey 1 May 2023

- A Survey on Diffusion Models for Time Series and Spatio-Temporal Data 29 Apr 2024 yyysjz1997/Awesome-TimeSeries-SpatioTemporal-Diffusion-Model

LLM4TS

- Large Models for Time Series and Spatio-Temporal Data: A Survey and Outlook arxiv 16 Oct 2023 Awesome-TimeSeries-SpatioTemporal-LM-LLM

- Large Language Models for Time Series: A Survey 2 Feb 2024 xiyuanzh/awesome-llm-time-series

- Position Paper: What Can Large Language Models Tell Us about Time Series Analysis 5 Feb 2024

- Empowering Time Series Analysis with Large Language Models: A Survey 5 Feb 2024

- Time Series Forecasting with LLMs: Understanding and Enhancing Model Capabilities 16 Feb 2024

- A Survey of Time Series Foundation Models: Generalizing Time Series Representation with Large Language Model 3 May 2024 start2020/Awesome-TimeSeries-LLM-FM

- Large Language Models for Mobility in Transportation Systems: A Survey on Forecasting Tasks 3 May 2024

Foundation and Pre-Trained models

- A Survey on Time-Series Pre-Trained Models 18 May 2023 time-series-ptms

- Toward a Foundation Model for Time Series Data 21 Oct 2023 code

- A Review for Pre-Trained Transformer-Based Time Series Forecasting Models ITMS2023

- A Survey of Deep Learning and Foundation Models for Time Series Forecasting 25 Jan 2024

- Foundation Models for Time Series Analysis: A Tutorial and Survey 21 Mar 2024

- Heterogeneous Contrastive Learning for Foundation Models and Beyond 30 Mar 2024

- A Comprehensive Survey of Large Language Models and Multimodal Large Language Models in Medicine 14 May 2024

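Application

- Deep Learning for Multivariate Time Series Imputation: A Survey 6 Feb 2024 WenjieDu/Awesome_Imputation

Chinese

- 任利强, 贾舒宜, 王海鹏, et al. A Survey of Deep Learning-Based Time Series Classification [J/OL]. Journal of Electronics & Information Technology (电子与信息学报): 1-23 [2024-05-25]. http://kns.cnki.net/kcms/detail/11.4494.TN.20240109.0749.008.html.

- 毛远宏, 孙琛琛, 徐鲁豫, et al. A Survey of Deep Learning-Based Time Series Forecasting Methods [J]. Microelectronics & Computer (微电子学与计算机), 2023, 40(04): 8-17. DOI:10.19304/J.ISSN1000-7180.2022.0725.

- 梁宏涛, 刘硕, 杜军威, et al. A Survey of Deep Learning Applied to Time Series Forecasting [J]. Journal of Frontiers of Computer Science and Technology (计算机科学与探索), 2023, 17(06): 1285-1300.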

- MulTiSA 2024 | MultiTISA 2024 in conjunction with ICDE'24

- Time Series in the Age of Large Models in NeurIPS'2024

- KDD2024 - Frontiers of Foundation Models for Time Series

- ddz16/TSFpaper

- Time_Series_Instruct

- qingsongedu/Awesome-TimeSeries-AIOps-LM-LLM

- LLM for Time Series

- Time Series AI Papers

- Multivariate Time Series Transformer Framework

- xiyuanzh/time-series-papers

- vincentlux/Awesome-Multimodal-LLM

- yyysjz1997/Awesome-TimeSeries-SpatioTemporal-Diffusion-Model

- SitaoLuan/LLM4Graph

- willxxy/awesome-mmps

- mintisan/awesome-kan: A comprehensive collection of KAN (Kolmogorov-Arnold Network) resources, including libraries, projects, tutorials, papers, and more.

- xmindflow/Awesome_Mamba

Foundation-Models

- uncbiag/Awesome-Foundation-Models

- UbiquitousLearning/Efficient_Foundation_Model_Survey

- zhanghm1995/Forge_VFM4AD

- Yangyi-Chen/Multimodal-AND-Large-Language-Models

- MM-LLMs: Recent Advances in MultiModal Large Language Models

- A Survey on Benchmarks of Multimodal Large Language Models swordlidev/Evaluation-Multimodal-LLMs-Survey

- Learning on Multimodal Graphs: A Survey

- The (R)Evolution of Multimodal Large Language Models: A Survey

- A Practical Guide for Medical Large Language Models

- Instruction Tuning for Large Language Models: A Survey xiaoya-li/Instruction-Tuning-Survey

- [Visual Instruction Tuning towards General-Purpose Multimodal Model: A Survey](https://arxiv.org/abs/2312.16602)

- Brain-Conditional Multimodal Synthesis: A Survey and Taxonomy

- Towards Graph Foundation Models: A Survey and Beyond

- Westlake-AI/openmixup: CAIRI Supervised, Semi- and Self-Supervised Visual Representation Learning Toolbox and Benchmark

- open-mmlab/mmselfsup: OpenMMLab Self-Supervised Learning Toolbox and Benchmark

- lightly-ai/lightly: A Python library for self-supervised learning on images.

- Anomaly Detection in Time Series using ChatGPT

- Change Point Detection in Time Series using ChatGPT

- Hugging Face mirror site

- Huiguang He - Chinese Academy of Sciences, Institute of Automation

- Changde Du - Personal Homepage

- Bao-Liang Lu - Home Page

- Wei-Long Zheng - Personal Homepage

- Dongrui Wu - Huazhong University of Science and Technology, Brain-Computer Interface and Machine Learning Lab

- Yang Yang - Zhejiang University

- Shenda Hong - Personal Homepage

- Xiang Zhang - Personal Homepage

- Mingsheng Long - Tsinghua University

- Huaiyu Wan - Publications

- Shengnan Guo - Faculty Directory

- Haomin Wen - Personal Homepage

- Min Wu - Google Site

- Emadeldeen Eldele - Personal Homepage

- Yucheng Wang - Personal Homepage

- Ming Jin - Home Page

- Yuxuan Liang - Publications

- Xiyuan Zhang - Home Page

- Mingyue Cheng - Home Page

- Deep Learning for Mobile Health Lab - GitHub Repository

- Temporal and Spatial Data Mining Research Teams - Zhihu Discussion

- Time Series Analysis from Nanjing University

- Time-Series Analysis from Intel

- Course homepage of Li Dongfeng (李东风), School of Mathematical Sciences, Peking University, Spring 2024 semester (pku.edu.cn)


awesome-artificial-intelligence-research

The 'Awesome Artificial Intelligence Research' repository is a curated list of up-to-date research papers in the field of Artificial Intelligence (AI). It aims to help researchers stay informed about cutting-edge research trends and topics in AI by providing a comprehensive collection of research paper lists. The repository covers various subfields of AI, including Machine Learning, Data Mining, Computer Vision, Natural Language Processing, Audio & Speech, and other applications. It also includes tools for research such as public datasets and new paper recommendations.

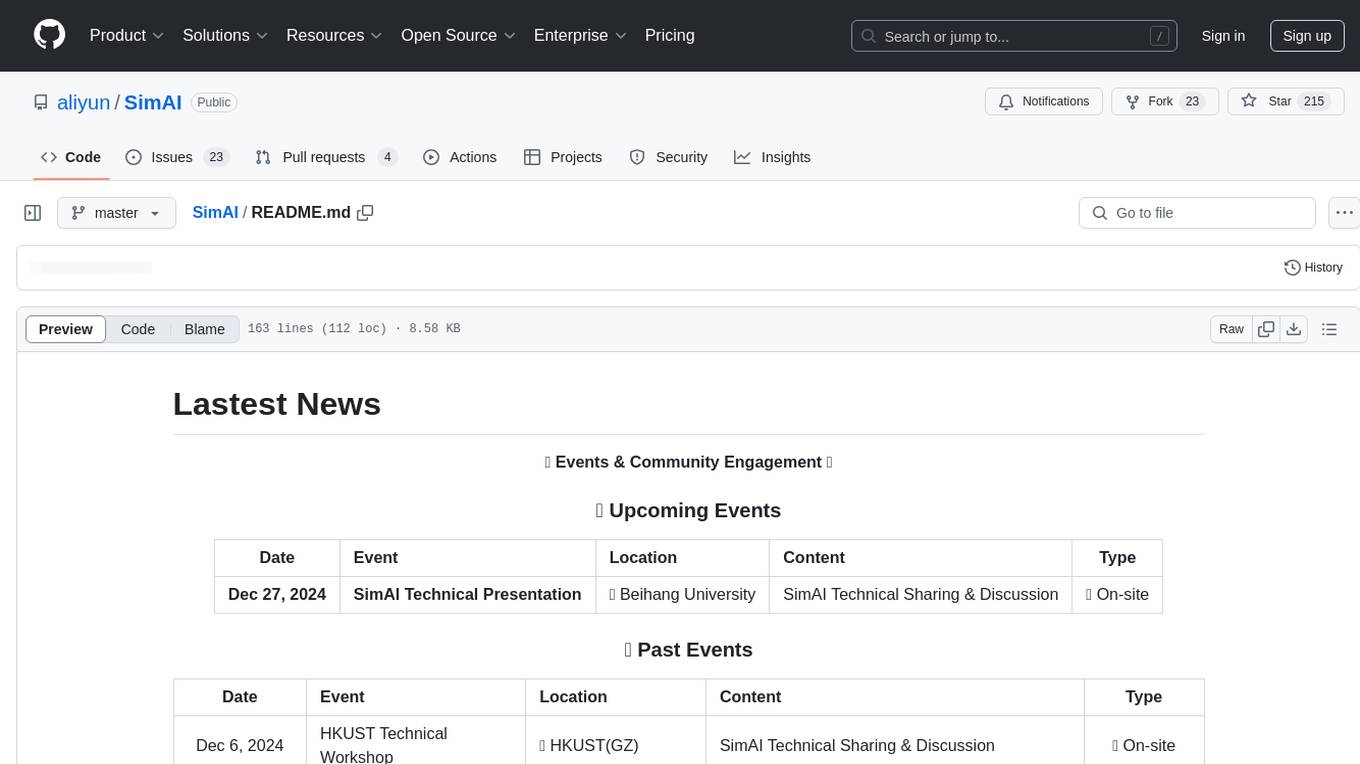

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

Prompt_Engineering

Prompt Engineering Techniques is a comprehensive repository for learning, building, and sharing prompt engineering techniques, from basic concepts to advanced strategies for leveraging large language models. It provides step-by-step tutorials, practical implementations, and a platform for showcasing innovative prompt engineering techniques. The repository covers fundamental concepts, core techniques, advanced strategies, optimization and refinement, specialized applications, and advanced applications in prompt engineering.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

For similar tasks

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito largely simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.