Best AI tools for< Natural Language Processing Researcher >

Infographic

20 - AI tool Sites

Storia AI

Storia AI is an AI tool designed to assist software engineering teams in understanding and generating code. It provides a Perplexity-like chat experience where users can interact with an AI expert that has access to the latest versions of open-source software. The tool aims to improve code understanding and generation by providing responses backed with links to implementations, API references, GitHub issues, and more. Storia AI is developed by a team of natural language processing researchers from Google and Amazon Alexa, with a mission to build the most reliable AI pair programmer for engineering teams.

Chat2CSV

The website offers an AI-powered tool for easy data visualization through natural language commands. Users can transform CSV data into various charts without the need for complex coding. The platform provides a wide range of chart types, smart AI support, and prioritizes data security and privacy. It simplifies data visualization tasks, making it intuitive and versatile for users to create visual insights effortlessly.

NLTK

NLTK (Natural Language Toolkit) is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries, and an active discussion forum. Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics, plus comprehensive API documentation, NLTK is suitable for linguists, engineers, students, educators, researchers, and industry users alike.

Iflow

Iflow is an AI assistant application designed to help users efficiently acquire knowledge in various areas, whether it's for daily entertainment, general life knowledge, or professional academic research. It provides real-time answers to questions, summarizes lengthy articles, and assists in structuring documents to enhance creativity and productivity. With Iflow, users can easily enter a state of flow where knowledge flows effortlessly. The application covers a wide range of topics and is equipped with advanced natural language processing capabilities to cater to diverse user needs.

Medallia

Medallia is a real-time text analytics software that empowers organizations to derive actionable insights from customer interactions. With a focus on omnichannel analytics, Medallia's AI-powered platform enables users to identify emerging trends, prioritize key insights, and drive real-time actions. By leveraging natural language understanding and out-of-the-box topic models, Medallia offers customizable KPIs and scalable text analytics solutions for various industries. The platform aims to transform unstructured data into actionable insights to enhance customer and employee experiences.

Datumbox

Datumbox is a machine learning platform that offers a powerful open-source Machine Learning Framework written in Java. It provides a large collection of algorithms, models, statistical tests, and tools to power up intelligent applications. The platform enables developers to build smart software and services quickly using its REST Machine Learning API. Datumbox API offers off-the-shelf Classifiers and Natural Language Processing services for applications like Sentiment Analysis, Topic Classification, Language Detection, and more. It simplifies the process of designing and training Machine Learning models, making it easy for developers to create innovative applications.

Locus

Locus is a free browser extension that uses natural language processing to help users quickly find information on any web page. It allows users to search for specific terms or concepts using natural language queries, and then instantly jumps to the relevant section of the page. Locus also integrates with AI-powered tools such as GPT-3.5 to provide additional functionality, such as summarizing text and generating code. With Locus, users can save time and improve their productivity when reading and researching online.

PaperClip

PaperClip is an AI tool designed to help users keep track of and memorize details from AI research papers, machine learning blog posts, and news articles. It allows users to easily find back important findings, search through saved content, and clean up data. The tool runs locally on the user's machine, ensuring data privacy and offline support. PaperClip is a convenient solution for researchers, students, and professionals in the AI field.

FutureSmart AI

FutureSmart AI is a platform that provides custom Natural Language Processing (NLP) solutions. The platform focuses on integrating Mem0 with LangChain to enhance AI Assistants with Intelligent Memory. It offers tutorials, guides, and practical tips for building applications with large language models (LLMs) to create sophisticated and interactive systems. FutureSmart AI also features internship journeys and practical guides for mastering RAG with LangChain, catering to developers and enthusiasts in the realm of NLP and AI.

AutoGPT

AutoGPT is an AI News & Articles Blog that serves as a comprehensive resource hub for AI enthusiasts. From breaking news to hands-on tutorials, the platform offers expert insights and tool reviews to help users leverage AI in their work and daily life.

SheetGPT

SheetGPT is an add-on for Google Sheets that allows users to integrate OpenAI's text and image generation capabilities into their spreadsheets. It is designed to be easy to use, with no API keys required, and offers a range of features including content creation, research and organization, summarization, and prototyping. SheetGPT is suitable for a variety of users, including content creators, digital marketing managers, researchers, and product managers.

Ai Kit Finder

Ai Kit Finder is a website that provides a directory of AI tools and applications. The website includes a search bar that allows users to search for AI tools by category, feature, or keyword. Ai Kit Finder also provides detailed descriptions of each AI tool, including its features, advantages, and disadvantages. Additionally, the website includes a blog that provides articles on the latest AI trends and developments.

Prefit.AI

Prefit.AI is a generative AI search engine that enables users to quickly generate new content based on a variety of inputs. It can explore and analyze complex data in new ways, discover new trends and patterns, and summarize content, outline multiple solution paths, brainstorm ideas, and create detailed documentation from research notes. Prefit.AI can also respond naturally to human conversation and serve as a tool for customer service and personalization of customer workflows. It can augment employee workflows and act as efficient assistants for everyone in your organization.

Sourcely

Sourcely is an AI-powered academic search assistant designed to help users find, summarize, and add credible academic sources efficiently. With access to over 200 million research papers and advanced search filters, Sourcely streamlines the research process by finding sources, summarizing them, and exporting citations instantly. It offers features such as advanced citation tools, precise search filters, targeted citations, free PDF downloads, citations library, credible summaries, and export references in various formats. Sourcely is a valuable tool for researchers, students, and professionals looking to enhance the quality of their work and save time in sourcing and referencing.

Ask a Philosopher

Ask a Philosopher is a website where users can submit questions to a philosopher and receive answers. The platform allows individuals to seek philosophical insights and perspectives on various topics. It is a space for intellectual exploration and discussion, offering a unique opportunity to engage with philosophical thinking in a practical and accessible way.

Summarizer

Summarizer is a Chrome extension that allows users to summarize articles and webpages quickly and efficiently. With this tool, users can extract key information from lengthy texts, saving time and enhancing productivity. The extension provides concise summaries that capture the main points of the content, making it easier for users to grasp the essential details without having to read through the entire text. Summarizer is a valuable tool for students, researchers, professionals, and anyone who needs to process large amounts of information in a short time.

Explosion

Explosion is a software company specializing in developer tools and tailored solutions for AI, Machine Learning, and Natural Language Processing (NLP). They are the makers of spaCy, one of the leading open-source libraries for advanced NLP. The company offers consulting services and builds developer tools for various AI-related tasks, such as coreference resolution, dependency parsing, image classification, named entity recognition, and more.

Nextatlas Generate

Nextatlas Generate is an AI-powered generative trend forecasting service that provides deep insights into market trends and consumer behavior. It leverages cutting-edge AI technology to offer specialized assistants for market research, innovation scouting, brand strategy, and more. The service analyzes real-time social media data, online content, and various sources to uncover emerging trends, consumer behaviors, and business cases. Nextatlas Generate Suite is designed to help businesses make data-driven decisions and stay ahead in their industries.

AI Jobs

AI Jobs is a curated list of the best AI jobs for developers, designers and marketers. It provides a platform for companies to post their AI-related job openings and for job seekers to find their dream AI job. The website also includes a blog with articles on the latest AI trends and technologies.

Undressing AI

Undressing AI is a website that provides information about artificial intelligence (AI) and its potential impact on society. The site includes articles, videos, and other resources on topics such as the history of AI, the different types of AI, and the ethical implications of AI.

26 - Open Source Tools

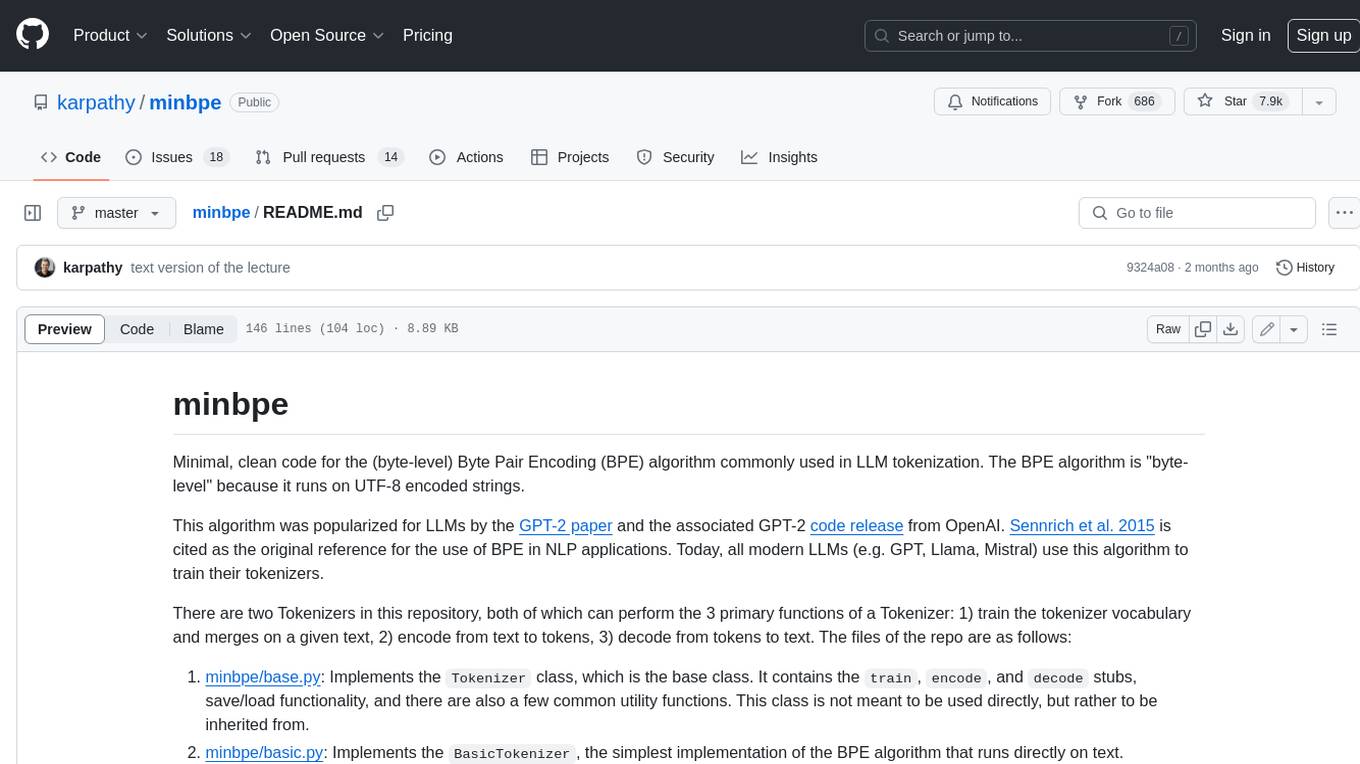

minbpe

This repository contains a minimal, clean code implementation of the Byte Pair Encoding (BPE) algorithm, commonly used in LLM tokenization. The BPE algorithm is "byte-level" because it runs on UTF-8 encoded strings. This algorithm was popularized for LLMs by the GPT-2 paper and the associated GPT-2 code release from OpenAI. Sennrich et al. 2015 is cited as the original reference for the use of BPE in NLP applications. Today, all modern LLMs (e.g. GPT, Llama, Mistral) use this algorithm to train their tokenizers. There are two Tokenizers in this repository, both of which can perform the 3 primary functions of a Tokenizer: 1) train the tokenizer vocabulary and merges on a given text, 2) encode from text to tokens, 3) decode from tokens to text. The files of the repo are as follows: 1. minbpe/base.py: Implements the `Tokenizer` class, which is the base class. It contains the `train`, `encode`, and `decode` stubs, save/load functionality, and there are also a few common utility functions. This class is not meant to be used directly, but rather to be inherited from. 2. minbpe/basic.py: Implements the `BasicTokenizer`, the simplest implementation of the BPE algorithm that runs directly on text. 3. minbpe/regex.py: Implements the `RegexTokenizer` that further splits the input text by a regex pattern, which is a preprocessing stage that splits up the input text by categories (think: letters, numbers, punctuation) before tokenization. This ensures that no merges will happen across category boundaries. This was introduced in the GPT-2 paper and continues to be in use as of GPT-4. This class also handles special tokens, if any. 4. minbpe/gpt4.py: Implements the `GPT4Tokenizer`. This class is a light wrapper around the `RegexTokenizer` (2, above) that exactly reproduces the tokenization of GPT-4 in the tiktoken library. The wrapping handles some details around recovering the exact merges in the tokenizer, and the handling of some unfortunate (and likely historical?) 1-byte token permutations. Finally, the script train.py trains the two major tokenizers on the input text tests/taylorswift.txt (this is the Wikipedia entry for her kek) and saves the vocab to disk for visualization. This script runs in about 25 seconds on my (M1) MacBook. All of the files above are very short and thoroughly commented, and also contain a usage example on the bottom of the file.

dolma

Dolma is a dataset and toolkit for curating large datasets for (pre)-training ML models. The dataset consists of 3 trillion tokens from a diverse mix of web content, academic publications, code, books, and encyclopedic materials. The toolkit provides high-performance, portable, and extensible tools for processing, tagging, and deduplicating documents. Key features of the toolkit include built-in taggers, fast deduplication, and cloud support.

MemGPT

MemGPT is a system that intelligently manages different memory tiers in LLMs in order to effectively provide extended context within the LLM's limited context window. For example, MemGPT knows when to push critical information to a vector database and when to retrieve it later in the chat, enabling perpetual conversations. MemGPT can be used to create perpetual chatbots with self-editing memory, chat with your data by talking to your local files or SQL database, and more.

DAMO-ConvAI

DAMO-ConvAI is the official repository for Alibaba DAMO Conversational AI. It contains the codebase for various conversational AI models and tools developed by Alibaba Research. These models and tools cover a wide range of tasks, including natural language understanding, natural language generation, dialogue management, and knowledge graph construction. DAMO-ConvAI is released under the MIT license and is available for use by researchers and developers in the field of conversational AI.

mnn-llm

MNN-LLM is a high-performance inference engine for large language models (LLMs) on mobile and embedded devices. It provides optimized implementations of popular LLM models, such as ChatGPT, BLOOM, and GPT-3, enabling developers to easily integrate these models into their applications. MNN-LLM is designed to be efficient and lightweight, making it suitable for resource-constrained devices. It supports various deployment options, including mobile apps, web applications, and embedded systems. With MNN-LLM, developers can leverage the power of LLMs to enhance their applications with natural language processing capabilities, such as text generation, question answering, and dialogue generation.

rank_llm

RankLLM is a suite of prompt-decoders compatible with open source LLMs like Vicuna and Zephyr. It allows users to create custom ranking models for various NLP tasks, such as document reranking, question answering, and summarization. The tool offers a variety of features, including the ability to fine-tune models on custom datasets, use different retrieval methods, and control the context size and variable passages. RankLLM is easy to use and can be integrated into existing NLP pipelines.

langcheck

LangCheck is a Python library that provides a suite of metrics and tools for evaluating the quality of text generated by large language models (LLMs). It includes metrics for evaluating text fluency, sentiment, toxicity, factual consistency, and more. LangCheck also provides tools for visualizing metrics, augmenting data, and writing unit tests for LLM applications. With LangCheck, you can quickly and easily assess the quality of LLM-generated text and identify areas for improvement.

unitxt

Unitxt is a customizable library for textual data preparation and evaluation tailored to generative language models. It natively integrates with common libraries like HuggingFace and LM-eval-harness and deconstructs processing flows into modular components, enabling easy customization and sharing between practitioners. These components encompass model-specific formats, task prompts, and many other comprehensive dataset processing definitions. The Unitxt-Catalog centralizes these components, fostering collaboration and exploration in modern textual data workflows. Beyond being a tool, Unitxt is a community-driven platform, empowering users to build, share, and advance their pipelines collaboratively.

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

LLMLingua

LLMLingua is a tool that utilizes a compact, well-trained language model to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models, achieving up to 20x compression with minimal performance loss. The tool includes LLMLingua, LongLLMLingua, and LLMLingua-2, each offering different levels of prompt compression and performance improvements for tasks involving large language models.

awesome-tool-llm

This repository focuses on exploring tools that enhance the performance of language models for various tasks. It provides a structured list of literature relevant to tool-augmented language models, covering topics such as tool basics, tool use paradigm, scenarios, advanced methods, and evaluation. The repository includes papers, preprints, and books that discuss the use of tools in conjunction with language models for tasks like reasoning, question answering, mathematical calculations, accessing knowledge, interacting with the world, and handling non-textual modalities.

qb

QANTA is a system and dataset for question answering tasks. It provides a script to download datasets, preprocesses questions, and matches them with Wikipedia pages. The system includes various datasets, training, dev, and test data in JSON and SQLite formats. Dependencies include Python 3.6, `click`, and NLTK models. Elastic Search 5.6 is needed for the Guesser component. Configuration is managed through environment variables and YAML files. QANTA supports multiple guesser implementations that can be enabled/disabled. Running QANTA involves using `cli.py` and Luigi pipelines. The system accesses raw Wikipedia dumps for data processing. The QANTA ID numbering scheme categorizes datasets based on events and competitions.

Woodpecker

Woodpecker is a tool designed to correct hallucinations in Multimodal Large Language Models (MLLMs) by introducing a training-free method that picks out and corrects inconsistencies between generated text and image content. It consists of five stages: key concept extraction, question formulation, visual knowledge validation, visual claim generation, and hallucination correction. Woodpecker can be easily integrated with different MLLMs and provides interpretable results by accessing intermediate outputs of the stages. The tool has shown significant improvements in accuracy over baseline models like MiniGPT-4 and mPLUG-Owl.

AlignBench

AlignBench is the first comprehensive evaluation benchmark for assessing the alignment level of Chinese large models across multiple dimensions. It includes introduction information, data, and code related to AlignBench. The benchmark aims to evaluate the alignment performance of Chinese large language models through a multi-dimensional and rule-calibrated evaluation method, enhancing reliability and interpretability.

CritiqueLLM

CritiqueLLM is an official implementation of a model designed for generating informative critiques to evaluate large language model generation. It includes functionalities for data collection, referenced pointwise grading, referenced pairwise comparison, reference-free pairwise comparison, reference-free pointwise grading, inference for pointwise grading and pairwise comparison, and evaluation of the generated results. The model aims to provide a comprehensive framework for evaluating the performance of large language models based on human ratings and comparisons.

COLD-Attack

COLD-Attack is a framework designed for controllable jailbreaks on large language models (LLMs). It formulates the controllable attack generation problem and utilizes the Energy-based Constrained Decoding with Langevin Dynamics (COLD) algorithm to automate the search of adversarial LLM attacks with control over fluency, stealthiness, sentiment, and left-right-coherence. The framework includes steps for energy function formulation, Langevin dynamics sampling, and decoding process to generate discrete text attacks. It offers diverse jailbreak scenarios such as fluent suffix attacks, paraphrase attacks, and attacks with left-right-coherence.

RAGFoundry

RAG Foundry is a library designed to enhance Large Language Models (LLMs) by fine-tuning models on RAG-augmented datasets. It helps create training data, train models using parameter-efficient finetuning (PEFT), and measure performance using RAG-specific metrics. The library is modular, customizable using configuration files, and facilitates prototyping with various RAG settings and configurations for tasks like data processing, retrieval, training, inference, and evaluation.

Controllable-RAG-Agent

This repository contains a sophisticated deterministic graph-based solution for answering complex questions using a controllable autonomous agent. The solution is designed to ensure that answers are solely based on the provided data, avoiding hallucinations. It involves various steps such as PDF loading, text preprocessing, summarization, database creation, encoding, and utilizing large language models. The algorithm follows a detailed workflow involving planning, retrieval, answering, replanning, content distillation, and performance evaluation. Heuristics and techniques implemented focus on content encoding, anonymizing questions, task breakdown, content distillation, chain of thought answering, verification, and model performance evaluation.

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project based on Mistral's Mixtral-8x7B model for incremental pre-training of Chinese vocabulary, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and the model is pre-trained incrementally on a large-scale open-source corpus, enabling it with powerful Chinese generation and comprehension capabilities. The project includes a large model with expanded Chinese vocabulary and incremental pre-training code.

files-to-prompt

files-to-prompt is a tool that concatenates a directory full of files into a single prompt for use with Language Models (LLMs). It allows users to provide the path to one or more files or directories for processing, outputting the contents of each file with relative paths and separators. The tool offers options to include hidden files, ignore specific patterns, and exclude files specified in .gitignore. It is designed to streamline the process of preparing text data for LLMs by simplifying file concatenation and customization.

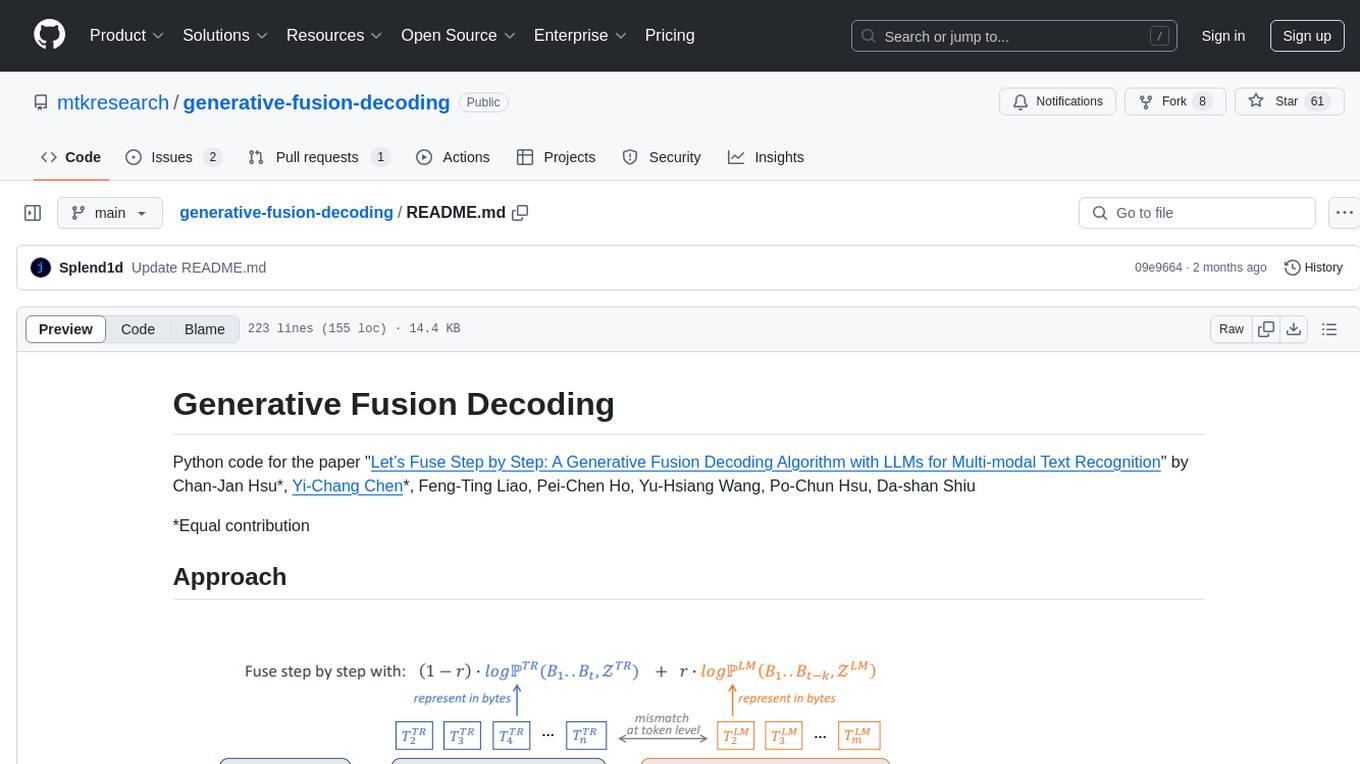

generative-fusion-decoding

Generative Fusion Decoding (GFD) is a novel shallow fusion framework that integrates Large Language Models (LLMs) into multi-modal text recognition systems such as automatic speech recognition (ASR) and optical character recognition (OCR). GFD operates across mismatched token spaces of different models by mapping text token space to byte token space, enabling seamless fusion during the decoding process. It simplifies the complexity of aligning different model sample spaces, allows LLMs to correct errors in tandem with the recognition model, increases robustness in long-form speech recognition, and enables fusing recognition models deficient in Chinese text recognition with LLMs extensively trained on Chinese. GFD significantly improves performance in ASR and OCR tasks, offering a unified solution for leveraging existing pre-trained models through step-by-step fusion.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

CAG

Cache-Augmented Generation (CAG) is an alternative paradigm to Retrieval-Augmented Generation (RAG) that eliminates real-time retrieval delays and errors by preloading all relevant resources into the model's context. CAG leverages extended context windows of large language models (LLMs) to generate responses directly, providing reduced latency, improved reliability, and simplified design. While CAG has limitations in knowledge size and context length, advancements in LLMs are addressing these issues, making CAG a practical and scalable alternative for complex applications.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

EuroEval

EuroEval is a robust European language model benchmark tool, formerly known as ScandEval. It provides a platform to benchmark pretrained models on various tasks across different languages. Users can evaluate models, datasets, and metrics both online and offline. The tool supports benchmarking from the command line, script, and Docker. Additionally, users can reproduce datasets used in the project using provided scripts. EuroEval welcomes contributions and offers guidelines for general contributions and adding new datasets.

tunix

Tunix is a JAX-based library designed for post-training Large Language Models. It provides efficient support for supervised fine-tuning, reinforcement learning, and knowledge distillation. Tunix leverages JAX for accelerated computation and integrates seamlessly with the Flax NNX modeling framework. The library is modular, efficient, and designed for distributed training on accelerators like TPUs. Currently in early development, Tunix aims to expand its capabilities, usability, and performance.

20 - OpenAI Gpts

Automated AI Prompt Categorizer

Comprehensive categorization and organization for AI Prompts

DataLearnerAI-GPT

Using OpenLLMLeaderboard data to answer your questions about LLM. For Currently!

AI-Driven Lab

recommends AI research these days in Japanese using AI-driven's-lab articles

Illuminati AI

The IlluminatiAI model represents a novel approach in the field of artificial intelligence, incorporating elements of secret societies, ancient knowledge, and hidden wisdom into its algorithms.

Generative AI Examiner

For "Generative AI Test". Examiner in Generative AI, posing questions and providing feedback.