browser-use-webui

Run AI Agent in your browser.

Stars: 218

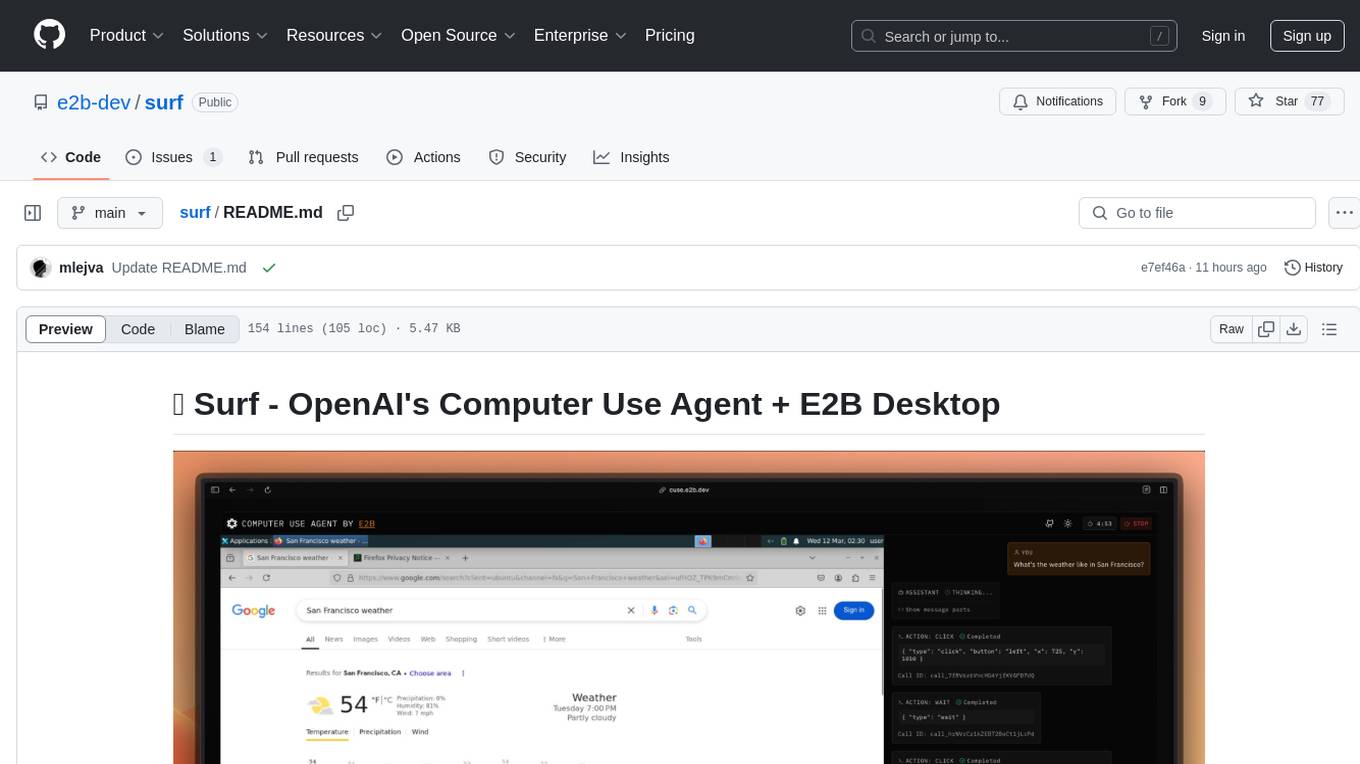

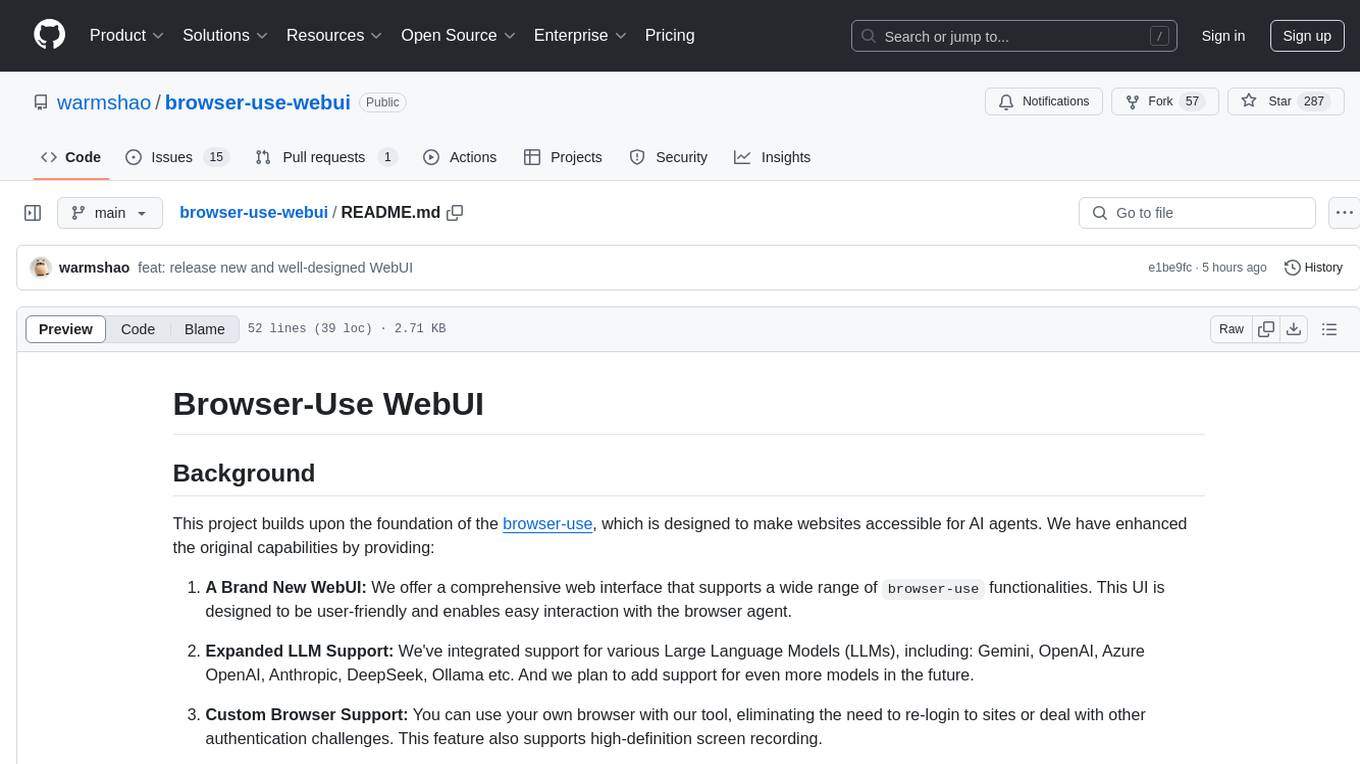

Browser-Use WebUI is a project that enhances the original browser-use tool by providing a brand new web interface, expanded LLM support for various Large Language Models, custom browser support for using your own browser with the tool, and a customized agent with optimized prompts. The tool aims to make websites accessible for AI agents and offers user-friendly interaction with the browser agent, eliminating the need for re-login to sites and dealing with authentication challenges. It also supports high-definition screen recording.

README:

This project builds upon the foundation of the browser-use, which is designed to make websites accessible for AI agents. We have enhanced the original capabilities by providing:

-

A Brand New WebUI: We offer a comprehensive web interface that supports a wide range of

browser-usefunctionalities. This UI is designed to be user-friendly and enables easy interaction with the browser agent. -

Expanded LLM Support: We've integrated support for various Large Language Models (LLMs), including: Gemini, OpenAI, Azure OpenAI, Anthropic, DeepSeek, Ollama etc. And we plan to add support for even more models in the future.

-

Custom Browser Support: You can use your own browser with our tool, eliminating the need to re-login to sites or deal with other authentication challenges. This feature also supports high-definition screen recording.

-

Customized Agent: We've implemented a custom agent that enhances

browser-usewith Optimized prompts.

Your browser does not support playing this video!

Changelog

- [x] 2025/01/06: Thanks to @richard-devbot, a New and Well-Designed WebUI is released. Video tutorial demo.

- Python Version: Ensure you have Python 3.11 or higher installed.

-

Install

browser-use:pip install browser-use

-

Install Playwright:

playwright install

-

Install Dependencies:

pip install -r requirements.txt

-

Configure Environment Variables:

- Copy

.env.exampleto.envand set your environment variables, including API keys for the LLM. -

If using your own browser:

- Set

CHROME_PATHto the executable path of your browser (e.g.,C:\Program Files\Google\Chrome\Application\chrome.exeon Windows). - Set

CHROME_USER_DATAto the user data directory of your browser (e.g.,C:\Users\<YourUsername>\AppData\Local\Google\Chrome\User Data).

- Set

- Copy

-

Run the WebUI:

python webui.py --ip 127.0.0.1 --port 7788

-

Access the WebUI: Open your web browser and navigate to

http://127.0.0.1:7788. -

Using Your Own Browser:

- Close all chrome windows

- Open the WebUI in a non-Chrome browser, such as Firefox or Edge. This is important because the persistent browser context will use the Chrome data when running the agent.

- Check the "Use Own Browser" option within the Browser Settings.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for browser-use-webui

Similar Open Source Tools

browser-use-webui

Browser-Use WebUI is a project that enhances the original browser-use tool by providing a brand new web interface, expanded LLM support for various Large Language Models, custom browser support for using your own browser with the tool, and a customized agent with optimized prompts. The tool aims to make websites accessible for AI agents and offers user-friendly interaction with the browser agent, eliminating the need for re-login to sites and dealing with authentication challenges. It also supports high-definition screen recording.

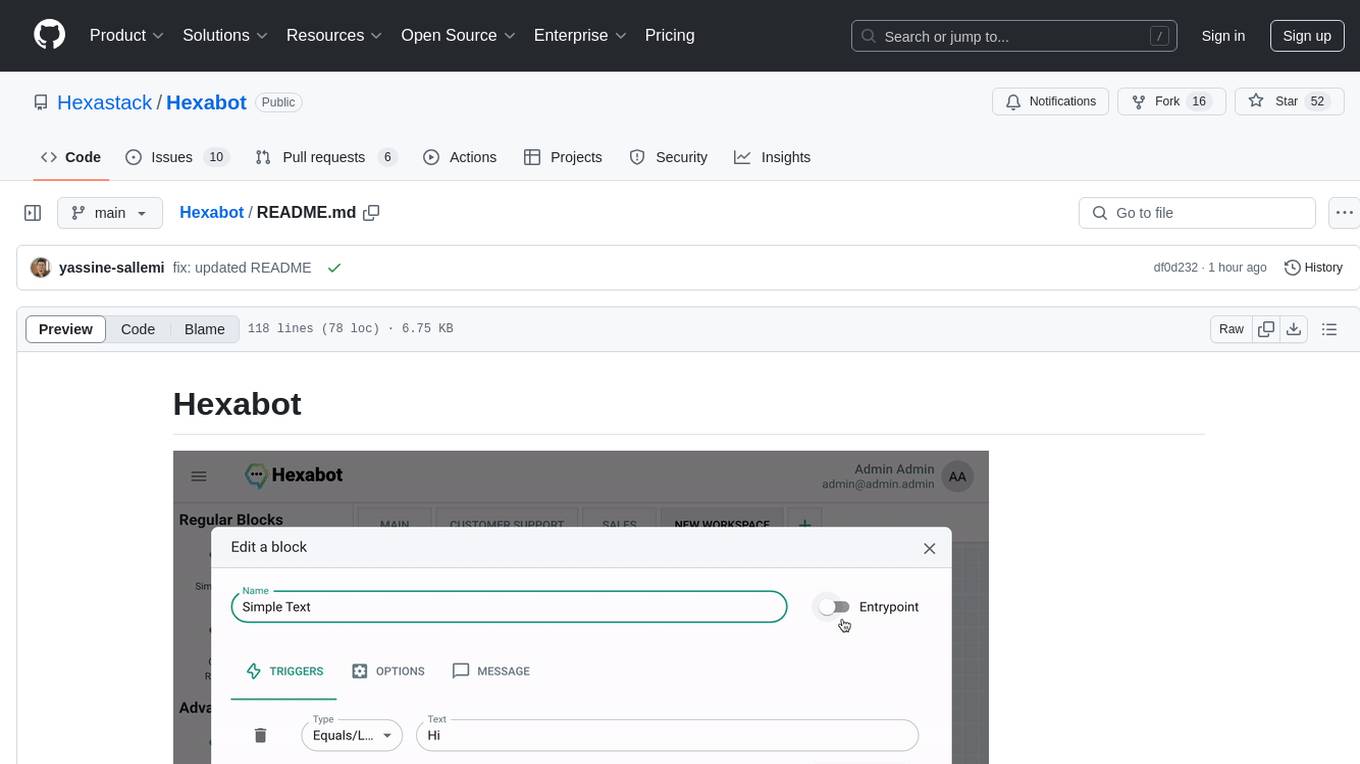

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

GraphRAG-Local-UI

GraphRAG Local with Interactive UI is an adaptation of Microsoft's GraphRAG, tailored to support local models and featuring a comprehensive interactive user interface. It allows users to leverage local models for LLM and embeddings, visualize knowledge graphs in 2D or 3D, manage files, settings, and queries, and explore indexing outputs. The tool aims to be cost-effective by eliminating dependency on costly cloud-based models and offers flexible querying options for global, local, and direct chat queries.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

chatty

Chatty is a private AI tool that runs large language models natively and privately in the browser, ensuring in-browser privacy and offline usability. It supports chat history management, open-source models like Gemma and Llama2, responsive design, intuitive UI, markdown & code highlight, chat with files locally, custom memory support, export chat messages, voice input support, response regeneration, and light & dark mode. It aims to bring popular AI interfaces like ChatGPT and Gemini into an in-browser experience.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

ComfyUIMini

ComfyUI Mini is a lightweight and mobile-friendly frontend designed to run ComfyUI workflows. It allows users to save workflows locally on their device or PC, easily import workflows, and view generation progress information. The tool requires ComfyUI to be installed on the PC and a modern browser with WebSocket support on the mobile device. Users can access the WebUI by running the app and connecting to the local address of the PC. ComfyUI Mini provides a simple and efficient way to manage workflows on mobile devices.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

AIOLists

AIOLists is a stateless open source list management addon for Stremio that allows users to import and manage lists from various sources in one place. It offers unified search, metadata customization, Trakt integration, MDBList integration, external lists import, list sorting, customization options, watchlist updates, RPDB support, genre filtering, discovery lists, and shareable configurations. The addon aims to enhance the list management experience for Stremio users by providing a comprehensive set of features and functionalities.

ai-chatbot-svelte

SvelteKit AI Chatbot is an open-source template built with SvelteKit and the AI SDK by Vercel. It provides a unified API for generating text, structured objects, and tool calls with LLMs. The template includes hooks for building dynamic chat and generative user interfaces, supports various model providers, and offers styling with Tailwind CSS. Data persistence is ensured with Vercel Postgres and Blob for saving chat history and user data. Users can easily deploy their own version of the chatbot to Vercel with one click and run it locally using the provided environment variables.

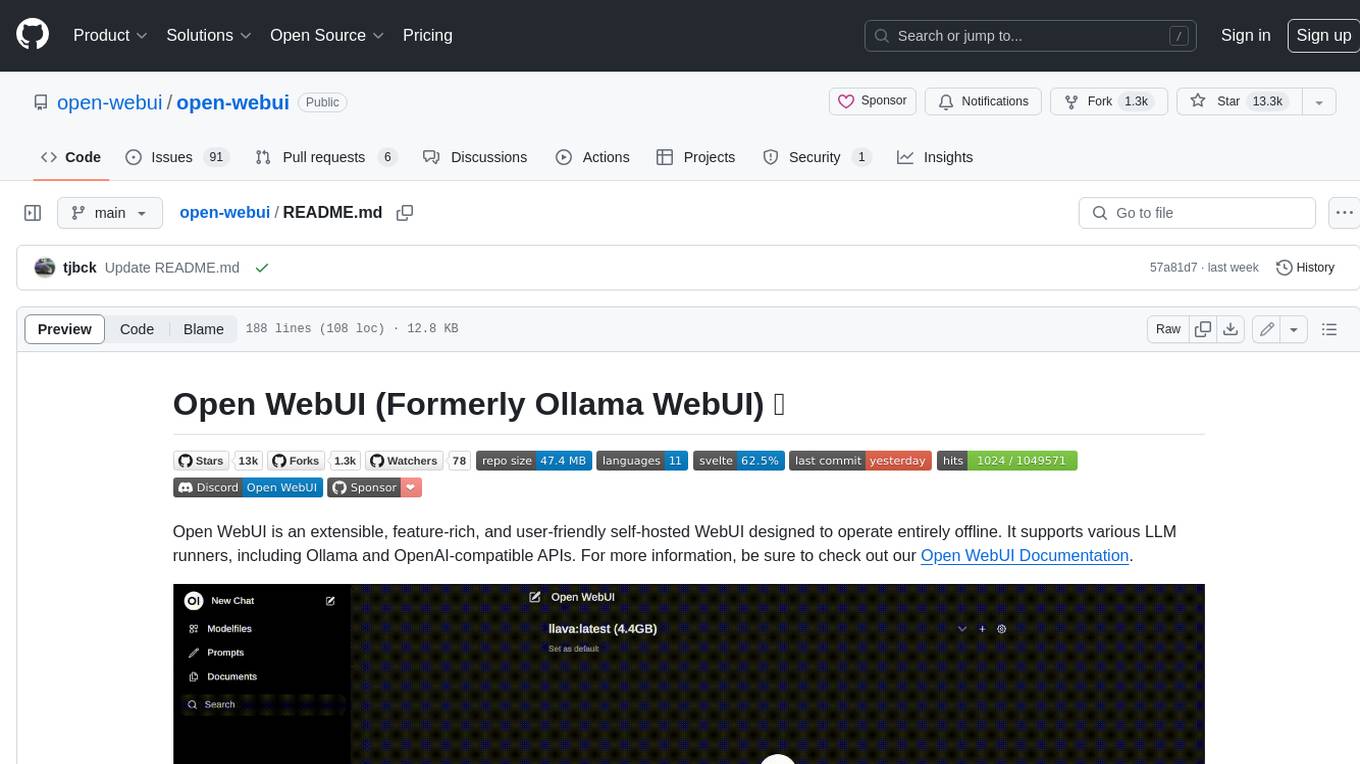

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

Loyal-Elephie

Embark on an exciting adventure with Loyal Elephie, your faithful AI sidekick! This project combines the power of a neat Next.js web UI and a mighty Python backend, leveraging the latest advancements in Large Language Models (LLMs) and Retrieval Augmented Generation (RAG) to deliver a seamless and meaningful chatting experience. Features include controllable memory, hybrid search, secure web access, streamlined LLM agent, and optional Markdown editor integration. Loyal Elephie supports both open and proprietary LLMs and embeddings serving as OpenAI compatible APIs.

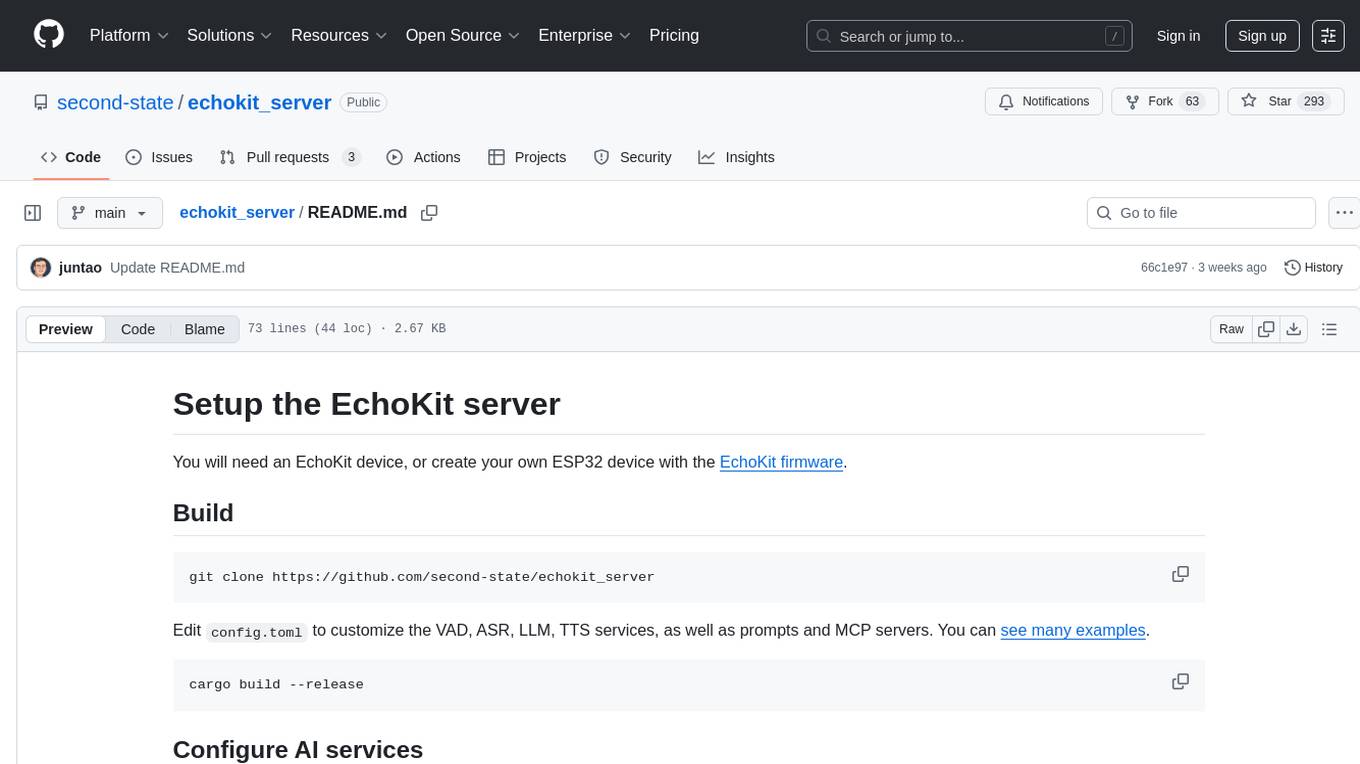

echokit_server

Echokit_server is a lightweight and efficient server-side implementation of the Amazon Alexa Voice Service (AVS) SDK. It allows developers to easily integrate Alexa voice capabilities into their own applications or devices. The server handles the communication with the Alexa Voice Service API, manages user interactions, and processes voice commands. Echokit_server provides a simple and flexible solution for adding voice-controlled features to a wide range of projects, such as smart home devices, IoT applications, and voice-enabled services.

surf

Surf is a Next.js application that integrates E2B's desktop sandbox with OpenAI's API to create an AI agent that can perform tasks on a virtual computer through natural language instructions. It provides a web interface for users to start a virtual desktop sandbox environment, send instructions to the AI agent, watch AI actions in real-time, and interact with the AI through a chat interface. The application uses Server-Sent Events (SSE) for seamless communication between frontend and backend components.

GPThemes

GPThemes is a GitHub repository that provides a collection of customizable themes for various programming languages and text editors. It offers a wide range of color schemes and styling options to enhance the visual appearance of code editors and terminals. Users can easily browse through the available themes and apply them to their preferred development environment to personalize the coding experience. With GPThemes, developers can quickly switch between different themes to find the one that best suits their preferences and workflow, making coding more enjoyable and visually appealing.

For similar tasks

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

browser-use-webui

Browser-Use WebUI is a project that enhances the original browser-use tool by providing a brand new web interface, expanded LLM support for various Large Language Models, custom browser support for using your own browser with the tool, and a customized agent with optimized prompts. The tool aims to make websites accessible for AI agents and offers user-friendly interaction with the browser agent, eliminating the need for re-login to sites and dealing with authentication challenges. It also supports high-definition screen recording.

TermNet

TermNet is an AI-powered terminal assistant that connects a Large Language Model (LLM) with shell command execution, browser search, and dynamically loaded tools. It streams responses in real-time, executes tools one at a time, and maintains conversational memory across steps. The project features terminal integration for safe shell command execution, dynamic tool loading without code changes, browser automation powered by Playwright, WebSocket architecture for real-time communication, a memory system to track planning and actions, streaming LLM output integration, a safety layer to block dangerous commands, dual interface options, a notification system, and scratchpad memory for persistent note-taking. The architecture includes a multi-server setup with servers for WebSocket, browser automation, notifications, and web UI. The project structure consists of core backend files, various tools like web browsing and notification management, and servers for browser automation and notifications. Installation requires Python 3.9+, Ollama, and Chromium, with setup steps provided in the README. The tool can be used via the launcher for managing components or directly by starting individual servers. Additional tools can be added by registering them in `toolregistry.json` and implementing them in Python modules. Safety notes highlight the blocking of dangerous commands, allowed risky commands with warnings, and the importance of monitoring tool execution and setting appropriate timeouts.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

BrowserOS

BrowserOS is an open-source Chromium fork that runs AI agents natively. It provides a privacy-first alternative to ChatGPT Atlas, Perplexity Comet, and Dia. Users can use their own API keys or run local models with Ollama, ensuring that their data never leaves their machine. BrowserOS offers a familiar Chrome interface with all extensions working, AI agents that run on the user's browser instead of the cloud, privacy-first approach, browser control through MCP server, workflows for building browser automations, coworking capabilities with local file operations, scheduled tasks, LLM Hub for comparing AI agents, built-in ad blocker, and is 100% open source under AGPL-3.0.

web-ui

WebUI is a user-friendly tool built on Gradio that enhances website accessibility for AI agents. It supports various Large Language Models (LLMs) and allows custom browser integration for seamless interaction. The tool eliminates the need for re-login and authentication challenges, offering high-definition screen recording capabilities.

TagUI

TagUI is an open-source RPA tool that allows users to automate repetitive tasks on their computer, including tasks on websites, desktop apps, and the command line. It supports multiple languages and offers features like interacting with identifiers, automating data collection, moving data between TagUI and Excel, and sending Telegram notifications. Users can create RPA robots using MS Office Plug-ins or text editors, run TagUI on the cloud, and integrate with other RPA tools. TagUI prioritizes enterprise security by running on users' computers and not storing data. It offers detailed logs, enterprise installation guides, and support for centralised reporting.

bedrock-agentcore-starter-toolkit

Amazon Bedrock AgentCore Starter Toolkit enables developers to deploy and operate highly effective AI agents securely at scale using any framework and model. It provides tools and capabilities to make agents more effective and capable, purpose-built infrastructure to securely scale agents, and controls to operate trustworthy agents. The toolkit includes modular services like Runtime, Memory, Gateway, Code Interpreter, Browser, Observability, Identity, and Import Agent for seamless migration of existing agents. It is currently in public preview and offers enterprise-grade security and reliability for accelerating AI agent development.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.