playground-flight-booking

Spring AI powered expert system demo

Stars: 71

This repository contains a Spring AI re-implementation of an expert system demo that showcases building an AI-powered system with capabilities such as retrieval augmented generation, function calling using Java methods, and interaction with the user through an LLM. The app requires Java 17+ and an OpenAI API key. It provides examples of integrating with various chat services like OpenAI, VertexAI Gemini, Azure OpenAI, Groq, and Anthropic Claude 3. Users can explore different chat options and models for AI interactions within the system.

README:

Spring AI re-implementation of https://github.com/marcushellberg/java-ai-playground

This app shows how you can use Spring AI to build an AI-powered system that:

- Has access to terms and conditions (retrieval augmented generation, RAG)

- Can access tools (Java methods) to perform actions (Function Calling)

- Uses an LLM to interact with the user

- Java 17+

- OpenAI API key in

OPENAI_API_KEYenvironment variable

Run the app by running Application.java in your IDE or mvn in the command line.

Add to the POM the Spring AI Open AI boot starter:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-openai-spring-boot-starter</artifactId>

</dependency>Add the OpenAI configuraiton to the applicaiton.properties:

spring.ai.openai.api-key=${OPENAI_API_KEY}

spring.ai.openai.chat.options.model=gpt-4o

Add to the POM the Spring AI VertexAI Gemeni and Onnx Transfomer Embedding boot starters:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-vertex-ai-gemini-spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-transformers-spring-boot-starter</artifactId>

</dependency>Add the VertexAI Gemini configuraiton to the applicaiton.properties:

spring.ai.vertex.ai.gemini.project-id=${VERTEX_AI_GEMINI_PROJECT_ID}

spring.ai.vertex.ai.gemini.location=${VERTEX_AI_GEMINI_LOCATION}

spring.ai.vertex.ai.gemini.chat.options.model=gemini-1.5-pro-001

# spring.ai.vertex.ai.gemini.chat.options.model=gemini-1.5-flash-001

Add to the POM the Spring AI Azure OpenAI boot starter:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-azure-openai-spring-boot-starter</artifactId>

</dependency>Add the Azure OpenAI configuraiton to the applicaiton.properties:

spring.ai.azure.openai.api-key=${AZURE_OPENAI_API_KEY}

spring.ai.azure.openai.endpoint=${AZURE_OPENAI_ENDPOINT}

spring.ai.azure.openai.chat.options.deployment-name=gpt-4o

It reuses the OpenAI Chat client but ponted to the Groq endpont

Add to the POM the Spring AI Open AI boot starter:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-openai-spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-transformers-spring-boot-starter</artifactId>

</dependency>Add the Groq configuraiton to the applicaiton.properties:

spring.ai.openai.api-key=${GROQ_API_KEY}

spring.ai.openai.base-url=https://api.groq.com/openai

spring.ai.openai.chat.options.model=llama3-70b-8192

Add to the POM the Spring AI Anthropic Claude and Onnx Transfomer Embedding boot starters:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-anthropic-spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-transformers-spring-boot-starter</artifactId>

</dependency>Add the Anthropic configuraiton to the applicaiton.properties:

spring.ai.anthropic.api-key=${ANTHROPIC_API_KEY}

spring.ai.openai.chat.options.model=llama3-70b-8192

spring.ai.anthropic.chat.options.model=claude-3-5-sonnet-20240620

./mvnw clean install -Pproductionjava -jar ./target/playground-flight-booking-0.0.1-SNAPSHOT.jardocker run -it --rm --name postgres -p 5432:5432 -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres ankane/pgvector

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for playground-flight-booking

Similar Open Source Tools

playground-flight-booking

This repository contains a Spring AI re-implementation of an expert system demo that showcases building an AI-powered system with capabilities such as retrieval augmented generation, function calling using Java methods, and interaction with the user through an LLM. The app requires Java 17+ and an OpenAI API key. It provides examples of integrating with various chat services like OpenAI, VertexAI Gemini, Azure OpenAI, Groq, and Anthropic Claude 3. Users can explore different chat options and models for AI interactions within the system.

ruby-nano-bots

Ruby Nano Bots is an implementation of the Nano Bots specification supporting various AI providers like Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others. It allows calling tools (functions) and provides a helpful assistant for interacting with AI language models. The tool can be used both from the command line and as a library in Ruby projects, offering features like REPL, debugging, and encryption for data privacy.

org-ai

org-ai is a minor mode for Emacs org-mode that provides access to generative AI models, including OpenAI API (ChatGPT, DALL-E, other text models) and Stable Diffusion. Users can use ChatGPT to generate text, have speech input and output interactions with AI, generate images and image variations using Stable Diffusion or DALL-E, and use various commands outside org-mode for prompting using selected text or multiple files. The tool supports syntax highlighting in AI blocks, auto-fill paragraphs on insertion, and offers block options for ChatGPT, DALL-E, and other text models. Users can also generate image variations, use global commands, and benefit from Noweb support for named source blocks.

QA-Pilot

QA-Pilot is an interactive chat project that leverages online/local LLM for rapid understanding and navigation of GitHub code repository. It allows users to chat with GitHub public repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM models such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

ahnlich

Ahnlich is a tool that provides multiple components for storing and searching similar vectors using linear or non-linear similarity algorithms. It includes 'ahnlich-db' for in-memory vector key value store, 'ahnlich-ai' for AI proxy communication, 'ahnlich-client-rs' for Rust client, and 'ahnlich-client-py' for Python client. The tool is not production-ready yet and is still in testing phase, allowing AI/ML engineers to issue queries using raw input such as images/text and features off-the-shelf models for indexing and querying.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

llm-gemini

llm-gemini is a plugin that provides API access to Google's Gemini models. It allows users to configure and run various Gemini models for tasks such as generating text, processing images, transcribing audio, and executing code. The plugin supports multi-modal inputs including images, audio, and video, and can output JSON objects. Additionally, it enables chat interactions with the model and supports different embedding models for text processing. Users can also run similarity searches on embedded data. The plugin is designed to work in conjunction with LLM and offers extensive documentation for development and usage.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

ragflow

RAGFlow is an open-source Retrieval-Augmented Generation (RAG) engine that combines deep document understanding with Large Language Models (LLMs) to provide accurate question-answering capabilities. It offers a streamlined RAG workflow for businesses of all sizes, enabling them to extract knowledge from unstructured data in various formats, including Word documents, slides, Excel files, images, and more. RAGFlow's key features include deep document understanding, template-based chunking, grounded citations with reduced hallucinations, compatibility with heterogeneous data sources, and an automated and effortless RAG workflow. It supports multiple recall paired with fused re-ranking, configurable LLMs and embedding models, and intuitive APIs for seamless integration with business applications.

hey

Hey is a free CLI-based AI assistant powered by LLMs, allowing users to connect Hey to different LLM services. It provides commands for quick usage, customization options, and integration with code editors. Hey was created for a hackathon and is licensed under the MIT License.

green-bit-llm

Green-Bit-LLM is a Python toolkit designed for fine-tuning, inferencing, and evaluating GreenBitAI's low-bit Language Models (LLMs). It utilizes the Bitorch Engine for efficient operations on low-bit LLMs, enabling high-performance inference on various GPUs and supporting full-parameter fine-tuning using quantized LLMs. The toolkit also provides evaluation tools to validate model performance on benchmark datasets. Green-Bit-LLM is compatible with AutoGPTQ series of 4-bit quantization and compression models.

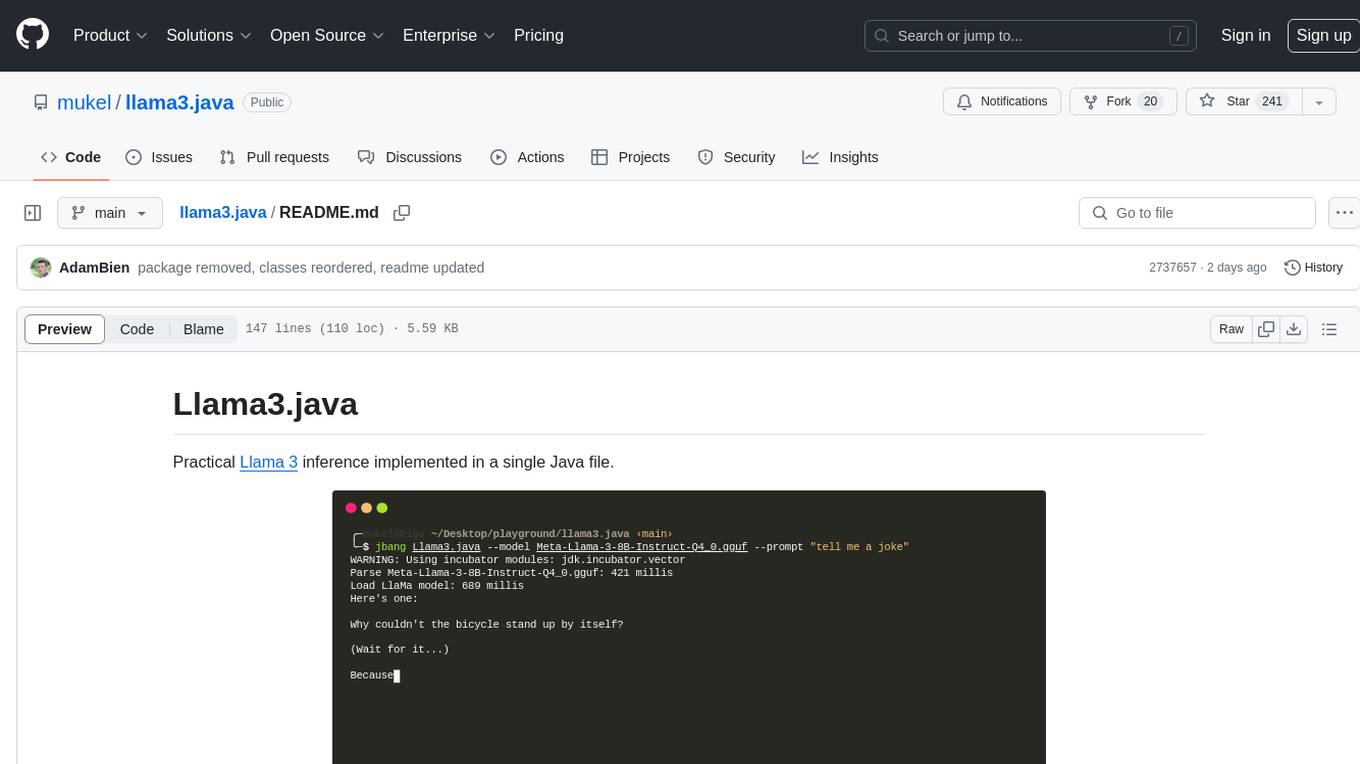

llama3.java

Llama3.java is a practical Llama 3 inference tool implemented in a single Java file. It serves as the successor of llama2.java and is designed for testing and tuning compiler optimizations and features on the JVM, especially for the Graal compiler. The tool features a GGUF format parser, Llama 3 tokenizer, Grouped-Query Attention inference, support for Q8_0 and Q4_0 quantizations, fast matrix-vector multiplication routines using Java's Vector API, and a simple CLI with 'chat' and 'instruct' modes. Users can download quantized .gguf files from huggingface.co for model usage and can also manually quantize to pure 'Q4_0'. The tool requires Java 21+ and supports running from source or building a JAR file for execution. Performance benchmarks show varying tokens/s rates for different models and implementations on different hardware setups.

airbase

Airbase is a Maven project management tool that provides a parent pom structure and conventions for defining new projects. It includes guidelines for project pom structure, deployment to Maven Central, project build and checkers, well-known dependencies, and other properties. Airbase helps in enforcing build configurations, organizing project pom files, and running various checkers to catch problems early in the build process. It also offers default properties that can be overridden in the project pom.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

For similar tasks

playground-flight-booking

This repository contains a Spring AI re-implementation of an expert system demo that showcases building an AI-powered system with capabilities such as retrieval augmented generation, function calling using Java methods, and interaction with the user through an LLM. The app requires Java 17+ and an OpenAI API key. It provides examples of integrating with various chat services like OpenAI, VertexAI Gemini, Azure OpenAI, Groq, and Anthropic Claude 3. Users can explore different chat options and models for AI interactions within the system.

rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

browser-use

Browser Use is a tool designed to make websites accessible for AI agents. It provides an easy way to connect AI agents with the browser, enabling users to perform tasks such as extracting vision and HTML elements, managing multiple tabs, and executing custom actions. The tool supports various language models and allows users to parallelize multiple agents for efficient processing. With features like self-correction and the ability to register custom actions, Browser Use offers a versatile solution for interacting with web content using AI technology.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.