token.js

Integrate 200+ LLMs with one TypeScript SDK using OpenAI's format.

Stars: 51

Token.js is a TypeScript SDK that integrates with over 200 LLMs from 10 providers using OpenAI's format. It allows users to call LLMs, supports tools, JSON outputs, image inputs, and streaming, all running on the client side without the need for a proxy server. The tool is free and open source under the MIT license.

README:

Integrate 200+ LLMs with one TypeScript SDK using OpenAI's format. Free and open source. No proxy server required.

- Use OpenAI's format to call 200+ LLMs from 10 providers.

- Supports tools, JSON outputs, image inputs, streaming, and more.

- Runs completely on the client side. No proxy server needed.

- Free and open source under MIT.

- AI21

- Anthropic

- AWS Bedrock

- Cohere

- Gemini

- Groq

- Mistral

- OpenAI

- Perplexity

- OpenRouter

npm install token.jsImport the Token.js client and call the create function with a prompt in OpenAI's format. Specify the model and LLM provider using their respective fields.

OPENAI_API_KEY=<openai api key>import { TokenJS } from 'token.js'

// Create the Token.js client

const tokenjs = new TokenJS()

async function main() {

// Create a model response

const completion = await tokenjs.chat.completions.create({

// Specify the provider and model

provider: 'openai',

model: 'gpt-4o',

// Define your message

messages: [

{

role: 'user',

content: 'Hello!',

},

],

})

console.log(completion.choices[0])

}

main()We recommend using environment variables to configure the credentials for each LLM provider.

# OpenAI

OPENAI_API_KEY=

# AI21

AI21_API_KEY=

# Anthropic

ANTHROPIC_API_KEY=

# Cohere

COHERE_API_KEY=

# Gemini

GEMINI_API_KEY=

# Groq

GROQ_API_KEY=

# Mistral

MISTRAL_API_KEY=

# Perplexity

PERPLEXITY_API_KEY=

# OpenRouter

OPENROUTER_API_KEY=

# AWS Bedrock

AWS_REGION_NAME=

AWS_ACCESS_KEY_ID=

AWS_SECRET_ACCESS_KEY=Token.js supports streaming responses for all providers that offer it.

import { TokenJS } from 'token.js'

const tokenjs = new TokenJS()

async function main() {

const result = await tokenjs.chat.completions.create({

stream: true,

provider: 'openai',

model: 'gpt-4o',

messages: [

{

role: 'user',

content: `Tell me about yourself.`,

},

],

})

for await (const part of result) {

process.stdout.write(part.choices[0]?.delta?.content || '')

}

}

main()Token.js supports the function calling tool for all providers and models that offer it.

import { TokenJS, ChatCompletionTool } from 'token.js'

const tokenjs = new TokenJS()

async function main() {

const tools: ChatCompletionTool[] = [

{

type: 'function',

function: {

name: 'get_current_weather',

description: 'Get the current weather in a given location',

parameters: {

type: 'object',

properties: {

location: {

type: 'string',

description: 'The city and state, e.g. San Francisco, CA',

},

},

required: ['location'],

},

},

},

]

const result = await tokenjs.chat.completions.create({

provider: 'gemini',

model: 'gemini-1.5-pro',

messages: [

{

role: 'user',

content: `What's the weather like in San Francisco?`,

},

],

tools,

tool_choice: 'auto',

})

console.log(result.choices[0].message.tool_calls)

}

main()This table provides an overview of the features that Token.js supports from each LLM provider.

| Provider | Chat Completion | Streaming | Function Calling Tool | JSON Output | Image Input |

|---|---|---|---|---|---|

| OpenAI | ✅ | ✅ | ✅ | ✅ | ✅ |

| Anthropic | ✅ | ✅ | ✅ | ✅ | ✅ |

| Bedrock | ✅ | ✅ | ✅ | ✅ | ✅ |

| Mistral | ✅ | ✅ | ✅ | ✅ | ➖ |

| Cohere | ✅ | ✅ | ✅ | ➖ | ➖ |

| AI21 | ✅ | ✅ | ➖ | ➖ | ➖ |

| Gemini | ✅ | ✅ | ✅ | ✅ | ✅ |

| Groq | ✅ | ✅ | ➖ | ✅ | ➖ |

| Perplexity | ✅ | ✅ | ➖ | ➖ | ➖ |

| OpenRouter | ✅ | ✅ | ✅ | ✅ | ✅ |

| Symbol | Description |

|---|---|

| ✅ | Supported by Token.js |

| ➖ | Not supported by the LLM provider, so Token.js cannot support it |

Note: Certain LLMs, particularly older or weaker models, do not support some features in this table. For details about these restrictions, see our LLM provider documentation.

See our Contributing guide to learn how to contribute to Token.js.

Please reach out if there's any way that we can improve Token.js!

Here are a few ways you can reach us:

- Discord

- Schedule a meeting

- Call or text: +1 (516) 206-6928

- Email: [email protected]

- Feedback

Token.js is free and open source software licensed under MIT.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for token.js

Similar Open Source Tools

token.js

Token.js is a TypeScript SDK that integrates with over 200 LLMs from 10 providers using OpenAI's format. It allows users to call LLMs, supports tools, JSON outputs, image inputs, and streaming, all running on the client side without the need for a proxy server. The tool is free and open source under the MIT license.

AnyCrawl

AnyCrawl is a high-performance crawling and scraping toolkit designed for SERP crawling, web scraping, site crawling, and batch tasks. It offers multi-threading and multi-process capabilities for high performance. The tool also provides AI extraction for structured data extraction from pages, making it LLM-friendly and easy to integrate and use.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

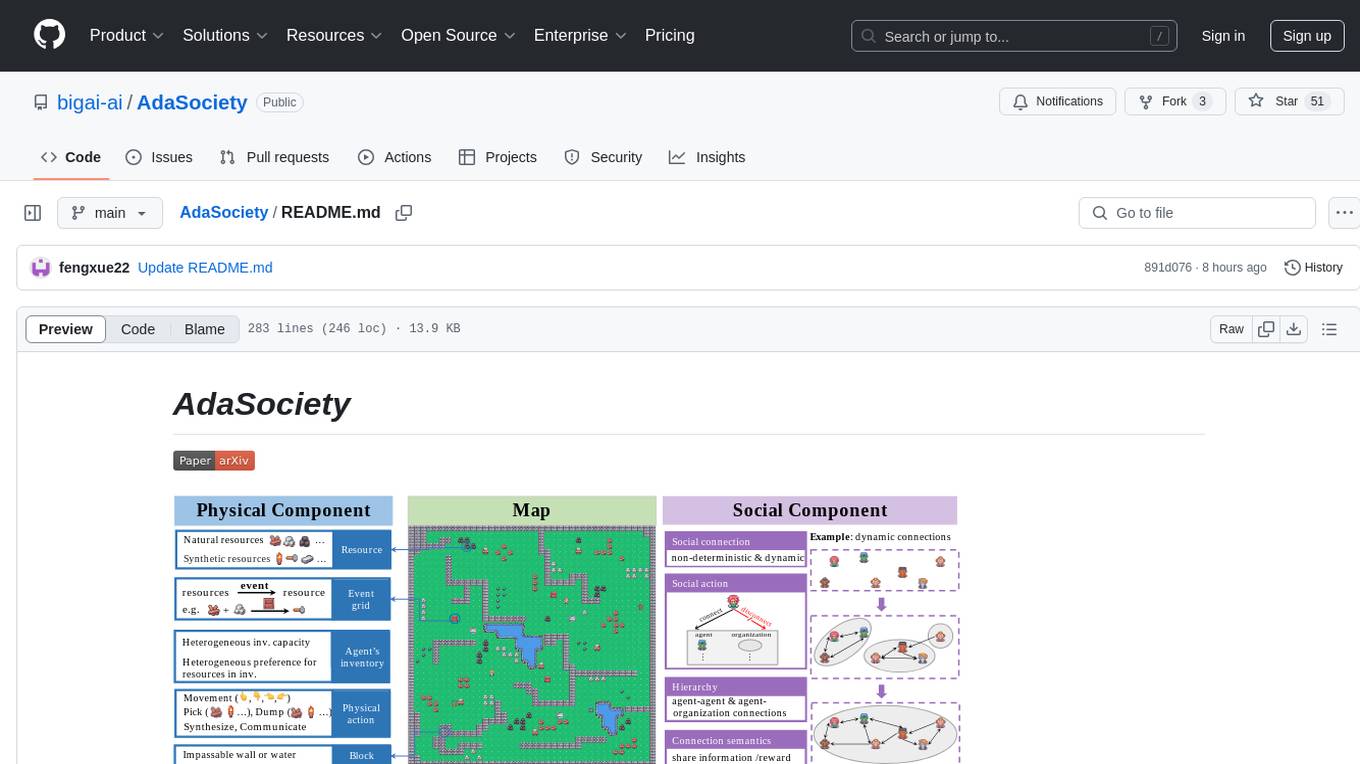

AdaSociety

AdaSociety is a multi-agent environment designed for simulating social structures and decision-making processes. It offers built-in resources, events, and player interactions. Users can customize the environment through JSON configuration or custom Python code. The environment supports training agents using RLlib and LLM frameworks. It provides a platform for studying multi-agent systems and social dynamics.

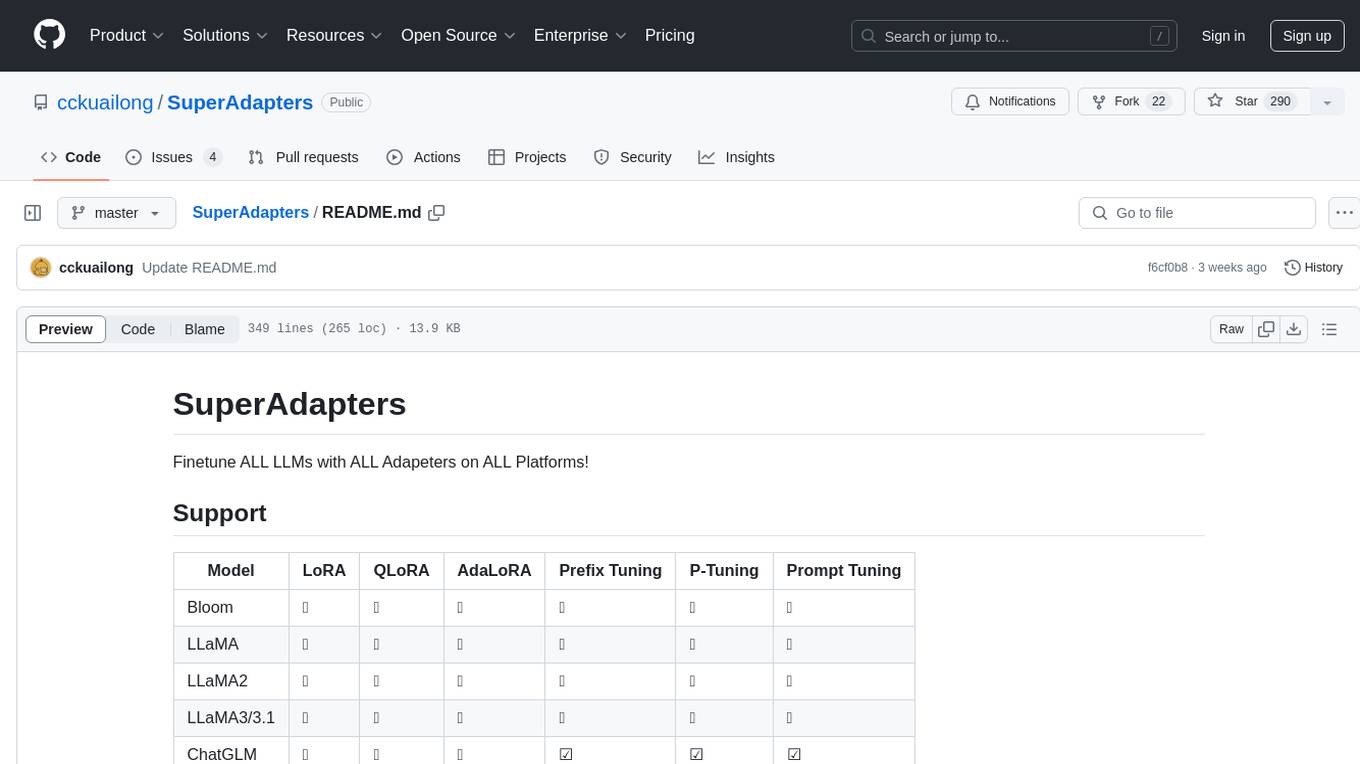

SuperAdapters

SuperAdapters is a tool designed to finetune Large Language Models (LLMs) with various adapters on different platforms. It supports models like Bloom, LLaMA, ChatGLM, Qwen, Baichuan, Mixtral, Phi, and more. Users can finetune LLMs on Windows, Linux, and Mac M1/2, handle train/test data with Terminal, File, or DataBase, and perform tasks like CausalLM and SequenceClassification. The tool provides detailed instructions on how to use different models with specific adapters for tasks like finetuning and inference. It also includes requirements for CentOS, Ubuntu, and MacOS, along with information on LLM downloads and data formats. Additionally, it offers parameters for finetuning and inference, as well as options for web and API-based inference.

litellm

LiteLLM is a tool that allows you to call all LLM APIs using the OpenAI format. This includes Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, and more. LiteLLM manages translating inputs to provider's `completion`, `embedding`, and `image_generation` endpoints, providing consistent output, and retry/fallback logic across multiple deployments. It also supports setting budgets and rate limits per project, api key, and model.

dbhub

DBHub is a universal database gateway that implements the Model Context Protocol (MCP) server interface. It allows MCP-compatible clients to connect to and explore different databases. The gateway supports various database resources and tools, providing capabilities such as executing queries, listing connectors, generating SQL, and explaining database elements. Users can easily configure their database connections and choose between different transport modes like stdio and sse. DBHub also offers a demo mode with a sample employee database for testing purposes.

llm.nvim

llm.nvim is a universal plugin for a large language model (LLM) designed to enable users to interact with LLM within neovim. Users can customize various LLMs such as gpt, glm, kimi, and local LLM. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, Github models, siliconflow, and others. Users can customize tools, chat with LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

data-prep-kit

Data Prep Kit is a community project aimed at democratizing and speeding up unstructured data preparation for LLM app developers. It provides high-level APIs and modules for transforming data (code, language, speech, visual) to optimize LLM performance across different use cases. The toolkit supports Python, Ray, Spark, and Kubeflow Pipelines runtimes, offering scalability from laptop to datacenter-scale processing. Developers can contribute new custom modules and leverage the data processing library for building data pipelines. Automation features include workflow automation with Kubeflow Pipelines for transform execution.

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

gollama

Gollama is a tool designed for managing Ollama models through a Text User Interface (TUI). Users can list, inspect, delete, copy, and push Ollama models, as well as link them to LM Studio. The application offers interactive model selection, sorting by various criteria, and actions using hotkeys. It provides features like sorting and filtering capabilities, displaying model metadata, model linking, copying, pushing, and more. Gollama aims to be user-friendly and useful for managing models, especially for cleaning up old models.

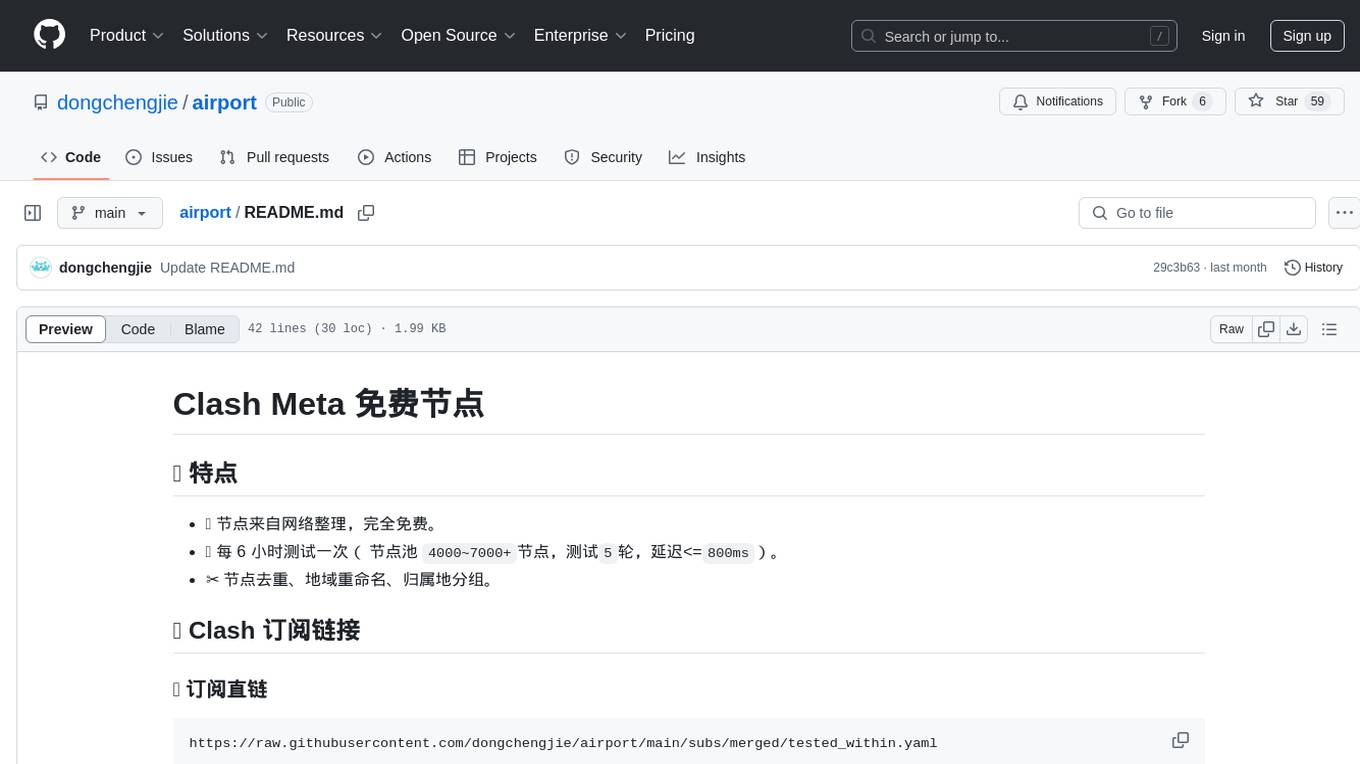

airport

The 'airport' repository provides free Clash Meta nodes sourced from the internet, with testing every 6 hours to ensure quality and low latency. It includes features such as node deduplication, regional renaming, and geographical grouping.

PredictorLLM

PredictorLLM is an advanced trading agent framework that utilizes large language models to automate trading in financial markets. It includes a profiling module to establish agent characteristics, a layered memory module for retaining and prioritizing financial data, and a decision-making module to convert insights into trading strategies. The framework mimics professional traders' behavior, surpassing human limitations in data processing and continuously evolving to adapt to market conditions for superior investment outcomes.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

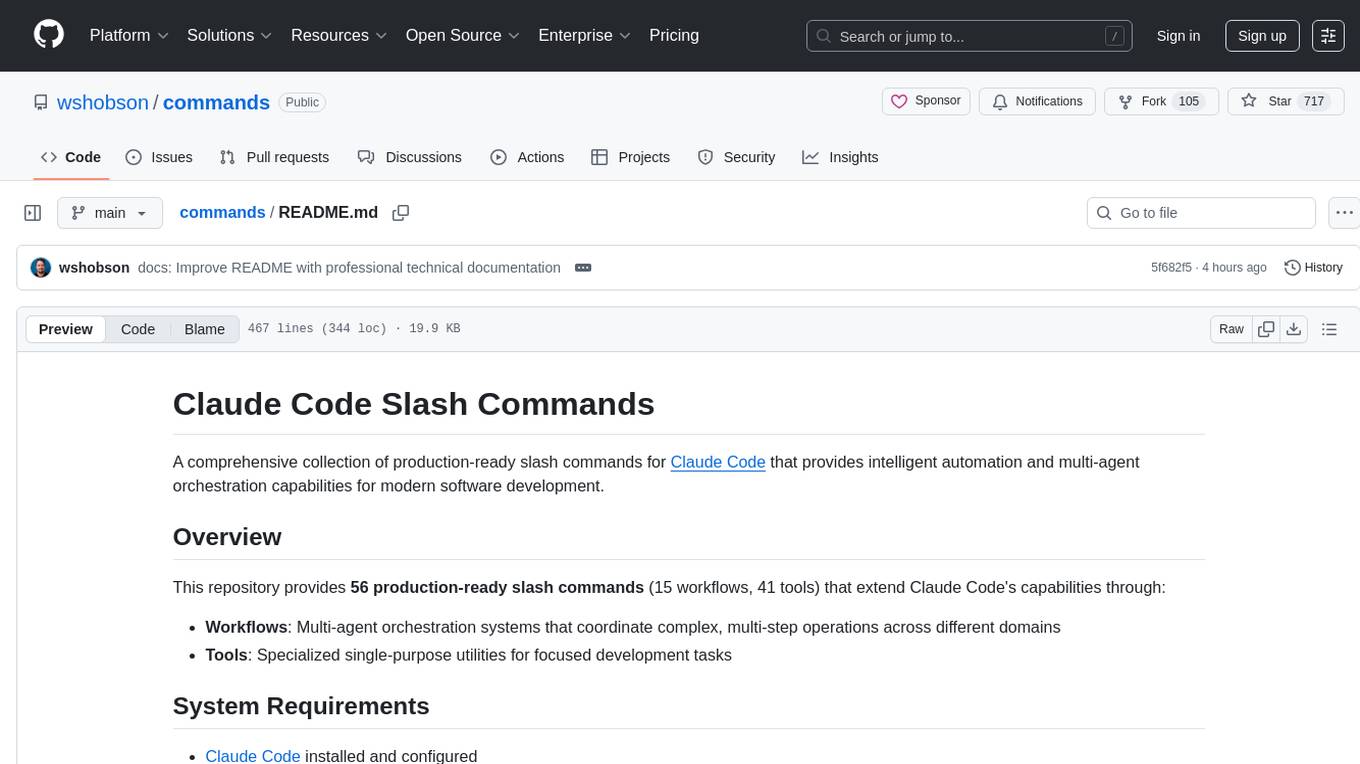

commands

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 52 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

DeepRetrieval

DeepRetrieval is a tool designed to enhance search engines and retrievers using Large Language Models (LLMs) and Reinforcement Learning (RL). It allows LLMs to learn how to search effectively by integrating with search engine APIs and customizing reward functions. The tool provides functionalities for data preparation, training, evaluation, and monitoring search performance. DeepRetrieval aims to improve information retrieval tasks by leveraging advanced AI techniques.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.