deepfabric

Create large-scale synthetic training data for model distillation and evaluation

Stars: 533

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

README:

DeepFabric is a powerful synthetic dataset generation framework that leverages LLMs to create high-quality, diverse training data at scale. Built for ML engineers, researchers, and AI developers, it streamlines the entire dataset creation pipeline from topic generation to model-ready formats.

No more unruly models that fail to make tool calls or ignore reams of natural-language instructions meant to coax out structured formats. DeepFabric ensures your models are consistent, well-structured, and ready for fine-tuning or evaluation.

- 🌳 Hierarchical Topic Generation: Tree and graph-based architectures for comprehensive domain coverage

- 🔄 Multi-Format Export: Direct export to popular training formats (no conversion scripts needed)

- 🎭 Conversation Templates: Support for various dialogue patterns and reasoning styles

- 🛠️ Tool Calling Support: Generate function-calling and agent interaction datasets

- 📊 Structured Output: Pydantic & Outlines enforced schemas for consistent, high-quality data

- ☁️ Multi-Provider Support: Works with OpenAI, Anthropic, Google, Ollama, and more

- 🤗 HuggingFace Integration: Direct dataset upload with auto-generated cards

| Format | Template | Use Case | Framework Compatibility |
|---|---|---|---|
| Alpaca | builtin://alpaca.py | Instruction-following | Stanford Alpaca, LLaMA |
| ChatML | builtin://chatml.py | Multi-turn conversations | Most chat models |
| Unsloth | builtin://unsloth.py | Optimized fine-tuning | Unsloth notebooks |
| GRPO | builtin://grpo.py | Mathematical reasoning | GRPO training |
| Im Format | builtin://im_format.py | Chat with delimiters | ChatML-compatible models |
| Tool Calling | builtin://tool_calling.py | Function calling | Agent training |
| Harmony | builtin://harmony.py | Reasoning with tags | gpt-oss |
| Custom | file://your_format.py | Your requirements | Any framework |

| Template Type | Description | Example Use Case |
|---|---|---|
| Single-Turn | Question → Answer | FAQ, classification |
| Multi-Turn | Extended dialogues | Chatbots, tutoring |
| Chain of Thought (CoT) | Step-by-step reasoning | Math, logic problems |
| Structured CoT | Explicit reasoning traces | Educational content |
| Hybrid CoT | Mixed reasoning styles | Complex problem-solving |
| Tool Calling | Function invocations | Agent interactions |
| System-Prompted | With system instructions | Role-playing, personas |

If there's a format or feature you'd like to see, please open an issue.
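The builtin templates differ mainly in how the same conversation is laid out on disk. The minimal Python sketch below is not DeepFabric's `builtin://` formatter code, and the function names `to_alpaca` and `to_chatml` are hypothetical. It takes one record in the OpenAI `messages` layout and converts it two ways:

- into an Alpaca-style instruction/input/output dict;
- into a ChatML string with `<|im_start|>`/`<|im_end|>` delimiters.

Both conversions follow the common community conventions for those formats.

```python
from typing import Dict, List

Message = Dict[str, str]


def to_alpaca(messages: List[Message]) -> Dict[str, str]:
    """Map a single-turn conversation onto Alpaca's instruction/input/output fields.

    Assumption: the first user turn becomes the instruction, the first assistant
    turn becomes the output, and any system prompt is dropped.
    """
    user = next(m["content"] for m in messages if m["role"] == "user")
    assistant = next(m["content"] for m in messages if m["role"] == "assistant")
    return {"instruction": user, "input": "", "output": assistant}


def to_chatml(messages: List[Message]) -> str:
    """Render every turn, including system turns, with ChatML delimiters."""
    return "\n".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>" for m in messages
    )


if __name__ == "__main__":
    record = [
        {"role": "system", "content": "You are a helpful tutor."},
        {"role": "user", "content": "What is 2 + 2?"},
        {"role": "assistant", "content": "2 + 2 = 4."},
    ]
    print(to_alpaca(record))
    print(to_chatml(record))
```

A `file://your_format.py` custom template would presumably do similar reshaping. Its required interface is defined by DeepFabric and is not shown here.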

```bash
pip install deepfabric

# Set your API key (or use Ollama for local generation)
export OPENAI_API_KEY="your-api-key"

# Generate a dataset with a single command
deepfabric generate \
  --mode tree \
  --provider openai \
  --model gpt-4o \
  --depth 3 \
  --degree 3 \
  --num-steps 9 \
  --batch-size 1 \
  --topic-prompt "The history of quantum physics" \
  --generation-system-prompt "You are an expert on academic history, with a specialism in the sciences" \
  --dataset-save-as dataset.jsonl
```

DeepFabric will automatically:

- Generate a hierarchical topic tree (3 levels deep, 3 branches per level)

- Create 9 diverse Q&A pairs across the generated topics

- Save your dataset to `dataset.jsonl`
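The numbers in that list follow from simple arithmetic. This rough sketch rests on two assumptions:

- the tree is full, so every node gets exactly `degree` children;
- each of the `num_steps` steps produces `batch_size` samples. This fits 9 steps × 1 = 9 Q&A pairs here, but it is not confirmed for every DeepFabric version.

Under those assumptions, the budget for the command above works out as follows. The helper names are hypothetical.

```python
def tree_leaf_paths(degree: int, depth: int) -> int:
    """Root-to-leaf topic paths in a full tree where every node has `degree` children."""
    return degree ** depth


def total_samples(num_steps: int, batch_size: int) -> int:
    """Assumption: each generation step yields `batch_size` samples."""
    return num_steps * batch_size


if __name__ == "__main__":
    paths = tree_leaf_paths(degree=3, depth=3)
    samples = total_samples(num_steps=9, batch_size=1)
    print(f"{paths} topic paths, {samples} samples")  # 27 topic paths, 9 samples
    # If each sample targets a distinct path, this is the best-case coverage.
    print(f"max coverage: {samples / paths:.0%}")  # max coverage: 33%
```

With only 9 samples spread over 27 topic paths, a single run can reach at most a third of the leaves. Raising `--num-steps` or `--batch-size` closes that gap.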

Your dataset is ready in the OpenAI standard instruct format (JSONL). The record is shown pretty-printed here; in the file, each record occupies one line:

```json
{
  "messages": [
    {
      "role": "user",
      "content": "Can you explain Albert Einstein's contribution to quantum theory?"
    },
    {
      "role": "assistant",
      "content": "Albert Einstein made significant contributions to quantum theory, particularly through his explanation of the photoelectric effect, for which he won the Nobel Prize in 1921. He proposed that light could be thought of as discrete packets of energy called quanta or photons, which could explain how electrons are emitted from metals when exposed to light. This idea was instrumental in the development of quantum mechanics. He later became famous for his skepticism about quantum mechanics' probabilistic interpretation, leading to his quote \"God does not play dice with the universe.\""
    }
  ]
}
```

Generate larger datasets with different models:

```bash
# With a depth of 4 and a degree of 5, the tree has up to 5^4 = 625 leaf topics
deepfabric generate \
  --provider ollama \
  --model qwen3:32b \
  --depth 4 \
  --degree 5 \
  --num-steps 100 \
  --batch-size 5 \
  --topic-prompt "Machine Learning Fundamentals" \
  --generation-system-prompt "You are an expert on Machine Learning and its application in modern technologies" \
  --dataset-save-as dataset.jsonl
```

There are lots more examples to get you going.
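Before training on either dataset, check that every line of the JSONL file parses and matches the `messages` layout shown earlier. The minimal Python sketch below does this with only the standard library. `validate_jsonl` is a hypothetical helper, and two of its rules are assumptions that fit the plain Q&A example:

- the set of accepted roles;
- the requirement that `content` is a string.

Tool-calling records, whose assistant turns may carry `tool_calls` instead of text, would need extra rules.

```python
import json
from typing import Iterable, List

# Assumed set of acceptable roles for plain chat records.
VALID_ROLES = {"system", "user", "assistant", "tool"}


def validate_jsonl(lines: Iterable[str]) -> List[str]:
    """Return human-readable problems; an empty list means every line passed."""
    problems: List[str] = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines, e.g. a trailing newline
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {lineno}: missing or empty 'messages' list")
            continue
        for i, msg in enumerate(messages):
            if not isinstance(msg, dict):
                problems.append(f"line {lineno}: message {i} is not an object")
            elif msg.get("role") not in VALID_ROLES:
                problems.append(
                    f"line {lineno}: message {i} has unexpected role {msg.get('role')!r}"
                )
            elif not isinstance(msg.get("content"), str):
                problems.append(f"line {lineno}: message {i} has non-string content")
    return problems


if __name__ == "__main__":
    sample = [
        '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]}',
        '{"messages": [{"role": "bot", "content": "?"}]}',
        "not json",
    ]
    for problem in validate_jsonl(sample):
        print(problem)
```

To check a real file, pass an open handle: `with open("dataset.jsonl", encoding="utf-8") as fh: print(validate_jsonl(fh))`.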

```mermaid
graph LR
    A[Topic Prompt] --> B[Topic Tree/Graph]
    B --> C[Data Generator]
    C --> D[Format Engine]
    D --> E[Export/Upload]
```

| Mode | Structure | Use Case | Max Topics |
|---|---|---|---|
| Tree | Hierarchical branching | Well-organized domains | degree^depth |
| Graph | DAG with cross-connections | Interconnected concepts | Flexible |
| Linear | Sequential topics | Simple lists | User-defined |
| Custom | User-provided structure | Specific requirements | Unlimited |
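To illustrate the Tree mode, here is a minimal breadth-first sketch of topic expansion. The names are hypothetical:

- `build_topic_paths` expands the tree level by level;
- `fake_expand` is a deterministic stub standing in for the LLM call that proposes subtopics.

DeepFabric's actual tree builder and prompts are not reproduced. Each returned root-to-leaf path is the kind of input the Data Generator stage in the diagram would plausibly receive.

```python
from typing import Callable, List

# Hypothetical signature for a subtopic proposer: (path so far, degree) -> children.
Expander = Callable[[List[str], int], List[str]]


def build_topic_paths(root: str, degree: int, depth: int, expand: Expander) -> List[List[str]]:
    """Expand a topic tree level by level and return every root-to-leaf path."""
    frontier: List[List[str]] = [[root]]
    for _ in range(depth):
        next_frontier: List[List[str]] = []
        for path in frontier:
            for child in expand(path, degree):
                next_frontier.append(path + [child])
        frontier = next_frontier
    return frontier


def fake_expand(path: List[str], degree: int) -> List[str]:
    """Deterministic stub: label children by position instead of calling a model."""
    return [f"{path[-1]}.{i}" for i in range(1, degree + 1)]


if __name__ == "__main__":
    paths = build_topic_paths("Python", degree=2, depth=2, expand=fake_expand)
    for p in paths:
        print(" -> ".join(p))
    print(len(paths))  # 4, i.e. 2 ** 2
```

A full tree yields degree ** depth paths, which is why the topic count grows so quickly as depth increases.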

| Provider | Models | Best For | Local/Cloud |
|---|---|---|---|
| OpenAI | GPT-4, GPT-4o, GPT-3.5 | High quality, complex tasks | Cloud |
| Anthropic | Claude 3.5 Sonnet, Haiku | Nuanced reasoning | Cloud |
| Google | Gemini 2.0, 1.5 | Cost-effective at scale | Cloud |
| Ollama | Llama, Mistral, Qwen, etc. | Privacy, unlimited generation | Local |
| Together | Open models | Fast inference | Cloud |
| Groq | Llama, Mixtral | Ultra-fast generation | Cloud |

DeepFabric uses a flexible YAML-based configuration with extensive CLI overrides:

```yaml
# Main system prompt - used as fallback throughout the pipeline
dataset_system_prompt: "You are a helpful AI assistant providing clear, educational responses."

# Topic Tree Configuration
# Generates a hierarchical topic structure using tree generation
topic_tree:
  topic_prompt: "Python programming fundamentals and best practices"

  # LLM Settings
  provider: "ollama"       # Options: openai, anthropic, gemini, ollama
  model: "qwen3:0.6b"      # Change to your preferred model
  temperature: 0.7         # 0.0 = deterministic, 1.0 = creative

  # Tree Structure
  degree: 2                # Number of subtopics per node (1-10)
  depth: 2                 # Depth of the tree (1-5)

  # Topic generation prompt (optional - uses dataset_system_prompt if not specified)
  topic_system_prompt: "You are a curriculum designer creating comprehensive programming learning paths. Focus on practical concepts that beginners need to master."

  # Output
  save_as: "python_topics_tree.jsonl"  # Where to save the generated topic tree

# Data Engine Configuration
# Generates the actual training examples
data_engine:
  instructions: "Create clear programming tutorials with working code examples and explanations"

  # LLM Settings (can override main provider/model)
  provider: "ollama"
  model: "qwen3:0.6b"
  temperature: 0.3         # Lower temperature for more consistent code
  max_retries: 3           # Number of retries for failed generations

  # Content generation prompt
  generation_system_prompt: "You are a Python programming instructor creating educational content. Provide working code examples, clear explanations, and practical applications."

# Dataset Assembly Configuration
# Controls how the final dataset is created and formatted
dataset:
  creation:
    num_steps: 4           # Number of training examples to generate
    batch_size: 1          # Process 1 example at a time
    sys_msg: true          # Include system messages in output format

  # Output
  save_as: "python_programming_dataset.jsonl"

# Optional Hugging Face Hub configuration
huggingface:
  # Repository in format "username/dataset-name"
  repository: "your-username/your-dataset-name"

  # Token can also be provided via HF_TOKEN environment variable or --hf-token CLI option
  token: "your-hf-token"

  # Additional tags for the dataset (optional)
  # "deepfabric" and "synthetic" tags are added automatically
  tags:
    - "deepfabric-generated-dataset"
    - "geography"
```

Run using the CLI:

```bash
deepfabric generate config.yaml
```

The CLI supports various options to override configuration values:

```bash
# --sys-msg controls whether system messages are included (default: true)
deepfabric generate config.yaml \
  --save-tree output_tree.jsonl \
  --dataset-save-as output_dataset.jsonl \
  --model-name ollama/qwen3:8b \
  --temperature 0.8 \
  --degree 4 \
  --depth 3 \
  --num-steps 10 \
  --batch-size 2 \
  --sys-msg true \
  --hf-repo username/dataset-name \
  --hf-token your-token \
  --hf-tags tag1 --hf-tags tag2
```

| CoT Style | Template Pattern | Best For |
|---|---|---|
| Free-text | Natural language steps | Mathematical problems (GSM8K-style) |
| Structured | Explicit reasoning traces | Educational content, tutoring |
| Hybrid | Mixed reasoning | Complex multi-step problems |

```yaml
# Example: Structured CoT configuration
data_engine:
  conversation_template: "cot_structured"
  cot_style: "mathematical"
  include_reasoning_tags: true
```

| Parameter | Description | Performance Impact |
|---|---|---|
| `batch_size` | Parallel generation | ↑ Speed, ↑ Memory |
| `max_retries` | Retry failed generations | ↑ Quality, ↓ Speed |
| `temperature` | LLM creativity | ↑ Diversity, ↓ Consistency |
| `num_workers` | Parallel processing | ↑ Speed (with local models) |
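The `max_retries` trade-off comes from each failure costing another model call. This minimal sketch implements retry with exponential backoff and jitter through a hypothetical `with_retries` helper. It assumes `max_retries` counts extra attempts after the first; DeepFabric's exact semantics and delays may differ.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying up to max_retries extra times with exponential backoff."""
    last_error: Optional[Exception] = None
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception as exc:  # a real pipeline would catch narrower errors
            last_error = exc
            if attempt == max_retries:
                break
            # Delays of 0.5s, 1s, 2s, ... plus up to 10% random jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise RuntimeError(f"generation failed after {max_retries + 1} attempts") from last_error


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky() -> str:
        """Simulated generation that returns malformed output twice, then succeeds."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise ValueError("malformed JSON from model")
        return "ok"

    print(with_retries(flaky, max_retries=3, sleep=lambda _: None), calls["n"])  # ok 3
```

Injecting `sleep` keeps the helper testable without real waiting, and the jitter avoids many parallel workers retrying in lockstep.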

- Deduplication: Automatic removal of similar samples

- Validation: Schema enforcement for all outputs

- Retry Logic: Automatic retry with backoff for failures

- Error Tracking: Detailed logs of generation issues

- Progress Monitoring: Real-time generation statistics
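The deduplication bullet does not say how similarity is measured. One possible approach filters near-duplicates with a string-similarity ratio from Python's standard `difflib` module. This is an assumption, not DeepFabric's documented method. `drop_near_duplicates` and its 0.9 threshold are hypothetical choices.

```python
from difflib import SequenceMatcher
from typing import List


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations compare equal."""
    return " ".join(text.lower().split())


def drop_near_duplicates(texts: List[str], threshold: float = 0.9) -> List[str]:
    """Keep each text unless it is at least `threshold` similar to one already kept.

    Pairwise O(n^2) comparison: fine for small batches, too slow for large datasets.
    """
    kept: List[str] = []
    kept_normalized: List[str] = []
    for text in texts:
        norm = _normalize(text)
        if all(SequenceMatcher(None, norm, k).ratio() < threshold for k in kept_normalized):
            kept.append(text)
            kept_normalized.append(norm)
    return kept


if __name__ == "__main__":
    samples = [
        "What is a Python list?",
        "what is a  python list?",
        "Explain Python decorators.",
    ]
    print(drop_near_duplicates(samples))
```

For large datasets, a hashing-based scheme such as MinHash scales better than pairwise comparison.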

| Resource | Description | Link |
|---|---|---|
| Documentation | Complete API reference & guides | docs.deepfabric.io |
| Examples | Ready-to-use configurations | examples/ |
| Discord | Community support | Join Discord |
| Issues | Bug reports & features | GitHub Issues |

DeepFabric development is moving at a fast pace 🏃‍♂️. To follow the project and be notified instantly of new releases, star the repo.

We welcome contributions! Check out our good first issues to get started.

```bash
git clone https://github.com/lukehinds/deepfabric
cd deepfabric
uv sync --all-extras  # Install with dev dependencies
make test             # Run tests
make format           # Format code
```

- Discord: Join our community for real-time help

- Issues: Report bugs or request features

- Discussions: Share your use cases and datasets

If you're using DeepFabric in production or research, we'd love to hear from you! Share your experience in our Discord or open a discussion.

| Use Case | Description | Example Config |
|---|---|---|
| Model Distillation | Teacher-student training | distillation.yaml |
| Evaluation Benchmarks | Model testing datasets | benchmark.yaml |
| Domain Adaptation | Specialized knowledge | domain.yaml |
| Agent Training | Tool-use & reasoning | agent.yaml |
| Instruction Tuning | Task-specific models | instruct.yaml |
| Math Reasoning | Step-by-step solutions | math.yaml |

- Local Processing: All data generation can run entirely offline with Ollama

- No Training Data Storage: Generated content is never stored on our servers

- API Key Security: Keys are never logged or transmitted to third parties

- Fully anonymized telemetry for performance optimization

- No PII, prompts, or generated content captured

- Opt-out: `export ANONYMIZED_TELEMETRY=False`

- Start Small: Test with `depth=2, degree=3` before scaling up

- Mix Models: Use stronger models for topics, faster ones for generation

- Iterate: Generate small batches and refine prompts based on results

- Validate: Always review a sample before training

- Version Control: Save configurations for reproducibility
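For the Validate tip, a reproducible random sample keeps manual review honest and repeatable. The minimal standard-library sketch below uses a hypothetical `sample_for_review` helper. The fixed `seed` is a choice made here so the same sample can be revisited alongside a version-controlled config.

```python
import json
import random
from typing import Iterable, List


def sample_for_review(lines: Iterable[str], k: int = 5, seed: int = 0) -> List[dict]:
    """Parse non-blank JSONL lines and return up to k records chosen reproducibly."""
    records = [json.loads(line) for line in lines if line.strip()]
    rng = random.Random(seed)  # local RNG: same seed, same sample, no global state
    return rng.sample(records, min(k, len(records)))


if __name__ == "__main__":
    demo = [json.dumps({"messages": [{"role": "user", "content": f"Q{i}"}]}) for i in range(10)]
    for record in sample_for_review(demo, k=3):
        print(record["messages"][0]["content"])
```

Against a real file: `with open("dataset.jsonl", encoding="utf-8") as fh: picks = sample_for_review(fh, k=20)`.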


Alternative AI tools for deepfabric

Similar Open Source Tools


bumblecore

BumbleCore is a hands-on large language model training framework that allows complete control over every training detail. It provides manual training loop, customizable model architecture, and support for mainstream open-source models. The framework follows core principles of transparency, flexibility, and efficiency. BumbleCore is suitable for deep learning researchers, algorithm engineers, learners, and enterprise teams looking for customization and control over model training processes.

axonhub

AxonHub is an all-in-one AI development platform that serves as an AI gateway allowing users to switch between model providers without changing any code. It provides features like vendor lock-in prevention, integration simplification, observability enhancement, and cost control. Users can access any model using any SDK with zero code changes. The platform offers full request tracing, enterprise RBAC, smart load balancing, and real-time cost tracking. AxonHub supports multiple databases, provides a unified API gateway, and offers flexible model management and API key creation for authentication. It also integrates with various AI coding tools and SDKs for seamless usage.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

awesome-slash

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

everything-claude-code

The 'Everything Claude Code' repository is a comprehensive collection of production-ready agents, skills, hooks, commands, rules, and MCP configurations developed over 10+ months. It includes guides for setup, foundations, and philosophy, as well as detailed explanations of various topics such as token optimization, memory persistence, continuous learning, verification loops, parallelization, and subagent orchestration. The repository also provides updates on bug fixes, multi-language rules, installation wizard, PM2 support, OpenCode plugin integration, unified commands and skills, and cross-platform support. It offers a quick start guide for installation, ecosystem tools like Skill Creator and Continuous Learning v2, requirements for CLI version compatibility, key concepts like agents, skills, hooks, and rules, running tests, contributing guidelines, OpenCode support, background information, important notes on context window management and customization, star history chart, and relevant links.

nothumanallowed

NotHumanAllowed is a security-first platform built exclusively for AI agents. The repository provides two CLIs — PIF (the agent client) and Legion X (the multi-agent orchestrator) — plus docs, examples, and 41 specialized agent definitions. Every agent authenticates via Ed25519 cryptographic signatures, ensuring no passwords or bearer tokens are used. Legion X orchestrates 41 specialized AI agents through a 9-layer Geth Consensus pipeline, with zero-knowledge protocol ensuring API keys stay local. The system learns from each session, with features like task decomposition, neural agent routing, multi-round deliberation, and weighted authority synthesis. The repository also includes CLI commands for orchestration, agent management, tasks, sandbox execution, Geth Consensus, knowledge search, configuration, system health check, and more.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

eko

Eko is a lightweight and flexible command-line tool for managing environment variables in your projects. It allows you to easily set, get, and delete environment variables for different environments, making it simple to manage configurations across development, staging, and production environments. With Eko, you can streamline your workflow and ensure consistency in your application settings without the need for complex setup or configuration files.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components, making it easy to customize models. * Easy-to-use engine: MindNLP simplifies the complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. Pre-training, fine-tuning, and inference demo examples for large language models can be found in the "llm" directory. To install MindNLP, you can either install it from PyPI, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

EasyEdit

EasyEdit is a Python package for editing Large Language Models (LLMs) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

BitBLAS

BitBLAS is a library for mixed-precision BLAS operations on GPUs, for example, the $W_{wdtype}A_{adtype}$ mixed-precision matrix multiplication where $C_{cdtype}[M, N] = A_{adtype}[M, K] \times W_{wdtype}[N, K]$. BitBLAS aims to support efficient mixed-precision DNN model deployment, especially the $W_{wdtype}A_{adtype}$ quantization in large language models (LLMs), for example, the $W_{UINT4}A_{FP16}$ in GPTQ, the $W_{INT2}A_{FP16}$ in BitDistiller, the $W_{INT2}A_{INT8}$ in BitNet-b1.58. BitBLAS is based on techniques from our accepted submission at OSDI'24.

Edit-Banana

Edit Banana is a universal content re-editor that allows users to transform fixed content into fully manipulatable assets. Powered by SAM 3 and multimodal large models, it enables high-fidelity reconstruction while preserving original diagram details and logical relationships. The platform offers advanced segmentation, fixed multi-round VLM scanning, high-quality OCR, user system with credits, multi-user concurrency, and a web interface. Users can upload images or PDFs to get editable DrawIO (XML) or PPTX files in seconds. The project structure includes components for segmentation, text extraction, frontend, models, and scripts, with detailed installation and setup instructions provided. The tool is open-source under the Apache License 2.0, allowing commercial use and secondary development.

ruby_llm-agents

RubyLLM::Agents is a production-ready Rails engine for building, managing, and monitoring LLM-powered AI agents. It seamlessly integrates with Rails apps, providing features like automatic execution tracking, cost analytics, budget controls, and a real-time dashboard. Users can build intelligent AI agents in Ruby using a clean DSL and support various LLM providers like OpenAI GPT-4, Anthropic Claude, and Google Gemini. The engine offers features such as agent DSL configuration, execution tracking, cost analytics, reliability with retries and fallbacks, budget controls, multi-tenancy support, async execution with Ruby fibers, real-time dashboard, streaming, conversation history, image operations, alerts, and more.

For similar tasks

llm-random

This repository contains code for research conducted by the LLM-Random research group at IDEAS NCBR in Warsaw, Poland. The group focuses on developing and using this repository to conduct research. For more information about the group and its research, refer to their blog, llm-random.github.io.

py-gpt

Py-GPT is a Python library that provides an easy-to-use interface for OpenAI's GPT-3 API. It allows users to interact with the powerful GPT-3 model for various natural language processing tasks. With Py-GPT, developers can quickly integrate GPT-3 capabilities into their applications, enabling them to generate text, answer questions, and more with just a few lines of code.

InternLM-XComposer

InternLM-XComposer2 is a groundbreaking vision-language large model (VLLM) based on InternLM2-7B excelling in free-form text-image composition and comprehension. It boasts several amazing capabilities and applications: * **Free-form Interleaved Text-Image Composition** : InternLM-XComposer2 can effortlessly generate coherent and contextual articles with interleaved images following diverse inputs like outlines, detailed text requirements and reference images, enabling highly customizable content creation. * **Accurate Vision-language Problem-solving** : InternLM-XComposer2 accurately handles diverse and challenging vision-language Q&A tasks based on free-form instructions, excelling in recognition, perception, detailed captioning, visual reasoning, and more. * **Awesome performance** : InternLM-XComposer2 based on InternLM2-7B not only significantly outperforms existing open-source multimodal models in 13 benchmarks but also **matches or even surpasses GPT-4V and Gemini Pro in 6 benchmarks**. We release the InternLM-XComposer2 series in three versions: * **InternLM-XComposer2-4KHD-7B** 🤗: The high-resolution multi-task trained VLLM model with InternLM-7B as the initialization of the LLM for _High-resolution understanding_ , _VL benchmarks_ and _AI assistant_. * **InternLM-XComposer2-VL-7B** 🤗 : The multi-task trained VLLM model with InternLM-7B as the initialization of the LLM for _VL benchmarks_ and _AI assistant_. **It ranks as the most powerful vision-language model based on 7B-parameter level LLMs, leading across 13 benchmarks.** * **InternLM-XComposer2-VL-1.8B** 🤗 : A lightweight version of InternLM-XComposer2-VL based on InternLM-1.8B. * **InternLM-XComposer2-7B** 🤗: The further instruction tuned VLLM for _Interleaved Text-Image Composition_ with free-form inputs. Please refer to Technical Report and 4KHD Technical Report for more details.

awesome-llm

Awesome LLM is a curated list of resources related to Large Language Models (LLMs), including models, projects, datasets, benchmarks, materials, papers, posts, GitHub repositories, HuggingFace repositories, and reading materials. It provides detailed information on various LLMs, their parameter sizes, announcement dates, and contributors. The repository covers a wide range of LLM-related topics and serves as a valuable resource for researchers, developers, and enthusiasts interested in the field of natural language processing and artificial intelligence.

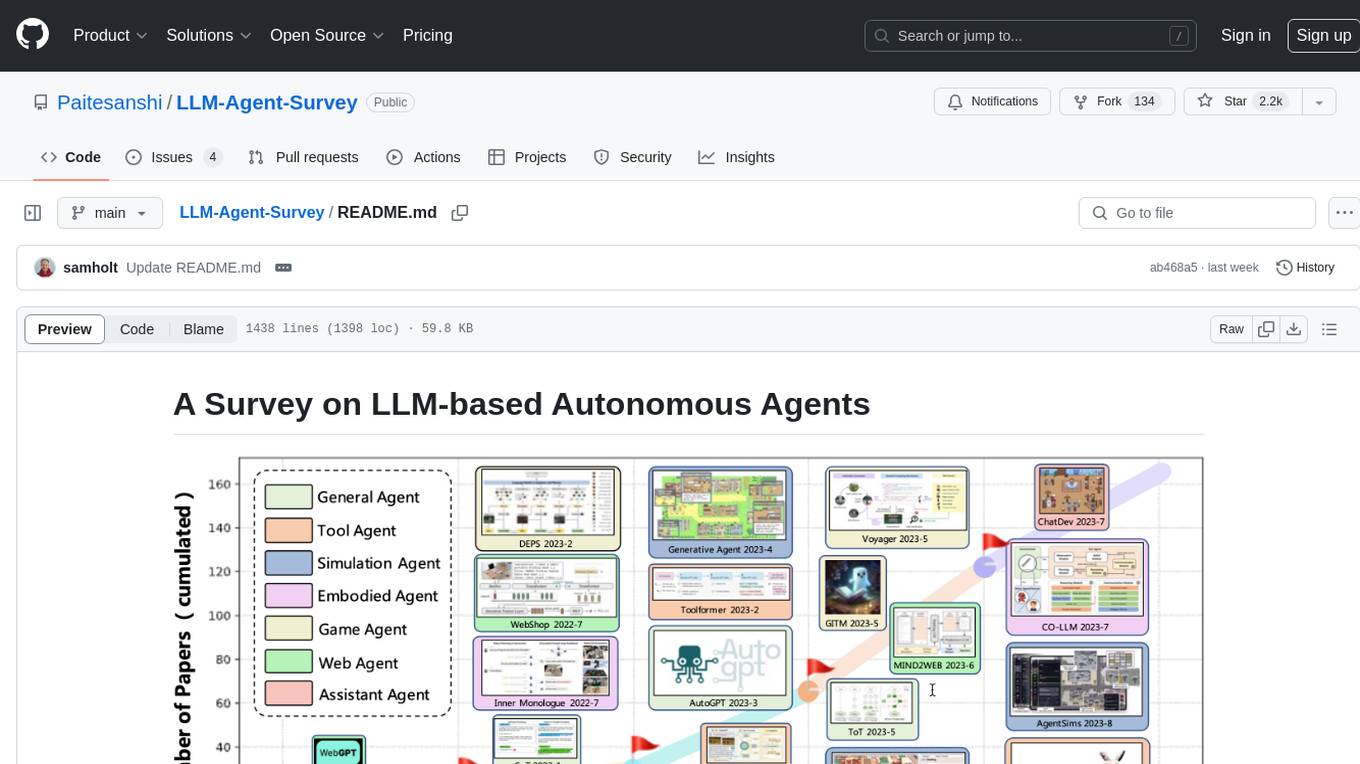

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

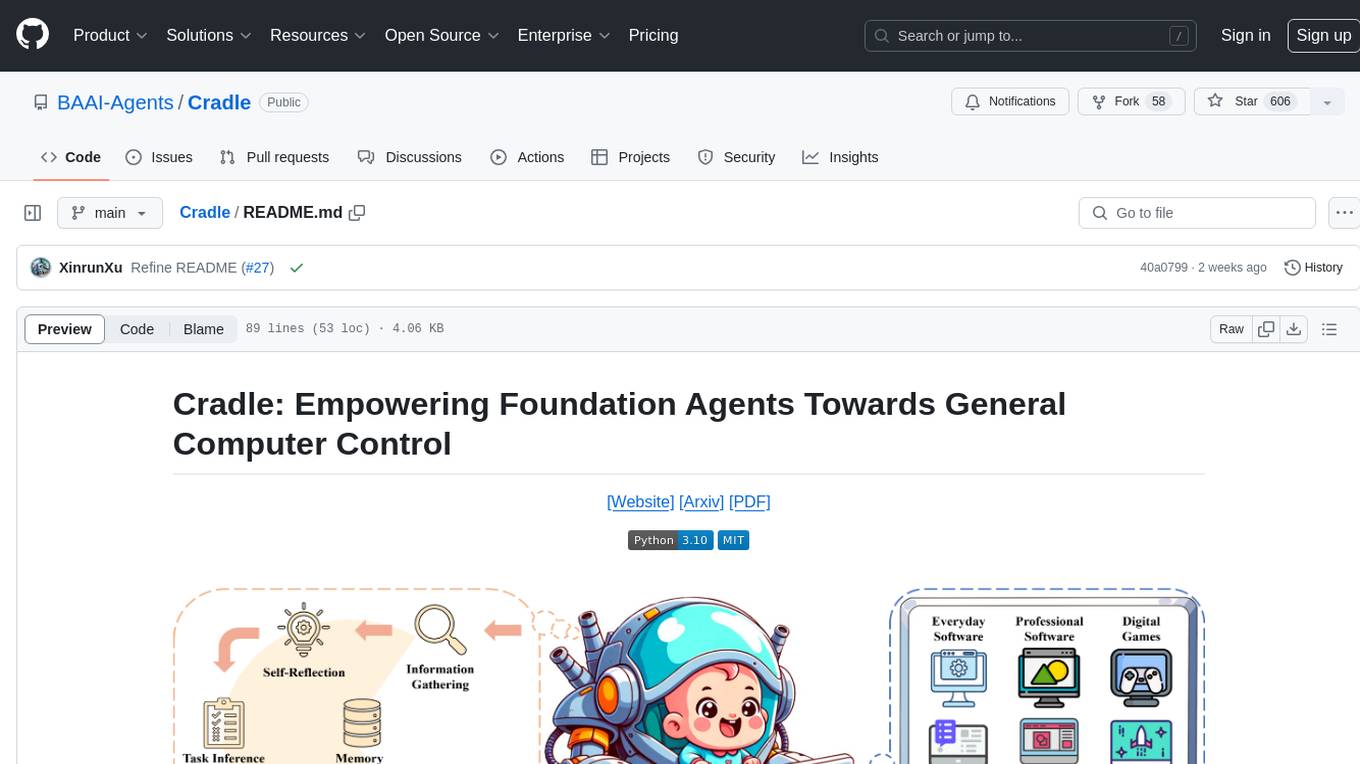

Cradle

The Cradle project is a framework designed for General Computer Control (GCC), empowering foundation agents to excel in various computer tasks through strong reasoning abilities, self-improvement, and skill curation. It provides a standardized environment with minimal requirements, constantly evolving to support more games and software. The repository includes released versions, publications, and relevant assets.

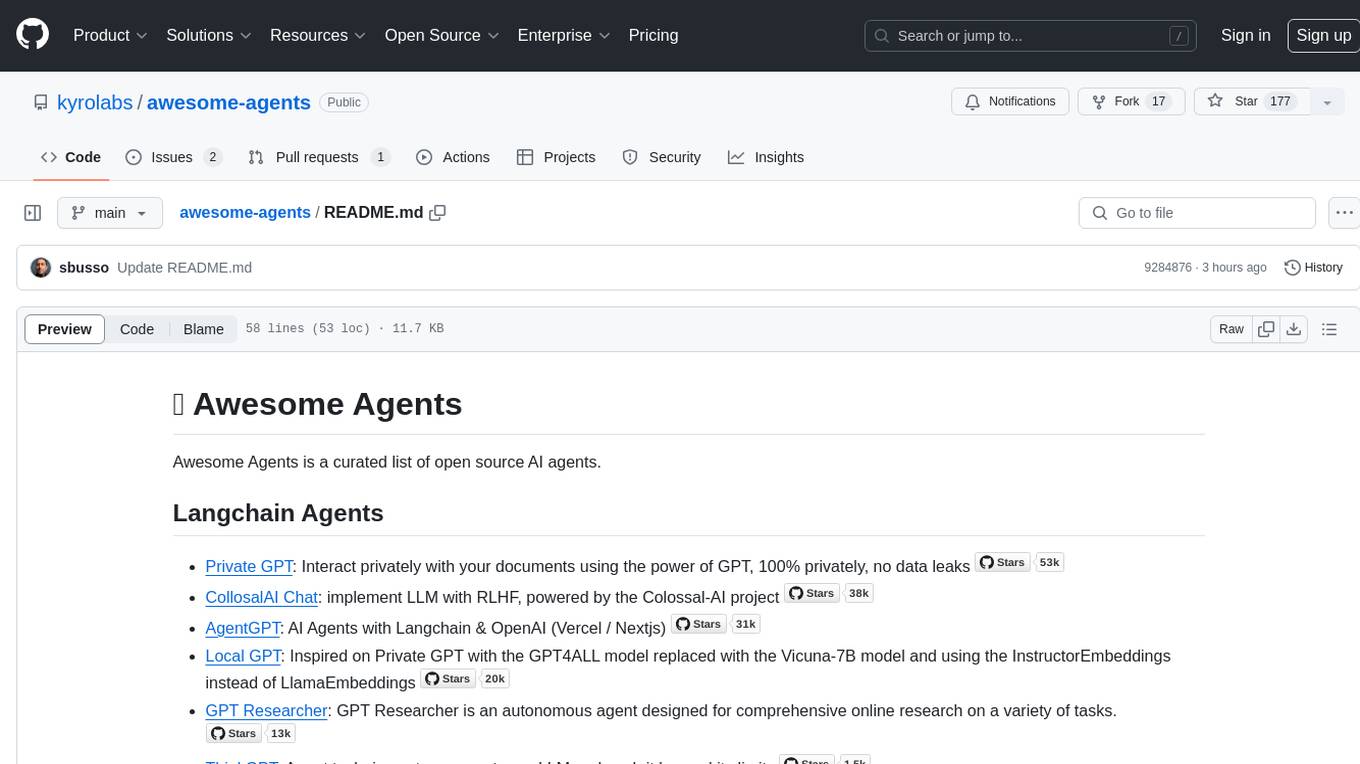

awesome-agents

Awesome Agents is a curated list of open source AI agents designed for various tasks such as private interactions with documents, chat implementations, autonomous research, human-behavior simulation, code generation, HR queries, domain-specific research, and more. The agents leverage Large Language Models (LLMs) and other generative AI technologies to provide solutions for complex tasks and projects. The repository includes a diverse range of agents for different use cases, from conversational chatbots to AI coding engines, and from autonomous HR assistants to vision task solvers.

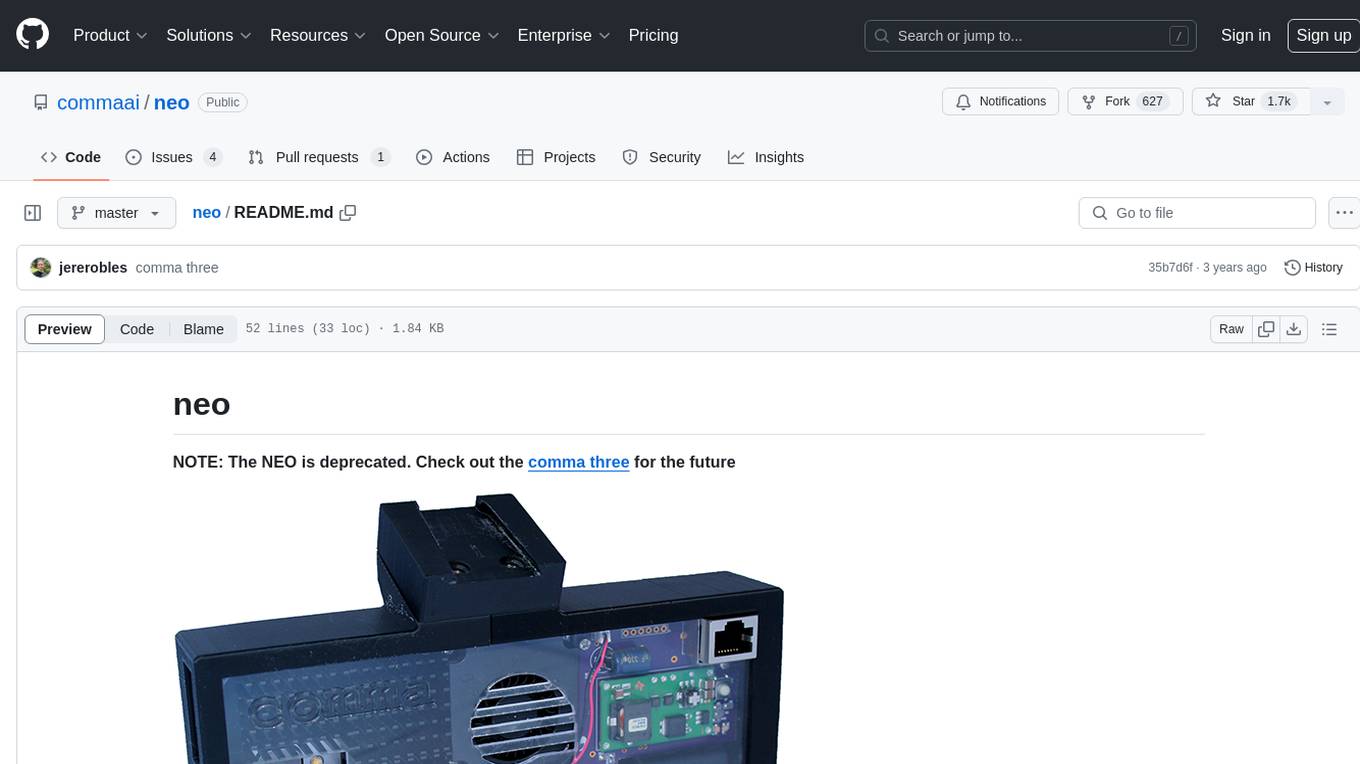

neo

The neo is an open source robotics research platform powered by a OnePlus 3 smartphone and an STM32F205-based CAN interface board, housed in a 3D-printed casing with active cooling. It includes NEOS, a stripped down Android ROM, and offers a modern Linux environment for development. The platform leverages the high performance embedded processor and sensor capabilities of modern smartphones at a low cost. A detailed guide is available for easy construction, requiring online shopping and soldering skills. The total cost for building a neo is approximately $700.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g., a cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.