awesome-llm

Awesome series for Large Language Models (LLMs)

Stars: 58

Awesome LLM is a curated list of resources related to Large Language Models (LLMs), including models, projects, datasets, benchmarks, materials, papers, posts, GitHub repositories, HuggingFace repositories, and reading materials. It provides detailed information on various LLMs, their parameter sizes, announcement dates, and contributors. The repository covers a wide range of LLM-related topics and serves as a valuable resource for researchers, developers, and enthusiasts interested in the field of natural language processing and artificial intelligence.

README:


| Name | Parameter size | Announcement date |
|---|---|---|
| BERT-Large (336M) | 336 million | 2018 |
| T5 (11B) | 11 billion | 2020 |
| Gopher (280B) | 280 billion | 2021 |
| GPT-J (6B) | 6 billion | 2021 |
| LaMDA (137B) | 137 billion | 2021 |
| Megatron-Turing NLG (530B) | 530 billion | 2021 |
| T0 (11B) | 11 billion | 2021 |
| Macaw (11B) | 11 billion | 2021 |
| GLaM (1.2T) | 1.2 trillion | 2021 |
| T5 FLAN (540B) | 540 billion | 2022 |
| OPT-175B (175B) | 175 billion | 2022 |
| ChatGPT (175B) | 175 billion | 2022 |
| GPT 3.5 (175B) | 175 billion | 2022 |
| AlexaTM (20B) | 20 billion | 2022 |
| Bloom (176B) | 176 billion | 2022 |
| Bard | Not yet announced | 2023 |
| GPT 4 | Not yet announced | 2023 |
| AlphaCode (41.4B) | 41.4 billion | 2022 |
| Chinchilla (70B) | 70 billion | 2022 |
| Sparrow (70B) | 70 billion | 2022 |
| PaLM (540B) | 540 billion | 2022 |
| NLLB (54.5B) | 54.5 billion | 2022 |
| Alexa TM (20B) | 20 billion | 2022 |
| Galactica (120B) | 120 billion | 2022 |
| UL2 (20B) | 20 billion | 2022 |
| Jurassic-1 (178B) | 178 billion | 2022 |
| LLaMA (65B) | 65 billion | 2023 |
| Stanford Alpaca (7B) | 7 billion | 2023 |
| GPT-NeoX 2.0 (20B) | 20 billion | 2023 |
| BloombergGPT | 50 billion | 2023 |
| Dolly | 6 billion | 2023 |
| Jurassic-2 | Not yet announced | 2023 |
| OpenAssistant LLaMa | 30 billion | 2023 |
| Koala | 13 billion | 2023 |
| Vicuna | 13 billion | 2023 |
| PaLM2 | Not yet announced, Smaller than PaLM1 | 2023 |
| LIMA | 65 billion | 2023 |
| MPT | 7 billion | 2023 |
| Falcon | 40 billion | 2023 |
| Llama 2 | 70 billion | 2023 |
| Google Gemini | Not yet announced | 2023 |
| Microsoft Phi-2 | 2.7 billion | 2023 |
| Grok-0 | 33 billion | 2023 |
| Grok-1 | 314 billion | 2023 |
| Solar | 10.7 billion | 2024 |
| Gemma | 7 billion | 2024 |
| Grok-1.5 | Not yet announced | 2024 |
| DBRX | 132 billion | 2024 |
| Claude 3 | Not yet announced | 2024 |
| Gemma 1.1 | 7 billion | 2024 |
| Llama 3 | 70 billion | 2024 |

- T5 (11B) - Announced by Google / 2020

- T5 FLAN (540B) - Announced by Google / 2022

- T0 (11B) - Announced by BigScience (HuggingFace) / 2021

- OPT-175B (175B) - Announced by Meta / 2022

- UL2 (20B) - Announced by Google / 2022

- Bloom (176B) - Announced by BigScience (HuggingFace) / 2022

- BERT-Large (336M) - Announced by Google / 2018

- GPT-NeoX 2.0 (20B) - Announced by EleutherAI / 2023

- GPT-J (6B) - Announced by EleutherAI / 2021

- Macaw (11B) - Announced by AI2 / 2021

- Stanford Alpaca (7B) - Announced by Stanford University / 2023

- Visual ChatGPT - Announced by Microsoft / 2023

- LMOps - Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities.

- GPT 4 (Parameter size unannounced, gpt-4-32k) - Announced by OpenAI / 2023

- ChatGPT (175B) - Announced by OpenAI / 2022

- ChatGPT Plus (175B) - Announced by OpenAI / 2023

- GPT 3.5 (175B, text-davinci-003) - Announced by OpenAI / 2022

- Gemini - Announced by Google Deepmind / 2023

- Bard - Announced by Google / 2023

- Codex (11B) - Announced by OpenAI / 2021

- Sphere - Announced by Meta / 2022
  - 134M documents split into 906M passages as the web corpus.

- Common Crawl
  - 3.15B pages and more than 380 TiB of data, public and free to use.

- SQuAD 2.0
  - 100,000+ question dataset for QA.

- Pile
  - 825 GiB diverse, open source language modelling data set.

- RACE
  - A large-scale reading comprehension dataset with more than 28,000 passages and nearly 100,000 questions.

- Wikipedia
  - Wikipedia dataset containing cleaned articles of all languages.

- Megatron-Turing NLG (530B) - Announced by NVIDIA and Microsoft / 2021

- LaMDA (137B) - Announced by Google / 2021

- GLaM (1.2T) - Announced by Google / 2021

- PaLM (540B) - Announced by Google / 2022

- AlphaCode (41.4B) - Announced by DeepMind / 2022

- Chinchilla (70B) - Announced by DeepMind / 2022

- Sparrow (70B) - Announced by DeepMind / 2022

- NLLB (54.5B) - Announced by Meta / 2022

- LLaMA (65B) - Announced by Meta / 2023

- AlexaTM (20B) - Announced by Amazon / 2022

- Gopher (280B) - Announced by DeepMind / 2021

- Galactica (120B) - Announced by Meta / 2022

- PaLM2 Tech Report - Announced by Google / 2023

- LIMA - Announced by Meta / 2023

- Llama 2 (70B) - Announced by Meta / 2023

- Luminous (13B) - Announced by Aleph Alpha / 2021

- Turing NLG (17B) - Announced by Microsoft / 2020

- Claude (52B) - Announced by Anthropic / 2021

- Minerva (Parameter size unannounced) - Announced by Google / 2022

- BloombergGPT (50B) - Announced by Bloomberg / 2023

- AlexaTM (20B) - Announced by Amazon / 2023

- Dolly (6B) - Announced by Databricks / 2023

- Jurassic-1 - Announced by AI21 / 2022

- Jurassic-2 - Announced by AI21 / 2023

- Koala - Announced by Berkeley Artificial Intelligence Research (BAIR) / 2023

- Gemma - Gemma: Introducing new state-of-the-art open models / 2024

- Grok-1 - Open Release of Grok-1 / 2023

- Grok-1.5 - Announced by xAI / 2024

- DBRX - Announced by Databricks / 2024

- BigScience - Maintained by HuggingFace (Twitter) (Notion)

- HuggingChat - Maintained by HuggingFace / 2023

- OpenAssistant - Maintained by Open Assistant / 2023

- StableLM - Maintained by Stability AI / 2023

- Eleuther AI Language Model - Maintained by Eleuther AI / 2023

- Falcon LLM - Maintained by Technology Innovation Institute / 2023

- Gemma - Maintained by Google / 2024

- Stanford Alpaca - A repository of the Stanford Alpaca project, a model fine-tuned from the LLaMA 7B model on 52K instruction-following demonstrations.

- Dolly - A large language model trained on the Databricks Machine Learning Platform.

- AutoGPT - An experimental open-source attempt to make GPT-4 fully autonomous.

- dalai - A CLI tool to run LLaMA on the local machine.

- LLaMA-Adapter - Fine-tuning LLaMA to follow instructions within 1 hour and 1.2M parameters.

- alpaca-lora - Instruct-tune LLaMA on consumer hardware.

- llama_index - A project that provides a central interface to connect your LLMs with external data.

- openai/evals - A framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks.

- trlx - A repo for distributed training of language models with Reinforcement Learning via Human Feedback (RLHF).

- pythia - A suite of 16 LLMs all trained on public data seen in the exact same order and ranging in size from 70M to 12B parameters.

- Embedchain - Framework to create ChatGPT like bots over your dataset.

- OpenAssistant SFT 6 - A 30 billion parameter LLaMA-based model made by HuggingFace for chat conversations.

- Vicuna Delta v0 - An open-source chatbot trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT.

- MPT 7B - A decoder-style transformer pre-trained from scratch on 1T tokens of English text and code. This model was trained by MosaicML.

- Falcon 7B - A 7B-parameter causal decoder-only model built by TII and trained on 1,500B tokens of RefinedWeb enhanced with curated corpora.

- Phi-2: The surprising power of small language models

- StackLLaMA: A hands-on guide to train LLaMA with RLHF

- PaLM2

- PaLM2 and Future work: Gemini model

We welcome contributions to the Awesome LLM list! If you'd like to suggest an addition or make a correction, please follow these guidelines:

- Fork the repository and create a new branch for your contribution.

- Make your changes to the README.md file.

- Ensure that your contribution is relevant to the topic of LLM.

- Use the following format to add your contribution:

  `[Name of Resource](Link to Resource) - Description of resource`

- Add your contribution in alphabetical order within its category.

- Make sure that your contribution is not already listed.

- Provide a brief description of the resource and explain why it is relevant to LLM.

- Create a pull request with a clear title and description of your changes.
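Before opening a pull request, the entry format and the alphabetical-order rule above could be checked locally with a short script. The following is only a minimal sketch: the regular expression, the function names `parse_entry` and `check_category`, and the `example.com` links are assumptions made for illustration and are not part of this repository.

```python
import re

# Hypothetical pattern for the entry format described in the guidelines:
#   [Name of Resource](Link to Resource) - Description of resource
ENTRY_RE = re.compile(
    r"^\[(?P<name>[^\]]+)\]\((?P<link>[^)\s]+)\) - (?P<desc>\S.*)$"
)


def parse_entry(line):
    """Return (name, link, description), or None if the line does not match."""
    text = line.strip()
    if text.startswith("- "):  # tolerate a leading list marker
        text = text[2:]
    match = ENTRY_RE.match(text)
    if match is None:
        return None
    return match.group("name"), match.group("link"), match.group("desc")


def check_category(lines):
    """Return a list of problems found among the entries of one category."""
    problems = []
    names = []
    for line in lines:
        entry = parse_entry(line)
        if entry is None:
            problems.append(f"bad format: {line!r}")
            continue
        name = entry[0]
        if name.lower() in (seen.lower() for seen in names):
            problems.append(f"duplicate: {name}")
        names.append(name)
    # Case-insensitive alphabetical order within the category.
    if names != sorted(names, key=str.lower):
        problems.append("entries are not in alphabetical order")
    return problems


if __name__ == "__main__":
    # Placeholder links; real entries would point at the actual resources.
    entries = [
        "- [alpaca-lora](https://example.com/alpaca-lora) - Instruct-tune LLaMA on consumer hardware.",
        "- [trlx](https://example.com/trlx) - Distributed training of language models with RLHF.",
    ]
    print(check_category(entries) or "no problems found")
```

An empty list from `check_category` means every line matched the format, no name was repeated, and the names were already sorted; it does not check that a link is reachable or that the resource is relevant to LLMs.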

We appreciate your contributions and thank you for helping to make the Awesome LLM list even more awesome!


Alternative AI tools for awesome-llm

Similar Open Source Tools

awesome-llm

Awesome LLM is a curated list of resources related to Large Language Models (LLMs), including models, projects, datasets, benchmarks, materials, papers, posts, GitHub repositories, HuggingFace repositories, and reading materials. It provides detailed information on various LLMs, their parameter sizes, announcement dates, and contributors. The repository covers a wide range of LLM-related topics and serves as a valuable resource for researchers, developers, and enthusiasts interested in the field of natural language processing and artificial intelligence.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can: * **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse. * **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse. * **Test:** Track and test app behaviour before deploying a new version, test expected in and output pairs and benchmark performance before deploying, and track versions and releases in your application. Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

awesome-mobile-llm

Awesome Mobile LLMs is a curated list of Large Language Models (LLMs) and related studies focused on mobile and embedded hardware. The repository includes information on various LLM models, deployment frameworks, benchmarking efforts, applications, multimodal LLMs, surveys on efficient LLMs, training LLMs on device, mobile-related use-cases, industry announcements, and related repositories. It aims to be a valuable resource for researchers, engineers, and practitioners interested in mobile LLMs.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

Korean-SAT-LLM-Leaderboard

The Korean SAT LLM Leaderboard is a benchmarking project that allows users to test their fine-tuned Korean language models on a 10-year dataset of the Korean College Scholastic Ability Test (CSAT). The project provides a platform to compare human academic ability with the performance of large language models (LLMs) on various question types to assess reading comprehension, critical thinking, and sentence interpretation skills. It aims to share benchmark data, utilize a reliable evaluation dataset curated by the Korea Institute for Curriculum and Evaluation, provide annual updates to prevent data leakage, and promote open-source LLM advancement for achieving top-tier performance on the Korean CSAT.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

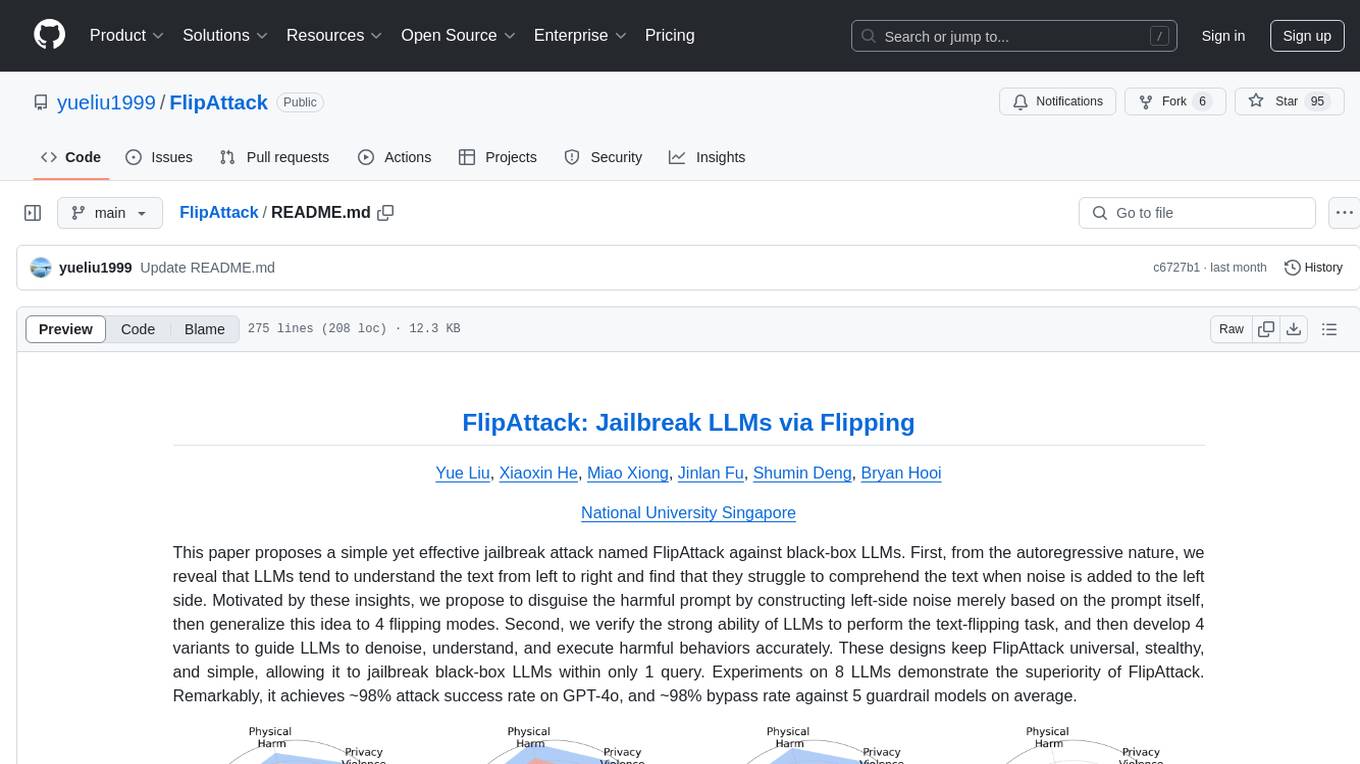

FlipAttack

FlipAttack is a jailbreak attack tool designed to exploit black-box Language Model Models (LLMs) by manipulating text inputs. It leverages insights into LLMs' autoregressive nature to construct noise on the left side of the input text, deceiving the model and enabling harmful behaviors. The tool offers four flipping modes to guide LLMs in denoising and executing malicious prompts effectively. FlipAttack is characterized by its universality, stealthiness, and simplicity, allowing users to compromise black-box LLMs with just one query. Experimental results demonstrate its high success rates against various LLMs, including GPT-4o and guardrail models.

visionOS-examples

visionOS-examples is a repository containing accelerators for Spatial Computing. It includes examples such as Local Large Language Model, Chat Apple Vision Pro, WebSockets, Anchor To Head, Hand Tracking, Battery Life, Countdown, Plane Detection, Timer Vision, and PencilKit for visionOS. The repository showcases various functionalities and features for Apple Vision Pro, offering tools for developers to enhance their visionOS apps with capabilities like hand tracking, plane detection, and real-time cryptocurrency prices.

vlmrun-cookbook

VLM Run Cookbook is a repository containing practical examples and tutorials for extracting structured data from images, videos, and documents using Vision Language Models (VLMs). It offers comprehensive Colab notebooks demonstrating real-world applications of VLM Run, with complete code and documentation for easy adaptation. The examples cover various domains such as financial documents and TV news analysis.

oumi

Oumi is an open-source platform for building state-of-the-art foundation models, offering tools for data preparation, training, evaluation, and deployment. It supports training and fine-tuning models with various parameters, working with text and multimodal models, synthesizing and curating training data, deploying models efficiently, evaluating models comprehensively, and running on different platforms. Oumi provides a consistent API, reliability, and flexibility for research purposes.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

chat-your-doc

Chat Your Doc is an experimental project exploring various applications based on LLM technology. It goes beyond being just a chatbot project, focusing on researching LLM applications using tools like LangChain and LlamaIndex. The project delves into UX, computer vision, and offers a range of examples in the 'Lab Apps' section. It includes links to different apps, descriptions, launch commands, and demos, aiming to showcase the versatility and potential of LLM applications.

KwaiAgents

KwaiAgents is a series of Agent-related works open-sourced by the [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). The open-sourced content includes: 1. **KAgentSys-Lite**: a lite version of the KAgentSys in the paper. While retaining some of the original system's functionality, KAgentSys-Lite has certain differences and limitations when compared to its full-featured counterpart, such as: (1) a more limited set of tools; (2) a lack of memory mechanisms; (3) slightly reduced performance capabilities; and (4) a different codebase, as it evolves from open-source projects like BabyAGI and Auto-GPT. Despite these modifications, KAgentSys-Lite still delivers comparable performance among numerous open-source Agent systems available. 2. **KAgentLMs**: a series of large language models with agent capabilities such as planning, reflection, and tool-use, acquired through the Meta-agent tuning proposed in the paper. 3. **KAgentInstruct**: over 200k Agent-related instructions finetuning data (partially human-edited) proposed in the paper. 4. **KAgentBench**: over 3,000 human-edited, automated evaluation data for testing Agent capabilities, with evaluation dimensions including planning, tool-use, reflection, concluding, and profiling.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.