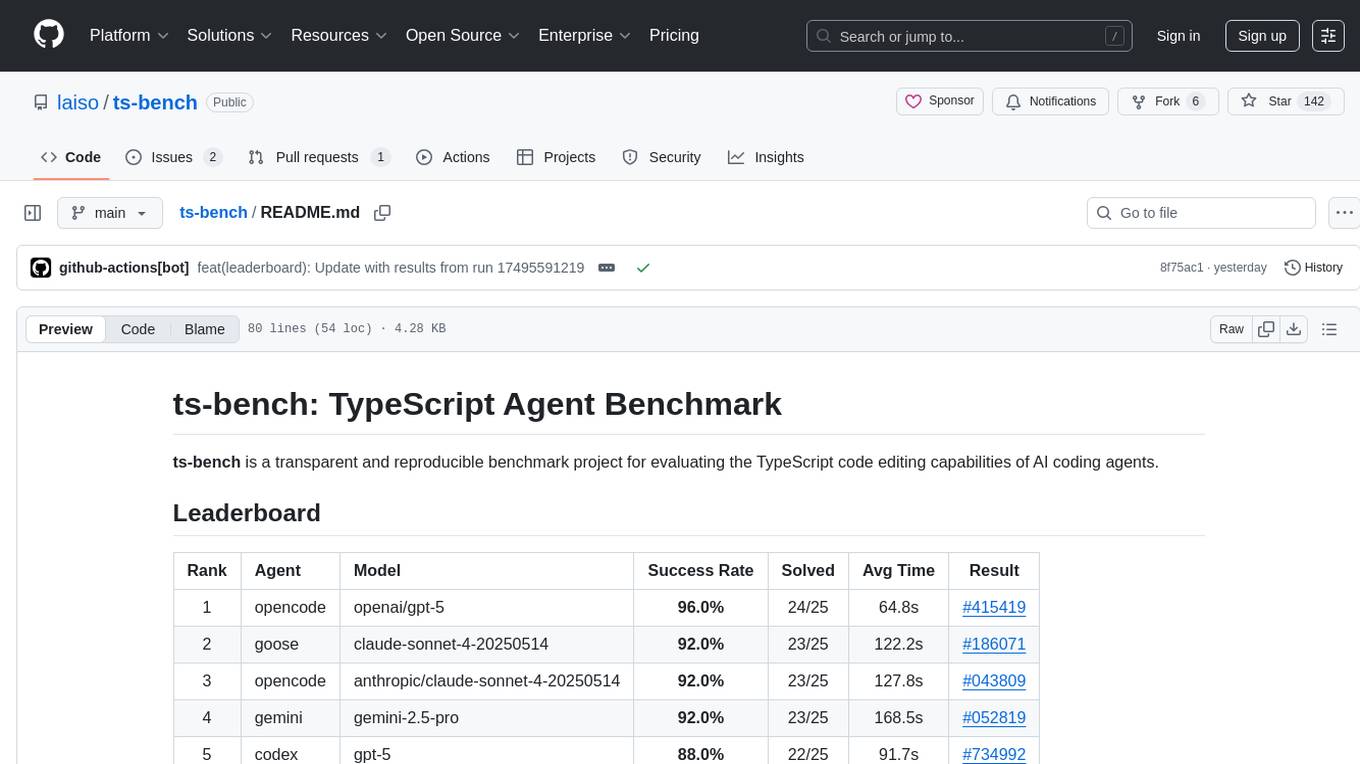

ts-bench

Measure and compare the performance of AI coding agents on TypeScript tasks.

Stars: 162

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

README:

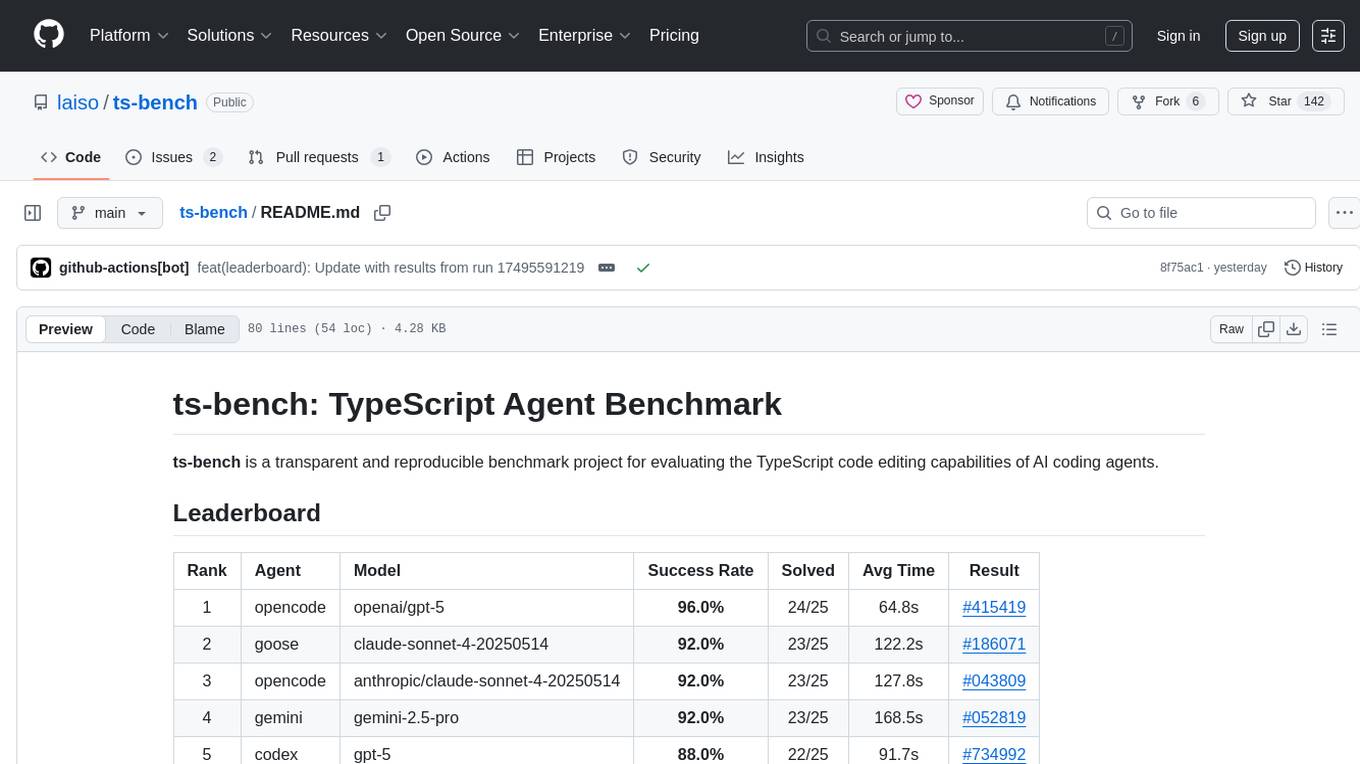

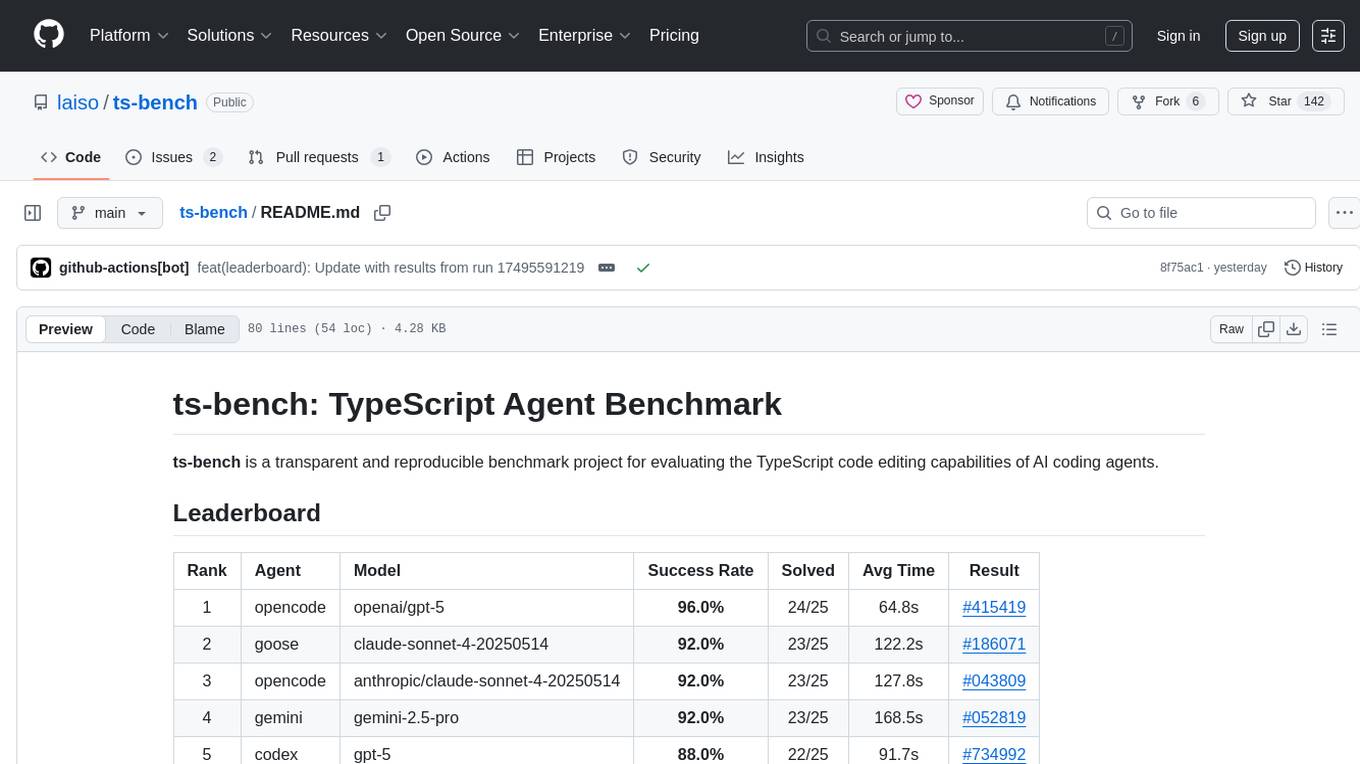

ts-bench is a transparent and reproducible benchmark project for evaluating the TypeScript code editing capabilities of AI coding agents.

| Rank | Agent | Model | Success Rate | Solved | Avg Time | Result |

|---|---|---|---|---|---|---|

| 1 | opencode | openai/gpt-5 | 96.0% | 24/25 | 64.8s | #415419 |

| 2 | codex | gpt-5-codex | 92.0% | 23/25 | 98.7s | #015544 |

| 3 | goose | claude-sonnet-4-20250514 | 92.0% | 23/25 | 122.2s | #186071 |

| 4 | opencode | anthropic/claude-sonnet-4-20250514 | 92.0% | 23/25 | 127.8s | #043809 |

| 5 | gemini | gemini-2.5-pro | 92.0% | 23/25 | 168.5s | #052819 |

| 6 | codex | gpt-5 | 88.0% | 22/25 | 91.7s | #734992 |

| 7 | opencode | opencode/grok-code | 88.0% | 22/25 | 97.0s | #083421 |

| 8 | claude | glm-4.5 | 80.0% | 20/25 | 172.3s | #591219 |

| 9 | claude | claude-sonnet-4-20250514 | 72.0% | 18/25 | 206.1s | #732069 |

| 10 | qwen | qwen3-coder-plus | 64.0% | 16/25 | 123.9s | #246268 |

Currently supported agents:

This project is strongly inspired by benchmarks like Aider Polyglot. Rather than measuring the performance of large language models (LLMs) alone, it focuses on evaluating the agent layer—the entire AI coding assistant tool, including prompt strategies, file operations, and iterative logic.

Based on this vision, the benchmark is designed according to the following principles:

- TypeScript-First: Focused on TypeScript, which is essential in modern development. Static typing presents unique challenges and opportunities for AI agents, making it a crucial evaluation target.

-

Agent-Agnostic: Designed to be independent of any specific AI agent, allowing fair comparison of multiple CLI-based agents such as

AiderandClaude Code. - Baseline Performance: Uses self-contained problem sets sourced from Exercism to serve as a baseline for measuring basic code reading and editing abilities. It is not intended to measure performance on large-scale editing tasks or complex bug fixes across entire repositories like SWE-bench.

All benchmark results are generated and published via GitHub Actions.

Each results page provides a formatted summary and downloadable artifacts containing raw data (JSON).

For detailed documentation, see:

- Environment Setup: Details on setting up the local and Docker environments.

- Leaderboard Operation Design: Explains how the leaderboard is updated and maintained.

bun installRun the benchmark with the following commands. Use --help to see all available options.

# Run the default 25 problems with Claude Code (Sonnet 3.5)

bun src/index.ts --agent claude --model claude-3-5-sonnet-20240620

# Run only the 'acronym' problem with Aider (GPT-4o)

bun src/index.ts --agent aider --model gpt-4o --exercise acronymFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ts-bench

Similar Open Source Tools

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

pai-opencode

PAI-OpenCode is a complete port of Daniel Miessler's Personal AI Infrastructure (PAI) to OpenCode, an open-source, provider-agnostic AI coding assistant. It brings modular capabilities, dynamic multi-agent orchestration, session history, and lifecycle automation to personalize AI assistants for users. With support for 75+ AI providers, PAI-OpenCode offers dynamic per-task model routing, full PAI infrastructure, real-time session sharing, and multiple client options. The tool optimizes cost and quality with a 3-tier model strategy and a 3-tier research system, allowing users to switch presets for different routing strategies. PAI-OpenCode's architecture preserves PAI's design while adapting to OpenCode, documented through Architecture Decision Records (ADRs).

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

learn-claude-code

Learn Claude Code is an educational project by shareAI Lab that aims to help users understand how modern AI agents work by building one from scratch. The repository provides original educational material on various topics such as the agent loop, tool design, explicit planning, context management, knowledge injection, task systems, parallel execution, team messaging, and autonomous teams. Users can follow a learning path through different versions of the project, each introducing new concepts and mechanisms. The repository also includes technical tutorials, articles, and example skills for users to explore and learn from. The project emphasizes the philosophy that the model is crucial in agent development, with code playing a supporting role.

UniCoT

Uni-CoT is a unified reasoning framework that extends Chain-of-Thought (CoT) principles to the multimodal domain, enabling Multimodal Large Language Models (MLLMs) to perform interpretable, step-by-step reasoning across both text and vision. It decomposes complex multimodal tasks into structured, manageable steps that can be executed sequentially or in parallel, allowing for more scalable and systematic reasoning.

BharatMLStack

BharatMLStack is a comprehensive, production-ready machine learning infrastructure platform designed to democratize ML capabilities across India and beyond. It provides a robust, scalable, and accessible ML stack empowering organizations to build, deploy, and manage machine learning solutions at massive scale. It includes core components like Horizon, Trufflebox UI, Online Feature Store, Go SDK, Python SDK, and Numerix, offering features such as control plane, ML management console, real-time features, mathematical compute engine, and more. The platform is production-ready, cloud agnostic, and offers observability through built-in monitoring and logging.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

qserve

QServe is a serving system designed for efficient and accurate Large Language Models (LLM) on GPUs with W4A8KV4 quantization. It achieves higher throughput compared to leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weight, 8-bit activation, and 4-bit KV cache, addressing runtime overhead challenges. QServe improves serving throughput for various LLM models by implementing compute-aware weight reordering, register-level parallelism, and fused attention memory-bound techniques.

awesome-slash

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

Athena-Public

Project Athena is a Linux OS designed for AI Agents, providing memory, persistence, scheduling, and governance for AI models. It offers a comprehensive memory layer that survives across sessions, models, and IDEs, allowing users to own their data and port it anywhere. The system is built bottom-up through 1,079+ sessions, focusing on depth and compounding knowledge. Athena features a trilateral feedback loop for cross-model validation, a Model Context Protocol server with 9 tools, and a robust security model with data residency options. The repository structure includes an SDK package, examples for quickstart, scripts, protocols, workflows, and deep documentation. Key concepts cover architecture, knowledge graph, semantic memory, and adaptive latency. Workflows include booting, reasoning modes, planning, research, and iteration. The project has seen significant content expansion, viral validation, and metrics improvements.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

HuatuoGPT-II

HuatuoGPT2 is an innovative domain-adapted medical large language model that excels in medical knowledge and dialogue proficiency. It showcases state-of-the-art performance in various medical benchmarks, surpassing GPT-4 in expert evaluations and fresh medical licensing exams. The open-source release includes HuatuoGPT2 models in 7B, 13B, and 34B versions, training code for one-stage adaptation, partial pre-training and fine-tuning instructions, and evaluation methods for medical response capabilities and professional pharmacist exams. The tool aims to enhance LLM capabilities in the Chinese medical field through open-source principles.

LlamaV-o1

LlamaV-o1 is a Large Multimodal Model designed for spontaneous reasoning tasks. It outperforms various existing models on multimodal reasoning benchmarks. The project includes a Step-by-Step Visual Reasoning Benchmark, a novel evaluation metric, and a combined Multi-Step Curriculum Learning and Beam Search Approach. The model achieves superior performance in complex multi-step visual reasoning tasks in terms of accuracy and efficiency.

sktime

sktime is a Python library for time series analysis that provides a unified interface for various time series learning tasks such as classification, regression, clustering, annotation, and forecasting. It offers time series algorithms and tools compatible with scikit-learn for building, tuning, and validating time series models. sktime aims to enhance the interoperability and usability of the time series analysis ecosystem by empowering users to apply algorithms across different tasks and providing interfaces to related libraries like scikit-learn, statsmodels, tsfresh, PyOD, and fbprophet.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

EasyEdit

EasyEdit is a Python package for edit Large Language Models (LLM) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

For similar tasks

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

brokk

Brokk is a code assistant tool named after the Norse god of the forge. It is designed to understand code semantically, enabling LLMs to work effectively on large codebases. Users can sign up at Brokk.ai, install jbang, and follow instructions to run Brokk. The tool uses Gradle with Scala support and requires JDK 21 or newer for building. Brokk aims to enhance code comprehension and productivity by providing semantic understanding of code.

awesome-claude-code

Awesome Claude Code is a curated list of slash-commands, CLAUDE.md files, CLI tools, and other resources for enhancing your Claude Code workflow. It includes a variety of agent skills, workflows, tooling, hooks, slash-commands, and more to help developers improve their coding experience using Claude Code, a CLI-based coding assistant from Anthropic. The list covers a wide range of topics such as AI development, project management, code analysis, documentation, CI/CD, and domain-specific projects. Whether you are a beginner or an experienced developer, this repository provides valuable resources to enhance your coding skills and workflow with Claude Code.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.