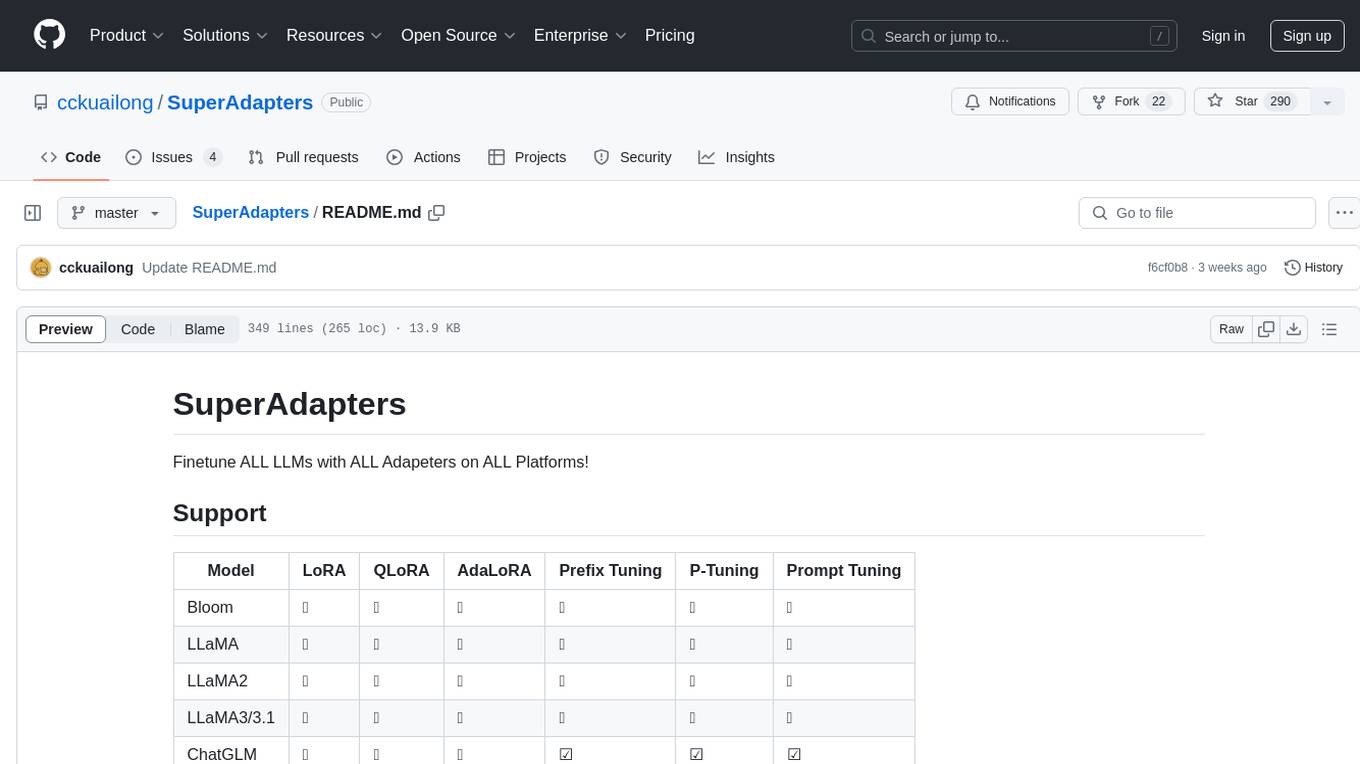

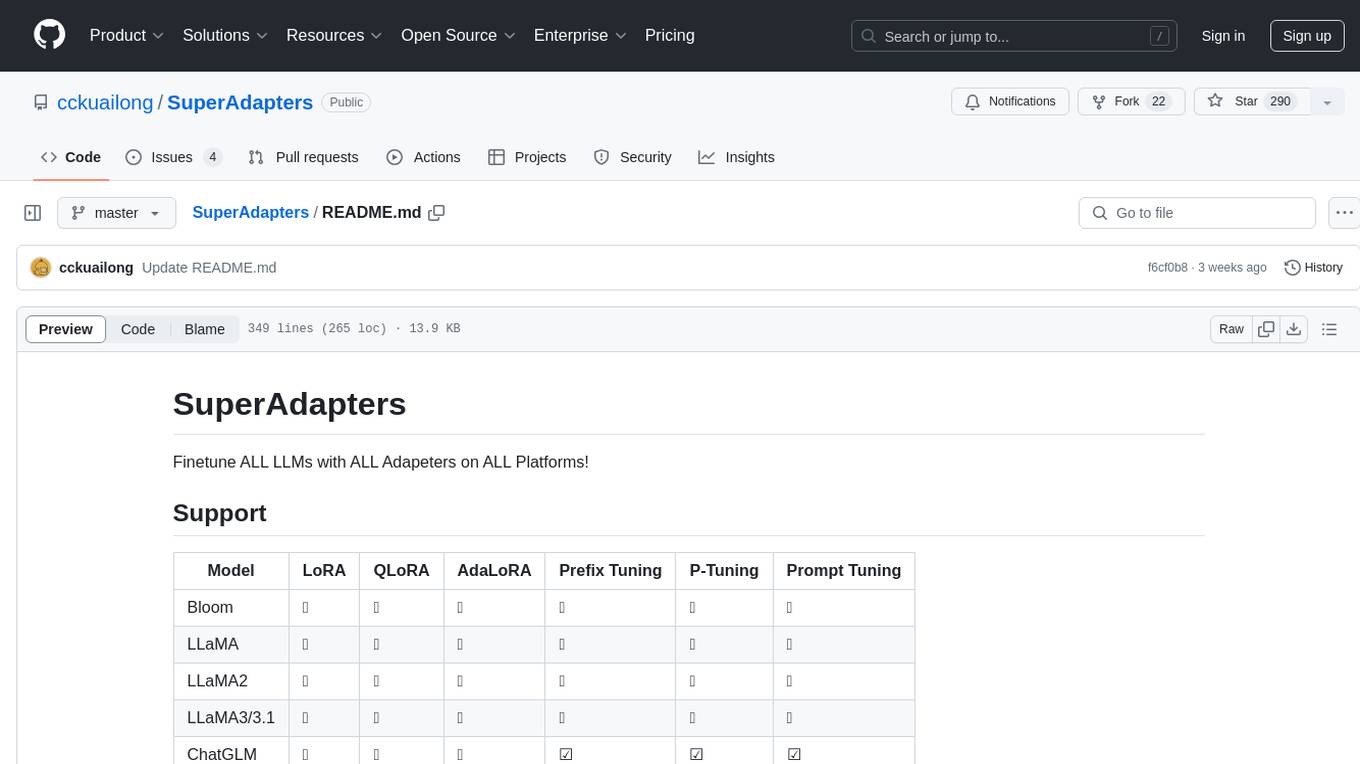

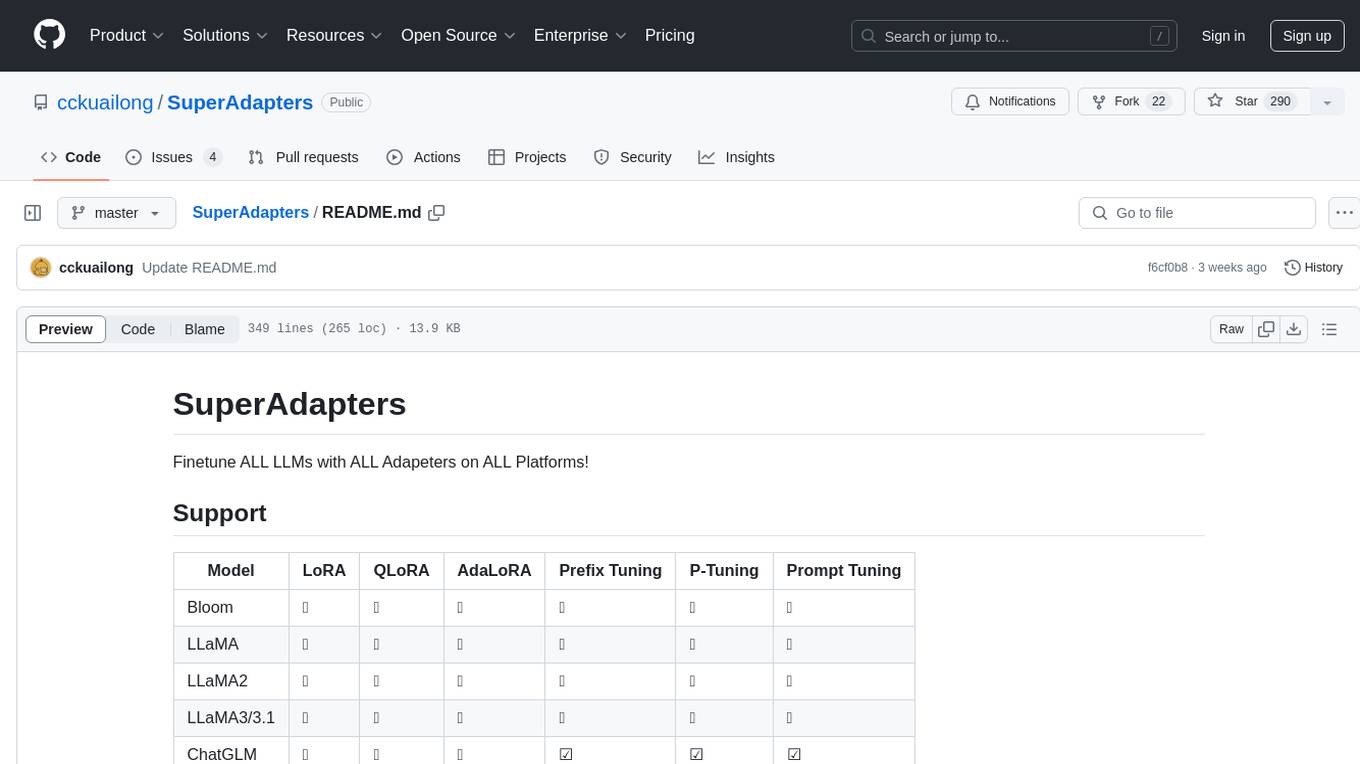

SuperAdapters

Finetune ALL LLMs with ALL Adapters on ALL Platforms!

Stars: 293

SuperAdapters is a tool designed to finetune Large Language Models (LLMs) with various adapters on different platforms. It supports models like Bloom, LLaMA, ChatGLM, Qwen, Baichuan, Mixtral, Phi, and more. Users can finetune LLMs on Windows, Linux, and Mac M1/2, handle train/test data with Terminal, File, or DataBase, and perform tasks like CausalLM and SequenceClassification. The tool provides detailed instructions on how to use different models with specific adapters for tasks like finetuning and inference. It also includes requirements for CentOS, Ubuntu, and MacOS, along with information on LLM downloads and data formats. Additionally, it offers parameters for finetuning and inference, as well as options for web and API-based inference.

README:

| Model | LoRA | QLoRA | AdaLoRA | Prefix Tuning | P-Tuning | Prompt Tuning |
|---|---|---|---|---|---|---|
| Bloom | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| LLaMA | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| LLaMA2 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| LLaMA3/3.1 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| ChatGLM | ✅ | ✅ | ✅ | ☑️ | ☑️ | ☑️ |
| ChatGLM2 | ✅ | ✅ | ✅ | ☑️ | ☑️ | ☑️ |
| Qwen | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Baichuan | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Mixtral | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Phi | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Phi3 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Gemma | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

You can finetune LLMs on:

- Windows

- Linux

- Mac M1/2

You can handle train/test data with:

- Terminal

- File

- DataBase

You can do various tasks:

- CausalLM (default)

- SequenceClassification

P.S. Unfortunately, SuperAdapters does not support QLoRA on Mac; please use LoRA or AdaLoRA instead.
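The Mac limitation above can be handled in a small wrapper before calling `finetune.py`. The following is a minimal sketch, not part of SuperAdapters: the helper name `resolve_adapter` is hypothetical, and falling back to `lora` (rather than `adalora`) is an assumption.

```python
import platform
from typing import Optional


def resolve_adapter(requested: str, system: Optional[str] = None) -> str:
    """Return an adapter name that can be used on the given OS.

    Hypothetical helper: it only encodes the README note that qlora
    is not supported on Mac.
    """
    # platform.system() reports "Darwin" on macOS.
    system = system or platform.system()
    if system == "Darwin" and requested == "qlora":
        return "lora"  # assumed fallback; adalora would also be valid
    return requested


if __name__ == "__main__":
    # Prints the adapter that would be passed to --adapter on this machine.
    print(resolve_adapter("qlora"))
```

The returned value can then be passed as the `--adapter` argument.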

CentOS:

yum install -y xz-devel

Ubuntu:

apt-get install -y liblzma-dev

MacOS:

brew install xz

P.S. You may need to recompile Python with xz support:

CPPFLAGS="-I$(brew --prefix xz)/include" pyenv install 3.10.0

If you want to use the GPU on Mac, please read "How to use GPU on Mac".

pip install --pre torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/nightly/cpu

pip install -r requirements.txt

python finetune.py --model_type chatglm --data "data/train/" --model_path "LLMs/chatglm/chatglm-6b/" --adapter "lora" --output_dir "output/chatglm"

python inference.py --model_type chatglm --instruction "Who are you?" --model_path "LLMs/chatglm/chatglm-6b/" --adapter_weights "output/chatglm" --max_new_tokens 32

python finetune.py --model_type llama --data "data/train/" --model_path "LLMs/open-llama/open-llama-3b/" --adapter "lora" --output_dir "output/llama"

python inference.py --model_type llama --instruction "Who are you?" --model_path "LLMs/open-llama/open-llama-3b" --adapter_weights "output/llama" --max_new_tokens 32

python finetune.py --model_type qwen --data "data/train/" --model_path "LLMs/Qwen/Qwen-7b-chat" --adapter "lora" --output_dir "output/Qwen"

python inference.py --model_type qwen --instruction "Who are you?" --model_path "LLMs/Qwen/Qwen-7b-chat" --adapter_weights "output/Qwen" --max_new_tokens 32

Other LLMs are used in the same way as above.

For classification, you need to specify `--task_type classify` and `--labels`:

python finetune.py --model_type llama --data "data/train/alpaca_tiny_classify.json" --model_path "LLMs/open-llama/open-llama-3b" --adapter "lora" --output_dir "output/llama" --task_type classify --labels '["0", "1"]' --disable_wandb

python inference.py --model_type llama --data "data/train/alpaca_tiny_classify.json" --model_path "LLMs/open-llama/open-llama-3b" --adapter_weights "output/llama" --task_type classify --labels '["0", "1"]' --disable_wandb

- To read data from a database, install MySQL and put the DB config into the system environment.

E.g.

export LLM_DB_HOST='127.0.0.1'

export LLM_DB_PORT=3306

export LLM_DB_USERNAME='YOURUSERNAME'

export LLM_DB_PASSWORD='YOURPASSWORD'

export LLM_DB_NAME='YOURDBNAME'
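The exported variables above can be read back in Python to check them before running a job. This is a minimal sketch only: `load_db_config` and the keys of the returned dict are hypothetical, and how SuperAdapters itself consumes these variables is not shown here.

```python
import os
from typing import Dict, Mapping, Union

# The five variable names come from the export lines above.
REQUIRED_VARS = [
    "LLM_DB_HOST",
    "LLM_DB_PORT",
    "LLM_DB_USERNAME",
    "LLM_DB_PASSWORD",
    "LLM_DB_NAME",
]


def load_db_config(env: Mapping[str, str] = os.environ) -> Dict[str, Union[str, int]]:
    """Collect the LLM_DB_* settings into one dict (hypothetical helper)."""
    missing = [name for name in REQUIRED_VARS if name not in env]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {
        "host": env["LLM_DB_HOST"],
        "port": int(env["LLM_DB_PORT"]),  # environment values are strings
        "user": env["LLM_DB_USERNAME"],
        "password": env["LLM_DB_PASSWORD"],
        "database": env["LLM_DB_NAME"],
    }
```

Failing early on a missing variable gives a clearer error than a failed connection later in the run.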

- create the necessary tables

source xxxx.sql

- db_iteration: [train/test] The record's set name.

- db_type: [test] Whether the record is "train" or "test".

- db_test_iteration: [test] The record's test set name.

- finetune (using chatglm as an example)

python finetune.py --model_type chatglm --fromdb --db_iteration xxxxxx --model_path "LLMs/chatglm/chatglm-6b/" --adapter "lora" --output_dir "output/chatglm" --disable_wandb

- eval

python inference.py --model_type chatglm --fromdb --db_iteration xxxxxx --db_type 'test' --db_test_iteration yyyyyyy --model_path "LLMs/chatglm/chatglm-6b/" --adapter_weights "output/chatglm" --max_new_tokens 6

usage: finetune.py [-h] [--data DATA] [--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,gemma}] [--task_type {seq2seq,classify}] [--labels LABELS] [--model_path MODEL_PATH]

[--output_dir OUTPUT_DIR] [--disable_wandb] [--adapter {lora,qlora,adalora,prompt,p_tuning,prefix}] [--lora_r LORA_R] [--lora_alpha LORA_ALPHA] [--lora_dropout LORA_DROPOUT]

[--lora_target_modules LORA_TARGET_MODULES [LORA_TARGET_MODULES ...]] [--adalora_init_r ADALORA_INIT_R] [--adalora_tinit ADALORA_TINIT] [--adalora_tfinal ADALORA_TFINAL]

[--adalora_delta_t ADALORA_DELTA_T] [--num_virtual_tokens NUM_VIRTUAL_TOKENS] [--mapping_hidden_dim MAPPING_HIDDEN_DIM] [--epochs EPOCHS] [--learning_rate LEARNING_RATE]

[--cutoff_len CUTOFF_LEN] [--val_set_size VAL_SET_SIZE] [--group_by_length] [--logging_steps LOGGING_STEPS] [--load_8bit] [--add_eos_token]

[--resume_from_checkpoint [RESUME_FROM_CHECKPOINT]] [--per_gpu_train_batch_size PER_GPU_TRAIN_BATCH_SIZE] [--gradient_accumulation_steps GRADIENT_ACCUMULATION_STEPS] [--fromdb]

[--db_iteration DB_ITERATION]

Finetune for all.

optional arguments:

-h, --help show this help message and exit

--data DATA the data used for instructing tuning

--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,gemma}

--task_type {seq2seq,classify}

--labels LABELS Labels to classify, only used when task_type is classify

--model_path MODEL_PATH

--output_dir OUTPUT_DIR

The DIR to save the model

--disable_wandb Disable report to wandb

--adapter {lora,qlora,adalora,prompt,p_tuning,prefix}

--lora_r LORA_R

--lora_alpha LORA_ALPHA

--lora_dropout LORA_DROPOUT

--lora_target_modules LORA_TARGET_MODULES [LORA_TARGET_MODULES ...]

the module to be injected, e.g. q_proj/v_proj/k_proj/o_proj for llama, query_key_value for bloom&GLM

--adalora_init_r ADALORA_INIT_R

--adalora_tinit ADALORA_TINIT

number of warmup steps for AdaLoRA wherein no pruning is performed

--adalora_tfinal ADALORA_TFINAL

fix the resulting budget distribution and fine-tune the model for tfinal steps when using AdaLoRA

--adalora_delta_t ADALORA_DELTA_T

interval of steps for AdaLoRA to update rank

--num_virtual_tokens NUM_VIRTUAL_TOKENS

--mapping_hidden_dim MAPPING_HIDDEN_DIM

--epochs EPOCHS

--learning_rate LEARNING_RATE

--cutoff_len CUTOFF_LEN

--val_set_size VAL_SET_SIZE

--group_by_length

--logging_steps LOGGING_STEPS

--load_8bit

--add_eos_token

--resume_from_checkpoint [RESUME_FROM_CHECKPOINT]

resume from the specified or the latest checkpoint, e.g. `--resume_from_checkpoint [path]` or `--resume_from_checkpoint`

--per_gpu_train_batch_size PER_GPU_TRAIN_BATCH_SIZE

Batch size per GPU/CPU for training.

--gradient_accumulation_steps GRADIENT_ACCUMULATION_STEPS

--fromdb

--db_iteration DB_ITERATION

The record's set name.

--db_item_num DB_ITEM_NUM

The Limit Num of train/test items selected from DB.

usage: inference.py [-h] [--debug] [--web] [--api] [--instruction INSTRUCTION] [--input INPUT] [--max_input MAX_INPUT] [--test_data_path TEST_DATA_PATH]

[--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,phi3,gemma}] [--task_type {seq2seq,classify}] [--labels LABELS] [--model_path MODEL_PATH]

[--adapter_weights ADAPTER_WEIGHTS] [--load_8bit] [--temperature TEMPERATURE] [--top_p TOP_P] [--top_k TOP_K] [--max_new_tokens MAX_NEW_TOKENS] [--vllm] [--fromdb] [--db_type DB_TYPE]

[--db_iteration DB_ITERATION] [--db_test_iteration DB_TEST_ITERATION] [--db_item_num DB_ITEM_NUM]

Inference for all.

optional arguments:

-h, --help show this help message and exit

--debug Debug Mode to output detail info

--web Web Demo to try the inference

--api API to try the inference

--instruction INSTRUCTION

--input INPUT

--max_input MAX_INPUT

Limit the input length to avoid OOM or other bugs

--test_data_path TEST_DATA_PATH

The DIR of test data

--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,phi3,gemma}

--task_type {seq2seq,classify}

--labels LABELS Labels to classify, only used when task_type is classify

--model_path MODEL_PATH

--adapter_weights ADAPTER_WEIGHTS

The DIR of adapter weights

--load_8bit

--temperature TEMPERATURE

temperature higher, LLM is more creative

--top_p TOP_P

--top_k TOP_K

--max_new_tokens MAX_NEW_TOKENS

--vllm Use vllm to accelerate inference.

--fromdb

--db_type DB_TYPE The record is whether 'train' or 'test'.

--db_iteration DB_ITERATION

The record's set name.

--db_test_iteration DB_TEST_ITERATION

The record's test set name.

--db_item_num DB_ITEM_NUM

The Limit Num of train/test items selected from DB.

Use vllm:

- Combine the Base Model and Adapter weight

python tool.py combine --model_type llama3 --model_path "LLMs/llama3.1/" --adapter_weights "output/llama3.1/" --output_dir "output/llama3.1-combined/"

- Install the dependencies and start vllm server, Help Link.

- use option vllm

python inference.py --model_type llama3 --instruction "Who are you?" --model_path "/root/SuperAdapters/output/llama3.1-combined" --vllm --max_new_tokens 32

usage: tool.py combine [-h] [--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,phi3,gemma}] [--model_path MODEL_PATH] [--adapter_weights ADAPTER_WEIGHTS]

[--output_dir OUTPUT_DIR] [--max_shard_size MAX_SHARD_SIZE]

optional arguments:

-h, --help show this help message and exit

--model_type {llama,llama2,llama3,chatglm,chatglm2,bloom,qwen,baichuan,mixtral,phi,phi3,gemma}

--model_path MODEL_PATH

--adapter_weights ADAPTER_WEIGHTS

The DIR of adapter weights

--output_dir OUTPUT_DIR

The DIR to save the model

--max_shard_size MAX_SHARD_SIZE

Max size of each of the combined model weight, like 1GB,5GB,etc.

python tool.py combine --model_type llama --model_path "LLMs/open-llama/open-llama-3b/" --adapter_weights "output/llama/" --output_dir "output/combine/"
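For LoRA-style adapters, combining adapter weights with a base model usually means folding the low-rank update into each adapted weight matrix: W' = W + (alpha / r) · B · A. How `tool.py combine` does this internally is not shown here. The sketch below only illustrates that arithmetic in plain Python; the function names and the tiny matrices are made up for the example.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (m x k) and b (k x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w: Matrix, lora_a: Matrix, lora_b: Matrix, alpha: float, r: int) -> Matrix:
    """Fold a LoRA update into a base weight: W + (alpha / r) * B @ A."""
    scale = alpha / r
    delta = matmul(lora_b, lora_a)  # (out x r) @ (r x in) -> (out x in)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Illustrative values only: a 2x2 base weight and a rank-1 adapter.
w = [[1.0, 0.0], [0.0, 1.0]]
lora_a = [[1.0, 2.0]]    # shape (r=1, in=2)
lora_b = [[0.5], [0.0]]  # shape (out=2, r=1)

merged = merge_lora(w, lora_a, lora_b, alpha=2.0, r=1)
print(merged)  # [[2.0, 2.0], [0.0, 1.0]]
```

Once the update is folded in, the combined weights load like an ordinary model, which is why the vllm steps above point `--model_path` at the combined directory without `--adapter_weights`.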

Add the "--web" parameter

python inference.py --model_type phi --model_path "LLMs/phi/phi-2" --web

Add the "--api" parameter

python inference.py --model_type phi --model_path "LLMs/phi/phi-2" --api

python web/label.py

python web/label.py --type chat


Similar Open Source Tools

AnyCrawl

AnyCrawl is a high-performance crawling and scraping toolkit designed for SERP crawling, web scraping, site crawling, and batch tasks. It offers multi-threading and multi-process capabilities for high performance. The tool also provides AI extraction for structured data extraction from pages, making it LLM-friendly and easy to integrate and use.

token.js

Token.js is a TypeScript SDK that integrates with over 200 LLMs from 10 providers using OpenAI's format. It allows users to call LLMs, supports tools, JSON outputs, image inputs, and streaming, all running on the client side without the need for a proxy server. The tool is free and open source under the MIT license.

llm.nvim

llm.nvim is a universal plugin for a large language model (LLM) designed to enable users to interact with LLM within neovim. Users can customize various LLMs such as gpt, glm, kimi, and local LLM. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, Github models, siliconflow, and others. Users can customize tools, chat with LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

PraisonAI

Praison AI is a low-code, centralised framework that simplifies the creation and orchestration of multi-agent systems for various LLM applications. It emphasizes ease of use, customization, and human-agent interaction. The tool leverages AutoGen and CrewAI frameworks to facilitate the development of AI-generated scripts and movie concepts. Users can easily create, run, test, and deploy agents for scriptwriting and movie concept development. Praison AI also provides options for full automatic mode and integration with OpenAI models for enhanced AI capabilities.

BetterOCR

BetterOCR is a tool that enhances text detection by combining multiple OCR engines with LLM (Language Model). It aims to improve OCR results, especially for languages with limited training data or noisy outputs. The tool combines results from EasyOCR, Tesseract, and Pororo engines, along with LLM support from OpenAI. Users can provide custom context for better accuracy, view performance examples by language, and upcoming features include box detection, improved interface, and async support. The package is under rapid development and contributions are welcomed.

shodh-memory

Shodh-Memory is a cognitive memory system designed for AI agents to persist memory across sessions, learn from experience, and run entirely offline. It features Hebbian learning, activation decay, and semantic consolidation, packed into a single ~17MB binary. Users can deploy it on cloud, edge devices, or air-gapped systems to enhance the memory capabilities of AI agents.

gollama

Gollama is a tool designed for managing Ollama models through a Text User Interface (TUI). Users can list, inspect, delete, copy, and push Ollama models, as well as link them to LM Studio. The application offers interactive model selection, sorting by various criteria, and actions using hotkeys. It provides features like sorting and filtering capabilities, displaying model metadata, model linking, copying, pushing, and more. Gollama aims to be user-friendly and useful for managing models, especially for cleaning up old models.

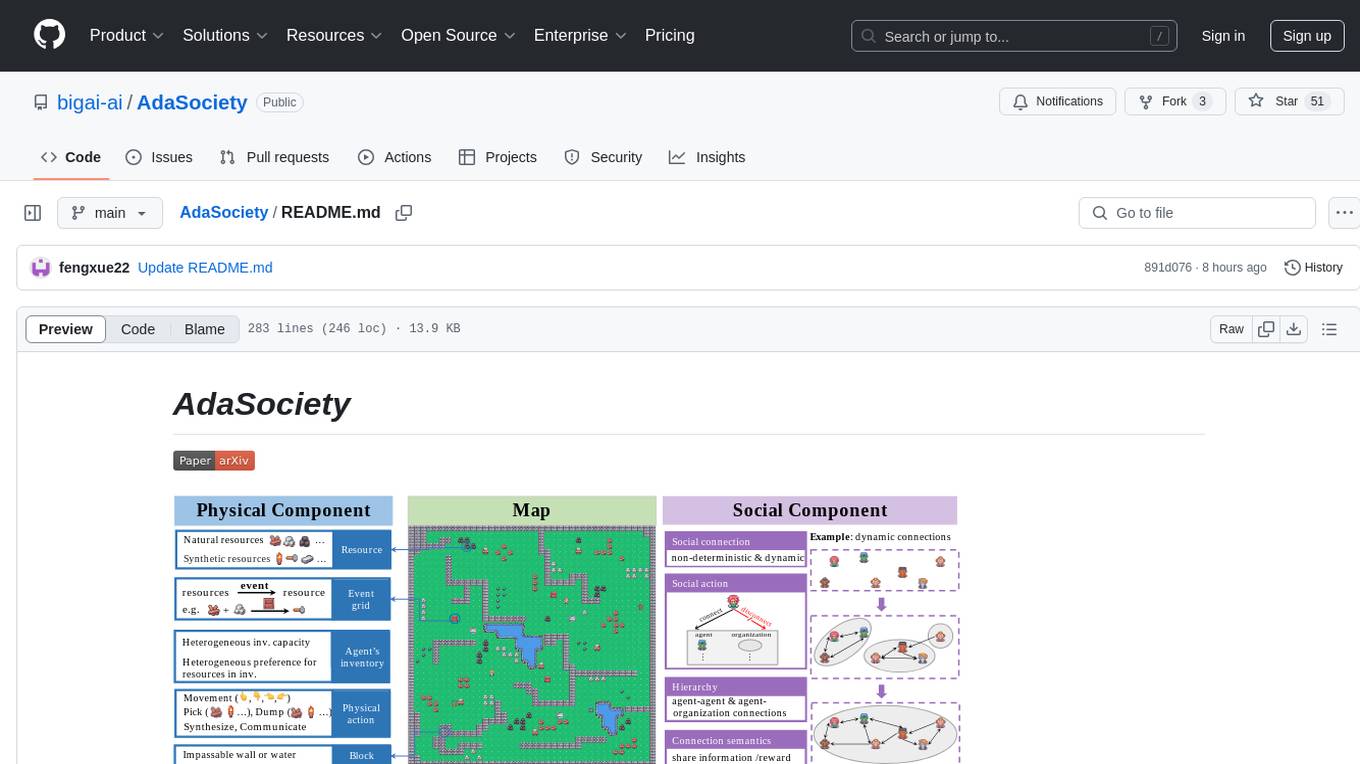

AdaSociety

AdaSociety is a multi-agent environment designed for simulating social structures and decision-making processes. It offers built-in resources, events, and player interactions. Users can customize the environment through JSON configuration or custom Python code. The environment supports training agents using RLlib and LLM frameworks. It provides a platform for studying multi-agent systems and social dynamics.

api-for-open-llm

This project provides a unified backend interface for open large language models (LLMs), offering a consistent experience with OpenAI's ChatGPT API. It supports various open-source LLMs, enabling developers to seamlessly integrate them into their applications. The interface features streaming responses, text embedding capabilities, and support for LangChain, a tool for developing LLM-based applications. By modifying environment variables, developers can easily use open-source models as alternatives to ChatGPT, providing a cost-effective and customizable solution for various use cases.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details.

Key Features:

- High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more.

- Tensor Parallelism: Utilizes tensor parallelism for efficient model execution.

- OpenAI-compatible API: An efficient Golang REST API server that is compatible with OpenAI.

- Huggingface models: Seamless integration with most popular HF models, supporting safetensors.

- Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models.

- Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

EAGLE

Eagle is a family of Vision-Centric High-Resolution Multimodal LLMs that enhance multimodal LLM perception using a mix of vision encoders and various input resolutions. The model features a channel-concatenation-based fusion for vision experts with different architectures and knowledge, supporting up to over 1K input resolution. It excels in resolution-sensitive tasks like optical character recognition and document understanding.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features:

1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters.

2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks.

3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced. All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

KwaiAgents

KwaiAgents is a series of Agent-related works open-sourced by the [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). The open-sourced content includes:

1. **KAgentSys-Lite**: a lite version of the KAgentSys in the paper. While retaining some of the original system's functionality, KAgentSys-Lite has certain differences and limitations when compared to its full-featured counterpart, such as: (1) a more limited set of tools; (2) a lack of memory mechanisms; (3) slightly reduced performance capabilities; and (4) a different codebase, as it evolves from open-source projects like BabyAGI and Auto-GPT. Despite these modifications, KAgentSys-Lite still delivers comparable performance among numerous open-source Agent systems available.

2. **KAgentLMs**: a series of large language models with agent capabilities such as planning, reflection, and tool-use, acquired through the Meta-agent tuning proposed in the paper.

3. **KAgentInstruct**: over 200k Agent-related instructions finetuning data (partially human-edited) proposed in the paper.

4. **KAgentBench**: over 3,000 human-edited, automated evaluation data for testing Agent capabilities, with evaluation dimensions including planning, tool-use, reflection, concluding, and profiling.

For similar tasks

superagentx

SuperAgentX is a lightweight open-source AI framework designed for multi-agent applications with Artificial General Intelligence (AGI) capabilities. It offers goal-oriented multi-agents with retry mechanisms, easy deployment through WebSocket, RESTful API, and IO console interfaces, streamlined architecture with no major dependencies, contextual memory using SQL + Vector databases, flexible LLM configuration supporting various Gen AI models, and extendable handlers for integration with diverse APIs and data sources. It aims to accelerate the development of AGI by providing a powerful platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.