seline

Seline is a local-first AI desktop application that brings together conversational AI, visual generation tools, vector search, and multi-channel connectivity in one place.

Stars: 133

Seline is a local-first AI desktop application that integrates conversational AI, visual generation tools, vector search, and multi-channel connectivity. It allows users to connect WhatsApp, Telegram, or Slack to create always-on bots with full context and background task delivery. The application supports multi-channel connectivity, deep research mode, local web browsing with Puppeteer, local knowledge and privacy features, visual and creative tools, automation and agents, developer experience enhancements, and more. Seline is actively developed with a focus on improving user experience and functionality.

README:

Seline is a local-first AI desktop application that brings together conversational AI, visual generation tools, vector search, and multi-channel connectivity in one place. Your data stays on your machine, conversations persist across sessions with long-running context, and you can route between any LLM provider without leaving the app. Connect WhatsApp, Telegram, or Slack to turn your agents into always-on bots that respond across channels with full context and background task delivery.

Seline is in active development. Things break and we fix them. It's a big application, our team uses it every day, and we are dedicated to improving it.

Known Issues: macOS DMG builds have a signing issue and might give an error after install. Wait two days or search for a workaround on the web. I will sign the builds with an Apple Developer ID in two days.

Multi-Channel Connectivity

- WhatsApp, Telegram, Slack — Turn agents into always-on bots. Messages route to assigned agents, responses flow back automatically. Scheduled task delivery to channels.

- MCP (Model Context Protocol) — Connect external AI services per-agent with dynamic path variables. Bundled Node.js for `npx`-based servers.

Intelligence & Research

- Deep Research Mode — 6-phase workflow (plan → search → analyze → draft → refine → finalize) with cited sources and full reports. Multi-model routing for research, chat, vision, and utility tasks running in parallel.

- Local web browsing with Puppeteer — Bundled headless Chromium scrapes pages locally (no external API needed), supports JavaScript-heavy sites, extracts markdown and metadata.

- Prompt enhancement — A utility model enriches your queries with context from synced folders before the main LLM sees them.

- Smart tool discovery — 40+ tools loaded on-demand via searchTools, saving ~70% of tokens per request.
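The on-demand loading behind smart tool discovery can be pictured with a small sketch. Only the name `searchTools` comes from the feature list above; the `ToolDescriptor` shape, the keyword matching, and the three sample tools are hypothetical and are not Seline's actual registry. The idea is that full tool schemas are attached to a request only when a query matches them, instead of sending every schema every time.

```typescript
// Hypothetical tool descriptor; Seline's real registry shape is not documented here.
interface ToolDescriptor {
  name: string;
  description: string;
  schema: Record<string, unknown>; // full input schema, potentially large
}

// Illustrative sample entries, not Seline's real tool list.
const registry: ToolDescriptor[] = [
  { name: "localGrep", description: "fast pattern search across synced folders", schema: { pattern: "string" } },
  { name: "vectorSearch", description: "hybrid semantic search over indexed documents", schema: { query: "string" } },
  { name: "generateImage", description: "create an image from a text prompt", schema: { prompt: "string" } },
];

// Stand-in implementation: return only the tools whose name or description
// mentions every term in the query.
function searchTools(query: string, tools: ToolDescriptor[] = registry): ToolDescriptor[] {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);
  if (terms.length === 0) return [];
  return tools.filter((tool) => {
    const haystack = `${tool.name} ${tool.description}`.toLowerCase();
    return terms.every((term) => haystack.includes(term));
  });
}

// Only the matching tool's schema would be added to the next request.
const matchedTools = searchTools("search folders");
```

With the sample registry, the query `"search folders"` matches only `localGrep`, so the other two schemas never enter the prompt.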

Local Knowledge & Privacy

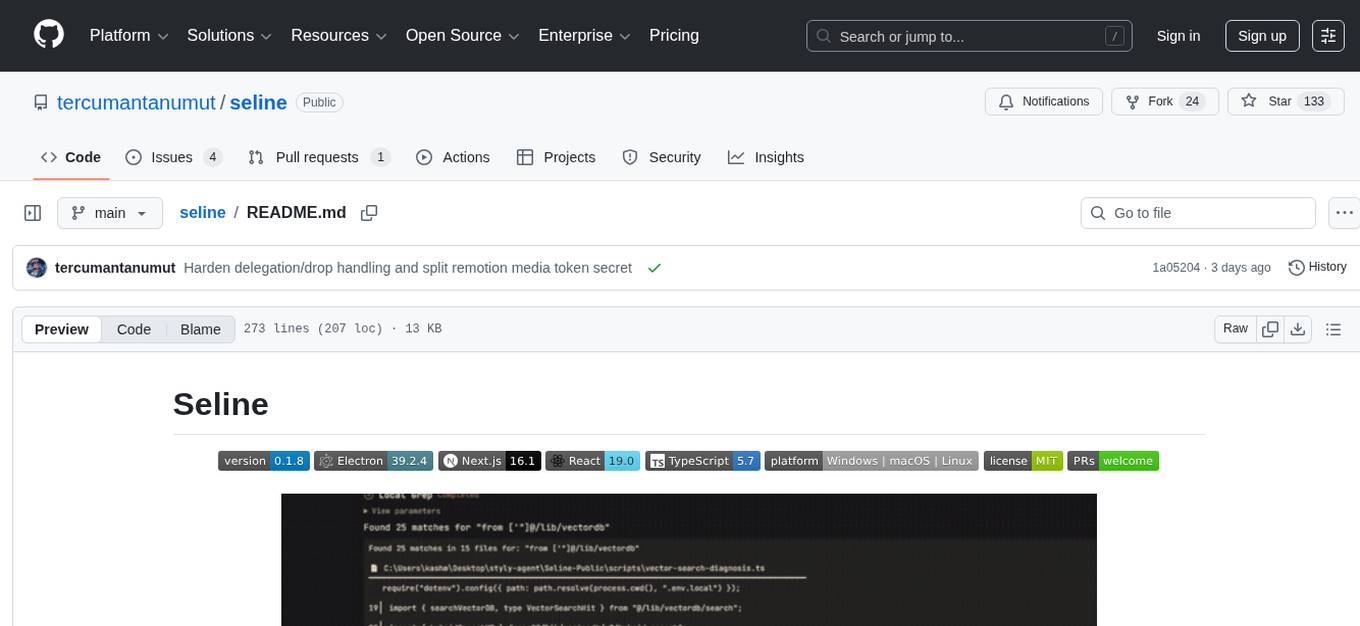

- Local or API Vector search with LanceDB — Hybrid dense + lexical retrieval, AI-powered result synthesis. Embedding provider can be local (on-device) or API-based.

- Document RAG — Attach files to agents, indexed and searchable instantly with configurable sync ignore patterns.

- Local grep (ripgrep) — Fast pattern search across synced folders.
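Hybrid dense + lexical retrieval needs some way to merge two ranked result lists into one. The sketch below uses reciprocal rank fusion (RRF), a common fusion method chosen here only for illustration; the feature list does not say which fusion method Seline uses. The document IDs and the constant `k = 60` are assumptions.

```typescript
// Reciprocal rank fusion: score(doc) = sum over rankings of 1 / (k + rank).
// Swapped-in technique for illustration, not necessarily what Seline does.
function reciprocalRankFusion(rankings: string[][], k = 60): string[] {
  const scores = new Map<string, number>();
  for (const ranking of rankings) {
    ranking.forEach((docId, index) => {
      const rank = index + 1; // ranks are 1-based
      scores.set(docId, (scores.get(docId) ?? 0) + 1 / (k + rank));
    });
  }
  // Highest fused score first.
  return Array.from(scores.entries())
    .sort((a, b) => b[1] - a[1])
    .map(([docId]) => docId);
}

const denseRanking = ["notes.md", "api.md", "readme.md"]; // hypothetical vector hits
const lexicalRanking = ["api.md", "changelog.md", "notes.md"]; // hypothetical keyword hits
const fused = reciprocalRankFusion([denseRanking, lexicalRanking]);
```

Documents that appear in both lists (`api.md`, `notes.md`) rise above documents found by only one retriever, which is the behavior hybrid search is after.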

Visual & Creative Tools

- Image generation — Flux.2, GPT-5, Gemini, Z-Image, FLUX.2 Klein 4B/9B (local), WAN 2.2. Reference-based editing, style transfer, virtual try-on.

- Video assembly — AI-driven scene planning, professional transitions (fade/crossfade/slide/wipe/zoom), Ken Burns effect, text overlays, session-wide asset compilation into cohesive videos via Remotion.

- Custom ComfyUI workflows — Import JSON, auto-detect inputs/outputs, real-time WebSocket progress.

Automation & Agents

- Task scheduler — Recurring cron jobs with presets (Daily Standup, Weekly Digest, Code Review). Pause, resume, trigger on demand. Live streaming output. Background task system with zombie run detection and channel delivery.

- Persistent memory — Agents remember preferences and workflows across sessions, categorized and user-controlled.

- Configurable agents — Persistent sessions, long-running context, active session indicators.

- Plan tool & UI — Models create and track multi-step task plans inline with collapsible status UI. Tool calls grouped into compact badge rows (handles 15+ concurrent calls cleanly).

Developer Experience

- Prompt caching — Claude API and OpenRouter cache tracking in observability dashboard. Explicit cache breakpoints with configurable TTL (5m/1h) for Claude direct API.

- Execute commands — Safely run commands within synced/indexed folders.

| Provider | Models | Prompt Caching |
|---|---|---|
| Anthropic | Claude (direct API) | Explicit cache breakpoints, configurable TTL (5m / 1h) |
| OpenRouter | Claude, Gemini, OpenAI, Grok, Moonshot, Groq, DeepSeek | Provider-side (automatic for supported models) |
| Kimi / Moonshot | Kimi K2.5 (256K ctx, vision, thinking) | Provider-side automatic |
| Antigravity | Gemini 3, Claude Sonnet 4.5, Claude Haiku 4.5 | Not supported |
| Codex | GPT-5, Codex | Not supported |
| Ollama | Local models | Not supported |
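For the Anthropic row, an explicit cache breakpoint is a `cache_control` marker on a content block in the request. The sketch below builds such a request body as a plain object. The field names follow Anthropic's Messages API as publicly documented (`cache_control` with `type: "ephemeral"` and an optional `ttl`); how Seline assembles its requests internally is not shown in this README, the `buildCachedRequest` helper is hypothetical, and `<model-id>` is a placeholder. Depending on the API version, the 1h TTL may need additional opt-in, so check the provider documentation.

```typescript
type CacheTtl = "5m" | "1h";

interface TextBlock {
  type: "text";
  text: string;
  cache_control?: { type: "ephemeral"; ttl?: CacheTtl };
}

// Hypothetical helper: mark the end of the system prompt as a cache breakpoint
// so the prefix up to that point can be reused across requests.
function buildCachedRequest(model: string, systemPrompt: string, userText: string, ttl: CacheTtl) {
  const system: TextBlock[] = [
    { type: "text", text: systemPrompt, cache_control: { type: "ephemeral", ttl } },
  ];
  return {
    model,
    max_tokens: 1024,
    system,
    messages: [{ role: "user" as const, content: userText }],
  };
}

const requestBody = buildCachedRequest("<model-id>", "You are a research agent.", "Hello", "1h");
```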

Seline ships with full MCP support. Servers are configured per-agent and auto-connect on startup.

- `${SYNCED_FOLDER}` — path of the primary synced folder for the current agent.
- `${SYNCED_FOLDERS}` — comma-separated list of all synced folders.
- `${SYNCED_FOLDERS_ARRAY}` — expands to one argument per folder (useful for the `filesystem` server).

Node.js is bundled inside the app on macOS and Windows, so MCP servers that need npx or node work out of the box without a system Node.js installation.
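To make the semantics of the three path variables concrete, here is a minimal sketch of how such an expansion could work on an MCP server's argument list. This is an illustration of the behavior described above, not Seline's implementation; the function name, the folder paths, and the use of the `@modelcontextprotocol/server-filesystem` package as "the filesystem server" are assumptions.

```typescript
// Expand the documented path variables in an MCP server's argument list.
// Illustrative only; Seline's own expansion code may differ.
function expandSyncedFolderArgs(args: string[], syncedFolders: string[]): string[] {
  const primary = syncedFolders[0] ?? "";
  const expanded: string[] = [];
  for (const arg of args) {
    if (arg === "${SYNCED_FOLDERS_ARRAY}") {
      // Becomes one separate argument per synced folder.
      expanded.push(...syncedFolders);
      continue;
    }
    expanded.push(
      arg
        .split("${SYNCED_FOLDERS}").join(syncedFolders.join(","))
        .split("${SYNCED_FOLDER}").join(primary),
    );
  }
  return expanded;
}

// Hypothetical per-agent configuration for a filesystem server started via npx.
const folders = ["/home/me/notes", "/home/me/code"];
const serverArgs = expandSyncedFolderArgs(
  ["-y", "@modelcontextprotocol/server-filesystem", "${SYNCED_FOLDERS_ARRAY}"],
  folders,
);
```

Here `serverArgs` ends with the two folder paths as separate arguments, which is the form a server that takes one directory per argument expects.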

Turn your agents into always-on bots by connecting WhatsApp, Telegram, or Slack. Each agent can have its own channel connections—inbound messages route to the assigned agent with full context, and responses flow back through the same channel automatically.

WhatsApp (via Baileys)

- QR code pairing — scan with your WhatsApp mobile app

- Persistent auth across restarts

- Text messages and image attachments (send/receive)

- Self-chat mode for testing

- Auto-reconnection on connection drops

Telegram (via Grammy)

- Bot token authentication (create via @BotFather)

- Message threads/topics support

- Automatic message chunking for long responses (3800 char limit)

- Text and image support

- Handles polling conflicts (multiple instances)
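The automatic chunking for long Telegram responses can be sketched as follows. Telegram's Bot API caps a text message at 4096 characters, so a 3800-character limit leaves headroom. The function below is a minimal illustration that assumes splitting at whitespace is acceptable; it is not Seline's actual chunker and ignores concerns such as Markdown entities spanning a boundary.

```typescript
// Split text into chunks of at most `limit` characters, preferring to break
// at a newline or space so words stay intact.
function chunkMessage(text: string, limit = 3800): string[] {
  const chunks: string[] = [];
  let rest = text;
  while (rest.length > limit) {
    const head = rest.slice(0, limit);
    const breakAt = Math.max(head.lastIndexOf("\n"), head.lastIndexOf(" "));
    // Fall back to a hard cut when the window contains no usable whitespace.
    const cut = breakAt > 0 ? breakAt : limit;
    chunks.push(rest.slice(0, cut));
    // Drop the whitespace we broke on so the next chunk starts cleanly.
    rest = rest.slice(cut).replace(/^\s+/, "");
  }
  if (rest.length > 0) chunks.push(rest);
  return chunks;
}

const parts = chunkMessage("word ".repeat(2000));
```

Each element of `parts` would then be sent as its own message, in order.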

Slack (via Bolt SDK)

- Socket mode (no public webhook needed)

- Requires: bot token, app token, signing secret

- Channels, DMs, and threaded messages

- File uploads with captions

- Auto-resolves channel/user names

- Unified routing — Messages route to the agent assigned to each connection

- Background task delivery — Scheduled task results can be sent to channels automatically with formatted summaries (task name, status, duration, errors, session links)

- Full context — Agents see message history, attachments, and thread context

- Status tracking — Connection status (disconnected/connecting/connected/error) shown in UI

- Auto-bootstrap — All connections auto-reconnect on app startup

- macOS — DMG installer available.

- Windows — NSIS installer and portable builds available.

- Linux — not tested.

For end users: none beyond the OS installer.

For developers:

- Node.js 20+ (22 recommended for Electron 39 native module rebuilds)

- npm 9+

- Windows 10/11 or macOS 12+

    npm install
    npm run electron:dev

This runs the Next.js dev server (with stdio fix) and launches Electron against http://localhost:3000.

Set these in .env (and in CI/test environments that load server modules):

- `INTERNAL_API_SECRET` - internal API auth secret used by scheduler/delegation/internal routes.
- `REMOTION_MEDIA_TOKEN` - token appended to Remotion media URLs during video assembly.

Use different random values for each secret.
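One way to produce two independent random values is Node's built-in `crypto` module. This is a minimal sketch; the 32-byte length and hex encoding are assumptions, since the README does not state a required format for either secret.

```typescript
import { randomBytes } from "node:crypto";

// Generate an independent random value, hex-encoded, for each secret.
// 32 bytes (64 hex characters) is an assumed length, not a documented requirement.
function generateSecret(bytes = 32): string {
  return randomBytes(bytes).toString("hex");
}

const envLines = [
  `INTERNAL_API_SECRET=${generateSecret()}`,
  `REMOTION_MEDIA_TOKEN=${generateSecret()}`,
];

// Print lines that can be pasted into .env.
console.log(envLines.join("\n"));
```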

    # Windows installer + portable
    npm run electron:dist:win

    # macOS (DMG + dir)
    npm run electron:dist:mac

For local packaging without creating installers, use `npm run electron:pack`. See `docs/BUILD.md` for the full pipeline.

If you prefer to download models manually (or have slow/no internet during Docker build), place them in the paths below. Models are mounted via Docker volumes at runtime.

Base path: comfyui_backend/ComfyUI/models/

| Model | Path | Download |
|---|---|---|
| Checkpoint | checkpoints/z-image-turbo-fp8-aio.safetensors | HuggingFace |
| LoRA | loras/z-image-detailer.safetensors | HuggingFace |

Base path: comfyui_backend/flux2-klein-4b/volumes/models/

| Model | Path | Download |
|---|---|---|
| VAE | vae/flux2-vae.safetensors | HuggingFace |
| CLIP | clip/qwen_3_4b.safetensors | HuggingFace |
| Diffusion Model | diffusion_models/flux-2-klein-base-4b-fp8.safetensors | HuggingFace |

Base path: comfyui_backend/flux2-klein-9b/volumes/models/

| Model | Path | Download |
|---|---|---|
| VAE | vae/flux2-vae.safetensors | HuggingFace |
| CLIP | clip/qwen_3_8b_fp8mixed.safetensors | HuggingFace |
| Diffusion Model | diffusion_models/flux-2-klein-base-9b-fp8.safetensors | HuggingFace |

    comfyui_backend/
    ├── ComfyUI/models/                      # Z-Image models
    │   ├── checkpoints/
    │   │   └── z-image-turbo-fp8-aio.safetensors
    │   └── loras/
    │       └── z-image-detailer.safetensors
    │
    ├── flux2-klein-4b/volumes/models/       # FLUX.2 Klein 4B models
    │   ├── vae/
    │   │   └── flux2-vae.safetensors
    │   ├── clip/
    │   │   └── qwen_3_4b.safetensors
    │   └── diffusion_models/
    │       └── flux-2-klein-base-4b-fp8.safetensors
    │
    └── flux2-klein-9b/volumes/models/       # FLUX.2 Klein 9B models
        ├── vae/
        │   └── flux2-vae.safetensors
        ├── clip/
        │   └── qwen_3_8b_fp8mixed.safetensors
        └── diffusion_models/
            └── flux-2-klein-base-9b-fp8.safetensors

Note: The VAE (`flux2-vae.safetensors`) is the same for both Klein 4B and 9B. You can download it once and copy it to both locations.

The Z-Image Turbo FP8 workflow uses a LoRA for detail enhancement. You can swap it with any compatible LoRA.

Place your LoRA file in:

comfyui_backend/ComfyUI/models/loras/your-lora-name.safetensors

Edit `comfyui_backend/workflow_to_replace_z_image_fp8.json` and find node 41 (`LoraLoader`):

    "41": {
      "inputs": {
        "lora_name": "z-image-detailer.safetensors",  // ← Change this
        "strength_model": 0.5,
        "strength_clip": 1,
        ...
      },
      "class_type": "LoraLoader"
    }

Change `lora_name` to your LoRA filename.
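If you would rather script the edit than change the file by hand, the update to node 41 can be expressed as a small function over the parsed workflow. This is a sketch under assumptions: the `WorkflowNode` type is a simplified view of the workflow format, `setLora` is a hypothetical helper, and the sample object below contains only the fields shown above rather than the full workflow.

```typescript
// Simplified view of a workflow file: node IDs mapped to nodes.
interface WorkflowNode {
  inputs: Record<string, unknown>;
  class_type: string;
}
type Workflow = Record<string, WorkflowNode>;

// Return a copy of the workflow whose LoraLoader node points at a new file.
function setLora(workflow: Workflow, loraName: string, nodeId = "41"): Workflow {
  const node = workflow[nodeId];
  if (!node || node.class_type !== "LoraLoader") {
    throw new Error(`Node ${nodeId} is not a LoraLoader`);
  }
  return {
    ...workflow,
    [nodeId]: { ...node, inputs: { ...node.inputs, lora_name: loraName } },
  };
}

// Sample containing only the fields shown in the README excerpt.
const sampleWorkflow: Workflow = {
  "41": {
    inputs: { lora_name: "z-image-detailer.safetensors", strength_model: 0.5, strength_clip: 1 },
    class_type: "LoraLoader",
  },
};
const updatedWorkflow = setLora(sampleWorkflow, "your-lora-name.safetensors");
```

In practice the workflow would be read with `JSON.parse` and written back with `JSON.stringify`; the original object is left untouched so a failed write cannot corrupt it.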

The workflow JSON is mounted as a volume, so just restart:

    cd comfyui_backend
    docker-compose restart comfyui workflow-api

- Native module errors (`better-sqlite3`, `onnxruntime-node`): run `npm run electron:rebuild-native` before building.
- Black screen in packaged app: verify `.next/standalone` and `extraResources` are correct; see `docs/BUILD.md`.
- Missing provider keys: ensure `ANTHROPIC_API_KEY`, `OPENROUTER_API_KEY`, or `KIMI_API_KEY` is configured in settings or `.env`.
- Embeddings mismatch errors: reindex Vector Search from Settings or run `POST /api/vector-sync` with `action: "reindex-all"`.
- MCP servers not starting: Node.js is bundled in the app; if you still see ENOENT errors, check that the app was installed from the latest DMG/installer (not copied manually).
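The reindex call mentioned for embeddings mismatch errors could be issued from a script along these lines. The endpoint path and the `action` value come from the list above; the base URL (the dev server address), the JSON body encoding, and the absence of any auth header are assumptions, and a packaged app may listen elsewhere. The helper name is hypothetical.

```typescript
// Build the request for a full vector reindex.
// Assumes a JSON body and the dev server address; adjust for your setup.
function buildReindexRequest(baseUrl = "http://localhost:3000") {
  return {
    url: `${baseUrl}/api/vector-sync`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ action: "reindex-all" }),
    },
  };
}

const reindex = buildReindexRequest();
// With the app running, send it from an async context:
//   const response = await fetch(reindex.url, reindex.init);
```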

- `docs/ARCHITECTURE.md` - system layout and core flows
- `docs/AI_PIPELINES.md` - LLM, embeddings, and tool pipelines
- `docs/DEVELOPMENT.md` - dev setup, scripts, tests, and build process
- `docs/API.md` - internal modules and API endpoints

Seline is built using amazing open-source libraries. See THANKS.md for the full list of credits and acknowledgments.


Alternative AI tools for seline

Similar Open Source Tools


airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

screenpipe

Screenpipe is an open source application that turns your computer into a personal AI, capturing screen and audio to create a searchable memory of your activities. It allows you to remember everything, search with AI, and keep your data 100% local. The tool is designed for knowledge workers, developers, researchers, people with ADHD, remote workers, and anyone looking for a private, local-first alternative to cloud-based AI memory tools.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

explain-openclaw

Explain OpenClaw is a comprehensive documentation repository for the OpenClaw framework, a self-hosted AI assistant platform. It covers various aspects such as plain English explanations, technical architecture, deployment scenarios, privacy and safety measures, security audits, worst-case security scenarios, optimizations, and AI model comparisons. The repository serves as a living knowledge base with beginner-friendly explanations and detailed technical insights for contributors.

indexify

Indexify is an open-source engine for building fast data pipelines for unstructured data (video, audio, images, and documents) using reusable extractors for embedding, transformation, and feature extraction. LLM Applications can query transformed content friendly to LLMs by semantic search and SQL queries. Indexify keeps vector databases and structured databases (PostgreSQL) updated by automatically invoking the pipelines as new data is ingested into the system from external data sources.

**Why use Indexify**

- Makes Unstructured Data **Queryable** with **SQL** and **Semantic Search**
- **Real-Time** Extraction Engine to keep indexes **automatically** updated as new data is ingested.
- Create **Extraction Graph** to describe **data transformation** and extraction of **embedding** and **structured extraction**.
- **Incremental Extraction** and **Selective Deletion** when content is deleted or updated.
- **Extractor SDK** allows adding new extraction capabilities, and many readily available extractors for **PDF**, **Image**, and **Video** indexing and extraction.
- Works with **any LLM Framework** including **Langchain**, **DSPy**, etc.
- Runs on your laptop during **prototyping** and also scales to **1000s of machines** on the cloud.
- Works with many **Blob Stores**, **Vector Stores**, and **Structured Databases**
- We have even **Open Sourced Automation** to deploy to Kubernetes in production.

stenoai

StenoAI is an AI-powered meeting intelligence tool that allows users to record, transcribe, summarize, and query meetings using local AI models. It prioritizes privacy by processing data entirely on the user's device. The tool offers multiple AI models optimized for different use cases, making it ideal for healthcare, legal, and finance professionals with confidential data needs. StenoAI also features a macOS desktop app with a user-friendly interface, making it convenient for users to access its functionalities. The project is open-source and not affiliated with any specific company, emphasizing its focus on meeting-notes productivity and community collaboration.

burp-ai-agent

Burp AI Agent is an extension for Burp Suite that integrates AI into your security workflow. It provides 7 AI backends, 53+ MCP tools, and 62 vulnerability classes. Users can configure privacy modes, perform audit logging, and connect external AI agents via MCP. The tool allows passive and active AI scanners to find vulnerabilities while users focus on manual testing. It requires Burp Suite, Java 21, and at least one AI backend configured.

claudian

Claudian is an Obsidian plugin that embeds Claude Code as an AI collaborator in your vault. It provides full agentic capabilities, including file read/write, search, bash commands, and multi-step workflows. Users can leverage Claude Code's power to interact with their vault, analyze images, edit text inline, add custom instructions, create reusable prompt templates, extend capabilities with skills and agents, connect external tools via Model Context Protocol servers, control models and thinking budget, toggle plan mode, ensure security with permission modes and vault confinement, and interact with Chrome. The plugin requires Claude Code CLI, Obsidian v1.8.9+, Claude subscription/API or custom model provider, and desktop platforms (macOS, Linux, Windows).

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece. Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.