openapi-to-skills

OpenAPI to Agent Skill for context-efficient AI agents

Stars: 90

Convert OpenAPI specifications into Agent Skills format - structured markdown documentation that minimizes context size for AI agents. Agent Skills solves the limitations of feeding raw OpenAPI specs to agents by structuring documentation for on-demand reading, enabling agents to load only what they need. It features semantic structure, smart grouping, filtering, and customizable templates. The tool provides options for output directory, skill name, include/exclude tags, paths, deprecated operations, grouping operations, custom templates directory, force overwrite, and quiet mode. The output structure includes SKILL.md, references, resources, operations, schemas, and authentication. The tool also offers a programmatic API for integration into build pipelines or custom tooling.

README:

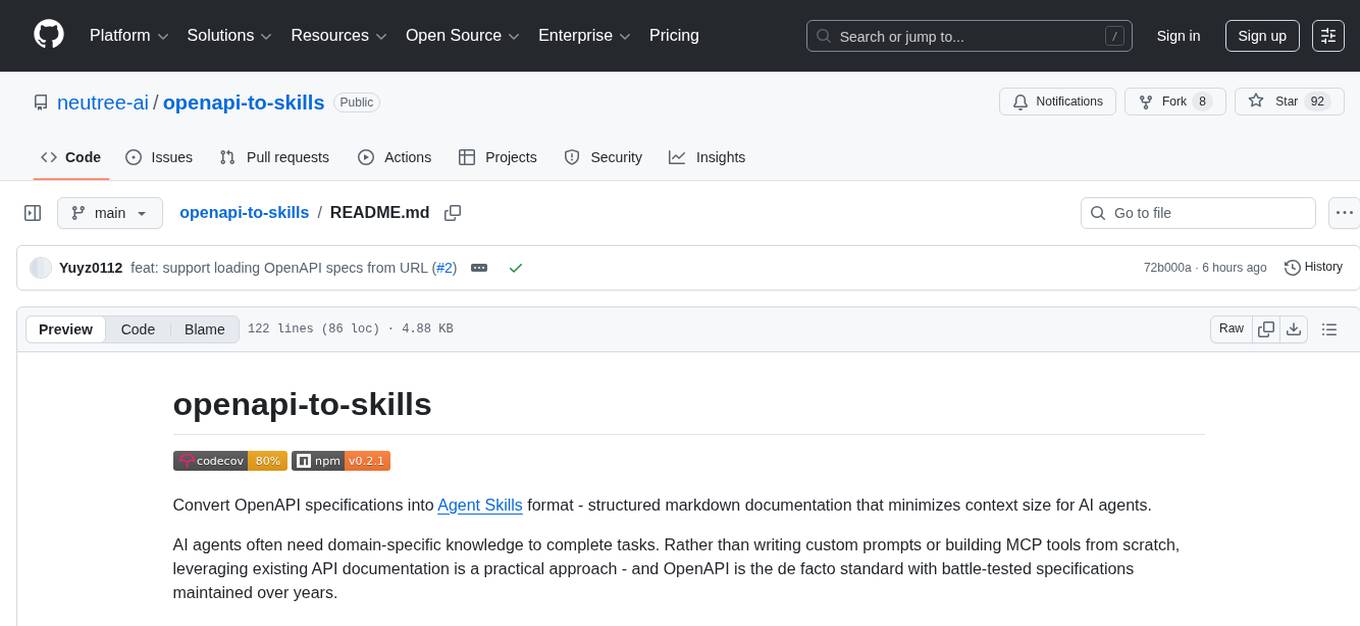

Convert OpenAPI specifications into Agent Skills format - structured markdown documentation that minimizes context size for AI agents.

AI agents often need domain-specific knowledge to complete tasks. Rather than writing custom prompts or building MCP tools from scratch, leveraging existing API documentation is a practical approach - and OpenAPI is the de facto standard with battle-tested specifications maintained over years.

However, feeding raw OpenAPI specs to agents has limitations. Complex specifications can exceed LLM context limits, and even when they fit, loading the entire document for every request wastes valuable context.

Agent Skills solves this by structuring documentation for on-demand reading. Agents load only what they need - starting with an overview, then drilling into specific operations or schemas. Since file reading is a universal capability across agent frameworks, this approach works everywhere without special integrations.

- Semantic structure - Output organized by resources, operations, and schemas, enabling agents to load only relevant sections

- Smart grouping - Operations grouped by tags or path prefix (auto-detected), schemas grouped by naming prefix

- Filtering - Include/exclude by tags, paths, or deprecated status

- Customizable templates - Override default Eta templates for custom output format
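The smart-grouping behaviour described in the list above can be pictured with a small sketch. This is not the package's implementation: the `Operation` shape, the `groupOperations` and `pathPrefix` helpers, and the rule used for `auto` (prefer tags only when every operation has one) are all assumptions made for illustration.

```typescript
// Hypothetical minimal operation shape; the real parser's types may differ.
interface Operation {
  path: string;
  method: string;
  tags?: string[];
}

type GroupBy = "tags" | "path" | "auto";

// First non-parameter path segment, e.g. "/users/{id}" -> "users".
function pathPrefix(path: string): string {
  const segment = path
    .split("/")
    .find((s) => s.length > 0 && !s.startsWith("{"));
  return segment ?? "root";
}

function groupOperations(
  ops: Operation[],
  mode: GroupBy = "auto",
): Map<string, Operation[]> {
  // Assumed heuristic for "auto": group by tags only if every operation is tagged.
  const useTags =
    mode === "tags" ||
    (mode === "auto" && ops.every((op) => (op.tags ?? []).length > 0));

  const groups = new Map<string, Operation[]>();
  for (const op of ops) {
    const tags = op.tags ?? [];
    const keys = useTags
      ? tags.length > 0
        ? tags
        : ["untagged"]
      : [pathPrefix(op.path)];
    for (const key of keys) {
      const bucket = groups.get(key) ?? [];
      bucket.push(op);
      groups.set(key, bucket);
    }
  }
  return groups;
}

const ops: Operation[] = [
  { path: "/users", method: "get", tags: ["users"] },
  { path: "/users/{id}", method: "get", tags: ["users"] },
  { path: "/orders", method: "post", tags: ["orders"] },
];
const byTag = groupOperations(ops); // every operation is tagged, so "auto" uses tags
const byPath = groupOperations(ops, "path");

// One untagged operation makes the assumed "auto" rule fall back to path prefixes.
const mixed: Operation[] = [
  { path: "/pets/{petId}", method: "get" },
  { path: "/store/inventory", method: "get", tags: ["store"] },
];
const fallback = groupOperations(mixed);
console.log(Array.from(fallback.keys()));
```

Grouping by the first path segment keeps related endpoints together even when a spec has no tags, which is presumably why a path-based fallback is offered at all.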

```bash
npx openapi-to-skills ./openapi.yaml -o ./output

# or
bunx openapi-to-skills ./openapi.yaml -o ./output

# or from URL
npx openapi-to-skills https://example.com/openapi.yaml -o ./output
```

| Option | Alias | Description |
|---|---|---|
| `--output` | `-o` | Output directory (default: `./output`) |
| `--name` | `-n` | Skill name (default: derived from API title) |
| `--include-tags` | | Only include specified tags (comma-separated) |
| `--exclude-tags` | | Exclude specified tags (comma-separated) |
| `--exclude-paths` | | Exclude paths matching prefixes (comma-separated) |
| `--exclude-deprecated` | | Exclude deprecated operations |
| `--group-by` | `-g` | How to group operations: `tags`, `path`, or `auto` (default: `auto`) |
| `--templates` | `-t` | Custom templates directory |
| `--force` | `-f` | Overwrite existing output directory |
| `--quiet` | `-q` | Suppress output except errors |
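To make the filtering options concrete, here is a minimal sketch of how include/exclude rules could combine into a single predicate. It is an illustration only: `includeTags` and `excludeDeprecated` appear in the programmatic example in this README, while `excludeTags`, `excludePaths`, the `keepOperation` helper, and the order in which the rules are applied are assumptions that simply mirror the CLI flags.

```typescript
// Hypothetical operation shape for illustration.
interface Operation {
  path: string;
  tags?: string[];
  deprecated?: boolean;
}

// Field names mirror the CLI flags; excludeTags and excludePaths are assumed names.
interface FilterOptions {
  includeTags?: string[];
  excludeTags?: string[];
  excludePaths?: string[];
  excludeDeprecated?: boolean;
}

function keepOperation(op: Operation, filter: FilterOptions): boolean {
  const tags = op.tags ?? [];
  // Exclusions are checked first (an assumed precedence).
  if (filter.excludeDeprecated && op.deprecated) return false;
  if (filter.excludePaths?.some((prefix) => op.path.startsWith(prefix))) return false;
  if (filter.excludeTags?.some((tag) => tags.includes(tag))) return false;
  // With an include list, keep only operations carrying at least one listed tag.
  if (filter.includeTags && filter.includeTags.length > 0) {
    return filter.includeTags.some((tag) => tags.includes(tag));
  }
  return true;
}

const candidates: Operation[] = [
  { path: "/users", tags: ["users"] },
  { path: "/users/legacy", tags: ["users"], deprecated: true },
  { path: "/orders", tags: ["orders"] },
  { path: "/internal/health", tags: ["users"] },
];

const kept = candidates.filter((op) =>
  keepOperation(op, {
    includeTags: ["users"],
    excludePaths: ["/internal"],
    excludeDeprecated: true,
  }),
);
console.log(kept.map((op) => op.path));
```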

```
{skill-name}/
├── SKILL.md                 # Entry point with API overview
└── references/
    ├── resources/           # One file per tag
    ├── operations/          # One file per operation
    ├── schemas/             # Grouped by naming prefix
    └── authentication.md    # Auth schemes (if any)
```
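The on-demand reading pattern this layout is designed for can be sketched as follows. The sketch assumes reference files live under `references/`; the `ReadFile` type, the `loadSkillContext` helper, and the file names such as `operations/getPetById.md` are hypothetical, since the generated file names are not listed here.

```typescript
// Hypothetical reader signature; any agent framework's file-read tool could fill this role.
type ReadFile = (path: string) => string;

interface SkillContext {
  overview: string;
  details: string[];
}

// Read the overview first, then only the reference files the current task needs.
function loadSkillContext(
  skillDir: string,
  readFile: ReadFile,
  wantedReferences: string[], // paths relative to references/ (assumed file names)
): SkillContext {
  const overview = readFile(`${skillDir}/SKILL.md`);
  const details = wantedReferences.map((ref) =>
    readFile(`${skillDir}/references/${ref}`),
  );
  return { overview, details };
}

// In-memory stand-in for the generated files, so the sketch runs without disk access.
const files: Record<string, string> = {
  "petstore/SKILL.md": "# Petstore overview",
  "petstore/references/operations/getPetById.md": "GET /pet/{petId}",
  "petstore/references/schemas/Pet.md": "Pet schema",
};

const reads: string[] = [];
const readFromMemory: ReadFile = (path) => {
  reads.push(path);
  const content = files[path];
  if (content === undefined) throw new Error(`No such file: ${path}`);
  return content;
};

const context = loadSkillContext("petstore", readFromMemory, [
  "operations/getPetById.md",
]);
console.log(reads); // only SKILL.md and the one requested operation file were read
```

The schema file is never touched in this run, which is the point: context is spent only on the files a task actually needs.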

If your OpenAPI spec contains external `$ref` references (e.g., `./common.yaml#/components/schemas/Error`), bundle them first:

```bash
npx swagger-cli bundle ./api.yaml -o ./bundled.yaml
npx openapi-to-skills ./bundled.yaml -o ./output
```

The package exports `convertOpenAPIToSkill` for integration into build pipelines or custom tooling:

```typescript
import { convertOpenAPIToSkill } from 'openapi-to-skills';

const spec = { /* OpenAPI spec object */ };

await convertOpenAPIToSkill(spec, {
  outputDir: './output',
  parser: {
    skillName: 'my-api',
    filter: {
      includeTags: ['users', 'orders'],
      excludeDeprecated: true,
    },
  },
});
```

For advanced use cases, individual components (`createParser`, `createRenderer`, `createWriter`) are also exported.

See examples/ for a simple demo with the classic Petstore spec (3 resources, 19 operations).

public-api-skills demonstrates real-world usage across a large collection of public APIs:

| Metric | Original | Agent Skills |
|---|---|---|
| Source | 1 file (7.2 MB) | 2,135 files |
| Resources | - | 77 index files |
| Operations | 588 (all in one) | 588 individual files |
| Schemas | 1,315 (all in one) | 1,468 individual files |

A 7.2 MB monolithic spec becomes 2,135 focused files that agents can navigate on-demand.

- [ ] Detect unresolved external `$ref` and warn users
- [x] Support fetching OpenAPI specs from URL
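As an illustration of what detecting external references involves, the sketch below walks a parsed spec object and collects every `$ref` that does not point inside the same document. It is a hypothetical approach, not the project's planned implementation; `findExternalRefs` is an invented name, and treating any `$ref` that does not start with `#` as external is an assumption.

```typescript
// Recursively collect $ref values that point outside the current document.
function findExternalRefs(
  node: unknown,
  found: Set<string> = new Set<string>(),
): Set<string> {
  if (Array.isArray(node)) {
    for (const item of node) findExternalRefs(item, found);
  } else if (node !== null && typeof node === "object") {
    for (const [key, value] of Object.entries(node)) {
      if (key === "$ref" && typeof value === "string" && !value.startsWith("#")) {
        // Local references start with "#"; anything else is assumed to be external.
        found.add(value);
      } else {
        findExternalRefs(value, found);
      }
    }
  }
  return found;
}

const sampleSpec = {
  paths: {
    "/pets": {
      get: {
        responses: {
          "200": {
            content: {
              "application/json": { schema: { $ref: "#/components/schemas/Pet" } },
            },
          },
          default: {
            content: {
              "application/json": {
                schema: { $ref: "./common.yaml#/components/schemas/Error" },
              },
            },
          },
        },
      },
    },
  },
};

const externalRefs = findExternalRefs(sampleSpec);
externalRefs.forEach((ref) => console.warn("Unresolved external $ref: " + ref));
```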

Development guidelines are maintained in CLAUDE.md, designed as a shared reference for both human contributors and AI agents.

We welcome AI-assisted development. However, whether a patch is written manually or with AI collaboration, the author must fully review all changes before submission and take responsibility for the content.

Apache-2.0


Alternative AI tools for openapi-to-skills

Similar Open Source Tools

jido

Jido is a toolkit for building autonomous, distributed agent systems in Elixir. It provides the foundation for creating smart, composable workflows that can evolve and respond to their environment. Geared towards Agent builders, it contains core state primitives, composable actions, agent data structures, real-time sensors, signal system, skills, and testing tools. Jido is designed for multi-node Elixir clusters and offers rich helpers for unit and property-based testing.

vision-parse

Vision Parse is a tool that leverages Vision Language Models to parse PDF documents into beautifully formatted markdown content. It offers smart content extraction, content formatting, multi-LLM support, PDF document support, and local model hosting using Ollama. Users can easily convert PDFs to markdown with high precision and preserve document hierarchy and styling. The tool supports multiple Vision LLM providers like OpenAI, LLama, and Gemini for accuracy and speed, making document processing efficient and effortless.

slidev-mcp

slidev-mcp is an intelligent slide generation tool based on Slidev that integrates large language model technology, allowing users to automatically generate professional online PPT presentations with simple descriptions. It dramatically lowers the barrier to using Slidev, provides natural language interactive slide creation, and offers automated generation of professional presentations. The tool also includes various features for environment and project management, slide content management, and utility tools to enhance the slide creation process.

ai

A TypeScript toolkit for building AI-driven video workflows on the server, powered by Mux! @mux/ai provides purpose-driven workflow functions and primitive functions that integrate with popular AI/LLM providers like OpenAI, Anthropic, and Google. It offers pre-built workflows for tasks like generating summaries and tags, content moderation, chapter generation, and more. The toolkit is cost-effective, supports multi-modal analysis, tone control, and configurable thresholds, and provides full TypeScript support. Users can easily configure credentials for Mux and AI providers, as well as cloud infrastructure like AWS S3 for certain workflows. @mux/ai is production-ready, offers composable building blocks, and supports universal language detection.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automation required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages:

1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code.
2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate.
3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include:
   1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16.
   2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!).

moatless-tools

Moatless Tools is a hobby project focused on experimenting with using Large Language Models (LLMs) to edit code in large existing codebases. The project aims to build tools that insert the right context into prompts and handle responses effectively. It utilizes an agentic loop functioning as a finite state machine to transition between states like Search, Identify, PlanToCode, ClarifyChange, and EditCode for code editing tasks.

thepipe

The Pipe is a multimodal-first tool for feeding files and web pages into vision-language models such as GPT-4V. It is best for LLM and RAG applications that require a deep understanding of tricky data sources. The Pipe is available as a hosted API at thepi.pe, or it can be set up locally.

Consistency_LLM

Consistency Large Language Models (CLLMs) is a family of efficient parallel decoders that reduce inference latency by efficiently decoding multiple tokens in parallel. The models are trained to perform efficient Jacobi decoding, mapping any randomly initialized token sequence to the same result as auto-regressive decoding in as few steps as possible. CLLMs have shown significant improvements in generation speed on various tasks, achieving up to 3.4 times faster generation. The tool provides a seamless integration with other techniques for efficient Large Language Model (LLM) inference, without the need for draft models or architectural modifications.

DB-GPT-Hub

DB-GPT-Hub is an experimental project leveraging Large Language Models (LLMs) for Text-to-SQL parsing. It includes stages like data collection, preprocessing, model selection, construction, and fine-tuning of model weights. The project aims to enhance Text-to-SQL capabilities, reduce model training costs, and enable developers to contribute to improving Text-to-SQL accuracy. The ultimate goal is to achieve automated question-answering based on databases, allowing users to execute complex database queries using natural language descriptions. The project has successfully integrated multiple large models and established a comprehensive workflow for data processing, SFT model training, prediction output, and evaluation.

manim-generator

The 'manim-generator' repository focuses on automatic video generation using an agentic LLM flow combined with the manim python library. It experiments with automated Manim video creation by delegating code drafting and validation to specific roles, reducing render failures, and improving visual consistency through iterative feedback and vision inputs. The project also includes 'Manim Bench' for comparing AI models on full Manim video generation.

ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project facilitates sharing and exchanging technologies related to large model semantic cache through open-source collaboration.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

can-ai-code

Can AI Code is a self-evaluating interview tool for AI coding models. It includes interview questions written by humans and tests taken by AI, inference scripts for common API providers and CUDA-enabled quantization runtimes, a Docker-based sandbox environment for validating untrusted Python and NodeJS code, and the ability to evaluate the impact of prompting techniques and sampling parameters on large language model (LLM) coding performance. Users can also assess LLM coding performance degradation due to quantization. The tool provides test suites for evaluating LLM coding performance, a webapp for exploring results, and comparison scripts for evaluations. It supports multiple interviewers for API and CUDA runtimes, with detailed instructions on running the tool in different environments. The repository structure includes folders for interviews, prompts, parameters, evaluation scripts, comparison scripts, and more.

langcheck

LangCheck is a Python library that provides a suite of metrics and tools for evaluating the quality of text generated by large language models (LLMs). It includes metrics for evaluating text fluency, sentiment, toxicity, factual consistency, and more. LangCheck also provides tools for visualizing metrics, augmenting data, and writing unit tests for LLM applications. With LangCheck, you can quickly and easily assess the quality of LLM-generated text and identify areas for improvement.

For similar tasks

ai-models

The `ai-models` command is a tool used to run AI-based weather forecasting models. It provides functionalities to install, run, and manage different AI models for weather forecasting. Users can easily install and run various models, customize model settings, download assets, and manage input data from different sources such as ECMWF, CDS, and GRIB files. The tool is designed to optimize performance by running on GPUs and provides options for better organization of assets and output files. It offers a range of command line options for users to interact with the models and customize their forecasting tasks.

repo-to-text

The `repo-to-text` tool converts a directory's structure and contents into a single text file. It generates a formatted text representation that includes the directory tree and file contents, making it easy to share code with LLMs for development and debugging. Users can customize the tool's behavior with various options and settings, including output directory specification, debug logging, and file inclusion/exclusion rules. The tool supports Docker usage for containerized environments and provides detailed instructions for installation, usage, settings configuration, and contribution guidelines. It is a versatile tool for converting repository contents into text format for easy sharing and documentation.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), NVIDIA GPU version. It supports:

- knowledge-base chat through fastgpt, with a complete LLM stack: [fastgpt] + [one-api] + [Xinference]
- replying to bilibili live-stream bullet comments and greeting viewers who enter the stream
- speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS
- expression control through Vtuber Studio
- image generation with stable-diffusion-webui, output to the OBS live room
- NSFW detection for generated images with public-NSFW-y-distinguish
- search and image search through duckduckgo (requires a VPN) and image search through Baidu (no VPN required)
- an AI reply chat box [html plug-in]
- AI singing with Auto-Convert-Music and a playlist [html plug-in]
- dancing, expression video playback, and head-patting and gift-throwing actions
- automatic dancing when singing starts, and an automatic looping sway motion while chatting and singing
- multi-scene switching, background music switching, and automatic day/night scene switching
- open-ended singing and painting requests, with the AI judging the content automatically